DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection

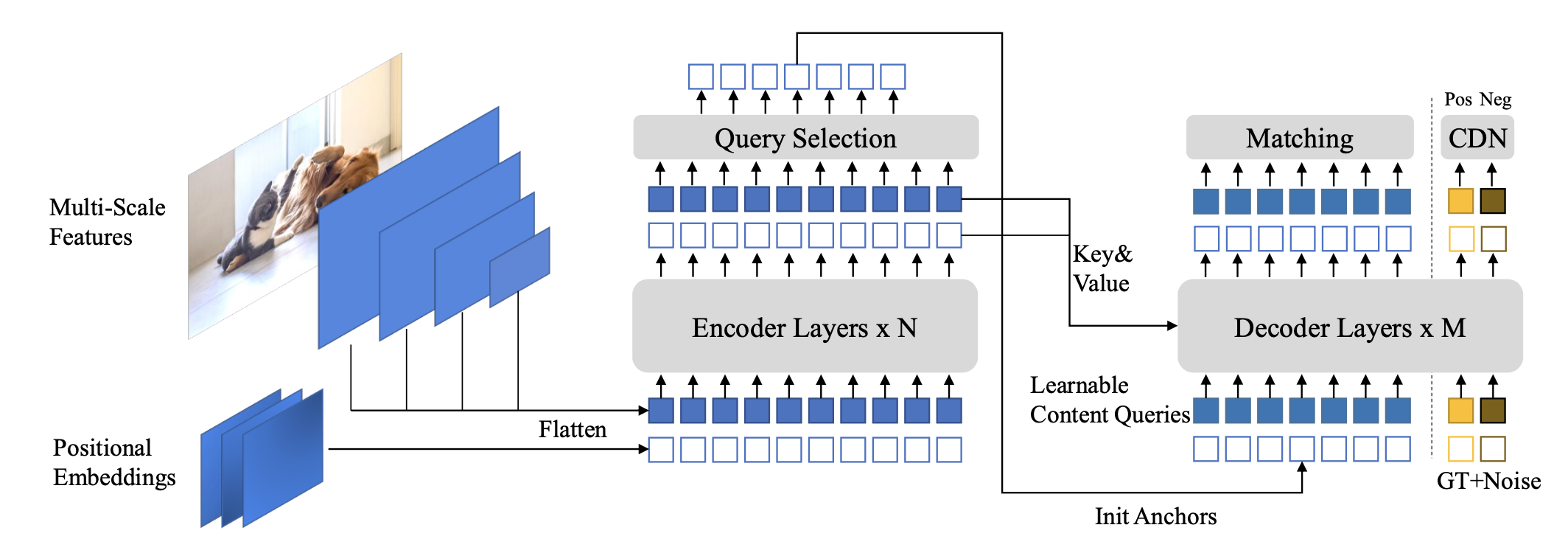

We present DINO (DETR with Improved deNoising anchOr boxes), a state-of-the-art end-to-end object detector. DINO improves over previous DETR-like models in performance and efficiency by using a contrastive approach to denoising training, a mixed query selection method for anchor initialization, and a look-forward-twice scheme for box prediction. DINO achieves 49.4 AP in 12 epochs and 51.3 AP in 24 epochs on COCO with a ResNet-50 backbone and multi-scale features, a significant improvement of +6.0 AP and +2.7 AP, respectively, over DN-DETR, the previous best DETR-like model. DINO scales well in both model size and data size. Without bells and whistles, after pre-training on the Objects365 dataset with a SwinL backbone, DINO obtains the best results on both COCO val2017 (63.2 AP) and test-dev (63.3 AP). Compared to other models on the leaderboard, DINO significantly reduces model size and pre-training data size while achieving better results.
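To make the "mixed query selection" idea more concrete, here is a minimal pure-Python sketch. It assumes the scheme works as described in the DINO paper: the anchor boxes (positional queries) are taken from the top-K scoring encoder proposals, while the content queries remain learned embeddings that do not depend on the image. The function name `mixed_query_selection`, the `(cx, cy, w, h)` box layout, and the toy inputs are all hypothetical and chosen for illustration. This is not the EasyCV implementation.

```python
from typing import List, Sequence, Tuple

# Hypothetical box layout for this sketch: normalized (cx, cy, w, h).
Box = Tuple[float, float, float, float]


def mixed_query_selection(
    proposal_scores: Sequence[float],
    proposal_boxes: Sequence[Box],
    learned_content: Sequence[List[float]],
) -> List[Tuple[Box, List[float]]]:
    """Pair the top-K encoder proposals (as anchors) with learned content queries.

    K is the number of learned content queries. Only the positional part of
    each decoder query comes from the encoder; the content part is left as-is.
    """
    if len(proposal_scores) != len(proposal_boxes):
        raise ValueError("scores and boxes must have the same length")
    k = len(learned_content)
    if k > len(proposal_boxes):
        raise ValueError("not enough encoder proposals for the requested queries")

    # Rank encoder proposals by score, highest first, and keep the best K.
    ranked = sorted(
        range(len(proposal_scores)),
        key=lambda i: proposal_scores[i],
        reverse=True,
    )
    top_k = ranked[:k]

    # Anchor box from the encoder, content embedding from the learned table.
    return [(proposal_boxes[i], learned_content[q]) for q, i in enumerate(top_k)]


# Toy inputs: four encoder proposals, two decoder queries.
scores = [0.1, 0.9, 0.4, 0.7]
boxes = [
    (0.1, 0.1, 0.2, 0.2),
    (0.5, 0.5, 0.3, 0.3),
    (0.8, 0.2, 0.1, 0.1),
    (0.3, 0.7, 0.2, 0.4),
]
content = [[0.0, 1.0], [1.0, 0.0]]
queries = mixed_query_selection(scores, boxes, content)
```

In this toy run the two decoder queries receive the boxes of proposals 1 and 3 (the two highest scores), and their content embeddings are the unchanged learned vectors.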

| Algorithm | Config | Params (backbone/total) | Inference time on V100 (ms/img) | bbox_mAP (val, 0.5:0.95) | AP50 (val) | Download |
|---|---|---|---|---|---|---|
| DINO_4sc_r50_12e | DINO_4sc_r50_12e | 23M/47M | 184 | 48.71 | 66.27 | model - log |
| DINO_4sc_r50_36e | DINO_4sc_r50_36e | 23M/47M | 184 | 50.69 | 68.60 | model - log |
| DINO_4sc_swinl_12e | DINO_4sc_swinl_12e | 195M/217M | 155 | 56.86 | 75.61 | model - log |
| DINO_4sc_swinl_36e | DINO_4sc_swinl_36e | 195M/217M | 155 | 58.04 | 76.76 | model - log |
| DINO_5sc_swinl_36e | DINO_5sc_swinl_36e | 195M/217M | 235 | 58.47 | 77.10 | model - log |
| DINO++_5sc_swinl_18e | DINO++_5sc_swinl_18e | 195M/218M | 325 | 63.39 | 80.25 | model - log |

Objects365 dataset processing tools: https://pai-vision-data-hz.oss-cn-zhangjiakou.aliyuncs.com/EasyCV/modelzoo/detection/dino/obj365_download_tools.tar.gz
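The latency column in the table above can be converted to approximate throughput. The sketch below copies the ms/img values from the table and assumes images are processed one at a time with no batching or pipelining, so the figures are only a rough guide. The helper name `images_per_second` is hypothetical.

```python
# Per-image inference latency on a V100 (ms/img), copied from the table.
LATENCY_MS = {
    "DINO_4sc_r50_12e": 184,
    "DINO_4sc_r50_36e": 184,
    "DINO_4sc_swinl_12e": 155,
    "DINO_4sc_swinl_36e": 155,
    "DINO_5sc_swinl_36e": 235,
    "DINO++_5sc_swinl_18e": 325,
}


def images_per_second(latency_ms: float) -> float:
    """Convert per-image latency in milliseconds to images per second.

    Assumes strictly sequential, single-image inference (an assumption of
    this sketch, not something the table states).
    """
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


THROUGHPUT = {name: images_per_second(ms) for name, ms in LATENCY_MS.items()}

if __name__ == "__main__":
    for name, fps in THROUGHPUT.items():
        print(f"{name}: {fps:.2f} img/s")
```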

@misc{zhang2022dino,
  title={DINO: DETR with Improved DeNoising Anchor Boxes for End-to-End Object Detection},
  author={Hao Zhang and Feng Li and Shilong Liu and Lei Zhang and Hang Su and Jun Zhu and Lionel M. Ni and Heung-Yeung Shum},
  year={2022},
  eprint={2203.03605},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}