+

+ S-Lab, Nanyang Technological University

+

+ * Equal Contribution

+ ♠ Equal appreciation on assistance

+ ✉ Corresponding Author

+

+

+

+[Technical Report](link) | [Demo](https://huggingface.co/spaces/Otter-AI/OtterHD-8B-demo) | [Benchmarks](https://huggingface.co/spaces/Otter-AI)

+

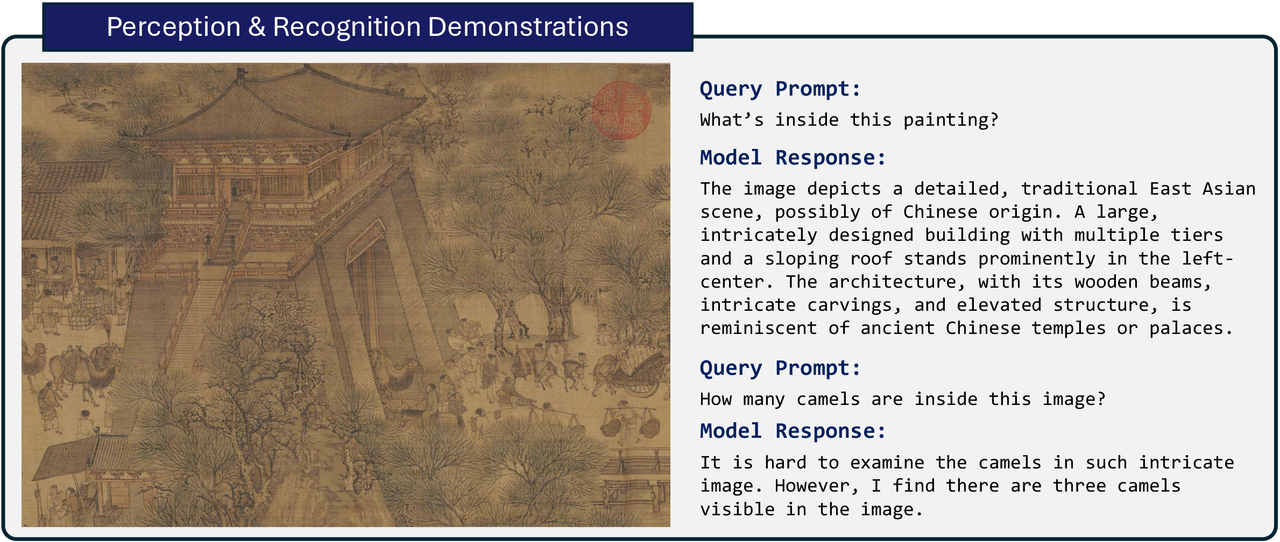

+We introduce OtterHD-8B, a multimodal model fine-tuned from [Fuyu-8B](https://huggingface.co/adept/fuyu-8b) to facilitate a more fine-grained interpretation of high-resolution visual input without requiring a vision encoder. OtterHD-8B also supports flexible input sizes at test time, ensuring adaptability to diverse inference budgets.

+

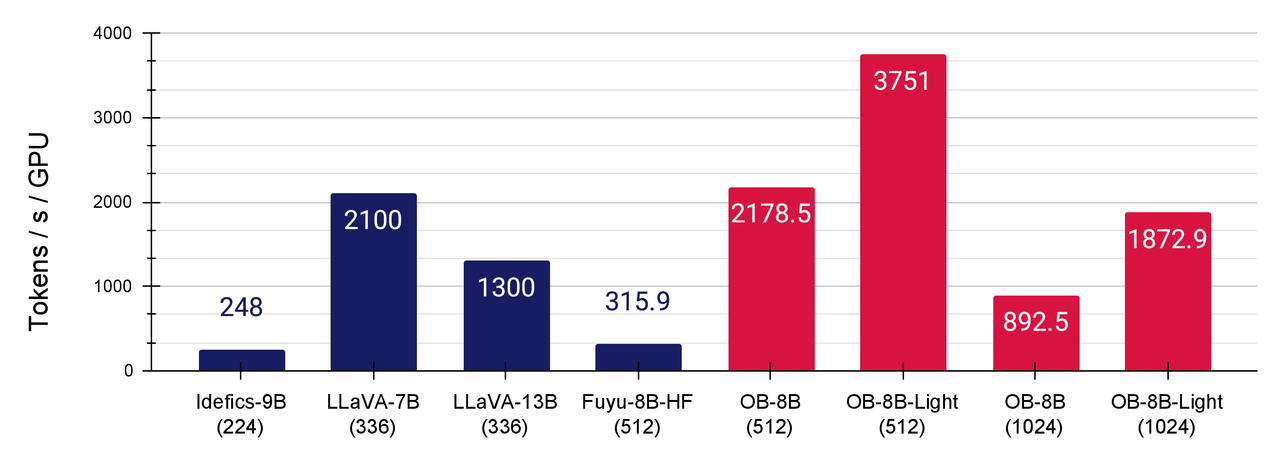

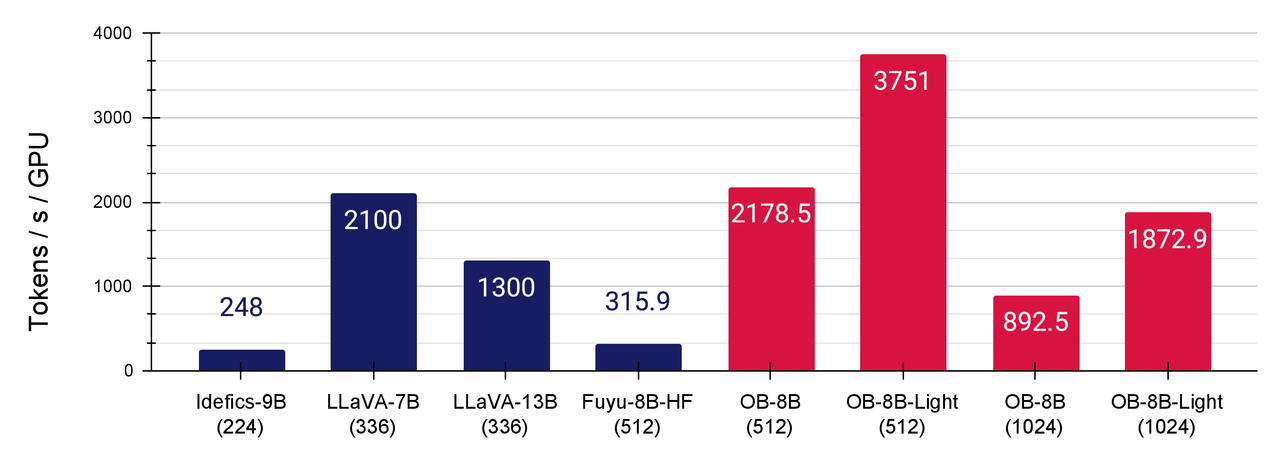

+We improve the native HuggingFace implementation of Fuyu-8B is highly unoptimized with [FlashAttention-2](https://github.com/Dao-AILab/flash-attention) and other fused operators including fused layernorm, fused square ReLU, and fused rotary positional embedding. Fuyu's simplified architecture facilitates us to do this in a fairly convenient way. As illustrated in the following, the modifications substantially enhance GPU utilization and training throughput (> 5 times larger than the vanilla HF implementation of Fuyu). Checkout the details at [here](../src/otter_ai/models/fuyu/modeling_fuyu.py).

+

+To our best knowledge and experiment trials, OtterHD achieves fastest training throughput among current leading LMMs, as it can be fully optimized and benefit from the simplified architecture.

+

+

+ +

+

+

+### Installation

+On top of the regular Otter environment, we need to install Flash-Attention 2 and other fused operators:

+```bash

+pip uninstall -y ninja && pip install ninja

+git clone https://github.com/Dao-AILab/flash-attention

+cd flash-attention

+python setup.py install

+cd csrc/rotary && pip install .

+cd ../csrc/fused_dense_lib && pip install .

+cd ../layer_norm && pip install .

+cd ../xentropy && pip install .

+cd ../.. && rm -rf flash-attention

+```

+### How to Finetune

+

+```bash

+accelerate launch \

+--config_file=pipeline/accelerate_configs/accelerate_config_zero2.yaml \

+--num_processes=8 \

+--main_process_port=25000 \

+pipeline/train/instruction_following.py \

+--pretrained_model_name_or_path=adept/fuyu-8b \

+--training_data_yaml=./Demo_Data.yaml \

+--model_name=fuyu \

+--instruction_format=fuyu \

+--batch_size=8 \

+--gradient_accumulation_steps=2 \

+--num_epochs=3 \

+--wandb_entity=ntu-slab \

+--external_save_dir=./checkpoints \

+--save_hf_model \

+--run_name=OtterHD_Tester \

+--wandb_project=Fuyu \

+--report_to_wandb \

+--workers=1 \

+--lr_scheduler=linear \

+--learning_rate=1e-5 \

+--warmup_steps_ratio=0.01 \

+--dynamic_resolution \

+--weight_decay 0.1 \

+```

+

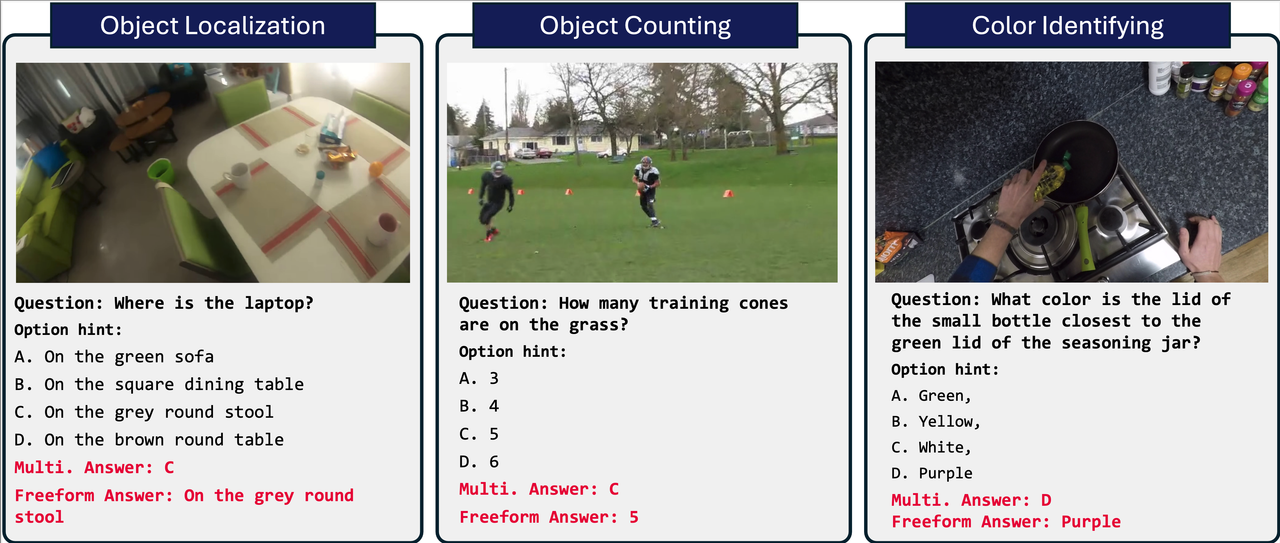

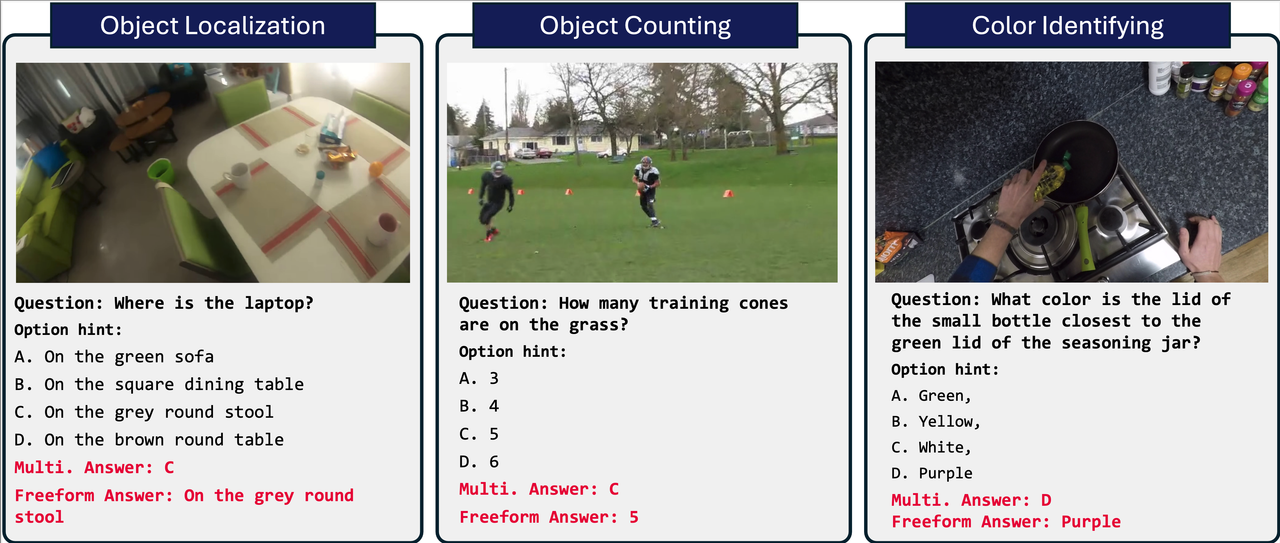

+## MagnifierBench

+

+ +

+

+

+The human visual system can naturally perceive the details of small objects within a wide field of view, but current benchmarks for testing LMMs have not specifically focused on assessing this ability. This may be because the input sizes of mainstream Vision-Language models are constrained to relatively small resolutions. With the advent of the Fuyu and OtterHD models, we can extend the input resolution to a much larger range. Therefore, there is an urgent need for a benchmark that can test the ability to discern the details of small objects (often 1% image size) in high-resolution input images.

+

+### Evaluation

+Create a yaml file `benchmark.yaml` with below content:

+```yaml

+datasets:

+ - name: magnifierbench

+ split: test

+ data_path: Otter-AI/MagnifierBench

+ prompt: Answer with the option letter from the given choices directly.

+ api_key: [You GPT-4 API]

+models:

+ - name: fuyu

+ model_path: azure_storage/fuyu-8b

+ resolution: 1440

+```

+

+Then run

+

+```python

+python -m pipeline.benchmarks.evaluate --confg benchmark.yaml

+```

diff --git a/docs/benchmark_eval.md b/docs/benchmark_eval.md

new file mode 100644

index 00000000..e01595bd

--- /dev/null

+++ b/docs/benchmark_eval.md

@@ -0,0 +1,59 @@

+# Welcome to the benchmark evaluation page!

+

+The evaluation pipeline is designed to be one-clickable and easy to use. However, you may encounter some problems when running the models (e.g. LLaVA, LLaMA-Adapter) that require you to clone their repo to local path. Please feel free to contact us if you have any questions.

+

+We support the following benchmarks:

+- MagnifierBench

+- MMBench

+- MM-VET

+- MathVista

+- POPE

+- MME

+- SicenceQA

+- SeedBench

+

+And following models:

+- LLaVA

+- Fuyu

+- OtterHD

+- Otter-Image

+- Otter-Video

+- Idefics

+- LLaMA-Adapter

+- Qwen-VL

+

+many more, see `/pipeline/benchmarks/models`

+

+Create a yaml file `benchmark.yaml` with below content:

+```yaml

+datasets:

+ - name: magnifierbench

+ split: test

+ data_path: Otter-AI/MagnifierBench

+ prompt: Answer with the option letter from the given choices directly.

+ api_key: [You GPT-4 API]

+ - name: mme

+ split: test

+ - name: pope

+ split: test

+ default_output_path: ./logs

+ - name: mmvet

+ split: test

+ api_key: [You GPT-4 API]

+ gpt_model: gpt-4-0613

+ - name: mathvista

+ split: test

+ api_key: [You GPT-4 API]

+ gpt_model: gpt-4-0613

+ - name: mmbench

+ split: test

+models:

+ - name: fuyu

+ model_path: adept/fuyu-8b

+```

+

+Then run

+

+```python

+python -m pipeline.benchmarks.evaluate --confg benchmark.yaml

+```

\ No newline at end of file

diff --git a/docs/huggingface_compatible.md b/docs/huggingface_compatible.md

old mode 100644

new mode 100755

diff --git a/docs/mimicit_format.md b/docs/mimicit_format.md

new file mode 100755

index 00000000..d21ab271

--- /dev/null

+++ b/docs/mimicit_format.md

@@ -0,0 +1,89 @@

+# Breaking Down the MIMIC-IT Format

+

+We mainly use one integrate dataset format and we refer it to MIMIC-IT format since.

+

+The mimic-it format contains the following data yaml file. Within this data yaml file, you could assign the path of the instruction json file and the image parquet file, and also the number of samples you want to use. The number of samples within each group will be uniformly sampled, and the `number_samples / total_numbers`` will decide sampling ratio of each dataset.

+

+```yaml

+IMAGE_TEXT: # Group name should be in [IMAGE_TEXT, TEXT_ONLY, IMAGE_TEXT_IN_CONTEXT]

+ LADD: # Dataset name can be assigned at any name you want

+ mimicit_path: azure_storage/json/LA/LADD_instructions.json # Path of the instruction json file

+ images_path: azure_storage/Parquets/LA.parquet # Path of the image parquet file

+ num_samples: -1 # Number of samples you want to use, -1 means use all samples, if not set, default is -1.

+ LACR_T2T:

+ mimicit_path: azure_storage/json/LA/LACR_T2T_instructions.json

+ images_path: azure_storage/Parquets/LA.parquet

+ num_samples: -1

+ M3IT_CAPTIONING:

+ mimicit_path: azure_storage/json/M3IT/captioning/coco/coco_instructions.json

+ images_path: azure_storage/Parquets/coco.parquet

+ num_samples: 20000

+

+TEXT_ONLY:

+ LIMA:

+ mimicit_path: azure_storage/json/LANG_Only/LIMA/LIMA_instructions_max_1K_tokens.json

+ num_samples: 20000

+ SHAREGPT:

+ mimicit_path: azure_storage/json/LANG_Only/SHAREGPT/SHAREGPT_instructions_max_1K_tokens.json

+ num_samples: 10000

+ AL:

+ mimicit_path: azure_storage/json/LANG_Only/AL/AL_instructions_max_1K_tokens.json

+ num_samples: 20000

+```

+

+The data yaml file mainly include two groups of data (1) IMAGE_TEXT (2) TEXT_ONLY.

+

+For each group, one dataset contains the `instruction.json` file and `images.parquet` file. You can browse the `instruction.json` file at [here](https://entuedu-my.sharepoint.com/:f:/g/personal/libo0013_e_ntu_edu_sg/Eo9bgNV5cjtEswfA-HfjNNABiKsjDzSWAl5QYAlRZPiuZA?e=nNUhJH) and the `images.parquet` file at [here](https://entuedu-my.sharepoint.com/:f:/g/personal/libo0013_e_ntu_edu_sg/EmwHqgRtYtBNryTcFmrGWCgBjvWQMo1XeCN250WuM2_51Q?e=sCymXx). We will provide more at the same Onedrive folder gradually due to the limited internet bandwith, you send emails to push us.

+

+You are also welcome to make your own data into this format, let's breakdown what's inside them:

+

+## DallE3_instructions.json

+```

+{

+ "meta": { "version": "0.0.1", "time": "2023-10-29", "author": "Jingkang Yang" },

+ "data": {

+ "D3_INS_000000": {

+ "instruction": "What do you think is the prompt for this AI-generated picture?",

+ "answer": "photo of a gigantic hand coming from the sky reaching out people who are holding hands at a beach, there is also a giant eye in the sky look at them",

+ "image_ids": ["D3_IMG_000000"],

+ "rel_ins_ids": []

+ },

+ "D3_INS_000001": {

+ "instruction": "This is an AI generated image, can you infer what's the prompt behind this image?",

+ "answer": "photography of a a soccer stadium on the moon, players are dressed as astronauts",

+ "image_ids": ["D3_IMG_000001"],

+ "rel_ins_ids": []

+ }...

+ }

+}

+```

+

+Note that the `image_ids` is the key of the `DallE3_images.parquet` file, you can use the `image_ids` to index the `base64` string of the image.

+

+## DallE3_images.parquet

+

+```

+import pandas as pd

+images = "./DallE3_images.parquet"

+image_parquet = pd.read_parquet(images)

+

+image_parquet.head()

+ base64

+D3_IMG_000000 /9j/4AAQSkZJRgABAQEASABIAAD/2wBDAAEBAQEBAQEBAQ...

+D3_IMG_000001 /9j/4AAQSkZJRgABAQEASABIAAD/5FolU0NBTEFETwAAAg...

+```

+

+

+Note that before September, we mainly use `images.json` to store the `key:base64_str` pairs, but we found it causes too much CPU memory during decoding large json files. So we switch to parquet, the parquet file is the same as previous json file and you can use the script to convert it from json to parquet.

+

+```python

+json_file_path = "LA.json"

+with open(json_file_path, "r") as f:

+ data_dict = json.load(f)

+

+df = pd.DataFrame.from_dict(resized_data_dict, orient="index", columns=["base64"])

+parquet_file_path = os.path.join(

+ parquet_root_path, os.path.basename(json_file_path).split(".")[0].replace("_image", "") + ".parquet"

+)

+df.to_parquet(parquet_file_path, engine="pyarrow")

+```

\ No newline at end of file

diff --git a/docs/server_host.md b/docs/server_host.md

old mode 100644

new mode 100755

diff --git a/environment.yml b/environment.yml

old mode 100644

new mode 100755

diff --git a/mimic-it/README.md b/mimic-it/README.md

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/README.md b/mimic-it/convert-it/README.md

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/__init__.py b/mimic-it/convert-it/__init__.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/abstract_dataset.py b/mimic-it/convert-it/abstract_dataset.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/2d.py b/mimic-it/convert-it/datasets/2d.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/3d.py b/mimic-it/convert-it/datasets/3d.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/__init__.py b/mimic-it/convert-it/datasets/__init__.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/change.py b/mimic-it/convert-it/datasets/change.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/fpv.py b/mimic-it/convert-it/datasets/fpv.py

old mode 100644

new mode 100755

index cd00fce8..6d174378

--- a/mimic-it/convert-it/datasets/fpv.py

+++ b/mimic-it/convert-it/datasets/fpv.py

@@ -56,7 +56,11 @@ def get_image(video_path):

final_images_dict = {}

with ThreadPoolExecutor(max_workers=num_thread) as executor:

- process_bar = tqdm(total=len(video_paths), unit="video", desc="Processing videos into images")

+ process_bar = tqdm(

+ total=len(video_paths),

+ unit="video",

+ desc="Processing videos into images",

+ )

for images_dict in executor.map(get_image, video_paths):

final_images_dict.update(images_dict)

process_bar.update()

diff --git a/mimic-it/convert-it/datasets/utils/scene_navigation_utils.py b/mimic-it/convert-it/datasets/utils/scene_navigation_utils.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/utils/visual_story_telling_utils.py b/mimic-it/convert-it/datasets/utils/visual_story_telling_utils.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/datasets/video.py b/mimic-it/convert-it/datasets/video.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/image_utils.py b/mimic-it/convert-it/image_utils.py

old mode 100644

new mode 100755

diff --git a/mimic-it/convert-it/main.py b/mimic-it/convert-it/main.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/abstract_dataset.py b/mimic-it/syphus/abstract_dataset.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/3d.py b/mimic-it/syphus/datasets/3d.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/__init__.py b/mimic-it/syphus/datasets/__init__.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/change.py b/mimic-it/syphus/datasets/change.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/fpv.py b/mimic-it/syphus/datasets/fpv.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/funqa.py b/mimic-it/syphus/datasets/funqa.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/translate.py b/mimic-it/syphus/datasets/translate.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/datasets/video.py b/mimic-it/syphus/datasets/video.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/file_utils.py b/mimic-it/syphus/file_utils.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/main.py b/mimic-it/syphus/main.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/coco_spot_the_difference_prompt.py b/mimic-it/syphus/prompts/coco_spot_the_difference_prompt.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/dense_captions.json b/mimic-it/syphus/prompts/dense_captions.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/ego4d.json b/mimic-it/syphus/prompts/ego4d.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/funqa_dia.json b/mimic-it/syphus/prompts/funqa_dia.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/funqa_mcqa.json b/mimic-it/syphus/prompts/funqa_mcqa.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/funqa_translation.json b/mimic-it/syphus/prompts/funqa_translation.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/scene_navigation.json b/mimic-it/syphus/prompts/scene_navigation.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/spot_the_difference.json b/mimic-it/syphus/prompts/spot_the_difference.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/translation_prompt.py b/mimic-it/syphus/prompts/translation_prompt.py

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/tv_captions.json b/mimic-it/syphus/prompts/tv_captions.json

old mode 100644

new mode 100755

diff --git a/mimic-it/syphus/prompts/visual_story_telling.json b/mimic-it/syphus/prompts/visual_story_telling.json

old mode 100644

new mode 100755

diff --git a/pipeline/accelerate_configs/accelerate_config_zero1.yaml b/pipeline/accelerate_configs/accelerate_config_zero1.yaml

new file mode 100755

index 00000000..2948da7b

--- /dev/null

+++ b/pipeline/accelerate_configs/accelerate_config_zero1.yaml

@@ -0,0 +1,18 @@

+compute_environment: LOCAL_MACHINE

+deepspeed_config:

+ gradient_accumulation_steps: 1

+ gradient_clipping: 1.0

+ offload_optimizer_device: none

+ offload_param_device: none

+ zero3_init_flag: false

+ zero_stage: 1

+distributed_type: DEEPSPEED

+fsdp_config: {}

+machine_rank: 0

+main_process_ip: null

+main_process_port: null

+main_training_function: main

+mixed_precision: bf16

+num_machines: 1

+num_processes: 8

+use_cpu: false

\ No newline at end of file

diff --git a/pipeline/accelerate_configs/accelerate_config_zero2.yaml b/pipeline/accelerate_configs/accelerate_config_zero2.yaml

index b6c41a90..5b3439f1 100755

--- a/pipeline/accelerate_configs/accelerate_config_zero2.yaml

+++ b/pipeline/accelerate_configs/accelerate_config_zero2.yaml

@@ -1,6 +1,6 @@

compute_environment: LOCAL_MACHINE

deepspeed_config:

- gradient_accumulation_steps: 1

+ gradient_accumulation_steps: 4

gradient_clipping: 1.0

offload_optimizer_device: none

offload_param_device: none

diff --git a/pipeline/accelerate_configs/accelerate_config_zero3.yaml b/pipeline/accelerate_configs/accelerate_config_zero3.yaml

old mode 100644

new mode 100755

index f5fb5bf6..a6c303c6

--- a/pipeline/accelerate_configs/accelerate_config_zero3.yaml

+++ b/pipeline/accelerate_configs/accelerate_config_zero3.yaml

@@ -1,5 +1,5 @@

compute_environment: LOCAL_MACHINE

-deepspeed_config:

+deepspeed_config:

gradient_accumulation_steps: 1

gradient_clipping: 1.0

offload_optimizer_device: none

@@ -11,9 +11,9 @@ distributed_type: DEEPSPEED

fsdp_config: {}

machine_rank: 0

main_process_ip: null

-main_process_port: 20222

+main_process_port: 20333

main_training_function: main

mixed_precision: bf16

num_machines: 1

num_processes: 8

-use_cpu: false

+use_cpu: false

\ No newline at end of file

diff --git a/pipeline/accelerate_configs/accelerate_config_zero3_offload.yaml b/pipeline/accelerate_configs/accelerate_config_zero3_offload.yaml

new file mode 100755

index 00000000..4f9775eb

--- /dev/null

+++ b/pipeline/accelerate_configs/accelerate_config_zero3_offload.yaml

@@ -0,0 +1,19 @@

+compute_environment: LOCAL_MACHINE

+deepspeed_config:

+ gradient_accumulation_steps: 1

+ gradient_clipping: 1.0

+ offload_optimizer_device: cpu

+ offload_param_device: cpu

+ zero3_init_flag: true

+ zero3_save_16bit_model: true

+ zero_stage: 3

+distributed_type: DEEPSPEED

+fsdp_config: {}

+machine_rank: 0

+main_process_ip: null

+main_process_port: 20333

+main_training_function: main

+mixed_precision: bf16

+num_machines: 1

+num_processes: 8

+use_cpu: false

\ No newline at end of file

diff --git a/pipeline/accelerate_configs/accelerate_config_zero3_slurm.yaml b/pipeline/accelerate_configs/accelerate_config_zero3_slurm.yaml

index d9b965bb..67e5598a 100755

--- a/pipeline/accelerate_configs/accelerate_config_zero3_slurm.yaml

+++ b/pipeline/accelerate_configs/accelerate_config_zero3_slurm.yaml

@@ -1,11 +1,12 @@

compute_environment: LOCAL_MACHINE

deepspeed_config:

deepspeed_multinode_launcher: standard

- gradient_accumulation_steps: 1

+ gradient_accumulation_steps: 2

gradient_clipping: 1.0

offload_optimizer_device: none

offload_param_device: none

zero3_init_flag: true

+ zero3_save_16bit_model: true

zero_stage: 3

distributed_type: DEEPSPEED

fsdp_config: {}

diff --git a/pipeline/accelerate_configs/ds_zero3_config.json b/pipeline/accelerate_configs/ds_zero3_config.json

new file mode 100755

index 00000000..6917317a

--- /dev/null

+++ b/pipeline/accelerate_configs/ds_zero3_config.json

@@ -0,0 +1,28 @@

+{

+ "fp16": {

+ "enabled": "auto",

+ "loss_scale": 0,

+ "loss_scale_window": 1000,

+ "initial_scale_power": 16,

+ "hysteresis": 2,

+ "min_loss_scale": 1

+ },

+ "bf16": {

+ "enabled": "auto"

+ },

+ "train_micro_batch_size_per_gpu": "auto",

+ "train_batch_size": "auto",

+ "gradient_accumulation_steps": "auto",

+ "zero_optimization": {

+ "stage": 3,

+ "overlap_comm": true,

+ "contiguous_gradients": true,

+ "sub_group_size": 1e9,

+ "reduce_bucket_size": "auto",

+ "stage3_prefetch_bucket_size": "auto",

+ "stage3_param_persistence_threshold": "auto",

+ "stage3_max_live_parameters": 1e9,

+ "stage3_max_reuse_distance": 1e9,

+ "stage3_gather_16bit_weights_on_model_save": true

+ }

+}

\ No newline at end of file

diff --git a/pipeline/benchmarks/.gitignore b/pipeline/benchmarks/.gitignore

new file mode 100644

index 00000000..a5394700

--- /dev/null

+++ b/pipeline/benchmarks/.gitignore

@@ -0,0 +1 @@

+config.yaml

\ No newline at end of file

diff --git a/pipeline/__init__.py b/pipeline/benchmarks/__init__.py

similarity index 100%

rename from pipeline/__init__.py

rename to pipeline/benchmarks/__init__.py

diff --git a/pipeline/eval/__init__.py b/pipeline/benchmarks/datasets/__init__.py

similarity index 100%

rename from pipeline/eval/__init__.py

rename to pipeline/benchmarks/datasets/__init__.py

diff --git a/pipeline/benchmarks/datasets/base_eval_dataset.py b/pipeline/benchmarks/datasets/base_eval_dataset.py

new file mode 100644

index 00000000..4bbd74e5

--- /dev/null

+++ b/pipeline/benchmarks/datasets/base_eval_dataset.py

@@ -0,0 +1,50 @@

+from abc import ABC, abstractmethod

+from PIL import Image

+from typing import Dict, List, Any

+

+import importlib

+

+AVAILABLE_EVAL_DATASETS: Dict[str, str] = {

+ "mmbench": "MMBenchDataset",

+ "mme": "MMEDataset",

+ "mathvista": "MathVistaDataset",

+ "mmvet": "MMVetDataset",

+ "seedbench": "SEEDBenchDataset",

+ "pope": "PopeDataset",

+ "scienceqa": "ScienceQADataset",

+ "magnifierbench": "MagnifierBenchDataset",

+}

+

+

+class BaseEvalDataset(ABC):

+ def __init__(self, name: str, dataset_path: str, *, max_batch_size: int = 1):

+ self.name = name

+ self.dataset_path = dataset_path

+ self.max_batch_size = max_batch_size

+

+ def evaluate(self, model, **kwargs):

+ return self._evaluate(model, **kwargs)

+ # batch = min(model.max_batch_size, self.max_batch_size)

+ # if batch == 1:

+ # return self._evaluate(model, **kwargs)

+ # else:

+ # kwargs["batch"] = batch

+ # return self._evaluate(model, **kwargs)

+

+ @abstractmethod

+ def _evaluate(self, model: str):

+ pass

+

+

+def load_dataset(dataset_name: str, dataset_args: Dict[str, str] = {}) -> BaseEvalDataset:

+ assert dataset_name in AVAILABLE_EVAL_DATASETS, f"{dataset_name} is not an available eval dataset."

+ module_path = "pipeline.benchmarks.datasets." + dataset_name

+ dataset_formal_name = AVAILABLE_EVAL_DATASETS[dataset_name]

+ imported_module = importlib.import_module(module_path)

+ dataset_class = getattr(imported_module, dataset_formal_name)

+ print(f"Imported class: {dataset_class}")

+ # import pdb;pdb.set_trace()

+ # get dataset args without "name"

+ init_args = dataset_args.copy()

+ init_args.pop("name")

+ return dataset_class(**init_args)

diff --git a/pipeline/benchmarks/datasets/magnifierbench.py b/pipeline/benchmarks/datasets/magnifierbench.py

new file mode 100644

index 00000000..a0c4ed97

--- /dev/null

+++ b/pipeline/benchmarks/datasets/magnifierbench.py

@@ -0,0 +1,212 @@

+import base64

+import io

+from PIL import Image

+import json

+from sklearn.metrics import accuracy_score, precision_score, recall_score, confusion_matrix

+import os

+import numpy as np

+from datasets import load_dataset

+from typing import Union

+from .base_eval_dataset import BaseEvalDataset

+from tqdm import tqdm

+import datetime

+import pytz

+import re

+

+import time

+import requests

+

+utc_plus_8 = pytz.timezone("Asia/Singapore") # You can also use 'Asia/Shanghai', 'Asia/Taipei', etc.

+utc_now = pytz.utc.localize(datetime.datetime.utcnow())

+utc_plus_8_time = utc_now.astimezone(utc_plus_8)

+

+

+def get_chat_response(promot, api_key, model="gpt-4-0613", temperature=0, max_tokens=256, n=1, patience=5, sleep_time=5):

+ headers = {

+ "Authorization": f"Bearer {api_key}",

+ "Content-Type": "application/json",

+ }

+

+ messages = [

+ {"role": "system", "content": "You are a helpful AI assistant. Your task is to judge whether the model response is correct to answer the given question or not."},

+ {"role": "user", "content": promot},

+ ]

+

+ payload = {"model": model, "messages": messages}

+

+ while patience > 0:

+ patience -= 1

+ try:

+ response = requests.post(

+ "https://api.openai.com/v1/chat/completions",

+ headers=headers,

+ data=json.dumps(payload),

+ timeout=30,

+ )

+ response.raise_for_status()

+ response_data = response.json()

+

+ prediction = response_data["choices"][0]["message"]["content"].strip()

+ if prediction != "" and prediction is not None:

+ return prediction

+

+ except Exception as e:

+ if "Rate limit" not in str(e):

+ print(e)

+ time.sleep(sleep_time)

+

+ return ""

+

+

+def prepare_query(model_answer_item, api_key):

+ freeform_question = model_answer_item["freeform_question"]

+ freeform_response = model_answer_item["freeform_response"]

+ correct_answer = model_answer_item["freeform_answer"]

+

+ # Formulating the prompt for ChatGPT

+ prompt = f"Question: {freeform_question}\nModel Response: {freeform_response}\nGround Truth: {correct_answer}\nWill the model response be considered correct? You should only answer yes or no."

+

+ # Querying ChatGPT

+ chat_response = get_chat_response(prompt, api_key)

+

+ return chat_response

+

+

+class MagnifierBenchDataset(BaseEvalDataset):

+ def __init__(

+ self,

+ data_path: str = "Otter-AI/MagnifierBench",

+ *,

+ cache_dir: Union[str, None] = None,

+ default_output_path: str = "./logs/MagBench",

+ split: str = "test",

+ debug: bool = False,

+ prompt="",

+ api_key=None,

+ ):

+ super().__init__("MagnifierBench", data_path)

+

+ self.default_output_path = default_output_path

+ if not os.path.exists(self.default_output_path):

+ os.makedirs(self.default_output_path)

+

+ self.cur_datetime = utc_plus_8_time.strftime("%Y-%m-%d_%H-%M-%S")

+ self.data = load_dataset(data_path, split=split, cache_dir=cache_dir, revision="main")

+ self.debug = debug

+ self.prompt = prompt

+ self.api_key = api_key

+

+ def parse_pred_ans(self, pred_ans, question):

+ match = re.search(r"The answer is ([A-D])", pred_ans)

+ if match:

+ return match.group(1)

+ choices = ["A", "B", "C", "D"]

+ for selection in choices:

+ if selection in pred_ans:

+ return selection

+ pattern = "A\\. (.+?), B\\. (.+?), C\\. (.+?), D\\. (.+)"

+ matches = re.search(pattern, question)

+ if matches:

+ options = {"A": matches.group(1), "B": matches.group(2), "C": matches.group(3), "D": matches.group(4)}

+ for c, option in options.items():

+ option = option.strip()

+ if option.endswith(".") or option.endswith(",") or option.endswith("?"):

+ option = option[:-1]

+ if option.upper() in pred_ans.upper():

+ return c

+ for selection in choices:

+ if selection in pred_ans.upper():

+ return selection

+ return "other"

+

+ def _evaluate(self, model):

+ model_score_dict = {}

+

+ # output_path = os.path.join(self.default_output_path, f"{model.name}_{self.cur_datetime}")

+ # if not os.path.exists(output_path):

+ # os.makedirs(output_path)

+ # model_path: str = "Salesforce/instructblip-vicuna-7b"

+ model_version = model.name.split("/")[-1]

+ model_answer_path = os.path.join(self.default_output_path, f"{model_version}_{self.cur_datetime}_answer.json")

+ result_path = os.path.join(self.default_output_path, f"{model_version}_{self.cur_datetime}_score.json")

+ model_answer = {}

+

+ score = 0

+ num_data = 0

+

+ ff_score = 0

+

+ for data in tqdm(self.data, desc="Evaluating", total=len(self.data)):

+ question = f"{self.prompt} {data['instruction']}" if self.prompt else data["instruction"]

+ if len(data["images"]) != 1:

+ print(f"Warning: {data['id']} has {len(data['images'])} images.")

+ print(f"Skipping {data['id']}")

+ continue

+

+ model_response = model.generate(question, data["images"][0])

+

+ pred_ans = self.parse_pred_ans(model_response, question)

+

+ freeform_question = (question.split("?")[0] + "?").replace(self.prompt, "").strip()

+ options = question.split("?")[1]

+ answer_option = data["answer"]

+ for single_opt in options.split(","):

+ single_opt = single_opt.strip()

+ if single_opt.startswith(answer_option.upper()):

+ freeform_answer = single_opt.split(".")[1].strip()

+ break

+

+ ff_response = model.generate(freeform_question, data["images"][0])

+ if self.debug:

+ print(f"Question: {question}")

+ print(f"Answer: {data['answer']}")

+ print(f"Raw prediction: {model_response}")

+ print(f"Parsed prediction: {pred_ans}\n")

+ print(f"Freeform question: {freeform_question}")

+ print(f"Freeform answer: {freeform_answer}")

+ print(f"Freeform response: {ff_response}\n")

+

+ num_data += 1

+ if pred_ans == data["answer"]:

+ score += 1

+ model_answer[data["id"]] = {

+ "question": question,

+ "options": options,

+ "model_response": model_response,

+ "parsed_output": pred_ans,

+ "answer": data["answer"],

+ "freeform_question": freeform_question,

+ "freeform_response": ff_response,

+ "freeform_answer": freeform_answer,

+ }

+ with open(model_answer_path, "w") as f:

+ json.dump(model_answer, f, indent=2)

+

+ model_score_dict["score"] = score

+ model_score_dict["total"] = len(self.data)

+ model_score_dict["accuracy"] = score / len(self.data)

+

+ print(f"Start query GPT-4 for free-form evaluation...")

+ for data_id in tqdm(model_answer.keys(), desc="Querying GPT-4"):

+ model_answer_item = model_answer[data_id]

+ gpt_response = prepare_query(model_answer_item, self.api_key)

+ if gpt_response.lower() == "yes":

+ ff_score += 1

+ elif gpt_response.lower() == "no":

+ ff_score += 0

+ else:

+ print(f"Warning: {data_id} has invalid GPT-4 response: {gpt_response}")

+ print(f"Skipping {data_id}")

+ continue

+

+ model_score_dict["freeform_score"] = ff_score

+ model_score_dict["freeform_accuracy"] = ff_score / len(model_answer)

+

+ with open(result_path, "w") as f:

+ json.dump(model_score_dict, f, indent=2)

+

+ print(f"Model answer saved to {model_answer_path}")

+ print(f"Model score saved to {result_path}")

+ print(json.dumps(model_score_dict, indent=2))

+

+ return model_score_dict

diff --git a/pipeline/benchmarks/datasets/mathvista.py b/pipeline/benchmarks/datasets/mathvista.py

new file mode 100644

index 00000000..939f7bb4

--- /dev/null

+++ b/pipeline/benchmarks/datasets/mathvista.py

@@ -0,0 +1,480 @@

+import base64

+import os

+import pandas as pd

+from PIL import Image

+from tqdm import tqdm

+from datasets import load_dataset

+from .base_eval_dataset import BaseEvalDataset

+import json

+from io import BytesIO

+import pytz

+import datetime

+import openai

+import time

+import re

+import io

+from Levenshtein import distance

+

+utc_plus_8 = pytz.timezone("Asia/Singapore") # You can also use 'Asia/Shanghai', 'Asia/Taipei', etc.

+utc_now = pytz.utc.localize(datetime.datetime.utcnow())

+utc_plus_8_time = utc_now.astimezone(utc_plus_8)

+

+demo_prompt = """

+Please read the following example. Then extract the answer from the model response and type it at the end of the prompt.

+

+Please answer the question requiring an integer answer and provide the final value, e.g., 1, 2, 3, at the end.

+Question: Which number is missing?

+

+Model response: The number missing in the sequence is 14.

+

+Extracted answer: 14

+

+Please answer the question requiring a floating-point number with one decimal place and provide the final value, e.g., 1.2, 1.3, 1.4, at the end.

+Question: What is the fraction of females facing the camera?

+

+Model response: The fraction of females facing the camera is 0.6, which means that six out of ten females in the group are facing the camera.

+

+Extracted answer: 0.6

+

+Please answer the question requiring a floating-point number with two decimal places and provide the final value, e.g., 1.23, 1.34, 1.45, at the end.

+Question: How much money does Luca need to buy a sour apple candy and a butterscotch candy? (Unit: $)

+

+Model response: Luca needs $1.45 to buy a sour apple candy and a butterscotch candy.

+

+Extracted answer: 1.45

+

+Please answer the question requiring a Python list as an answer and provide the final list, e.g., [1, 2, 3], [1.2, 1.3, 1.4], at the end.

+Question: Between which two years does the line graph saw its maximum peak?

+

+Model response: The line graph saw its maximum peak between 2007 and 2008.

+

+Extracted answer: [2007, 2008]

+

+Please answer the question and provide the correct option letter, e.g., A, B, C, D, at the end.

+Question: What fraction of the shape is blue?\nChoices:\n(A) 3/11\n(B) 8/11\n(C) 6/11\n(D) 3/5

+

+Model response: The correct answer is (B) 8/11.

+

+Extracted answer: B

+"""

+

+

+import time

+import requests

+import json

+import ast

+

+

+def get_chat_response(promot, api_key, model="gpt-3.5-turbo", temperature=0, max_tokens=256, n=1, patience=5, sleep_time=5):

+ headers = {

+ "Authorization": f"Bearer {api_key}",

+ "Content-Type": "application/json",

+ }

+

+ messages = [

+ {"role": "system", "content": "You are a helpful AI assistant."},

+ {"role": "user", "content": promot},

+ ]

+

+ payload = {"model": model, "messages": messages}

+

+ while patience > 0:

+ patience -= 1

+ try:

+ response = requests.post(

+ "https://api.openai.com/v1/chat/completions",

+ headers=headers,

+ data=json.dumps(payload),

+ timeout=30,

+ )

+ response.raise_for_status()

+ response_data = response.json()

+

+ prediction = response_data["choices"][0]["message"]["content"].strip()

+ if prediction != "" and prediction is not None:

+ return prediction

+

+ except Exception as e:

+ if "Rate limit" not in str(e):

+ print(e)

+ time.sleep(sleep_time)

+

+ return ""

+

+

+def create_test_prompt(demo_prompt, query, response):

+ demo_prompt = demo_prompt.strip()

+ test_prompt = f"{query}\n\n{response}"

+ full_prompt = f"{demo_prompt}\n\n{test_prompt}\n\nExtracted answer: "

+ return full_prompt

+

+

+def extract_answer(response, problem, quick_extract=False, api_key=None, pid=None, gpt_model="gpt-4-0613"):

+ question_type = problem["question_type"]

+ answer_type = problem["answer_type"]

+ choices = problem["choices"]

+ query = problem["query"]

+

+ if response == "":

+ return ""

+

+ if question_type == "multi_choice" and response in choices:

+ return response

+

+ if answer_type == "integer":

+ try:

+ extraction = int(response)

+ return str(extraction)

+ except:

+ pass

+

+ if answer_type == "float":

+ try:

+ extraction = str(float(response))

+ return extraction

+ except:

+ pass

+

+ # quick extraction

+ if quick_extract:

+ # The answer is "text". -> "text"

+ try:

+ result = re.search(r'The answer is "(.*)"\.', response)

+ if result:

+ extraction = result.group(1)

+ return extraction

+ except:

+ pass

+

+ else:

+ # general extraction

+ try:

+ full_prompt = create_test_prompt(demo_prompt, query, response)

+ extraction = get_chat_response(full_prompt, api_key=api_key, model=gpt_model, n=1, patience=5, sleep_time=5)

+ return extraction

+ except Exception as e:

+ print(e)

+ print(f"Error in extracting answer for {pid}")

+

+ return ""

+

+

+def get_acc_with_contion(res_pd, key, value):

+ if key == "skills":

+ # if value in res_pd[key]:

+ total_pd = res_pd[res_pd[key].apply(lambda x: value in x)]

+ else:

+ total_pd = res_pd[res_pd[key] == value]

+

+ correct_pd = total_pd[total_pd["true_false"] == True]

+ acc = "{:.2f}".format(len(correct_pd) / len(total_pd) * 100)

+ return len(correct_pd), len(total_pd), acc

+

+

+def get_most_similar(prediction, choices):

+ """

+ Use the Levenshtein distance (or edit distance) to determine which of the choices is most similar to the given prediction

+ """

+ distances = [distance(prediction, choice) for choice in choices]

+ ind = distances.index(min(distances))

+ return choices[ind]

+ # return min(choices, key=lambda choice: distance(prediction, choice))

+

+

+def normalize_extracted_answer(extraction, choices, question_type, answer_type, precision):

+ """

+ Normalize the extracted answer to match the answer type

+ """

+ if question_type == "multi_choice":

+ # make sure the extraction is a string

+ if isinstance(extraction, str):

+ extraction = extraction.strip()

+ else:

+ try:

+ extraction = str(extraction)

+ except:

+ extraction = ""

+

+ # extract "A" from "(A) text"

+ letter = re.findall(r"\(([a-zA-Z])\)", extraction)

+ if len(letter) > 0:

+ extraction = letter[0].upper()

+

+ options = [chr(ord("A") + i) for i in range(len(choices))]

+

+ if extraction in options:

+ # convert option letter to text, e.g. "A" -> "text"

+ ind = options.index(extraction)

+ extraction = choices[ind]

+ else:

+ # select the most similar option

+ extraction = get_most_similar(extraction, choices)

+ assert extraction in choices

+

+ elif answer_type == "integer":

+ try:

+ extraction = str(int(float(extraction)))

+ except:

+ extraction = None

+

+ elif answer_type == "float":

+ try:

+ extraction = str(round(float(extraction), precision))

+ except:

+ extraction = None

+

+ elif answer_type == "list":

+ try:

+ extraction = str(extraction)

+ except:

+ extraction = None

+

+ return extraction

+

+

+def get_pil_image(raw_image_data) -> Image.Image:

+ if isinstance(raw_image_data, Image.Image):

+ return raw_image_data

+

+ elif isinstance(raw_image_data, dict) and "bytes" in raw_image_data:

+ return Image.open(io.BytesIO(raw_image_data["bytes"]))

+

+ elif isinstance(raw_image_data, str): # Assuming this is a base64 encoded string

+ image_bytes = base64.b64decode(raw_image_data)

+ return Image.open(io.BytesIO(image_bytes))

+

+ else:

+ raise ValueError("Unsupported image data format")

+

+

+def safe_equal(prediction, answer):

+ """

+ Check if the prediction is equal to the answer, even if they are of different types

+ """

+ try:

+ if prediction == answer:

+ return True

+ return False

+ except Exception as e:

+ print(e)

+ return False

+

+

+class MathVistaDataset(BaseEvalDataset):

+ def __init__(

+ self,

+ data_path="Otter-AI/MathVista",

+ split="test",

+ default_output_path="./logs/MathVista",

+ cache_dir=None,

+ api_key=None,

+ gpt_model="gpt-4-0613",

+ debug=False,

+ quick_extract=False,

+ ):

+ super().__init__("MathVistaDataset", data_path)

+ name_converter = {"dev": "validation", "test": "test"}

+ self.data = load_dataset("Otter-AI/MathVista", split=name_converter[split], cache_dir=cache_dir).to_pandas()

+ if debug:

+ self.data = self.data.sample(5)

+ # data_path = "/home/luodian/projects/Otter/archived/testmini_image_inside.json"

+ # with open(data_path, "r", encoding="utf-8") as f:

+ # self.data = json.load(f)

+

+ self.debug = debug

+ self.quick_extract = quick_extract

+

+ self.default_output_path = default_output_path

+ if os.path.exists(self.default_output_path) is False:

+ os.makedirs(self.default_output_path)

+ self.cur_datetime = utc_plus_8_time.strftime("%Y-%m-%d_%H-%M-%S")

+ self.api_key = api_key

+ self.gpt_model = gpt_model

+

+ def create_query(self, problem, shot_type):

+ ### [2] Test query

+ # problem info

+ question = problem["question"]

+ unit = problem["unit"]

+ choices = problem["choices"]

+ precision = problem["precision"]

+ question_type = problem["question_type"]

+ answer_type = problem["answer_type"]

+

+ # hint

+ if shot_type == "solution":

+ if question_type == "multi_choice":

+ assert answer_type == "text"

+ hint_text = f"Please answer the question and provide the correct option letter, e.g., A, B, C, D, at the end."

+ else:

+ assert answer_type in ["integer", "float", "list"]

+ if answer_type == "integer":

+ hint_text = f"Please answer the question requiring an integer answer and provide the final value, e.g., 1, 2, 3, at the end."

+

+ elif answer_type == "float" and str(precision) == "1":

+ hint_text = f"Please answer the question requiring a floating-point number with one decimal place and provide the final value, e.g., 1.2, 1.3, 1.4, at the end."

+

+ elif answer_type == "float" and str(precision) == "2":

+ hint_text = f"Please answer the question requiring a floating-point number with two decimal places and provide the final value, e.g., 1.23, 1.34, 1.45, at the end."

+

+ elif answer_type == "list":

+ hint_text = f"Please answer the question requiring a Python list as an answer and provide the final list, e.g., [1, 2, 3], [1.2, 1.3, 1.4], at the end."

+ else:

+ assert shot_type == "code"

+ hint_text = "Please generate a python code to solve the problem"

+

+ # question

+ question_text = f"Question: {question}"

+ if unit:

+ question_text += f" (Unit: {unit})"

+

+ # choices

+ if choices is not None and len(choices) != 0:

+ # choices: (A) 1.2 (B) 1.3 (C) 1.4 (D) 1.5

+ texts = ["Choices:"]

+ for i, choice in enumerate(choices):

+ texts.append(f"({chr(ord('A')+i)}) {choice}")

+ choices_text = "\n".join(texts)

+ else:

+ choices_text = ""

+

+ # prompt

+ if shot_type == "solution":

+ prompt = "Solution: "

+ else:

+ assert shot_type == "code"

+ prompt = "Python code: "

+

+ elements = [hint_text, question_text, choices_text, prompt]

+ query = "\n".join([e for e in elements if e != ""])

+

+ query = query.strip()

+ return query

+

+ def _evaluate(self, model):

+ output_file = os.path.join(self.default_output_path, f"{model.name}_mathvista_eval_submit_{self.cur_datetime}.json") # directly match Lu Pan's repo format e.g. output_bard.json

+

+ results = {}

+

+ print(f"Number of test problems in total: {len(self.data)}")

+ for idx_key, query_data in tqdm(self.data.iterrows(), desc=f"Evaluating {model.name}", total=len(self.data)):

+ # query_data = self.data[idx_key]

+ results[idx_key] = {}

+ results[idx_key].update(query_data)

+ if results[idx_key]["choices"] is not None:

+ results[idx_key]["choices"] = list(results[idx_key]["choices"])

+ results[idx_key].pop("image")

+ # problem = query_data["problem"]

+ query = self.create_query(problem=query_data, shot_type="solution")

+ base64_image = query_data["image"]

+ # image = Image.open(BytesIO(base64.b64decode(base64_image)))

+ image = get_pil_image(base64_image)

+ response = model.generate(query, image)

+ if self.debug:

+ print(f"\n# Query: {query}")

+ print(f"\n# Response: {response}")

+ results[idx_key].update({"query": query})

+ results[idx_key].update({"response": response})

+

+ with open(output_file, "w") as outfile:

+ json.dump(results, outfile)

+

+ results = json.load(open(output_file, "r"))

+

+ print(f"MathVista Evaluator: Results saved to {output_file}")

+

+ for idx_key, row in tqdm(self.data.iterrows(), desc=f"Extracting answers from {model.name}", total=len(self.data)):

+ idx_key = str(idx_key)

+ response = results[idx_key]["response"]

+ extraction = extract_answer(

+ response,

+ results[idx_key],

+ quick_extract=self.quick_extract,

+ api_key=self.api_key,

+ pid=idx_key,

+ gpt_model=self.gpt_model,

+ )

+ results[idx_key].update({"extraction": extraction})

+ answer = results[idx_key]["answer"]

+ choices = results[idx_key]["choices"]

+ question_type = results[idx_key]["question_type"]

+ answer_type = results[idx_key]["answer_type"]

+ precision = results[idx_key]["precision"]

+ extraction = results[idx_key]["extraction"]

+

+ prediction = normalize_extracted_answer(extraction, choices, question_type, answer_type, precision)

+ true_false = safe_equal(prediction, answer)

+

+ results[idx_key]["prediction"] = prediction

+ results[idx_key]["true_false"] = true_false

+

+ full_pids = list(results.keys())

+ ## [2] Calculate the average accuracy

+ total = len(full_pids)

+ correct = 0

+ for pid in full_pids:

+ if results[pid]["true_false"]:

+ correct += 1

+ accuracy = str(round(correct / total * 100, 2))

+ print(f"\nCorrect: {correct}, Total: {total}, Accuracy: {accuracy}%")

+

+ scores = {"average": {"accuracy": accuracy, "correct": correct, "total": total}}

+ ## [3] Calculate the fine-grained accuracy scores

+ # merge the 'metadata' attribute into the data

+ success_parse = True

+ try:

+ for pid in results:

+ cur_meta = results[pid]["metadata"]

+ cur_meta_dict = ast.literal_eval(cur_meta)

+ results[pid].update(cur_meta_dict)

+ except:

+ success_parse = False

+ # results[pid].update(results[pid].pop("metadata"))

+

+ # convert the data to a pandas DataFrame

+ df = pd.DataFrame(results).T

+

+ print("Number of test problems:", len(df))

+ # assert len(df) == 1000 # Important!!!

+

+ if success_parse:

+ # asign the target keys for evaluation

+ target_keys = [

+ "question_type",

+ "answer_type",

+ "language",

+ "source",

+ "category",

+ "task",

+ "context",

+ "grade",

+ "skills",

+ ]

+

+ for key in target_keys:

+ print(f"\nType: [{key}]")

+ # get the unique values of the key

+ if key == "skills":

+ # the value is a list

+ values = []

+ for i in range(len(df)):

+ values += df[key][i]

+ values = list(set(values))

+ else:

+ values = df[key].unique()

+ # calculate the accuracy for each value

+ scores[key] = {}

+ for value in values:

+ correct, total, acc = get_acc_with_contion(df, key, value)

+ if total > 0:

+ print(f"[{value}]: {acc}% ({correct}/{total})")

+ scores[key][value] = {"accuracy": acc, "correct": correct, "total": total}

+

+ # sort the scores by accuracy

+ scores[key] = dict(sorted(scores[key].items(), key=lambda item: float(item[1]["accuracy"]), reverse=True))

+

+ # save the scores

+ scores_file = os.path.join(self.default_output_path, f"{model.name}_mathvista_eval_score_{self.cur_datetime}.json")

+ print(f"MathVista Evaluator: Score results saved to {scores_file}...")

+ with open(scores_file, "w") as outfile:

+ json.dump(scores, outfile)

diff --git a/pipeline/benchmarks/datasets/mmbench.py b/pipeline/benchmarks/datasets/mmbench.py

new file mode 100644

index 00000000..b38e6590

--- /dev/null

+++ b/pipeline/benchmarks/datasets/mmbench.py

@@ -0,0 +1,126 @@

+import os

+import pandas as pd

+from tqdm import tqdm, trange

+from datasets import load_dataset

+from .base_eval_dataset import BaseEvalDataset

+import pytz

+import datetime

+

+utc_plus_8 = pytz.timezone("Asia/Singapore") # You can also use 'Asia/Shanghai', 'Asia/Taipei', etc.

+utc_now = pytz.utc.localize(datetime.datetime.utcnow())

+utc_plus_8_time = utc_now.astimezone(utc_plus_8)

+

+

+class MMBenchDataset(BaseEvalDataset):

+ def __init__(

+ self,

+ data_path: str = "Otter-AI/MMBench",

+ *,

+ sys_prompt="There are several options:",

+ version="20230712",

+ split="test",

+ cache_dir=None,

+ default_output_path="./logs/MMBench",

+ debug=False,

+ ):

+ super().__init__("MMBenchDataset", data_path)

+ self.version = str(version)

+ self.name_converter = {"dev": "validation", "test": "test"}

+ self.df = load_dataset(data_path, self.version, split=self.name_converter[split], cache_dir=cache_dir).to_pandas()

+ self.default_output_path = default_output_path

+ if os.path.exists(self.default_output_path) is False:

+ os.makedirs(self.default_output_path)

+ self.sys_prompt = sys_prompt

+ self.cur_datetime = utc_plus_8_time.strftime("%Y-%m-%d_%H-%M-%S")

+ self.debug = debug

+

+ def load_from_df(self, idx, key):

+ if key in self.df.columns:

+ value = self.df.loc[idx, key]

+ return value if pd.notna(value) else None

+ return None

+

+ def create_options_prompt(self, idx, option_candidate):

+ available_keys = set(self.df.columns) & set(option_candidate)

+ options = {cand: self.load_from_df(idx, cand) for cand in available_keys if self.load_from_df(idx, cand)}

+ sorted_options = dict(sorted(options.items()))

+ options_prompt = f"{self.sys_prompt}\n"

+ for key, item in sorted_options.items():

+ options_prompt += f"{key}. {item}\n"

+ return options_prompt.rstrip("\n"), sorted_options

+

+ def get_data(self, idx):

+ row = self.df.loc[idx]

+ option_candidate = ["A", "B", "C", "D", "E"]

+ options_prompt, options_dict = self.create_options_prompt(idx, option_candidate)

+

+ data = {

+ "img": row["image"],

+ "question": row["question"],

+ "answer": row.get("answer"),

+ "options": options_prompt,

+ "category": row["category"],

+ "l2-category": row["l2-category"],

+ "options_dict": options_dict,

+ "index": row["index"],

+ "hint": self.load_from_df(idx, "hint"),

+ "source": row["source"],

+ "split": row["split"],

+ }

+ return data

+

+ def query_batch(self, model, batch_data):

+ batch_data = list(map(self.get_data, batch_data))

+ batch_img = [data["img"] for data in batch_data]

+ batch_prompt = [f"{data['hint']} {data['question']} {data['options']}" if pd.notna(data["hint"]) else f"{data['question']} {data['options']}" for data in batch_data]

+ if len(batch_prompt) == 1:

+ batch_pred_answer = [model.generate(batch_prompt[0], batch_img[0])]

+ else:

+ batch_pred_answer = model.generate(batch_prompt, batch_img)

+ return [

+ {

+ "question": data["question"],

+ "answer": data["answer"],

+ **data["options_dict"],

+ "prediction": pred_answer,

+ "hint": data["hint"],

+ "source": data["source"],

+ "split": data["split"],

+ "category": data["category"],

+ "l2-category": data["l2-category"],

+ "index": data["index"],

+ }

+ for data, pred_answer in zip(batch_data, batch_pred_answer)

+ ]

+

+ def _evaluate(self, model, *, batch=1):

+ output_file = os.path.join(self.default_output_path, f"{model.name}_mmbench_eval_result_{self.cur_datetime}.xlsx")

+ results = []

+

+ for idx in tqdm(range(len(self.df))):

+ cur_data = self.get_data(idx)

+ cur_prompt = f"{cur_data['hint']} {cur_data['question']} {cur_data['options']}" if pd.notna(cur_data["hint"]) and cur_data["hint"] != "nan" else f"{cur_data['question']} {cur_data['options']}"

+ pred_answer = model.generate(cur_prompt, cur_data["img"])

+

+ if self.debug:

+ print(f"# Query: {cur_prompt}")

+ print(f"# Response: {pred_answer}")

+

+ result = {

+ "question": cur_data["question"],

+ "answer": cur_data["answer"],

+ **cur_data["options_dict"],

+ "prediction": pred_answer,

+ "hint": cur_data["hint"],

+ "source": cur_data["source"],

+ "split": cur_data["split"],

+ "category": cur_data["category"],

+ "l2-category": cur_data["l2-category"],

+ "index": cur_data["index"],

+ }

+ results.append(result)

+

+ df = pd.DataFrame(results)

+ with pd.ExcelWriter(output_file, engine="xlsxwriter") as writer:

+ df.to_excel(writer, index=False)

+ print(f"MMBench Evaluator: Result saved to {output_file}.")

diff --git a/pipeline/benchmarks/datasets/mme.py b/pipeline/benchmarks/datasets/mme.py

new file mode 100644

index 00000000..c69f9764

--- /dev/null

+++ b/pipeline/benchmarks/datasets/mme.py

@@ -0,0 +1,217 @@

+import base64

+import io

+from PIL import Image

+import json

+from sklearn.metrics import accuracy_score, precision_score, recall_score, confusion_matrix

+import os

+import numpy as np

+from datasets import load_dataset

+from typing import Union

+from .base_eval_dataset import BaseEvalDataset

+from tqdm import tqdm

+import datetime

+import pytz

+

+utc_plus_8 = pytz.timezone("Asia/Singapore") # You can also use 'Asia/Shanghai', 'Asia/Taipei', etc.

+utc_now = pytz.utc.localize(datetime.datetime.utcnow())

+utc_plus_8_time = utc_now.astimezone(utc_plus_8)

+

+eval_type_dict = {

+ "Perception": [

+ "existence",

+ "count",

+ "position",

+ "color",

+ "posters",

+ "celebrity",

+ "scene",

+ "landmark",

+ "artwork",

+ "ocr",

+ ],

+ "Cognition": ["commonsense", "numerical", "text", "code"],

+}

+

+

+class MMEDataset(BaseEvalDataset):

+ def decode_base64_to_image(self, base64_string):

+ image_data = base64.b64decode(base64_string)

+ image = Image.open(io.BytesIO(image_data))

+ return image

+

+ def __init__(

+ self,

+ data_path: str = "Otter-AI/MME",

+ *,

+ cache_dir: Union[str, None] = None,

+ default_output_path: str = "./logs/MME",

+ split: str = "test",

+ debug: bool = False,

+ ):

+ super().__init__("MMEDataset", data_path)

+

+ self.default_output_path = default_output_path

+ self.cur_datetime = utc_plus_8_time.strftime("%Y-%m-%d_%H-%M-%S")

+ self.data = load_dataset(data_path, split=split, cache_dir=cache_dir)

+ self.debug = debug

+

+ self.category_data = {}

+ # for idx in range(len(self.ids)):

+ for item in tqdm(self.data, desc="Loading data"):

+ id = item["id"]

+ category = id.split("_")[0].lower()

+ question = item["instruction"]

+ answer = item["answer"]

+ image_id = item["image_ids"][0]

+ image = item["images"][0]

+

+ data = {"question": question, "answer": answer, "image": image}

+

+ if category in eval_type_dict["Cognition"]:

+ eval_type = "Cognition"

+ elif category in eval_type_dict["Perception"]:

+ eval_type = "Perception"

+ else:

+ raise ValueError(f"Unknown category {category}")

+

+ if eval_type not in self.category_data:

+ self.category_data[eval_type] = {}

+

+ if category not in self.category_data[eval_type]:

+ self.category_data[eval_type][category] = {}

+

+ if image_id not in self.category_data[eval_type][category]:

+ self.category_data[eval_type][category][image_id] = []

+

+ self.category_data[eval_type][category][image_id].append(data)

+

+ def parse_pred_ans(self, pred_ans):

+ pred_ans = pred_ans.lower().strip().replace(".", "")

+ pred_label = None

+ if pred_ans in ["yes", "no"]:

+ pred_label = pred_ans

+ else:

+ prefix_pred_ans = pred_ans[:4]

+ if "yes" in prefix_pred_ans:

+ pred_label = "yes"

+ elif "no" in prefix_pred_ans:

+ pred_label = "no"

+ else:

+ pred_label = "other"

+ return pred_label

+

+ def compute_metric(self, gts, preds):

+ assert len(gts) == len(preds)

+

+ label_map = {

+ "yes": 1,

+ "no": 0,

+ "other": -1,

+ }

+

+ gts = [label_map[x] for x in gts]

+ preds = [label_map[x] for x in preds]

+

+ acc = accuracy_score(gts, preds)

+

+ clean_gts = []

+ clean_preds = []

+ other_num = 0

+ for gt, pred in zip(gts, preds):

+ if pred == -1:

+ other_num += 1

+ continue

+ clean_gts.append(gt)

+ clean_preds.append(pred)

+

+ conf_mat = confusion_matrix(clean_gts, clean_preds, labels=[1, 0])

+ precision = precision_score(clean_gts, clean_preds, average="binary")

+ recall = recall_score(clean_gts, clean_preds, average="binary")

+ tp, fn = conf_mat[0]

+ fp, tn = conf_mat[1]

+

+ metric_dict = dict()

+ metric_dict = {

+ "TP": tp,

+ "FN": fn,

+ "TN": tn,

+ "FP": fp,

+ "precision": precision,

+ "recall": recall,

+ "other_num": other_num,

+ "acc": acc,

+ }

+

+ for key, value in metric_dict.items():

+ if isinstance(value, np.int64):

+ metric_dict[key] = int(value)

+

+ return metric_dict

+

+ def _evaluate(self, model):

+ model_score_dict = {}

+

+ self.default_output_path = os.path.join(self.default_output_path, f"{model.name}_{self.cur_datetime}")

+ if not os.path.exists(self.default_output_path):

+ os.makedirs(self.default_output_path)

+

+ for eval_type in self.category_data.keys():

+ print("===========", eval_type, "===========")

+

+ scores = 0

+ task_score_dict = {}

+ for task_name in tqdm(self.category_data[eval_type].keys(), desc=f"Evaluating {eval_type}"):

+ img_num = len(self.category_data[eval_type][task_name])

+ task_other_ans_num = 0

+ task_score = 0

+ acc_plus_correct_num = 0

+ gts = []

+ preds = []

+ for image_pair in tqdm(self.category_data[eval_type][task_name].values(), desc=f"Evaluating {eval_type} {task_name}"):

+ assert len(image_pair) == 2

+ img_correct_num = 0

+

+ for item in image_pair:

+ question = item["question"]

+ image = item["image"]

+ gt_ans = item["answer"].lower().strip().replace(".", "")

+ response = model.generate(question, image)

+ if self.debug:

+ print(f"\n# Query: {question}")

+ print(f"\n# Response: {response}")

+ pred_ans = self.parse_pred_ans(response)

+

+ assert gt_ans in ["yes", "no"]

+ assert pred_ans in ["yes", "no", "other"]

+

+ gts.append(gt_ans)

+ preds.append(pred_ans)

+

+ if gt_ans == pred_ans:

+ img_correct_num += 1

+

+ if pred_ans not in ["yes", "no"]:

+ task_other_ans_num += 1

+

+ if img_correct_num == 2:

+ acc_plus_correct_num += 1

+

+ # cal TP precision acc, etc.

+ metric_dict = self.compute_metric(gts, preds)

+ acc_plus = acc_plus_correct_num / img_num

+ metric_dict["acc_plus"] = acc_plus

+

+ for k, v in metric_dict.items():

+ if k in ["acc", "acc_plus"]:

+ task_score += v * 100

+

+ task_score_dict[task_name] = task_score

+ scores += task_score

+

+ output_path = os.path.join(self.default_output_path, f"{task_name}.json")

+ with open(output_path, "w") as f:

+ json.dump(metric_dict, f)

+

+ print(f"total score: {scores}")

+ for task_name, score in task_score_dict.items():

+ print(f"\t {task_name} score: {score}")

diff --git a/pipeline/benchmarks/datasets/mmvet.py b/pipeline/benchmarks/datasets/mmvet.py

new file mode 100644

index 00000000..d27c01d8

--- /dev/null

+++ b/pipeline/benchmarks/datasets/mmvet.py

@@ -0,0 +1,303 @@

+import base64

+import os

+import pandas as pd

+from PIL import Image

+from tqdm import tqdm

+from datasets import load_dataset

+from .base_eval_dataset import BaseEvalDataset

+from collections import Counter

+from typing import Union

+import numpy as np

+from openai import OpenAI

+import time

+import json

+import pytz

+import datetime

+from Levenshtein import distance

+

+utc_plus_8 = pytz.timezone("Asia/Singapore") # You can also use 'Asia/Shanghai', 'Asia/Taipei', etc.

+utc_now = pytz.utc.localize(datetime.datetime.utcnow())

+utc_plus_8_time = utc_now.astimezone(utc_plus_8)

+

+MM_VET_PROMPT = """Compare the ground truth and prediction from AI models, to give a correctness score for the prediction.

in the ground truth means it is totally right only when all elements in the ground truth are present in the prediction, and means it is totally right when any one element in the ground truth is present in the prediction. The correctness score is 0.0 (totally wrong), 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, or 1.0 (totally right). Just complete the last space of the correctness score.

+

+Question | Ground truth | Prediction | Correctness

+--- | --- | --- | ---

+What is x in the equation? | -1 -5 | x = 3 | 0.0

+What is x in the equation? | -1 -5 | x = -1 | 0.5

+What is x in the equation? | -1 -5 | x = -5 | 0.5

+What is x in the equation? | -1 -5 | x = -5 or 5 | 0.5

+What is x in the equation? | -1 -5 | x = -1 or x = -5 | 1.0

+Can you explain this meme? | This meme is poking fun at the fact that the names of the countries Iceland and Greenland are misleading. Despite its name, Iceland is known for its beautiful green landscapes, while Greenland is mostly covered in ice and snow. The meme is saying that the person has trust issues because the names of these countries do not accurately represent their landscapes. | The meme talks about Iceland and Greenland. It's pointing out that despite their names, Iceland is not very icy and Greenland isn't very green. | 0.4

+Can you explain this meme? | This meme is poking fun at the fact that the names of the countries Iceland and Greenland are misleading. Despite its name, Iceland is known for its beautiful green landscapes, while Greenland is mostly covered in ice and snow. The meme is saying that the person has trust issues because the names of these countries do not accurately represent their landscapes. | The meme is using humor to point out the misleading nature of Iceland's and Greenland's names. Iceland, despite its name, has lush green landscapes while Greenland is mostly covered in ice and snow. The text 'This is why I have trust issues' is a playful way to suggest that these contradictions can lead to distrust or confusion. The humor in this meme is derived from the unexpected contrast between the names of the countries and their actual physical characteristics. | 1.0

+"""

+

+

+class MMVetDataset(BaseEvalDataset):

+ def __init__(

+ self,

+ data_path: str = "Otter-AI/MMVet",

+ gpt_model: str = "gpt-4-0613",

+ *,

+ api_key: str,

+ split: str = "test",

+ cache_dir: Union[str, None] = None,

+ default_output_path: str = "./logs/MMVet",

+ num_run: int = 1,

+ prompt: str = MM_VET_PROMPT,

+ decimail_places: int = 1, # number of decimal places to round to

+ debug: bool = False,

+ ):

+ super().__init__("MMVetDataset", data_path)

+ self.df = load_dataset(data_path, split=split, cache_dir=cache_dir).to_pandas()

+ self.default_output_path = default_output_path

+ self.prompt = prompt

+ self.gpt_model = gpt_model

+ self.num_run = num_run

+ self.decimal_places = decimail_places

+ self.api_key = api_key

+ self.cur_datetime = utc_plus_8_time.strftime("%Y-%m-%d_%H-%M-%S")

+ self.debug = debug

+ self.prepare()

+ self.client = OpenAI(api_key=api_key)

+

+ def prepare(self):

+ self.counter = Counter()

+ self.cap_set_list = []

+ self.cap_set_counter = []

+ self.len_data = 0

+ self.caps = {}

+

+ for index, row in self.df.iterrows():

+ self.caps[row["id"]] = row["capability"]

+

+ for cap in self.df["capability"]:

+ cap = set(cap)

+ self.counter.update(cap)

+ if cap not in self.cap_set_list:

+ self.cap_set_list.append(cap)

+ self.cap_set_counter.append(1)

+ else:

+ self.cap_set_counter[self.cap_set_list.index(cap)] += 1

+

+ self.len_data += 1

+

+ sorted_list = self.counter.most_common()

+ self.columns = [k for k, v in sorted_list]

+ self.columns.append("total")

+ self.columns.append("std")

+ self.columns.append("runs")

+ self.result1 = pd.DataFrame(columns=self.columns)

+

+ cap_set_sorted_indices = np.argsort(-np.array(self.cap_set_counter))

+ new_cap_set_list = []

+ new_cap_set_counter = []

+ for index in cap_set_sorted_indices:

+ new_cap_set_list.append(self.cap_set_list[index])

+ new_cap_set_counter.append(self.cap_set_counter[index])

+

+ self.cap_set_list = new_cap_set_list

+ self.cap_set_counter = new_cap_set_counter

+ self.cap_set_names = ["_".join(list(cap_set)) for cap_set in self.cap_set_list]

+

+ self.columns2 = self.cap_set_names

+ self.columns2.append("total")

+ self.columns2.append("std")

+ self.columns2.append("runs")

+ self.result2 = pd.DataFrame(columns=self.columns2)

+

+ def get_output_file_name(self, model, *, output_path: str = None, num_run: int = 1) -> str:

+ if output_path is None:

+ result_path = self.default_output_path

+ else:

+ result_path = output_path

+ if not os.path.exists(result_path):

+ os.makedirs(result_path)

+ model_results_file = os.path.join(result_path, f"{model.name}.json")

+ grade_file = f"{model.name}-{self.gpt_model}-grade-{num_run}runs-{self.cur_datetime}.json"

+ grade_file = os.path.join(result_path, grade_file)

+ cap_score_file = f"{model.name}-{self.gpt_model}-cap-score-{num_run}runs-{self.cur_datetime}.csv"

+ cap_score_file = os.path.join(result_path, cap_score_file)

+ cap_int_score_file = f"{model.name}-{self.gpt_model}-cap-int-score-{num_run}runs-{self.cur_datetime}.csv"

+ cap_int_score_file = os.path.join(result_path, cap_int_score_file)

+ return model_results_file, grade_file, cap_score_file, cap_int_score_file

+

+ def _evaluate(self, model):

+ model_results_file, grade_file, cap_score_file, cap_int_score_file = self.get_output_file_name(model)

+

+ if os.path.exists(grade_file):

+ with open(grade_file, "r") as f:

+ grade_results = json.load(f)

+ else:

+ grade_results = {}

+

+ def need_more_runs():

+ need_more_runs = False

+ if len(grade_results) > 0:

+ for k, v in grade_results.items():

+ if len(v["score"]) < self.num_run:

+ need_more_runs = True

+ break

+ return need_more_runs or len(grade_results) < self.len_data

+

+ print(f"grade results saved to {grade_file}")

+ while need_more_runs():

+ for j in range(self.num_run):

+ print(f"eval run {j}")

+ for _, line in tqdm(self.df.iterrows(), total=len(self.df)):

+ id = line["id"]

+ # if sub_set is not None and id not in sub_set:

+ # continue

+ if id in grade_results and len(grade_results[id]["score"]) >= (j + 1):

+ continue

+

+ model_pred = model.generate(line["instruction"], line["images"][0])

+ if self.debug:

+ print(f"# Query: {line['instruction']}")

+ print(f"# Response: {model_pred}")

+ print(f"# Ground Truth: {line['answer']}")

+

+ question = (

+ self.prompt

+ + "\n"

+ + " | ".join(

+ [

+ line["instruction"],

+ line["answer"].replace("", " ").replace("", " "),

+ model_pred,

+ "",

+ ]

+ )

+ )

+ messages = [

+ {"role": "user", "content": question},

+ ]

+

+ if id not in grade_results:

+ sample_grade = {"model": [], "content": [], "score": []}

+ else:

+ sample_grade = grade_results[id]

+

+ grade_sample_run_complete = False

+ temperature = 0.0

+

+ while not grade_sample_run_complete:

+ try:

+ response = self.client.chat.completions.create(model=self.gpt_model, max_tokens=3, temperature=temperature, messages=messages, timeout=15)

+ content = response["choices"][0]["message"]["content"]

+ flag = True

+ try_time = 1

+ while flag:

+ try:

+ content = content.split(" ")[0].strip()

+ score = float(content)

+ if score > 1.0 or score < 0.0:

+ assert False

+ flag = False

+ except:

+ question = (

+ self.prompt

+ + "\n"

+ + " | ".join(

+ [

+ line["instruction"],

+ line["answer"].replace("", " ").replace("", " "),

+ model_pred,

+ "",

+ ]

+ )

+ + "\nPredict the correctness of the answer (digit): "

+ )

+ messages = [

+ {"role": "user", "content": question},

+ ]

+ response = self.client.chat.completions.create(model=self.gpt_model, max_tokens=3, temperature=temperature, messages=messages, timeout=15)

+ content = response["choices"][0]["message"]["content"]

+ try_time += 1

+ temperature += 0.5

+ print(f"{id} try {try_time} times")

+ print(content)

+ if try_time > 5:

+ score = 0.0

+ flag = False

+ grade_sample_run_complete = True

+ except Exception as e:

+ # gpt4 may have token rate limit

+ print(e)

+ print("sleep 15s")

+ time.sleep(15)

+

+ if len(sample_grade["model"]) >= j + 1:

+ sample_grade["model"][j] = response["model"]

+ sample_grade["content"][j] = content

+ sample_grade["score"][j] = score

+ else:

+ sample_grade["model"].append(response["model"])

+ sample_grade["content"].append(content)

+ sample_grade["score"].append(score)

+ sample_grade["query"] = line["instruction"]

+ sample_grade["response"] = model_pred

+ sample_grade["ground_truth"] = line["answer"]

+ grade_results[id] = sample_grade

+

+ with open(grade_file, "w") as f:

+ json.dump(grade_results, f, indent=4)

+

+ cap_socres = {k: [0.0] * self.num_run for k in self.columns[:-2]}

+ self.counter["total"] = self.len_data

+

+ cap_socres2 = {k: [0.0] * self.num_run for k in self.columns2[:-2]}

+ counter2 = {self.columns2[i]: self.cap_set_counter[i] for i in range(len(self.cap_set_counter))}

+ counter2["total"] = self.len_data

+

+ for k, v in grade_results.items():

+ # if sub_set is not None and k not in sub_set:

+ # continue

+ for i in range(self.num_run):

+ score = v["score"][i]

+ caps = set(self.caps[k])

+ for c in caps:

+ cap_socres[c][i] += score

+

+ cap_socres["total"][i] += score

+

+ index = self.cap_set_list.index(caps)

+ cap_socres2[self.cap_set_names[index]][i] += score

+ cap_socres2["total"][i] += score

+

+ for k, v in cap_socres.items():

+ cap_socres[k] = np.array(v) / self.counter[k] * 100

+

+ std = round(cap_socres["total"].std(), self.decimal_places)

+ total_copy = cap_socres["total"].copy()

+ runs = str(list(np.round(total_copy, self.decimal_places)))

+

+ for k, v in cap_socres.items():

+ cap_socres[k] = round(v.mean(), self.decimal_places)

+

+ cap_socres["std"] = std

+ cap_socres["runs"] = runs

+ self.result1.loc[model.name] = cap_socres

+

+ for k, v in cap_socres2.items():

+ cap_socres2[k] = round(np.mean(np.array(v) / counter2[k] * 100), self.decimal_places)

+ cap_socres2["std"] = std

+ cap_socres2["runs"] = runs

+ self.result2.loc[model.name] = cap_socres2

+

+ self.result1.to_csv(cap_score_file)

+ self.result2.to_csv(cap_int_score_file)

+

+ print(f"cap score saved to {cap_score_file}")

+ print(f"cap int score saved to {cap_int_score_file}")

+ print("=" * 20)

+ print(f"cap score:")

+ print(self.result1)

+ print("=" * 20)

+ print(f"cap int score:")

+ print(self.result2)

+ print("=" * 20)

+

+

+if __name__ == "__main__":

+ data = MMVetDataset(api_key=None, cache_dir="/data/pufanyi/cache")

diff --git a/pipeline/benchmarks/datasets/pope.py b/pipeline/benchmarks/datasets/pope.py

new file mode 100644

index 00000000..bd1d463c

--- /dev/null

+++ b/pipeline/benchmarks/datasets/pope.py

@@ -0,0 +1,167 @@

+import os

+import datetime

+from tqdm import tqdm, trange

+from .base_eval_dataset import BaseEvalDataset

+from datasets import load_dataset

+import json

+from typing import Union

+

+

+class PopeDataset(BaseEvalDataset):

+ def __init__(

+ self,

+ data_path="Otter-AI/POPE",

+ split="test",

+ default_output_path="./logs/POPE",

+ cache_dir=None,

+ batch_size=1,

+ ):

+ super().__init__("PopeDataset", data_path, max_batch_size=batch_size)

+ print("Loading dataset from", data_path)

+ self.data = load_dataset(data_path, split=split, cache_dir=cache_dir)

+ print("Dataset loaded")

+ self.default_output_path = default_output_path

+ if not os.path.exists(default_output_path):

+ os.makedirs(default_output_path)

+ self.batch_gen_size = batch_size

+

+ def parse_pred(self, text):

+ if text.find(".") != -1:

+ text = text.split(".")[0]

+

+ text = text.replace(",", "").lower()

+ words = text.split(" ")

+

+ if "not" in words or "no" in words:

+ return "no"

+ else:

+ return "yes"

+