
Tests at Dublin Site

This page presents performance results for the 10G and 1G Reference NICs, measured at the Xilinx Ireland (Dublin) site.

This control test measures the performance of the Myricom Myri-10G 10G-PCIE2-8C2-2S network interface cards (pictured below) as installed in our test hosts.

- The test setup uses two Core-i7 Linux hosts, each with an identical Myricom PCIe NIC;

- The NICs are connected directly via a GORE SFP+ cable between their lower SFP+ ports as shown below:

- Host A has the fixed IP address 1.1.1.1 and runs the netserver component of netperf;

- Host B has the fixed IP address 1.1.1.2 and runs the netserver component of netperf;

- For each test, the tcp_stream_script and udp_stream_script netperf test scripts (located in the tools/scripts/ directory) were used;

- Four tests were run in each direction: TCP and UDP, each with and without forced CPU affinity (i.e. forcing the netperf processes to run on CPU 1 with `-T1,1`);

- The test duration was reduced from 60 seconds to 10 seconds, giving a total test time of approximately 70 minutes.

Host A (1.1.1.1) transmitting to Host B (1.1.1.2):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.2 CPU | nicresultsdublin_myri_a_tx_myri_b_rx_10g_tcp_default.txt |
| udp_stream_script 1.1.1.2 CPU | nicresultsdublin_myri_a_tx_myri_b_rx_10g_udp_default.txt |
| tcp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_myri_a_tx_myri_b_rx_10g_tcp_affinity.txt |
| udp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_myri_a_tx_myri_b_rx_10g_udp_affinity.txt |

Host B (1.1.1.2) transmitting to Host A (1.1.1.1):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.1 CPU | nicresultsdublin_myri_a_rx_myri_b_tx_10g_tcp_default.txt |
| udp_stream_script 1.1.1.1 CPU | nicresultsdublin_myri_a_rx_myri_b_tx_10g_udp_default.txt |
| tcp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_myri_a_rx_myri_b_tx_10g_tcp_affinity.txt |
| udp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_myri_a_rx_myri_b_tx_10g_udp_affinity.txt |

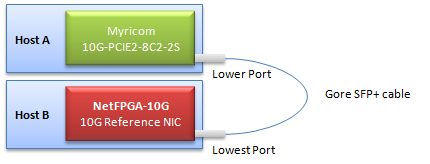

This test measures the performance of the NetFPGA-10G (10G Reference NIC) connected directly to a Myricom Myri-10G 10G-PCIE2-8C2-2S NIC.

- The test setup uses two Core-i7 Linux hosts, where Host A is fitted with a Myricom PCIe NIC and Host B is fitted with the NetFPGA-10G card, programmed with the 10G Reference NIC. Host B uses the NetFPGA-10G Reference NIC driver. The NICs are connected directly via a GORE SFP+ cable between their lowermost SFP+ ports as shown below:

- Host A has the fixed IP address 1.1.1.1 and runs the netserver component of netperf;

- Host B has the fixed IP address 1.1.1.2 and runs the netserver component of netperf;

- For each test, the tcp_stream_script and udp_stream_script netperf test scripts (located in the tools/scripts/ directory) were used;

- Four tests were run in each direction: TCP and UDP, each with and without forced CPU affinity (i.e. forcing the netperf processes to run on CPU 1 with `-T1,1`);

- The test duration was reduced from 60 seconds to 10 seconds, giving a total test time of approximately 70 minutes.

Myricom NIC (Host A, 1.1.1.1) transmitting to NetFPGA-10G (Host B, 1.1.1.2):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.2 CPU | nicresultsdublin_myri_tx_nf10_rx_10g_tcp_default.txt |
| udp_stream_script 1.1.1.2 CPU | nicresultsdublin_myri_tx_nf10_rx_10g_udp_default.txt |
| tcp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_myri_tx_nf10_rx_10g_tcp_affinity.txt |
| udp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_myri_tx_nf10_rx_10g_udp_affinity.txt |

NetFPGA-10G (Host B, 1.1.1.2) transmitting to Myricom NIC (Host A, 1.1.1.1):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.1 CPU | nicresultsdublin_myri_rx_nf10_tx_10g_tcp_default.txt |
| udp_stream_script 1.1.1.1 CPU | nicresultsdublin_myri_rx_nf10_tx_10g_udp_default.txt |
| tcp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_myri_rx_nf10_tx_10g_tcp_affinity.txt |
| udp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_myri_rx_nf10_tx_10g_udp_affinity.txt |

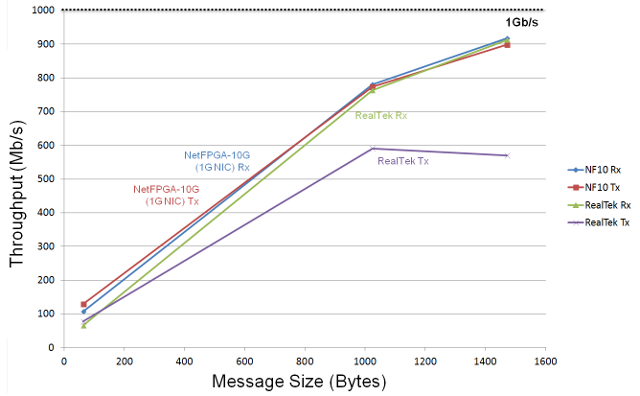

The graph below shows the throughput recorded in the netperf UDP stream tests. The X axis shows message size (bytes) and the Y axis shows the average throughput (Mb/s). The Myricom Myri-10G back-to-back results are shown in green and purple; the results for the NetFPGA-10G 10G Reference NIC connected back-to-back with the Myricom NIC are shown in blue and red. The NetFPGA-10G NIC reaches a maximum throughput of around 1500 Mb/s in this test, while the back-to-back Myricom tests record at least 3x that bandwidth (around 4500 Mb/s). Even 4500 Mb/s is relatively poor for a 10G NIC: Myricom's own reported results indicate that it is significantly below the maximum achievable rate for this card.

A series of latency measurements was also performed on the Myricom Myri-10G NIC and the NetFPGA-10G Reference NIC. For these tests, a small C program opens a UDP socket and sends a packet down through the network stack, out of a network interface port, and back up the stack to the application. The packets were timestamped with a libpcap-based method on the outward and return paths (on entry to and exit from the NIC driver). On the Myricom NIC, the two 10G SFP+ ports were connected to each other in a cable loop; on the NetFPGA-10G card, interfaces 0 and 1 were connected in a loop. The difference between the send and receive timestamps was recorded for a series of 600 consecutive measurements. The mean of these measurements gives a crude estimate of relative NIC latency, covering the driver, the PCIe interface and the NIC hardware itself. The graph below plots these measurements for the Myricom 10G NIC and the NetFPGA-10G Reference NIC.

The average latency measured for the NetFPGA-10G is approximately 180 µs. This is the round-trip time down and back up through the driver, PCIe interface, NIC and SFP+ ports. There are regular bursts of increased latency that do not appear to be related to other activity on the machine.

The Myricom 10G NIC achieves an average latency of around 30 µs in these tests, measured over the same round trip down and back up through the Myricom driver, PCIe interface, NIC and SFP+ ports.

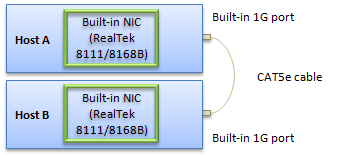

This control test measures the performance of the RealTek RTL8111/8168B NICs built into our test hosts.

- The test setup uses two Core-i7 Linux hosts, where Hosts A and B both have on-board RealTek RTL8111/8168B Gigabit Ethernet NICs (rev 03). Both machines use the RealTek NIC driver included in the Fedora 14 Linux kernel. The NICs are connected directly via a Category 5e Ethernet patch cable as shown below:

- Host A has the fixed IP address 1.1.1.1 and runs the netserver component of netperf;

- Host B has the fixed IP address 1.1.1.2 and runs the netserver component of netperf;

- For each test, the tcp_stream_script and udp_stream_script netperf test scripts (located in the tools/scripts/ directory) were used;

- Four tests were run in each direction: TCP and UDP, each with and without forced CPU affinity (i.e. forcing the netperf processes to run on CPU 1 with `-T1,1`);

- The test duration was reduced from 60 seconds to 10 seconds, giving a total test time of approximately 70 minutes.

Host A (1.1.1.1) transmitting to Host B (1.1.1.2):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.2 CPU | nicresultsdublin_rltk_a_tx_rltk_b_rx_1g_tcp_default.txt |
| udp_stream_script 1.1.1.2 CPU | nicresultsdublin_rltk_a_tx_rltk_b_rx_1g_udp_default.txt |
| tcp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_rltk_a_tx_rltk_b_rx_1g_tcp_affinity.txt |
| udp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_rltk_a_tx_rltk_b_rx_1g_udp_affinity.txt |

Host B (1.1.1.2) transmitting to Host A (1.1.1.1):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.1 CPU | nicresultsdublin_rltk_a_rx_rltk_b_tx_1g_tcp_default.txt |
| udp_stream_script 1.1.1.1 CPU | nicresultsdublin_rltk_a_rx_rltk_b_tx_1g_udp_default.txt |
| tcp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_rltk_a_rx_rltk_b_tx_1g_tcp_affinity.txt |
| udp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_rltk_a_rx_rltk_b_tx_1g_udp_affinity.txt |

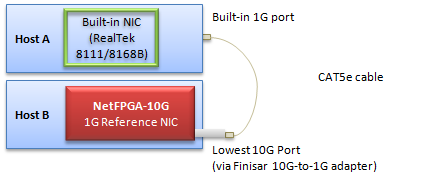

This test measures the performance of the NetFPGA-10G (1G Reference NIC) connected to the RealTek RTL8111/8168B 1G NIC built into our test hosts.

- The test setup uses two Core-i7 Linux hosts, where Host A has a built-in RealTek RTL8111/8168B NIC and Host B is fitted with the NetFPGA-10G card, programmed with the 1G Reference NIC. Host B uses the NetFPGA-10G Reference NIC driver. The NICs are connected directly via a Category 5e Ethernet patch cable as shown below:

- Host A has the fixed IP address 1.1.1.1 and runs the netserver component of netperf;

- Host B has the fixed IP address 1.1.1.2 and runs the netserver component of netperf;

- For each test, the tcp_stream_script and udp_stream_script netperf test scripts (located in the tools/scripts/ directory) were used;

- Four tests were run in each direction: TCP and UDP, each with and without forced CPU affinity (i.e. forcing the netperf processes to run on CPU 1 with `-T1,1`);

- The test duration was reduced from 60 seconds to 10 seconds, giving a total test time of approximately 70 minutes.

RealTek NIC (Host A, 1.1.1.1) transmitting to NetFPGA-10G (Host B, 1.1.1.2):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.2 CPU | nicresultsdublin_rltk_tx_nf10_rx_1g_tcp_default.txt |
| udp_stream_script 1.1.1.2 CPU | nicresultsdublin_rltk_tx_nf10_rx_1g_udp_default.txt |
| tcp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_rltk_tx_nf10_rx_1g_tcp_affinity.txt |
| udp_stream_script 1.1.1.2 CPU -T1,1 | nicresultsdublin_rltk_tx_nf10_rx_1g_udp_affinity.txt |

NetFPGA-10G (Host B, 1.1.1.2) transmitting to RealTek NIC (Host A, 1.1.1.1):

| Test | Result |
|---|---|
| tcp_stream_script 1.1.1.1 CPU | nicresultsdublin_rltk_rx_nf10_tx_1g_tcp_default.txt |
| udp_stream_script 1.1.1.1 CPU | nicresultsdublin_rltk_rx_nf10_tx_1g_udp_default.txt |
| tcp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_rltk_rx_nf10_tx_1g_tcp_affinity.txt |
| udp_stream_script 1.1.1.1 CPU -T1,1 | nicresultsdublin_rltk_rx_nf10_tx_1g_udp_affinity.txt |

The graph below shows the throughput recorded in the netperf UDP stream tests in 1G mode. The X axis shows message size (bytes) and the Y axis shows the average throughput (Mb/s). The RealTek 1G NIC back-to-back results are shown in green and purple; the results for the NetFPGA-10G 1G Reference NIC connected back-to-back with the RealTek 1G NIC are shown in blue and red. The NetFPGA-10G 1G Reference NIC reaches a maximum throughput of around 900 Mb/s in this test, while the back-to-back RealTek tests record slightly lower throughput.