# Charset Detection, for Everyone 👋


The Real First Universal Charset Detector



> Motivated by `chardet`, I'm trying to resolve the issue by taking a new approach.
> All IANA character set names for which the Python core library provides codecs are supported.


This project offers you an alternative to **Universal Charset Encoding Detector**, also known as **Chardet**.

| Feature | [Chardet](https://github.com/chardet/chardet) | Charset Normalizer | [cChardet](https://github.com/PyYoshi/cChardet) |
|--------------------------------------------------|:---------------------------------------------:|:--------------------------------------------------------------------------------------------------:|:-----------------------------------------------:|
| `Fast` | ❌ | ✅ | ✅ |
| `Universal**` | ❌ | ✅ | ❌ |
| `Reliable` **without** distinguishable standards | ❌ | ✅ | ✅ |
| `Reliable` **with** distinguishable standards | ✅ | ✅ | ✅ |
| `License` | LGPL-2.1 _restrictive_ | MIT | MPL-1.1 _restrictive_ |
| `Native Python` | ✅ | ✅ | ❌ |
| `Detect spoken language` | ❌ | ✅ | N/A |
| `UnicodeDecodeError Safety` | ❌ | ✅ | ❌ |
| `Whl Size (min)` | 193.6 kB | 42 kB | ~200 kB |
| `Supported Encoding` | 33 | 🎉 [99](https://charset-normalizer.readthedocs.io/en/latest/user/support.html#supported-encodings) | 40 |
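The `UnicodeDecodeError Safety` row can be illustrated with a short sketch. It assumes charset-normalizer 3.x is installed and uses only `from_bytes()`, `best()`, the `encoding` property and `str()` on the resulting match. The French sample sentence is made up for illustration. The code page that wins (`cp1252`, `latin_1`, ...) is not guaranteed and may differ between versions.

```python
import codecs

from charset_normalizer import from_bytes

# Made-up French sample, encoded with the legacy Windows-1252 code page.
# Repeated so the detector has a reasonable amount of content to work with.
payload = ("Les élèves ont déjà visité le château près de la forêt. " * 8).encode("cp1252")

# A strict UTF-8 decode fails: 0xE9 ("é") is not followed by UTF-8 continuation bytes.
try:
    payload.decode("utf_8")
    naive_ok = True
except UnicodeDecodeError:
    naive_ok = False

# from_bytes() only keeps candidate encodings that can decode the payload,
# then ranks them; best() returns None when nothing fits.
best = from_bytes(payload).best()

if best is not None:
    text = str(best)  # decoded text, produced without raising
    print(codecs.lookup(best.encoding).name, text[:30])
```

The caller never has to wrap the decode in `try`/`except`. If no table fits, the result is `None` rather than an exception.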


Did you get here because of the logs? See [https://charset-normalizer.readthedocs.io/en/latest/user/miscellaneous.html](https://charset-normalizer.readthedocs.io/en/latest/user/miscellaneous.html)

## ⚡ Performance

This package offers better performance than its counterpart Chardet. Here are some numbers.

| Package | Accuracy | Mean per file | Files per second (est.) |
|-----------------------------------------------|:--------:|:-------------:|:-----------------------:|
| [chardet](https://github.com/chardet/chardet) | 86 % | 200 ms | 5 |
| charset-normalizer | **98 %** | **10 ms** | 100 |

| Package | 99th percentile | 95th percentile | 50th percentile |
|-----------------------------------------------|:---------------:|:---------------:|:---------------:|
| [chardet](https://github.com/chardet/chardet) | 1200 ms | 287 ms | 23 ms |
| charset-normalizer | 100 ms | 50 ms | 5 ms |

Chardet's performance on larger files (1 MB+) is very poor. Expect a huge difference on large payloads.

> Stats are generated using 400+ files with default parameters. For more details on the files used, see the GHA workflows.
> And yes, these results might change at any time. The dataset can be updated to include more files.
> The actual delays heavily depend on your CPU capabilities. The factors should remain the same.
> Keep in mind that the stats are generous and that Chardet's accuracy vs ours is measured using Chardet's initial capability
> (e.g. supported encodings). Challenge them if you want.

## ✨ Installation

Using pip:

```sh
pip install charset-normalizer -U
```

## 🚀 Basic Usage

### CLI
This package comes with a CLI.

```
usage: normalizer [-h] [-v] [-a] [-n] [-m] [-r] [-f] [-t THRESHOLD]
                  file [file ...]

The Real First Universal Charset Detector. Discover originating encoding used
on text file. Normalize text to unicode.

positional arguments:
  files                 File(s) to be analysed

optional arguments:
  -h, --help            show this help message and exit
  -v, --verbose         Display complementary information about file if any.
                        Stdout will contain logs about the detection process.
  -a, --with-alternative
                        Output complementary possibilities if any. Top-level
                        JSON WILL be a list.
  -n, --normalize       Permit to normalize input file. If not set, program
                        does not write anything.
  -m, --minimal         Only output the charset detected to STDOUT. Disabling
                        JSON output.
  -r, --replace         Replace file when trying to normalize it instead of
                        creating a new one.
  -f, --force           Replace file without asking if you are sure, use this
                        flag with caution.
  -t THRESHOLD, --threshold THRESHOLD
                        Define a custom maximum amount of chaos allowed in
                        decoded content. 0. <= chaos <= 1.
  --version             Show version information and exit.
```

```bash
normalizer ./data/sample.1.fr.srt
```

or

```bash
python -m charset_normalizer ./data/sample.1.fr.srt
```

🎉 Since version 1.4.0, the CLI produces easily usable stdout results in JSON format.

```json
{
    "path": "/home/default/projects/charset_normalizer/data/sample.1.fr.srt",
    "encoding": "cp1252",
    "encoding_aliases": [
        "1252",
        "windows_1252"
    ],
    "alternative_encodings": [
        "cp1254",
        "cp1256",
        "cp1258",
        "iso8859_14",
        "iso8859_15",
        "iso8859_16",
        "iso8859_3",
        "iso8859_9",
        "latin_1",
        "mbcs"
    ],
    "language": "French",
    "alphabets": [
        "Basic Latin",
        "Latin-1 Supplement"
    ],
    "has_sig_or_bom": false,
    "chaos": 0.149,
    "coherence": 97.152,
    "unicode_path": null,
    "is_preferred": true
}
```

### Python
*Just print out normalized text*
```python
from charset_normalizer import from_path

results = from_path('./my_subtitle.srt')

# best() returns None if no suitable encoding was found
print(str(results.best()))
```

*Upgrade your code without effort*
```python
from charset_normalizer import detect
```

The above code will behave the same as **chardet**.
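The sketch below goes beyond `from_path`. It runs `from_bytes()` and the chardet-compatible `detect()` on an in-memory payload. It assumes charset-normalizer 3.x. The Bulgarian sample string is arbitrary. The `confidence` value it prints is informative only.

```python
import codecs

from charset_normalizer import detect, from_bytes

# Arbitrary UTF-8 encoded Bulgarian sample text.
text = "Всеки човек има право на образование. Образованието трябва да бъде безплатно."
payload = text.encode("utf_8")

# from_bytes() works on raw bytes and returns a CharsetMatches container;
# best() gives the top-ranked CharsetMatch, or None if nothing fits.
best_guess = from_bytes(payload).best()
if best_guess is not None:
    print(best_guess.encoding)  # Python codec name of the top match
    print(str(best_guess))      # the payload decoded to str

# detect() mirrors chardet.detect(): bytes in, dict out with
# "encoding", "language" and "confidence" keys.
legacy = detect(payload)
print(codecs.lookup(legacy["encoding"]).name, legacy["confidence"])
```

`detect()` keeps chardet's return shape, so code that reads `result["encoding"]` should keep working. The match objects from `from_bytes()` expose more detail, such as `language`, `chaos` and `coherence`.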
We ensure that we offer the best (reasonable) backward-compatible result possible.

See the docs for advanced usage: [readthedocs.io](https://charset-normalizer.readthedocs.io/en/latest/)

## 😇 Why

When I started using Chardet, I noticed that it did not meet my expectations, and I wanted to propose a
reliable alternative using a completely different method. Also! I never back down on a good challenge!

I **don't care** about the **originating charset** encoding, because **two different tables** can
produce **two identical rendered strings.**
What I want is to get readable text, the best I can.

In a way, **I'm brute forcing text decoding.** How cool is that? 😎

Don't confuse the package **ftfy** with charset-normalizer or chardet. The goal of ftfy is to repair Unicode strings, whereas charset-normalizer converts a raw file in an unknown encoding to Unicode.

## 🍰 How

- Discard all charset encoding tables that could not fit the binary content.
- Measure noise, or the mess once opened (by chunks) with a corresponding charset encoding.
- Extract matches with the lowest mess detected.
- Additionally, we measure coherence / probe for a language.

**Wait a minute**, what is noise/mess and coherence according to **YOU?**

*Noise:* I opened hundreds of text files, **written by humans**, with the wrong encoding table. **I observed**, then
**I established** some ground rules about **what is obvious** when **it seems like** a mess.
I know that my interpretation of what is noise is probably incomplete; feel free to contribute in order to
improve or rewrite it.

*Coherence:* For each language on earth, we have computed ranked letter appearance occurrences (the best we can). I thought
that information was worth something here, so I use those records against the decoded text to check whether I can detect intelligent design.

## ⚡ Known limitations

- Language detection is unreliable when text contains two or more languages sharing identical letters (e.g. HTML with English tags + Turkish content, both sharing Latin characters).
- Every charset detector heavily depends on sufficient content. In common cases, do not bother running detection on very tiny content.

## ⚠️ About Python EOLs

**If you are running:**

- Python >=2.7,<3.5: Unsupported
- Python 3.5: charset-normalizer < 2.1
- Python 3.6: charset-normalizer < 3.1
- Python 3.7: charset-normalizer < 4.0

Upgrade your Python interpreter as soon as possible.

## 👤 Contributing

Contributions, issues and feature requests are very much welcome.

Feel free to check the [issues page](https://github.com/ousret/charset_normalizer/issues) if you want to contribute.

## 📝 License

Copyright © [Ahmed TAHRI @Ousret](https://github.com/Ousret).

This project is [MIT](https://github.com/Ousret/charset_normalizer/blob/master/LICENSE) licensed.

Characters frequencies used in this project © 2012 [Denny Vrandečić](http://simia.net/letters/)

## 💼 For Enterprise

Professional support for charset-normalizer is available as part of the [Tidelift
Subscription][1]. Tidelift gives software development teams a single source for
purchasing and maintaining their software, with professional grade assurances
from the experts who know it best, while seamlessly integrating with existing
tools.

[1]: https://tidelift.com/subscription/pkg/pypi-charset-normalizer?utm_source=pypi-charset-normalizer&utm_medium=readme

# Changelog
All notable changes to charset-normalizer will be documented in this file. This project adheres to [Semantic Versioning](https://semver.org/spec/v2.0.0.html).
The format is based on [Keep a Changelog](https://keepachangelog.com/en/1.0.0/).

## [3.3.1](https://github.com/Ousret/charset_normalizer/compare/3.3.0...3.3.1) (2023-10-22)

### Changed
- Optional mypyc compilation upgraded to version 1.6.1 for Python >= 3.8
- Improved the general detection reliability based on reports from the community

## [3.3.0](https://github.com/Ousret/charset_normalizer/compare/3.2.0...3.3.0) (2023-09-30)

### Added
- Allow executing the CLI (e.g. normalizer) through `python -m charset_normalizer.cli` or `python -m charset_normalizer`
- Support for 9 forgotten encodings that are supported by Python but unlisted in `encoding.aliases` as they have no alias (#323)

### Removed
- (internal) Redundant utils.is_ascii function and unused function is_private_use_only
- (internal) charset_normalizer.assets is moved inside charset_normalizer.constant

### Changed
- (internal) Unicode code blocks in constants are updated using the latest v15.0.0 definition to improve detection
- Optional mypyc compilation upgraded to version 1.5.1 for Python >= 3.8

### Fixed
- Unable to properly sort CharsetMatch when both chaos/noise and coherence were close due to an unreachable condition in \_\_lt\_\_ (#350)

## [3.2.0](https://github.com/Ousret/charset_normalizer/compare/3.1.0...3.2.0) (2023-06-07)

### Changed
- Type hint for function `from_path` no longer enforces `PathLike` as its first argument
- Minor improvement over the global detection reliability

### Added
- Introduce function `is_binary` that relies on main capabilities, and is optimized to detect binaries
- Propagate `enable_fallback` argument throughout `from_bytes`, `from_path`, and `from_fp`, allowing deeper control over the detection (default True)
- Explicit support for Python 3.12

### Fixed
- Edge case detection failure where a file would contain a 'very-long' camel-cased word (Issue #289)

## [3.1.0](https://github.com/Ousret/charset_normalizer/compare/3.0.1...3.1.0) (2023-03-06)

### Added
- Argument `should_rename_legacy` for legacy function `detect`, which now disregards any new arguments without errors (PR #262)

### Removed
- Support for Python 3.6 (PR #260)

### Changed
- Optional speedup provided by mypy/c 1.0.1

## [3.0.1](https://github.com/Ousret/charset_normalizer/compare/3.0.0...3.0.1) (2022-11-18)

### Fixed
- Multi-byte cutter/chunk generator did not always cut correctly (PR #233)

### Changed
- Speedup provided by mypy/c 0.990 on Python >= 3.7

## [3.0.0](https://github.com/Ousret/charset_normalizer/compare/2.1.1...3.0.0) (2022-10-20)

### Added
- Extend the capability of explain=True when cp_isolation contains at most two entries (min one); it will log the Mess-detector results in detail
- Support for alternative language frequency set in charset_normalizer.assets.FREQUENCIES
- Add parameter `language_threshold` in `from_bytes`, `from_path` and `from_fp` to adjust the minimum expected coherence ratio
- `normalizer --version` now specifies whether the current version provides extra speedup (meaning mypyc compilation whl)

### Changed
- Build with static metadata using 'build' frontend
- Make the language detection stricter
- Optional: Module `md.py` can be compiled using Mypyc to provide an extra speedup up to 4x faster than v2.1

### Fixed
- CLI with opt --normalize failed when using full path for files
- TooManyAccentuatedPlugin induced false positives on the mess detection when too few alpha characters had been fed to it
- Sphinx warnings when generating the documentation

### Removed
- Coherence detector no longer returns 'Simple English'; it returns 'English' instead
- Coherence detector no longer returns 'Classical Chinese'; it returns 'Chinese' instead
- Breaking: Methods `first()` and `best()` from CharsetMatch
- UTF-7 will no longer appear as "detected" without a recognized SIG/mark (is unreliable/conflicts with ASCII)
- Breaking: Class aliases CharsetDetector, CharsetDoctor, CharsetNormalizerMatch and CharsetNormalizerMatches
- Breaking: Top-level function `normalize`
- Breaking: Properties `chaos_secondary_pass`, `coherence_non_latin` and `w_counter` from CharsetMatch
- Support for the backport `unicodedata2`

## [3.0.0rc1](https://github.com/Ousret/charset_normalizer/compare/3.0.0b2...3.0.0rc1) (2022-10-18)

### Added
- Extend the capability of explain=True when cp_isolation contains at most two entries (min one); it will log the Mess-detector results in detail
- Support for alternative language frequency set in charset_normalizer.assets.FREQUENCIES
- Add parameter `language_threshold` in `from_bytes`, `from_path` and `from_fp` to adjust the minimum expected coherence ratio

### Changed
- Build with static metadata using 'build' frontend
- Make the language detection stricter

### Fixed
- CLI with opt --normalize failed when using full path for files
- TooManyAccentuatedPlugin induced false positives on the mess detection when too few alpha characters had been fed to it

### Removed
- Coherence detector no longer returns 'Simple English'; it returns 'English' instead
- Coherence detector no longer returns 'Classical Chinese'; it returns 'Chinese' instead

## [3.0.0b2](https://github.com/Ousret/charset_normalizer/compare/3.0.0b1...3.0.0b2) (2022-08-21)

### Added
- `normalizer --version` now specifies whether the current version provides extra speedup (meaning mypyc compilation whl)

### Removed
- Breaking: Methods `first()` and `best()` from CharsetMatch
- UTF-7 will no longer appear as "detected" without a recognized SIG/mark (is unreliable/conflicts with ASCII)

### Fixed
- Sphinx warnings when generating the documentation

## [3.0.0b1](https://github.com/Ousret/charset_normalizer/compare/2.1.0...3.0.0b1) (2022-08-15)

### Changed
- Optional: Module `md.py` can be compiled using Mypyc to provide an extra speedup up to 4x faster than v2.1

### Removed
- Breaking: Class aliases CharsetDetector, CharsetDoctor, CharsetNormalizerMatch and CharsetNormalizerMatches
- Breaking: Top-level function `normalize`
- Breaking: Properties `chaos_secondary_pass`, `coherence_non_latin` and `w_counter` from CharsetMatch
- Support for the backport `unicodedata2`

## [2.1.1](https://github.com/Ousret/charset_normalizer/compare/2.1.0...2.1.1) (2022-08-19)

### Deprecated
- Function `normalize` scheduled for removal in 3.0

### Changed
- Removed useless call to decode in fn is_unprintable (#206)

### Fixed
- Third-party library (i18n xgettext) crashing because it did not recognize utf_8 (PEP 263) with underscore, from [@aleksandernovikov](https://github.com/aleksandernovikov) (#204)

## [2.1.0](https://github.com/Ousret/charset_normalizer/compare/2.0.12...2.1.0) (2022-06-19)

### Added
- Output the Unicode table version when running the CLI with `--version` (PR #194)

### Changed
- Re-use decoded buffer for single byte character sets from [@nijel](https://github.com/nijel) (PR #175)
- Fixing some performance bottlenecks from [@deedy5](https://github.com/deedy5) (PR #183)

### Fixed
- Workaround potential bug in cpython with Zero Width No-Break Space located in Arabic Presentation Forms-B, Unicode 1.1 not acknowledged as space (PR #175)
- CLI default threshold aligned with the API threshold from [@oleksandr-kuzmenko](https://github.com/oleksandr-kuzmenko) (PR #181)

### Removed
- Support for Python 3.5 (PR #192)

### Deprecated
- Use of backport unicodedata from `unicodedata2` as Python is quickly catching up, scheduled for removal in 3.0 (PR #194)

## [2.0.12](https://github.com/Ousret/charset_normalizer/compare/2.0.11...2.0.12) (2022-02-12)

### Fixed
- ASCII misdetection in rare cases (PR #170)

## [2.0.11](https://github.com/Ousret/charset_normalizer/compare/2.0.10...2.0.11) (2022-01-30)

### Added
- Explicit support for Python 3.11 (PR #164)

### Changed
- The logging behavior has been completely reviewed, now using only TRACE and DEBUG levels (PR #163 #165)

## [2.0.10](https://github.com/Ousret/charset_normalizer/compare/2.0.9...2.0.10) (2022-01-04)

### Fixed
- Fallback match entries might lead to UnicodeDecodeError for large byte sequences (PR #154)

### Changed
- Skipping the language detection (CD) on ASCII (PR #155)

## [2.0.9](https://github.com/Ousret/charset_normalizer/compare/2.0.8...2.0.9) (2021-12-03)

### Changed
- Moderating the logging impact (since 2.0.8) for specific environments (PR #147)

### Fixed
- Wrong logging level applied when setting kwarg `explain` to True (PR #146)

## [2.0.8](https://github.com/Ousret/charset_normalizer/compare/2.0.7...2.0.8) (2021-11-24)
### Changed
- Improvement over Vietnamese detection (PR #126)
- MD improvement on trailing data and long foreign (non-pure latin) data (PR #124)
- Efficiency improvements in cd/alphabet_languages from [@adbar](https://github.com/adbar) (PR #122)
- Call sum() without an intermediary list following PEP 289 recommendations from [@adbar](https://github.com/adbar) (PR #129)
- Code style as refactored by Sourcery-AI (PR #131)
- Minor adjustment on the MD around European words (PR #133)
- Remove and replace SRTs from assets / tests (PR #139)
- Initialize the library logger with a `NullHandler` by default from [@nmaynes](https://github.com/nmaynes) (PR #135)
- Setting kwarg `explain` to True will provisionally add (bounded to function lifespan) a specific stream handler (PR #135)

### Fixed
- Fix large (misleading) sequence giving UnicodeDecodeError (PR #137)
- Avoid using too insignificant chunks (PR #137)

### Added
- Add and expose function `set_logging_handler` to configure a specific StreamHandler from [@nmaynes](https://github.com/nmaynes) (PR #135)
- Add `CHANGELOG.md` entries, format is based on [Keep a Changelog](https://keepachangelog.com/en/1.0.0/) (PR #141)

## [2.0.7](https://github.com/Ousret/charset_normalizer/compare/2.0.6...2.0.7) (2021-10-11)
### Added
- Add support for Kazakh (Cyrillic) language detection (PR #109)

### Changed
- Further improve inferring the language from a given single-byte code page (PR #112)
- Vainly trying to leverage PEP263 when PEP3120 is not supported (PR #116)
- Refactoring for potential performance improvements in loops from [@adbar](https://github.com/adbar) (PR #113)
- Various detection improvements (MD+CD) (PR #117)

### Removed
- Remove redundant logging entry about detected language(s) (PR #115)

### Fixed
- Fix a minor inconsistency between Python 3.5 and other versions regarding language detection (PR #117 #102)

## [2.0.6](https://github.com/Ousret/charset_normalizer/compare/2.0.5...2.0.6) (2021-09-18)
### Fixed
- Unforeseen regression with the loss of backward compatibility with some older minor versions of Python 3.5.x (PR #100)
- Fix CLI crash when using --minimal output in certain cases (PR #103)

### Changed
- Minor improvement to the detection efficiency (less than 1%) (PR #106 #101)

## [2.0.5](https://github.com/Ousret/charset_normalizer/compare/2.0.4...2.0.5) (2021-09-14)
### Changed
- The project now complies with flake8, mypy, isort and black to ensure a better overall quality (PR #81)
- The BC support with v1.x was improved; the old staticmethods are restored (PR #82)
- The Unicode detection is slightly improved (PR #93)
- Add syntax sugar \_\_bool\_\_ for results CharsetMatches list-container (PR #91)

### Removed
- The project no longer raises a warning on tiny content given for detection; it will simply be logged as a warning instead (PR #92)

### Fixed
- In some rare cases, the chunk extractor could cut in the middle of a multi-byte character and could mislead the mess detection (PR #95)
- Some rare 'space' characters could trip up the UnprintablePlugin/Mess detection (PR #96)
- The MANIFEST.in was not exhaustive (PR #78)

## [2.0.4](https://github.com/Ousret/charset_normalizer/compare/2.0.3...2.0.4) (2021-07-30)
### Fixed
- The CLI no longer raises an unexpected exception when no encoding has been found (PR #70)
- Fix accessing the 'alphabets' property when the payload contains surrogate characters (PR #68)
- The logger could mislead (explain=True) on detected languages and the impact of one MBCS match (PR #72)
- Submatch factoring could be wrong in rare edge cases (PR #72)
- Multiple files given to the CLI were ignored (after the first path) when publishing results to STDOUT (PR #72)
- Fix line endings from CRLF to LF for certain project files (PR #67)

### Changed
- Adjust the MD to lower the sensitivity, thus improving the global detection reliability (PR #69 #76)
- Allow fallback on specified encoding if any (PR #71)

## [2.0.3](https://github.com/Ousret/charset_normalizer/compare/2.0.2...2.0.3) (2021-07-16)
### Changed
- Part of the detection mechanism has been improved to be less sensitive, resulting in more accurate detection results, especially for ASCII. (PR #63)
- According to the community's wishes, the detection will fall back on ASCII or UTF-8 as a last resort. (PR #64)

## [2.0.2](https://github.com/Ousret/charset_normalizer/compare/2.0.1...2.0.2) (2021-07-15)
### Fixed
- Empty/too small JSON payload misdetection fixed. Report from [@tseaver](https://github.com/tseaver) (PR #59)

### Changed
- Don't inject unicodedata2 into sys.modules from [@akx](https://github.com/akx) (PR #57)

## [2.0.1](https://github.com/Ousret/charset_normalizer/compare/2.0.0...2.0.1) (2021-07-13)
### Fixed
- Make it work where there isn't a filesystem available, dropping assets frequencies.json. Report from [@sethmlarson](https://github.com/sethmlarson). (PR #55)
- Using explain=False permanently disabled the verbose output in the current runtime (PR #47)
- One log entry (language target preemptive) was not shown in logs when using explain=True (PR #47)
- Fix undesired exception (ValueError) on getitem of instance CharsetMatches (PR #52)

### Changed
- Public function normalize default args values were not aligned with from_bytes (PR #53)

### Added
- You may now use charset aliases in cp_isolation and cp_exclusion arguments (PR #47)

## [2.0.0](https://github.com/Ousret/charset_normalizer/compare/1.4.1...2.0.0) (2021-07-02)
### Changed
- 4x to 5x faster than the previous 1.4.0 release. At least 2x faster than Chardet.
- Emphasis has been placed on UTF-8 detection, which should perform almost instantaneously.
- The backward compatibility with Chardet has been greatly improved. The legacy detect function returns an identical charset name whenever possible.
- The detection mechanism has been slightly improved; Turkish content is now detected correctly (most of the time)
- The program has been rewritten to ease readability and maintainability (+ using static typing)
- utf_7 detection has been reinstated.

### Removed
- This package no longer requires anything when used with Python 3.5 (dropped cached_property)
- Removed support for these languages: Catalan, Esperanto, Kazakh, Basque, Volapük, Azeri, Galician, Nynorsk, Macedonian, and Serbocroatian.
- The exception hook on UnicodeDecodeError has been removed.

### Deprecated
- Methods coherence_non_latin, w_counter, chaos_secondary_pass of the class CharsetMatch are now deprecated and scheduled for removal in v3.0

### Fixed
- The CLI output used the relative path of the file(s). It should be absolute.

## [1.4.1](https://github.com/Ousret/charset_normalizer/compare/1.4.0...1.4.1) (2021-05-28)
### Fixed
- Logger configuration/usage no longer conflicts with others (PR #44)

## [1.4.0](https://github.com/Ousret/charset_normalizer/compare/1.3.9...1.4.0) (2021-05-21)
### Removed
- Using standard logging instead of using the package loguru.
- Dropping nose test framework in favor of the maintained pytest.
- Choose not to use the dragonmapper package to help with gibberish Chinese/CJK text.
- Require cached_property only for Python 3.5 due to constraint. Dropping for every other interpreter version.
- Stop supporting UTF-7 that does not contain a SIG.
- Dropping PrettyTable, replaced with pure JSON output in CLI.

### Fixed
- BOM marker in a CharsetNormalizerMatch instance could be False in rare cases even if obviously present, due to the sub-match factoring process.
- Not searching properly for the BOM when trying the utf32/16 parent codec.

### Changed
- Improving the package's final size by compressing frequencies.json.
- Huge improvement on the largest payloads.

### Added
- CLI now produces JSON-consumable output.
- Return ASCII if the given sequences fit, given reasonable confidence.

## [1.3.9](https://github.com/Ousret/charset_normalizer/compare/1.3.8...1.3.9) (2021-05-13)

### Fixed
- In some very rare cases, you may end up getting encode/decode errors due to a bad bytes payload (PR #40)

## [1.3.8](https://github.com/Ousret/charset_normalizer/compare/1.3.7...1.3.8) (2021-05-12)

### Fixed
- An empty payload given for detection may cause an exception when trying to access the `alphabets` property. (PR #39)

## [1.3.7](https://github.com/Ousret/charset_normalizer/compare/1.3.6...1.3.7) (2021-05-12)

### Fixed
- The legacy detect function should return UTF-8-SIG if sig is present in the payload. (PR #38)

## [1.3.6](https://github.com/Ousret/charset_normalizer/compare/1.3.5...1.3.6) (2021-02-09)

### Changed
- Amend the previous release to allow prettytable 2.0 (PR #35)

## [1.3.5](https://github.com/Ousret/charset_normalizer/compare/1.3.4...1.3.5) (2021-02-08)

### Fixed
- Fix error while using the package with a Python pre-release interpreter (PR #33)

### Changed
- Dependencies refactoring, constraints revised.

### Added
- Add Python 3.9 and 3.10 to the supported interpreters

MIT License

Copyright (c) 2019 TAHRI Ahmed R.
Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.

- FastAPI framework, high performance, easy to learn, fast to code, ready for production -

-

----

-

-**Documentation**: https://fastapi.tiangolo.com

-

-**Source Code**: https://github.com/tiangolo/fastapi

-

----

-

-FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.8+ based on standard Python type hints.

-

-The key features are:

-

-* **Fast**: Very high performance, on par with **NodeJS** and **Go** (thanks to Starlette and Pydantic). [One of the fastest Python frameworks available](#performance).

-* **Fast to code**: Increase the speed to develop features by about 200% to 300%. *

-* **Fewer bugs**: Reduce about 40% of human (developer) induced errors. *

-* **Intuitive**: Great editor support. Completion everywhere. Less time debugging.

-* **Easy**: Designed to be easy to use and learn. Less time reading docs.

-* **Short**: Minimize code duplication. Multiple features from each parameter declaration. Fewer bugs.

-* **Robust**: Get production-ready code. With automatic interactive documentation.

-* **Standards-based**: Based on (and fully compatible with) the open standards for APIs: OpenAPI (previously known as Swagger) and JSON Schema.

-

-* estimation based on tests on an internal development team, building production applications.

-

-## Sponsors

-

-

-

-Other sponsors

-

-## Opinions

-

-"_[...] I'm using **FastAPI** a ton these days. [...] I'm actually planning to use it for all of my team's **ML services at Microsoft**. Some of them are getting integrated into the core **Windows** product and some **Office** products._"

-

-Kabir Khan - Microsoft (ref)

-

----

-

-"_We adopted the **FastAPI** library to spawn a **REST** server that can be queried to obtain **predictions**. [for Ludwig]_"

-

-Piero Molino, Yaroslav Dudin, and Sai Sumanth Miryala - Uber (ref)

-

----

-

-"_**Netflix** is pleased to announce the open-source release of our **crisis management** orchestration framework: **Dispatch**! [built with **FastAPI**]_"

-

-Kevin Glisson, Marc Vilanova, Forest Monsen - Netflix (ref)

-

----

-

-"_I’m over the moon excited about **FastAPI**. It’s so fun!_"

-

-Brian Okken - Python Bytes podcast host (ref)

-

----

-

-"_Honestly, what you've built looks super solid and polished. In many ways, it's what I wanted **Hug** to be - it's really inspiring to see someone build that._"

-

-

-

----

-

-"_If you're looking to learn one **modern framework** for building REST APIs, check out **FastAPI** [...] It's fast, easy to use and easy to learn [...]_"

-

-"_We've switched over to **FastAPI** for our **APIs** [...] I think you'll like it [...]_"

-

-

-

----

-

-"_If anyone is looking to build a production Python API, I would highly recommend **FastAPI**. It is **beautifully designed**, **simple to use** and **highly scalable**, it has become a **key component** in our API first development strategy and is driving many automations and services such as our Virtual TAC Engineer._"

-

-Deon Pillsbury - Cisco (ref)

-

----

-

-## **Typer**, the FastAPI of CLIs

-

- -

-If you are building a CLI app to be used in the terminal instead of a web API, check out **Typer**.

-

-**Typer** is FastAPI's little sibling. And it's intended to be the **FastAPI of CLIs**. ⌨️ 🚀

-

-## Requirements

-

-Python 3.8+

-

-FastAPI stands on the shoulders of giants:

-

-* Starlette for the web parts.

-* Pydantic for the data parts.

-

-## Installation

-

-

-

-```console

-$ pip install fastapi

-

----> 100%

-```

-

-

-

-You will also need an ASGI server for production, such as Uvicorn or Hypercorn.

-

-

-

-```console

-$ pip install "uvicorn[standard]"

-

----> 100%

-```

-

-

-

-## Example

-

-### Create it

-

-* Create a file `main.py` with:

-

-```Python

-from typing import Union

-

-from fastapi import FastAPI

-

-app = FastAPI()

-

-

-@app.get("/")

-def read_root():

-    return {"Hello": "World"}

-

-

-@app.get("/items/{item_id}")

-def read_item(item_id: int, q: Union[str, None] = None):

-    return {"item_id": item_id, "q": q}

-```

-

-

-Or use `async def`...

-

-If your code uses `async` / `await`, use `async def`:

-

-```Python hl_lines="9 14"

-from typing import Union

-

-from fastapi import FastAPI

-

-app = FastAPI()

-

-

-@app.get("/")

-async def read_root():

-    return {"Hello": "World"}

-

-

-@app.get("/items/{item_id}")

-async def read_item(item_id: int, q: Union[str, None] = None):

-    return {"item_id": item_id, "q": q}

-```

-

-**Note**:

-

-If you don't know, check the _"In a hurry?"_ section about `async` and `await` in the docs.
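FastAPI's documentation on concurrency describes the difference: an `async def` path function is awaited directly, while a plain `def` one is run in an external threadpool so it does not block the server. The toy dispatcher below is a minimal stdlib sketch of that split, not FastAPI's actual code; the name `call_handler` is hypothetical, and it uses `loop.run_in_executor` so it also works on Python 3.8:

```Python
import asyncio
import inspect
from typing import Any, Callable, Dict


def read_root() -> Dict[str, str]:
    return {"Hello": "World"}


async def read_root_async() -> Dict[str, str]:
    return {"Hello": "World"}


async def call_handler(handler: Callable[[], Any]) -> Any:
    """Hypothetical dispatcher: await coroutine functions, thread plain ones."""
    if inspect.iscoroutinefunction(handler):
        # async def: runs on the event loop itself.
        return await handler()
    loop = asyncio.get_running_loop()
    # def: run in the loop's default ThreadPoolExecutor (executor=None),
    # so a blocking function does not stall other requests.
    return await loop.run_in_executor(None, handler)


async def main() -> None:
    print(await call_handler(read_root))
    print(await call_handler(read_root_async))


asyncio.run(main())
```

Either way the caller just awaits the result; the choice of `def` or `async def` only decides where the function body executes.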

-

-

-

-### Run it

-

-Run the server with:

-

-

-

-

-```console

-$ uvicorn main:app --reload

-

-INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

-INFO: Started reloader process [28720]

-INFO: Started server process [28722]

-INFO: Waiting for application startup.

-INFO: Application startup complete.

-```

-

-

-

-

-About the command `uvicorn main:app --reload`...

-

-The command `uvicorn main:app` refers to:

-

-* `main`: the file `main.py` (the Python "module").

-* `app`: the object created inside of `main.py` with the line `app = FastAPI()`.

-* `--reload`: make the server restart after code changes. Only do this for development.
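The `main:app` argument is an import string of the form `module:attribute`. As a rough illustration only (this is not Uvicorn's loader, and `load_app` is a hypothetical name), resolving such a string with the standard library looks like this:

```Python
import importlib
from typing import Any


def load_app(import_string: str) -> Any:
    """Resolve a 'module:attribute' string such as 'main:app' (sketch)."""
    module_name, sep, attr_path = import_string.partition(":")
    if not sep or not module_name or not attr_path:
        raise ValueError(f"expected 'module:attribute', got {import_string!r}")
    # Import the module; for 'main:app' this is main.py on sys.path.
    obj: Any = importlib.import_module(module_name)
    # Follow dotted attributes, so 'pkg.mod:factory.app' also resolves.
    for name in attr_path.split("."):
        obj = getattr(obj, name)
    return obj


# A stdlib target, so the sketch runs without a main.py on disk.
dumps = load_app("json:dumps")
print(dumps({"Hello": "World"}))
```

With a `main.py` next to you, `load_app("main:app")` would return the `FastAPI()` instance created in that file.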

-

-

-

-### Check it

-

-Open your browser at http://127.0.0.1:8000/items/5?q=somequery.

-

-You will see the JSON response as:

-

-```JSON

-{"item_id": 5, "q": "somequery"}

-```

-

-You already created an API that:

-

-* Receives HTTP requests in the _paths_ `/` and `/items/{item_id}`.

-* Both _paths_ take `GET` operations (also known as HTTP _methods_).

-* The _path_ `/items/{item_id}` has a _path parameter_ `item_id` that should be an `int`.

-* The _path_ `/items/{item_id}` has an optional `str` _query parameter_ `q`.
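To make the list above concrete, here is roughly what you would otherwise write by hand for the `/items/{item_id}` path. This is a hypothetical stdlib-only sketch (`read_item_by_hand` and `ITEM_PATH` are illustrative names, not FastAPI APIs). FastAPI derives the same behavior from the `read_item` signature, and it answers a non-integer `item_id` with a validation error response instead of raising.

```Python
import re
from typing import Dict, Optional, Union
from urllib.parse import parse_qs, urlsplit

# Hand-written equivalent of the "/items/{item_id}" path template.
ITEM_PATH = re.compile(r"^/items/(?P<item_id>[^/]+)$")


def read_item_by_hand(url: str) -> Dict[str, Union[int, str, None]]:
    parts = urlsplit(url)
    match = ITEM_PATH.match(parts.path)
    if match is None:
        raise LookupError(f"no route for {parts.path!r}")
    # Path parameter: converted like `item_id: int` (ValueError for "foo").
    item_id = int(match.group("item_id"))
    # Optional query parameter: absent means None, like `q: Union[str, None] = None`.
    query = parse_qs(parts.query)
    q: Optional[str] = query["q"][0] if "q" in query else None
    return {"item_id": item_id, "q": q}


print(read_item_by_hand("http://127.0.0.1:8000/items/5?q=somequery"))
```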

-

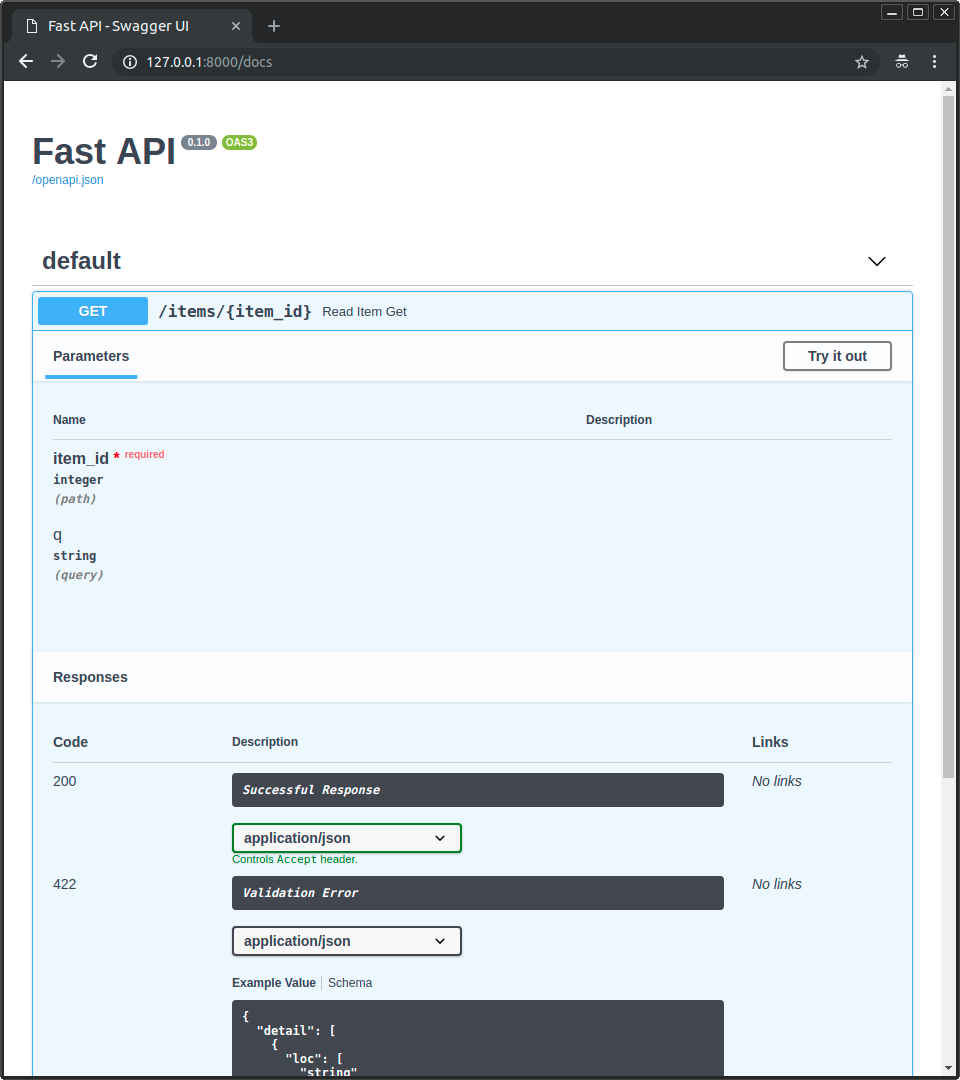

-### Interactive API docs

-

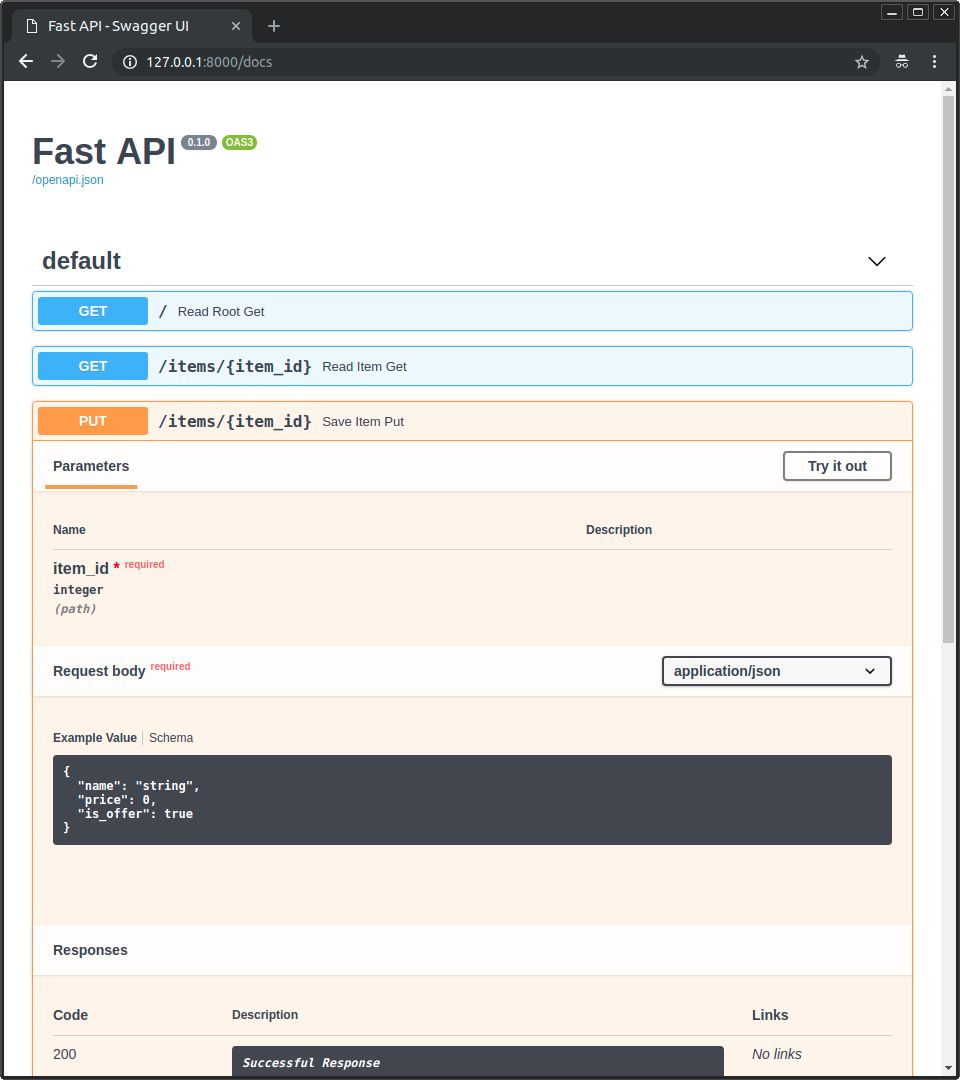

-Now go to http://127.0.0.1:8000/docs.

-

-You will see the automatic interactive API documentation (provided by Swagger UI):

-

-

-

-### Alternative API docs

-

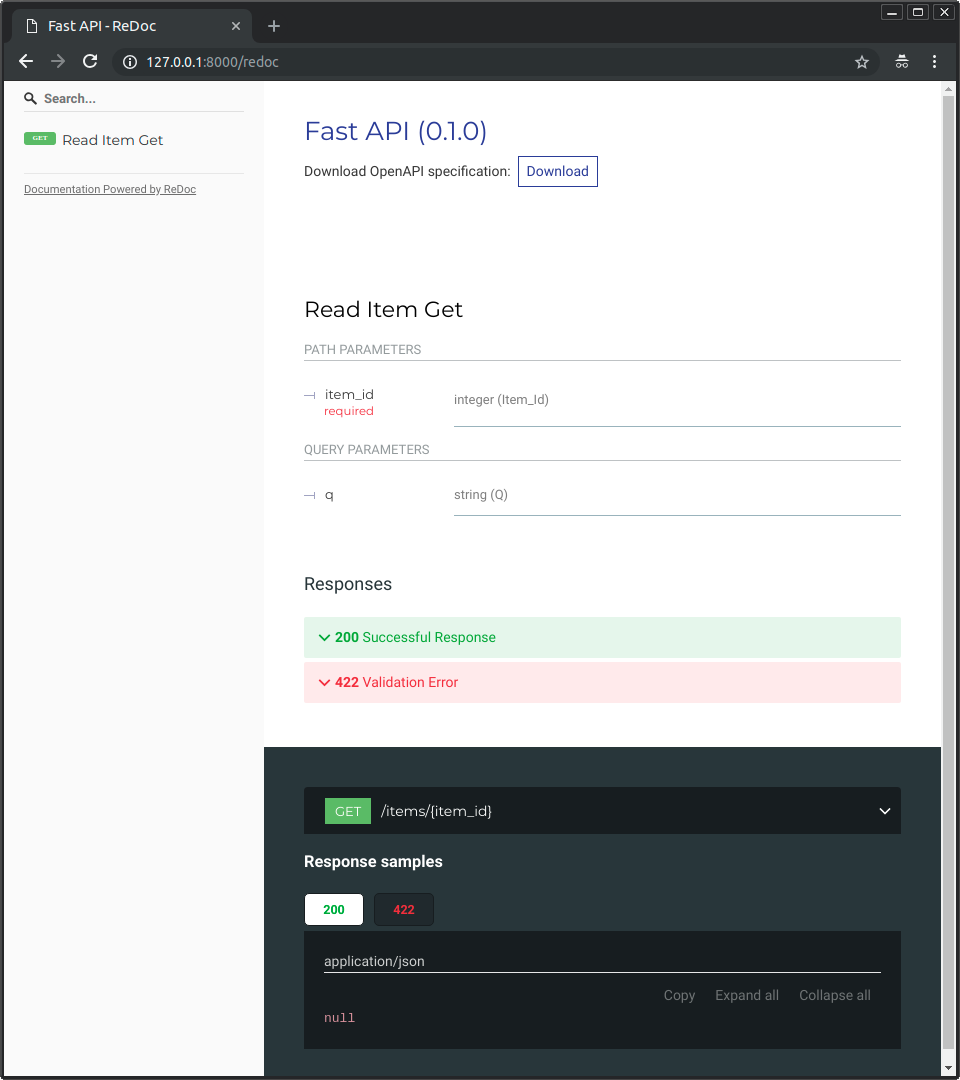

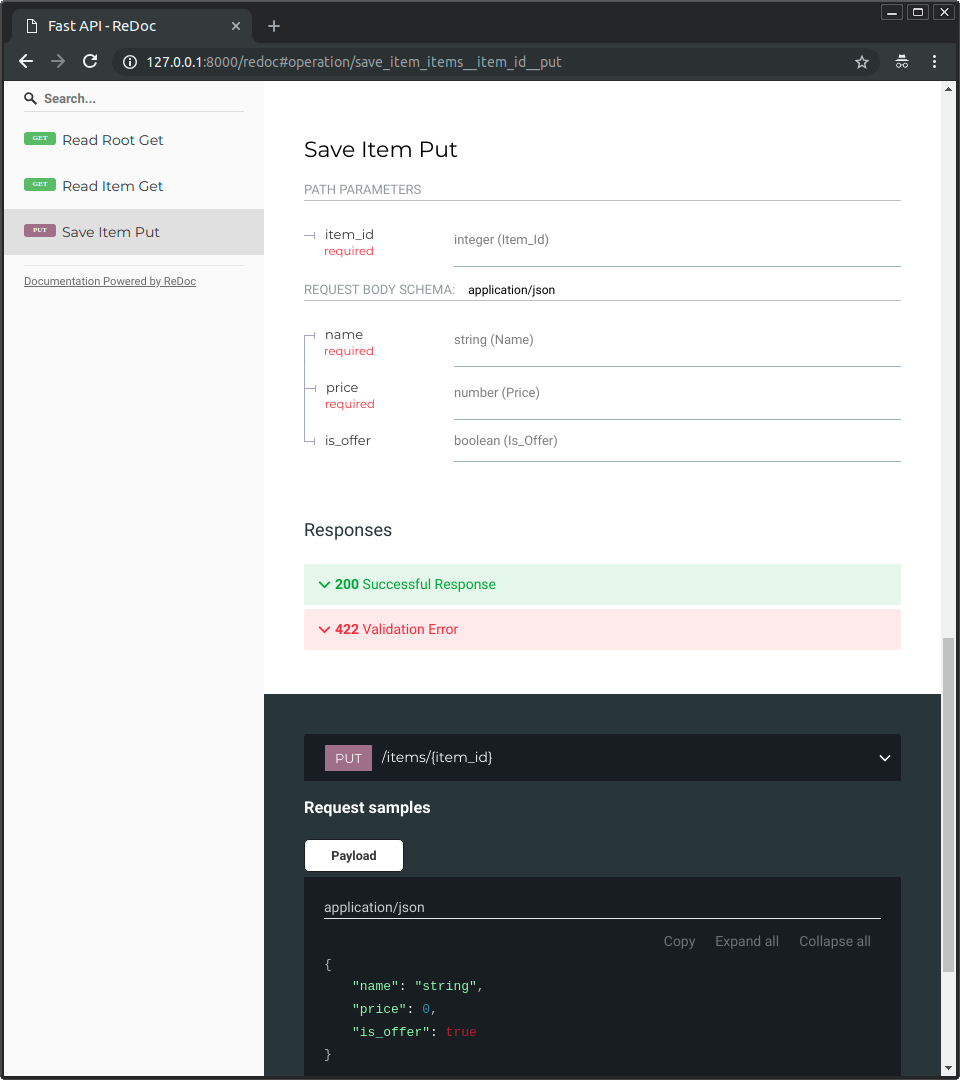

-And now, go to http://127.0.0.1:8000/redoc.

-

-You will see the alternative automatic documentation (provided by ReDoc):

-

-

-

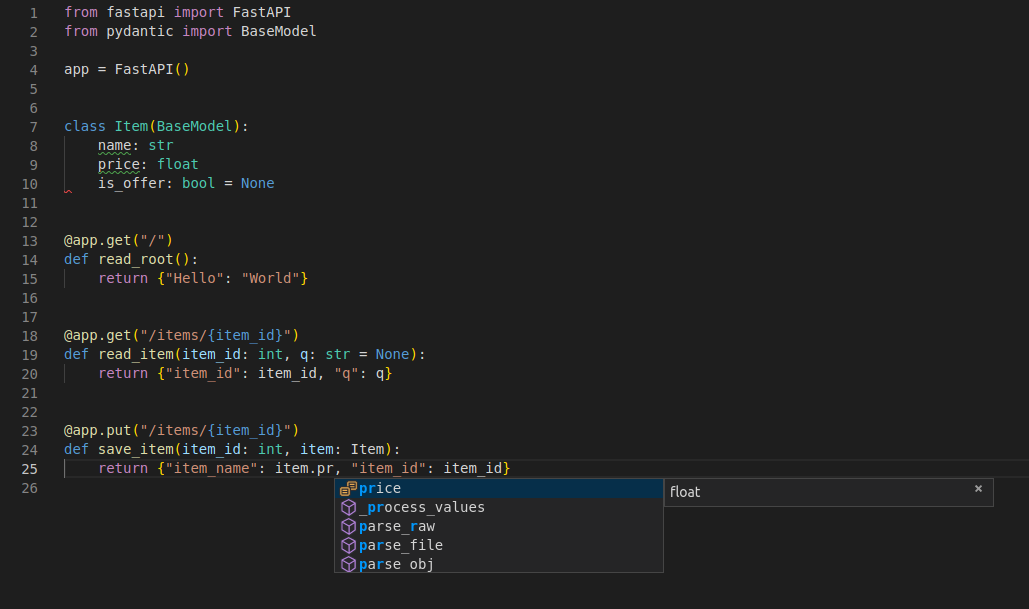

-## Example upgrade

-

-Now modify the file `main.py` to receive a body from a `PUT` request.

-

-Declare the body using standard Python types, thanks to Pydantic.

-

-```Python hl_lines="4 9-12 25-27"

-from typing import Union

-

-from fastapi import FastAPI

-from pydantic import BaseModel

-

-app = FastAPI()

-

-

-class Item(BaseModel):

-    name: str

-    price: float

-    is_offer: Union[bool, None] = None

-

-

-@app.get("/")

-def read_root():

-    return {"Hello": "World"}

-

-

-@app.get("/items/{item_id}")

-def read_item(item_id: int, q: Union[str, None] = None):

-    return {"item_id": item_id, "q": q}

-

-

-@app.put("/items/{item_id}")

-def update_item(item_id: int, item: Item):

-    return {"item_name": item.name, "item_id": item_id}

-```

-

-The server should reload automatically (because you added `--reload` to the `uvicorn` command above).

-

-### Interactive API docs upgrade

-

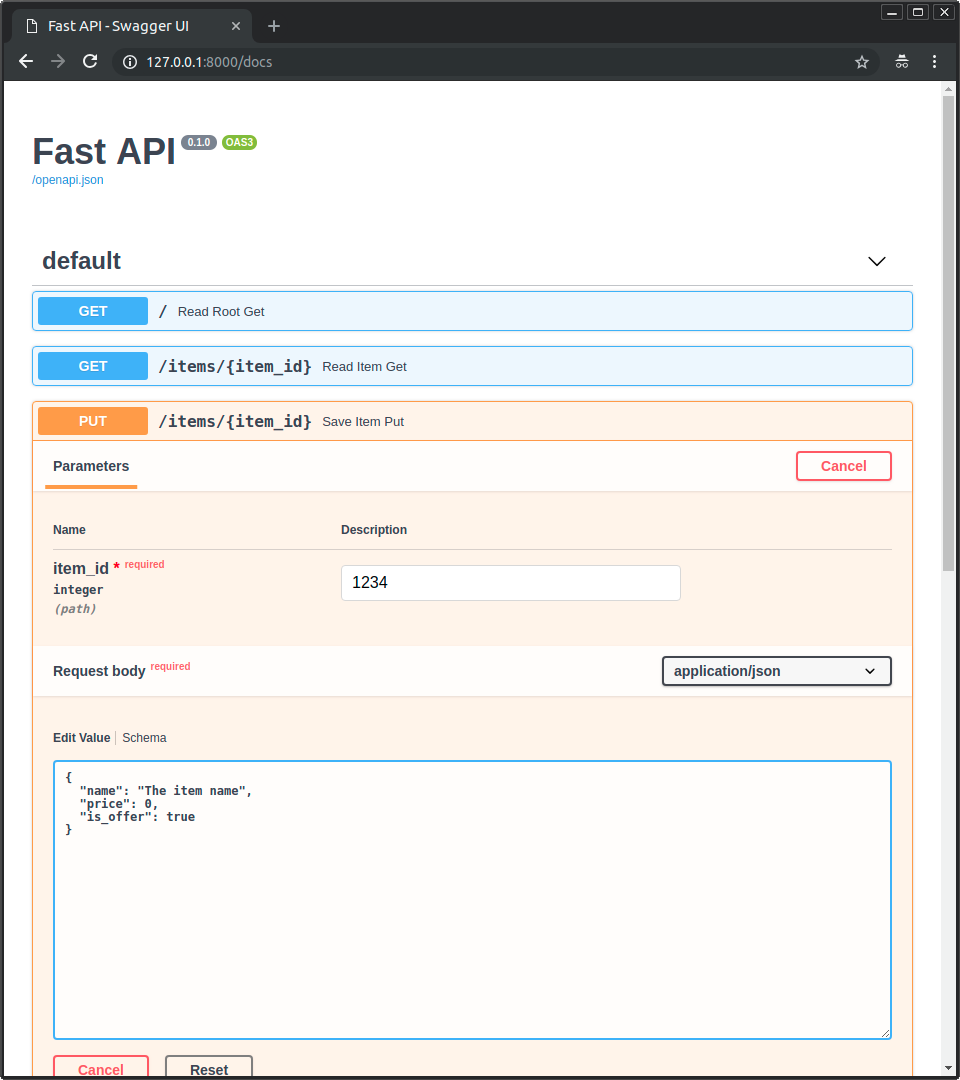

-Now go to http://127.0.0.1:8000/docs.

-

-* The interactive API documentation will be automatically updated, including the new body:

-

-

-

-* Click the "Try it out" button; it lets you fill in the parameters and interact with the API directly:

-

-

-

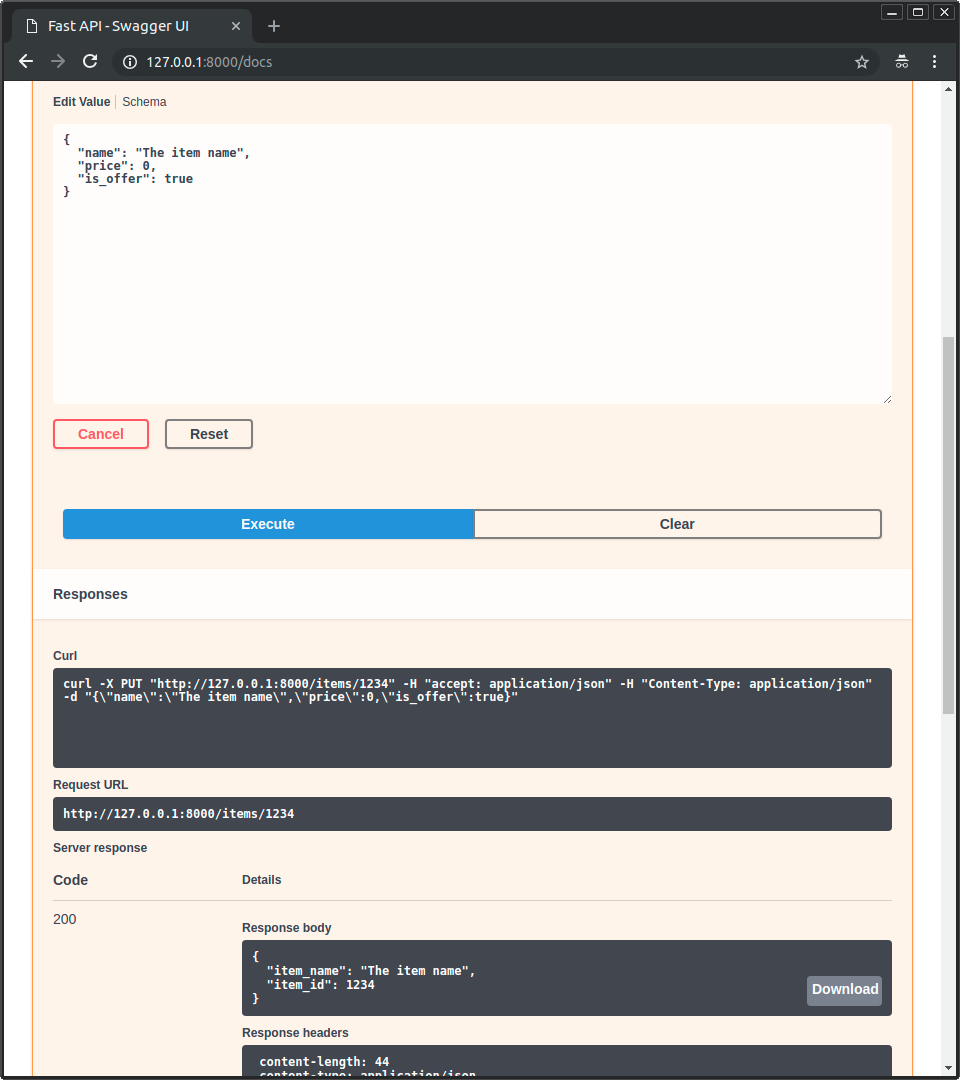

-* Then click the "Execute" button; the user interface will communicate with your API, send the parameters, get the results, and show them on the screen:

-

-

-

-### Alternative API docs upgrade

-

-And now, go to http://127.0.0.1:8000/redoc.

-

-* The alternative documentation will also reflect the new query parameter and body:

-

-

-

-### Recap

-

-In summary, you declare **once** the types of parameters, body, etc. as function parameters.

-

-You do that with standard modern Python types.

-

-You don't have to learn a new syntax, the methods or classes of a specific library, etc.

-

-Just standard **Python 3.8+**.
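Because these are ordinary annotations, they can be read back at runtime with the standard library, which is the kind of information a framework like FastAPI builds on. A minimal sketch applied to the `read_item` function from the example; the helper `describe_params` is hypothetical and not part of FastAPI:

```Python
import inspect
from typing import Any, Dict, Tuple, Union, get_type_hints


def read_item(item_id: int, q: Union[str, None] = None):
    return {"item_id": item_id, "q": q}


def describe_params(func: Any) -> Dict[str, Tuple[Any, bool]]:
    """Map each parameter name to (declared type, is_required)."""
    signature = inspect.signature(func)
    hints = get_type_hints(func)
    return {
        # A parameter with no default value must be supplied by the caller.
        name: (hints.get(name), param.default is inspect.Parameter.empty)
        for name, param in signature.parameters.items()
    }


for name, (annotation, required) in describe_params(read_item).items():
    print(name, annotation, "required" if required else "optional")
```

This is why `q: Union[str, None] = None` reads as optional while `item_id: int` is required: the distinction is just the presence of a default.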

-

-For example, for an `int`:

-

-```Python

-item_id: int

-```

-

-or for a more complex `Item` model:

-

-```Python

-item: Item

-```

-

-...and with that single declaration you get:

-

-* Editor support, including:

-    * Completion.

-    * Type checks.

-* Validation of data:

-    * Automatic and clear errors when the data is invalid.

-    * Validation even for deeply nested JSON objects.

-* Conversion of input data: coming from the network to Python data and types. Reading from:

-    * JSON.

-    * Path parameters.

-    * Query parameters.

-    * Cookies.

-    * Headers.

-    * Forms.

-    * Files.

-* Conversion of output data: converting from Python data and types to network data (as JSON):

-    * Convert Python types (`str`, `int`, `float`, `bool`, `list`, etc.).

-    * `datetime` objects.

-    * `UUID` objects.

-    * Database models.

-    * ...and many more.

-* Automatic interactive API documentation, including 2 alternative user interfaces:

-    * Swagger UI.

-    * ReDoc.
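FastAPI performs the output conversion for you and exposes a related helper, `fastapi.encoders.jsonable_encoder`. As a stdlib-only approximation of the idea, not FastAPI's implementation, a `default` hook for `json.dumps` can turn `datetime` and `UUID` values into JSON strings; the hook name `to_jsonable` is hypothetical:

```Python
import json
from datetime import datetime, timezone
from uuid import UUID


def to_jsonable(value):
    """json.dumps `default` hook: only called for objects json can't encode."""
    if isinstance(value, datetime):
        return value.isoformat()  # ISO 8601 string
    if isinstance(value, UUID):
        return str(value)  # canonical hyphenated form
    raise TypeError(f"Object of type {type(value).__name__} is not JSON serializable")


payload = {
    "id": UUID("12345678-1234-5678-1234-567812345678"),
    "created": datetime(2023, 10, 20, 12, 0, tzinfo=timezone.utc),
    "tags": ["a", "b"],
}
print(json.dumps(payload, default=to_jsonable))
```

Plain types such as `str`, `int` and `list` never reach the hook, because `json` already knows how to encode them.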

-

----

-

-Coming back to the previous code example, **FastAPI** will:

-

-* Validate that there is an `item_id` in the path for `GET` and `PUT` requests.

-* Validate that the `item_id` is of type `int` for `GET` and `PUT` requests.

-    * If it is not, the client will see a useful, clear error.

-* Check if there is an optional query parameter named `q` (as in `http://127.0.0.1:8000/items/5?q=somequery`) for `GET` requests.

-    * As the `q` parameter is declared with `= None`, it is optional.

-    * Without the `None`, it would be required (as is the body in the case with `PUT`).

-* For `PUT` requests to `/items/{item_id}`, read the body as JSON:

-    * Check that it has a required attribute `name` that should be a `str`.

-    * Check that it has a required attribute `price` that has to be a `float`.

-    * Check that it has an optional attribute `is_offer`, that should be a `bool`, if present.

-    * All this would also work for deeply nested JSON objects.

-* Convert from and to JSON automatically.

-* Document everything with OpenAPI, which can be used by:

-    * Interactive documentation systems.

-    * Automatic client code generation systems, for many languages.

-* Provide 2 interactive documentation web interfaces directly.
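Pydantic performs the body checks listed above. Purely to illustrate them, here is a simplified, stdlib-only sketch of the same rules for the `Item` body; `validate_item` is hypothetical, and a dataclass stands in for the Pydantic model. Pydantic's real coercion rules are more permissive (for example, it can parse numeric strings), and its errors are structured rather than a single message.

```Python
import json
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass
class Item:
    name: str
    price: float
    is_offer: Optional[bool] = None


def validate_item(raw_body: str) -> Item:
    """Hypothetical, simplified stand-in for what Pydantic does with `item: Item`."""
    data: Any = json.loads(raw_body)
    if not isinstance(data, dict):
        raise ValueError("body: expected a JSON object")
    errors: List[str] = []
    name = data.get("name")
    if not isinstance(name, str):
        errors.append("name: required str")
    price = data.get("price")
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        errors.append("price: required float")
    is_offer = data.get("is_offer")
    if is_offer is not None and not isinstance(is_offer, bool):
        errors.append("is_offer: optional bool")
    if errors:
        raise ValueError("; ".join(errors))
    return Item(name=name, price=float(price), is_offer=is_offer)


print(validate_item('{"name": "Foo", "price": 10}'))
```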

-

----

-

-We just scratched the surface, but you already get the idea of how it all works.

-

-Try changing the line with:

-

-```Python

-    return {"item_name": item.name, "item_id": item_id}

-```

-

-...from:

-

-```Python

- ... "item_name": item.name ...

-```

-

-...to:

-

-```Python

- ... "item_price": item.price ...

-```

-

-...and see how your editor will auto-complete the attributes and know their types:

-

-

-

-For a more complete example including more features, see the Tutorial - User Guide.

-

-**Spoiler alert**: the tutorial - user guide includes:

-

-* Declaration of **parameters** from other places, such as **headers**, **cookies**, **form fields** and **files**.

-* How to set **validation constraints** such as `max_length` or `regex`.

-* A very powerful and easy to use **Dependency Injection** system.

-* Security and authentication, including support for **OAuth2** with **JWT tokens** and **HTTP Basic** auth.

-* More advanced (but equally easy) techniques for declaring **deeply nested JSON models** (thanks to Pydantic).

-* **GraphQL** integration with Strawberry and other libraries.

-* Many extra features (thanks to Starlette), such as:

-    * **WebSockets**

-    * extremely easy tests based on HTTPX and `pytest`

-    * **CORS**

-    * **Cookie Sessions**

-    * ...and more.

-

-## Performance

-

-Independent TechEmpower benchmarks show **FastAPI** applications running under Uvicorn as one of the fastest Python frameworks available, only below Starlette and Uvicorn themselves (used internally by FastAPI). (*)

-

-To understand more about it, see the section Benchmarks.

-

-## Optional Dependencies

-

-Used by Pydantic:

-

-* email_validator - for email validation.

-* pydantic-settings - for settings management.

-* pydantic-extra-types - for extra types to be used with Pydantic.

-

-Used by Starlette:

-

-* httpx - Required if you want to use the `TestClient`.

-* jinja2 - Required if you want to use the default template configuration.

-* python-multipart - Required if you want to support form "parsing", with `request.form()`.

-* itsdangerous - Required for `SessionMiddleware` support.

-* pyyaml - Required for Starlette's `SchemaGenerator` support (you probably don't need it with FastAPI).

-* ujson - Required if you want to use `UJSONResponse`.

-

-Used by FastAPI / Starlette:

-

-* uvicorn - for the server that loads and serves your application.

-* orjson - Required if you want to use `ORJSONResponse`.

-

-You can install all of these with `pip install "fastapi[all]"`.

-

-## License

-

-This project is licensed under the terms of the MIT license.

diff --git a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/RECORD b/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/RECORD

deleted file mode 100644

index 7aa86a0..0000000

--- a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/RECORD

+++ /dev/null

@@ -1,93 +0,0 @@

-fastapi-0.104.0.dist-info/INSTALLER,sha256=zuuue4knoyJ-UwPPXg8fezS7VCrXJQrAP7zeNuwvFQg,4

-fastapi-0.104.0.dist-info/METADATA,sha256=CkzeBpixDOSeV0EHeSYTRlEY06F6nJwCcgTBbBTqcyY,24298

-fastapi-0.104.0.dist-info/RECORD,,

-fastapi-0.104.0.dist-info/REQUESTED,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

-fastapi-0.104.0.dist-info/WHEEL,sha256=9QBuHhg6FNW7lppboF2vKVbCGTVzsFykgRQjjlajrhA,87

-fastapi-0.104.0.dist-info/licenses/LICENSE,sha256=Tsif_IFIW5f-xYSy1KlhAy7v_oNEU4lP2cEnSQbMdE4,1086

-fastapi/__init__.py,sha256=gn98glQhIF340JPgqEZxbeyUUHwWya-Wdin8FALRQc0,1081

-fastapi/__pycache__/__init__.cpython-311.pyc,,

-fastapi/__pycache__/_compat.cpython-311.pyc,,

-fastapi/__pycache__/applications.cpython-311.pyc,,

-fastapi/__pycache__/background.cpython-311.pyc,,

-fastapi/__pycache__/concurrency.cpython-311.pyc,,

-fastapi/__pycache__/datastructures.cpython-311.pyc,,

-fastapi/__pycache__/encoders.cpython-311.pyc,,

-fastapi/__pycache__/exception_handlers.cpython-311.pyc,,

-fastapi/__pycache__/exceptions.cpython-311.pyc,,

-fastapi/__pycache__/logger.cpython-311.pyc,,

-fastapi/__pycache__/param_functions.cpython-311.pyc,,

-fastapi/__pycache__/params.cpython-311.pyc,,

-fastapi/__pycache__/requests.cpython-311.pyc,,

-fastapi/__pycache__/responses.cpython-311.pyc,,

-fastapi/__pycache__/routing.cpython-311.pyc,,

-fastapi/__pycache__/staticfiles.cpython-311.pyc,,

-fastapi/__pycache__/templating.cpython-311.pyc,,

-fastapi/__pycache__/testclient.cpython-311.pyc,,

-fastapi/__pycache__/types.cpython-311.pyc,,

-fastapi/__pycache__/utils.cpython-311.pyc,,

-fastapi/__pycache__/websockets.cpython-311.pyc,,

-fastapi/_compat.py,sha256=xAFxZVkeuyOGlAHgCU_tmCtSLZjGkXvUElaeyoKoVrk,22796

-fastapi/applications.py,sha256=0x_D9p1AwI8EoBWgL_AsG0Qw8bp6TSZiDKqoAXR5W-w,179291

-fastapi/background.py,sha256=F1tsrJKfDZaRchNgF9ykB2PcRaPBJTbL4htN45TJAIc,1799

-fastapi/concurrency.py,sha256=NAK9SMlTCOALLjTAR6KzWUDEkVj7_EyNRz0-lDVW_W8,1467

-fastapi/datastructures.py,sha256=FF1s2g6cAQ5XxlNToB3scgV94Zf3DjdzcaI7ToaTrmg,5797

-fastapi/dependencies/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

-fastapi/dependencies/__pycache__/__init__.cpython-311.pyc,,

-fastapi/dependencies/__pycache__/models.cpython-311.pyc,,

-fastapi/dependencies/__pycache__/utils.cpython-311.pyc,,

-fastapi/dependencies/models.py,sha256=-n-YCxzgVBkurQi49qOTooT71v_oeAhHJ-qQFonxh5o,2494

-fastapi/dependencies/utils.py,sha256=DjRdd_NVdXh_jDYKTRjUIXkwkLD0WE4oFXQC4peMr2c,29915

-fastapi/encoders.py,sha256=90lbmIW8NZjpPVzbgKhpY49B7TFqa7hrdQDQa70SM9U,11024

-fastapi/exception_handlers.py,sha256=MBrIOA-ugjJDivIi4rSsUJBdTsjuzN76q4yh0q1COKw,1332

-fastapi/exceptions.py,sha256=SQsPxq-QYBZUhq6L4K3B3W7gaSD3Gub2f17erStRagY,5000

-fastapi/logger.py,sha256=I9NNi3ov8AcqbsbC9wl1X-hdItKgYt2XTrx1f99Zpl4,54

-fastapi/middleware/__init__.py,sha256=oQDxiFVcc1fYJUOIFvphnK7pTT5kktmfL32QXpBFvvo,58

-fastapi/middleware/__pycache__/__init__.cpython-311.pyc,,

-fastapi/middleware/__pycache__/asyncexitstack.cpython-311.pyc,,

-fastapi/middleware/__pycache__/cors.cpython-311.pyc,,

-fastapi/middleware/__pycache__/gzip.cpython-311.pyc,,

-fastapi/middleware/__pycache__/httpsredirect.cpython-311.pyc,,

-fastapi/middleware/__pycache__/trustedhost.cpython-311.pyc,,

-fastapi/middleware/__pycache__/wsgi.cpython-311.pyc,,

-fastapi/middleware/asyncexitstack.py,sha256=LvMyVI1QdmWNWYPZqx295VFavssUfVpUsonPOsMWz1E,1035

-fastapi/middleware/cors.py,sha256=ynwjWQZoc_vbhzZ3_ZXceoaSrslHFHPdoM52rXr0WUU,79

-fastapi/middleware/gzip.py,sha256=xM5PcsH8QlAimZw4VDvcmTnqQamslThsfe3CVN2voa0,79

-fastapi/middleware/httpsredirect.py,sha256=rL8eXMnmLijwVkH7_400zHri1AekfeBd6D6qs8ix950,115

-fastapi/middleware/trustedhost.py,sha256=eE5XGRxGa7c5zPnMJDGp3BxaL25k5iVQlhnv-Pk0Pss,109

-fastapi/middleware/wsgi.py,sha256=Z3Ue-7wni4lUZMvH3G9ek__acgYdJstbnpZX_HQAboY,79

-fastapi/openapi/__init__.py,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

-fastapi/openapi/__pycache__/__init__.cpython-311.pyc,,

-fastapi/openapi/__pycache__/constants.cpython-311.pyc,,

-fastapi/openapi/__pycache__/docs.cpython-311.pyc,,

-fastapi/openapi/__pycache__/models.cpython-311.pyc,,

-fastapi/openapi/__pycache__/utils.cpython-311.pyc,,

-fastapi/openapi/constants.py,sha256=adGzmis1L1HJRTE3kJ5fmHS_Noq6tIY6pWv_SFzoFDU,153

-fastapi/openapi/docs.py,sha256=gnnivCh7N0AOTVLpGrDOlo4P4h1CrbU6vcqeNhvZGkI,10379

-fastapi/openapi/models.py,sha256=DEmsWA-9sNqv2H4YneZUW86r1nMwD920EiTvan5kndI,17763

-fastapi/openapi/utils.py,sha256=PUuz_ISarHVPBRyIgfyHz8uwH0eEsDY3rJUfW__I9GI,22303

-fastapi/param_functions.py,sha256=VWEsJbkH8lJZgcJ6fI6uzquui1kgHrDv1i_wXM7cW3M,63896

-fastapi/params.py,sha256=LzjihAvODd3w7-GddraUyVtH1xfwR9smIoQn-Z_g4mg,27807

-fastapi/py.typed,sha256=47DEQpj8HBSa-_TImW-5JCeuQeRkm5NMpJWZG3hSuFU,0

-fastapi/requests.py,sha256=zayepKFcienBllv3snmWI20Gk0oHNVLU4DDhqXBb4LU,142

-fastapi/responses.py,sha256=QNQQlwpKhQoIPZTTWkpc9d_QGeGZ_aVQPaDV3nQ8m7c,1761

-fastapi/routing.py,sha256=wtZXvIphGDMUBkBkZHMWgiiiQZX28HXNvPxxT0HWwXA,172396

-fastapi/security/__init__.py,sha256=bO8pNmxqVRXUjfl2mOKiVZLn0FpBQ61VUYVjmppnbJw,881

-fastapi/security/__pycache__/__init__.cpython-311.pyc,,

-fastapi/security/__pycache__/api_key.cpython-311.pyc,,

-fastapi/security/__pycache__/base.cpython-311.pyc,,

-fastapi/security/__pycache__/http.cpython-311.pyc,,

-fastapi/security/__pycache__/oauth2.cpython-311.pyc,,

-fastapi/security/__pycache__/open_id_connect_url.cpython-311.pyc,,

-fastapi/security/__pycache__/utils.cpython-311.pyc,,

-fastapi/security/api_key.py,sha256=bcZbUzTqeR_CI_LXuJdDq1qL322kmhgy5ApOCqgGDi4,9399

-fastapi/security/base.py,sha256=dl4pvbC-RxjfbWgPtCWd8MVU-7CB2SZ22rJDXVCXO6c,141

-fastapi/security/http.py,sha256=RVnZNavpUNHr6wguijiM3uNKt-b4i_bXgdT_JInuVZY,13523

-fastapi/security/oauth2.py,sha256=sjJgO5WL7j0cxnigzfF1zIIR1hgK_YSvh05B8KtxE94,21636

-fastapi/security/open_id_connect_url.py,sha256=Mb8wFxrRh4CrsFW0RcjBEQLASPHGDtZRP6c2dCrspAg,2753

-fastapi/security/utils.py,sha256=bd8T0YM7UQD5ATKucr1bNtAvz_Y3__dVNAv5UebiPvc,293

-fastapi/staticfiles.py,sha256=iirGIt3sdY2QZXd36ijs3Cj-T0FuGFda3cd90kM9Ikw,69

-fastapi/templating.py,sha256=4zsuTWgcjcEainMJFAlW6-gnslm6AgOS1SiiDWfmQxk,76

-fastapi/testclient.py,sha256=nBvaAmX66YldReJNZXPOk1sfuo2Q6hs8bOvIaCep6LQ,66

-fastapi/types.py,sha256=WZJ1jvm1MCwIrxxRYxKwtXS9HqcGk0RnCbLzrMZh-lI,428

-fastapi/utils.py,sha256=_vUwlqa4dq8M0Rl3Pro051teIccx36Z4hgecGH8F_oA,8179

-fastapi/websockets.py,sha256=419uncYObEKZG0YcrXscfQQYLSWoE10jqxVMetGdR98,222

diff --git a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/REQUESTED b/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/REQUESTED

deleted file mode 100644

index e69de29..0000000

diff --git a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/WHEEL b/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/WHEEL

deleted file mode 100644

index ba1a8af..0000000

--- a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/WHEEL

+++ /dev/null

@@ -1,4 +0,0 @@

-Wheel-Version: 1.0

-Generator: hatchling 1.18.0

-Root-Is-Purelib: true

-Tag: py3-none-any

diff --git a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/licenses/LICENSE b/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/licenses/LICENSE

deleted file mode 100644

index 3e92463..0000000

--- a/server/venv/Lib/site-packages/fastapi-0.104.0.dist-info/licenses/LICENSE

+++ /dev/null

@@ -1,21 +0,0 @@

-The MIT License (MIT)

-

-Copyright (c) 2018 Sebastián Ramírez

-

-Permission is hereby granted, free of charge, to any person obtaining a copy

-of this software and associated documentation files (the "Software"), to deal

-in the Software without restriction, including without limitation the rights

-to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

-copies of the Software, and to permit persons to whom the Software is

-furnished to do so, subject to the following conditions:

-

-The above copyright notice and this permission notice shall be included in

-all copies or substantial portions of the Software.

-

-THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

-IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

-FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

-AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

-LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

-OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

-THE SOFTWARE.

diff --git a/server/venv/Lib/site-packages/fastapi/__init__.py b/server/venv/Lib/site-packages/fastapi/__init__.py

deleted file mode 100644

index 4fdb155..0000000

--- a/server/venv/Lib/site-packages/fastapi/__init__.py

+++ /dev/null

@@ -1,25 +0,0 @@

-"""FastAPI framework, high performance, easy to learn, fast to code, ready for production"""

-

-__version__ = "0.104.0"

-

-from starlette import status as status

-

-from .applications import FastAPI as FastAPI

-from .background import BackgroundTasks as BackgroundTasks

-from .datastructures import UploadFile as UploadFile

-from .exceptions import HTTPException as HTTPException

-from .exceptions import WebSocketException as WebSocketException

-from .param_functions import Body as Body

-from .param_functions import Cookie as Cookie

-from .param_functions import Depends as Depends

-from .param_functions import File as File

-from .param_functions import Form as Form

-from .param_functions import Header as Header

-from .param_functions import Path as Path

-from .param_functions import Query as Query

-from .param_functions import Security as Security

-from .requests import Request as Request

-from .responses import Response as Response

-from .routing import APIRouter as APIRouter

-from .websockets import WebSocket as WebSocket

-from .websockets import WebSocketDisconnect as WebSocketDisconnect

diff --git a/server/venv/Lib/site-packages/fastapi/_compat.py b/server/venv/Lib/site-packages/fastapi/_compat.py

deleted file mode 100644

index a4b305d..0000000

--- a/server/venv/Lib/site-packages/fastapi/_compat.py

+++ /dev/null

@@ -1,629 +0,0 @@

-from collections import deque

-from copy import copy

-from dataclasses import dataclass, is_dataclass

-from enum import Enum

-from typing import (

- Any,

- Callable,

- Deque,

- Dict,

- FrozenSet,

- List,

- Mapping,

- Sequence,

- Set,

- Tuple,

- Type,

- Union,

-)

-

-from fastapi.exceptions import RequestErrorModel

-from fastapi.types import IncEx, ModelNameMap, UnionType

-from pydantic import BaseModel, create_model

-from pydantic.version import VERSION as PYDANTIC_VERSION

-from starlette.datastructures import UploadFile

-from typing_extensions import Annotated, Literal, get_args, get_origin

-

-PYDANTIC_V2 = PYDANTIC_VERSION.startswith("2.")

-

-

-sequence_annotation_to_type = {

- Sequence: list,

- List: list,

- list: list,

- Tuple: tuple,

- tuple: tuple,

- Set: set,

- set: set,

- FrozenSet: frozenset,

- frozenset: frozenset,

- Deque: deque,

- deque: deque,

-}

-

-sequence_types = tuple(sequence_annotation_to_type.keys())

-

-if PYDANTIC_V2:

- from pydantic import PydanticSchemaGenerationError as PydanticSchemaGenerationError

- from pydantic import TypeAdapter

- from pydantic import ValidationError as ValidationError

- from pydantic._internal._schema_generation_shared import ( # type: ignore[attr-defined]

- GetJsonSchemaHandler as GetJsonSchemaHandler,

- )

- from pydantic._internal._typing_extra import eval_type_lenient

- from pydantic._internal._utils import lenient_issubclass as lenient_issubclass

- from pydantic.fields import FieldInfo

- from pydantic.json_schema import GenerateJsonSchema as GenerateJsonSchema

- from pydantic.json_schema import JsonSchemaValue as JsonSchemaValue

- from pydantic_core import CoreSchema as CoreSchema

- from pydantic_core import PydanticUndefined, PydanticUndefinedType

- from pydantic_core import Url as Url

-

- try:

- from pydantic_core.core_schema import (

- with_info_plain_validator_function as with_info_plain_validator_function,

- )

- except ImportError: # pragma: no cover

- from pydantic_core.core_schema import (

- general_plain_validator_function as with_info_plain_validator_function, # noqa: F401

- )

-

- Required = PydanticUndefined

- Undefined = PydanticUndefined

- UndefinedType = PydanticUndefinedType

- evaluate_forwardref = eval_type_lenient

- Validator = Any

-

- class BaseConfig:

- pass

-

- class ErrorWrapper(Exception):

- pass

-

- @dataclass

- class ModelField:

- field_info: FieldInfo

- name: str

- mode: Literal["validation", "serialization"] = "validation"

-

- @property

- def alias(self) -> str:

- a = self.field_info.alias

- return a if a is not None else self.name

-

- @property

- def required(self) -> bool:

- return self.field_info.is_required()

-

- @property

- def default(self) -> Any:

- return self.get_default()

-

- @property

- def type_(self) -> Any:

- return self.field_info.annotation

-

- def __post_init__(self) -> None:

- self._type_adapter: TypeAdapter[Any] = TypeAdapter(

- Annotated[self.field_info.annotation, self.field_info]

- )

-

- def get_default(self) -> Any:

- if self.field_info.is_required():

- return Undefined

- return self.field_info.get_default(call_default_factory=True)

-

- def validate(

- self,

- value: Any,

- values: Dict[str, Any] = {}, # noqa: B006

- *,

- loc: Tuple[Union[int, str], ...] = (),

- ) -> Tuple[Any, Union[List[Dict[str, Any]], None]]:

- try:

- return (

- self._type_adapter.validate_python(value, from_attributes=True),

- None,

- )

- except ValidationError as exc:

- return None, _regenerate_error_with_loc(

- errors=exc.errors(), loc_prefix=loc

- )

-

- def serialize(

- self,

- value: Any,

- *,

- mode: Literal["json", "python"] = "json",

- include: Union[IncEx, None] = None,

- exclude: Union[IncEx, None] = None,

- by_alias: bool = True,

- exclude_unset: bool = False,

- exclude_defaults: bool = False,

- exclude_none: bool = False,

- ) -> Any:

- # What calls this code passes a value that already called

- # self._type_adapter.validate_python(value)

- return self._type_adapter.dump_python(

- value,

- mode=mode,

- include=include,

- exclude=exclude,

- by_alias=by_alias,

- exclude_unset=exclude_unset,

- exclude_defaults=exclude_defaults,

- exclude_none=exclude_none,

- )

-

- def __hash__(self) -> int:

- # Each ModelField is unique for our purposes, to allow making a dict from

- # ModelField to its JSON Schema.

- return id(self)

-

- def get_annotation_from_field_info(

- annotation: Any, field_info: FieldInfo, field_name: str

- ) -> Any:

- return annotation

-

- def _normalize_errors(errors: Sequence[Any]) -> List[Dict[str, Any]]:

- return errors # type: ignore[return-value]

-

- def _model_rebuild(model: Type[BaseModel]) -> None:

- model.model_rebuild()

-

- def _model_dump(

- model: BaseModel, mode: Literal["json", "python"] = "json", **kwargs: Any

- ) -> Any:

- return model.model_dump(mode=mode, **kwargs)

-

- def _get_model_config(model: BaseModel) -> Any:

- return model.model_config

-

- def get_schema_from_model_field(

- *,

- field: ModelField,

- schema_generator: GenerateJsonSchema,

- model_name_map: ModelNameMap,

- field_mapping: Dict[

- Tuple[ModelField, Literal["validation", "serialization"]], JsonSchemaValue

- ],

- separate_input_output_schemas: bool = True,

- ) -> Dict[str, Any]:

- override_mode: Union[Literal["validation"], None] = (

- None if separate_input_output_schemas else "validation"

- )

- # This expects that GenerateJsonSchema was already used to generate the definitions

- json_schema = field_mapping[(field, override_mode or field.mode)]

- if "$ref" not in json_schema:

- # TODO remove when deprecating Pydantic v1

- # Ref: https://github.com/pydantic/pydantic/blob/d61792cc42c80b13b23e3ffa74bc37ec7c77f7d1/pydantic/schema.py#L207

- json_schema[

- "title"

- ] = field.field_info.title or field.alias.title().replace("_", " ")

- return json_schema

-

- def get_compat_model_name_map(fields: List[ModelField]) -> ModelNameMap:

- return {}

-

- def get_definitions(

- *,

- fields: List[ModelField],

- schema_generator: GenerateJsonSchema,

- model_name_map: ModelNameMap,

- separate_input_output_schemas: bool = True,

- ) -> Tuple[

- Dict[

- Tuple[ModelField, Literal["validation", "serialization"]], JsonSchemaValue

- ],

- Dict[str, Dict[str, Any]],

- ]:

- override_mode: Union[Literal["validation"], None] = (

- None if separate_input_output_schemas else "validation"

- )

- inputs = [

- (field, override_mode or field.mode, field._type_adapter.core_schema)

- for field in fields

- ]

- field_mapping, definitions = schema_generator.generate_definitions(

- inputs=inputs

- )

- return field_mapping, definitions # type: ignore[return-value]

-

- def is_scalar_field(field: ModelField) -> bool:

- from fastapi import params

-

- return field_annotation_is_scalar(

- field.field_info.annotation

- ) and not isinstance(field.field_info, params.Body)

-

- def is_sequence_field(field: ModelField) -> bool:

- return field_annotation_is_sequence(field.field_info.annotation)

-

- def is_scalar_sequence_field(field: ModelField) -> bool:

- return field_annotation_is_scalar_sequence(field.field_info.annotation)

-

- def is_bytes_field(field: ModelField) -> bool:

- return is_bytes_or_nonable_bytes_annotation(field.type_)

-

- def is_bytes_sequence_field(field: ModelField) -> bool:

- return is_bytes_sequence_annotation(field.type_)

-

- def copy_field_info(*, field_info: FieldInfo, annotation: Any) -> FieldInfo:

- return type(field_info).from_annotation(annotation)

-

- def serialize_sequence_value(*, field: ModelField, value: Any) -> Sequence[Any]:

- origin_type = (

- get_origin(field.field_info.annotation) or field.field_info.annotation

- )

- assert issubclass(origin_type, sequence_types) # type: ignore[arg-type]

- return sequence_annotation_to_type[origin_type](value) # type: ignore[no-any-return]

-
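`serialize_sequence_value` strips the subscript from the field's annotation with `get_origin` and then rebuilds the value with the matching container type. A stdlib-only sketch of that idea follows. The `ORIGIN_TO_CONTAINER` table and `rebuild_sequence` are hypothetical. They only approximate the `sequence_annotation_to_type` mapping, which is defined outside this excerpt.

```python
from collections import deque
from collections.abc import Sequence as AbcSequence
from typing import Any, Deque, FrozenSet, List, Sequence, Tuple, get_origin

# Hypothetical table: maps an unsubscripted origin type to the constructor
# used to rebuild a value of that kind.
ORIGIN_TO_CONTAINER = {
    list: list,
    tuple: tuple,
    set: set,
    frozenset: frozenset,
    deque: deque,
    AbcSequence: list,  # abstract Sequence annotations are materialized as lists
}


def rebuild_sequence(annotation: Any, value: Any) -> Any:
    """Coerce ``value`` into the container type named by ``annotation``."""
    # get_origin(List[int]) is list; a bare ``list`` has no origin, so keep it.
    origin = get_origin(annotation) or annotation
    if origin not in ORIGIN_TO_CONTAINER:
        raise TypeError(f"unsupported sequence annotation: {annotation!r}")
    return ORIGIN_TO_CONTAINER[origin](value)


print(rebuild_sequence(List[int], (1, 2)))  # [1, 2]
print(rebuild_sequence(Tuple[int, ...], [1, 2]))  # (1, 2)
print(rebuild_sequence(FrozenSet[str], ["a", "a"]))  # frozenset({'a'})
print(rebuild_sequence(Deque[int], [3]))  # deque([3])
print(rebuild_sequence(Sequence[int], (4, 5)))  # [4, 5]
```

Raising `TypeError` for unknown origins plays the role of the `assert issubclass(...)` guard in the original. An explicit exception is simply harder to disable with `python -O`.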

- def get_missing_field_error(loc: Tuple[str, ...]) -> Dict[str, Any]:

- error = ValidationError.from_exception_data(

- "Field required", [{"type": "missing", "loc": loc, "input": {}}]

- ).errors()[0]

- error["input"] = None

- return error # type: ignore[return-value]

-

- def create_body_model(

- *, fields: Sequence[ModelField], model_name: str

- ) -> Type[BaseModel]:

- field_params = {f.name: (f.field_info.annotation, f.field_info) for f in fields}

- BodyModel: Type[BaseModel] = create_model(model_name, **field_params) # type: ignore[call-overload]

- return BodyModel

-

-else:

- from fastapi.openapi.constants import REF_PREFIX as REF_PREFIX

- from pydantic import AnyUrl as Url # noqa: F401

- from pydantic import ( # type: ignore[assignment]

- BaseConfig as BaseConfig, # noqa: F401

- )

- from pydantic import ValidationError as ValidationError # noqa: F401

- from pydantic.class_validators import ( # type: ignore[no-redef]

- Validator as Validator, # noqa: F401

- )

- from pydantic.error_wrappers import ( # type: ignore[no-redef]

- ErrorWrapper as ErrorWrapper, # noqa: F401

- )

- from pydantic.errors import MissingError

- from pydantic.fields import ( # type: ignore[attr-defined]

- SHAPE_FROZENSET,

- SHAPE_LIST,

- SHAPE_SEQUENCE,

- SHAPE_SET,

- SHAPE_SINGLETON,

- SHAPE_TUPLE,

- SHAPE_TUPLE_ELLIPSIS,

- )

- from pydantic.fields import FieldInfo as FieldInfo

- from pydantic.fields import ( # type: ignore[no-redef,attr-defined]

- ModelField as ModelField, # noqa: F401

- )

- from pydantic.fields import ( # type: ignore[no-redef,attr-defined]

- Required as Required, # noqa: F401

- )

- from pydantic.fields import ( # type: ignore[no-redef,attr-defined]

- Undefined as Undefined,

- )

- from pydantic.fields import ( # type: ignore[no-redef, attr-defined]

- UndefinedType as UndefinedType, # noqa: F401

- )

- from pydantic.schema import (

- field_schema,

- get_flat_models_from_fields,

- get_model_name_map,

- model_process_schema,

- )

- from pydantic.schema import ( # type: ignore[no-redef] # noqa: F401

- get_annotation_from_field_info as get_annotation_from_field_info,

- )

- from pydantic.typing import ( # type: ignore[no-redef]

- evaluate_forwardref as evaluate_forwardref, # noqa: F401

- )

- from pydantic.utils import ( # type: ignore[no-redef]

- lenient_issubclass as lenient_issubclass, # noqa: F401

- )

-

- GetJsonSchemaHandler = Any # type: ignore[assignment,misc]

- JsonSchemaValue = Dict[str, Any] # type: ignore[misc]

- CoreSchema = Any # type: ignore[assignment,misc]

-

- sequence_shapes = {

- SHAPE_LIST,

- SHAPE_SET,

- SHAPE_FROZENSET,

- SHAPE_TUPLE,

- SHAPE_SEQUENCE,

- SHAPE_TUPLE_ELLIPSIS,

- }

- sequence_shape_to_type = {

- SHAPE_LIST: list,

- SHAPE_SET: set,

- SHAPE_TUPLE: tuple,

- SHAPE_SEQUENCE: list,

- SHAPE_TUPLE_ELLIPSIS: list,

- }

-

- @dataclass

- class GenerateJsonSchema: # type: ignore[no-redef]

- ref_template: str

-

- class PydanticSchemaGenerationError(Exception): # type: ignore[no-redef]

- pass

-

- def with_info_plain_validator_function( # type: ignore[misc]

- function: Callable[..., Any],

- *,

- ref: Union[str, None] = None,

- metadata: Any = None,

- serialization: Any = None,

- ) -> Any:

- return {}

-

- def get_model_definitions(

- *,

- flat_models: Set[Union[Type[BaseModel], Type[Enum]]],

- model_name_map: Dict[Union[Type[BaseModel], Type[Enum]], str],

- ) -> Dict[str, Any]:

- definitions: Dict[str, Dict[str, Any]] = {}

- for model in flat_models:

- m_schema, m_definitions, m_nested_models = model_process_schema(

- model, model_name_map=model_name_map, ref_prefix=REF_PREFIX

- )

- definitions.update(m_definitions)

- model_name = model_name_map[model]

- if "description" in m_schema:

- m_schema["description"] = m_schema["description"].split("\f")[0]

- definitions[model_name] = m_schema

- return definitions

-
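In the Pydantic v1 `get_model_definitions`, a model description is cut at the first form feed (`\f`), so nothing a docstring places after it reaches the generated schema. The following is a minimal sketch of that step on a plain dict. `trim_description` is a hypothetical name.

```python
from typing import Any, Dict


def trim_description(schema: Dict[str, Any]) -> Dict[str, Any]:
    """Keep only the part of a schema description before the first form feed."""
    # Mirrors the ``split("\f")[0]`` step: text after the form feed is dropped.
    if "description" in schema:
        schema["description"] = schema["description"].split("\f")[0]
    return schema


print(trim_description({"description": "A user account.\fInternal: see ticket."}))
# {'description': 'A user account.'}
```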

- def is_pv1_scalar_field(field: ModelField) -> bool:

- from fastapi import params

-

- field_info = field.field_info

- if not (

- field.shape == SHAPE_SINGLETON # type: ignore[attr-defined]

- and not lenient_issubclass(field.type_, BaseModel)

- and not lenient_issubclass(field.type_, dict)

- and not field_annotation_is_sequence(field.type_)

- and not is_dataclass(field.type_)

- and not isinstance(field_info, params.Body)

- ):

- return False

- if field.sub_fields: # type: ignore[attr-defined]

- if not all(

- is_pv1_scalar_field(f)

- for f in field.sub_fields # type: ignore[attr-defined]

- ):

- return False

- return True

-
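`is_pv1_scalar_field` treats a field as scalar only if it meets two conditions. First, it must have singleton shape and not be a model, dict, sequence, dataclass or `Body` parameter. Second, when it has sub-fields, every sub-field must be scalar as well. The sketch below models that recursion with a hypothetical `FieldNode` dataclass and string shape constants instead of real Pydantic v1 `ModelField` objects. It also folds the model and sequence checks into a simplified container test, so treat it as an illustration of the structure rather than an equivalent.

```python
from dataclasses import dataclass, is_dataclass
from typing import Any, List, Optional, Union

SINGLETON = "singleton"  # hypothetical stand-in for pydantic v1's SHAPE_SINGLETON
LIST = "list"  # hypothetical stand-in for SHAPE_LIST


@dataclass
class FieldNode:
    """Hypothetical, simplified stand-in for a pydantic v1 ModelField."""

    shape: str
    type_: Any
    sub_fields: Optional[List["FieldNode"]] = None


def is_scalar(node: FieldNode) -> bool:
    """Return True if the field and all of its sub-fields are scalar."""
    # Only singleton fields can be scalar.
    if node.shape != SINGLETON:
        return False
    # Simplified container check: mappings and built-in collections are not scalar.
    if isinstance(node.type_, type) and issubclass(node.type_, (dict, list, tuple, set)):
        return False
    # Dataclass types are excluded, as in the original check.
    if is_dataclass(node.type_):
        return False
    # Union fields carry one sub-field per member; each member must be scalar too.
    if node.sub_fields:
        return all(is_scalar(sub) for sub in node.sub_fields)
    return True


@dataclass
class Point:
    x: int
    y: int


int_or_str = FieldNode(SINGLETON, Union[int, str], [FieldNode(SINGLETON, int), FieldNode(SINGLETON, str)])
int_or_point = FieldNode(SINGLETON, Union[int, Point], [FieldNode(SINGLETON, int), FieldNode(SINGLETON, Point)])
print(is_scalar(int_or_str), is_scalar(int_or_point))  # True False
```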

- def is_pv1_scalar_sequence_field(field: ModelField) -> bool:

- if (field.shape in sequence_shapes) and not lenient_issubclass( # type: ignore[attr-defined]

- field.type_, BaseModel

- ):

- if field.sub_fields is not None: # type: ignore[attr-defined]

- for sub_field in field.sub_fields: # type: ignore[attr-defined]

- if not is_pv1_scalar_field(sub_field):

- return False

- return True

- if _annotation_is_sequence(field.type_):

- return True

- return False

-

- def _normalize_errors(errors: Sequence[Any]) -> List[Dict[str, Any]]:

- use_errors: List[Any] = []

- for error in errors:

- if isinstance(error, ErrorWrapper):

- new_errors = ValidationError( # type: ignore[call-arg]

- errors=[error], model=RequestErrorModel

- ).errors()

- use_errors.extend(new_errors)

- elif isinstance(error, list):

- use_errors.extend(_normalize_errors(error))

- else:

- use_errors.append(error)

- return use_errors

-
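`_normalize_errors` flattens a mix of `ErrorWrapper` instances, nested lists and already-normalized errors into one flat list. The stdlib sketch below keeps the same recursion. `WrappedError` and its `to_dicts` method are hypothetical stand-ins for converting an `ErrorWrapper` through `ValidationError(...).errors()`.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Sequence, Tuple


@dataclass
class WrappedError:
    """Hypothetical stand-in for pydantic v1's ErrorWrapper."""

    loc: Tuple[str, ...]
    msg: str

    def to_dicts(self) -> List[Dict[str, Any]]:
        # Stand-in for building a ValidationError and calling .errors() on it.
        return [{"loc": self.loc, "msg": self.msg}]


def normalize_errors(errors: Sequence[Any]) -> List[Any]:
    """Flatten arbitrarily nested error lists into one flat list."""
    flat: List[Any] = []
    for error in errors:
        if isinstance(error, WrappedError):
            flat.extend(error.to_dicts())  # unwrap into plain dicts
        elif isinstance(error, list):
            flat.extend(normalize_errors(error))  # recurse into nested lists
        else:
            flat.append(error)  # already normalized; keep as-is
    return flat


nested = [WrappedError(("query", "q"), "field required"), [[{"loc": ("body",), "msg": "bad"}]]]
print(normalize_errors(nested))
# [{'loc': ('query', 'q'), 'msg': 'field required'}, {'loc': ('body',), 'msg': 'bad'}]
```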

- def _model_rebuild(model: Type[BaseModel]) -> None:

- model.update_forward_refs()

-

- def _model_dump(

- model: BaseModel, mode: Literal["json", "python"] = "json", **kwargs: Any

- ) -> Any:

- return model.dict(**kwargs)

-

- def _get_model_config(model: BaseModel) -> Any:

- return model.__config__ # type: ignore[attr-defined]

-

- def get_schema_from_model_field(

- *,

- field: ModelField,

- schema_generator: GenerateJsonSchema,

- model_name_map: ModelNameMap,

- field_mapping: Dict[

- Tuple[ModelField, Literal["validation", "serialization"]], JsonSchemaValue

- ],

- separate_input_output_schemas: bool = True,

- ) -> Dict[str, Any]:

- # This expects that GenerateJsonSchema was already used to generate the definitions

- return field_schema( # type: ignore[no-any-return]

- field, model_name_map=model_name_map, ref_prefix=REF_PREFIX

- )[0]

-

- def get_compat_model_name_map(fields: List[ModelField]) -> ModelNameMap:

- models = get_flat_models_from_fields(fields, known_models=set())

- return get_model_name_map(models) # type: ignore[no-any-return]

-

- def get_definitions(

- *,

- fields: List[ModelField],

- schema_generator: GenerateJsonSchema,

- model_name_map: ModelNameMap,

- separate_input_output_schemas: bool = True,

- ) -> Tuple[

- Dict[

- Tuple[ModelField, Literal["validation", "serialization"]], JsonSchemaValue

- ],

- Dict[str, Dict[str, Any]],

- ]:

- models = get_flat_models_from_fields(fields, known_models=set())

- return {}, get_model_definitions(

- flat_models=models, model_name_map=model_name_map

- )

-

- def is_scalar_field(field: ModelField) -> bool:

- return is_pv1_scalar_field(field)

-

- def is_sequence_field(field: ModelField) -> bool:

- return field.shape in sequence_shapes or _annotation_is_sequence(field.type_) # type: ignore[attr-defined]

-

- def is_scalar_sequence_field(field: ModelField) -> bool:

- return is_pv1_scalar_sequence_field(field)

-

- def is_bytes_field(field: ModelField) -> bool:

- return lenient_issubclass(field.type_, bytes)

-

- def is_bytes_sequence_field(field: ModelField) -> bool:

- return field.shape in sequence_shapes and lenient_issubclass(field.type_, bytes) # type: ignore[attr-defined]

-
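On the Pydantic v1 side, the bytes checks rely on `lenient_issubclass`. That helper returns `False` instead of raising when given something that is not a class, such as a typing alias or `None`. Below is a simplified sketch of the helper and the two checks built on it. The string shape names, and the helpers taking `type_`/`shape` directly instead of a `ModelField`, are assumptions for illustration.

```python
from typing import Any, List

# Hypothetical stand-ins for pydantic v1's SHAPE_* constants.
SEQUENCE_SHAPES = {"list", "set", "frozenset", "tuple", "sequence"}


def lenient_issubclass(cls: Any, class_or_tuple: Any) -> bool:
    """issubclass() that returns False for non-class inputs instead of raising."""
    # Simplified: pydantic v1's helper also catches the TypeError that some
    # generic aliases raise on older Python versions.
    return isinstance(cls, type) and issubclass(cls, class_or_tuple)


def is_bytes_type(type_: Any) -> bool:
    return lenient_issubclass(type_, bytes)


def is_bytes_sequence(shape: str, type_: Any) -> bool:
    # For List[bytes], a pydantic v1 ModelField has a list shape and type_ == bytes.
    return shape in SEQUENCE_SHAPES and lenient_issubclass(type_, bytes)


print(lenient_issubclass(List[bytes], bytes))  # False (an alias, not a class)
print(is_bytes_sequence("list", bytes))  # True
```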

- def copy_field_info(*, field_info: FieldInfo, annotation: Any) -> FieldInfo:

- return copy(field_info)

-