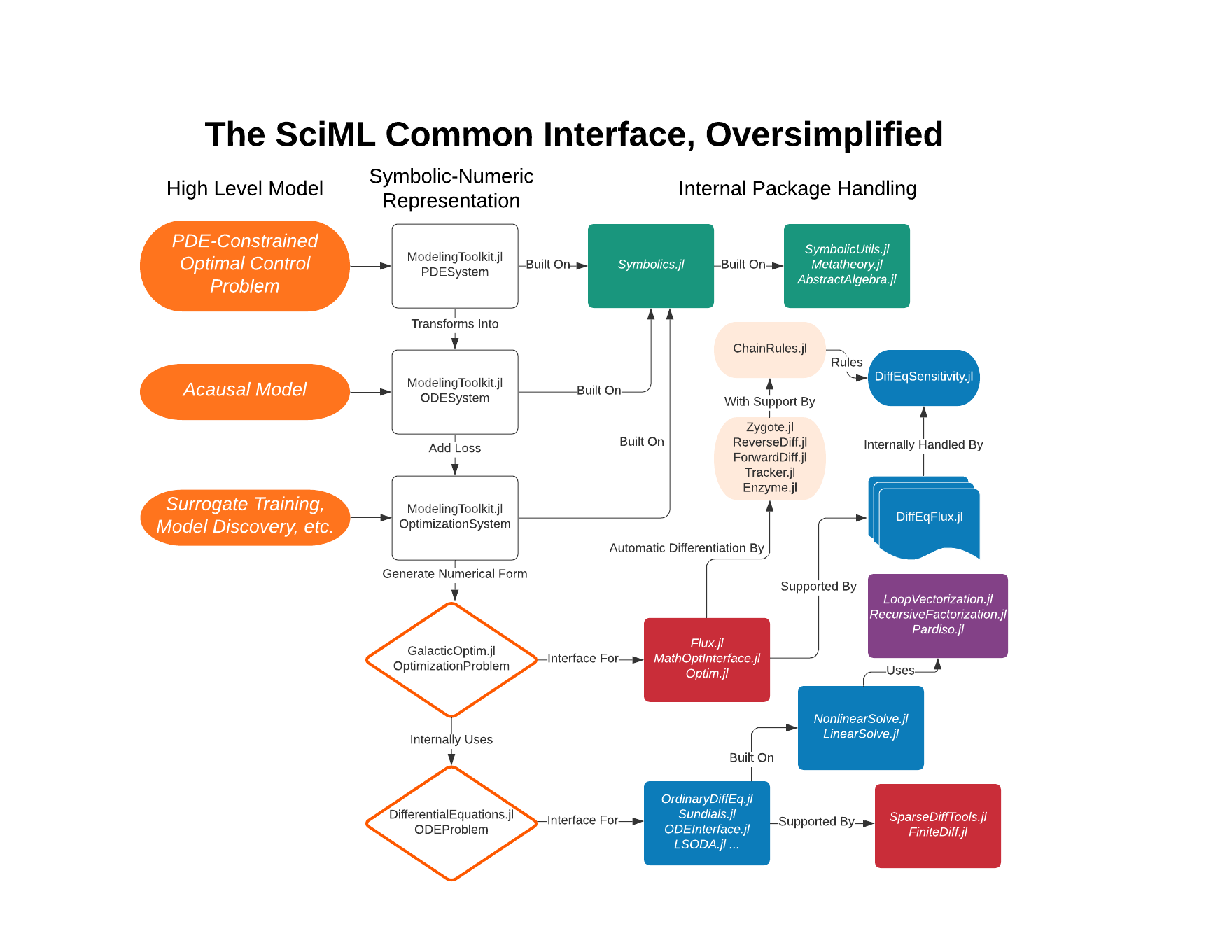

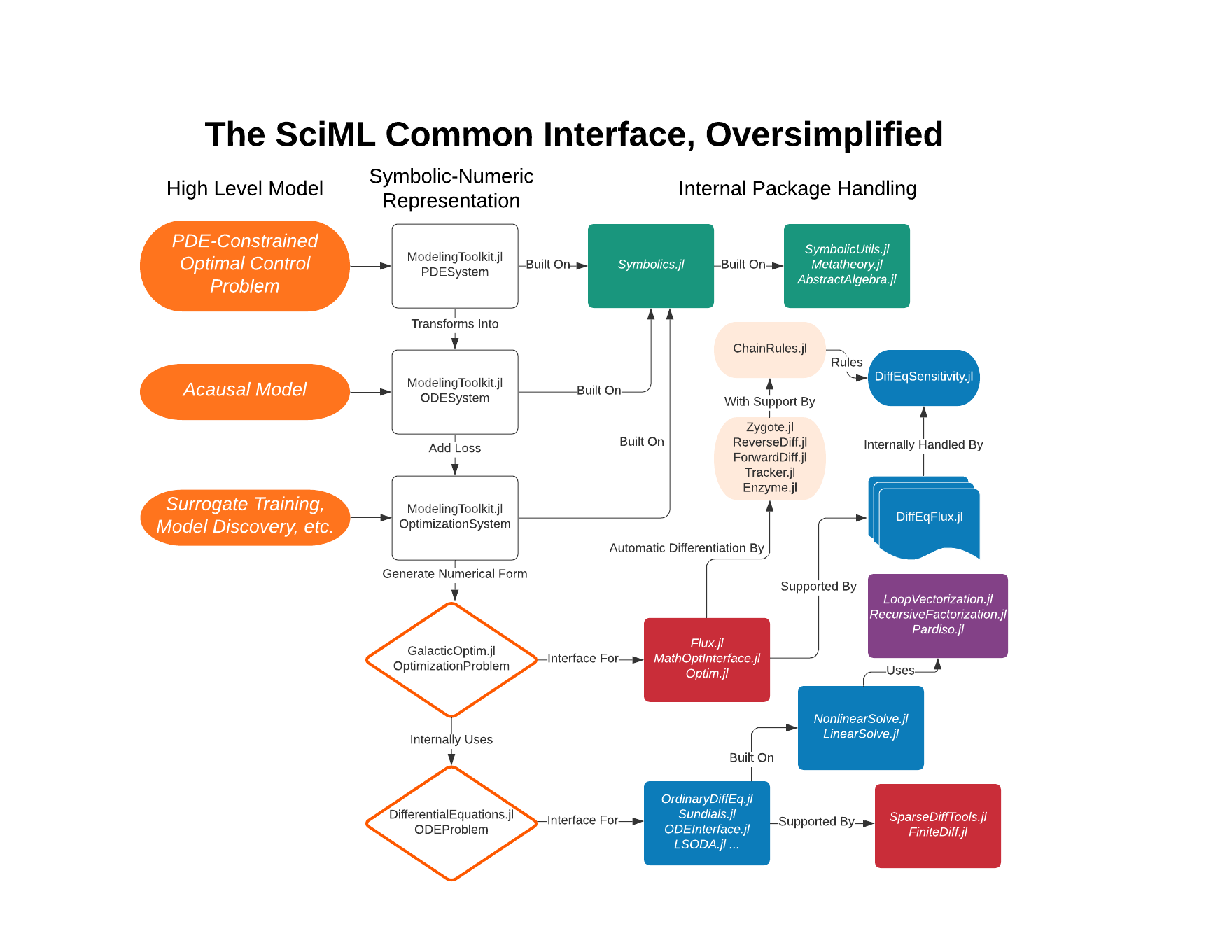

The SciML common interface ties together the numerical solvers of the Julia package ecosystem into a single unified interface. It is designed for maximal efficiency and parallelism, while incorporating essential features for large-scale scientific machine learning such as differentiability, composability, and sparsity.

This documentation is made to pool together the docs of the various SciML libraries to paint the overarching picture, establish development norms, and document the shared/common functionality.

The SciML common interface covers the following domains:

- Linear systems (`LinearProblem`)
  - Direct methods for dense and sparse
  - Iterative solvers with preconditioning
- Nonlinear Systems (`NonlinearProblem`)
  - Rootfinding for systems of nonlinear equations
- Interval Nonlinear Systems
  - Bracketing rootfinders for nonlinear equations with interval bounds
- Integrals (quadrature) (`IntegralProblem`)
- Differential Equations
  - Discrete equations (function maps, discrete stochastic (Gillespie/Markov) simulations) (`DiscreteProblem`)
  - Ordinary differential equations (ODEs) (`ODEProblem`)
  - Split and Partitioned ODEs (Symplectic integrators, IMEX Methods) (`SplitODEProblem`)
  - Stochastic ordinary differential equations (SODEs or SDEs) (`SDEProblem`)
  - Stochastic differential-algebraic equations (SDAEs) (`SDEProblem` with mass matrices)
  - Random differential equations (RODEs or RDEs) (`RODEProblem`)
  - Differential algebraic equations (DAEs) (`DAEProblem` and `ODEProblem` with mass matrices)
  - Delay differential equations (DDEs) (`DDEProblem`)
  - Neutral, retarded, and algebraic delay differential equations (NDDEs, RDDEs, and DDAEs)
  - Stochastic delay differential equations (SDDEs) (`SDDEProblem`)
  - Experimental support for stochastic neutral, retarded, and algebraic delay differential equations (SNDDEs, SRDDEs, and SDDAEs)
  - Mixed discrete and continuous equations (Hybrid Equations, Jump Diffusions) (`AbstractDEProblem`s with callbacks)
- Optimization (`OptimizationProblem`)
  - Nonlinear (constrained) optimization
- (Stochastic/Delay/Differential-Algebraic) Partial Differential Equations (`PDESystem`)
  - Finite difference and finite volume methods
  - Interfaces to finite element methods
  - Physics-Informed Neural Networks (PINNs)
  - Integro-Differential Equations
  - Fractional Differential Equations

The SciML common interface also includes ModelingToolkit.jl for defining such systems symbolically, allowing for optimizations like automated generation of parallel code, symbolic simplification, and generation of sparsity patterns.

In addition to the purely numerical representations of mathematical objects, there are also sets of problem types associated with common mathematical algorithms. These are:

- Data-driven modeling
  - Discrete-time data-driven dynamical systems (`DiscreteDataDrivenProblem`)
  - Continuous-time data-driven dynamical systems (`ContinuousDataDrivenProblem`)
  - Symbolic regression (`DirectDataDrivenProblem`)
- Uncertainty quantification and expected values (`ExpectationProblem`)

We note that parameter estimation and inverse problems are solved directly on their constituent problem types using tools like DiffEqFlux.jl. Thus, for example, there is no `ODEInverseProblem`; instead, `ODEProblem` is used to find the parameters `p` that solve the inverse problem.
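To make this concrete, here is a minimal plain-Julia sketch that runs without any SciML packages, assuming a scalar decay model `u' = -p u` integrated with a fixed-step Euler method. The inverse problem is expressed only as a loss over repeated forward solves of the same problem with different `p`; the helper `with_params` plays the role that `remake(prob; p = ...)` plays in SciMLBase. `ToyODEProblem`, `with_params`, `euler_solve`, and `golden_min` are hypothetical names, and a derivative-free golden-section search is swapped in for the gradient-based optimizers with automatic differentiation that the SciML tooling normally uses.

```julia
# Hypothetical toy problem type: du/dt = f(u, p, t) on tspan with parameters p.
struct ToyODEProblem{F,U,T,P}
    f::F
    u0::U
    tspan::Tuple{T,T}
    p::P
end

# Rebuild the same problem with new parameters (the role `remake` plays in SciMLBase).
with_params(prob::ToyODEProblem, p) = ToyODEProblem(prob.f, prob.u0, prob.tspan, p)

# Fixed-step explicit Euler; returns the state at every step, including u0.
function euler_solve(prob::ToyODEProblem; dt = 0.01)
    t0, t1 = prob.tspan
    u = prob.u0
    us = [u]
    for k in 0:(round(Int, (t1 - t0) / dt) - 1)
        u = u + dt * prob.f(u, prob.p, t0 + k * dt)
        push!(us, u)
    end
    return us
end

decay(u, p, t) = -p * u
prob = ToyODEProblem(decay, 1.0, (0.0, 2.0), 0.5)   # p = 0.5 is only an initial guess

# Synthetic "measurements" generated with the true parameter p = 1.5.
data = euler_solve(with_params(prob, 1.5))

# The inverse problem is a loss over forward solves of the same ODE problem.
loss(p) = sum(abs2, euler_solve(with_params(prob, p)) .- data)

# Derivative-free golden-section search; assumes the loss is unimodal on [a, b].
function golden_min(g, a, b; tol = 1e-8)
    r = (sqrt(5) - 1) / 2
    c, d = b - r * (b - a), a + r * (b - a)
    while b - a > tol
        if g(c) < g(d)
            b, d = d, c              # minimum lies in [a, d]
            c = b - r * (b - a)
        else
            a, c = c, d              # minimum lies in [c, b]
            d = a + r * (b - a)
        end
    end
    return (a + b) / 2
end

p_est = golden_min(loss, 0.0, 5.0)   # recovers p ≈ 1.5
```

The design point is that no new problem type is needed: the forward problem is simply re-solved inside the objective, which is why the real ecosystem can reuse `ODEProblem` and its solvers for parameter estimation.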

The SciML interface is common in the sense that the usage of arguments is standardized across all of the problem domains. Underlying high-level ideas include:

- All domains use the same interface of defining an `AbstractSciMLProblem`, which is then solved via `solve(prob, alg; kwargs...)`, where `alg` is an `AbstractSciMLAlgorithm`. The keyword argument names are standardized across the organization.
- `AbstractSciMLProblem`s are generally defined by a `SciMLFunction`, which can define extra details about a model function, such as its analytical Jacobian, its sparsity patterns, and so on.
- There is an organization-wide method for defining linear and nonlinear solvers used within other solvers, giving maximum control of performance to the user.
- Types used within the packages are defined by the input types. For example, packages attempt to internally use the type of the initial condition as the type for the state within differential equation solvers.
- `solve` calls should be thread-safe and parallel-safe.
- `init(prob, alg; kwargs...)` returns an iterator which allows for directly iterating over the solution process.
- High performance is key. Any performance that is not at the top level is considered a bug and should be reported as such.
- All functions have an in-place and out-of-place form, where the in-place form is made to utilize mutation for high performance on large-scale problems and the out-of-place form is for compatibility with tooling like static arrays and some reverse-mode automatic differentiation systems.
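The following is a minimal sketch of how several of these ideas fit together, written in plain Julia so it runs without any SciML packages. All names (`AbstractToyProblem`, `AbstractToyAlgorithm`, `ToyODE`, `ToyEuler`, `toy_solve`) are hypothetical stand-ins, not SciMLBase's types or its `solve` implementation. The sketch only illustrates three patterns: dispatching on a (problem, algorithm) pair, selecting in-place or out-of-place code through a type parameter (loosely mirroring the `ODEProblem{true}` convention), and letting the input types determine the state type.

```julia
# Hypothetical stand-ins for AbstractSciMLProblem and AbstractSciMLAlgorithm.
abstract type AbstractToyProblem end
abstract type AbstractToyAlgorithm end

# `iip` (true/false) records whether f is in-place.
struct ToyODE{iip,F,U,T} <: AbstractToyProblem
    f::F
    u0::U
    tspan::Tuple{T,T}
end
ToyODE{iip}(f, u0, tspan::Tuple{T,T}) where {iip,T} =
    ToyODE{iip,typeof(f),typeof(u0),T}(f, u0, tspan)

struct ToyEuler <: AbstractToyAlgorithm end   # fixed-step explicit Euler

# Out-of-place form: f(u, t) returns a new derivative value.
function toy_solve(prob::ToyODE{false,F,U,T}, ::ToyEuler; dt) where {F,U,T}
    t0, t1 = prob.tspan
    h = convert(T, dt)               # step size follows the number type of tspan
    u = prob.u0
    for k in 0:(round(Int, (t1 - t0) / h) - 1)
        u = u + h * prob.f(u, t0 + k * h)
    end
    return u
end

# In-place form: f!(du, u, t) overwrites a preallocated buffer each step.
function toy_solve(prob::ToyODE{true,F,U,T}, ::ToyEuler; dt) where {F,U,T}
    t0, t1 = prob.tspan
    h = convert(T, dt)
    u = copy(prob.u0)                # never mutate the user's initial condition
    du = similar(u)
    for k in 0:(round(Int, (t1 - t0) / h) - 1)
        prob.f(du, u, t0 + k * h)
        u .+= h .* du
    end
    return u
end

f_oop(u, t) = -u                          # out-of-place: returns du/dt
f_iip!(du, u, t) = (du .= .-u; nothing)   # in-place: writes du/dt into du

prob_oop = ToyODE{false}(f_oop, 1.0f0, (0.0f0, 1.0f0))   # Float32 in...
u_oop = toy_solve(prob_oop, ToyEuler(); dt = 0.1)        # ...Float32 out

prob_iip = ToyODE{true}(f_iip!, [1.0, 2.0], (0.0, 1.0))
u_iip = toy_solve(prob_iip, ToyEuler(); dt = 0.1)
```

In the real interface the model functions also receive parameters, with `f(u, p, t)` and `f!(du, u, p, t)` signatures for ODEs, and the algorithm objects come from the solver packages rather than being defined alongside the problem.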

User-facing solver and modeling libraries built on the common interface include:

- DifferentialEquations.jl
  - Multi-package interface of high-performance numerical solvers of differential equations
- ModelingToolkit.jl
  - The symbolic modeling package which implements the SciML symbolic common interface.
- LinearSolve.jl
  - Multi-package interface for specifying linear solvers (direct, sparse, and iterative), along with tools for caching and preconditioners for use in large-scale modeling.
- NonlinearSolve.jl
  - High-performance numerical solving of nonlinear systems.
- Integrals.jl
  - Multi-package interface for high-performance, batched, and parallelized numerical quadrature.
- Optimization.jl
  - Multi-package interface for numerical solving of optimization problems.
- NeuralPDE.jl
  - Physics-Informed Neural Network (PINN) package for transforming partial differential equations into optimization problems.
- DiffEqOperators.jl
  - Automated finite difference method (FDM) package for transforming partial differential equations into nonlinear problems and ordinary differential equations.
- DiffEqFlux.jl
  - High-level package for scientific machine learning applications, such as neural and universal differential equations, solving of inverse problems, parameter estimation, nonlinear optimal control, and more.
- DataDrivenDiffEq.jl
  - Multi-package interface for data-driven modeling, Koopman dynamic mode decomposition, symbolic regression/sparsification, and automated model discovery.
- SciMLExpectations.jl
  - Extension to the dynamical modeling tools for calculating expectations.

The interface itself is defined and supported by the following libraries:

- SciMLBase.jl
  - The core package defining the interface which is consumed by the modeling and solver packages.
- DiffEqBase.jl
  - The core package defining the extended interface which is consumed by the differential equation solver packages.
- SciMLSensitivity.jl
  - A package which pools together the definition of derivative overloads to define the common `sensealg` automatic differentiation interface.
- DiffEqNoiseProcess.jl
  - A package which defines the stochastic `AbstractNoiseProcess` interface for the SciML ecosystem.
- RecursiveArrayTools.jl
  - A package which defines the underlying `AbstractVectorOfArray` structure used as the output for all time series results.
- ArrayInterface.jl
  - The package which defines the extended `AbstractArray` interface employed throughout the SciML ecosystem.

There are too many to name here and this will be populated when there is time!

The pieces of the common interface connect to solve problems that mix inference, symbolics, and numerics.

Bindings to the common interface from other languages include:

- diffeqr
  - Solving differential equations in R using DifferentialEquations.jl, with ModelingToolkit for JIT compilation and GPU acceleration
- diffeqpy
  - Solving differential equations in Python using DifferentialEquations.jl

There are too many to name here. Check out the SciML Organization GitHub page for details.

The documentation of this SciML package was built using these direct dependencies,

Status `~/work/SciMLBase.jl/SciMLBase.jl/docs/Project.toml`

[e30172f5] Documenter v1.2.1

[961ee093] ModelingToolkit v8.75.0

[0bca4576] SciMLBase v2.19.0 `~/work/SciMLBase.jl/SciMLBase.jl`

and using this machine and Julia version.

Julia Version 1.10.0

Commit 3120989f39b (2023-12-25 18:01 UTC)

Build Info:

Official https://julialang.org/ release

A partial listing of the full dependency manifest:

[2a0fbf3d] CPUSummary v0.2.4

[00ebfdb7] CSTParser v3.4.0

[49dc2e85] Calculus v0.5.1

[d360d2e6] ChainRulesCore v1.19.1

[fb6a15b2] CloseOpenIntervals v0.1.12

[861a8166] Combinatorics v1.0.2

[a80b9123] CommonMark v0.8.12

[38540f10] CommonSolve v0.2.4

[bbf7d656] CommonSubexpressions v0.3.0

[34da2185] Compat v4.12.0

[b152e2b5] CompositeTypes v0.1.3

[2569d6c7] ConcreteStructs v0.2.3

[187b0558] ConstructionBase v1.5.4

[d236fae5] PreallocationTools v0.4.17

[aea7be01] PrecompileTools v1.2.0

[21216c6a] Preferences v1.4.1

[1fd47b50] QuadGK v2.9.4

[e6cf234a] RandomNumbers v1.5.3

[3cdcf5f2] RecipesBase v1.3.4

[731186ca] RecursiveArrayTools v3.5.2

[7e49a35a] RuntimeGeneratedFunctions v0.5.12

[94e857df] SIMDTypes v0.1.0

[476501e8] SLEEFPirates v0.6.42

[0bca4576] SciMLBase v2.19.0 `~/work/SciMLBase.jl/SciMLBase.jl`

[c0aeaf25] SciMLOperators v0.3.7

[efcf1570] Setfield v1.1.1

[727e6d20] SimpleNonlinearSolve v1.2.1

[699a6c99] SimpleTraits v0.9.4

[ce78b400] SimpleUnPack v1.1.0

[a2af1166] SortingAlgorithms v1.2.1

[276daf66] SpecialFunctions v2.3.1

[aedffcd0] Static v0.8.8

[0d7ed370] StaticArrayInterface v1.5.0

[90137ffa] StaticArrays v1.9.1

[1e83bf80] StaticArraysCore v1.4.2

[82ae8749] StatsAPI v1.7.0

[2913bbd2] StatsBase v0.34.2

[3d5dd08c] VectorizationBase v0.21.65

[19fa3120] VertexSafeGraphs v0.2.0

[2e619515] Expat_jll v2.5.0+0

[f8c6e375] Git_jll v2.43.0+0

[1d5cc7b8] IntelOpenMP_jll v2024.0.2+0

[94ce4f54] Libiconv_jll v1.17.0+0

[856f044c] MKL_jll v2024.0.0+0

[8e850b90] libblastrampoline_jll v5.8.0+1

[8e850ede] nghttp2_jll v1.52.0+1

[3f19e933] p7zip_jll v17.4.0+2

You can also download the manifest file and the project file.