An FPGA-based system that monitors face mask use through artificial intelligence and includes a thermometer and a face mask dispenser.

- Facemask-Detector-ZCU104

- Welcome

- Table of contents

- Introduction

- Problem

- Solution

- Materials

- Connection Diagram

- Project:

- Final Product

- Epic DEMO

- References

- APPENDIX A

COVID-19 has changed our daily lives and continues to do so. Many retail stores and companies have gone out of business, unable to offer their services to their clients. Others, which have opted to reopen, have had to adapt to the times. This includes public institutions such as museums and libraries. All these establishments have taken precautionary measures and introduced new regulations, such as requiring customers and/or visitors to wear face masks at all times.

Given this new environment, businesses have had to create new kinds of jobs or tasks. One of these is checking that customers wear a mask and taking their temperature before they enter the establishment. This puts at risk not only the worker who checks the customers but also the customers who enter the place.

This job costs at least $30,000 per worker per year [1], and if that employee were to become ill with COVID-19, US law would require the employer to pay them up to $511 a day [2].

These costs (both monetary and health-wise) for an employee who performs a repetitive task are excessive but necessary, because current solutions are not yet sufficient to replace this position.

- The Xovis all-in-one solution - https://www.xovis.com/en/products/detail/face-mask-detection/

    - Face mask detection only, without temperature measurement or access control.

- SecurOS™ Face Mask Detection - https://issivs.com/facemask/

    - Face mask detection only, without temperature measurement or access control.

- Leewayhertz - https://www.leewayhertz.com/face-mask-detection-system/

    - Face mask detection only, without temperature measurement or access control.

Most of the devices on the market only detect mask use; this project, however, seeks to provide a complete access control solution.

[1] https://www.ziprecruiter.com/Salaries/Retail-Security-Officer-Salary

[2] https://www.dol.gov/sites/dolgov/files/WHD/posters/FFCRA_Poster_WH1422_Non-Federal.pdf

Hardware:

- ZCU104. x1. https://www.xilinx.com/products/boards-and-kits/zcu104.html

- ESP32. https://www.adafruit.com/product/3405

- DEVMO 8448 LCD. https://www.amazon.com/dp/B07R55C8PV/ref=cm_sw_em_r_mt_dp_giyUFbRP14KMC

- MLX90614ESF Non-contact Infrared Temperature Sensor. x1. https://www.amazon.com/dp/B071VF2RWM/ref=cm_sw_em_r_mt_dp_UgyUFbECN8FZT?_encoding=UTF8&psc=1

- Micro Digital Servo Motor. x1. https://www.amazon.com/dp/B01M5LIKLQ/ref=cm_sw_em_r_mt_dp_4jyUFb74BZM780

- TP-Link TL-WN823N USB WiFi Adapter. x1. https://www.amazon.com/dp/B0088TKTY2/ref=cm_sw_em_r_mt_dp_.CyUFbCJWK6S3

- UGREEN USB Bluetooth 4.0 Adapter x1. https://www.amazon.com/dp/B01LX6HISL/ref=cm_sw_em_r_mt_dp_U_iK-BEbFBQ76BW

- See3CAM_CU30 - 3.4 MP. (Included in the kit) x1. https://www.e-consystems.com/ar0330-lowlight-usb-cameraboard.asp

- 16 GB MicroSD Card (Included in the kit). x1. https://www.amazon.com/dp/B07CMW9ZQ6/ref=cm_sw_em_r_mt_dp_dNyUFbJQ1MAR5?_encoding=UTF8&psc=1

- 12-5A AC/DC Adapter Power Supply Jack Connector. (Included in the kit) x1. https://www.amazon.com/dp/B08764G7HT/ref=cm_sw_em_r_mt_dp_UKyUFbTW57FT4?_encoding=UTF8&psc=1

- Targus 4-Port USB 3.0 Hub (Included in the kit). x1. https://www.amazon.com/dp/B00P937GQ4/ref=cm_sw_em_r_mt_dp_BIyUFbRTB52V3

Software:

- Pynq: http://www.pynq.io/

- DPU Pynq: https://github.com/Xilinx/DPU-PYNQ

- TensorFlow: https://www.tensorflow.org/

- Keras: https://keras.io/

- OpenCV: https://opencv.org/

- Arduino IDE: https://www.arduino.cc/en/Main/Software

- Python: https://www.python.org/

This is the connection diagram of the system:

ZCU104 Processing:

In order to solve the problem of detecting mask use, we need a CNN (convolutional neural network) capable of identifying whether a human face is wearing a mask.

As we know, training a CNN requires a large number of images. These serve as examples from which the convolutions learn to filter the relevant features of the images, so that the network can give a correct result.
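To make the idea of convolutions "filtering the features" of an image concrete, here is a minimal sketch of a single convolution filter in plain Python. It is only an illustration: the project's network is built with Keras/TensorFlow, and the 3x3 edge filter below is a hand-picked example, whereas a CNN learns its filter weights from the training images.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (lists of lists) and return the feature map.

    "Valid" convolution: stride 1, no padding. Like most deep learning
    libraries, this computes cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            acc = 0
            for ky in range(kh):
                for kx in range(kw):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# Hand-picked (not learned) 3x3 filter that responds where brightness
# increases from left to right, i.e. a vertical edge.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

if __name__ == "__main__":
    # Toy 4x5 image: dark on the left, bright on the right.
    image = [[0, 0, 9, 9, 9] for _ in range(4)]
    print(conv2d(image, VERTICAL_EDGE))  # prints [[27, 27, 0], [27, 27, 0]]
```

The large values mark where the edge is; the zero column is a flat bright region. Stacking many such filters, with weights learned during training, is what lets the CNN pick out features such as the outline of a mask.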

In this case, we used 1916 positive images and 1930 negative images as the dataset.

https://www.kaggle.com/altaga/facemaskdataset https://github.com/altaga/Facemask-Detector-ZCU104/tree/main/facemask-dataset

I invite you to review the dataset and check the images yourself. The folder structure is:

- Dataset
    - Yes
        - IMG#
        - IMG#
    - No
        - IMG#
        - IMG#
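A minimal sketch of how this folder layout could be turned into labeled samples, assuming the images are .jpg/.jpeg/.png files and that "No" maps to label 0 and "Yes" to label 1. The helper names `list_dataset` and `train_val_split`, and the 20% validation split, are hypothetical; the training notebook may load and split the data differently.

```python
import random
from pathlib import Path

# Assumed label encoding: folder "No" -> 0 (no mask), folder "Yes" -> 1 (mask).
CLASS_NAMES = ("No", "Yes")
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_dataset(root):
    """Return (path, label) pairs for every image under root/No and root/Yes."""
    samples = []
    for label, class_name in enumerate(CLASS_NAMES):
        class_dir = Path(root) / class_name
        for path in sorted(class_dir.iterdir()):
            # Skip anything that is not an image (notes, hidden files, ...).
            if path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((path, label))
    return samples


def train_val_split(samples, val_fraction=0.2, seed=0):
    """Shuffle deterministically and split into (train, validation) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]
```

Usage: `train, val = train_val_split(list_dataset("Dataset"))`. With the 3846 images described above (1916 + 1930), a 0.2 validation fraction holds out int(769.2) = 769 images and leaves 3077 for training. The fixed seed keeps the split reproducible between runs.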

The classification that we seek to achieve with this CNN is the following:

To train the neural network correctly, you need the AI environment that Xilinx provides for building models for the DPU (Vitis AI), which runs on Ubuntu 18.04.3.

http://old-releases.ubuntu.com/releases/18.04.3/

NOTE: THE ENVIRONMENT IS ONLY COMPATIBLE WITH THIS UBUNTU VERSION. ONCE YOU INSTALL THE VIRTUAL MACHINE, DO NOT UPDATE ANYTHING, OTHERWISE YOU WILL NOT BE ABLE TO USE THE ENVIRONMENT AND YOU WILL HAVE TO INSTALL EVERYTHING AGAIN.

In my case, I use a Windows 10 machine, so I had to do the training in a VMware virtual machine.

https://www.vmware.com/mx.html

The environment can be installed with either GPU or CPU support. Since I am using a virtual machine, I will use the CPU installation.

https://github.com/Xilinx/Vitis-AI

Open a Linux terminal and run the following commands.

In the Scripts folder I have included several .sh files that make it easy to install everything needed. These files must be in your home directory for them to work properly.

- Install Docker (1 - 2 minutes). If you already have Docker, skip to install_vitis.sh.

sudo bash install_docker.sh

- Install Vitis AI (10 - 20 minutes).

sudo bash install_vitis.sh

NOTE: install only one of the following environments, according to your preference.

Then install CPU or GPU support:

- CPU environment (20 - 30 minutes).

sudo bash install_cpu.sh

OR

- GPU environment (20 - 30 minutes).

sudo bash install_gpu.sh

- Start the base environment.

sudo bash run_env.sh

- Activate the Vitis AI TensorFlow environment.

conda activate vitis-ai-tensorflow

- Run this command once (IMPORTANT).

yes | pip install matplotlib keras==2.2.5

If you did everything right, you should see a console like this one.

You can see the contents of the scripts in Appendix A.

To carry out the training, copy all the files inside the "Setup Notebook and Dataset" repository folder to the Vitis-AI folder, as shown in the picture, so that the code runs properly.

Now run the following command in the console to open Jupyter Notebook.

jupyter notebook --allow-root

Open your browser, paste the link that appears in the terminal, and open the notebook file.

Once the notebook is open, use the Kernel menu to run all the cells, as shown in the image.

All the code is explained in detail. To understand it fully, please review it.

After the execution, if everything worked well, we should see the following result.

From this process we will obtain a file called "dpu_face_binary_classifier_0.elf".

This file contains the compiled model that we will use later; a copy is already provided in the "Main Notebook" folder.
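When the compiled model runs, the classifier's raw outputs still have to be mapped to a label on the CPU side. A minimal sketch, assuming the network ends in a two-unit output that is read back as raw scores (logits), with index 0 = no mask and index 1 = mask (the alphabetical order of the "No"/"Yes" folders, which is what Keras uses if the notebook loads them by directory). The label order and the `classify` helper are assumptions; check them against the notebooks before relying on them.

```python
import math

# Assumed output order: index 0 = "No" folder, index 1 = "Yes" folder.
LABELS = ("No mask", "Mask")


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return (label, probability) for the most likely class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]
```

For example, `classify([0.0, 2.0])` picks "Mask" with a probability of about 0.88. Computing the softmax on the CPU is cheap for two classes, so it does not affect the DPU throughput.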

The board setup is very simple. First, download the PYNQ operating system image for the ZCU104.

Once you have it, flash the image onto the SD card included in the kit with a program such as balenaEtcher. I recommend a card of at least 16 GB.

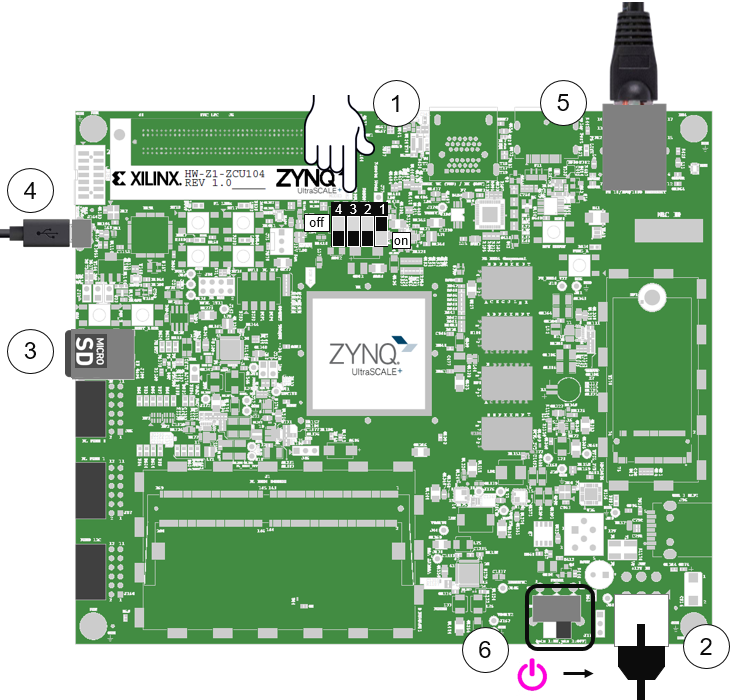

Before inserting the SD card and powering on the board, check that the boot-mode switches on the board are in the following position. This enables booting from the SD card.

Steps to follow when turning on the board for the first time:

- Connect the 12V power cable. Note that the connector is keyed and can only be connected one way.

- Insert the microSD card loaded with the appropriate PYNQ image into the microSD card slot underneath the board.

- Connect the board's Ethernet port to your PC.

- Assign a static IP to your PC's Ethernet connection so that you can access the board. Follow the instructions in the official documentation:

https://pynq.readthedocs.io/en/v2.5.1/appendix.html#assign-your-computer-a-static-ip

- Turn on the board and check the boot sequence.

Once the board has finished booting, enter the following IP address in your browser to access the Jupyter Notebook portal.

Password: xilinx

First, we will run a small test to check that the operating system is working correctly by setting up the WiFi connection. Besides confirming that the OS was installed correctly, this will let us download the missing libraries for our project.

NOTE: As mentioned in the materials section, the board needs an external USB WiFi adapter to connect to the internet.

If everything works well, we will get the following response from the ping line:

Now we will install the missing libraries needed to run the DPU on the ZCU104. Open a terminal as shown in the image.

In the terminal, enter the following command; you can copy and paste it all at once.

git clone --recursive --shallow-submodules https://github.com/Xilinx/DPU-PYNQ \
&& cd DPU-PYNQ/upgrade \
&& make \
&& pip3 install pynq-dpu \
&& cd $PYNQ_JUPYTER_NOTEBOOKS \
&& pynq get-notebooks pynq-dpu -p .

This process may take some time depending on your internet connection.

To test the model, download the GitHub repository to the board with the following command.

git clone https://github.com/altaga/Facemask-Detector-ZCU104

If you prefer, you can also transfer only the files from the "Test Notebook" and "Main Notebook" folders to the board.

Inside the Test Notebook folder, open the file "Facemask-ZCU104.ipynb".

All the code is explained in detail; please review it to go deeper.

When running everything, we should see an image like this, which means that everything has worked correctly.

For this contest, I thought it was important to demonstrate the superiority of FPGAs over conventional hardware and dedicated AI hardware when processing neural networks. So I adapted my model's code to run on two other boards that any developer could have.

Because all the programs display the result on screen, which takes a lot of time, the FPS was calculated with the following algorithm.
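One way to keep display time out of the measurement is to time only the inference calls. A minimal sketch, with a hypothetical `measure_fps` helper that accepts any inference callable; the benchmark notebooks may implement the measurement differently.

```python
import time


def measure_fps(infer, frames, warmup=5):
    """Estimate inference-only frames per second.

    `infer` is any callable that runs the model on one frame. Drawing and
    displaying results are deliberately left outside the timed region,
    since on-screen display would otherwise dominate the measurement.
    """
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame")
    # A few untimed runs let caches and lazy initialization settle first.
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed
```

`time.perf_counter()` is used because it is a monotonic, high-resolution clock intended for measuring short intervals. Running the same helper with each board's inference call keeps the comparison consistent.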

- Raspberry Pi 4 (4 GB):
    - TensorFlow Lite model.
    - TensorFlow Lite optimized model.
- Jetson Nano (4 GB):
    - TensorFlow model, MAX power mode.
    - TensorFlow model, 5W power mode.
- ZCU104:
    - Vitis AI optimized Keras model.

| Board | Model | Mode | FPS |
|---|---|---|---|
| Rpi 4 - 4gb | TfLite | Standard | 55 |
| Rpi 4 - 4gb | TfLite Optimized | Standard | 47 |
| Jetson Nano | Tf Model | Max | 90 |
| Jetson Nano | TfLite | 5W | 41 |
| ZCU104 | Vitis AI Optimized Model | Standard | 400 |
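To put the table in perspective, the ZCU104 throughput can be expressed as a speedup over each of the other configurations. The FPS values below are copied from the benchmark table; the snippet only does the division.

```python
# FPS values copied from the benchmark table: (board, model, mode) -> FPS.
BENCHMARKS = {
    ("Rpi 4 - 4gb", "TfLite", "Standard"): 55,
    ("Rpi 4 - 4gb", "TfLite Optimized", "Standard"): 47,
    ("Jetson Nano", "Tf Model", "Max"): 90,
    ("Jetson Nano", "TfLite", "5W"): 41,
}
ZCU104_FPS = 400  # Vitis AI optimized model, standard mode


def speedups(results, reference_fps):
    """Ratio of the reference FPS to each configuration's FPS."""
    return {key: reference_fps / fps for key, fps in results.items()}


if __name__ == "__main__":
    for (board, model, mode), ratio in speedups(BENCHMARKS, ZCU104_FPS).items():
        print(f"ZCU104 vs {board} ({model}, {mode}): {ratio:.1f}x")
```

This gives roughly 4.4x over the Jetson Nano in MAX mode and about 9.8x over the Jetson Nano in 5W mode, with the Raspberry Pi 4 results in between (about 7.3x and 8.5x).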

For more detail, go to the Benchmarks Notebooks folder. Each subfolder contains the code to train and run the model(s), as well as video and photographic evidence of how it works in real time.

https://github.com/altaga/Facemask-Detector-ZCU104/tree/main/Benchmarks%20Notebooks

To measure the user's temperature and control the face mask dispenser, we built the following circuit with an ESP32.

The device is controlled over BLE (Bluetooth Low Energy) to minimize battery consumption.

The display shows different messages according to the results from the ZCU104 and the temperature sensor.

This page converts images into a format that can be shown on the screen. The images have to be drawn almost by hand, like pixel art, to display well:

https://sparks.gogo.co.nz/pcd8554-bmp.html
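The converter produces byte arrays for the display. Assuming the 84x48 LCD uses a PCD8544-compatible controller (as the converter page's name suggests), the packing it performs can be sketched as follows: the controller stores the screen as 6 banks of 8 pixel rows, one byte per 8-pixel vertical column, with the least significant bit at the top. `pack_pcd8544` is a hypothetical helper, not the converter's own code.

```python
WIDTH, HEIGHT = 84, 48  # assumed PCD8544 ("Nokia 5110"-style) resolution


def pack_pcd8544(pixels):
    """Pack a HEIGHT x WIDTH grid of 0/1 pixels into the 504-byte PCD8544 layout.

    The display RAM is organized as 6 horizontal banks of 8 pixel rows.
    Each byte is one vertical 8-pixel column inside a bank (bit 0 = top
    row), and bytes run left to right across the bank.
    """
    if len(pixels) != HEIGHT or any(len(row) != WIDTH for row in pixels):
        raise ValueError("expected a 48-row by 84-column image")
    buffer = bytearray(WIDTH * HEIGHT // 8)
    for bank in range(HEIGHT // 8):
        for x in range(WIDTH):
            byte = 0
            for bit in range(8):
                if pixels[bank * 8 + bit][x]:
                    byte |= 1 << bit
            buffer[bank * WIDTH + x] = byte
    return bytes(buffer)
```

The resulting 504 bytes could then be pasted into the ESP32 sketch as a constant array, which is why images need clean, pixel-art-style edges: every pixel maps to exactly one bit.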

Description:

While no reading is taking place, the display shows the following message.

If the user does not have a face mask, we display this message.

Before taking the temperature, this image is displayed so that the user brings their hand next to the sensor.

If the user wears a mask but their temperature is too high:

If the user wears a mask and their temperature is normal:

Here is an example on the actual screen.

If the ZCU104 reading indicates that the person does not have a facemask, the servomotor will offer one to the customer so that the customer can move on to the temperature measurement stage.

When the ZCU104 indicates that the person is wearing a mask, the temperature of the hand will be taken.
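The decision flow described above can be summarized as a small state function. This is a hedged Python sketch with hypothetical names (`Step`, `next_step`); in the project this logic is split between the ESP32 firmware and the notebook in the "Main Notebook" folder, so it only illustrates the sequence of steps. It also assumes that, after a mask is dispensed, the ZCU104 confirms the mask before the temperature stage starts.

```python
from enum import Enum


class Step(Enum):
    IDLE = "idle"
    DISPENSE_MASK = "dispense mask"
    MEASURE_TEMPERATURE = "measure temperature"
    DENY = "deny entry"
    ALLOW = "allow entry"


def next_step(wearing_mask, temperature_ok=None):
    """Choose the next step of the access-control flow.

    wearing_mask: ZCU104 result (True/False), or None while no reading is in progress.
    temperature_ok: None until the temperature has been measured.
    """
    if wearing_mask is None:
        return Step.IDLE
    if not wearing_mask:
        # The servo offers a mask; the customer is checked again afterwards.
        return Step.DISPENSE_MASK
    if temperature_ok is None:
        return Step.MEASURE_TEMPERATURE
    return Step.ALLOW if temperature_ok else Step.DENY
```

Each returned step corresponds to one of the display messages listed earlier, which keeps the screen contents and the device state in sync.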

To estimate the real (internal) body temperature, we fitted a multivariable linear regression model that relates the temperature of the back of the hand and the ambient temperature to the internal body temperature.
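The coefficients of the project's regression are not given here, so the following is only a sketch of the technique: an ordinary least-squares fit of T_core = b0 + b1·T_hand + b2·T_ambient, solved through the normal equations in plain Python. The MLX90614 reports both an object and an ambient temperature, which supplies the two inputs; fitting also requires a reference core temperature for each reading. The function names and any data used with them are hypothetical.

```python
def fit_core_temperature(hand, ambient, core):
    """Least-squares fit of core = b0 + b1*hand + b2*ambient; returns (b0, b1, b2).

    Builds the 3x3 normal equations (X^T X) b = X^T y and solves them with
    Gaussian elimination with partial pivoting.
    """
    rows = [(1.0, h, a) for h, a in zip(hand, ambient)]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, core)) for i in range(3)]
    aug = [xtx[i] + [xty[i]] for i in range(3)]  # augmented matrix [X^T X | X^T y]
    for col in range(3):
        # Partial pivoting: use the row with the largest entry in this column.
        pivot = max(range(col, 3), key=lambda k: abs(aug[k][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("readings are collinear; vary hand and ambient temperatures")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for k in range(col + 1, 3):
            factor = aug[k][col] / aug[col][col]
            for c in range(col, 4):
                aug[k][c] -= factor * aug[col][c]
    # Back substitution on the upper-triangular system.
    coeffs = [0.0, 0.0, 0.0]
    for i in range(2, -1, -1):
        rest = sum(aug[i][j] * coeffs[j] for j in range(i + 1, 3))
        coeffs[i] = (aug[i][3] - rest) / aug[i][i]
    return tuple(coeffs)


def estimate_core(coeffs, hand_temp, ambient_temp):
    """Apply a fitted model to a new pair of sensor readings."""
    b0, b1, b2 = coeffs
    return b0 + b1 * hand_temp + b2 * ambient_temp
```

Once fitted offline, only `estimate_core` (three multiplications and additions) needs to run for each reading, which is cheap enough for the ESP32 as well.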

In this case, we take as the maximum reference temperature the one suggested by the CDC [1], which is 100.4 °F. If the temperature is higher than 100.4 °F, we cannot let the person enter the establishment.
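Applying the CDC threshold is a single comparison. Since 100.4 °F corresponds to 38.0 °C, a sketch that accepts a Celsius estimate from the regression model can convert it and compare. The function names are hypothetical; only temperatures strictly above 100.4 °F are rejected, matching the rule above.

```python
FEVER_THRESHOLD_F = 100.4  # CDC reference value cited above


def celsius_to_fahrenheit(temp_c):
    return temp_c * 9 / 5 + 32


def entry_allowed(core_temp_c):
    """True unless the estimated core temperature is higher than 100.4 °F."""
    return celsius_to_fahrenheit(core_temp_c) <= FEVER_THRESHOLD_F
```

For example, `entry_allowed(37.0)` (98.6 °F) returns True, while `entry_allowed(38.5)` (101.3 °F) returns False.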

[1] https://www.cdc.gov/coronavirus/2019-ncov/downloads/COVID-19_CAREKit_ENG.pdf

[2] http://manuals.serverscheck.com/EST-Difference_between_core_and_skin_temperature.pdf

Since the device works autonomously, we need a way to remotely see what it is seeing, so we created a simple application based on the React Native framework for this task.

Features:

- MQTT based communication.

- Save images with a button.

- View the IP and port of the server.

- Capacity to switch between devices in the event that the establishment has multiple entrances.

- Plug and Play.

(Smartphone: Huawei Y6P).

(Smartphone: Huawei Y6P).
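As a hypothetical illustration of MQTT-based monitoring with several entrances, each device could publish its frames on its own topic, so that switching devices in the app amounts to subscribing to a different topic. The topic scheme `facemask/<device_id>/frame` and the JSON payload below are assumptions, not the app's actual protocol; an MQTT client library such as paho-mqtt would then publish the returned payload on the returned topic.

```python
import base64
import json

# Hypothetical topic scheme: one topic per device (entrance).
TOPIC_TEMPLATE = "facemask/{device_id}/frame"


def frame_message(device_id, jpeg_bytes, mask_detected):
    """Build (topic, payload) for one camera frame.

    The JPEG bytes are base64-encoded so the whole message is plain JSON text.
    """
    payload = json.dumps({
        "device": device_id,
        "mask": mask_detected,
        "image": base64.b64encode(jpeg_bytes).decode("ascii"),
    })
    return TOPIC_TEMPLATE.format(device_id=device_id), payload


def parse_frame(payload):
    """Inverse of frame_message, as a subscriber would decode it."""
    data = json.loads(payload)
    return data["device"], base64.b64decode(data["image"]), data["mask"]
```

Base64 adds about a third to the image size; publishing raw JPEG bytes would be smaller, at the cost of carrying the metadata elsewhere (for example, in the topic).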

You can install the APK from the "MonitorMqtt-APK" folder or build it yourself; all the source code of the app is in the "MonitorMqtt" folder. Remember that we use the React Native framework.

If your phone has USB debugging enabled, you can install the app from your PC with the following command, run from the "MonitorMqtt-APK" folder.

adb install App.apk

Gif with the app running.

At this time we already have a BLE device with which we can communicate wirelessly.

Once the ZCU104 obtains at least 3 correct readings, we will send the signal to the ESP32 to go to the temperature taking phase. Once the client passes this phase, they will be allowed to enter the establishment.
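A minimal sketch of this confirmation step, assuming "at least 3 correct readings" means three consecutive frames classified as wearing a mask (a "no mask" frame resets the count). `MaskConfirmation` is a hypothetical helper; the notebook in the "Main Notebook" folder may count readings differently.

```python
class MaskConfirmation:
    """Require N consecutive mask detections before moving to the next phase."""

    def __init__(self, required=3):
        self.required = required
        self.count = 0

    def update(self, mask_detected):
        """Feed one frame's result; return True once the threshold is reached."""
        self.count = self.count + 1 if mask_detected else 0
        return self.count >= self.required

    def reset(self):
        """Start over, e.g. after the signal has been sent for one customer."""
        self.count = 0
```

In the main loop, `update()` would be called once per classified frame; when it returns True, the notebook would send the BLE signal to the ESP32 and call `reset()` for the next customer. Requiring several readings in a row filters out single-frame misclassifications.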

The code in the "Main Notebook" folder carries out this whole process.

Link: https://github.com/altaga/Facemask-Detector-ZCU104/tree/main/Main%20Notebook

This was perhaps the most important part of the whole project. We had built a pretty good proof of concept, but technology exists to be used, and businesses, especially small and medium ones, have been hit the hardest by COVID-19. So, first and foremost, we tried to find a nearby business that was operating, required its clients to wear a mask, and would allow us to test the PoC. What came to mind first were restaurants, 7-Eleven-style convenience stores and supermarkets. As naive as we were, we first went to several supermarkets to see if they would allow us to test the PoC and record its functionality. Of course, we were soundly rejected. After several attempts with supermarkets and 7-Elevens, we tried an ice cream store, and they finally allowed us to test it and record some results that you can see in our demo video!

ZCU104:

Face Mask Dispenser:

Display:

Complete solution working in local business

Sorry, GitHub does not allow embedded videos.

The final PoC offers an access control alternative for several kinds of businesses. This problem affects everyone nowadays and will continue to do so in the near future; most experts expect this kind of measure to remain necessary for the foreseeable future. It is true that vaccines will start to roll out in December, but it will take a couple of years, if not more, for herd immunity and its effects to take place. Access control for businesses and institutions must be automated. We have shown that the proper technology exists to accomplish this, and it should be done through creative use of bleeding-edge technology such as the application presented here. Thank you for reading and supporting the project.

Links:

(2) https://www.dol.gov/sites/dolgov/files/WHD/posters/FFCRA_Poster_WH1422_Non-Federal.pdf

(3) https://www.ziprecruiter.com/Salaries/Retail-Security-Officer-Salary

(4) https://www.cdc.gov/coronavirus/2019-ncov/downloads/COVID-19_CAREKit_ENG.pdf

install_docker.sh

#!/bin/sh
sudo apt-get remove docker docker-engine docker.io containerd runc -y
sudo apt-get update
sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg-agent \
    software-properties-common -y
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo apt-key fingerprint 0EBFCD88
sudo add-apt-repository \
    "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
    $(lsb_release -cs) \
    stable"
sudo apt-get update
sudo apt-get install docker-ce docker-ce-cli containerd.io -y
sudo groupadd docker
# Under "sudo bash", $USER is root; SUDO_USER holds the account that ran sudo.
sudo usermod -aG docker "${SUDO_USER:-$USER}"
# The new group membership only applies after logging out and back in,
# so the test container is run with sudo.
sudo docker run hello-world

install_vitis.sh

#!/bin/sh
git clone --recurse-submodules https://github.com/Xilinx/Vitis-AI
cd Vitis-AI
docker pull xilinx/vitis-ai:latest
cd

install_cpu.sh

#!/bin/sh
cd Vitis-AI/docker
sudo bash ./docker_build_cpu.sh
cd

install_gpu.sh

#!/bin/sh
cd Vitis-AI/docker
sudo bash ./docker_build_gpu.sh
cd

run_env.sh

#!/bin/sh
cd Vitis-AI
sudo bash docker_run.sh xilinx/vitis-ai-cpu:latest