
Nested parallelism in OpenMP

We use nested parallelism in multi-event clustering to exploit the computing capabilities of multicore hardware. In an OpenMP code, the most intuitive way to implement nested parallelism is simply to create two (or more) levels of OpenMP parallel regions, one inside the other. In the multi-event clustering case, we set up two levels of parallel-for loops: an outer level to handle different events, and an inner level to handle sets of strips within a single event. Moreover, the inner parallel loops can be vectorized via the OpenMP simd construct where appropriate. As we will see, care must be taken to ensure that the parallel regions at each level are given the right number of threads with the proper thread affinities.
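The two-level loop structure described above can be sketched roughly as follows. This is a minimal illustration, not the actual clustering code: the `Event` type, the `subtract_pedestal` function, and the per-strip pedestal subtraction are hypothetical stand-ins for the real per-strip work. The inner team only has more than one thread when nested parallelism is enabled at run time.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for per-event data: one ADC value per strip.
using Event = std::vector<float>;

// Subtract a constant pedestal from every strip of every event.
// Outer level: the events are divided among the threads of the outer team.
// Inner level: each outer thread opens a nested team that divides the
// strips of one event; `simd` asks the compiler to vectorize each chunk.
void subtract_pedestal(std::vector<Event>& events, float pedestal) {
  const std::ptrdiff_t n_events = static_cast<std::ptrdiff_t>(events.size());
  #pragma omp parallel for
  for (std::ptrdiff_t e = 0; e < n_events; ++e) {
    float* adc = events[e].data();
    const std::ptrdiff_t n_strips =
        static_cast<std::ptrdiff_t>(events[e].size());
    #pragma omp parallel for simd
    for (std::ptrdiff_t s = 0; s < n_strips; ++s) {
      adc[s] -= pedestal;
    }
  }
}
```

Compiled without OpenMP support, the pragmas are ignored and the function runs serially with the same result.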

By default, OpenMP turns off nested parallelism. In the default case, only a single thread is generated whenever a parallel region is encountered in the inner loops. Nested parallelism can be turned on at run time either by calling the function omp_set_nested(true) in the code or by setting the environment variable OMP_NESTED=TRUE in the run-time environment. (OpenMP 5.0 deprecates both in favor of omp_set_max_active_levels() and OMP_MAX_ACTIVE_LEVELS.) Similarly, we can control the number of threads at each level at run time with num_threads() clauses in the code or by setting the environment variable OMP_NUM_THREADS=a,b (where the thread counts for successive levels are separated by commas).
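As a rough sketch of the in-code route, the function below enables two active levels and requests team sizes through num_threads() clauses, then counts how many inner-level threads actually ran. The function name `count_inner_threads` is hypothetical. It calls both omp_set_nested() (for older runtimes) and omp_set_max_active_levels() (the OpenMP 5.0 replacement), and the `_OPENMP` guards are there only so that the sketch still builds without OpenMP.

```cpp
#ifdef _OPENMP
#include <omp.h>
#endif

// Returns the total number of inner-level threads that executed, given a
// requested outer team of `outer` threads and inner teams of `inner` threads.
// The runtime may supply fewer threads than requested.
int count_inner_threads(int outer, int inner) {
  int total = 0;
#ifdef _OPENMP
  omp_set_nested(1);             // pre-5.0 switch (deprecated in OpenMP 5.0)
  omp_set_max_active_levels(2);  // allow two nested active levels
#endif
  #pragma omp parallel num_threads(outer) reduction(+:total)
  {
    // Each outer thread sums the size of its own inner team into its
    // private copy of `total`; the outer reduction then adds these up.
    #pragma omp parallel num_threads(inner) reduction(+:total)
    {
      total += 1;
    }
  }
  return total;
}
```

With nesting active and enough threads available, `count_inner_threads(4, 7)` would be expected to return 28; with nesting off it returns the size of the outer team, since each inner region then runs on a single thread.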

One might imagine that entering and exiting the nested levels generates a lot of overhead due to repeated fork/join operations in OpenMP. The Intel compiler supports an experimental feature known as "hot teams" which reduces the overhead of spawning threads for the innermost nested regions by keeping a pool of threads alive (but idle) during the execution of the outer parallel region(s). The use of hot teams is controlled by two environment variables: KMP_HOT_TEAMS_MODE and KMP_HOT_TEAMS_MAX_LEVEL. To keep unused team members alive during execution of the non-nested regions, we set KMP_HOT_TEAMS_MODE=1. Since we have a maximum of two levels in our case, we set KMP_HOT_TEAMS_MAX_LEVEL=2.

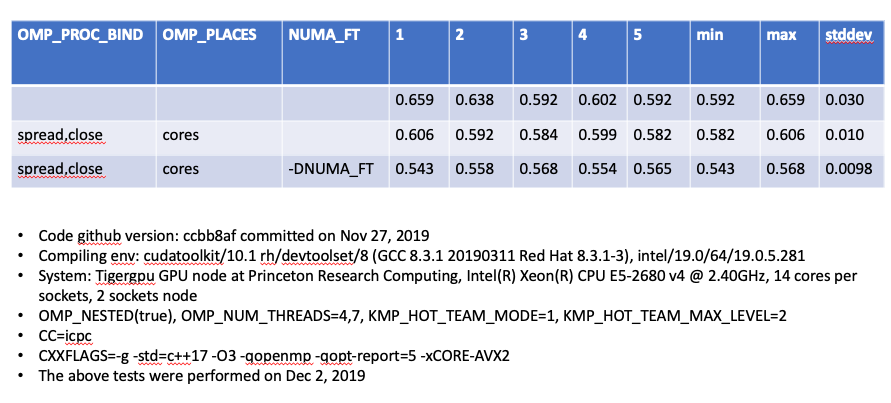

To maintain good memory locality in nested parallelism, it is also important to ensure that threads are given affinity to specific cores. Fortunately, OpenMP 4.0 provides two convenient environment variables to assist in the placement of threads. In order to place team leaders on widely separated cores, while placing team members on cores that are close together, we set OMP_PROC_BIND=spread,close and OMP_PLACES=cores. Thread affinity can also be enforced in the code using certain OpenMP clauses, e.g., proc_bind().
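For the in-code alternative, a minimal sketch with proc_bind() clauses might look like the following. The function name `record_places` is hypothetical. It assumes that nested parallelism is already enabled and that OMP_PLACES is set in the environment, since the place list itself cannot be set by a clause. omp_get_place_num() requires OpenMP 4.5 or later, so the call is guarded and the sketch records -1 when it is unavailable.

```cpp
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

// For each (outer, inner) thread pair, record the place number the inner
// thread is bound to; -1 means unbound, not run, or not available.
std::vector<int> record_places(int outer, int inner) {
  std::vector<int> place(static_cast<std::size_t>(outer) * inner, -1);
  // Team leaders are spread widely across the available places.
  #pragma omp parallel num_threads(outer) proc_bind(spread)
  {
    int o = 0;
#ifdef _OPENMP
    o = omp_get_thread_num();
#endif
    // Team members stay close to their leader.
    #pragma omp parallel num_threads(inner) proc_bind(close)
    {
      int i = 0;
      int p = -1;
#if defined(_OPENMP) && _OPENMP >= 201511
      p = omp_get_place_num();
#endif
#ifdef _OPENMP
      i = omp_get_thread_num();
#endif
      place[static_cast<std::size_t>(o) * inner + i] = p;  // one slot per thread
    }
  }
  return place;
}
```

Printing the returned vector for, say, `record_places(4, 7)` gives a quick check that each inner team occupies neighboring places while the teams themselves are far apart.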

Note that whenever relevant OpenMP functions and clauses are present in the code (e.g., omp_set_nested(), num_threads(), proc_bind()), they take priority over the corresponding OpenMP environment variables (e.g., OMP_NESTED, OMP_NUM_THREADS, OMP_PROC_BIND). We choose to use environment variables for greater ease in experimentation.

Below we show a performance comparison (running time in seconds) on a two-socket system with Intel Broadwell processors:

Overall, we find that setting the environment variables as follows leads to the best performance:

export OMP_NESTED=TRUE

export OMP_NUM_THREADS=4,7

export OMP_PLACES=cores

export OMP_PROC_BIND=spread,close

export KMP_HOT_TEAMS_MODE=1 # Intel only

export KMP_HOT_TEAMS_MAX_LEVEL=2 # Intel only

We find that setting OMP_DISPLAY_ENV=TRUE and KMP_AFFINITY=verbose (Intel only) is helpful for checking thread placement.

Ref:

- https://software.intel.com/en-us/forums/intel-fortran-compiler/topic/721790

- "Cosmic Microwave Background Analysis: Nested Parallelism in Practice", in High Performance Parallelism Pearls, Volume 2, p. 178