diff --git a/README.md b/README.md

index 885d740ca..1ac582cae 100644

--- a/README.md

+++ b/README.md

@@ -1,34 +1,14 @@

[](https://github.com/blacklanternsecurity/bbot)

-# BEE·bot

-

-### A Recursive Internet Scanner for Hackers.

+#### /ˈBEE·bot/ (noun): A recursive internet scanner for hackers.

[](https://www.python.org) [](https://github.com/blacklanternsecurity/bbot/blob/dev/LICENSE) [](https://forum.defcon.org/node/246338) [](https://pepy.tech/project/bbot) [](https://github.com/psf/black) [](https://github.com/blacklanternsecurity/bbot/actions?query=workflow%3A"tests") [](https://codecov.io/gh/blacklanternsecurity/bbot) [](https://discord.com/invite/PZqkgxu5SA)

-BBOT (Bighuge BLS OSINT Tool) is a recursive internet scanner inspired by [Spiderfoot](https://github.com/smicallef/spiderfoot), but designed to be faster, more reliable, and friendlier to pentesters, bug bounty hunters, and developers.

-

-Special features include:

-

-- Support for Multiple Targets

-- Web Screenshots

-- Suite of Offensive Web Modules

-- AI-powered Subdomain Mutations

-- Native Output to Neo4j (and more)

-- Python API + Developer [Documentation](https://www.blacklanternsecurity.com/bbot/)

-

-https://github.com/blacklanternsecurity/bbot/assets/20261699/742df3fe-5d1f-4aea-83f6-f990657bf695

+https://github.com/blacklanternsecurity/bbot/assets/20261699/e539e89b-92ea-46fa-b893-9cde94eebf81

_A BBOT scan in real-time - visualization with [VivaGraphJS](https://github.com/blacklanternsecurity/bbot-vivagraphjs)_

-## Quick Start Guide

-

-Below are some short help sections to get you up and running.

-

-

-Installation ( Pip )

-

-Note: BBOT's [PyPi package](https://pypi.org/project/bbot/) requires Linux and Python 3.9+.

+## Installation

```bash

# stable version

@@ -36,81 +16,140 @@ pipx install bbot

# bleeding edge (dev branch)

pipx install --pip-args '\--pre' bbot

-

-bbot --help

```

-

+_For more installation methods, including [Docker](https://hub.docker.com/r/blacklanternsecurity/bbot), see [Getting Started](https://www.blacklanternsecurity.com/bbot/)_

-

-Installation ( Docker )

+## What is BBOT?

+

+### BBOT is...

-[Docker images](https://hub.docker.com/r/blacklanternsecurity/bbot) are provided, along with helper script `bbot-docker.sh` to persist your scan data.

+## 1) A Subdomain Finder

+

+Passive API sources plus a recursive DNS brute-force with target-specific subdomain mutations.

```bash

-# bleeding edge (dev)

-docker run -it blacklanternsecurity/bbot --help

+# find subdomains of evilcorp.com

+bbot -t evilcorp.com -p subdomain-enum

+```

-# stable

-docker run -it blacklanternsecurity/bbot:stable --help

+

+subdomain-enum.yml

-# helper script

-git clone https://github.com/blacklanternsecurity/bbot && cd bbot

-./bbot-docker.sh --help

+```yaml

+description: Enumerate subdomains via APIs, brute-force

+

+flags:

+  - subdomain-enum

+

+output_modules:

+  - subdomains

+

+config:

+  modules:

+    stdout:

+      format: text

+      # only output DNS_NAMEs to the console

+      event_types:

+        - DNS_NAME

+      # only show in-scope subdomains

+      in_scope_only: True

+      # display the raw subdomains, nothing else

+      event_fields:

+        - data

+      # automatically dedupe

+      accept_dups: False

```
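The `stdout` options in this preset work as a chain of filters on what reaches the console. Below is a minimal plain-Python sketch of that chain, for illustration only. The `Event` dataclass, its `in_scope` boolean (a stand-in for however BBOT tracks scope) and `filter_stdout()` are hypothetical. The sketch also assumes deduplication keys on the printed line; BBOT's own dedup logic may key on the event itself.

```python
from dataclasses import dataclass


@dataclass
class Event:
    # hypothetical minimal event; BBOT's real event objects carry much more
    type: str
    data: str
    in_scope: bool


def filter_stdout(events, event_types=("DNS_NAME",), in_scope_only=True,
                  event_fields=("data",), accept_dups=False):
    """Yield the console lines a preset like subdomain-enum.yml would print (sketch)."""
    seen = set()
    for event in events:
        if event_types and event.type not in event_types:
            continue  # event_types: only these types reach the console
        if in_scope_only and not event.in_scope:
            continue  # in_scope_only: drop out-of-scope events
        # event_fields: print only the selected fields, tab-separated
        line = "\t".join(str(getattr(event, f)) for f in event_fields)
        if not accept_dups:
            if line in seen:
                continue  # accept_dups: False suppresses repeated lines
            seen.add(line)
        yield line


events = [
    Event("DNS_NAME", "www.evilcorp.com", True),
    Event("URL", "http://www.evilcorp.com/", True),
    Event("DNS_NAME", "www.evilcorp.com", True),
    Event("DNS_NAME", "cdn.othercorp.net", False),
    Event("DNS_NAME", "mail.evilcorp.com", True),
]
print(list(filter_stdout(events)))  # ['www.evilcorp.com', 'mail.evilcorp.com']
```

With `accept_dups` set to `True`, `www.evilcorp.com` would print twice. Widening `event_types` lets other types, such as `URL`, through.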

-Example Usage

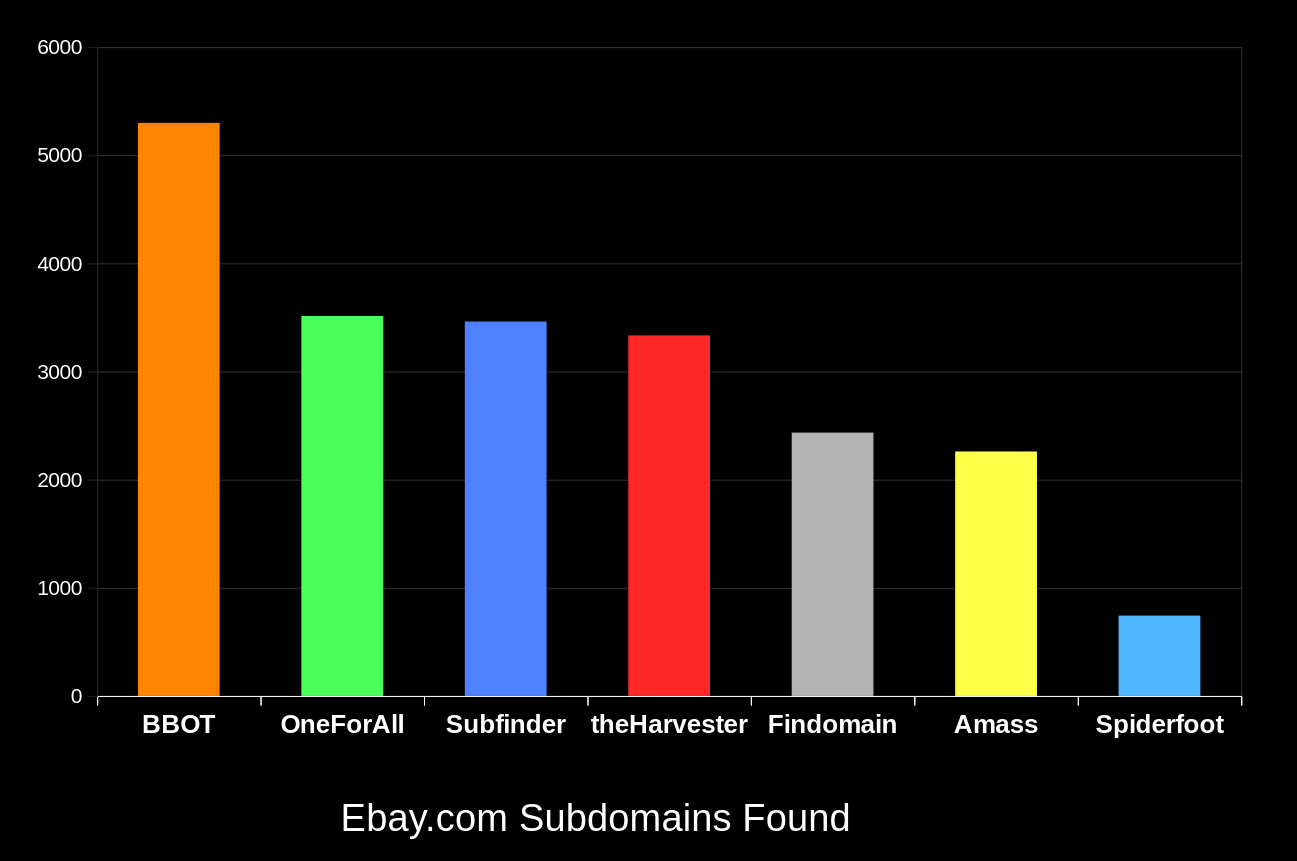

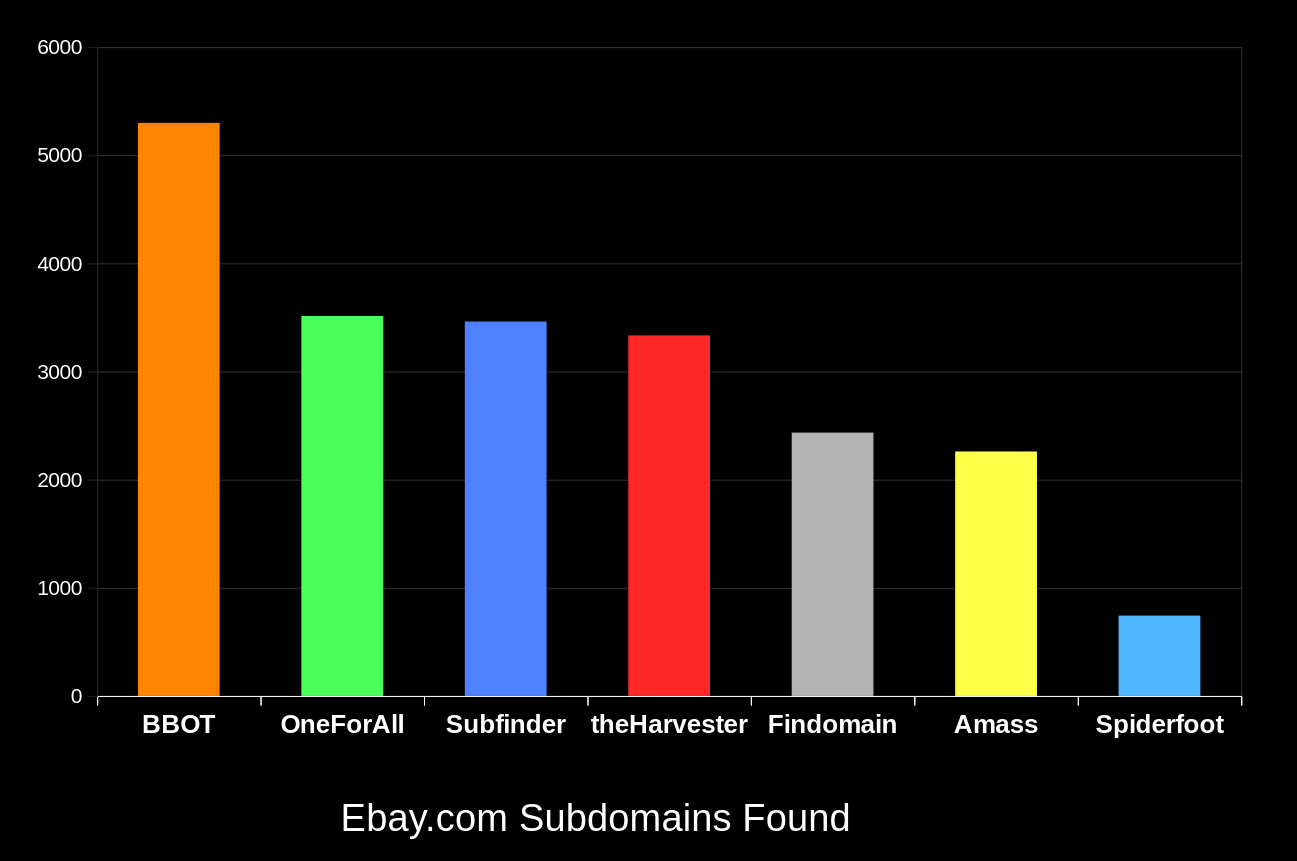

+SEE: Comparison to Other Subdomain Enumeration Tools

-## Example Commands

+BBOT consistently finds 20-50% more subdomains than other tools. The bigger the domain, the bigger the difference. To learn how this is possible, see [How It Works](https://www.blacklanternsecurity.com/bbot/how_it_works/).

-Scan output, logs, etc. are saved to `~/.bbot`. For more detailed examples and explanations, see [Scanning](https://www.blacklanternsecurity.com/bbot/scanning).

+

-

-**Subdomains:**

+

+

+

+## 2) A Web Spider

```bash

-# Perform a full subdomain enumeration on evilcorp.com

-bbot -t evilcorp.com -f subdomain-enum

+# crawl evilcorp.com, extracting emails and other goodies

+bbot -t evilcorp.com -p spider

```

-**Subdomains (passive only):**

+## 3) An Email Gatherer

```bash

-# Perform a passive-only subdomain enumeration on evilcorp.com

-bbot -t evilcorp.com -f subdomain-enum -rf passive

+# enumerate evilcorp.com email addresses

+bbot -t evilcorp.com -p subdomain-enum spider email-enum

```

-**Subdomains + port scan + web screenshots:**

+## 4) A Web Scanner

```bash

-# Port-scan every subdomain, screenshot every webpage, output to current directory

-bbot -t evilcorp.com -f subdomain-enum -m nmap gowitness -n my_scan -o .

+# run a light web scan against www.evilcorp.com

+bbot -t www.evilcorp.com -p web-basic

+

+# run a heavy web scan against www.evilcorp.com

+bbot -t www.evilcorp.com -p web-thorough

```

-**Subdomains + basic web scan:**

+## 5) ...And Much More

```bash

-# A basic web scan includes wappalyzer, robots.txt, and other non-intrusive web modules

-bbot -t evilcorp.com -f subdomain-enum web-basic

+# everything everywhere all at once

+bbot -t evilcorp.com -p kitchen-sink

+

+# roughly equivalent to:

+bbot -t evilcorp.com -p subdomain-enum cloud-enum code-enum email-enum spider web-basic paramminer dirbust-light web-screenshots

```

-**Web spider:**

+## 6) It's Also a Python Library

-```bash

-# Crawl www.evilcorp.com up to a max depth of 2, automatically extracting emails, secrets, etc.

-bbot -t www.evilcorp.com -m httpx robots badsecrets secretsdb -c web_spider_distance=2 web_spider_depth=2

+#### Synchronous

+```python

+from bbot.scanner import Scanner

+

+scan = Scanner("evilcorp.com", presets=["subdomain-enum"])

+for event in scan.start():

+    print(event)

```

-**Everything everywhere all at once:**

+#### Asynchronous

+```python

+from bbot.scanner import Scanner

+

+async def main():

+    scan = Scanner("evilcorp.com", presets=["subdomain-enum"])

+    async for event in scan.async_start():

+        print(event.json())

-```bash

-# Subdomains, emails, cloud buckets, port scan, basic web, web screenshots, nuclei

-bbot -t evilcorp.com -f subdomain-enum email-enum cloud-enum web-basic -m nmap gowitness nuclei --allow-deadly

+import asyncio

+asyncio.run(main())

```
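The event stream can also be post-processed after a scan. According to the output-module table this diff removes from the README, the `json` output module writes newline-delimited JSON (NDJSON), with one event per line. The sketch below summarizes such output. It assumes each line is an object with `type` and `data` keys, and `summarize_ndjson()` is a hypothetical helper, not part of BBOT.

```python
import json
from collections import Counter


def summarize_ndjson(lines):
    """Count events by type and collect DNS_NAME data from NDJSON lines (sketch)."""
    counts = Counter()
    subdomains = set()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip a truncated line, e.g. from an interrupted scan
        counts[event.get("type", "UNKNOWN")] += 1
        if event.get("type") == "DNS_NAME":
            subdomains.add(event.get("data"))
    return counts, sorted(subdomains)


sample = [
    '{"type": "DNS_NAME", "data": "www.evilcorp.com"}',
    '{"type": "URL", "data": "https://www.evilcorp.com/"}',
    '{"type": "DNS_NAME", "data": "mail.evilcorp.com"}',
    '{"type": "DNS_NAME", "da',
]
counts, subs = summarize_ndjson(sample)
print(dict(counts), subs)
```

To use it on real output, pass an open file object, which iterates line by line, in place of `sample`.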

-

+

+

+SEE: This Nefarious Discord Bot

+

+A [BBOT Discord Bot](https://www.blacklanternsecurity.com/bbot/dev/discord_bot/) that responds to the `/scan` command. Scan the internet from the comfort of your Discord server!

+

+

+

+## Feature Overview

+

+BBOT (Bighuge BLS OSINT Tool) is a recursive internet scanner inspired by [Spiderfoot](https://github.com/smicallef/spiderfoot), but designed to be faster, more reliable, and friendlier to pentesters, bug bounty hunters, and developers.

+

+Special features include:

+

+- Support for Multiple Targets

+- Web Screenshots

+- Suite of Offensive Web Modules

+- AI-powered Subdomain Mutations

+- Native Output to Neo4j (and more)

+- Python API + Developer Documentation

## Targets

@@ -134,7 +173,7 @@ For more information, see [Targets](https://www.blacklanternsecurity.com/bbot/sc

Similar to Amass or Subfinder, BBOT supports API keys for various third-party services such as SecurityTrails, etc.

-The standard way to do this is to enter your API keys in **`~/.config/bbot/secrets.yml`**:

+The standard way to do this is to enter your API keys in **`~/.config/bbot/bbot.yml`**:

```yaml

modules:

shodan_dns:

@@ -154,41 +193,7 @@ bbot -c modules.virustotal.api_key=dd5f0eee2e4a99b71a939bded450b246

For details, see [Configuration](https://www.blacklanternsecurity.com/bbot/scanning/configuration/)
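The `bbot -c modules.virustotal.api_key=...` form addresses the same nested keys as the YAML file. The sketch below shows how a dotted override could be folded into the file-based config, assuming command-line values take precedence. It is illustration only: BBOT itself merges configs with OmegaConf (the deleted `bbot/agent/agent.py` in this diff calls `OmegaConf.merge`). Both `dotlist_to_dict()` and `deep_merge()` are hypothetical, and the key values are placeholders.

```python
from copy import deepcopy


def dotlist_to_dict(items):
    """Turn ["a.b.c=value", ...] into nested dicts (hypothetical helper)."""
    result = {}
    for item in items:
        key, _, value = item.partition("=")
        node = result
        *parents, leaf = key.split(".")
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value  # values stay strings in this sketch
    return result


def deep_merge(base, override):
    """Return a copy of base with override merged in; override wins on conflicts."""
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


# stand-in for an already-parsed ~/.config/bbot/bbot.yml
file_config = {"modules": {"shodan_dns": {"api_key": "SHODAN_KEY"}}}
cli_config = dotlist_to_dict(["modules.virustotal.api_key=VT_KEY"])
print(deep_merge(file_config, cli_config))
```

Both keys survive in the merged result, because sibling modules under `modules` are merged rather than replaced.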

-## BBOT as a Python Library

-

-BBOT exposes a Python API that allows it to be used for all kinds of fun and nefarious purposes, like a [Discord Bot](https://www.blacklanternsecurity.com/bbot/dev/#bbot-python-library-advanced-usage#discord-bot-example) that responds to the `/scan` command.

-

-

-

-**Synchronous**

-

-```python

-from bbot.scanner import Scanner

-

-# any number of targets can be specified

-scan = Scanner("example.com", "scanme.nmap.org", modules=["nmap", "sslcert"])

-for event in scan.start():

-    print(event.json())

-```

-

-**Asynchronous**

-

-```python

-from bbot.scanner import Scanner

-

-async def main():

-    scan = Scanner("example.com", "scanme.nmap.org", modules=["nmap", "sslcert"])

-    async for event in scan.async_start():

-        print(event.json())

-

-import asyncio

-asyncio.run(main())

-```

-

-

-

-

-Documentation - Table of Contents

+## Documentation

- **User Manual**

@@ -198,6 +203,9 @@ asyncio.run(main())

- [Comparison to Other Tools](https://www.blacklanternsecurity.com/bbot/comparison)

- **Scanning**

- [Scanning Overview](https://www.blacklanternsecurity.com/bbot/scanning/)

+ - **Presets**

+ - [Overview](https://www.blacklanternsecurity.com/bbot/scanning/presets)

+ - [List of Presets](https://www.blacklanternsecurity.com/bbot/scanning/presets_list)

- [Events](https://www.blacklanternsecurity.com/bbot/scanning/events)

- [Output](https://www.blacklanternsecurity.com/bbot/scanning/output)

- [Tips and Tricks](https://www.blacklanternsecurity.com/bbot/scanning/tips_and_tricks)

@@ -226,12 +234,9 @@ asyncio.run(main())

- [Word Cloud](https://www.blacklanternsecurity.com/bbot/dev/helpers/wordcloud)

-

-

-

-Contribution

+## Contribution

-BBOT is constantly being improved by the community. Every day it grows more powerful!

+Some of the best BBOT modules were written by the community. BBOT is being constantly improved; every day it grows more powerful!

We welcome contributions. Not just code, but ideas too! If you have an idea for a new feature, please let us know in [Discussions](https://github.com/blacklanternsecurity/bbot/discussions). If you want to get your hands dirty, see [Contribution](https://www.blacklanternsecurity.com/bbot/contribution/). There you can find setup instructions and a simple tutorial on how to write a BBOT module. We also have extensive [Developer Documentation](https://www.blacklanternsecurity.com/bbot/dev/).

@@ -243,71 +248,12 @@ Thanks to these amazing people for contributing to BBOT! :heart:

-Special thanks to the following people who made BBOT possible:

+Special thanks to:

- @TheTechromancer for creating [BBOT](https://github.com/blacklanternsecurity/bbot)

-- @liquidsec for his extensive work on BBOT's web hacking features, including [badsecrets](https://github.com/blacklanternsecurity/badsecrets)

+- @liquidsec for his extensive work on BBOT's web hacking features, including [badsecrets](https://github.com/blacklanternsecurity/badsecrets) and [baddns](https://github.com/blacklanternsecurity/baddns)

- Steve Micallef (@smicallef) for creating Spiderfoot

- @kerrymilan for his Neo4j and Ansible expertise

+- @domwhewell-sage for his family of badass code-looting modules

- @aconite33 and @amiremami for their ruthless testing

- Aleksei Kornev (@alekseiko) for allowing us ownership of the bbot Pypi repository <3

-

-

-

-## Comparison to Other Tools

-

-BBOT consistently finds 20-50% more subdomains than other tools. The bigger the domain, the bigger the difference. To learn how this is possible, see [How It Works](https://www.blacklanternsecurity.com/bbot/how_it_works/).

-

-

-

-## BBOT Modules By Flag

-For a full list of modules, including the data types consumed and emitted by each one, see [List of Modules](https://www.blacklanternsecurity.com/bbot/modules/list_of_modules/).

-

-

-| Flag | # Modules | Description | Modules |

-|------------------|-------------|----------------------------------------------------|--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------|

-| safe | 80 | Non-intrusive, safe to run | affiliates, aggregate, ajaxpro, anubisdb, asn, azure_realm, azure_tenant, baddns, baddns_zone, badsecrets, bevigil, binaryedge, bucket_amazon, bucket_azure, bucket_digitalocean, bucket_file_enum, bucket_firebase, bucket_google, builtwith, c99, censys, certspotter, chaos, columbus, credshed, crobat, crt, dehashed, digitorus, dnscommonsrv, dnsdumpster, docker_pull, dockerhub, emailformat, filedownload, fingerprintx, fullhunt, git, git_clone, github_codesearch, github_org, gitlab, gowitness, hackertarget, httpx, hunt, hunterio, iis_shortnames, internetdb, ip2location, ipstack, leakix, myssl, newsletters, ntlm, oauth, otx, passivetotal, pgp, postman, rapiddns, riddler, robots, secretsdb, securitytrails, shodan_dns, sitedossier, skymem, social, sslcert, subdomaincenter, sublist3r, threatminer, trufflehog, urlscan, viewdns, virustotal, wappalyzer, wayback, zoomeye |

-| passive | 59 | Never connects to target systems | affiliates, aggregate, anubisdb, asn, azure_realm, azure_tenant, bevigil, binaryedge, bucket_file_enum, builtwith, c99, censys, certspotter, chaos, columbus, credshed, crobat, crt, dehashed, digitorus, dnscommonsrv, dnsdumpster, docker_pull, emailformat, excavate, fullhunt, git_clone, github_codesearch, github_org, hackertarget, hunterio, internetdb, ip2location, ipneighbor, ipstack, leakix, massdns, myssl, otx, passivetotal, pgp, postman, rapiddns, riddler, securitytrails, shodan_dns, sitedossier, skymem, social, speculate, subdomaincenter, sublist3r, threatminer, trufflehog, urlscan, viewdns, virustotal, wayback, zoomeye |

-| subdomain-enum | 45 | Enumerates subdomains | anubisdb, asn, azure_realm, azure_tenant, baddns_zone, bevigil, binaryedge, builtwith, c99, censys, certspotter, chaos, columbus, crt, digitorus, dnscommonsrv, dnsdumpster, fullhunt, github_codesearch, github_org, hackertarget, httpx, hunterio, internetdb, ipneighbor, leakix, massdns, myssl, oauth, otx, passivetotal, postman, rapiddns, riddler, securitytrails, shodan_dns, sitedossier, sslcert, subdomaincenter, subdomains, threatminer, urlscan, virustotal, wayback, zoomeye |

-| active | 43 | Makes active connections to target systems | ajaxpro, baddns, baddns_zone, badsecrets, bucket_amazon, bucket_azure, bucket_digitalocean, bucket_firebase, bucket_google, bypass403, dastardly, dockerhub, dotnetnuke, ffuf, ffuf_shortnames, filedownload, fingerprintx, generic_ssrf, git, gitlab, gowitness, host_header, httpx, hunt, iis_shortnames, masscan, newsletters, nmap, ntlm, nuclei, oauth, paramminer_cookies, paramminer_getparams, paramminer_headers, robots, secretsdb, smuggler, sslcert, telerik, url_manipulation, vhost, wafw00f, wappalyzer |

-| web-thorough | 29 | More advanced web scanning functionality | ajaxpro, azure_realm, badsecrets, bucket_amazon, bucket_azure, bucket_digitalocean, bucket_firebase, bucket_google, bypass403, dastardly, dotnetnuke, ffuf_shortnames, filedownload, generic_ssrf, git, host_header, httpx, hunt, iis_shortnames, nmap, ntlm, oauth, robots, secretsdb, smuggler, sslcert, telerik, url_manipulation, wappalyzer |

-| aggressive | 20 | Generates a large amount of network traffic | bypass403, dastardly, dotnetnuke, ffuf, ffuf_shortnames, generic_ssrf, host_header, ipneighbor, masscan, massdns, nmap, nuclei, paramminer_cookies, paramminer_getparams, paramminer_headers, smuggler, telerik, url_manipulation, vhost, wafw00f |

-| web-basic | 17 | Basic, non-intrusive web scan functionality | azure_realm, baddns, badsecrets, bucket_amazon, bucket_azure, bucket_firebase, bucket_google, filedownload, git, httpx, iis_shortnames, ntlm, oauth, robots, secretsdb, sslcert, wappalyzer |

-| cloud-enum | 12 | Enumerates cloud resources | azure_realm, azure_tenant, baddns, baddns_zone, bucket_amazon, bucket_azure, bucket_digitalocean, bucket_file_enum, bucket_firebase, bucket_google, httpx, oauth |

-| slow | 10 | May take a long time to complete | bucket_digitalocean, dastardly, docker_pull, fingerprintx, git_clone, paramminer_cookies, paramminer_getparams, paramminer_headers, smuggler, vhost |

-| affiliates | 8 | Discovers affiliated hostnames/domains | affiliates, azure_realm, azure_tenant, builtwith, oauth, sslcert, viewdns, zoomeye |

-| email-enum | 7 | Enumerates email addresses | dehashed, emailformat, emails, hunterio, pgp, skymem, sslcert |

-| deadly | 4 | Highly aggressive | dastardly, ffuf, nuclei, vhost |

-| portscan | 3 | Discovers open ports | internetdb, masscan, nmap |

-| web-paramminer | 3 | Discovers HTTP parameters through brute-force | paramminer_cookies, paramminer_getparams, paramminer_headers |

-| baddns | 2 | Runs all modules from the DNS auditing tool BadDNS | baddns, baddns_zone |

-| iis-shortnames | 2 | Scans for IIS Shortname vulnerability | ffuf_shortnames, iis_shortnames |

-| report | 2 | Generates a report at the end of the scan | affiliates, asn |

-| social-enum | 2 | Enumerates social media | httpx, social |

-| service-enum | 1 | Identifies protocols running on open ports | fingerprintx |

-| subdomain-hijack | 1 | Detects hijackable subdomains | baddns |

-| web-screenshots | 1 | Takes screenshots of web pages | gowitness |

-

-

-## BBOT Output Modules

-BBOT can save its data to TXT, CSV, JSON, and tons of other destinations including [Neo4j](https://www.blacklanternsecurity.com/bbot/scanning/output/#neo4j), [Splunk](https://www.blacklanternsecurity.com/bbot/scanning/output/#splunk), and [Discord](https://www.blacklanternsecurity.com/bbot/scanning/output/#discord-slack-teams). For instructions on how to use these, see [Output Modules](https://www.blacklanternsecurity.com/bbot/scanning/output).

-

-

-| Module | Type | Needs API Key | Description | Flags | Consumed Events | Produced Events |

-|-----------------|--------|-----------------|-----------------------------------------------------------------------------------------|----------------|--------------------------------------------------------------------------------------------------|---------------------------|

-| asset_inventory | output | No | Merge hosts, open ports, technologies, findings, etc. into a single asset inventory CSV | | DNS_NAME, FINDING, HTTP_RESPONSE, IP_ADDRESS, OPEN_TCP_PORT, TECHNOLOGY, URL, VULNERABILITY, WAF | IP_ADDRESS, OPEN_TCP_PORT |

-| csv | output | No | Output to CSV | | * | |

-| discord | output | No | Message a Discord channel when certain events are encountered | | * | |

-| emails | output | No | Output any email addresses found belonging to the target domain | email-enum | EMAIL_ADDRESS | |

-| http | output | No | Send every event to a custom URL via a web request | | * | |

-| human | output | No | Output to text | | * | |

-| json | output | No | Output to Newline-Delimited JSON (NDJSON) | | * | |

-| neo4j | output | No | Output to Neo4j | | * | |

-| python | output | No | Output via Python API | | * | |

-| slack | output | No | Message a Slack channel when certain events are encountered | | * | |

-| splunk | output | No | Send every event to a splunk instance through HTTP Event Collector | | * | |

-| subdomains | output | No | Output only resolved, in-scope subdomains | subdomain-enum | DNS_NAME, DNS_NAME_UNRESOLVED | |

-| teams | output | No | Message a Teams channel when certain events are encountered | | * | |

-| web_report | output | No | Create a markdown report with web assets | | FINDING, TECHNOLOGY, URL, VHOST, VULNERABILITY | |

-| websocket | output | No | Output to websockets | | * | |

-

diff --git a/bbot/__init__.py b/bbot/__init__.py

index 1d95273e3..8e016095f 100644

--- a/bbot/__init__.py

+++ b/bbot/__init__.py

@@ -1,10 +1,2 @@

# version placeholder (replaced by poetry-dynamic-versioning)

-__version__ = "0.0.0"

-

-# global app config

-from .core import configurator

-

-config = configurator.config

-

-# helpers

-from .core import helpers

+__version__ = "v0.0.0"

diff --git a/bbot/agent/__init__.py b/bbot/agent/__init__.py

deleted file mode 100644

index d2361b7a3..000000000

--- a/bbot/agent/__init__.py

+++ /dev/null

@@ -1 +0,0 @@

-from .agent import Agent

diff --git a/bbot/agent/agent.py b/bbot/agent/agent.py

deleted file mode 100644

index 1c8debc1e..000000000

--- a/bbot/agent/agent.py

+++ /dev/null

@@ -1,204 +0,0 @@

-import json

-import asyncio

-import logging

-import traceback

-import websockets

-from omegaconf import OmegaConf

-

-from . import messages

-import bbot.core.errors

-from bbot.scanner import Scanner

-from bbot.scanner.dispatcher import Dispatcher

-from bbot.core.helpers.misc import urlparse, split_host_port

-from bbot.core.configurator.environ import prepare_environment

-

-log = logging.getLogger("bbot.core.agent")

-

-

-class Agent:

-    def __init__(self, config):

-        self.config = config

-        prepare_environment(self.config)

-        self.url = self.config.get("agent_url", "")

-        self.parsed_url = urlparse(self.url)

-        self.host, self.port = split_host_port(self.parsed_url.netloc)

-        self.token = self.config.get("agent_token", "")

-        self.scan = None

-        self.task = None

-        self._ws = None

-        self._scan_lock = asyncio.Lock()

-

-        self.dispatcher = Dispatcher()

-        self.dispatcher.on_status = self.on_scan_status

-        self.dispatcher.on_finish = self.on_scan_finish

-

-    def setup(self):

-        if not self.url:

-            log.error(f"Must specify agent_url")

-            return False

-        if not self.token:

-            log.error(f"Must specify agent_token")

-            return False

-        return True

-

-    async def ws(self, rebuild=False):

-        if self._ws is None or rebuild:

-            kwargs = {"close_timeout": 0.5}

-            if self.token:

-                kwargs.update({"extra_headers": {"Authorization": f"Bearer {self.token}"}})

-            verbs = ("Building", "Built")

-            if rebuild:

-                verbs = ("Rebuilding", "Rebuilt")

-            url = f"{self.url}/control/"

-            log.debug(f"{verbs[0]} websocket connection to {url}")

-            while 1:

-                try:

-                    self._ws = await websockets.connect(url, **kwargs)

-                    break

-                except Exception as e:

-                    log.error(f'Failed to establish websockets connection to URL "{url}": {e}')

-                    log.trace(traceback.format_exc())

-                    await asyncio.sleep(1)

-            log.debug(f"{verbs[1]} websocket connection to {url}")

-        return self._ws

-

-    async def start(self):

-        rebuild = False

-        while 1:

-            ws = await self.ws(rebuild=rebuild)

-            rebuild = False

-            try:

-                message = await ws.recv()

-                log.debug(f"Got message: {message}")

-                try:

-                    message = json.loads(message)

-                    message = messages.Message(**message)

-

-                    if message.command == "ping":

-                        if self.scan is None:

-                            await self.send({"conversation": str(message.conversation), "message_type": "pong"})

-                            continue

-

-                    command_type = getattr(messages, message.command, None)

-                    if command_type is None:

-                        log.warning(f'Invalid command: "{message.command}"')

-                        continue

-

-                    command_args = command_type(**message.arguments)

-                    command_fn = getattr(self, message.command)

-                    response = await self.err_handle(command_fn, **command_args.dict())

-                    log.info(str(response))

-                    await self.send({"conversation": str(message.conversation), "message": response})

-

-                except json.decoder.JSONDecodeError as e:

-                    log.warning(f'Failed to decode message "{message}": {e}')

-                    log.trace(traceback.format_exc())

-                    continue

-            except Exception as e:

-                log.debug(f"Error receiving message: {e}")

-                log.debug(traceback.format_exc())

-                await asyncio.sleep(1)

-                rebuild = True

-

-    async def send(self, message):

-        rebuild = False

-        while 1:

-            try:

-                ws = await self.ws(rebuild=rebuild)

-                j = json.dumps(message)

-                log.debug(f"Sending message of length {len(message)}")

-                await ws.send(j)

-                rebuild = False

-                break

-            except Exception as e:

-                log.warning(f"Error sending message: {e}, retrying")

-                log.trace(traceback.format_exc())

-                await asyncio.sleep(1)

-                # rebuild = True

-

-    async def start_scan(self, scan_id, name=None, targets=[], modules=[], output_modules=[], config={}):

-        async with self._scan_lock:

-            if self.scan is None:

-                log.success(

-                    f"Starting scan with targets={targets}, modules={modules}, output_modules={output_modules}"

-                )

-                output_module_config = OmegaConf.create(

-                    {"output_modules": {"websocket": {"url": f"{self.url}/scan/{scan_id}/", "token": self.token}}}

-                )

-                config = OmegaConf.create(config)

-                config = OmegaConf.merge(self.config, config, output_module_config)

-                output_modules = list(set(output_modules + ["websocket"]))

-                scan = Scanner(

-                    *targets,

-                    scan_id=scan_id,

-                    name=name,

-                    modules=modules,

-                    output_modules=output_modules,

-                    config=config,

-                    dispatcher=self.dispatcher,

-                )

-                self.task = asyncio.create_task(self._start_scan_task(scan))

-

-                return {"success": f"Started scan", "scan_id": scan.id}

-            else:

-                msg = f"Scan {self.scan.id} already in progress"

-                log.warning(msg)

-                return {"error": msg, "scan_id": self.scan.id}

-

-    async def _start_scan_task(self, scan):

-        self.scan = scan

-        try:

-            await scan.async_start_without_generator()

-        except bbot.core.errors.ScanError as e:

-            log.error(f"Scan error: {e}")

-            log.trace(traceback.format_exc())

-        except Exception:

-            log.critical(f"Encountered error: {traceback.format_exc()}")

-            self.on_scan_status("FAILED", scan.id)

-        finally:

-            self.task = None

-

-    async def stop_scan(self):

-        log.warning("Stopping scan")

-        try:

-            async with self._scan_lock:

-                if self.scan is None:

-                    msg = "Scan not in progress"

-                    log.warning(msg)

-                    return {"error": msg}

-                scan_id = str(self.scan.id)

-                self.scan.stop()

-                msg = f"Stopped scan {scan_id}"

-                log.warning(msg)

-                self.scan = None

-                return {"success": msg, "scan_id": scan_id}

-        except Exception as e:

-            log.warning(f"Error while stopping scan: {e}")

-            log.trace(traceback.format_exc())

-        finally:

-            self.scan = None

-            self.task = None

-

-    async def scan_status(self):

-        async with self._scan_lock:

-            if self.scan is None:

-                msg = "Scan not in progress"

-                log.warning(msg)

-                return {"error": msg}

-            return {"success": "Polled scan", "scan_status": self.scan.status}

-

-    async def on_scan_status(self, status, scan_id):

-        await self.send({"message_type": "scan_status_change", "status": str(status), "scan_id": scan_id})

-

-    async def on_scan_finish(self, scan):

-        self.scan = None

-        self.task = None

-

-    async def err_handle(self, callback, *args, **kwargs):

-        try:

-            return await callback(*args, **kwargs)

-        except Exception as e:

-            msg = f"Error in {callback.__qualname__}(): {e}"

-            log.error(msg)

-            log.trace(traceback.format_exc())

-            return {"error": msg}
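The removed `Agent.ws()` retries `websockets.connect()` forever. It logs each failure and sleeps one second between attempts. The standalone sketch below reproduces that retry pattern and adds an optional attempt cap. `connect_with_retry()` and `make_flaky_connect()` are hypothetical. The fake `connect` stands in for `websockets.connect`, so the example runs without a server.

```python
import asyncio


async def connect_with_retry(connect, delay=1.0, max_attempts=None):
    """Await `connect()` until it succeeds, sleeping `delay` seconds between failures.

    max_attempts=None retries forever, like the removed Agent.ws() loop.
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            return await connect()
        except Exception as e:
            if max_attempts is not None and attempt >= max_attempts:
                raise  # give up and surface the last error
            print(f"attempt {attempt} failed: {e}; retrying in {delay}s")
            await asyncio.sleep(delay)


def make_flaky_connect(failures):
    """Hypothetical stand-in for websockets.connect: fails `failures` times, then succeeds."""
    state = {"calls": 0}

    async def connect():
        state["calls"] += 1
        if state["calls"] <= failures:
            raise ConnectionError("refused")
        return "connected"

    return connect


print(asyncio.run(connect_with_retry(make_flaky_connect(2), delay=0.01)))
```

The sketch catches `Exception` rather than `BaseException`, as the removed code also does. Since Python 3.8 `asyncio.CancelledError` derives from `BaseException`, so it still propagates and a cancelled task stops retrying.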

diff --git a/bbot/agent/messages.py b/bbot/agent/messages.py

deleted file mode 100644

index 34fd2c15c..000000000

--- a/bbot/agent/messages.py

+++ /dev/null

@@ -1,29 +0,0 @@

-from uuid import UUID

-from typing import Optional

-from pydantic import BaseModel

-

-

-class Message(BaseModel):

-    conversation: UUID

-    command: str

-    arguments: Optional[dict] = {}

-

-

-### COMMANDS ###

-

-

-class start_scan(BaseModel):

-    scan_id: str

-    targets: list

-    modules: list

-    output_modules: list = []

-    config: dict = {}

-    name: Optional[str] = None

-

-

-class stop_scan(BaseModel):

-    pass

-

-

-class scan_status(BaseModel):

-    pass
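For each incoming JSON message, the removed agent did four things:

1. Built a `Message` from the JSON.
2. Looked up the argument model with `getattr(messages, message.command)`.
3. Validated the arguments by constructing that model.
4. Called the agent method of the same name.

The sketch below follows that flow with stdlib dataclasses instead of pydantic. Unlike the original models, it does no type coercion and no validation beyond missing or unknown fields. `ToyAgent`, `StartScan`, `StopScan` and the `COMMANDS` table are hypothetical.

```python
import asyncio
import json
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class StartScan:
    # mirrors the fields of the removed start_scan model
    scan_id: str
    targets: list
    modules: list
    output_modules: list = field(default_factory=list)
    config: dict = field(default_factory=dict)
    name: Optional[str] = None


@dataclass
class StopScan:
    pass


# explicit allow-list of command names -> argument schemas
COMMANDS = {"start_scan": StartScan, "stop_scan": StopScan}


class ToyAgent:
    """Hypothetical stand-in for the removed Agent; handlers only echo."""

    async def start_scan(self, scan_id, targets, modules, output_modules, config, name):
        return {"success": "Started scan", "scan_id": scan_id}

    async def stop_scan(self):
        return {"success": "Stopped scan"}

    async def handle(self, raw):
        message = json.loads(raw)
        schema = COMMANDS.get(message.get("command"))
        if schema is None:
            return {"error": f'Invalid command: "{message.get("command")}"'}
        try:
            # constructing the dataclass rejects missing/unknown arguments with TypeError
            args = schema(**message.get("arguments", {}))
        except TypeError as e:
            return {"error": str(e)}
        handler = getattr(self, message["command"])
        return await handler(**asdict(args))


agent = ToyAgent()
raw = json.dumps({
    "conversation": "c1",
    "command": "start_scan",
    "arguments": {"scan_id": "s1", "targets": ["evilcorp.com"], "modules": []},
})
print(asyncio.run(agent.handle(raw)))  # {'success': 'Started scan', 'scan_id': 's1'}
```

The explicit `COMMANDS` table is a deliberate choice. With `getattr` on the module, any module-level name in `messages.py` also resolves to an object and passes the `None` check. That includes the imported `BaseModel`.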

diff --git a/bbot/cli.py b/bbot/cli.py

index 7b91c964f..8e308d6f8 100755

--- a/bbot/cli.py

+++ b/bbot/cli.py

@@ -1,78 +1,66 @@

#!/usr/bin/env python3

-import os

-import re

import sys

-import asyncio

import logging

-import traceback

-from omegaconf import OmegaConf

-from contextlib import suppress

-

-# fix tee buffering

-sys.stdout.reconfigure(line_buffering=True)

+from bbot.errors import *

+from bbot import __version__

+from bbot.logger import log_to_stderr

-# logging

-from bbot.core.logger import get_log_level, toggle_log_level

+silent = "-s" in sys.argv or "--silent" in sys.argv

-import bbot.core.errors

-from bbot import __version__

-from bbot.modules import module_loader

-from bbot.core.configurator.args import parser

-from bbot.core.helpers.logger import log_to_stderr

-from bbot.core.configurator import ensure_config_files, check_cli_args, environ

+if not silent:

+    ascii_art = f""" \033[1;38;5;208m ______ \033[0m _____ ____ _______

+ \033[1;38;5;208m| ___ \\\033[0m| __ \\ / __ \\__ __|

+ \033[1;38;5;208m| |___) \033[0m| |__) | | | | | |

+ \033[1;38;5;208m| ___ <\033[0m| __ <| | | | | |

+ \033[1;38;5;208m| |___) \033[0m| |__) | |__| | | |

+ \033[1;38;5;208m|______/\033[0m|_____/ \\____/ |_|

+ \033[1;38;5;208mBIGHUGE\033[0m BLS OSINT TOOL {__version__}

-log = logging.getLogger("bbot.cli")

+ www.blacklanternsecurity.com/bbot

+"""

+    print(ascii_art, file=sys.stderr)
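The new `silent` check is a literal membership test on `sys.argv`, and it runs before `Preset.parse_args()`. Suppose BBOT's parser keeps argparse's defaults, which is an assumption here. Then argparse would also accept forms the test misses, such as combined short flags or an abbreviated long option. In those cases the banner would print even though the parsed options end up silent. The sketch below compares the two using a hypothetical two-flag parser.

```python
import argparse


def naive_silent(argv):
    # same membership test as the new cli.py runs before argument parsing
    return "-s" in argv or "--silent" in argv


def parsed_silent(argv):
    # hypothetical toy parser with two boolean flags, for comparison only
    parser = argparse.ArgumentParser(prog="toy")
    parser.add_argument("-s", "--silent", action="store_true")
    parser.add_argument("-v", "--verbose", action="store_true")
    args, _unknown = parser.parse_known_args(argv)
    return args.silent


for argv in (["-s"], ["--silent"], ["-sv"], ["--sil"]):
    print(argv, naive_silent(argv), parsed_silent(argv))
```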

+scan_name = ""

-log_level = get_log_level()

+async def _main():

-from . import config

+    import asyncio

+    import traceback

+    from contextlib import suppress

+    # fix tee buffering

+    sys.stdout.reconfigure(line_buffering=True)

-err = False

-scan_name = ""

+    log = logging.getLogger("bbot.cli")

+    from bbot.scanner import Scanner

+    from bbot.scanner.preset import Preset

-async def _main():

-    global err

     global scan_name

-    environ.cli_execution = True

-

- # async def monitor_tasks():

- # in_row = 0

- # while 1:

- # try:

- # print('looooping')

- # tasks = asyncio.all_tasks()

- # current_task = asyncio.current_task()

- # if len(tasks) == 1 and list(tasks)[0] == current_task:

- # print('no tasks')

- # in_row += 1

- # else:

- # in_row = 0

- # for t in tasks:

- # print(t)

- # if in_row > 2:

- # break

- # await asyncio.sleep(1)

- # except BaseException as e:

- # print(traceback.format_exc())

- # with suppress(BaseException):

- # await asyncio.sleep(.1)

-

- # monitor_tasks_task = asyncio.create_task(monitor_tasks())

-

-    ensure_config_files()

     try:

+

+        # start by creating a default scan preset

+        preset = Preset(_log=True, name="bbot_cli_main")

+        # populate preset symlinks

+        preset.all_presets

+        # parse command line arguments and merge into preset

+        try:

+            preset.parse_args()

+        except BBOTArgumentError as e:

+            log_to_stderr(str(e), level="WARNING")

+            log.trace(traceback.format_exc())

+            return

+        # ensure arguments (-c config options etc.) are valid

+        options = preset.args.parsed

+

+        # print help if no arguments

         if len(sys.argv) == 1:

-            parser.print_help()

+            log.stdout(preset.args.parser.format_help())

             sys.exit(1)

-

-        options = parser.parse_args()

-        check_cli_args()

+            return

         # --version

         if options.version:

@@ -80,328 +68,194 @@ async def _main():

             sys.exit(0)

             return

-        # --current-config

-        if options.current_config:

-            log.stdout(f"{OmegaConf.to_yaml(config)}")

-            sys.exit(0)

+        # --list-presets

+        if options.list_presets:

+            log.stdout("")

+            log.stdout("### PRESETS ###")

+            log.stdout("")

+            for row in preset.presets_table().splitlines():

+                log.stdout(row)

             return

-        if options.agent_mode:

-            from bbot.agent import Agent

-

-            agent = Agent(config)

-            success = agent.setup()

-            if success:

-                await agent.start()

-

-        else:

-            from bbot.scanner import Scanner

-

-            try:

-                output_modules = set(options.output_modules)

-                module_filtering = False

-                if (options.list_modules or options.help_all) and not any([options.flags, options.modules]):

-                    module_filtering = True

-                    modules = set(module_loader.preloaded(type="scan"))

-                else:

-                    modules = set(options.modules)

-                    # enable modules by flags

-                    for m, c in module_loader.preloaded().items():

-                        module_type = c.get("type", "scan")

-                        if m not in modules:

-                            flags = c.get("flags", [])

-                            if "deadly" in flags:

-                                continue

-                            for f in options.flags:

-                                if f in flags:

-                                    log.verbose(f'Enabling {m} because it has flag "{f}"')

-                                    if module_type == "output":

-                                        output_modules.add(m)

-                                    else:

-                                        modules.add(m)

-

-                default_output_modules = ["human", "json", "csv"]

-

-                # Make a list of the modules which can be output to the console

-                consoleable_output_modules = [

-                    k for k, v in module_loader.preloaded(type="output").items() if "console" in v["config"]

-                ]

-

-                # if none of the output modules provided on the command line are consoleable, don't turn off the defaults. Instead, just add the one specified to the defaults.

-                if not any(o in consoleable_output_modules for o in output_modules):

-                    output_modules.update(default_output_modules)

-

-                scanner = Scanner(

-                    *options.targets,

-                    modules=list(modules),

-                    output_modules=list(output_modules),

-                    output_dir=options.output_dir,

-                    config=config,

-                    name=options.name,

-                    whitelist=options.whitelist,

-                    blacklist=options.blacklist,

-                    strict_scope=options.strict_scope,

-                    force_start=options.force,

-                )

-

-                if options.install_all_deps:

-                    all_modules = list(module_loader.preloaded())

-                    scanner.helpers.depsinstaller.force_deps = True

-                    succeeded, failed = await scanner.helpers.depsinstaller.install(*all_modules)

-                    log.info("Finished installing module dependencies")

-                    return False if failed else True

-

-                scan_name = str(scanner.name)

-

-                # enable modules by dependency

-                # this is only a basic surface-level check

-                # todo: recursive dependency graph with networkx or topological sort?

-                all_modules = list(set(scanner._scan_modules + scanner._internal_modules + scanner._output_modules))

-                while 1:

-                    changed = False

-                    dep_choices = module_loader.recommend_dependencies(all_modules)

-                    if not dep_choices:

-                        break

-                    for event_type, deps in dep_choices.items():

-                        if event_type in ("*", "all"):

-                            continue

-                        # skip resolving dependency if a target provides the missing type

-                        if any(e.type == event_type for e in scanner.target.events):

-                            continue

-                        required_by = deps.get("required_by", [])

-                        recommended = deps.get("recommended", [])

-                        if not recommended:

-                            log.hugewarning(

-                                f"{len(required_by):,} modules ({','.join(required_by)}) rely on {event_type} but no modules produce it"

- )

- elif len(recommended) == 1:

- log.verbose(

- f"Enabling {next(iter(recommended))} because {len(required_by):,} modules ({','.join(required_by)}) rely on it for {event_type}"

- )

- all_modules = list(set(all_modules + list(recommended)))

- scanner._scan_modules = list(set(scanner._scan_modules + list(recommended)))

- changed = True

- else:

- log.hugewarning(

- f"{len(required_by):,} modules ({','.join(required_by)}) rely on {event_type} but no enabled module produces it"

- )

- log.hugewarning(

- f"Recommend enabling one or more of the following modules which produce {event_type}:"

- )

- for m in recommended:

- log.warning(f" - {m}")

- if not changed:

- break

-

- # required flags

- modules = set(scanner._scan_modules)

- for m in scanner._scan_modules:

- flags = module_loader._preloaded.get(m, {}).get("flags", [])

- if not all(f in flags for f in options.require_flags):

- log.verbose(

- f"Removing {m} because it does not have the required flags: {'+'.join(options.require_flags)}"

- )

- with suppress(KeyError):

- modules.remove(m)

-

- # excluded flags

- for m in scanner._scan_modules:

- flags = module_loader._preloaded.get(m, {}).get("flags", [])

- if any(f in flags for f in options.exclude_flags):

- log.verbose(f"Removing {m} because of excluded flag: {','.join(options.exclude_flags)}")

- with suppress(KeyError):

- modules.remove(m)

-

- # excluded modules

- for m in options.exclude_modules:

- if m in modules:

- log.verbose(f"Removing {m} because it is excluded")

- with suppress(KeyError):

- modules.remove(m)

- scanner._scan_modules = list(modules)

-

- log_fn = log.info

- if options.list_modules or options.help_all:

- log_fn = log.stdout

-

- help_modules = list(modules)

- if module_filtering:

- help_modules = None

-

- if options.help_all:

- log_fn(parser.format_help())

-

- if options.list_flags:

- log.stdout("")

- log.stdout("### FLAGS ###")

- log.stdout("")

- for row in module_loader.flags_table(flags=options.flags).splitlines():

- log.stdout(row)

- return

-

- log_fn("")

- log_fn("### MODULES ###")

- log_fn("")

- for row in module_loader.modules_table(modules=help_modules).splitlines():

- log_fn(row)

-

- if options.help_all:

- log_fn("")

- log_fn("### MODULE OPTIONS ###")

- log_fn("")

- for row in module_loader.modules_options_table(modules=help_modules).splitlines():

- log_fn(row)

-

- if options.list_modules or options.list_flags or options.help_all:

- return

-

- module_list = module_loader.filter_modules(modules=modules)

- deadly_modules = []

- active_modules = []

- active_aggressive_modules = []

- slow_modules = []

- for m in module_list:

- if m[0] in scanner._scan_modules:

- if "deadly" in m[-1]["flags"]:

- deadly_modules.append(m[0])

- if "active" in m[-1]["flags"]:

- active_modules.append(m[0])

- if "aggressive" in m[-1]["flags"]:

- active_aggressive_modules.append(m[0])

- if "slow" in m[-1]["flags"]:

- slow_modules.append(m[0])

- if scanner._scan_modules:

- if deadly_modules and not options.allow_deadly:

- log.hugewarning(f"You enabled the following deadly modules: {','.join(deadly_modules)}")

- log.hugewarning(f"Deadly modules are highly intrusive")

- log.hugewarning(f"Please specify --allow-deadly to continue")

- return False

- if active_modules:

- if active_modules:

- if active_aggressive_modules:

- log.hugewarning(

- "This is an (aggressive) active scan! Intrusive connections will be made to target"

- )

- else:

- log.hugewarning(

- "This is a (safe) active scan. Non-intrusive connections will be made to target"

- )

- else:

- log.hugeinfo("This is a passive scan. No connections will be made to target")

- if slow_modules:

- log.warning(

- f"You have enabled the following slow modules: {','.join(slow_modules)}. Scan may take a while"

- )

-

- scanner.helpers.word_cloud.load()

-

- await scanner._prep()

-

- if not options.dry_run:

- log.trace(f"Command: {' '.join(sys.argv)}")

-

- # if we're on the terminal, enable keyboard interaction

- if sys.stdin.isatty():

-

- import fcntl

- from bbot.core.helpers.misc import smart_decode

-

- if not options.agent_mode and not options.yes:

- log.hugesuccess(f"Scan ready. Press enter to execute {scanner.name}")

- input()

-

- def handle_keyboard_input(keyboard_input):

- kill_regex = re.compile(r"kill (?P<module>[a-z0-9_]+)")

- if keyboard_input:

- log.verbose(f'Got keyboard input: "{keyboard_input}"')

- kill_match = kill_regex.match(keyboard_input)

- if kill_match:

- module = kill_match.group("module")

- if module in scanner.modules:

- log.hugewarning(f'Killing module: "{module}"')

- scanner.manager.kill_module(module, message="killed by user")

- else:

- log.warning(f'Invalid module: "{module}"')

- else:

- toggle_log_level(logger=log)

- scanner.manager.modules_status(_log=True)

+ # if we're listing modules or their options

+ if options.list_modules or options.list_module_options:

+

+ # if no modules or flags are specified, enable everything

+ if not (options.modules or options.output_modules or options.flags):

+ for module, preloaded in preset.module_loader.preloaded().items():

+ module_type = preloaded.get("type", "scan")

+ preset.add_module(module, module_type=module_type)

+

+ preset.bake()

+

+ # --list-modules

+ if options.list_modules:

+ log.stdout("")

+ log.stdout("### MODULES ###")

+ log.stdout("")

+ for row in preset.module_loader.modules_table(preset.modules).splitlines():

+ log.stdout(row)

+ return

+

+ # --list-module-options

+ if options.list_module_options:

+ log.stdout("")

+ log.stdout("### MODULE OPTIONS ###")

+ log.stdout("")

+ for row in preset.module_loader.modules_options_table(preset.modules).splitlines():

+ log.stdout(row)

+ return

+

+ # --list-flags

+ if options.list_flags:

+ flags = preset.flags if preset.flags else None

+ log.stdout("")

+ log.stdout("### FLAGS ###")

+ log.stdout("")

+ for row in preset.module_loader.flags_table(flags=flags).splitlines():

+ log.stdout(row)

+ return

- reader = asyncio.StreamReader()

- protocol = asyncio.StreamReaderProtocol(reader)

- await asyncio.get_event_loop().connect_read_pipe(lambda: protocol, sys.stdin)

+ try:

+ scan = Scanner(preset=preset)

+ except (PresetAbortError, ValidationError) as e:

+ log.warning(str(e))

+ return

- # set stdout and stderr to blocking mode

- # this is needed to prevent BlockingIOErrors in logging etc.

- fds = [sys.stdout.fileno(), sys.stderr.fileno()]

- for fd in fds:

- flags = fcntl.fcntl(fd, fcntl.F_GETFL)

- fcntl.fcntl(fd, fcntl.F_SETFL, flags & ~os.O_NONBLOCK)

+ deadly_modules = [

+ m for m in scan.preset.scan_modules if "deadly" in preset.preloaded_module(m).get("flags", [])

+ ]

+ if deadly_modules and not options.allow_deadly:

+ log.hugewarning(f"You enabled the following deadly modules: {','.join(deadly_modules)}")

+ log.hugewarning(f"Deadly modules are highly intrusive")

+ log.hugewarning(f"Please specify --allow-deadly to continue")

+ return False

+

+ # --current-preset

+ if options.current_preset:

+ print(scan.preset.to_yaml())

+ sys.exit(0)

+ return

+

+ # --current-preset-full

+ if options.current_preset_full:

+ print(scan.preset.to_yaml(full_config=True))

+ sys.exit(0)

+ return

- async def akeyboard_listen():

+ # --install-all-deps

+ if options.install_all_deps:

+ all_modules = list(preset.module_loader.preloaded())

+ scan.helpers.depsinstaller.force_deps = True

+ succeeded, failed = await scan.helpers.depsinstaller.install(*all_modules)

+ log.info("Finished installing module dependencies")

+ return False if failed else True

+

+ scan_name = str(scan.name)

+

+ log.verbose("")

+ log.verbose("### MODULES ENABLED ###")

+ log.verbose("")

+ for row in scan.preset.module_loader.modules_table(scan.preset.modules).splitlines():

+ log.verbose(row)

+

+ scan.helpers.word_cloud.load()

+ await scan._prep()

+

+ if not options.dry_run:

+ log.trace(f"Command: {' '.join(sys.argv)}")

+

+ if sys.stdin.isatty():

+ if not options.yes:

+ log.hugesuccess(f"Scan ready. Press enter to execute {scan.name}")

+ input()

+

+ import os

+ import re

+ import fcntl

+ from bbot.core.helpers.misc import smart_decode

+

+ def handle_keyboard_input(keyboard_input):

+ kill_regex = re.compile(r"kill (?P<module>[a-z0-9_]+)")

+ if keyboard_input:

+ log.verbose(f'Got keyboard input: "{keyboard_input}"')

+ kill_match = kill_regex.match(keyboard_input)

+ if kill_match:

+ module = kill_match.group("module")

+ if module in scan.modules:

+ log.hugewarning(f'Killing module: "{module}"')

+ scan.kill_module(module, message="killed by user")

+ else:

+ log.warning(f'Invalid module: "{module}"')

+ else:

+ scan.preset.core.logger.toggle_log_level(logger=log)

+ scan.modules_status(_log=True)

+

+ reader = asyncio.StreamReader()

+ protocol = asyncio.StreamReaderProtocol(reader)

+ await asyncio.get_event_loop().connect_read_pipe(lambda: protocol, sys.stdin)

+

+ # set stdout and stderr to blocking mode

+ # this is needed to prevent BlockingIOErrors in logging etc.

+ fds = [sys.stdout.fileno(), sys.stderr.fileno()]

+ for fd in fds:

+ flags = fcntl.fcntl(fd, fcntl.F_GETFL)

+ fcntl.fcntl(fd, fcntl.F_SETFL, flags & ~os.O_NONBLOCK)

+

+ async def akeyboard_listen():

+ try:

+ allowed_errors = 10

+ while 1:

+ keyboard_input = None

try:

+ keyboard_input = smart_decode((await reader.readline()).strip())

allowed_errors = 10

- while 1:

- keyboard_input = None

- try:

- keyboard_input = smart_decode((await reader.readline()).strip())

- allowed_errors = 10

- except Exception as e:

- log_to_stderr(f"Error in keyboard listen loop: {e}", level="TRACE")

- log_to_stderr(traceback.format_exc(), level="TRACE")

- allowed_errors -= 1

- if keyboard_input is not None:

- handle_keyboard_input(keyboard_input)

- if allowed_errors <= 0:

- break

except Exception as e:

- log_to_stderr(f"Error in keyboard listen task: {e}", level="ERROR")

+ log_to_stderr(f"Error in keyboard listen loop: {e}", level="TRACE")

log_to_stderr(traceback.format_exc(), level="TRACE")

+ allowed_errors -= 1

+ if keyboard_input is not None:

+ handle_keyboard_input(keyboard_input)

+ if allowed_errors <= 0:

+ break

+ except Exception as e:

+ log_to_stderr(f"Error in keyboard listen task: {e}", level="ERROR")

+ log_to_stderr(traceback.format_exc(), level="TRACE")

- asyncio.create_task(akeyboard_listen())

+ asyncio.create_task(akeyboard_listen())

- await scanner.async_start_without_generator()

+ await scan.async_start_without_generator()

- except bbot.core.errors.ScanError as e:

- log_to_stderr(str(e), level="ERROR")

- except Exception:

- raise

-

- except bbot.core.errors.BBOTError as e:

- log_to_stderr(f"{e} (--debug for details)", level="ERROR")

- if log_level <= logging.DEBUG:

- log_to_stderr(traceback.format_exc(), level="DEBUG")

- err = True

-

- except Exception:

- log_to_stderr(f"Encountered unknown error: {traceback.format_exc()}", level="ERROR")

- err = True

+ return True

finally:

# save word cloud

with suppress(BaseException):

- save_success, filename = scanner.helpers.word_cloud.save()

+ save_success, filename = scan.helpers.word_cloud.save()

if save_success:

- log_to_stderr(f"Saved word cloud ({len(scanner.helpers.word_cloud):,} words) to {filename}")

+ log_to_stderr(f"Saved word cloud ({len(scan.helpers.word_cloud):,} words) to {filename}")

# remove output directory if empty

with suppress(BaseException):

- scanner.home.rmdir()

- if err:

- os._exit(1)

+ scan.home.rmdir()

def main():

+ import asyncio

+ import traceback

+ from bbot.core import CORE

+

global scan_name

try:

asyncio.run(_main())

except asyncio.CancelledError:

- if get_log_level() <= logging.DEBUG:

+ if CORE.logger.log_level <= logging.DEBUG:

log_to_stderr(traceback.format_exc(), level="DEBUG")

except KeyboardInterrupt:

msg = "Interrupted"

if scan_name:

msg = f"You killed {scan_name}"

log_to_stderr(msg, level="WARNING")

- if get_log_level() <= logging.DEBUG:

+ if CORE.logger.log_level <= logging.DEBUG:

log_to_stderr(traceback.format_exc(), level="DEBUG")

exit(1)
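The interactive `kill <module>` command in `handle_keyboard_input` depends on the named group `module` in `kill_regex`. Without it, `kill_match.group("module")` cannot work, and the pattern does not even compile. Any other non-empty input falls through to the log-level toggle. Below is a minimal standalone sketch of that dispatch, assuming the same regex. `parse_keyboard_command` is a hypothetical helper for illustration and is not part of BBOT.

```python
import re

# Same pattern as kill_regex in handle_keyboard_input; the named group "module"
# is what kill_match.group("module") reads back.
KILL_REGEX = re.compile(r"kill (?P<module>[a-z0-9_]+)")


def parse_keyboard_command(keyboard_input: str):
    """Hypothetical helper: classify one line of keyboard input.

    Returns ("kill", module_name) for "kill <module>", ("toggle", None) for any
    other non-empty input, and None for empty input, which is ignored.
    """
    if not keyboard_input:
        return None
    kill_match = KILL_REGEX.match(keyboard_input)
    if kill_match:
        return ("kill", kill_match.group("module"))
    # anything else cycles the log level in the real handler
    return ("toggle", None)
```

`match` anchors at the start of the string and the character class is lowercase-only. As a result, input such as `kill HTTPX` falls through to the toggle branch instead of killing a module.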

diff --git a/bbot/core/__init__.py b/bbot/core/__init__.py

index 52cf06cc5..6cfaecf0f 100644

--- a/bbot/core/__init__.py

+++ b/bbot/core/__init__.py

@@ -1,4 +1,3 @@

-# logging

-from .logger import init_logging

+from .core import BBOTCore

-init_logging()

+CORE = BBOTCore()

diff --git a/bbot/core/config/__init__.py b/bbot/core/config/__init__.py

new file mode 100644

index 000000000..c36d91f48

--- /dev/null

+++ b/bbot/core/config/__init__.py

@@ -0,0 +1,12 @@

+import sys

+import multiprocessing as mp

+

+try:

+ mp.set_start_method("spawn")

+except Exception:

+ start_method = mp.get_start_method()

+ if start_method != "spawn":

+ print(

+ f"[WARN] Multiprocessing start method is set to {start_method}. This may negatively affect performance.",

+ file=sys.stderr,

+ )

diff --git a/bbot/core/config/files.py b/bbot/core/config/files.py

new file mode 100644

index 000000000..6547d02ec

--- /dev/null

+++ b/bbot/core/config/files.py

@@ -0,0 +1,55 @@

+import sys

+from pathlib import Path

+from omegaconf import OmegaConf

+

+from ..helpers.misc import mkdir

+from ...logger import log_to_stderr

+from ...errors import ConfigLoadError

+

+

+bbot_code_dir = Path(__file__).parent.parent.parent

+

+

+class BBOTConfigFiles:

+

+ config_dir = (Path.home() / ".config" / "bbot").resolve()

+ defaults_filename = (bbot_code_dir / "defaults.yml").resolve()

+ config_filename = (config_dir / "bbot.yml").resolve()

+

+ def __init__(self, core):

+ self.core = core

+

+ def ensure_config_file(self):

+ mkdir(self.config_dir)

+

+ comment_notice = (

+ "# NOTICE: THESE ENTRIES ARE COMMENTED BY DEFAULT\n"

+ + "# Please be sure to uncomment when inserting API keys, etc.\n"

+ )

+

+ # ensure bbot.yml

+ if not self.config_filename.exists():

+ log_to_stderr(f"Creating BBOT config at {self.config_filename}")

+ yaml = OmegaConf.to_yaml(self.core.default_config)

+ yaml = comment_notice + "\n".join(f"# {line}" for line in yaml.splitlines())

+ with open(str(self.config_filename), "w") as f:

+ f.write(yaml)

+

+ def _get_config(self, filename, name="config"):

+ filename = Path(filename).resolve()

+ try:

+ conf = OmegaConf.load(str(filename))

+ cli_silent = any(x in sys.argv for x in ("-s", "--silent"))

+ if __name__ == "__main__" and not cli_silent:

+ log_to_stderr(f"Loaded {name} from {filename}")

+ return conf

+ except Exception as e:

+ if filename.exists():

+ raise ConfigLoadError(f"Error parsing config at {filename}:\n\n{e}")

+ return OmegaConf.create()

+

+ def get_custom_config(self):

+ return self._get_config(self.config_filename, name="config")

+

+ def get_default_config(self):

+ return self._get_config(self.defaults_filename, name="defaults")
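`ensure_config_file` writes the default config to `bbot.yml` with every line commented out. A fresh install therefore changes nothing until the user uncomments a key. The sketch below shows that transformation on a plain YAML string, assuming the same notice text. `comment_out_yaml` is a hypothetical name, and OmegaConf is omitted so the sketch has no dependencies.

```python
COMMENT_NOTICE = (
    "# NOTICE: THESE ENTRIES ARE COMMENTED BY DEFAULT\n"
    + "# Please be sure to uncomment when inserting API keys, etc.\n"
)


def comment_out_yaml(yaml_text: str, notice: str = COMMENT_NOTICE) -> str:
    """Hypothetical helper mirroring ensure_config_file.

    Prefixes every line of yaml_text with "# " (indentation is preserved after
    the prefix) and prepends the notice.
    """
    return notice + "\n".join(f"# {line}" for line in yaml_text.splitlines())
```

Indentation survives after the `# ` prefix. To activate a nested option, the user must also uncomment its parent keys.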

diff --git a/bbot/core/config/logger.py b/bbot/core/config/logger.py

new file mode 100644

index 000000000..b6aec39aa

--- /dev/null

+++ b/bbot/core/config/logger.py

@@ -0,0 +1,258 @@

+import sys

+import atexit

+import logging

+from copy import copy

+import multiprocessing

+import logging.handlers

+from pathlib import Path

+

+from ..helpers.misc import mkdir, error_and_exit

+from ...logger import colorize, loglevel_mapping

+

+

+debug_format = logging.Formatter("%(asctime)s [%(levelname)s] %(name)s %(filename)s:%(lineno)s %(message)s")

+

+

+class ColoredFormatter(logging.Formatter):

+ """

+ Pretty colors for terminal

+ """

+

+ formatter = logging.Formatter("%(levelname)s %(message)s")

+ module_formatter = logging.Formatter("%(levelname)s %(name)s: %(message)s")

+

+ def format(self, record):

+ colored_record = copy(record)

+ levelname = colored_record.levelname

+ levelshort = loglevel_mapping.get(levelname, "INFO")

+ colored_record.levelname = colorize(f"[{levelshort}]", level=levelname)

+ if levelname == "CRITICAL" or levelname.startswith("HUGE"):

+ colored_record.msg = colorize(colored_record.msg, level=levelname)

+ # remove name

+ if colored_record.name.startswith("bbot.modules."):

+ colored_record.name = colored_record.name.split("bbot.modules.")[-1]

+ return self.module_formatter.format(colored_record)

+ return self.formatter.format(colored_record)

+

+

+class BBOTLogger:

+ """

+ The main BBOT logger.

+

+ The job of this class is to manage the different log handlers in BBOT,

+ allow adding new log handlers, and easily switching log levels on the fly.

+ """

+

+ def __init__(self, core):

+ # custom logging levels

+ if getattr(logging, "STDOUT", None) is None:

+ self.addLoggingLevel("STDOUT", 100)

+ self.addLoggingLevel("TRACE", 49)

+ self.addLoggingLevel("HUGEWARNING", 31)

+ self.addLoggingLevel("HUGESUCCESS", 26)

+ self.addLoggingLevel("SUCCESS", 25)

+ self.addLoggingLevel("HUGEINFO", 21)

+ self.addLoggingLevel("HUGEVERBOSE", 16)

+ self.addLoggingLevel("VERBOSE", 15)

+ self.verbosity_levels_toggle = [logging.INFO, logging.VERBOSE, logging.DEBUG]

+

+ self._loggers = None

+ self._log_handlers = None

+ self._log_level = None

+ self.root_logger = logging.getLogger()

+ self.core_logger = logging.getLogger("bbot")

+ self.core = core

+

+ self.listener = None

+

+ self.process_name = multiprocessing.current_process().name

+ if self.process_name == "MainProcess":

+ self.queue = multiprocessing.Queue()

+ self.setup_queue_handler()

+ # Start the QueueListener

+ self.listener = logging.handlers.QueueListener(self.queue, *self.log_handlers.values())

+ self.listener.start()

+ atexit.register(self.listener.stop)

+

+ self.log_level = logging.INFO

+

+ def setup_queue_handler(self, logging_queue=None, log_level=logging.DEBUG):

+ if logging_queue is None:

+ logging_queue = self.queue

+ else:

+ self.queue = logging_queue

+ self.queue_handler = logging.handlers.QueueHandler(logging_queue)

+

+ self.root_logger.addHandler(self.queue_handler)

+

+ self.core_logger.setLevel(log_level)

+ # disable asyncio logging for child processes

+ if self.process_name != "MainProcess":

+ logging.getLogger("asyncio").setLevel(logging.ERROR)

+

+ def addLoggingLevel(self, levelName, levelNum, methodName=None):

+ """

+ Comprehensively adds a new logging level to the `logging` module and the

+ currently configured logging class.

+

+ `levelName` becomes an attribute of the `logging` module with the value

+ `levelNum`. `methodName` becomes a convenience method for both `logging`

+ itself and the class returned by `logging.getLoggerClass()` (usually just

+ `logging.Logger`). If `methodName` is not specified, `levelName.lower()` is

+ used.

+

+ To avoid accidental clobberings of existing attributes, this method will

+ raise an `AttributeError` if the level name is already an attribute of the

+ `logging` module or if the method name is already present.

+

+ Example

+ -------

+ >>> addLoggingLevel('TRACE', logging.DEBUG - 5)

+ >>> logging.getLogger(__name__).setLevel('TRACE')

+ >>> logging.getLogger(__name__).trace('that worked')

+ >>> logging.trace('so did this')

+ >>> logging.TRACE

+ 5

+

+ """

+ if not methodName:

+ methodName = levelName.lower()

+

+ if hasattr(logging, levelName):

+ raise AttributeError(f"{levelName} already defined in logging module")

+ if hasattr(logging, methodName):

+ raise AttributeError(f"{methodName} already defined in logging module")

+ if hasattr(logging.getLoggerClass(), methodName):

+ raise AttributeError(f"{methodName} already defined in logger class")

+

+ # This method was inspired by the answers to Stack Overflow post

+ # http://stackoverflow.com/q/2183233/2988730, especially

+ # http://stackoverflow.com/a/13638084/2988730

+ def logForLevel(self, message, *args, **kwargs):

+ if self.isEnabledFor(levelNum):

+ self._log(levelNum, message, args, **kwargs)

+

+ def logToRoot(message, *args, **kwargs):

+ logging.log(levelNum, message, *args, **kwargs)

+

+ logging.addLevelName(levelNum, levelName)

+ setattr(logging, levelName, levelNum)

+ setattr(logging.getLoggerClass(), methodName, logForLevel)

+ setattr(logging, methodName, logToRoot)

+

+ @property

+ def loggers(self):

+ if self._loggers is None:

+ self._loggers = [

+ logging.getLogger("bbot"),

+ logging.getLogger("asyncio"),

+ ]

+ return self._loggers

+

+ def add_log_handler(self, handler, formatter=None):

+ if self.listener is None:

+ return

+ if handler.formatter is None:

+ handler.setFormatter(debug_format)

+ if handler not in self.listener.handlers:

+ self.listener.handlers = self.listener.handlers + (handler,)

+

+ def remove_log_handler(self, handler):

+ if self.listener is None:

+ return

+ if handler in self.listener.handlers:

+ new_handlers = list(self.listener.handlers)

+ new_handlers.remove(handler)

+ self.listener.handlers = tuple(new_handlers)

+

+ def include_logger(self, logger):

+ if logger not in self.loggers:

+ self.loggers.append(logger)

+ if self.log_level is not None:

+ logger.setLevel(self.log_level)

+ for handler in self.log_handlers.values():

+ self.add_log_handler(handler)

+

+ @property

+ def log_handlers(self):

+ if self._log_handlers is None:

+ log_dir = Path(self.core.home) / "logs"

+ if not mkdir(log_dir, raise_error=False):

+ error_and_exit(f"Failure creating or error writing to BBOT logs directory ({log_dir})")

+

+ # Main log file

+ main_handler = logging.handlers.TimedRotatingFileHandler(

+ f"{log_dir}/bbot.log", when="d", interval=1, backupCount=14

+ )

+

+ # Separate log file for debugging

+ debug_handler = logging.handlers.TimedRotatingFileHandler(

+ f"{log_dir}/bbot.debug.log", when="d", interval=1, backupCount=14

+ )

+

+ def stderr_filter(record):

+ if record.levelno == logging.STDOUT or (

+ record.levelno == logging.TRACE and self.log_level > logging.DEBUG

+ ):

+ return False

+ if record.levelno < self.log_level:

+ return False

+ return True

+

+ # Log to stderr

+ stderr_handler = logging.StreamHandler(sys.stderr)

+ stderr_handler.addFilter(stderr_filter)

+ # Log to stdout

+ stdout_handler = logging.StreamHandler(sys.stdout)

+ stdout_handler.addFilter(lambda x: x.levelno == logging.STDOUT)

+ # log to files

+ debug_handler.addFilter(

+ lambda x: x.levelno == logging.TRACE or (x.levelno < logging.VERBOSE and x.levelno != logging.STDOUT)

+ )

+ main_handler.addFilter(

+ lambda x: x.levelno not in (logging.STDOUT, logging.TRACE) and x.levelno >= logging.VERBOSE

+ )

+

+ # Set log format

+ debug_handler.setFormatter(debug_format)

+ main_handler.setFormatter(debug_format)

+ stderr_handler.setFormatter(ColoredFormatter("%(levelname)s %(name)s: %(message)s"))

+ stdout_handler.setFormatter(logging.Formatter("%(message)s"))

+

+ self._log_handlers = {

+ "stderr": stderr_handler,

+ "stdout": stdout_handler,

+ "file_debug": debug_handler,

+ "file_main": main_handler,

+ }

+ return self._log_handlers

+

+ @property

+ def log_level(self):

+ if self._log_level is None:

+ return logging.INFO

+ return self._log_level

+

+ @log_level.setter

+ def log_level(self, level):

+ self.set_log_level(level)

+

+ def set_log_level(self, level, logger=None):

+ if isinstance(level, str):

+ level = logging.getLevelName(level)

+ if logger is not None:

+ logger.hugeinfo(f"Setting log level to {logging.getLevelName(level)}")

+ self._log_level = level

+ for logger in self.loggers:

+ logger.setLevel(level)

+

+ def toggle_log_level(self, logger=None):

+ if self.log_level in self.verbosity_levels_toggle:

+ for i, level in enumerate(self.verbosity_levels_toggle):

+ if self.log_level == level:

+ self.set_log_level(

+ self.verbosity_levels_toggle[(i + 1) % len(self.verbosity_levels_toggle)], logger=logger

+ )

+ break

+ else:

+ self.set_log_level(self.verbosity_levels_toggle[0], logger=logger)
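Four filters in `log_handlers` decide which destination each record reaches. To make them easier to review, here is a hypothetical pure-function restatement (`destinations` is not part of BBOT). It uses the level numbers registered in `BBOTLogger.__init__`. It models only the handler filters and ignores the `logger.setLevel` check that runs first. For example, a DEBUG record never reaches any handler while the loggers are set to INFO.

```python
import logging

# Custom level numbers registered in BBOTLogger.__init__ above.
STDOUT = 100
TRACE = 49
VERBOSE = 15


def destinations(levelno: int, log_level: int) -> set:
    """Hypothetical restatement of the handler filters in BBOTLogger.log_handlers.

    Returns the keys of _log_handlers whose filters would accept a record at
    `levelno` while the current log level is `log_level`.
    """
    dests = set()
    # stderr_filter: drop STDOUT, drop TRACE unless at DEBUG or below,
    # and drop anything below the current log level
    hidden_trace = levelno == TRACE and log_level > logging.DEBUG
    if levelno != STDOUT and not hidden_trace and levelno >= log_level:
        dests.add("stderr")
    # stdout handler: STDOUT records only
    if levelno == STDOUT:
        dests.add("stdout")
    # debug file: TRACE, plus anything below VERBOSE (e.g. DEBUG)
    if levelno == TRACE or (levelno < VERBOSE and levelno != STDOUT):
        dests.add("file_debug")
    # main file: VERBOSE and above, excluding STDOUT and TRACE
    if levelno not in (STDOUT, TRACE) and levelno >= VERBOSE:
        dests.add("file_main")
    return dests
```

At the default INFO level, a TRACE record goes only to `bbot.debug.log`. STDOUT records, such as the `--list-modules` table rows, go to stdout alone.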

diff --git a/bbot/core/configurator/__init__.py b/bbot/core/configurator/__init__.py

deleted file mode 100644

index 15962ce59..000000000

--- a/bbot/core/configurator/__init__.py

+++ /dev/null

@@ -1,103 +0,0 @@

-import re

-from omegaconf import OmegaConf

-

-from . import files, args, environ

-from ..errors import ConfigLoadError

-from ...modules import module_loader

-from ..helpers.logger import log_to_stderr

-from ..helpers.misc import error_and_exit, filter_dict, clean_dict, match_and_exit, is_file

-

-# cached sudo password

-bbot_sudo_pass = None

-

-modules_config = OmegaConf.create(

- {

- "modules": module_loader.configs(type="scan"),

- "output_modules": module_loader.configs(type="output"),

- "internal_modules": module_loader.configs(type="internal"),

- }

-)

-

-try:

- config = OmegaConf.merge(

- # first, pull module defaults

- modules_config,

- # then look in .yaml files

- files.get_config(),

- # finally, pull from CLI arguments

- args.get_config(),

- )

-except ConfigLoadError as e:

- error_and_exit(e)

-

-

-config = environ.prepare_environment(config)

-default_config = OmegaConf.merge(files.default_config, modules_config)

-

-

-sentinel = object()

-

-

-exclude_from_validation = re.compile(r".*modules\.[a-z0-9_]+\.(?:batch_size|max_event_handlers)$")

-

-

-def check_cli_args():

- conf = [a for a in args.cli_config if not is_file(a)]

- all_options = None

- for c in conf:

- c = c.split("=")[0].strip()

- v = OmegaConf.select(default_config, c, default=sentinel)

- # if option isn't in the default config

- if v is sentinel:

- if exclude_from_validation.match(c):

- continue

- if all_options is None:

- from ...modules import module_loader

-

- modules_options = set()

- for module_options in module_loader.modules_options().values():

- modules_options.update(set(o[0] for o in module_options))

- global_options = set(default_config.keys()) - {"modules", "output_modules"}

- all_options = global_options.union(modules_options)

- match_and_exit(c, all_options, msg="module option")

-

-

-def ensure_config_files():

- secrets_strings = ["api_key", "username", "password", "token", "secret", "_id"]

- exclude_keys = ["modules", "output_modules", "internal_modules"]

-

- comment_notice = (

- "# NOTICE: THESE ENTRIES ARE COMMENTED BY DEFAULT\n"

- + "# Please be sure to uncomment when inserting API keys, etc.\n"

- )

-

- # ensure bbot.yml

- if not files.config_filename.exists():

- log_to_stderr(f"Creating BBOT config at {files.config_filename}")

- no_secrets_config = OmegaConf.to_object(default_config)

- no_secrets_config = clean_dict(

- no_secrets_config,

- *secrets_strings,

- fuzzy=True,

- exclude_keys=exclude_keys,

- )

- yaml = OmegaConf.to_yaml(no_secrets_config)

- yaml = comment_notice + "\n".join(f"# {line}" for line in yaml.splitlines())

- with open(str(files.config_filename), "w") as f:

- f.write(yaml)

-

- # ensure secrets.yml

- if not files.secrets_filename.exists():

- log_to_stderr(f"Creating BBOT secrets at {files.secrets_filename}")

- secrets_only_config = OmegaConf.to_object(default_config)

- secrets_only_config = filter_dict(

- secrets_only_config,

- *secrets_strings,

- fuzzy=True,

- exclude_keys=exclude_keys,

- )

- yaml = OmegaConf.to_yaml(secrets_only_config)

- yaml = comment_notice + "\n".join(f"# {line}" for line in yaml.splitlines())

- with open(str(files.secrets_filename), "w") as f:

- f.write(yaml)

- files.secrets_filename.chmod(0o600)

diff --git a/bbot/core/configurator/args.py b/bbot/core/configurator/args.py

deleted file mode 100644

index 173583827..000000000

--- a/bbot/core/configurator/args.py

+++ /dev/null

@@ -1,255 +0,0 @@

-import sys

-import argparse

-from pathlib import Path

-from omegaconf import OmegaConf

-from contextlib import suppress

-

-from ...modules import module_loader

-from ..helpers.logger import log_to_stderr

-from ..helpers.misc import chain_lists, match_and_exit, is_file

-

-module_choices = sorted(set(module_loader.configs(type="scan")))

-output_module_choices = sorted(set(module_loader.configs(type="output")))

-

-flag_choices = set()

-for m, c in module_loader.preloaded().items():

- flag_choices.update(set(c.get("flags", [])))

-

-

-class BBOTArgumentParser(argparse.ArgumentParser):

- _dummy = False

-

- def parse_args(self, *args, **kwargs):

- """

- Allow space or comma-separated entries for modules and targets

- For targets, also allow input files containing additional targets

- """

- ret = super().parse_args(*args, **kwargs)

- # silent implies -y

- if ret.silent:

- ret.yes = True

- ret.modules = chain_lists(ret.modules)

- ret.exclude_modules = chain_lists(ret.exclude_modules)

- ret.output_modules = chain_lists(ret.output_modules)

- ret.targets = chain_lists(ret.targets, try_files=True, msg="Reading targets from file: {filename}")

- ret.whitelist = chain_lists(ret.whitelist, try_files=True, msg="Reading whitelist from file: {filename}")

- ret.blacklist = chain_lists(ret.blacklist, try_files=True, msg="Reading blacklist from file: {filename}")

- ret.flags = chain_lists(ret.flags)

- ret.exclude_flags = chain_lists(ret.exclude_flags)

- ret.require_flags = chain_lists(ret.require_flags)

- for m in ret.modules:

- if m not in module_choices and not self._dummy:

- match_and_exit(m, module_choices, msg="module")

- for m in ret.exclude_modules:

- if m not in module_choices and not self._dummy:

- match_and_exit(m, module_choices, msg="module")

- for m in ret.output_modules:

- if m not in output_module_choices and not self._dummy:

- match_and_exit(m, output_module_choices, msg="output module")

- for f in set(ret.flags + ret.require_flags):

- if f not in flag_choices and not self._dummy:

- if f not in flag_choices and not self._dummy:

- match_and_exit(f, flag_choices, msg="flag")

- return ret

-

-

-class DummyArgumentParser(BBOTArgumentParser):

- _dummy = True

-

- def error(self, message):

- pass

-

-

-scan_examples = [
-    (
-        "Subdomains",
-        "Perform a full subdomain enumeration on evilcorp.com",
-        "bbot -t evilcorp.com -f subdomain-enum",
-    ),
-    (
-        "Subdomains (passive only)",
-        "Perform a passive-only subdomain enumeration on evilcorp.com",
-        "bbot -t evilcorp.com -f subdomain-enum -rf passive",
-    ),
-    (
-        "Subdomains + port scan + web screenshots",
-        "Port-scan every subdomain, screenshot every webpage, output to current directory",
-        "bbot -t evilcorp.com -f subdomain-enum -m nmap gowitness -n my_scan -o .",
-    ),
-    (
-        "Subdomains + basic web scan",
-        "A basic web scan includes wappalyzer, robots.txt, and other non-intrusive web modules",
-        "bbot -t evilcorp.com -f subdomain-enum web-basic",
-    ),
-    (
-        "Web spider",
-        "Crawl www.evilcorp.com up to a max depth of 2, automatically extracting emails, secrets, etc.",
-        "bbot -t www.evilcorp.com -m httpx robots badsecrets secretsdb -c web_spider_distance=2 web_spider_depth=2",
-    ),
-    (
-        "Everything everywhere all at once",
-        "Subdomains, emails, cloud buckets, port scan, basic web, web screenshots, nuclei",
-        "bbot -t evilcorp.com -f subdomain-enum email-enum cloud-enum web-basic -m nmap gowitness nuclei --allow-deadly",
-    ),
-]
-
-usage_examples = [
-    (
-        "List modules",
-        "",
-        "bbot -l",
-    ),
-    (
-        "List flags",
-        "",
-        "bbot -lf",
-    ),
-]
-
-
-epilog = "EXAMPLES\n"
-for example in (scan_examples, usage_examples):
-    for title, description, command in example:
-        epilog += f"\n {title}:\n {command}\n"
-
-
-parser = BBOTArgumentParser(
-    description="Bighuge BLS OSINT Tool", formatter_class=argparse.RawTextHelpFormatter, epilog=epilog
-)
-dummy_parser = DummyArgumentParser(
-    description="Bighuge BLS OSINT Tool", formatter_class=argparse.RawTextHelpFormatter, epilog=epilog
-)
-for p in (parser, dummy_parser):
-    p.add_argument("--help-all", action="store_true", help="Display full help including module config options")
-    target = p.add_argument_group(title="Target")
-    target.add_argument("-t", "--targets", nargs="+", default=[], help="Targets to seed the scan", metavar="TARGET")
-    target.add_argument(
-        "-w",
-        "--whitelist",
-        nargs="+",
-        default=[],
-        help="What's considered in-scope (by default it's the same as --targets)",
-    )
-    target.add_argument("-b", "--blacklist", nargs="+", default=[], help="Don't touch these things")
-    target.add_argument(
-        "--strict-scope",
-        action="store_true",
-        help="Don't consider subdomains of target/whitelist to be in-scope",
-    )
-    modules = p.add_argument_group(title="Modules")
-    modules.add_argument(
-        "-m",
-        "--modules",
-        nargs="+",
-        default=[],
-        help=f'Modules to enable. Choices: {",".join(module_choices)}',
-        metavar="MODULE",
-    )
-    modules.add_argument("-l", "--list-modules", action="store_true", help=f"List available modules.")
-    modules.add_argument(
-        "-em", "--exclude-modules", nargs="+", default=[], help=f"Exclude these modules.", metavar="MODULE"
-    )
-    modules.add_argument(
-        "-f",
-        "--flags",
-        nargs="+",
-        default=[],
-        help=f'Enable modules by flag. Choices: {",".join(sorted(flag_choices))}',
-        metavar="FLAG",
-    )
-    modules.add_argument("-lf", "--list-flags", action="store_true", help=f"List available flags.")
-    modules.add_argument(
-        "-rf",
-        "--require-flags",
-        nargs="+",
-        default=[],
-        help=f"Only enable modules with these flags (e.g. -rf passive)",
-        metavar="FLAG",
-    )
-    modules.add_argument(
-        "-ef",
-        "--exclude-flags",
-        nargs="+",
-        default=[],
-        help=f"Disable modules with these flags. (e.g. -ef aggressive)",
-        metavar="FLAG",
-    )
-    modules.add_argument(
-        "-om",
-        "--output-modules",
-        nargs="+",
-        default=["human", "json", "csv"],
-        help=f'Output module(s). Choices: {",".join(output_module_choices)}',
-        metavar="MODULE",
-    )
-    modules.add_argument("--allow-deadly", action="store_true", help="Enable the use of highly aggressive modules")
-    scan = p.add_argument_group(title="Scan")
-    scan.add_argument("-n", "--name", help="Name of scan (default: random)", metavar="SCAN_NAME")
-    scan.add_argument(
-        "-o",
-        "--output-dir",
-        metavar="DIR",
-    )
-    scan.add_argument(
-        "-c",
-        "--config",
-        nargs="*",
-        help="custom config file, or configuration options in key=value format: 'modules.shodan.api_key=1234'",
-        metavar="CONFIG",
-    )
-    scan.add_argument("-v", "--verbose", action="store_true", help="Be more verbose")
-    scan.add_argument("-d", "--debug", action="store_true", help="Enable debugging")
-    scan.add_argument("-s", "--silent", action="store_true", help="Be quiet")
-    scan.add_argument("--force", action="store_true", help="Run scan even if module setups fail")
-    scan.add_argument("-y", "--yes", action="store_true", help="Skip scan confirmation prompt")
-    scan.add_argument("--dry-run", action="store_true", help=f"Abort before executing scan")
-    scan.add_argument(
-        "--current-config",
-        action="store_true",
-        help="Show current config in YAML format",
-    )
-    deps = p.add_argument_group(
-        title="Module dependencies", description="Control how modules install their dependencies"
-    )
-    g2 = deps.add_mutually_exclusive_group()
-    g2.add_argument("--no-deps", action="store_true", help="Don't install module dependencies")
-    g2.add_argument("--force-deps", action="store_true", help="Force install all module dependencies")
-    g2.add_argument("--retry-deps", action="store_true", help="Try again to install failed module dependencies")
-    g2.add_argument(
-        "--ignore-failed-deps", action="store_true", help="Run modules even if they have failed dependencies"
-    )
-    g2.add_argument("--install-all-deps", action="store_true", help="Install dependencies for all modules")
-    agent = p.add_argument_group(title="Agent", description="Report back to a central server")
-    agent.add_argument("-a", "--agent-mode", action="store_true", help="Start in agent mode")
-    misc = p.add_argument_group(title="Misc")
-    misc.add_argument("--version", action="store_true", help="show BBOT version and exit")