

# Databricks notebook source

# MAGIC %md

# MAGIC ## Parsing CSRD directive

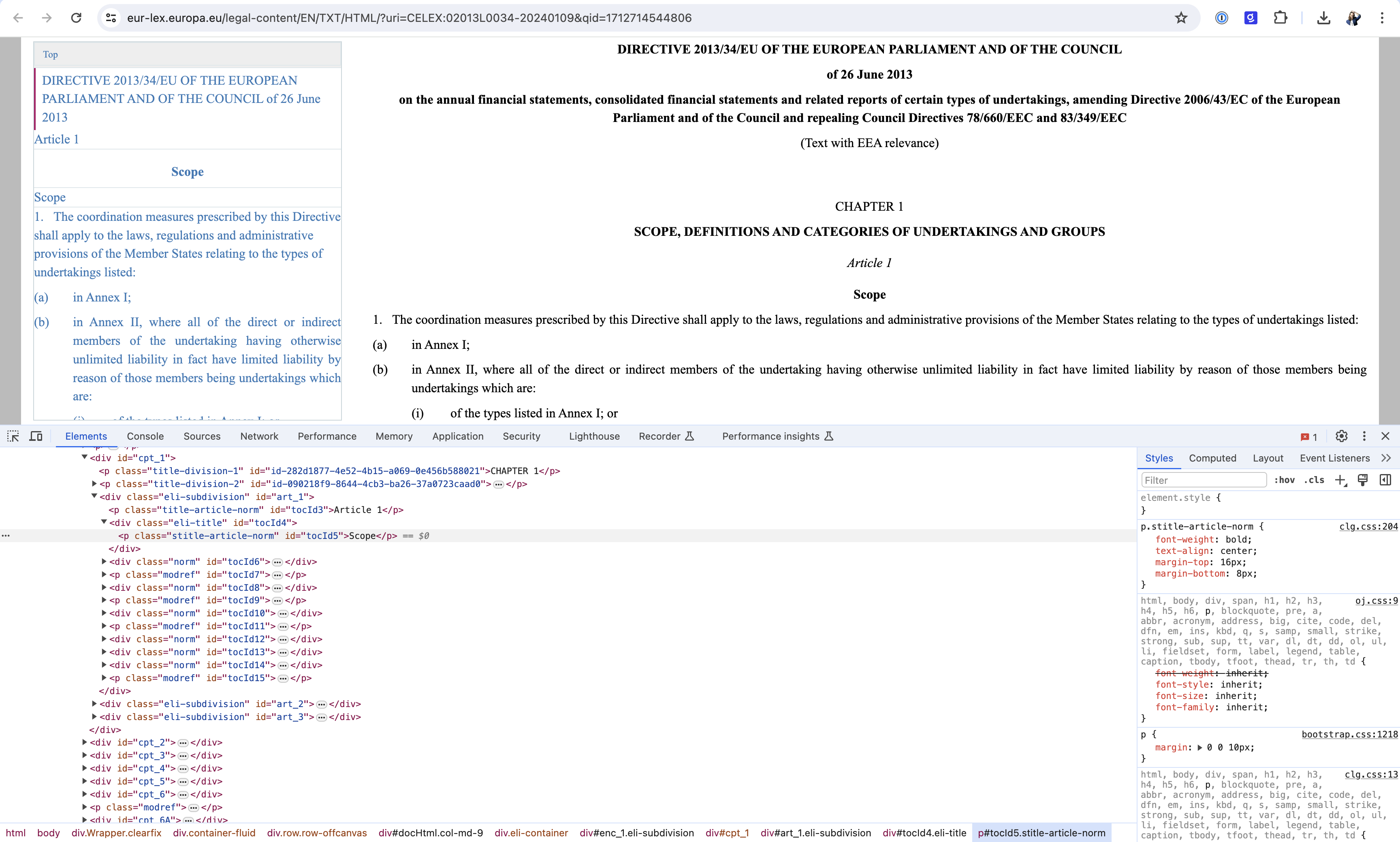

# MAGIC In this section, we programmatically extract chapters / articles / paragraphs from the CSRD [directive](https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:02013L0034-20240109&qid=1712714544806) (available as HTML) and provide users with solid data foundations for them to build generative AI applications in the context of regulatory compliance.

# COMMAND ----------

# MAGIC %run ./config/00_environment

# COMMAND ----------

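import requests

act_url = 'https://eur-lex.europa.eu/legal-content/EN/TXT/HTML/?uri=CELEX:02013L0034-20240109&qid=1712714544806'

# download the consolidated directive as raw HTML, failing early on HTTP errors
response = requests.get(act_url, timeout=60)
response.raise_for_status()
html_page = response.text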

# COMMAND ----------

# MAGIC %md

# MAGIC There are different scraping strategies we could apply to extract content from the CSRD directive. The simplest approach would be to extract the raw text and split it into "chunks" used as corpus documents. Whilst this would certainly be the easiest route (and often the preferred option for generative AI use cases of lower materiality), splitting key paragraphs may encourage model hallucination: large language models are built to "infer" missing words, so a model given a truncated paragraph may fill the gaps itself and produce content that is not 100% in line with the regulatory article it is meant to reflect. Making up new regulations is probably something we want to avoid...
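# COMMAND ----------

# MAGIC %md

# MAGIC To make the risk concrete, the sketch below applies naive fixed-size chunking (a simplified stand-in for character-based text splitters, not code used elsewhere in this notebook) to a short, made-up two-paragraph text. The helper `naive_chunks` and the sample text are hypothetical; the point is that a fixed window ignores paragraph boundaries, so the number of the second paragraph lands in one chunk and its content in the next.

# COMMAND ----------

```python
def naive_chunks(text: str, size: int) -> list[str]:
    """Split text into consecutive windows of at most `size` characters (hypothetical helper)."""
    return [text[i:i + size] for i in range(0, len(text), size)]


# Made-up sample text, not quoted from the directive
text = (
    "1. Undertakings shall disclose their energy consumption.\n"
    "2. The disclosure shall be published annually."
)

chunks = naive_chunks(text, size=60)
for chunk in chunks:
    # the first chunk ends with a dangling '2. ' marker, the second starts mid-paragraph
    print(repr(chunk))
```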

# COMMAND ----------

# MAGIC %md

# MAGIC Instead, we roll up our sleeves and take the "boring" and "outdated" route of structured scraping. We expect the effort spent upfront to pay off at a later stage, when extracting facts about specific chapters, articles, paragraphs and citations. For that purpose, we apply the [Beautiful Soup](https://beautiful-soup-4.readthedocs.io/en/latest/) library to our HTML content.

# COMMAND ----------


# MAGIC %md

# MAGIC Although the HTML structure is relatively complex (regulators are not famous for their web development skills), we can still observe delimiter tags and classes (such as `eli-main-title`, `eli-subdivision` or `title-article-norm`) that we use to define our scraping logic.
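# COMMAND ----------

# MAGIC %md

# MAGIC As an illustration of how these delimiters can be navigated, the sketch below runs Beautiful Soup against a heavily simplified, hypothetical HTML fragment that only reuses the class names our helpers rely on; the real EUR-Lex page is far more verbose. It shows why `recursive=False` matters: without it, `find_all('div')` on the content section also returns the nested article divs, which would then be mistaken for chapters.

# COMMAND ----------

```python
from bs4 import BeautifulSoup

# Hypothetical, heavily simplified fragment reusing the class names targeted in this notebook
html = """
<div class="eli-main-title"><p class="title-doc-first">EXAMPLE DIRECTIVE</p></div>
<div class="eli-subdivision">
  <div>
    <p class="title-division-1">CHAPTER 1</p>
    <p class="title-division-2">EXAMPLE CHAPTER</p>
    <div class="eli-subdivision"><p class="title-article-norm">Article 1</p></div>
    <div class="eli-subdivision"><p class="title-article-norm">Article 2</p></div>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# The first 'eli-subdivision' div in document order is the outer content container
content = soup.find("div", {"class": "eli-subdivision"})

# A recursive search returns the chapter div plus both nested article divs
all_divs = content.find_all("div")
# recursive=False only returns direct children, i.e. the chapter div
chapter_divs = content.find_all("div", recursive=False)

outline = []
for chapter in chapter_divs:
    toy_chapter_id = chapter.find("p", {"class": "title-division-1"}).text.replace("CHAPTER", "").strip()
    articles = chapter.find_all("div", {"class": "eli-subdivision"}, recursive=False)
    article_titles = [a.find("p", {"class": "title-article-norm"}).text.strip() for a in articles]
    outline.append((toy_chapter_id, article_titles))

print(len(all_divs), len(chapter_divs))
print(outline)
```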

# COMMAND ----------

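def get_directive_section(main_content):
    # container holding the directive title
    return main_content.find('div', {'class': 'eli-main-title'})

def get_content_section(main_content):
    # assumes the first 'eli-subdivision' div wraps the whole body of the directive
    return main_content.find('div', {'class': 'eli-subdivision'})

def get_chapter_sections(content_section):
    # chapters are the direct div children of the content section
    return content_section.find_all('div', recursive=False)

def get_article_sections(chapter_section):
    # articles are the direct 'eli-subdivision' children of a chapter
    return chapter_section.find_all('div', {'class': 'eli-subdivision'}, recursive=False)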

# COMMAND ----------

import re

def get_directive_name(directive_section) -> str:
    # the title may be split across several 'title-doc-first' paragraphs
    title_doc = directive_section.find_all('p', {'class': 'title-doc-first'})
    return ' '.join([t.text.strip() for t in title_doc])

def get_chapter_name(chapter_section) -> str:
    return chapter_section.find('p', {'class': 'title-division-2'}).text.strip().capitalize()

def get_chapter_id(chapter_section) -> str:
    # e.g. 'CHAPTER 3' -> '3'
    chapter_id = chapter_section.find('p', {'class': 'title-division-1'}).text.strip()
    return chapter_id.replace('CHAPTER', '').strip()

def get_article_name(article_section) -> str:
    return article_section.find('p', {'class': 'stitle-article-norm'}).text.strip()

def get_article_id(article_section) -> str:
    # e.g. 'Article 9' -> '9'; an optional leading double quote is stripped too
    article_id = article_section.find('p', {'class': 'title-article-norm'}).text.strip()
    return re.sub(r'"?Article\s*', '', article_id).strip()
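# COMMAND ----------

# MAGIC %md

# MAGIC A quick sanity check of the identifier normalisation performed by `get_chapter_id` and `get_article_id`, run on hand-written sample strings rather than the real page. The two functions below are hypothetical restatements of the same string logic without Beautiful Soup, so that it can be tested in isolation.

# COMMAND ----------

```python
import re


def normalise_chapter_id(raw: str) -> str:
    # same logic as get_chapter_id: drop the 'CHAPTER' keyword
    return raw.strip().replace("CHAPTER", "").strip()


def normalise_article_id(raw: str) -> str:
    # same pattern as get_article_id: drop an optional leading double quote and the 'Article' keyword
    return re.sub(r'"?Article\s*', "", raw.strip()).strip()


# Hypothetical sample strings
sample_article_ids = [normalise_article_id(s) for s in ["Article 1", '"Article 2a', "  Article  3 "]]
sample_chapter_id = normalise_chapter_id("CHAPTER 3")
print(sample_article_ids, sample_chapter_id)
```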

# COMMAND ----------

# MAGIC %md

# MAGIC We navigated the HTML tree as deeply as possible before falling back on a less elegant solution (regular expressions) to cope with the non-standard structure of our HTML file. Because the logic applied upfront isolates the content of each chapter, article and paragraph, the source documents we return are more reliable.

# COMMAND ----------

import re

from bs4.element import Tag

def _clean_paragraph(txt):
    # remove multiple line breaks
    txt = re.sub(r'\n+', '\n', txt)
    # simplify bullet points: keep '(a)' and its text on the same line
    txt = re.sub(r'(\([\d\w]+\)\s?)\n', r'\1\t', txt)
    # simplify quotes
    txt = re.sub('‘', "'", txt)
    # remove odd references to other articles, rendered as '(\n<id>\n)'
    txt = re.sub(r'\(\n[\d\w]+\n\)', '', txt)
    # remove spaces before punctuation
    txt = re.sub(r'\s([.;:])', r'\1', txt)
    # remove reference links such as '▼M1'
    txt = re.sub(r'▼\w+\n', '', txt)
    # format numbers: join digits separated by whitespace, e.g. '250 000' -> '250000'
    txt = re.sub(r'(?<=\d)\s(?=\d)', '', txt)
    # remove consecutive whitespace
    txt = re.sub(r'\s{2,}', ' ', txt)
    # remove leading / trailing spaces
    return txt.strip()

def get_paragraphs(article_section):
    content = {}
    # text preceding the first numbered paragraph is stored under '0'
    paragraph_number = '0'
    paragraph_content = []
    for child in article_section.children:
        # skip bare strings (whitespace, line breaks) between tags
        if not isinstance(child, Tag):
            continue
        # some tags carry no 'class' attribute at all
        classes = child.get('class') or []
        if 'norm' in classes:
            if child.name == 'p':
                # unnumbered block: continuation of the current paragraph
                paragraph_content.append(child.text.strip())
            elif child.name == 'div':
                # numbered block: flush the current paragraph and start a new one
                content[paragraph_number] = _clean_paragraph('\n'.join(paragraph_content))
                paragraph_number = child.find('span', {'class': 'no-parag'}).text.strip().split('.')[0]
                paragraph_content = [child.find('div', {'class': 'inline-element'}).text]
        elif 'grid-container' in classes:
            # grid-container blocks are appended to the current paragraph
            paragraph_content.append(child.text)
    # flush the last paragraph
    content[paragraph_number] = _clean_paragraph('\n'.join(paragraph_content))
    # drop empty paragraphs (e.g. '0' when the article starts with a numbered paragraph)
    return {k: v for k, v in content.items() if len(v) > 0}
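# COMMAND ----------

# MAGIC %md

# MAGIC The paragraph logic in `get_paragraphs` is essentially a small state machine: a numbered block flushes the current buffer and opens a new paragraph, while any other block is appended to it. The sketch below restates that control flow on a hypothetical list of `(kind, payload)` events instead of Beautiful Soup tags, which makes it easy to test; the `_clean_paragraph` step is left out for brevity, and `group_paragraphs` is an illustrative name, not part of the notebook.

# COMMAND ----------

```python
def group_paragraphs(events):
    """Group (kind, payload) events into {paragraph_number: text} (hypothetical helper).

    kind == "numbered": payload is (raw_number, text) and opens a new paragraph,
                        like a <div class="norm"> carrying a 'no-parag' span.
    kind == "text":     payload is a string appended to the current paragraph,
                        like a <p class="norm"> or a grid container.
    """
    content = {}
    number, buffer = "0", []  # text before the first numbered block lives under '0'
    for kind, payload in events:
        if kind == "numbered":
            content[number] = "\n".join(buffer)  # flush the previous paragraph
            raw_number, text = payload
            number = raw_number.strip().split(".")[0]  # '2.' -> '2'
            buffer = [text]
        else:
            buffer.append(payload)
    content[number] = "\n".join(buffer)  # flush the last paragraph
    # drop empty entries, e.g. '0' when the article opens with a numbered block
    return {k: v for k, v in content.items() if v}


# Hypothetical event sequence for a single article
events = [
    ("numbered", ("1.", "First paragraph.")),
    ("text", "(a) a lettered point;"),
    ("numbered", ("2.", "Second paragraph.")),
]
paragraphs = group_paragraphs(events)
print(paragraphs)
```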

# COMMAND ----------

# MAGIC %md

# MAGIC Finally, we extract the content hierarchy buried in the CSRD directive, from chapters to articles and paragraphs.

# COMMAND ----------

from bs4 import BeautifulSoup

main_content = BeautifulSoup(html_page, 'html.parser')
directive_section = get_directive_section(main_content)
directive_name = get_directive_name(directive_section)
content_section = get_content_section(main_content)

for chapter_section in get_chapter_sections(content_section):
    chapter_id = get_chapter_id(chapter_section)
    chapter_name = get_chapter_name(chapter_section)
    articles = len(get_article_sections(chapter_section))
    print(f'Chapter {chapter_id}: {chapter_name}')
    print(f'{articles} article(s)')
    print('')

# COMMAND ----------

# MAGIC %md

# MAGIC ## Knowledge graph

# MAGIC Our content follows a tree structure where each chapter has multiple articles and each article has multiple paragraphs, so a graph is a natural representation of our data. Before jumping into graph theory concepts, let's first create 2 dataframes representing our nodes (the directive, its chapters, articles and paragraphs) and our edges (the relationships between them).
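# COMMAND ----------

# MAGIC %md

# MAGIC Because every identifier concatenates the ids of its ancestors, nodes and edges can be derived mechanically from the nested hierarchy. Below is a minimal sketch on a hypothetical, hand-written `{chapter: {article: {paragraph: text}}}` dictionary (text is omitted from the nodes for brevity, and `build_graph` is an illustrative name); it follows the same `CONTAINS` convention as the notebook and, as in any tree, ends up with exactly one edge fewer than nodes.

# COMMAND ----------

```python
def build_graph(hierarchy):
    """Turn {chapter: {article: {paragraph: text}}} into node and edge lists (hypothetical helper)."""
    nodes = [("0", "DIRECTIVE")]  # root node
    edges = []
    for chapter_id, articles in hierarchy.items():
        nodes.append((chapter_id, "CHAPTER"))
        edges.append(("0", chapter_id, "CONTAINS"))
        for article_id, paragraphs in articles.items():
            article_key = f"{chapter_id}.{article_id}"
            nodes.append((article_key, "ARTICLE"))
            edges.append((chapter_id, article_key, "CONTAINS"))
            for paragraph_id in paragraphs:
                paragraph_key = f"{article_key}.{paragraph_id}"
                nodes.append((paragraph_key, "PARAGRAPH"))
                edges.append((article_key, paragraph_key, "CONTAINS"))
    return nodes, edges


# Hypothetical toy hierarchy, not the real directive
toy_hierarchy = {
    "1": {"1": {"1": "First paragraph.", "2": "Second paragraph."}},
    "2": {"3": {"1": "Another paragraph."}},
}
toy_nodes, toy_edges = build_graph(toy_hierarchy)
print(len(toy_nodes), len(toy_edges))
```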

# COMMAND ----------

import pandas as pd

nodes = []
edges = []

# root node, starting with id 0
nodes.append(['0', 'CSRD', directive_name, 'DIRECTIVE'])

for chapter_section in get_chapter_sections(content_section):

    chapter_id = get_chapter_id(chapter_section)
    chapter_name = get_chapter_name(chapter_section)

    # level 1, chapter
    # chapters are included in root node
    nodes.append([chapter_id, f'Chapter {chapter_id}', chapter_name, 'CHAPTER'])
    edges.append(['0', chapter_id, 'CONTAINS'])

    for article_section in get_article_sections(chapter_section):

        article_id = get_article_id(article_section)
        article_name = get_article_name(article_section)
        article_paragraphs = get_paragraphs(article_section)

        # level 2, article
        # articles are included in chapters
        nodes.append([f'{chapter_id}.{article_id}', f'Article {article_id}', article_name, 'ARTICLE'])
        edges.append([chapter_id, f'{chapter_id}.{article_id}', 'CONTAINS'])

        for paragraph_id, paragraph_text in article_paragraphs.items():

            # level 3, paragraph
            # paragraphs are included in articles
            nodes.append([f'{chapter_id}.{article_id}.{paragraph_id}', f'Article {article_id}({paragraph_id})', paragraph_text, 'PARAGRAPH'])
            edges.append([f'{chapter_id}.{article_id}', f'{chapter_id}.{article_id}.{paragraph_id}', 'CONTAINS'])

# COMMAND ----------

nodes_df = pd.DataFrame(nodes, columns=['id', 'label', 'content', 'group'])

edges_df = pd.DataFrame(edges, columns=['src', 'dst', 'label'])

display(nodes_df)

# COMMAND ----------

_ = spark.createDataFrame(nodes_df).write.format('delta').mode('overwrite').saveAsTable(table_nodes)

_ = spark.createDataFrame(edges_df).write.format('delta').mode('overwrite').saveAsTable(table_edges)

# COMMAND ----------

# MAGIC %md

# MAGIC Once physically stored as Delta tables, our graph can be loaded back in memory and visualized with the [NetworkX](https://networkx.org/) and [pyvis](https://pypi.org/project/pyvis/) libraries.

# COMMAND ----------

nodes_df = spark.read.table(table_nodes).toPandas()

edges_df = spark.read.table(table_edges).toPandas()

# COMMAND ----------

import networkx as nx

CSRD = nx.DiGraph()

for _, n in nodes_df.iterrows():
    CSRD.add_node(n['id'], label=n['label'], title=n['content'], group=n['group'])

for _, e in edges_df.iterrows():
    if e['label'] == 'CONTAINS':
        CSRD.add_edge(e['src'], e['dst'], label=e['label'])
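# COMMAND ----------

# MAGIC %md

# MAGIC For larger graphs, the two `iterrows` loops can be replaced by NetworkX's pandas helpers. This is a sketch assuming a recent NetworkX and pandas, shown on hypothetical two-row tables (`toy_nodes_df`, `toy_edges_df`) with the same columns as `nodes_df` and `edges_df`; on the real data one would pass those tables instead. Nodes are added after the edges because `from_pandas_edgelist` only creates nodes that appear in an edge.

# COMMAND ----------

```python
import networkx as nx
import pandas as pd

# Hypothetical toy tables with the same columns as nodes_df / edges_df
toy_nodes_df = pd.DataFrame(
    [["0", "CSRD", "Directive title", "DIRECTIVE"], ["1", "Chapter 1", "Example chapter", "CHAPTER"]],
    columns=["id", "label", "content", "group"],
)
toy_edges_df = pd.DataFrame([["0", "1", "CONTAINS"]], columns=["src", "dst", "label"])

# Build the directed graph from CONTAINS edges, keeping the relationship label as an edge attribute
contains = toy_edges_df[toy_edges_df["label"] == "CONTAINS"]
G = nx.from_pandas_edgelist(contains, source="src", target="dst", edge_attr="label", create_using=nx.DiGraph)

# Attach node attributes as (node, attribute dict) pairs; 'content' becomes the 'title' shown on hover
node_attrs = (
    toy_nodes_df.rename(columns={"content": "title"})
    .set_index("id")[["label", "title", "group"]]
    .to_dict(orient="index")
)
G.add_nodes_from(node_attrs.items())

print(G.nodes["1"], G.edges["0", "1"])
```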

# COMMAND ----------

# MAGIC %md

# MAGIC Our directive contains ~350 nodes, each connected to at most one parent (as expected from a tree structure), as represented below. Feel free to zoom in and out and hover over interesting nodes to read their actual text content.
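# COMMAND ----------

# MAGIC %md

# MAGIC The "at most one parent" property can also be checked programmatically. The standard-library sketch below (hypothetical helper `is_rooted_tree`, toy data) verifies that the root has no parent, every other node has exactly one, and every node is reachable from the root; on a NetworkX `DiGraph`, `nx.is_arborescence(CSRD)` should perform an equivalent check.

# COMMAND ----------

```python
from collections import Counter, defaultdict, deque


def is_rooted_tree(node_ids, edges, root="0"):
    """Check that (src, dst, label) edges form a tree rooted at `root` over `node_ids` (hypothetical helper)."""
    in_degree = Counter(dst for _, dst, _ in edges)
    # the root has no parent, every other node exactly one
    if in_degree[root] != 0 or any(in_degree[n] != 1 for n in node_ids if n != root):
        return False
    # every node must also be reachable from the root, which rules out detached cycles
    children = defaultdict(list)
    for src, dst, _ in edges:
        children[src].append(dst)
    seen, queue = {root}, deque([root])
    while queue:
        for child in children[queue.popleft()]:
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen == set(node_ids)


# Hypothetical toy data following the notebook's id scheme
tree_ids = ["0", "1", "1.1", "1.1.1"]
tree_edges = [("0", "1", "CONTAINS"), ("1", "1.1", "CONTAINS"), ("1.1", "1.1.1", "CONTAINS")]
print(is_rooted_tree(tree_ids, tree_edges))
# a second parent for '1.1.1' breaks the tree property
print(is_rooted_tree(tree_ids, tree_edges + [("0", "1.1.1", "CONTAINS")]))
```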

# COMMAND ----------

from scripts.graph import displayGraph

displayHTML(displayGraph(CSRD))

# COMMAND ----------

# MAGIC %md

# MAGIC Please note that we carefully designed our graph identifiers so that one can access a given paragraph through a simple reference, expressed as `chapter.article.paragraph` coordinates (e.g. `3.9.7`). This will prove particularly handy at a later stage of the process.
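# COMMAND ----------

# MAGIC %md

# MAGIC Since identifiers are dot-separated paths, the ancestors of any paragraph can be derived from its reference alone, without traversing the graph. The helper below (`coordinate_lineage`, a hypothetical name) is a minimal sketch; in this notebook its output could, for instance, be passed to `CSRD.nodes[...]` to retrieve the chapter and article surrounding a paragraph.

# COMMAND ----------

```python
def coordinate_lineage(coordinate: str) -> list[str]:
    """Return the node ids from chapter down to the given coordinate (hypothetical helper)."""
    parts = coordinate.split(".")
    # progressively longer prefixes: chapter, chapter.article, chapter.article.paragraph
    return [".".join(parts[: i + 1]) for i in range(len(parts))]


lineage = coordinate_lineage("3.9.7")
print(lineage)  # ['3', '3.9', '3.9.7']
# in this notebook, e.g.: [CSRD.nodes[node_id]['label'] for node_id in lineage]
```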

# COMMAND ----------

from scripts.html_output import *

p_id = '3.9.7'

p = CSRD.nodes[p_id]

displayHTML(article_html(p['label'], p['title']))