
ONVIF-triggered motion not shown in Home Assistant state #199

Comments

Deleted malicious comments.

This happens to me as well. The integration has one sensor for MOTION and another for EXTERNAL. If you use ONVIF alerts in Blue Iris, the only sensor that changes state is the EXTERNAL one. The entity is named "binary_sensor_camera_external", so check whether you have it (in the integration setup, you have to select the external sensor for that camera). I'm not sure why it was done this way, but one quick fix/hack is to change the MQTT message for cameras that rely on external ONVIF alerts. Instead of { "type": "EXTERNAL", "trigger": "ON" }, send:

{ "type": "MOTION", "trigger": "ON" }

Do the same for the OFF trigger.
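To make the explanation above concrete, here is an illustrative model of the behaviour described in that comment: the payload's "type" field decides which binary sensor a Blue Iris status message updates. This is an assumption-based sketch, not the integration's actual source code; the sensor keys "motion" and "external" and the helper `applyStatus` are hypothetical.

```javascript
// Illustrative model only: NOT the integration's code. It mirrors the
// behaviour described above, where "type" selects the sensor to update.

// Hypothetical sensor names, keyed by the payload "type" value.
const SENSOR_FOR_TYPE = {
  MOTION: "motion",
  EXTERNAL: "external",
};

// Apply one MQTT status payload (a JSON string) to a map of sensor states.
// Returns a new state map; unknown types leave every sensor unchanged.
function applyStatus(states, rawPayload) {
  const { type, trigger } = JSON.parse(rawPayload);
  const sensor = SENSOR_FOR_TYPE[type];
  if (sensor === undefined) {
    return states;
  }
  return { ...states, [sensor]: trigger === "ON" ? "on" : "off" };
}

const initial = { motion: "off", external: "off" };

// What Blue Iris sends for ONVIF alerts: only the external sensor changes,
// so the motion sensor keeps showing "clear".
const afterExternal = applyStatus(initial, '{ "type": "EXTERNAL", "trigger": "ON" }');

// The workaround: send MOTION instead, so the motion sensor changes.
const afterMotion = applyStatus(initial, '{ "type": "MOTION", "trigger": "ON" }');
```

Under this model, the workaround works simply because the MOTION payload is routed to the sensor you are watching; the matching OFF payload resets it.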

Thank you very much for this! This did the trick for me with my Reolink PoE doorbell. Now I just need to figure out the proper settings/syntax to get Blue Iris to announce via MQTT when the doorbell button is pressed. I can see the ONVIF alert for the button press in BI5; I just don't know how to send that message to my MQTT broker. Any help would be greatly appreciated.

@tumtumsback I don't have a smart doorbell, but I do have alerts for when my security cameras detect someone outside, which use similar ONVIF events. I have Blue Iris configured to send an event via MQTT, including a snapshot from the camera, and I then listen for it in a Node-RED flow. The Node-RED flow then uses Home Assistant to send announcements to a few devices: our Google Nest speakers, plus our phones and TV (the latter two showing a picture from the camera). I can get the Blue Iris and Node-RED configs to you tomorrow or Friday :)
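Listening for one event per camera is typically done with an MQTT wildcard subscription; the Node-RED flow shared later in this thread subscribes to BlueIris/+/alert-image-b64. The broker does this matching for you, but a small sketch of the MQTT 3.1.1 wildcard rules shows what such a filter accepts and how the camera name can be read back out of the topic. The helper `matchTopic` and the camera name "FrontDoor" are hypothetical.

```javascript
// Minimal MQTT topic-filter matcher following the MQTT 3.1.1 wildcard rules:
//   "+" matches exactly one topic level,
//   "#" (last level only) matches the parent level and all levels below it.
// Returns the topic levels captured by wildcards, or null if there is no match.
function matchTopic(filter, topic) {
  const f = filter.split("/");
  const t = topic.split("/");

  // Wildcards in the first level never match topics starting with "$".
  if (topic.startsWith("$") && (f[0] === "+" || f[0] === "#")) {
    return null;
  }

  const captured = [];
  for (let i = 0; i < f.length; i++) {
    if (f[i] === "#") {
      // Capture everything from this level on (may be empty).
      captured.push(t.slice(i).join("/"));
      return captured;
    }
    if (i >= t.length) return null; // topic is shorter than the filter
    if (f[i] === "+") {
      captured.push(t[i]); // single-level wildcard: remember the level
    } else if (f[i] !== t[i]) {
      return null; // literal level mismatch
    }
  }
  // Without "#", the topic must have exactly as many levels as the filter.
  return f.length === t.length ? captured : null;
}

// Usage: recover the camera short name from a Blue Iris alert topic.
const cameraMatch = matchTopic("BlueIris/+/alert-image-b64", "BlueIris/FrontDoor/alert-image-b64");
```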

@Daniel15 that would be nice to see. I appreciate it! I don't use Node-RED currently, but as a Home Assistant enthusiast, I've seen it referenced many times. I am currently having success sending motion events from BI to HA, and in HA I can use those motion events to trigger automations, like announcements to my Google Home Mini cluster. However, the doorbell press event is going to be huge for me. I cannot rely on motion alone, as I am specifically trying to solve for when there is a package I have to sign for. The idea is that the delivery driver will ring the Reolink PoE doorbell camera, and I can then use that event in HA to fire off a number of notifications so that I can get to the door before the driver runs back to the truck without giving me the chance to sign for the package. So, more important to me than anything is getting that doorbell press event over to HA.

You may have your doorbell blocked from the internet, but I've been using the Reolink integration to capture the button press on my PoE doorbell.

@kramttocs, thanks for the response! Yes, it is blocked from the internet. Also, the camera itself is on a different subnet from Home Assistant and is not visible to Home Assistant. However, the camera can talk to Blue Iris, and Blue Iris can talk to Home Assistant, so the hope is that I can simply forward the doorbell-press ONVIF events from BI to HA via MQTT.

@tumtumsback Here's the config in Blue Iris: Camera settings -> Alert tab -> click the "On alert" button in the Actions section.

Blue Iris listens for a number of ONVIF events, but your doorbell may send one that it doesn't listen for. To add another ONVIF event:

This is what my Node-RED workflow looks like:

The function node ("Process payload and build message") is probably the most interesting part. It's JavaScript code:

```javascript
const {img, ...otherPayloadFields} = msg.payload;
const objectsToFilter = new Set([
  'ball',
  'sports ball',
  'horse',
  'book',
  'suitcase',
]);

let formattedObjectNames = 'Nothing';
const objectsData = (otherPayloadFields.json || [])
  .find(x => x.api === 'objects');
// Would normally use `objectsData?.found?.success` but Node-RED doesn't support it
const hasObjects = objectsData && objectsData.found && objectsData.found.success;
if (hasObjects) {
  // Using Array.from(new Set(...)) to dedupe object names
  const objectNames = Array.from(new Set(
    (objectsData.found.predictions || [])
      // Highest-confidence prediction first (a comparator needs two arguments)
      .sort((a, b) => b.confidence - a.confidence)
      .map(x => x.label)
      .filter(x => !objectsToFilter.has(x))
  ));
  if (objectNames.length > 0) {
    // Capitalise first letter of first object
    objectNames[0] = objectNames[0][0].toUpperCase() + objectNames[0].substring(1);
    formattedObjectNames = objectNames.join(' and ');
  }
}

const message = formattedObjectNames + ' detected at ' + otherPayloadFields.name;
return {
  image_filename: Date.now() + '-' + otherPayloadFields.id + '.jpg',
  message,
  object_names: formattedObjectNames,
  payload: Buffer.from(img, 'base64'),
  original_payload: otherPayloadFields,
};
```

Example Android notification from when I was backing out of a driveway:

Here's the full Node-RED flow, excluding the "test" button (which just sends a test payload so I can test it without actually having to trigger my cameras). You can import it using the "Import" option:

```json
[
  {
    "id": "f64fc2c05427d1ff",
    "type": "mqtt in",
    "z": "329ba4b7632a95c8",
    "name": "BlueIris/+/alert-image-b64",
    "topic": "BlueIris/+/alert-image-b64",
    "qos": "2",
    "datatype": "json",
    "broker": "e811c8eb637d26a5",
    "nl": false,
    "rap": true,
    "rh": 0,
    "inputs": 0,
    "x": 130,
    "y": 40,
    "wires": [
      [
        "5d5d6c7623201f34"
      ]
    ]
  },
  {
    "id": "5d5d6c7623201f34",
    "type": "function",
    "z": "329ba4b7632a95c8",
    "name": "Process payload and build message",
    "func": "const {img, ...otherPayloadFields} = msg.payload;\nconst objectsToFilter = new Set([\n 'ball',\n 'sports ball',\n 'horse',\n 'book',\n 'suitcase',\n]);\n\nlet formattedObjectNames = 'Nothing';\nconst objectsData = (otherPayloadFields.json || [])\n .find(x => x.api === 'objects');\n// Would normally use `objectsData?.found?.success` but Node-RED doesn't support it\nconst hasObjects = objectsData && objectsData.found && objectsData.found.success;\nif (hasObjects) {\n // Using Array.from(new Set(...)) to dedupe object names\n const objectNames = Array.from(new Set(\n (objectsData.found.predictions || [])\n .sort((a, b) => b.confidence - a.confidence)\n .map(x => x.label)\n .filter(x => !objectsToFilter.has(x))\n ));\n if (objectNames.length > 0) {\n // Capitalise first letter of first object\n objectNames[0] = objectNames[0][0].toUpperCase() + objectNames[0].substring(1);\n formattedObjectNames = objectNames.join(' and ');\n }\n}\n\nconst message = formattedObjectNames + ' detected at ' + otherPayloadFields.name;\nreturn {\n image_filename: Date.now() + '-' + otherPayloadFields.id + '.jpg',\n message,\n object_names: formattedObjectNames,\n payload: Buffer.from(img, 'base64'),\n original_payload: otherPayloadFields,\n};",
    "outputs": 1,
    "timeout": "",
    "noerr": 0,
    "initialize": "",
    "finalize": "",
    "libs": [],
    "x": 430,
    "y": 40,
    "wires": [
      [
        "31138938746cf6fd",
        "53f4a59537f2893e"
      ]
    ]
  },
  {
    "id": "31138938746cf6fd",
    "type": "file",
    "z": "329ba4b7632a95c8",
    "name": "write image file",
    "filename": "\"/media/alerts/\" & image_filename",
    "filenameType": "jsonata",
    "appendNewline": false,
    "createDir": false,
    "overwriteFile": "true",
    "encoding": "none",
    "x": 700,
    "y": 40,
    "wires": [
      [
        "6490fa5f55043f2a",
        "1ca18fe555c917fa"
      ]
    ]
  },
  {
    "id": "6490fa5f55043f2a",
    "type": "api-call-service",
    "z": "329ba4b7632a95c8",
    "name": "Notify phones",
    "server": "b6f128b45d1d76f7",
    "version": 5,
    "debugenabled": false,
    "domain": "notify",
    "service": "all_phones",
    "areaId": [],
    "deviceId": [],
    "entityId": [],
    "data": "{\t \"message\": message,\t \"data\": {\t \"clickAction\": \"http://cameras.vpn.d.sb:81/ui3.htm?maximize=1&tab=alerts&cam=\" & original_payload.camera & \"&rec=\" & original_payload.id,\t \"image\": \"/media/local/alerts/\" & image_filename,\t \"actions\": [{\t \"action\": \"URI\",\t \"title\": \"Live View\",\t \"uri\": \"http://cameras.vpn.d.sb:81/ui3.htm?maximize=1&cam=\" & original_payload.camera\t }, {\t \"action\": \"URI\",\t \"title\": \"View Clip\",\t \"uri\": \"http://cameras.vpn.d.sb:81/ui3.htm?maximize=1&tab=alerts&cam=\" & original_payload.camera & \"&rec=\" & original_payload.id\t }],\t \t \"channel\": \"Camera\",\t \"ttl\": 0,\t \"priority\": \"high\",\t \"importance\": \"high\"\t }\t}",
    "dataType": "jsonata",
    "mergeContext": "",
    "mustacheAltTags": false,
    "outputProperties": [],
    "queue": "none",
    "x": 900,
    "y": 40,
    "wires": [
      []
    ]
  },
  {
    "id": "1ca18fe555c917fa",
    "type": "api-call-service",
    "z": "329ba4b7632a95c8",
    "name": "Notify TV",
    "server": "b6f128b45d1d76f7",
    "version": 3,
    "debugenabled": false,
    "service": "shield",
    "entityId": "",
    "data": "{\t \"message\": message,\t \"data\": {\t \"image\": {\t \"path\": \"/media/alerts/\" & image_filename\t }\t }\t}",
    "dataType": "jsonata",
    "mustacheAltTags": false,
    "outputProperties": [],
    "queue": "none",
    "service_domain": "notify",
    "mergecontext": "",
    "x": 880,
    "y": 100,
    "wires": [
      []
    ]
  },
  {
    "id": "53f4a59537f2893e",
    "type": "switch",
    "z": "329ba4b7632a95c8",
    "name": "Is person?",
    "property": "message",
    "propertyType": "msg",
    "rules": [
      {
        "t": "cont",
        "v": "person",
        "vt": "str"
      },
      {
        "t": "cont",
        "v": "Person",
        "vt": "str"
      }
    ],
    "checkall": "false",
    "repair": false,
    "outputs": 2,
    "x": 710,
    "y": 160,
    "wires": [
      [
        "31ec03e4d62909c3"
      ],
      [
        "31ec03e4d62909c3"
      ]
    ]
  },
  {
    "id": "31ec03e4d62909c3",
    "type": "api-call-service",
    "z": "329ba4b7632a95c8",
    "name": "Notify Google Speakers",
    "server": "b6f128b45d1d76f7",
    "version": 3,
    "debugenabled": false,
    "service": "google_assistant_sdk",
    "entityId": "",
    "data": "{\"message\": message}",
    "dataType": "jsonata",
    "mustacheAltTags": false,
    "outputProperties": [],
    "queue": "none",
    "service_domain": "notify",
    "mergecontext": "",
    "x": 930,
    "y": 160,
    "wires": [
      []
    ]
  },
  {
    "id": "e811c8eb637d26a5",
    "type": "mqtt-broker",
    "name": "MQTT",
    "broker": "mqtt.int.d.sb",
    "port": "1883",
    "clientid": "",
    "autoConnect": true,
    "usetls": false,
    "protocolVersion": "4",
    "keepalive": "60",
    "cleansession": true,
    "birthTopic": "",
    "birthQos": "0",
    "birthPayload": "",
    "birthMsg": {},
    "closeTopic": "",
    "closeQos": "0",
    "closePayload": "",
    "closeMsg": {},
    "willTopic": "",
    "willQos": "0",
    "willPayload": "",
    "willMsg": {},
    "userProps": "",
    "sessionExpiry": ""
  },
  {
    "id": "b6f128b45d1d76f7",
    "type": "server",
    "name": "Home Assistant",
    "version": 2,
    "addon": false,
    "rejectUnauthorizedCerts": true,
    "ha_boolean": "y|yes|true|on|home|open",
    "connectionDelay": true,
    "cacheJson": true,
    "heartbeat": false,
    "heartbeatInterval": "30"
  }
]
```
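The "Notify phones" node above builds its Blue Iris UI3 links by string concatenation in JSONata, and the "Is person?" switch node checks for both "person" and "Person". A rough JavaScript equivalent of those two pieces is sketched below, for anyone who prefers to do this inside a function node. It assumes UI3 is served from the root of the Blue Iris web server, as in the flow; the helper names and the example address `http://192.168.1.10:81` are hypothetical.

```javascript
// Build the two UI3 links used in the phone notification: a live view of the
// camera and a link to the recorded alert clip. URL/searchParams
// percent-encode the camera name and alert ID, which plain concatenation does not.
// Assumes UI3 lives at "/ui3.htm" on the Blue Iris web server root.
function buildAlertLinks(baseUrl, camera, alertId) {
  const live = new URL("/ui3.htm", baseUrl);
  live.searchParams.set("maximize", "1");
  live.searchParams.set("cam", camera);

  const clip = new URL("/ui3.htm", baseUrl);
  clip.searchParams.set("maximize", "1");
  clip.searchParams.set("tab", "alerts");
  clip.searchParams.set("cam", camera);
  clip.searchParams.set("rec", alertId);

  return { liveView: live.toString(), viewClip: clip.toString() };
}

// The switch node uses two "contains" rules ("person" and "Person");
// one case-insensitive test covers both, plus variants such as "PERSON".
function isPersonAlert(message) {
  return /person/i.test(message);
}
```

The case-insensitive regex is a design choice: it replaces the two switch rules (both wired to the same speaker node) with a single check.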

Holy cow, that is amazing! I seriously need to study that some more, and maybe dive into Node-RED at some point. Thanks for the tip.

I was able to get the ONVIF event in question. Blue Iris gave me this for the doorbell press:

```xml
<SOAP-ENV:Envelope
  xmlns:tad="http://www.onvif.org/ver20/analytics/wsdl"
  xmlns:tns1="http://www.onvif.org/ver10/topics"
  xmlns:ter="http://www.onvif.org/ver10/error"
  xmlns:tse="http://www.onvif.org/ver10/search/wsdl"
  xmlns:trv="http://www.onvif.org/ver10/receiver/wsdl"
  xmlns:trt="http://www.onvif.org/ver10/media/wsdl"
  xmlns:trp="http://www.onvif.org/ver10/replay/wsdl"
  xmlns:trc="http://www.onvif.org/ver10/recording/wsdl"
  xmlns:tptz="http://www.onvif.org/ver20/ptz/wsdl"
  xmlns:tmd="http://www.onvif.org/ver10/deviceIO/wsdl"
  xmlns:timg="http://www.onvif.org/ver20/imaging/wsdl"
  xmlns:wsnt="http://docs.oasis-open.org/wsn/b-2"
  xmlns:tev="http://www.onvif.org/ver10/events/wsdl"
  xmlns:tds="http://www.onvif.org/ver10/device/wsdl"
  xmlns:tdn="http://www.onvif.org/ver10/network/wsdl"
  xmlns:ns1="http://www.onvif.org/ver20/media/wsdl"
  xmlns:wsrfr="http://docs.oasis-open.org/wsrf/r-2"
  xmlns:wstop="http://docs.oasis-open.org/wsn/t-1"
  xmlns:wsrfbf="http://docs.oasis-open.org/wsrf/bf-2"
  xmlns:tt="http://www.onvif.org/ver10/schema"
  xmlns:wsse="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-secext-1.0.xsd"
  xmlns:wsc="http://docs.oasis-open.org/ws-sx/ws-secureconversation/200512"
  xmlns:xenc="http://www.w3.org/2001/04/xmlenc#"
  xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd"
  xmlns:saml2="urn:oasis:names:tc:SAML:2.0:assertion"
  xmlns:saml1="urn:oasis:names:tc:SAML:1.0:assertion"
  xmlns:ds="http://www.w3.org/2000/09/xmldsig#"
  xmlns:c14n="http://www.w3.org/2001/10/xml-exc-c14n#"
  xmlns:wsa5="http://www.w3.org/2005/08/addressing"
  xmlns:chan="http://schemas.microsoft.com/ws/2005/02/duplex"
  xmlns:xop="http://www.w3.org/2004/08/xop/include"
  xmlns:wsdd="http://schemas.xmlsoap.org/ws/2005/04/discovery"
  xmlns:wsa="http://schemas.xmlsoap.org/ws/2004/08/addressing"
  xmlns:xsd="http://www.w3.org/2001/XMLSchema"
  xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xmlns:SOAP-ENC="http://www.w3.org/2003/05/soap-encoding"
  xmlns:SOAP-ENV="http://www.w3.org/2003/05/soap-envelope">
```

Correct me if I am wrong, but I think the important part there is:

```xml
<wsnt:Topic Dialect="http://www.onvif.org/ver10/tev/topicExpression/ConcreteSet">tns1:RuleEngine/MyRuleDetector/Visitor</wsnt:Topic>
```

I am curious, though: how can I send that to my MQTT broker and reference it in Home Assistant? It would be nice if I could utilize the existing BlueIris HACS integration, but ...
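One way to bridge this is to pick the topic out of each ONVIF notification and turn doorbell presses into a simple MQTT message. The sketch below is a naive, regex-based illustration keyed on the "Visitor" topic reported above; a real bridge should use a proper XML parser, and the event's Data section (where a state value would normally live) is not handled. The helper names and the MQTT topic `doorbell/<camera>/visitor` are hypothetical, not a Blue Iris or integration convention.

```javascript
// Naive sketch: extract the event topic from an ONVIF notification.
// Accepts any namespace prefix on the <Topic> element (e.g. "wsnt:").
// A real bridge should parse the XML properly instead of using a regex.
function extractOnvifTopic(xml) {
  const m = xml.match(/<(?:[\w-]+:)?Topic\b[^>]*>\s*([^<]+?)\s*<\/(?:[\w-]+:)?Topic>/);
  return m ? m[1] : null;
}

// Hypothetical mapping from an ONVIF topic to an MQTT message that a
// Home Assistant MQTT binary sensor could subscribe to.
function toMqttMessage(camera, onvifTopic) {
  if (onvifTopic === null || !onvifTopic.endsWith("/Visitor")) {
    return null; // not a doorbell press: ignore
  }
  return { topic: `doorbell/${camera}/visitor`, payload: "ON" };
}

// Usage with the Topic element quoted above.
const visitorXml =
  '<wsnt:Topic Dialect="http://www.onvif.org/ver10/tev/topicExpression/ConcreteSet">' +
  "tns1:RuleEngine/MyRuleDetector/Visitor</wsnt:Topic>";
const pressMessage = toMqttMessage("doorbell1", extractOnvifTopic(visitorXml));
```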

@Daniel15 just wanted to say thanks a bunch. You were super helpful. I am sure YMMV for different people, but I was getting unreliable ONVIF events from Blue Iris for the doorbell press event (Visitor). The main reason for getting this doorbell was these doorbell press events, and I needed a rock-solid, reliable, yet local (no cloud) way to get notified when the doorbell is pressed. I stumbled upon this post: https://ipcamtalk.com/threads/onvif-2-mqtt.69906/ I ended up doing exactly what Grisu2 did, and it's working like a charm. No Blue Iris involved at all, just Camera --> cam2mqtt --> Home Assistant.

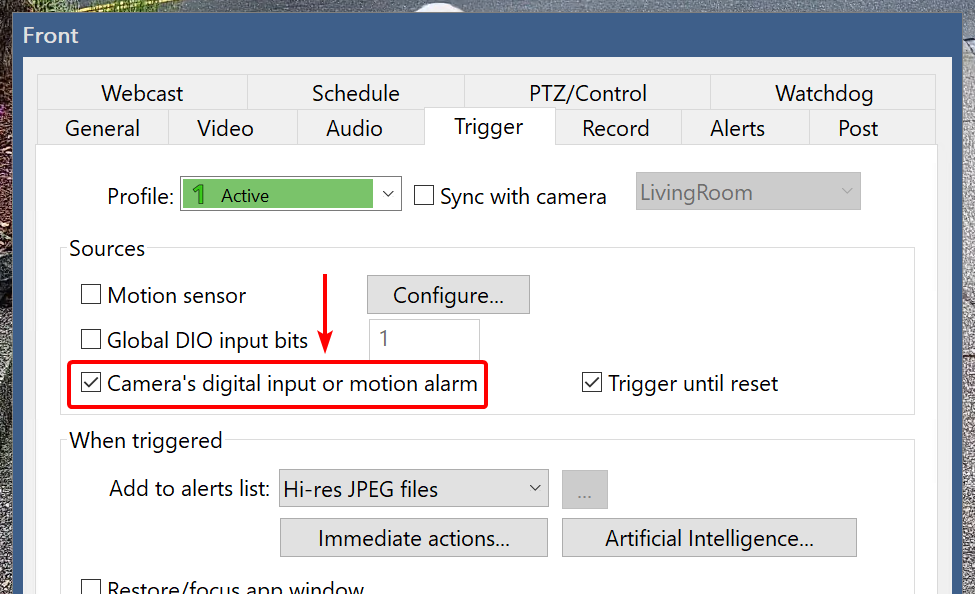

I'm using the motion sensing built into my camera (via ONVIF), rather than Blue Iris' motion sensing:

I've configured Blue Iris to publish to the MQTT topic `BlueIris/&CAM/Status` on alerts. In this case, the `type` is `"EXTERNAL"`. This is what I see in MQTT Explorer:

{ "type": "EXTERNAL", "trigger": "ON" }

However, the status in Home Assistant always says "clear".

Does this integration not support type=EXTERNAL?
