diff --git a/.all-contributorsrc b/.all-contributorsrc

index ec7ff5a1c..05e429904 100644

--- a/.all-contributorsrc

+++ b/.all-contributorsrc

@@ -7,10 +7,19 @@

],

"contributors": [

{

- "login": "ShvetankPrakash",

- "name": "Shvetank Prakash",

- "avatar_url": "https://avatars.githubusercontent.com/ShvetankPrakash",

- "profile": "https://github.com/ShvetankPrakash",

+ "login": "Mjrovai",

+ "name": "Marcelo Rovai",

+ "avatar_url": "https://avatars.githubusercontent.com/Mjrovai",

+ "profile": "https://github.com/Mjrovai",

+ "contributions": [

+ "doc"

+ ]

+ },

+ {

+ "login": "ishapira1",

+ "name": "ishapira",

+ "avatar_url": "https://avatars.githubusercontent.com/ishapira1",

+ "profile": "https://github.com/ishapira1",

"contributions": [

"doc"

]

@@ -25,118 +34,118 @@

]

},

{

- "login": "sjohri20",

- "name": "sjohri20",

- "avatar_url": "https://avatars.githubusercontent.com/sjohri20",

- "profile": "https://github.com/sjohri20",

+ "login": "18jeffreyma",

+ "name": "Jeffrey Ma",

+ "avatar_url": "https://avatars.githubusercontent.com/18jeffreyma",

+ "profile": "https://github.com/18jeffreyma",

"contributions": [

"doc"

]

},

{

- "login": "jaysonzlin",

- "name": "Jayson Lin",

- "avatar_url": "https://avatars.githubusercontent.com/jaysonzlin",

- "profile": "https://github.com/jaysonzlin",

+ "login": "uchendui",

+ "name": "Ikechukwu Uchendu",

+ "avatar_url": "https://avatars.githubusercontent.com/uchendui",

+ "profile": "https://github.com/uchendui",

"contributions": [

"doc"

]

},

{

- "login": "BaeHenryS",

- "name": "Henry Bae",

- "avatar_url": "https://avatars.githubusercontent.com/BaeHenryS",

- "profile": "https://github.com/BaeHenryS",

+ "login": "sophiacho1",

+ "name": "sophiacho1",

+ "avatar_url": "https://avatars.githubusercontent.com/sophiacho1",

+ "profile": "https://github.com/sophiacho1",

"contributions": [

"doc"

]

},

{

- "login": "Naeemkh",

- "name": "naeemkh",

- "avatar_url": "https://avatars.githubusercontent.com/Naeemkh",

- "profile": "https://github.com/Naeemkh",

+ "login": "ShvetankPrakash",

+ "name": "Shvetank Prakash",

+ "avatar_url": "https://avatars.githubusercontent.com/ShvetankPrakash",

+ "profile": "https://github.com/ShvetankPrakash",

"contributions": [

"doc"

]

},

{

- "login": "mmaz",

- "name": "Mark Mazumder",

- "avatar_url": "https://avatars.githubusercontent.com/mmaz",

- "profile": "https://github.com/mmaz",

+ "login": "mpstewart1",

+ "name": "Matthew Stewart",

+ "avatar_url": "https://avatars.githubusercontent.com/mpstewart1",

+ "profile": "https://github.com/mpstewart1",

"contributions": [

"doc"

]

},

{

- "login": "18jeffreyma",

- "name": "Jeffrey Ma",

- "avatar_url": "https://avatars.githubusercontent.com/18jeffreyma",

- "profile": "https://github.com/18jeffreyma",

+ "login": "Naeemkh",

+ "name": "naeemkh",

+ "avatar_url": "https://avatars.githubusercontent.com/Naeemkh",

+ "profile": "https://github.com/Naeemkh",

"contributions": [

"doc"

]

},

{

- "login": "uchendui",

- "name": "Ikechukwu Uchendu",

- "avatar_url": "https://avatars.githubusercontent.com/uchendui",

- "profile": "https://github.com/uchendui",

+ "login": "profvjreddi",

+ "name": "Vijay Janapa Reddi",

+ "avatar_url": "https://avatars.githubusercontent.com/profvjreddi",

+ "profile": "https://github.com/profvjreddi",

"contributions": [

"doc"

]

},

{

- "login": "jessicaquaye",

- "name": "Jessica Quaye",

- "avatar_url": "https://avatars.githubusercontent.com/jessicaquaye",

- "profile": "https://github.com/jessicaquaye",

+ "login": "mmaz",

+ "name": "Mark Mazumder",

+ "avatar_url": "https://avatars.githubusercontent.com/mmaz",

+ "profile": "https://github.com/mmaz",

"contributions": [

"doc"

]

},

{

- "login": "DivyaAmirtharaj",

- "name": "Divya",

- "avatar_url": "https://avatars.githubusercontent.com/DivyaAmirtharaj",

- "profile": "https://github.com/DivyaAmirtharaj",

+ "login": "BaeHenryS",

+ "name": "Henry Bae",

+ "avatar_url": "https://avatars.githubusercontent.com/BaeHenryS",

+ "profile": "https://github.com/BaeHenryS",

"contributions": [

"doc"

]

},

{

- "login": "mpstewart1",

- "name": "Matthew Stewart",

- "avatar_url": "https://avatars.githubusercontent.com/mpstewart1",

- "profile": "https://github.com/mpstewart1",

+ "login": "oishib",

+ "name": "oishib",

+ "avatar_url": "https://avatars.githubusercontent.com/oishib",

+ "profile": "https://github.com/oishib",

"contributions": [

"doc"

]

},

{

- "login": "Mjrovai",

- "name": "Marcelo Rovai",

- "avatar_url": "https://avatars.githubusercontent.com/Mjrovai",

- "profile": "https://github.com/Mjrovai",

+ "login": "marcozennaro",

+ "name": "Marco Zennaro",

+ "avatar_url": "https://avatars.githubusercontent.com/marcozennaro",

+ "profile": "https://github.com/marcozennaro",

"contributions": [

"doc"

]

},

{

- "login": "oishib",

- "name": "oishib",

- "avatar_url": "https://avatars.githubusercontent.com/oishib",

- "profile": "https://github.com/oishib",

+ "login": "jessicaquaye",

+ "name": "Jessica Quaye",

+ "avatar_url": "https://avatars.githubusercontent.com/jessicaquaye",

+ "profile": "https://github.com/jessicaquaye",

"contributions": [

"doc"

]

},

{

- "login": "profvjreddi",

- "name": "Vijay Janapa Reddi",

- "avatar_url": "https://avatars.githubusercontent.com/profvjreddi",

- "profile": "https://github.com/profvjreddi",

+ "login": "sjohri20",

+ "name": "sjohri20",

+ "avatar_url": "https://avatars.githubusercontent.com/sjohri20",

+ "profile": "https://github.com/sjohri20",

"contributions": [

"doc"

]

@@ -151,28 +160,19 @@

]

},

{

- "login": "marcozennaro",

- "name": "Marco Zennaro",

- "avatar_url": "https://avatars.githubusercontent.com/marcozennaro",

- "profile": "https://github.com/marcozennaro",

- "contributions": [

- "doc"

- ]

- },

- {

- "login": "ishapira1",

- "name": "ishapira",

- "avatar_url": "https://avatars.githubusercontent.com/ishapira1",

- "profile": "https://github.com/ishapira1",

+ "login": "jaysonzlin",

+ "name": "Jayson Lin",

+ "avatar_url": "https://avatars.githubusercontent.com/jaysonzlin",

+ "profile": "https://github.com/jaysonzlin",

"contributions": [

"doc"

]

},

{

- "login": "sophiacho1",

- "name": "sophiacho1",

- "avatar_url": "https://avatars.githubusercontent.com/sophiacho1",

- "profile": "https://github.com/sophiacho1",

+ "login": "DivyaAmirtharaj",

+ "name": "Divya",

+ "avatar_url": "https://avatars.githubusercontent.com/DivyaAmirtharaj",

+ "profile": "https://github.com/DivyaAmirtharaj",

"contributions": [

"doc"

]

diff --git a/README.md b/README.md

index 3519e09c2..9dec61d9a 100644

--- a/README.md

+++ b/README.md

@@ -88,31 +88,31 @@ quarto render

diff --git a/contributors.qmd b/contributors.qmd

index 24df99e18..37d013133 100644

--- a/contributors.qmd

+++ b/contributors.qmd

@@ -8,31 +8,31 @@ We extend our sincere thanks to the diverse group of individuals who have genero

diff --git a/images/efficientnumerics_PTQQATsummary.png b/images/efficientnumerics_PTQQATsummary.png

index bf1ba26ef..3d950998b 100644

Binary files a/images/efficientnumerics_PTQQATsummary.png and b/images/efficientnumerics_PTQQATsummary.png differ

diff --git a/images/efficientnumerics_alexnet.png b/images/efficientnumerics_alexnet.png

index 74bf5c950..be44cb78c 100644

Binary files a/images/efficientnumerics_alexnet.png and b/images/efficientnumerics_alexnet.png differ

diff --git a/images/efficientnumerics_benefitsofprecision.png b/images/efficientnumerics_benefitsofprecision.png

index 9ac3e811a..210f5abfb 100644

Binary files a/images/efficientnumerics_benefitsofprecision.png and b/images/efficientnumerics_benefitsofprecision.png differ

diff --git a/images/efficientnumerics_calibration.png b/images/efficientnumerics_calibration.png

index e37f858bf..76de8ff43 100644

Binary files a/images/efficientnumerics_calibration.png and b/images/efficientnumerics_calibration.png differ

diff --git a/images/efficientnumerics_calibrationcopy.png b/images/efficientnumerics_calibrationcopy.png

new file mode 100644

index 000000000..76de8ff43

Binary files /dev/null and b/images/efficientnumerics_calibrationcopy.png differ

diff --git a/images/efficientnumerics_edgequant.png b/images/efficientnumerics_edgequant.png

index 71d278bb9..ea136f2a4 100644

Binary files a/images/efficientnumerics_edgequant.png and b/images/efficientnumerics_edgequant.png differ

diff --git a/images/efficientnumerics_modelsizes.png b/images/efficientnumerics_modelsizes.png

index 8d6aa902c..5dad5fbce 100644

Binary files a/images/efficientnumerics_modelsizes.png and b/images/efficientnumerics_modelsizes.png differ

diff --git a/images/efficientnumerics_qp1.png b/images/efficientnumerics_qp1.png

index 9862a00db..9d9596344 100644

Binary files a/images/efficientnumerics_qp1.png and b/images/efficientnumerics_qp1.png differ

diff --git a/images/efficientnumerics_qp2.png b/images/efficientnumerics_qp2.png

index a3b799cfc..e8ee6442f 100644

Binary files a/images/efficientnumerics_qp2.png and b/images/efficientnumerics_qp2.png differ

diff --git a/images/modeloptimization_color_mappings.jpeg b/images/modeloptimization_color_mappings.jpeg

new file mode 100644

index 000000000..0531a9d8c

Binary files /dev/null and b/images/modeloptimization_color_mappings.jpeg differ

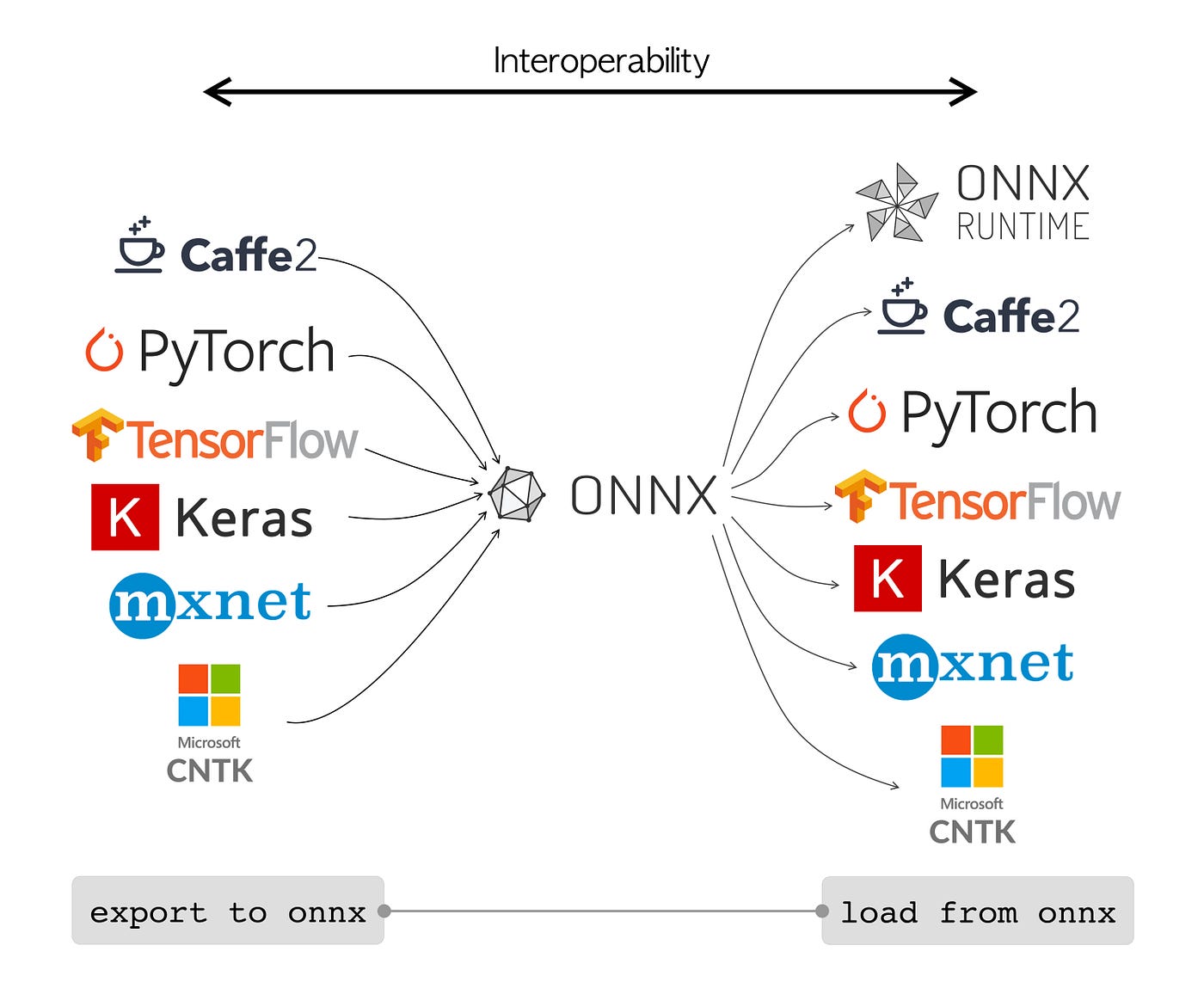

diff --git a/images/modeloptimization_onnx.jpg b/images/modeloptimization_onnx.jpg

new file mode 100644

index 000000000..e5218a9c3

Binary files /dev/null and b/images/modeloptimization_onnx.jpg differ

diff --git a/images/modeloptimization_quant_hist.png b/images/modeloptimization_quant_hist.png

new file mode 100644

index 000000000..a9cdff8e1

Binary files /dev/null and b/images/modeloptimization_quant_hist.png differ

diff --git a/optimizations.qmd b/optimizations.qmd

index 5c4e246a7..cdb9c346c 100644

--- a/optimizations.qmd

+++ b/optimizations.qmd

@@ -29,7 +29,7 @@ Going one level lower, in @sec-model_ops_numerics, we study the role of numerica

Finally, as we go lower closer to the hardware, in @sec-model_ops_hw, we will navigate through the landscape of hardware-software co-design, exploring how models can be optimized by tailoring them to the specific characteristics and capabilities of the target hardware. We will discuss how models can be adapted to exploit the available hardware resources effectively.

-

+{width=50%}

## Efficient Model Representation {#sec-model_ops_representation}

@@ -55,7 +55,7 @@ So how does one choose the type of pruning methods? Many variations of pruning t

#### Structured Pruning

-We start with structured pruning, a technique that reduces the size of a neural network by eliminating entire model-specific substructures while maintaining the overall model structure. It removes entire neurons/filters or layers based on importance criteria. For example, for a convolutional neural network (CNN), this could be certain filter instances or channels. For fully connected networks, this could be neurons themselves while maintaining full connectivity or even be elimination of entire model layers that are deemed to be insignificant. This type of pruning often leads to regular, structured sparse networks that are hardware friendly.

+We start with structured pruning, a technique that reduces the size of a neural network by eliminating entire model-specific substructures while maintaining the overall model structure. It removes entire neurons/channels or layers based on importance criteria. For example, in a convolutional neural network (CNN), this could mean removing certain filter instances or channels. In fully connected networks, it could mean removing individual neurons while keeping the remaining layers fully connected, or even eliminating entire layers deemed insignificant. This type of pruning often leads to regular, structured sparse networks that are hardware friendly.
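A minimal NumPy sketch of filter-level structured pruning is shown below. It assumes the L1 norm of each filter's weights as the importance criterion (one common choice, not the only one), and the helper name `prune_filters_l1` is hypothetical. The point it illustrates is that the result is a genuinely smaller dense tensor, not a tensor with zeros scattered through it.

```python
import numpy as np

def prune_filters_l1(weight, keep_ratio):
    """Structured pruning sketch: drop whole output filters of a conv layer.

    weight: array of shape (out_channels, in_channels, kH, kW).
    Returns the smaller weight tensor and the indices of the kept filters.
    """
    out_channels = weight.shape[0]
    n_keep = max(1, int(round(out_channels * keep_ratio)))
    # Importance criterion (an assumption): L1 norm of each filter's weights.
    scores = np.abs(weight).reshape(out_channels, -1).sum(axis=1)
    # Keep the n_keep highest-scoring filters, preserving their original order.
    keep = np.sort(np.argsort(scores)[-n_keep:])
    return weight[keep], keep

rng = np.random.default_rng(0)
w = rng.standard_normal((8, 3, 3, 3))
w_pruned, kept = prune_filters_l1(w, keep_ratio=0.5)
print(w.shape, "->", w_pruned.shape)  # (8, 3, 3, 3) -> (4, 3, 3, 3)
```

In a full network, the following layer would also need to drop the matching input channels (for example `next_weight[:, kept]`) so that the shapes stay consistent.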

##### Components

@@ -69,7 +69,7 @@ Best practices have started to emerge on how to think about structured pruning.

Given that there are different strategies, each of these structures (i.e., neurons, channels and layers) is pruned based on specific criteria and strategies, ensuring that the reduced model maintains as much of the predictive prowess of the original model as possible while gaining in computational efficiency and reduction in size.

-The primary structures targeted for pruning include **neurons** , channels, and sometimes, entire layers, each having its unique implications and methodologies. When neurons are pruned, we are removing entire neurons along with their associated weights and biases, thereby reducing the width of the layer. This type of pruning is often utilized in fully connected layers.

+The primary structures targeted for pruning include **neurons**, channels, and sometimes entire layers, each with its own implications and methodologies. When neurons are pruned, we remove entire neurons along with their associated weights and biases, thereby reducing the width of the layer. This type of pruning is often utilized in fully connected layers.
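The claim that neuron pruning "reduces the width of the layer" can be made concrete with a small sketch over two fully connected layers. It assumes the L2 norm of a neuron's incoming weights as its importance score; `prune_neurons` is a hypothetical helper, not a library function.

```python
import numpy as np

def prune_neurons(W1, b1, W2, keep_ratio):
    """Remove hidden neurons between two fully connected layers.

    W1: (hidden, in), b1: (hidden,), W2: (out, hidden).
    Importance (an assumption): L2 norm of each neuron's incoming weights.
    """
    hidden = W1.shape[0]
    n_keep = max(1, int(round(hidden * keep_ratio)))
    scores = np.linalg.norm(W1, axis=1)
    keep = np.sort(np.argsort(scores)[-n_keep:])
    # Dropping neuron j removes row j of W1, entry j of b1, and column j of W2.
    return W1[keep], b1[keep], W2[:, keep]

rng = np.random.default_rng(1)
W1 = rng.standard_normal((16, 10))
b1 = rng.standard_normal(16)
W2 = rng.standard_normal((4, 16))
W1p, b1p, W2p = prune_neurons(W1, b1, W2, keep_ratio=0.25)

x = rng.standard_normal(10)
y = W2p @ np.maximum(W1p @ x + b1p, 0.0)  # the narrower network still runs end to end
print(W1p.shape, W2p.shape, y.shape)  # (4, 10) (4, 4) (4,)
```

The pruned network computes exactly what the original would compute if the removed neurons' activations were forced to zero, which is why the remaining layers keep their full connectivity.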

With **channel** pruning, which is predominantly applied in convolutional neural networks (CNNs), it involves eliminating entire channels or filters, which in turn reduces the depth of the feature maps and impacts the network's ability to extract certain features from the input data. This is particularly crucial in image processing tasks where computational efficiency is paramount.

@@ -120,7 +120,7 @@ The pruned model, while smaller, retains its original architectural form, which

Unstructured pruning is, as its name suggests, pruning the model without regard to model-specific substructure. As mentioned above, it offers a greater aggression in pruning and can achieve higher model sparsities while maintaining accuracy given less constraints on what can and can't be pruned. Generally, post-training unstructured pruning consists of an importance criterion for individual model parameters/weights, pruning/removal of weights that fall below the criteria, and optional fine-tuning after to try and recover the accuracy lost during weight removal.

-Unstructured pruning has some advantages over structured pruning: removing individual weights instead of entire model substructures often leads in practice to lower model accuracy hits. Furthermore, generally determining the criterion of importance for an individual weight is much simpler than for an entire substructure of parameters in structured pruning, making the former preferable for cases where that overhead is hard or unclear to compute. Similarly, the actual process of structured pruning is generally less flexible, as removing individual weights is generally simpler than removing entire substructures and ensuring the model still works.

+Unstructured pruning has some advantages over structured pruning: removing individual weights instead of entire model substructures often leads in practice to smaller drops in model accuracy. Furthermore, determining an importance criterion for an individual weight is generally much simpler than for an entire substructure of parameters, making unstructured pruning preferable when such a criterion is hard or unclear to compute for substructures. Similarly, structured pruning is generally less flexible in practice, since removing individual weights is simpler than removing entire substructures while ensuring the model still works.
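For contrast with the structured case, here is a hedged sketch of unstructured magnitude pruning, assuming absolute weight value as the importance criterion; `magnitude_prune` is a hypothetical helper. Unlike filter pruning, the tensor keeps its original shape and only individual entries become zero.

```python
import numpy as np

def magnitude_prune(weight, sparsity):
    """Unstructured pruning sketch: zero the smallest-magnitude weights.

    Returns the pruned weights and a binary mask (1 = kept). The shape is
    unchanged; only individual entries are set to zero.
    """
    k = int(sparsity * weight.size)  # number of weights to remove
    if k == 0:
        return weight.copy(), np.ones_like(weight)
    # The k-th smallest absolute value acts as the pruning threshold.
    threshold = np.partition(np.abs(weight).ravel(), k - 1)[k - 1]
    mask = (np.abs(weight) > threshold).astype(weight.dtype)
    return weight * mask, mask

rng = np.random.default_rng(0)
w = rng.standard_normal((64, 64))
w_sparse, mask = magnitude_prune(w, sparsity=0.9)
print(w_sparse.shape, 1 - mask.mean())  # shape stays (64, 64); about 90% of entries are zero
```

Turning these zeros into actual memory or latency savings requires a sparse storage format or sparse-aware kernels; on hardware without such support the pruned matrix is still processed densely.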

Unstructured pruning, while offering the potential for significant model size reduction and enhanced deployability, brings with it challenges related to managing sparse representations and ensuring computational efficiency. It is particularly useful in scenarios where achieving the highest possible model compression is paramount and where the deployment environment can handle sparse computations efficiently.

@@ -136,22 +136,22 @@ The following compact table provides a concise comparison between structured and

| **Implementation Complexity**| Often simpler to implement and manage due to maintaining network structure. | Can be complex to manage and compute due to sparse representations. |

| **Fine-Tuning Complexity** | May require less complex fine-tuning strategies post-pruning. | Might necessitate more complex retraining or fine-tuning strategies post-pruning. |

-

+

#### Lottery Ticket Hypothesis

Pruning has evolved from a purely post-training technique that came at the cost of some accuracy, to a powerful meta-learning approach applied during training to reduce model complexity. This advancement in turn improves compute, memory, and latency efficiency at both training and inference.

-A breakthrough finding that catalyzed this evolution was the [lottery ticket hypothesis](https://arxiv.org/abs/1803.03635) empirically discovered by Jonathan Frankle and Michael Carbin. Their work states that within dense neural networks, there exist sparse subnetworks, referred to as "winning tickets," that can match or even exceed the performance of the original model when trained in isolation. Specifically, these winning tickets, when initialized using the same weights as the original network, can achieve similarly high training convergence and accuracy on a given task. It is worthwhile pointing out that they empirically discovered the lottery ticket hypothesis, which was later formalized.

+A breakthrough finding that catalyzed this evolution was the [lottery ticket hypothesis](https://arxiv.org/abs/1803.03635) by @frankle_lottery_2019. Their work states that within dense neural networks, there exist sparse subnetworks, referred to as "winning tickets," that can match or even exceed the performance of the original model when trained in isolation. Specifically, these winning tickets, when initialized using the same weights as the original network, can achieve similarly high training convergence and accuracy on a given task. It is worth pointing out that the hypothesis was discovered empirically and only formalized later.

-More formally, the lottery ticket hypothesis is a concept in deep learning that suggests that within a neural network, there exist sparse subnetworks (or "winning tickets") that, when initialized with the right weights, are capable of achieving high training convergence and inference performance on a given task. The intuition behind this hypothesis is that, during the training process of a neural network, many neurons and connections become redundant or unimportant, particularly with the inclusion of training techniques encouraging redundancy like dropout. Identifying, pruning out, and initializing these "winning tickets'' allows for faster training and more efficient models, as they contain the essential model decision information for the task. Furthermore, as generally known with the bias-variance tradeoff theory, these tickets suffer less from overparameterization and thus generalize better rather than overfitting to the task.

+More formally, the lottery ticket hypothesis suggests that within a neural network there exist sparse subnetworks (or "winning tickets") that, when initialized with the right weights, are capable of achieving high training convergence and inference performance on a given task. The intuition behind this hypothesis is that, during the training process of a neural network, many neurons and connections become redundant or unimportant, particularly when training techniques that encourage redundancy, such as dropout, are used. Identifying, pruning out, and initializing these "winning tickets" allows for faster training and more efficient models, as they contain the essential model decision information for the task. Furthermore, from the perspective of the bias-variance tradeoff, these tickets are less overparameterized and may therefore generalize better rather than overfitting to the task.
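The procedure used to find winning tickets, iterative magnitude pruning with the surviving weights rewound to their original initialization after each round, can be sketched as follows. To keep the example small and runnable, a toy logistic-regression model trained with plain gradient descent stands in for a neural network; the helpers `train` and `find_ticket`, the pruning fraction, and the synthetic data are all illustrative assumptions.

```python
import numpy as np

def train(w, mask, X, y, steps=200, lr=0.5):
    """Toy trainer (an assumption): logistic regression by gradient descent.
    The mask is reapplied after every step so pruned weights stay at zero."""
    w = w * mask
    for _ in range(steps):
        z = np.clip(X @ w, -30.0, 30.0)
        p = 1.0 / (1.0 + np.exp(-z))
        grad = X.T @ (p - y) / len(y)
        w = (w - lr * grad) * mask
    return w

def find_ticket(w_init, X, y, rounds=3, prune_frac=0.5):
    """Iterative magnitude pruning, rewinding to w_init each round."""
    mask = np.ones_like(w_init)
    for _ in range(rounds):
        w_trained = train(w_init, mask, X, y)
        alive = np.flatnonzero(mask)
        n_prune = int(prune_frac * alive.size)
        # Prune the smallest-magnitude surviving weights of the trained model.
        order = alive[np.argsort(np.abs(w_trained[alive]))]
        mask[order[:n_prune]] = 0.0
        # Rewind: the next round restarts from w_init, not from w_trained.
    return mask, train(w_init, mask, X, y)

rng = np.random.default_rng(0)
X = rng.standard_normal((500, 32))
true_w = np.zeros(32)
true_w[:4] = [3.0, -3.0, 2.0, -2.0]  # only 4 features actually matter
y = (X @ true_w > 0).astype(float)

w_init = 0.1 * rng.standard_normal(32)
mask, w_ticket = find_ticket(w_init, X, y)
acc = np.mean((X @ w_ticket > 0) == y)
print(int(mask.sum()), "of 32 weights kept; train accuracy:", acc)
```

The key design choice is the rewind step: the sparse mask is found from trained weights, but the subnetwork is retrained from the original initialization, which is what distinguishes a "winning ticket" from ordinary prune-and-fine-tune.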

-

+

#### Challenges & Limitations

-There is no free lunch with pruning optimizations.

+There is no free lunch with pruning optimizations: some choices come with both improvements and costs. Below we discuss several tradeoffs that practitioners should consider.

##### Quality vs. Size Reduction

@@ -187,7 +187,7 @@ Model compression techniques are crucial for deploying deep learning models on r

#### Knowledge Distillation {#sec-kd}

-One popular technique is knowledge distillation (KD), which transfers knowledge from a large, complex "teacher" model to a smaller "student" model. The key idea is to train the student model to mimic the teacher's outputs.The concept of KD was first popularized by the work of Geoffrey Hinton, Oriol Vinyals, and Jeff Dean in their paper ["Distilling the Knowledge in a Neural Network" (2015)](https://arxiv.org/abs/1503.02531).

+One popular technique is knowledge distillation (KD), which transfers knowledge from a large, complex "teacher" model to a smaller "student" model. The key idea is to train the student model to mimic the teacher's outputs. The concept of KD was first popularized by @hinton2015distilling.
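One way to make "train the student to mimic the teacher's outputs" concrete is the soft-target loss described by @hinton2015distilling, sketched below in NumPy. The temperature `T` and mixing weight `alpha` are illustrative values rather than recommended settings, and `distillation_loss` is a hypothetical helper; in practice the same computation would live inside a training framework so gradients flow to the student.

```python
import numpy as np

def log_softmax(z, T=1.0):
    """Log of the temperature-scaled softmax, computed stably."""
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """Soft-target KD loss sketch: softened KL term plus hard-label CE."""
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s = log_softmax(student_logits, T)
    p_t = np.exp(log_p_t)
    # KL(teacher || student) on softened outputs; the T**2 factor keeps its
    # gradient magnitude comparable to the hard-label term as T changes.
    soft = (p_t * (log_p_t - log_p_s)).sum(axis=-1).mean() * T**2
    # Standard cross-entropy with the ground-truth labels (T = 1).
    hard = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return alpha * soft + (1 - alpha) * hard

teacher = np.array([[6.0, 2.0, -1.0], [0.5, 4.0, 1.0]])
student = np.array([[2.0, 1.0, 0.0], [0.0, 1.5, 0.5]])
labels = np.array([0, 1])
print(np.exp(log_softmax(teacher, T=1.0)).round(3))  # peaked distribution
print(np.exp(log_softmax(teacher, T=4.0)).round(3))  # softened distribution
print(distillation_loss(student, teacher, labels))
```

Raising the temperature exposes the teacher's relative preferences among the wrong classes, which is the extra signal the student learns from beyond the hard labels.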

##### Overview and Benefits

@@ -199,7 +199,7 @@ Another core concept is "temperature scaling" in the softmax function. It plays

These components, when adeptly configured and harmonized, enable the student model to assimilate the teacher model's knowledge, crafting a pathway towards efficient and robust smaller models that retain the predictive prowess of their larger counterparts.

-

+

##### Challenges

@@ -211,21 +211,21 @@ These challenges underscore the necessity for a thorough and nuanced approach to

#### Low-rank Matrix Factorization

-Similar in approximation theme, low-rank matrix factorization (LRFM) is a mathematical technique used in linear algebra and data analysis to approximate a given matrix by decomposing it into two or more lower-dimensional matrices. The fundamental idea is to express a high-dimensional matrix as a product of lower-rank matrices, which can help reduce the complexity of data while preserving its essential structure. Mathematically, given a matrix $A \in \mathbb{R}^{m \times n}$, LRMF seeks matrices $U \in \mathbb{R}^{m \times k}$ and $V \in \mathbb{R}^{k \times n}$ such that $A \approx UV$, where $k$ is the rank and is typically much smaller than $m$ and $n$.

+Continuing the theme of approximation, low-rank matrix factorization (LRMF) is a mathematical technique used in linear algebra and data analysis to approximate a given matrix by decomposing it into two or more lower-dimensional matrices. The fundamental idea is to express a high-dimensional matrix as a product of lower-rank matrices, which can help reduce the complexity of data while preserving its essential structure. Mathematically, given a matrix $A \in \mathbb{R}^{m \times n}$, LRMF seeks matrices $U \in \mathbb{R}^{m \times k}$ and $V \in \mathbb{R}^{k \times n}$ such that $A \approx UV$, where $k$ is the rank and is typically much smaller than $m$ and $n$.
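A common way to compute $U$ and $V$ is truncated singular value decomposition, which gives the best rank-$k$ approximation in the Frobenius norm. The sketch below uses `numpy.linalg.svd`; the helper name `low_rank_factorize`, the matrix sizes, and the synthetic nearly-low-rank matrix are assumptions for illustration.

```python
import numpy as np

def low_rank_factorize(A, k):
    """Return U (m x k) and V (k x n) with A ≈ U @ V via truncated SVD."""
    U_full, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Fold the top-k singular values into U so that A ≈ U @ V.
    U = U_full[:, :k] * s[:k]
    V = Vt[:k, :]
    return U, V

rng = np.random.default_rng(0)
m, n, k = 256, 512, 16
# A matrix that is approximately rank k, plus a little noise.
A = rng.standard_normal((m, k)) @ rng.standard_normal((k, n)) + 0.01 * rng.standard_normal((m, n))
U, V = low_rank_factorize(A, k)
rel_err = np.linalg.norm(A - U @ V) / np.linalg.norm(A)
print("params:", A.size, "->", U.size + V.size)  # params: 131072 -> 12288
print("relative error:", rel_err)
```

Storing $U$ and $V$ takes $k(m+n)$ values instead of $mn$, and applying the layer as `U @ (V @ x)` takes about $k(m+n)$ multiply-adds. Choosing $k$ is the rank-selection tradeoff this section describes: a smaller $k$ saves more but discards more of the matrix.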

##### Background and Benefits

-One of the seminal works in the realm of matrix factorization, particularly in the context of recommendation systems, is the paper by Yehuda Koren, Robert Bell, and Chris Volinsky, ["Matrix Factorization Techniques for Recommender Systems" (2009)]([https://ieeexplore.ieee.org/document/5197422](https://ieeexplore.ieee.org/document/5197422)). The authors delve into various factorization models, providing insights into their efficacy in capturing the underlying patterns in the data and enhancing predictive accuracy in collaborative filtering. LRFM has been widely applied in recommendation systems (such as Netflix, Facebook, etc.), where the user-item interaction matrix is factorized to capture latent factors corresponding to user preferences and item attributes.

+One of the seminal works in the realm of matrix factorization, particularly in the context of recommendation systems, is the paper by Yehuda Koren, Robert Bell, and Chris Volinsky, ["Matrix Factorization Techniques for Recommender Systems" (2009)](https://ieeexplore.ieee.org/document/5197422). The authors examine various factorization models, providing insights into their efficacy in capturing the underlying patterns in the data and enhancing predictive accuracy in collaborative filtering. LRMF has been widely applied in recommendation systems (such as those at Netflix and Facebook), where the user-item interaction matrix is factorized to capture latent factors corresponding to user preferences and item attributes.

The main advantage of low-rank matrix factorization lies in its ability to reduce data dimensionality as shown in the image below where there are fewer parameters to store, making it computationally more efficient and reducing storage requirements at the cost of some additional compute. This can lead to faster computations and more compact data representations, which is especially valuable when dealing with large datasets. Additionally, it may aid in noise reduction and can reveal underlying patterns and relationships in the data.

-

+

##### Challenges

But practitioners and researchers encounter a spectrum of challenges and considerations that necessitate careful attention and strategic approaches. As with any lossy compression technique, we may lose information during this approximation process: choosing the correct rank that balances the information lost and the computational costs is tricky as well and adds an additional hyper-parameter to tune for.

-Low-rank matrix factorization is a valuable tool for dimensionality reduction and making compute fit onto edge devices but, like other techniques, needs to be carefully tuned to the model and task at hand. A key challenge resides in managing the computational complexity inherent to LRMF, especially when grappling with high-dimensional and large-scale data. The computational burden, particularly in the context of real-time applications and massive datasets, remains a significant hurdle for effectively using LRFM.

+Low-rank matrix factorization is a valuable tool for dimensionality reduction and making compute fit onto edge devices but, like other techniques, needs to be carefully tuned to the model and task at hand. A key challenge resides in managing the computational complexity inherent to LRMF, especially when grappling with high-dimensional and large-scale data. The computational burden, particularly in the context of real-time applications and massive datasets, remains a significant hurdle for effectively using LRMF.

Moreover, the conundrum of choosing the optimal rank, \(k\), for the factorization introduces another layer of complexity. The selection of \(k\) inherently involves a trade-off between approximation accuracy and model simplicity, and identifying a rank that adeptly balances these conflicting objectives often demands a combination of domain expertise, empirical validation, and sometimes, heuristic approaches. The challenge is further amplified when the data encompasses noise or when the inherent low-rank structure is not pronounced, making the determination of a suitable \(k\) even more elusive.

@@ -237,13 +237,13 @@ Furthermore, in scenarios where data evolves or grows over time, developing LRMF

Similar to low-rank matrix factorization, more complex models may store weights in higher dimensions, such as tensors: tensor decomposition is the higher-dimensional analogue of matrix factorization, where a model tensor is decomposed into lower rank components, which again are easier to compute on and store but may suffer from the same issues as mentioned above of information loss and nuanced hyperparameter tuning. Mathematically, given a tensor $\mathcal{A}$, tensor decomposition seeks to represent $\mathcal{A}$ as a combination of simpler tensors, facilitating a compressed representation that approximates the original data while minimizing the loss of information.

-The work of Tamara G. Kolda and Brett W. Bader, ["Tensor Decompositions and Applications"](https://epubs.siam.org/doi/abs/10.1137/07070111X) (2009), stands out as a seminal paper in the field of tensor decompositions. The authors provide a comprehensive overview of various tensor decomposition methods, exploring their mathematical underpinnings, algorithms, and a wide array of applications, ranging from signal processing to data mining. Of course, the reason we are discussing it is because it has huge potential for system performance improvements, particularly in the space of TinyML, where throughput and memory footprint savings are crucial to feasibility of deployments .

+The work of Tamara G. Kolda and Brett W. Bader, ["Tensor Decompositions and Applications"](https://epubs.siam.org/doi/abs/10.1137/07070111X) (2009), stands out as a seminal paper in the field of tensor decompositions. The authors provide a comprehensive overview of various tensor decomposition methods, exploring their mathematical underpinnings, algorithms, and a wide array of applications, ranging from signal processing to data mining. We discuss it here because it has significant potential for system performance improvements, particularly in TinyML, where throughput and memory footprint savings are crucial to the feasibility of deployments.
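One concrete tensor decomposition is the Tucker decomposition, which factors a tensor into a small core tensor and one factor matrix per mode. The sketch below computes it with truncated higher-order SVD (HOSVD), one simple algorithm among several; it is not necessarily what a given framework uses. The 3-way restriction, helper names, ranks, and synthetic tensor are assumptions for illustration.

```python
import numpy as np

def unfold(T, mode):
    """Mode-n unfolding: bring axis `mode` to the front and flatten the rest."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def truncated_hosvd(T, ranks):
    """Tucker decomposition of a 3-way tensor by truncated HOSVD.

    Returns a core G of shape `ranks` and factors [U1, U2, U3] such that
    T ≈ G x1 U1 x2 U2 x3 U3.
    """
    factors = []
    for mode, r in enumerate(ranks):
        # Leading left singular vectors of each unfolding span that mode.
        U, _, _ = np.linalg.svd(unfold(T, mode), full_matrices=False)
        factors.append(U[:, :r])
    U1, U2, U3 = factors
    # Project T onto the factor subspaces to obtain the small core tensor.
    G = np.einsum("ijk,ia,jb,kc->abc", T, U1, U2, U3)
    return G, factors

def tucker_reconstruct(G, factors):
    U1, U2, U3 = factors
    return np.einsum("abc,ia,jb,kc->ijk", G, U1, U2, U3)

rng = np.random.default_rng(0)
# Synthetic 32x32x32 tensor with Tucker rank (4, 4, 4).
G0 = rng.standard_normal((4, 4, 4))
A1, A2, A3 = (rng.standard_normal((32, 4)) for _ in range(3))
T = tucker_reconstruct(G0, [A1, A2, A3])

G, factors = truncated_hosvd(T, ranks=(4, 4, 4))
rel_err = np.linalg.norm(T - tucker_reconstruct(G, factors)) / np.linalg.norm(T)
n_params = G.size + sum(U.size for U in factors)
print("params:", T.size, "->", n_params)  # params: 32768 -> 448
print("relative error:", rel_err)
```

Because this synthetic tensor has exact Tucker rank (4, 4, 4), the reconstruction is exact up to floating-point error; real weight tensors are rarely exactly low rank, so the ranks become hyperparameters with the same information-loss tradeoff as in the matrix case.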

-

+

### Edge-Aware Model Design

-Finally, we reach the other end of the gradient, where we specifically make model architecture decisions directly given knowledge of the edge devices we wish to deploy on.

+Finally, we reach the other end of the hardware-software gradient, where we specifically make model architecture decisions directly given knowledge of the edge devices we wish to deploy on.

As covered in previous sections, edge devices are constrained specifically with limitations on memory and parallelizable computations: as such, if there are critical inference speed requirements, computations must be flexible enough to satisfy hardware constraints, something that can be designed at the model architecture level. Furthermore, trying to cram SOTA large ML models onto edge devices even after pruning and compression is generally infeasible purely due to size: the model complexity itself must be chosen with more nuance as to more feasibly fit the device. Edge ML developers have approached this architectural challenge both through designing bespoke edge ML model architectures and through device-aware neural architecture search (NAS), which can more systematically generate feasible on-device model architectures.

@@ -251,17 +251,25 @@ As covered in previous sections, edge devices are constrained specifically with

One edge friendly architecture design is depthwise separable convolutions. Commonly used in deep learning for image processing, it consists of two distinct steps: the first is the depthwise convolution, where each input channel is convolved independently with its own set of learnable filters. This step reduces computational complexity by a significant margin compared to standard convolutions, as it drastically reduces the number of parameters and computations involved. The second step is the pointwise convolution, which combines the output of the depthwise convolution channels through a 1x1 convolution, creating inter-channel interactions. This approach offers several advantages. Pros include reduced model size, faster inference times, and often better generalization due to fewer parameters, making it suitable for mobile and embedded applications. However, depthwise separable convolutions may not capture complex spatial interactions as effectively as standard convolutions and might require more depth (layers) to achieve the same level of representational power, potentially leading to longer training times. Nonetheless, their efficiency in terms of parameters and computation makes them a popular choice in modern convolutional neural network architectures.
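The parameter savings of depthwise separable convolutions can be checked with a short NumPy sketch. It assumes "valid" padding, stride 1, and no bias terms; `depthwise_separable_conv` and `param_counts` are hypothetical helpers, and the convolution is written as cross-correlation, as deep learning frameworks usually implement it.

```python
import numpy as np

def depthwise_separable_conv(x, dw, pw):
    """x: (C_in, H, W); dw: (C_in, k, k), one filter per input channel;
    pw: (C_out, C_in), the 1x1 pointwise weights. Valid padding, stride 1."""
    C, H, W = x.shape
    k = dw.shape[-1]
    Ho, Wo = H - k + 1, W - k + 1
    # Depthwise step: each channel is filtered only by its own k x k kernel.
    depth = np.zeros((C, Ho, Wo))
    for i in range(k):
        for j in range(k):
            depth += dw[:, i, j][:, None, None] * x[:, i:i + Ho, j:j + Wo]
    # Pointwise step: a 1x1 convolution mixes channels at every pixel.
    return np.einsum("oc,chw->ohw", pw, depth)

def param_counts(c_in, c_out, k):
    """Weights in a standard k x k conv vs. its depthwise separable version."""
    standard = c_in * c_out * k * k
    separable = c_in * k * k + c_in * c_out
    return standard, separable

rng = np.random.default_rng(0)
x = rng.standard_normal((64, 16, 16))
out = depthwise_separable_conv(x, rng.standard_normal((64, 3, 3)), rng.standard_normal((128, 64)))
print(out.shape)                  # (128, 14, 14)
print(param_counts(64, 128, 3))   # (73728, 8768)
```

The separable layer is equivalent to a standard convolution whose kernel is constrained to factor as `pw[o, c] * dw[c, i, j]`; that constraint is the source of both the savings and the reduced ability to capture complex spatial interactions noted above.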

-

+

#### Example Model Architectures

-In this vein, a number of recent architectures have been, from inception, specifically designed for maximizing accuracy on an edge deployment, notably SqueezeNet, MobileNet, and EfficientNet. [SqueezeNet]([https://arxiv.org/abs/1602.07360](https://arxiv.org/abs/1602.07360)), for instance, utilizes a compact architecture with 1x1 convolutions and fire modules to minimize the number of parameters while maintaining strong accuracy. [MobileNet]([https://arxiv.org/abs/1704.04861](https://arxiv.org/abs/1704.04861)), on the other hand, employs the aforementioned depthwise separable convolutions to reduce both computation and model size. [EfficientNet]([https://arxiv.org/abs/1905.11946](https://arxiv.org/abs/1905.11946)) takes a different approach by optimizing network scaling (i.e. varying the depth, width and resolution of a network) and compound scaling, a more nuanced variation network scaling, to achieve superior performance with fewer parameters. These models are essential in the context of edge computing where limited processing power and memory require lightweight yet effective models that can efficiently perform tasks such as image recognition, object detection, and more. Their design principles showcase the importance of intentionally tailored model architecture for edge computing, where performance and efficiency must fit within constraints.

+In this vein, a number of recent architectures have been designed from inception to maximize accuracy under edge deployment constraints, notably SqueezeNet, MobileNet, and EfficientNet.

+

+* [SqueezeNet](https://arxiv.org/abs/1602.07360) by @iandola2016squeezenet, for instance, uses a compact architecture with 1x1 convolutions and fire modules to minimize the number of parameters while maintaining strong accuracy.

+

+* [MobileNet](https://arxiv.org/abs/1704.04861) by @howard2017mobilenets, on the other hand, employs the aforementioned depthwise separable convolutions to reduce both computation and model size.

+

+* [EfficientNet](https://arxiv.org/abs/1905.11946) by @tan2020efficientnet takes a different approach by optimizing network scaling (i.e., varying the depth, width, and resolution of a network) through compound scaling, a more nuanced variant of network scaling that scales all three dimensions together, to achieve superior performance with fewer parameters.

+

+These models are essential in the context of edge computing where limited processing power and memory require lightweight yet effective models that can efficiently perform tasks such as image recognition, object detection, and more. Their design principles showcase the importance of intentionally tailored model architecture for edge computing, where performance and efficiency must fit within constraints.
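EfficientNet's compound scaling can be summarized in a few lines. In the EfficientNet paper, depth, width, and input resolution are scaled by $\alpha^\phi$, $\beta^\phi$, and $\gamma^\phi$ for a user-chosen compound coefficient $\phi$, under the constraint $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 2$, so FLOPs grow by roughly $2^\phi$; the paper reports $\alpha = 1.2$, $\beta = 1.1$, $\gamma = 1.15$ for its B0 baseline. The sketch below applies those multipliers to a hypothetical baseline (16 layers, 32 channels, 224-pixel inputs) and ignores the rounding rules the released models use.

```python
import math

# Coefficients reported in the EfficientNet paper for the B0 baseline.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(base_depth: int, base_width: int, base_resolution: int, phi: float):
    """Scale depth, width, and resolution together with one compound coefficient phi."""
    depth = math.ceil(base_depth * ALPHA**phi)          # more layers
    width = math.ceil(base_width * BETA**phi)           # more channels per layer
    resolution = round(base_resolution * GAMMA**phi)    # larger input images
    # FLOPs grow roughly with depth * width^2 * resolution^2.
    flops_multiplier = (ALPHA * BETA**2 * GAMMA**2) ** phi
    return depth, width, resolution, flops_multiplier


if __name__ == "__main__":
    for phi in range(4):
        d, w, r, f = compound_scale(16, 32, 224, phi)  # hypothetical baseline
        print(f"phi={phi}: depth={d}, width={w}, resolution={r}, ~{f:.2f}x FLOPs")
```

The design choice is that a single knob, $\phi$, grows all three dimensions in a fixed ratio instead of tuning each one separately.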

#### Streamlining Model Architecture Search

-Finally, systematized pipelines for searching for performant edge-compatible model architectures are possible through frameworks like [TinyNAS](https://arxiv.org/abs/2007.10319) and [MorphNet]([https://arxiv.org/abs/1711.06798](https://arxiv.org/abs/1711.06798)).

+Finally, systematized pipelines for finding performant, edge-compatible model architectures are available through frameworks like [TinyNAS](https://arxiv.org/abs/2007.10319) by @lin2020mcunet and [MorphNet](https://arxiv.org/abs/1711.06798) by @gordon2018morphnet.

-TinyNAS is an innovative neural architecture search framework introduced in the MCUNet paper, designed to efficiently discover lightweight neural network architectures for edge devices with limited computational resources. Leveraging reinforcement learning and a compact search space of micro neural modules, TinyNAS optimizes for both accuracy and latency, enabling the deployment of deep learning models on microcontrollers, IoT devices, and other resource-constrained platforms. Specifically, TinyNAS, in conjunction with a network optimizer TinyEngine, generates different search spaces by scaling the input resolution and the model width of a model, then collects the computation FLOPs distribution of satisfying networks within the search space to evaluate its priority. TinyNAS relies on the assumption that a search space that accommodates higher FLOPs under memory constraint can produce higher accuracy models, something that the authors verified in practice in their work. In empirical performance, TinyEngine reduced models the peak memory usage by around 3.4 times and accelerated inference by 1.7 to 3.3 times compared to [TFLite]([https://www.tensorflow.org/lite](https://www.tensorflow.org/lite)) and [CMSIS-NN]([https://www.keil.com/pack/doc/CMSIS/NN/html/index.html](https://www.keil.com/pack/doc/CMSIS/NN/html/index.html))..

+TinyNAS is a neural architecture search framework introduced in the MCUNet paper, designed to efficiently discover lightweight neural network architectures for edge devices with limited computational resources. It follows a two-stage approach that first optimizes the search space to fit the resource constraints and then specializes the network architecture within that optimized space, targeting both accuracy and latency so that deep learning models can be deployed on microcontrollers, IoT devices, and other resource-constrained platforms. Specifically, TinyNAS, co-designed with the inference engine TinyEngine, generates different search spaces by scaling the input resolution and the width of the model, then collects the FLOPs distribution of the networks in each search space that satisfy the constraints to evaluate that space's priority. TinyNAS relies on the assumption that a search space that accommodates higher FLOPs under a memory constraint can produce higher-accuracy models, something the authors verified empirically. In empirical performance, TinyEngine reduced the peak memory usage of models by around 3.4 times and accelerated inference by 1.7 to 3.3 times compared to [TFLite](https://www.tensorflow.org/lite) and [CMSIS-NN](https://www.keil.com/pack/doc/CMSIS/NN/html/index.html).

Similarly, MorphNet is a neural network optimization framework designed to automatically reshape the architecture of deep neural networks, optimizing them for specific deployment requirements. It achieves this by alternating between two steps. First, it shrinks the network by training with a resource-weighted sparsifying regularizer on activations, which pushes the network to drop neurons that are costly under the targeted resource (for example, FLOPs or model size) and contribute little to accuracy. Second, it expands all layers by a uniform width multiplier to use the freed-up budget. Repeating these steps adapts the network to computational constraints such as model size and latency, which are extremely prevalent in edge computing usage, while balancing the trade-off between model size and performance. This method allows deep learning practitioners to automatically tailor neural network architectures to specific application and hardware requirements, ensuring efficient and effective deployment across various platforms.

@@ -272,13 +280,13 @@ TinyNAS and MorphNet represent a few of the many significant advancements in the

Numerics representation involves a myriad of considerations, including, but not limited to, the precision of numbers, their encoding formats, and the arithmetic operations they support. It invariably involves a rich array of trade-offs, where practitioners are tasked with navigating between numerical accuracy and computational efficiency. For instance, while lower-precision numerics may offer the allure of reduced memory usage and expedited computations, they concurrently present challenges pertaining to numerical stability and potential degradation of model accuracy.

-### Motivation

+#### Motivation

The need for efficient numerics representation arises because efficient model optimization alone often falls short when adapting models for deployment on low-powered edge devices operating under stringent constraints.

Beyond minimizing memory demands, efficient numerics representation offers several fundamental benefits. By reducing computational intensity, efficient numerics can increase computational speed, allowing more complex models to run on low-powered devices. Reducing the bit precision of weights and activations in heavily over-parameterized models shrinks model size for edge devices without significantly harming the model's predictive accuracy. Because neural networks are organized in layers, efficient numerics can also vary numeric precision across layers, reducing precision in layers that are robust to it while preserving higher precision in sensitive layers.

-In this segment, we'll delve into how practitioners can harness the principles of hardware-software co-design at the lowest levels of a model to facilitate compatibility with edge devices. Kicking off with an introduction to the numerics, we will examine its implications for device memory and computational complexity. Subsequently, we will embark on a discussion regarding the trade-offs entailed in adopting this strategy, followed by a deep dive into a paramount method of efficient numerics: quantization.

+In this section, we will dive into how practitioners can harness the principles of hardware-software co-design at the lowest levels of a model to facilitate compatibility with edge devices. Starting with an introduction to numerics, we will examine their implications for device memory and computational complexity. We will then discuss the trade-offs involved in adopting this strategy, followed by a deep dive into a key method of efficient numerics: quantization.

### The Basics

@@ -286,7 +294,7 @@ In this segment, we'll delve into how practitioners can harness the principles o

Numerical data, the bedrock upon which machine learning models stand, manifest in two primary forms. These are integers and floating point numbers.

-**Integers** : Whole numbers, devoid of fractional components, integers (e.g., -3, 0, 42) are key in scenarios demanding discrete values. For instance, in ML, class labels in a classification task might be represented as integers, where "cat", "dog", and "bird" could be encoded as 0, 1, and 2 respectively.

+**Integers:** Integers are whole numbers without fractional components (e.g., -3, 0, 42) and are key in scenarios demanding discrete values. For instance, in ML, class labels in a classification task might be represented as integers, where "cat", "dog", and "bird" could be encoded as 0, 1, and 2 respectively.

**Floating-Point Numbers:** Floating-point numbers (e.g., -3.14, 0.01, 2.71828) represent real numbers, including values with fractional components. In ML models, weights might be initialized with small floating-point values, such as 0.001 or -0.045, to begin the training process. Currently, there are four popular precision formats, discussed below.

@@ -304,25 +312,19 @@ Precision, delineating the exactness with which a number is represented, bifurca

**Bfloat16:** Brain Floating-Point Format, or Bfloat16, also uses 16 bits but allocates them differently from FP16: 1 bit for the sign, 8 bits for the exponent, and 7 bits for the fraction. Because its 8 exponent bits match FP32, it covers the same dynamic range as FP32 at lower precision. This format, developed by Google, prioritizes a larger exponent range over precision, making it particularly useful in deep learning applications where dynamic range is crucial.
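Because Bfloat16 keeps FP32's sign and exponent layout, a Bfloat16 value is essentially the top 16 bits of an FP32 value. The following standard-library sketch shows this by masking off the low 16 bits; the helper names are hypothetical, and real converters usually round to nearest even rather than truncate as done here.

```python
import struct


def float32_bits(x: float) -> int:
    """Return the 32-bit IEEE 754 single-precision bit pattern of x."""
    return struct.unpack(">I", struct.pack(">f", x))[0]


def bfloat16_truncate(x: float) -> float:
    """Round-trip x through bfloat16 by keeping the top 16 bits of its float32 pattern.

    Those 16 bits are exactly bfloat16's 1 sign, 8 exponent, and 7 fraction bits.
    Simplification: this truncates; production converters usually round to nearest even.
    """
    bits = float32_bits(x) & 0xFFFF0000
    return struct.unpack(">f", struct.pack(">I", bits))[0]


if __name__ == "__main__":
    for x in [3.14159, 1e-30, 1e38]:
        bf16 = float32_bits(x) >> 16
        print(f"{x!r:>10} -> bits {bf16:016b} -> {bfloat16_truncate(x)!r}")
```

For example, 3.14159 becomes 3.140625 (bits `0100000001001001`), while 1e38 stays finite; FP16, whose largest finite value is 65504, cannot represent it at all.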

-](https://storage.googleapis.com/gweb-cloudblog-publish/images/Three_floating-point_formats.max-624x261.png)

+

**Integer:** Integer representations commonly use 8, 4, or 2 bits. They are often used during the inference phase of neural networks, where the model's weights and activations are quantized to these lower precisions. Integer arithmetic is deterministic and offers significant speed and memory advantages over floating-point representations. For many inference tasks, especially on edge devices, the slight loss in accuracy due to quantization is often acceptable given the efficiency gains. An extreme form of integer numerics is found in binary neural networks (BNNs), where weights and activations are constrained to one of two values: either +1 or -1.
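A minimal NumPy sketch of BNN-style weight binarization follows. Mapping zero to +1 is an assumed convention, and the scaled variant, which multiplies the signs by one shared factor, is a common refinement rather than something every BNN uses; the function names are hypothetical.

```python
import numpy as np


def binarize(w):
    """Constrain each weight to +1 or -1 by its sign (zero maps to +1 by convention here)."""
    return np.where(np.asarray(w) >= 0, 1.0, -1.0)


def scaled_binarize(w):
    """Return (alpha, b) so that w is approximated by alpha * b with b in {+1, -1}.

    For b = sign(w), alpha = mean(|w|) minimizes ||w - alpha * b||^2.
    """
    w = np.asarray(w, dtype=np.float64)
    b = binarize(w)
    alpha = float(np.mean(np.abs(w)))
    return alpha, b


if __name__ == "__main__":
    w = np.array([0.5, -0.2, 0.0, -1.3])  # hypothetical weights
    alpha, b = scaled_binarize(w)
    print(b, alpha)  # each weight now needs one bit, plus one shared scale
```

The choice $\alpha = \text{mean}(|w|)$ follows from setting the derivative of $\sum_i (w_i - \alpha b_i)^2$ to zero with $b_i = \text{sign}(w_i)$.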

-| Precision | Pros | Cons |

-

-|------------|-----------------------------------------------------------|--------------------------------------------------|

-

-| **FP32** (Floating Point 32-bit) | - Standard precision used in most deep learning frameworks.\ - High accuracy due to ample representational capacity.\ - Well-suited for training. | - High memory usage.\ - Slower inference times compared to quantized models.\ - Higher energy consumption. |

-

-| **FP16** (Floating Point 16-bit) | - Reduces memory usage compared to FP32.\ - Speeds up computations on hardware that supports FP16.\ - Often used in mixed-precision training to balance speed and accuracy. | - Lower representational capacity compared to FP32.\ - Risk of numerical instability in some models or layers. |

-

-| **INT8** (8-bit Integer) | - Significantly reduced memory footprint compared to floating-point representations.\ - Faster inference if hardware supports INT8 computations.\ - Suitable for many post-training quantization scenarios. | - Quantization can lead to some accuracy loss.\ - Requires careful calibration during quantization to minimize accuracy degradation. |

+| **Precision** | **Pros** | **Cons** |
+|---|---|---|
+| **FP32** (Floating Point 32-bit) | • Standard precision used in most deep learning frameworks.<br>• High accuracy due to ample representational capacity.<br>• Well-suited for training. | • High memory usage.<br>• Slower inference times compared to quantized models.<br>• Higher energy consumption. |
+| **FP16** (Floating Point 16-bit) | • Reduces memory usage compared to FP32.<br>• Speeds up computations on hardware that supports FP16.<br>• Often used in mixed-precision training to balance speed and accuracy. | • Lower representational capacity compared to FP32.<br>• Risk of numerical instability in some models or layers. |
+| **INT8** (8-bit Integer) | • Significantly reduced memory footprint compared to floating-point representations.<br>• Faster inference if hardware supports INT8 computations.<br>• Suitable for many post-training quantization scenarios. | • Quantization can lead to some accuracy loss.<br>• Requires careful calibration during quantization to minimize accuracy degradation. |
+| **INT4** (4-bit Integer) | • Even lower memory usage than INT8.<br>• Further speed-up potential for inference. | • Higher risk of accuracy loss compared to INT8.<br>• Calibration during quantization becomes more critical. |
+| **Binary** | • Minimal memory footprint (only 1 bit per parameter).<br>• Extremely fast inference due to bitwise operations.<br>• Power efficient. | • Significant accuracy drop for many tasks.<br>• Complex training dynamics due to extreme quantization. |
+| **Ternary** | • Low memory usage, though slightly more than binary.<br>• Offers a middle ground between representation and efficiency. | • Accuracy might still be lower than higher-precision models.<br>• Training dynamics can be complex. |

-| **INT4** (4-bit Integer) | - Even lower memory usage than INT8.\ - Further speed-up potential for inference. | - Higher risk of accuracy loss compared to INT8.\ - Calibration during quantization becomes more critical. |

-

-| **Binary** | - Minimal memory footprint (only 1 bit per parameter).\ - Extremely fast inference due to bitwise operations.\ - Power efficient. | - Significant accuracy drop for many tasks.\ - Complex training dynamics due to extreme quantization. |

-

-| **Ternary** | - Low memory usage but slightly more than binary.\ - Offers a middle ground between representation and efficiency. | - Accuracy might still be lower than higher precision models.\ - Training dynamics can be complex. |

#### Numeric Encoding and Storage

@@ -371,17 +373,18 @@ Numerical precision directly impacts computational complexity, influencing the t

In addition to pure runtimes, there is also a concern over energy efficiency. Not all numerical computations are created equal from the underlying hardware standpoint. Some numerical operations are more energy efficient than others. For example, the figure below shows that integer addition is much more energy efficient than integer multiplication.

-

-

-Source: [https://ieeexplore.ieee.org/document/6757323](https://ieeexplore.ieee.org/document/6757323)

-

+

+

+

+

+

#### Hardware Compatibility

Ensuring compatibility and optimized performance across diverse hardware platforms is another challenge in numerics representation. Different hardware, such as CPUs, GPUs, TPUs, and FPGAs, have varying capabilities and optimizations for handling different numeric precisions. For example, certain GPUs might be optimized for Float32 computations, while others might provide accelerations for Float16. Developing and optimizing ML models that can leverage the specific numerical capabilities of different hardware, while ensuring that the model maintains its accuracy and robustness, requires careful consideration and potentially additional development and testing efforts.

-Precision and Accuracy Trade-offs

+#### Precision and Accuracy Trade-offs

The trade-off between numerical precision and model accuracy is a nuanced challenge in numerics representation. Utilizing lower-precision numerics, such as Float16, might conserve memory and expedite computations but can also introduce issues like quantization error and reduced numerical range. For instance, training a model with Float16 might introduce challenges in representing very small gradient values, potentially impacting the convergence and stability of the training process. Furthermore, in certain applications, such as scientific simulations or financial computations, where high precision is paramount, the use of lower-precision numerics might not be permissible due to the risk of accruing significant errors.
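The small-gradient problem is easy to reproduce. FP16's smallest positive (subnormal) value is $2^{-24} \approx 6 \times 10^{-8}$, so anything much smaller rounds to zero. This NumPy sketch shows a hypothetical gradient of $10^{-8}$ vanishing in FP16 and surviving when it is first multiplied by a scale factor, the idea behind loss scaling in mixed-precision training; the factor 1024 and the helper name are illustrative assumptions.

```python
import numpy as np


def fp16_roundtrip(x: float, scale: float = 1.0) -> float:
    """Store x * scale in FP16, then undo the scale in FP32 (a loss-scaling-style round trip)."""
    return float(np.float32(np.float16(x * scale)) / np.float32(scale))


if __name__ == "__main__":
    grad = 1e-8  # hypothetical tiny gradient value
    print("FP32:", np.float32(grad))                               # still representable
    print("FP16:", fp16_roundtrip(grad))                            # underflows to 0.0
    print("FP16 with x1024 scale:", fp16_roundtrip(grad, 1024.0))   # survives, approximately
```

With the scale applied, the recovered value is within a fraction of a percent of the original, whereas the unscaled cast loses it entirely.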

@@ -428,13 +431,13 @@ These examples illustrate diverse scenarios where the challenges of numerics rep

### Quantization {#sec-quant}

-Quantization is prevalent in various scientific and technological domains, essentially involves the **mapping or constraining of a continuous set or range into a discrete counterpart to minimize the number of bits required**.

+Quantization is prevalent in various scientific and technological domains, and it essentially involves the **mapping or constraining of a continuous set or range into a discrete counterpart to minimize the number of bits required**.

#### History

Historically, the idea of quantization is not novel and can be traced back to ancient times, particularly in the realm of music and astronomy. In music, the Greeks utilized a system of tetrachords, segmenting the continuous range of pitches into discrete notes, thereby quantizing musical sounds. In astronomy and physics, the concept of quantization was present in the discretized models of planetary orbits, as seen in the Ptolemaic and Copernican systems.

-During the 1800s, quantization-based discretization was used to approximate the calculation of integrals, and further used to investigate the impact of rounding errors on the integration result. However, the term "quantization" was firmly embedded in scientific literature with the advent of quantum mechanics in the early 20th century, where it was used to describe the phenomenon that certain physical properties, such as energy, exist only in discrete, quantized states. This principle was pivotal in explaining phenomena at the atomic and subatomic levels. In the digital age, quantization found its application in signal processing, where continuous signals are converted into a discrete digital form, and in numerical algorithms, where computations on real-valued numbers are performed with finite-precision arithmetic.

+During the 1800s, quantization-based discretization was used to approximate the calculation of integrals, and further used to investigate the impact of rounding errors on the integration result. However, the term "quantization" became firmly embedded in scientific literature with the advent of quantum mechanics in the early 20th century, where it was used to describe the phenomenon that certain physical properties, such as energy, exist only in discrete, quantized states. This principle was pivotal in explaining phenomena at the atomic and subatomic levels. In the digital age, quantization found its application in signal processing, where continuous signals are converted into a discrete digital form (Lloyd's k-means algorithm, originally developed for quantization in pulse-code modulation, is a classic example), and in numerical algorithms, where computations on real-valued numbers are performed with finite-precision arithmetic.

Extending upon this second application and relevant to this section, it is used in computer science to optimize neural networks by reducing the precision of the network weights. Thus, quantization, as a concept, has been subtly woven into the tapestry of scientific and technological development, evolving and adapting to the needs and discoveries of various epochs.

@@ -446,15 +449,13 @@ In signal processing, the continuous sine wave can be quantized into discrete va

-In the quantized version shown below, the continuous sine wave is sampled at regular intervals (in this case, every \(\frac{\pi}{4}\) radians), and only these sampled values are represented in the digital version of the signal. The step-wise lines between the points show one way to represent the quantized signal in a piecewise-constant form. This is a simplified example of how analog-to-digital conversion works, where a continuous signal is mapped to a discrete set of values, enabling it to be represented and processed digitally.

+In the quantized version shown below, the continuous sine wave is sampled at regular intervals (in this case, every $\frac{\pi}{4}$ radians), and only these sampled values are represented in the digital version of the signal. The step-wise lines between the points show one way to represent the quantized signal in a piecewise-constant form. This is a simplified example of how analog-to-digital conversion works, where a continuous signal is mapped to a discrete set of values, enabling it to be represented and processed digitally.
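The two halves of analog-to-digital conversion can be sketched in a few lines of NumPy. The sampling step of $\frac{\pi}{4}$ comes from the example above; rounding each sample's amplitude to a grid of 0.25 is an added, hypothetical resolution that shows how the sampled values are then mapped onto a finite set of levels.

```python
import numpy as np


def sample_and_quantize(step=np.pi / 4, level_step=0.25):
    """Sample one period of sin(t) every `step` radians, then round each sample
    to the nearest multiple of `level_step` (the amplitude grid is a hypothetical choice)."""
    t = np.arange(0.0, 2 * np.pi + 1e-9, step)               # sampling instants, endpoint included
    samples = np.sin(t)                                        # exact values at those instants
    quantized = np.round(samples / level_step) * level_step    # snap to discrete levels
    return t, samples, quantized


if __name__ == "__main__":
    t, samples, quantized = sample_and_quantize()
    for ti, s, q in zip(t, samples, quantized):
        print(f"t={ti:.3f}  sin={s:+.4f}  quantized={q:+.2f}")
```

Holding each quantized value constant until the next sampling instant yields the step-wise, piecewise-constant signal described above; the amplitude error per sample is at most half a level (0.125 here).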

-Returning to the context of Machine Learning (ML), quantization refers to the process of constraining the possible values that numerical parameters (such as weights and biases) can take to a discrete set, thereby reducing the precision of the parameters and consequently, the model's memory footprint. When properly implemented, quantization can reduce model size by up to 4x and improve inference latency and throughput by up to 2-3x. For example, an Image Classification model like ResNet-50 can be compressed from 96MB down to 24MB with 8-bit quantization.There is typically less than 1% loss in model accuracy from well tuned quantization. Accuracy can often be recovered by re-training the quantized model with quantization aware training techniques. Therefore, this technique has emerged to be very important in deploying ML models to resource-constrained environments, such as mobile devices, IoT devices, and edge computing platforms, where computational resources (memory and processing power) are limited.

-

-

+Returning to the context of machine learning (ML), quantization refers to the process of constraining the possible values that numerical parameters (such as weights and biases) can take to a discrete set, thereby reducing the precision of the parameters and, consequently, the model's memory footprint. When properly implemented, quantization can reduce model size by up to 4x and improve inference latency and throughput by up to 2-3x. For example, an image classification model like ResNet-50 can be compressed from 96MB down to 24MB with 8-bit quantization. Well-tuned quantization typically costs less than 1% in model accuracy, and accuracy can often be recovered by re-training the quantized model with quantization-aware training techniques. As a result, quantization has become very important for deploying ML models to resource-constrained environments, such as mobile devices, IoT devices, and edge computing platforms, where computational resources (memory and processing power) are limited.
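The "up to 4x" figure follows directly from bit widths: weight storage scales linearly with bits per parameter. This sketch assumes roughly 25.6 million parameters, the commonly cited ResNet-50 count, and ignores quantization metadata (scales, zero points) and file-format overhead; the helper name is hypothetical.

```python
def weight_storage_mb(num_params: int, bits_per_param: int) -> float:
    """Bytes needed for the weights alone, in megabytes (10**6 bytes).

    Ignores quantization metadata such as scales and zero points.
    """
    return num_params * bits_per_param / 8 / 1e6


if __name__ == "__main__":
    num_params = 25_600_000  # approximate ResNet-50 parameter count (assumption)
    for name, bits in [("FP32", 32), ("FP16", 16), ("INT8", 8), ("INT4", 4)]:
        print(f"{name}: {weight_storage_mb(num_params, bits):.1f} MB")
```

Here FP32 to INT8 goes from 102.4 MB to 25.6 MB (about 97.7 MiB to 24.4 MiB), the same 4x ratio as the example above; exact figures depend on the parameter count and on whether MB means $10^6$ or $2^{20}$ bytes.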

-[Quantization figure - Example figure showing reduced model size from quantization]()

+

There are several dimensions to quantization such as uniformity, stochasticity (or determinism), symmetry, granularity (across layers/channels/groups or even within channels), range calibration considerations (static vs dynamic), and fine-tuning methods (QAT, PTQ, ZSQ). We examine these below.

@@ -466,29 +467,33 @@ Uniform quantization involves mapping continuous or high-precision values to a l

The process for implementing uniform quantization starts with choosing a range of real numbers to be quantized. The next step is to select a quantization function and map the real values to the integers representable by the bit-width of the quantized representation. For instance, a popular choice for a quantization function is:

+$$

Q(r) = \text{Int}(r/S) - Z

+$$

where Q is the quantization operator, r is a real valued input (in our case, an activation or weight), S is a real valued scaling factor, and Z is an integer zero point. The Int function maps a real value to an integer value through a rounding operation. Through this function, we have effectively mapped real values r to some integer values, resulting in quantized levels which are uniformly spaced.
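A minimal NumPy sketch of this quantizer follows, assuming an asymmetric clipping range $[\alpha, \beta]$ mapped onto unsigned `num_bits`-bit integers. The formula leaves the choice of $S$ and $Z$ open; here $S = (\beta - \alpha)/(2^b - 1)$ and $Z$ is picked so that $\alpha$ lands on level 0. The clamp to the integer range is an added step that the formula itself does not include, and the helper names are hypothetical.

```python
import numpy as np


def affine_qparams(alpha: float, beta: float, num_bits: int = 8):
    """Scale S and zero point Z for Q(r) = Int(r/S) - Z over the clipping range [alpha, beta].

    Assumes an unsigned target range [0, 2**num_bits - 1].
    """
    q_max = 2**num_bits - 1
    S = (beta - alpha) / q_max        # real-valued width of one quantization step
    Z = int(np.round(alpha / S))      # chosen so that alpha maps to level 0
    return S, Z


def quantize(r, S, Z, num_bits=8):
    """Q(r) = Int(r/S) - Z, with Int implemented as round-to-nearest."""
    q = np.round(np.asarray(r, dtype=np.float64) / S) - Z
    # Clamping is not part of the formula above, but practical implementations
    # need it for inputs that fall outside [alpha, beta].
    return np.clip(q, 0, 2**num_bits - 1).astype(np.int64)


if __name__ == "__main__":
    S, Z = affine_qparams(-1.0, 1.0)   # hypothetical clipping range
    print("S =", S, "Z =", Z)
    print(quantize(np.linspace(-1.0, 1.0, 5), S, Z))
```

Because every integer level is exactly $S$ apart in the real domain, the resulting levels are uniformly spaced, and the real value 0.0 always maps to the integer $-Z$.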

When the need arises for practitioners to retrieve the original higher precision values, real values r can be recovered from quantized values through an operation known as **dequantization**. In the example above, this would mean performing the following operation on our quantized value:

-r ̃ = S(Q(r) + Z) (~ should be on top, ignore)

+$$

+\bar{r} = S(Q(r) + Z)

+$$

-As discussed, some precision in the real value is lost by quantization. In this case, the recovered value r ̃ will not exactly match r due to the rounding operation. This is an important tradeoff to note; however, in many successful uses of quantization, the loss of precision can be negligible and the test accuracy remains high. Despite this, uniform quantization continues to be the current de-facto choice due to its simplicity and efficient mapping to hardware.

+As discussed, some precision in the real value is lost by quantization. In this case, the recovered value $\bar{r}$ will not exactly match $r$ due to the rounding operation. This is an important trade-off to note; however, in many successful uses of quantization, the loss of precision can be negligible and test accuracy remains high. Uniform quantization remains the de facto choice due to its simplicity and efficient mapping to hardware.
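The round trip can be checked numerically. This sketch repeats the quantizer $Q(r) = \text{Int}(r/S) - Z$ (with an added clamp) and adds dequantization $\bar{r} = S(Q(r) + Z)$; the range $[-1, 1]$ with 8 unsigned bits and the random test inputs are hypothetical. For inputs inside the clipping range, the rounding error is at most half a step, $S/2$, and the real value 0 is recovered exactly because $Q(0) = -Z$.

```python
import numpy as np


def quantize(r, S, Z, num_bits=8):
    """Q(r) = Int(r/S) - Z with round-to-nearest, clamped to [0, 2**num_bits - 1]."""
    q = np.round(np.asarray(r, dtype=np.float64) / S) - Z
    return np.clip(q, 0, 2**num_bits - 1).astype(np.int64)


def dequantize(q, S, Z):
    """r_bar = S * (Q(r) + Z)."""
    return S * (np.asarray(q, dtype=np.float64) + Z)


if __name__ == "__main__":
    S = 2.0 / 255                   # hypothetical range [-1, 1] over 8 unsigned bits
    Z = int(np.round(-1.0 / S))     # zero point chosen so that -1.0 maps to level 0
    r = np.random.default_rng(0).uniform(-0.9, 0.9, size=1000)
    r_bar = dequantize(quantize(r, S, Z), S, Z)
    print("max |r - r_bar| =", np.max(np.abs(r - r_bar)), " S/2 =", S / 2)
```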

#### Non-uniform Quantization

Non-uniform quantization, on the other hand, does not maintain a consistent interval between quantized values. This approach might be used to allocate more possible discrete values in regions where the parameter values are more densely populated, thereby preserving more detail where it is most needed. For instance, in bell-shaped distributions of weights with long tails, a set of weights in a model predominantly lies within a certain range; thus, more quantization levels might be allocated to that range to preserve finer details, enabling us to better capture information. However, one major weakness of non-uniform quantization is that it requires dequantization before higher precision computations due to its non-uniformity, restricting its ability to accelerate computation compared to uniform quantization.

-Typically, a rule-based non-uniform quantization uses a logarithmic distribution of exponentially increasing steps and levels as opposed to linearly. Another popular branch lies in binary-code-based quantization where real number vectors are quantized into binary vectors with a scaling factor. Notably, there is no closed form solution for minimizing errors between the real value and non-uniformly quantized value, so most quantizations in this field rely on heuristic solutions. For instance, recent work formulates non-uniform quantization as an optimization problem where the quantization steps/levels in quantizer Q are adjusted to minimize the difference between the original tensor and quantized counterpart.

+Typically, rule-based non-uniform quantization uses a logarithmic distribution of exponentially increasing steps and levels rather than linearly spaced ones. Another popular branch is binary-code-based quantization, where real-valued vectors are quantized into binary vectors with a scaling factor. Notably, there is no closed-form solution for minimizing the error between the real value and the non-uniformly quantized value, so most methods in this area rely on heuristic solutions. For instance, [recent work](https://arxiv.org/abs/1802.00150) by @xu2018alternating formulates non-uniform quantization as an optimization problem where the quantization steps/levels in quantizer $Q$ are adjusted to minimize the difference between the original tensor and its quantized counterpart:

-\min\_Q ||Q(r)-r||^2

+$$

+\min_Q ||Q(r)-r||^2

+$$
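A minimal sketch of the rule-based logarithmic scheme, assuming power-of-two levels: each nonzero weight keeps its sign, and its magnitude is snapped to the nearest power of two in the log domain. The exponent range `[min_exp, max_exp]` is a hypothetical choice, and magnitudes below $2^{\text{min\_exp}}$ are snapped up to that smallest level rather than to zero; the function name is also hypothetical.

```python
import numpy as np


def log2_quantize(w, min_exp=-8, max_exp=0):
    """Snap each nonzero weight to sign(w) * 2**k, with integer k in [min_exp, max_exp].

    k is log2|w| rounded to the nearest integer; zeros stay zero.
    """
    w = np.asarray(w, dtype=np.float64)
    out = np.zeros_like(w)
    nz = w != 0                                   # avoid log2(0) = -inf
    k = np.clip(np.round(np.log2(np.abs(w[nz]))), min_exp, max_exp)
    out[nz] = np.sign(w[nz]) * np.exp2(k)
    return out


if __name__ == "__main__":
    w = np.array([0.3, -0.7, 0.0, 1.0, 0.04])     # hypothetical weights
    print(log2_quantize(w))
```

The levels are dense near zero and sparse for large magnitudes, which suits bell-shaped weight distributions, and multiplying by a power of two can be implemented as a bit shift on integer hardware.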

Furthermore, learnable quantizers can be jointly trained with model parameters, with the quantization steps/levels generally trained through iterative optimization or gradient descent. Additionally, clustering has been used to alleviate information loss from quantization. While capable of capturing finer detail, non-uniform quantization schemes can be difficult to deploy efficiently on general computation hardware, making them less preferred than methods that use uniform quantization.
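Clustering-based quantization can be sketched with Lloyd's k-means algorithm in one dimension. It alternates between assigning each weight to its nearest level and moving each level to the mean of its assigned weights, a heuristic for the $\min_Q ||Q(r)-r||^2$ objective that never increases the error from one step to the next. Only the `k`-entry codebook and a $\log_2 k$-bit index per weight need to be stored. The uniform-grid initialization, iteration count, and function name are assumptions, not taken from any specific paper.

```python
import numpy as np


def kmeans_quantize(w, k=4, iters=25):
    """Lloyd's algorithm in 1-D: return a codebook of k levels and, for each weight,
    the index of its level."""
    w = np.asarray(w, dtype=np.float64).ravel()
    codebook = np.linspace(w.min(), w.max(), k)   # initial levels: a uniform grid (assumption)
    for _ in range(iters):
        # Assignment step: nearest level for every weight.
        idx = np.argmin(np.abs(w[:, None] - codebook[None, :]), axis=1)
        # Update step: move each level to the mean of its assigned weights.
        for j in range(k):
            members = w[idx == j]
            if members.size > 0:
                codebook[j] = members.mean()
    idx = np.argmin(np.abs(w[:, None] - codebook[None, :]), axis=1)
    return codebook, idx


if __name__ == "__main__":
    w = np.random.default_rng(0).normal(0.0, 1.0, 2000)   # synthetic bell-shaped weights
    codebook, idx = kmeans_quantize(w, k=4)
    print("levels:", np.round(codebook, 3))
    print("MSE:", np.mean((codebook[idx] - w) ** 2))
```

Because the levels migrate toward where the weights are dense, the resulting spacing is non-uniform, and its mean squared error is no worse than that of the uniform grid it started from.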

-

-

-_Comparison between uniform quantization (left) and non-uniform quantization (right) (Credit: __**A Survey of Quantization Methods for Efficient Neural Network Inference**__ )._

+

#### Stochastic Quantization

@@ -504,7 +509,7 @@ Zero-shot quantization refers to the process of converting a full-precision deep

### Calibration

-Calibration is the process of selecting the most effective clipping range [\alpha, \beta] for weights and activations to be quantized to. For example, consider quantizing activations that originally have a floating-point range between -6 and 6 to 8-bit integers. If you just take the minimum and maximum possible 8-bit integer values (-128 to 127) as your quantization range, it might not be the most effective. Instead, calibration would involve passing a representative dataset then use this observed range for quantization.

+Calibration is the process of selecting the most effective clipping range $[\alpha, \beta]$ for the weights and activations being quantized. For example, consider quantizing activations that originally have a floating-point range between -6 and 6 to 8-bit integers. Simply mapping that full theoretical range onto the 8-bit integer range (-128 to 127) might not be the most effective choice, because the values actually observed may occupy only part of it. Instead, calibration passes a representative dataset through the model and uses the observed range to set the quantization range.

There are many calibration methods, but a few commonly used ones include:

@@ -514,41 +519,36 @@ Entropy: Use KL divergence to minimize information loss between the original flo

Percentile: Set the range to a percentile of the distribution of absolute values seen during calibration. For example, 99% calibration would clip 1% of the largest magnitude values.
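The max and percentile methods can be compared in a few lines; entropy (KL) calibration is omitted here because it requires a histogram search. This is a minimal NumPy sketch assuming symmetric ranges; the calibration data, with one large outlier, is synthetic, and the function names are hypothetical.

```python
import numpy as np


def calibrate_max(x) -> float:
    """Symmetric clipping bound from the largest observed magnitude."""
    return float(np.max(np.abs(x)))


def calibrate_percentile(x, pct: float = 99.0) -> float:
    """Symmetric clipping bound at the pct-th percentile of |x|.

    Roughly (100 - pct)% of the largest-magnitude values fall outside and get clipped.
    """
    return float(np.percentile(np.abs(x), pct))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.concatenate([rng.normal(0.0, 1.0, 10_000), [50.0]])  # synthetic activations + outlier
    for name, bound in [("max", calibrate_max(x)), ("99th pct", calibrate_percentile(x))]:
        step = bound / 127  # int8 symmetric step size for this bound
        print(f"{name:>8}: range [-{bound:.2f}, {bound:.2f}], step {step:.4f}")
```

A single outlier stretches the max-based range to 50, making every int8 step far coarser for the bulk of the values; the percentile bound clips that outlier and keeps a much finer step.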

-

-

-Src: Integer quantization for deep learning inference

+

Importantly, the quality of calibration can make the difference between a quantized model that retains most of its accuracy and one that degrades significantly, which makes it an essential step in the quantization process. Calibration ranges come in two types: symmetric and asymmetric.

#### Symmetric Quantization

-Symmetric quantization maps real values to a symmetrical clipping range centered around 0. This involves choosing a range [\alpha, \beta] where \alpha = -\beta. For example, one symmetrical range would be based on the min/max values of the real values such that: -\alpha = \beta = max(abs(r\_max), abs(r\_min)).

+Symmetric quantization maps real values to a symmetrical clipping range centered around 0. This involves choosing a range $[\alpha, \beta]$ where $\alpha = -\beta$. For example, one symmetrical range can be derived from the min/max of the real values such that $-\alpha = \beta = \max(|r_{max}|, |r_{min}|)$.

-Symmetric clipping ranges are the most widely adopted in practice as they have the advantage of easier implementation. In particular, the zeroing out of the zero point can lead to reduction in computational cost during inference ["Integer Quantization for Deep Learning Inference: Principles and Empirical Evaluation" (2023)]([https://arxiv.org/abs/2004.09602](https://arxiv.org/abs/2004.09602)) .

+Symmetric clipping ranges are the most widely adopted in practice because they are easier to implement. In particular, because real zero maps to integer zero (the zero point is 0, sometimes described as "zeroing out the zero point"), the extra zero-point terms drop out of the integer arithmetic, which reduces computational cost during inference [(@intquantfordeepinf)](https://arxiv.org/abs/2004.09602).
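The symmetric mapping can be sketched in a few lines, assuming 8-bit integers with the restricted range [-127, 127] and the min/max choice $\beta = \max(|r_{max}|, |r_{min}|)$ from above. The observed range is hypothetical, and Python's built-in `round()` (round-half-to-even) stands in for whatever rounding mode a real kernel uses.

```python
def symmetric_scale(r_min, r_max, num_bits=8):
    """Scale for the symmetric range [-beta, beta], beta = max(|r_min|, |r_max|)."""
    beta = max(abs(r_min), abs(r_max))
    q_max = 2 ** (num_bits - 1) - 1  # 127 for 8 bits: the restricted range [-127, 127]
    return beta / q_max


def quantize_symmetric(r, scale, num_bits=8):
    """Round to the nearest integer step and clamp; the zero point is fixed at 0."""
    q_max = 2 ** (num_bits - 1) - 1
    return max(-q_max, min(q_max, round(r / scale)))


def dequantize_symmetric(q, scale):
    return q * scale


# Hypothetical observed range, e.g. from min/max calibration.
s = symmetric_scale(-0.5, 2.54)
print(quantize_symmetric(0.0, s), quantize_symmetric(-0.5, s), quantize_symmetric(9.0, s))  # 0 -25 127
```

Real 0.0 always maps to integer 0, and values beyond $\beta$ are clipped to the edge of the range. Note that the negative half of the range here is largely unused, since the data only reaches -0.5.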

#### Asymmetric Quantization

-Asymmetric quantization maps real values to an asymmetrical clipping range that isn't necessarily centered around 0. It involves choosing a range [\alpha, \beta] where \alpha \neq -\beta. For example, selecting a range based on the minimum and maximum real values, or where \alpha = r\_min and \beta = r\_max, creates an asymmetric range. Typically, asymmetric quantization produces tighter clipping ranges compared to symmetric quantization, which is important when target weights and activations are imbalanced, e.g., the activation after the ReLU always has non-negative values. Despite producing tighter clipping ranges, asymmetric quantization is less preferred to symmetric quantization as it doesn't always zero out the real value zero.

-

-

+Asymmetric quantization maps real values to an asymmetrical clipping range that isn't necessarily centered around 0. It involves choosing a range $[\alpha, \beta]$ where $\alpha \neq -\beta$. For example, setting $\alpha = r_{min}$ and $\beta = r_{max}$, the minimum and maximum real values, creates an asymmetric range. Asymmetric quantization typically produces tighter clipping ranges than symmetric quantization, which matters when the target weights or activations are imbalanced; for example, activations after a ReLU are always non-negative. Despite these tighter ranges, asymmetric quantization is less preferred than symmetric quantization because real zero generally maps to a nonzero integer zero point, which adds extra terms to the integer arithmetic at inference time.

-_Illustration of symmetric quantization (left) and asymmetric quantization (right). Symmetric quantization maps real values to [-127, 127], and asymmetric maps to [-128, 127]. (Credit: __**A Survey of Quantization Methods for Efficient Neural Network Inference**__ )._

+![Illustration of symmetric quantization (left) and asymmetric quantization (right). Symmetric quantization maps real values to [-127, 127], and asymmetric maps to [-128, 127] (@surveyofquant).](images/efficientnumerics_symmetry.png)
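The asymmetric case adds a zero point: the integer that real 0.0 maps to. The sketch below assumes signed 8-bit integers [-128, 127], as in the figure, and a hypothetical ReLU output range of [0, 6]. Widening the range to include 0.0, so that zero is exactly representable, is an assumed convention that many toolkits follow.

```python
def asymmetric_params(alpha, beta, num_bits=8):
    """Scale and zero point mapping [alpha, beta] onto signed integers [-2^(b-1), 2^(b-1) - 1]."""
    # Assumed convention (common in practice): widen the range so real 0.0 is exactly representable.
    alpha, beta = min(alpha, 0.0), max(beta, 0.0)
    q_min, q_max = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    if beta == alpha:  # degenerate all-zero range
        return 1.0, 0
    scale = (beta - alpha) / (q_max - q_min)
    zero_point = q_min - round(alpha / scale)  # integer that real 0.0 maps to
    return scale, zero_point


def quantize_asymmetric(r, scale, zero_point, num_bits=8):
    q_min, q_max = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    return max(q_min, min(q_max, round(r / scale) + zero_point))


def dequantize_asymmetric(q, scale, zero_point):
    return (q - zero_point) * scale


# ReLU outputs observed in [0, 6]: asymmetric uses all 256 codes for non-negative values.
scale, zp = asymmetric_params(0.0, 6.0)
print(zp, quantize_asymmetric(0.0, scale, zp), quantize_asymmetric(6.0, scale, zp))  # -128 -128 127
```

For this range the asymmetric step is 6/255, about half the symmetric step of 6/127, because no codes are wasted on negative values. The cost is the nonzero `zero_point`, which every dequantization and integer product must account for.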

-### Granularity

+#### Granularity

After deciding on the type of clipping range, it is essential to tighten the range so that the model retains as much of its accuracy as possible. We use convolutional neural networks to explore methods that fine-tune the granularity of clipping ranges for quantization. The input activation of a layer in a CNN is convolved with multiple convolutional filters, and each filter can have its own range of values. Consequently, one distinguishing feature of quantization approaches is how finely the clipping range $[\alpha, \beta]$ is determined for the weights.

-

-_Illustration of the main forms of quantization granularities. In layerwise quantization, the same clipping range is applied to all filters which belong to the same layer. Notice how this can result in lower quantization resolutions for channels with narrow distributions, e.g. Filter 1, Filter 2, and Filter C. A higher quantization resolution can be achieved using channelwise quantization which dedicates different clipping ranges to different channels. (Credit: __**A Survey of Quantization Methods for Efficient Neural Network Inference**__ )._

+

-1. Layerwise Quantization: This approach determines the clipping range by considering all of the weights in the convolutional filters of a layer. Then, the same clipping range is used for all convolutional filters. It's the simplest to implement, and, as such, it often results in sub-optimal accuracy due the wide variety of differing ranges between filters. For example, a convolutional kernel with a narrower range of parameters loses its quantization resolution due to another kernel in the same layer having a wider range. .

+1. Layerwise Quantization: This approach determines the clipping range by considering all of the weights in the convolutional filters of a layer, then uses that same clipping range for every filter. It is the simplest to implement, but it often results in sub-optimal accuracy because the ranges of different filters can vary widely. For example, a convolutional kernel with a narrow range of parameters loses quantization resolution when another kernel in the same layer has a much wider range.

2. Groupwise Quantization: This approach groups different channels inside a layer and calculates a clipping range for each group. It can be helpful when the distribution of parameters within a single convolution or activation varies a lot. In practice, this method was useful in Q-BERT [Q-BERT: Hessian based ultra low precision quantization of bert] for quantizing Transformer [Attention Is All You Need] models that consist of fully-connected attention layers. The downside of this approach is the extra cost of accounting for different scaling factors.

3. Channelwise Quantization: This popular method uses a fixed range for each convolutional filter that is independent of other channels. Because each channel is assigned a dedicated scaling factor, this method ensures a higher quantization resolution and often results in higher accuracy.

4. Sub-channelwise Quantization: Taking channelwise quantization to the extreme, this method determines the clipping range with respect to any group of parameters within a convolution or fully-connected layer. It may result in considerable overhead, since different scaling factors must be taken into account when processing a single convolution or fully-connected layer.

Of these, channelwise quantization is the current standard used for quantizing convolutional kernels, since it enables the adjustment of clipping ranges for each individual kernel with negligible overhead.
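The difference between layerwise and channelwise granularity shows up even in a toy layer. The sketch below assumes symmetric 8-bit scales and represents each filter as a flat list of weights; the two filters are hypothetical, chosen so that one is narrow and one is wide.

```python
def layerwise_scales(filters, q_max=127):
    """Layerwise: one symmetric scale shared by every filter in the layer."""
    beta = max(abs(w) for f in filters for w in f) or 1.0  # avoid a zero scale
    return [beta / q_max] * len(filters)


def channelwise_scales(filters, q_max=127):
    """Channelwise: an independent symmetric scale for each filter."""
    return [(max(abs(w) for w in f) or 1.0) / q_max for f in filters]


def per_filter_error(filters, scales, q_max=127):
    """Worst round-trip (quantize -> dequantize) error inside each filter."""
    errors = []
    for f, s in zip(filters, scales):
        worst = 0.0
        for w in f:
            q = max(-q_max, min(q_max, round(w / s)))
            worst = max(worst, abs(w - q * s))
        errors.append(worst)
    return errors


# Hypothetical layer: filter 0 has a narrow range, filter 1 a wide one.
filters = [[0.01, -0.02, 0.015], [2.0, -1.5, 0.5]]
print(per_filter_error(filters, layerwise_scales(filters)))
print(per_filter_error(filters, channelwise_scales(filters)))
```

With the shared layerwise scale (2.0/127, about 0.0157), the narrow filter's weights 0.01 and 0.015 both round to the integer 1. The per-channel scale for that filter is about 100 times finer. The wide filter is unaffected, because it already determined the layerwise scale.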

-### Static and Dynamic Quantization

+#### Static and Dynamic Quantization

After determining the type and granularity of the clipping range, practitioners must decide when their calibration algorithms compute the ranges. There are two approaches to quantizing activations: static quantization and dynamic quantization.

@@ -562,92 +562,76 @@ Between the two, calculating the range dynamically usually is very costly, so mo

The two prevailing techniques for quantizing models are Post Training Quantization and Quantization Aware Training.

-**Post Training Quantization** - Post-training quantization (PTQ) is a quantization technique where the model is quantized after it has been trained.The model is trained in floating point and then weights and activations are quantized as a post-processing step. This is the simplest approach and does not require access to the training data. Unlike Quantization-Aware Training (QAT), PTQ sets weight and activation quantization parameters directly, making it low-overhead and suitable for limited or unlabeled data situations. However, not readjusting the weights after quantizing, especially in low-precision quantization can lead to very different behavior and thus lower accuracy. To tackle this, techniques like bias correction, equalizing weight ranges, and adaptive rounding methods have been developed. PTQ can also be applied in zero-shot scenarios, where no training or testing data are available. This method has been made even more efficient to benefit compute- and memory- intensive large language models. Recently, SmoothQuant, a training-free, accuracy-preserving, and general-purpose PTQ solution which enables 8-bit weight, 8-bit activation quantization for LLMs, has been developed, demonstrating up to 1.56x speedup and 2x memory reduction for LLMs with negligible loss in accuracy [SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models](2023)(https://arxiv.org/abs/2211.10438).

-

-

-

-

-_In PTQ, a pretrained model is calibrated using calibration data (e.g., a small subset of training data) to compute the clipping ranges and scaling factors. (Credit: __**A Survey of Quantization Methods for Efficient Neural Network Inference**__ )_

+**Post Training Quantization** - Post-training quantization (PTQ) is a quantization technique in which the model is quantized after it has been trained. The model is trained in floating point, and its weights and activations are then quantized as a post-processing step. This is the simplest approach and does not require access to the training data. Unlike Quantization-Aware Training (QAT), PTQ sets the weight and activation quantization parameters directly, making it low-overhead and suitable when data is limited or unlabeled. However, because the weights are not readjusted after quantization, the quantized model can behave quite differently from the original, especially at low precision, which lowers its accuracy. To tackle this, techniques like bias correction, equalizing weight ranges, and adaptive rounding methods have been developed. PTQ can also be applied in zero-shot scenarios, where no training or testing data are available. PTQ has also been made more efficient for compute- and memory-intensive large language models (LLMs). Recently, SmoothQuant, a training-free, accuracy-preserving, and general-purpose PTQ solution that enables 8-bit weight, 8-bit activation quantization for LLMs, was shown to deliver up to 1.56x speedup and 2x memory reduction with negligible loss in accuracy [(@smoothquant)](https://arxiv.org/abs/2211.10438).

-**Quantization Aware Training** - Quantization-aware training (QAT) is a fine-tuning of the PTQ model. The model is trained aware of quantization, allowing it to adjust for quantization effects. This produces better accuracy with quantized inference. Quantizing a trained neural network model with methods such as PTQ introduces perturbations that can deviate the model from its original convergence point. For instance, Krishnamoorthi showed that even with per-channel quantization, networks like MobileNet do not reach baseline accuracy with int8 Post Training Quantization (PTQ) and require Quantization Aware Training (QAT) [Quantizing deep convolutional networks for efficient inference](2018)([https://arxiv.org/abs/1806.08342](https://arxiv.org/abs/1806.08342)).To address this, QAT retrains the model with quantized parameters, employing forward and backward passes in floating point but quantizing parameters after each gradient update. Handling the non-differentiable quantization operator is crucial; a widely used method is the Straight Through Estimator (STE), approximating the rounding operation as an identity function. While other methods and variations exist, STE remains the most commonly used due to its practical effectiveness.
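The Straight Through Estimator can be illustrated on a one-parameter model $y = w x$. The forward pass uses a fake-quantized weight (quantize, then dequantize), and the backward pass treats rounding as the identity, so the gradient is applied to a full-precision copy of $w$. Without STE, the derivative of rounding is zero almost everywhere and $w$ would never move. This is a minimal sketch under assumed values: a fixed scale of 0.05, plain gradient descent, and hypothetical training data. It ignores the clipping range in the backward pass.

```python
def fake_quant(w, scale, q_max=127):
    """Forward pass: quantize then dequantize, so the loss is computed with quantized weights."""
    q = max(-q_max, min(q_max, round(w / scale)))
    return q * scale


def qat_fit(data, w=0.0, scale=0.05, lr=0.1, steps=200):
    """Fit y = w * x with a fake-quantized weight, updating a full-precision copy of w."""
    for _ in range(steps):
        w_q = fake_quant(w, scale)
        # Gradient of the mean squared error with respect to the quantized weight w_q.
        grad_wq = sum(2 * (w_q * x - y) * x for x, y in data) / len(data)
        # STE: treat round() as the identity, i.e. d(w_q)/d(w) = 1, and apply the gradient to w.
        w -= lr * grad_wq
    return w, fake_quant(w, scale)


data = [(1.0, 0.73), (2.0, 1.46)]  # hypothetical targets generated by y = 0.73 * x
w_float, w_quant = qat_fit(data)
print(w_float, w_quant)            # w_quant lands on a grid point near 0.73
```

The full-precision weight keeps accumulating small updates that would otherwise be lost to rounding, so the quantized weight settles on a grid point (multiples of 0.05) next to the target value.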

+

-

+

-_In QAT, a pretrained model is quantized and then finetuned using training data to adjust parameters and recover accuracy degradation. Note: the calibration process is often conducted in parallel with the finetuning process for QAT. (Credit: __**A Survey of Quantization Methods for Efficient Neural Network Inference**__ )._