diff --git a/efficient_ai.qmd b/efficient_ai.qmd

index 6b94339b..098b3865 100644

--- a/efficient_ai.qmd

+++ b/efficient_ai.qmd

@@ -2,91 +2,145 @@

## Introduction

-Explanation: The introduction sets the stage for the entire chapter, offering readers insight into the critical role efficiency plays in the sphere of AI. It outlines the core objectives of the chapter, providing context and framing the ensuing discussion.

+In this chapter, we dive into the concepts that govern efficiency in AI systems. It is of paramount importance, especially in the context of embedded TinyML systems. The computational demands of neural networks can be overwhelming, even in the smallest of systems. Efficiency in AI isn't just a luxury---it's a necessity. For AI to truly be integrated into everyday devices and critical systems, it must operate within the constraints of limited resources without compromising its effectiveness. The drive for efficiency ensures that AI models are lean, fast, and sustainable, making their application viable across a broader range of platforms and scenarios.

-- Background and Importance of Efficiency in AI

-- Discussion on how Cloud, Edge, and TinyML differ (again)

+Training models can consume a significant amount of energy, sometimes equivalent to the carbon footprint of sizable industrial processes. We will cover some of these sustainability details in the [AI Sustainability](./sustainable_ai.qmd) chapter. On the deployment side, if these models are not optimized for efficiency, they can quickly drain device batteries, demand excessive memory, or fall short of real-time processing needs. Through this introduction, our objective is to elucidate the nuances of efficiency, setting the groundwork for a comprehensive exploration in the subsequent chapters.

## The Need for Efficient AI

-Explanation: This section articulates the pressing necessity for efficiency in AI systems, particularly in resource-constrained environments. Discussing these aspects will underline the crucial role of efficient AI in modern technology deployments and facilitate a smooth transition to discussing potential approaches in the next section.

-

-- Resource Constraints in Embedded Systems

-- Striving for Energy Efficiency

-- Improving Computational Efficiency

-- Latency Reduction

-- Meeting Real-time Processing Requirements

-

-## Approaches to Efficient AI

-

-Explanation: After establishing the necessity for efficient AI, this section delves into various strategies and methodologies to achieve it. It explores the technical avenues available for optimizing AI models and algorithms, serving as a bridge between the identified needs and the practical solutions presented in the following sections on specific efficient AI models.

-

-- Algorithm Optimization

-- Model Compression

-- Hardware-Aware Neural Architecture Search (NAS)

-- Compiler Optimizations for AI

-- ML for ML Systems

-

-## Efficient AI Models

-

-Explanation: This section offers an in-depth exploration of different AI models designed to be efficient in terms of computational resources and energy. It discusses not only the models but also provides insights into how they are optimized, preparing the ground for the benchmarking and evaluation section where these models are assessed and compared.

-

-- Model compression techniques

- - Pruning

- - Quantization

- - Knowledge distillation

-- Efficient model architectures

- - MobileNet

- - SqueezeNet

- - ResNet variants

-

-## Efficient Inference

-

-- Optimized inference engines

- - TPUs

- - Edge TPU

- - NN accelerators

-- Model optimizations

- - Quantization

- - Pruning

- - Neural architecture search

-- Framework optimizations

- - TensorFlow Lite

- - PyTorch Mobile

-

-## Efficient Training

-

-- Techniques

- - Pruning

- - Quantization-aware training

- - Knowledge distillation

-- Low precision training

- - FP16

- - INT8

- - Lower bit widths

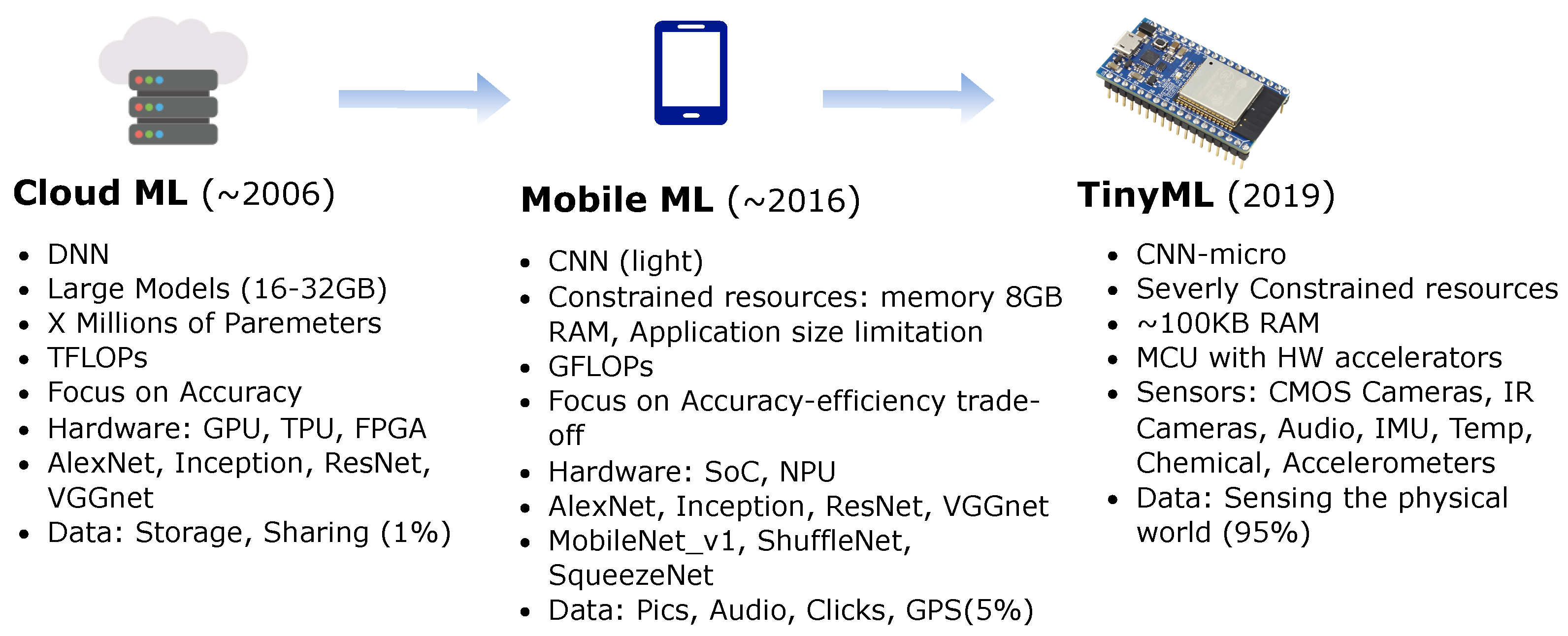

+Efficiency takes on different connotations based on where AI computations occur. Let's take a brief moment to revisit and differentiate between Cloud, Edge, and TinyML in terms of efficiency.

+

+

+

+For cloud AI, traditional AI models often ran in the large—scale data centers equipped with powerful GPUs and TPUs [@barroso2019datacenter]. Here, efficiency pertains to optimizing computational resources, reducing costs, and ensuring timely data processing and return. However, relying on the cloud introduced latency, especially when dealing with large data streams that needed to be uploaded, processed, and then downloaded.

+

+For edge AI, edge computing brought AI closer to the data source, processing information directly on local devices like smartphones, cameras, or industrial machines [@li2019edge]. Here, efficiency encompasses quick real-time responses and reduced data transmission needs. The constraints, however, are tighter—these devices, while more powerful than microcontrollers, have limited computational power compared to cloud setups.

+

+Pushing the frontier even further is TinyML, where AI models run on microcontrollers or extremely resource-constrained environments. The difference in performance for processors and memory between TinyML and cloud or mobile systems can be several orders of magnitude [@warden2019tinyml]. Efficiency in TinyML is about ensuring models are lightweight enough to fit on these devices, use minimal energy (critical for battery-powered devices), and still perform their tasks effectively.

+

+The spectrum from Cloud to TinyML represents a shift from vast, centralized computational resources to distributed, localized, and constrained environments. As we transition from one to the other, the challenges and strategies related to efficiency evolve, underlining the need for specialized approaches tailored to each scenario. Having underscored the need for efficient AI, especially within the context of TinyML, we will transition to exploring the methodologies devised to meet these challenges. The following sections outline at a high level the main concepts that we will dwelve into deeper at a later point. As we delve into these strategies, we will demonstrate the breadth and depth of innovation needed to achieve efficient AI.

+

+## Efficient Model Architectures

+

+Choosing the right model architecture is as crucial as optimizing it. In recent years, researchers have explored some novel architectures that can have inherently fewer parameters while maintaining strong performance.

+

+**MobileNets**: MobileNets are a class of efficient models for mobile and embedded vision applications [@howard2017mobilenets]. The key idea that led to the success of MobileNets is the use of depth-wise separable convolutions which significantly reduce the number of parameters and computations in the network. MobileNetV2 and V3 further enhance this design with the introduction of inverted residuals and linear bottlenecks.

+

+**SqueezeNet**: SqueezeNet is a class of ML models known for its smaller size without sacrificing accuracy. It achieves this by using a "fire module" that reduces the number of input channels to 3x3 filters, thus reducing the parameters [@iandola2016squeezenet]. Moreover, it employs delayed downsampling to increase the accuracy by maintaining a larger feature map.

+

+**ResNet variants**: The Residual Network (ResNet) architecture allows introduced skip connections, or shortcuts [@he2016deep]. Some variants of ResNet are designed to be more efficient. For instance, ResNet-SE incorporates the "squeeze and excitation" mechanism to recalibrate feature maps, while ResNeXt offers grouped convolutions for efficiency.

+

+## Efficient Model Compression

+

+Model compression methods are very important for bringing deep learning models to devices with limited resources. These techniques reduce the size, energy consumption, and computational demands of models without a significant loss in accuracy. At a high level, the methods can briefly be binned into the following fundamental methods:

+

+**Pruning**: This is akin to trimming the branches of a tree. In pruning, certain weights or even entire neurons are removed from the network, based on specific criteria. This can significantly reduce the model size. There are various strategies, like weight pruning, neuron pruning, and structured pruning.

+

+**Quantization**: Quantization is the process of constraining an input from a large set to output in a smaller set, primarily in deep learning, this means reducing the number of bits that represent the weights and biases of the model. For example, using 16-bit or 8-bit representations instead of 32-bit can reduce model size and speed up computations, with a minor trade-off in accuracy.

+

+**Knowledge Distillation**: Knowledge distillation involves training a smaller model (student) to replicate the behavior of a larger model (teacher). The idea is to transfer the knowledge from the cumbersome model to the lightweight one, so the smaller model attains performance close to its larger counterpart but with significantly fewer parameters.

+

+## Efficient Inference Hardware

+

+[Training](./training.qmd) an AI model is an intensive task that requires powerful hardware and can take hours to weeks, but inference needs to be as fast as possible, especially in real-time applications. This is where efficient inference hardware comes into play. By optimizing the hardware specifically for inference tasks, we can achieve rapid response times and power-efficient operation, especially crucial for edge devices and embedded systems.

+

+**TPUs (Tensor Processing Units)**: [TPUs](https://cloud.google.com/tpu) are custom-built ASICs (Application-Specific Integrated Circuits) by Google to accelerate machine learning workloads [@jouppi2017datacenter]. They are optimized for tensor operations, offering high throughput for low-precision arithmetic, and are designed specifically for neural network machine learning. TPUs deliver a significant acceleration in model training and inference as compared to general-purpose GPU/CPUs. This boost means faster model training and real-time or near-real-time inference capabilities, crucial for applications like voice search and augmented reality.

+

+[Edge TPUs](https://cloud.google.com/edge-tpu) are a smaller, power-efficient version of Google's TPUs, tailored for edge devices. They provide fast on-device ML inferencing for TensorFlow Lite models. Edge TPUs allow for low-latency, high-efficiency inference on edge devices like smartphones, IoT devices, and embedded systems. This means AI capabilities can be deployed in real-time applications without needing to communicate with a central server, thus saving bandwidth and reducing latency.

+

+**NN Accelerators**: Fixed function neural network accelerators are hardware accelerators designed explicitly for neural network computations. These can be standalone chips or part of a larger system-on-chip (SoC) solution. By optimizing the hardware for the specific operations that neural networks require, such as matrix multiplications and convolutions, NN accelerators can achieve faster inference times and lower power consumption compared to general-purpose CPUs and GPUs. They are especially beneficial in TinyML devices with power or thermal constraints, such as smartwatches, micro-drones, or robotics.

+

+But these are all but the most common place examples, there are a number of other types of hardware that are emerging that have the potential to offer signficiant advantages for inference. These include but are not limited to neuromorphic hardware, photonic computing, and so forth. In [@sec-ai_hw] we will explore these in greater detail.

+

+Efficient hardware for inference not only speeds up the process but also saves energy, extends battery life, and can operate in real-time conditions. As AI continues to be integrated into a myriad of applications – from smart cameras to voice assistants – the role of optimized hardware will only become more prominent. By leveraging these specialized hardware components, developers and engineers can bring the power of AI to devices and situations that were previously unthinkable.

+

+## Efficient Numerics

+

+Machine learning, and especially deep learning, involves enormous amounts of computation. Models can have millions to billions of parameters, and these are often trained on vast datasets. Every operation, every multiplication or addition, demands computational resources. Therefore, the precision of the numbers used in these operations can have a significant impact on the computational speed, energy consumption, and memory requirements. This is where the concept of efficient numerics comes into play.

+

+### Numerical Formats

+

+There are many different types of numerics. Numerics have a long history in computing systems.

+

+**Floating point**: Known as single-precision floating-point, FP32 utilizes 32 bits to represent a number, incorporating its sign, exponent, and fraction. FP32 is widely adopted in many deep learning frameworks and offers a balance between accuracy and computational requirements. It's prevalent in the training phase for many neural networks due to its sufficient precision in capturing minute details during weight updates.

+

+Also known as half-precision floating point, FP16 uses 16 bits to represent a number, including its sign, exponent, and fraction. FP16 offers a good balance between precision and memory savings. It's particularly popular in deep learning training on GPUs that support mixed-precision arithmetic, combining the speed benefits of FP16 with the precision of FP32 where needed.

+

+There are also several other numerical formats that fall into an exotic calss. An exotic example is BF16, or Brain Floating Point. It is a 16-bit numerical format that is designed explicitly for deep learning applications. It's a compromise between FP32 and FP16, retaining the 8-bit exponent from FP32 while reducing the mantissa to 7 bits (as compared to FP32's 23-bit mantissa). This structure prioritizes range over precision. BF16 has been shown to achieve training results that are comparable in accuracy to FP32 while using significantly less memory and computational resources. This makes it suitable not just for inference but also for training deep neural networks.

+

+By retaining the 8-bit exponent of FP32, BF16 offers a similar range, which is crucial for deep learning tasks where certain operations can result in very large or very small numbers. At the same time, by truncating precision, BF16 allows for reduced memory and computational requirements compared to FP32. BF16 has emerged as a promising middle ground in the landscape of numerical formats for deep learning, providing an efficient and effective alternative to the more traditional FP32 and FP16 formats.

+

+](https://storage.googleapis.com/gweb-cloudblog-publish/images/Three_floating-point_formats.max-624x261.png)

+

+**Integer**: These are integer representations using 8, 4, and 2 bits. They are often used during the inference phase of neural networks, where the weights and activations of the model are quantized to these lower precisions. Integer representations are deterministic and offer significant speed and memory advantages over floating-point representations. For many inference tasks, especially on edge devices, the slight loss in accuracy due to quantization is often acceptable given the efficiency gains. An extreme form of integer numerics is for binary neural networks (BNNs), where weights and activations are constrained to one of two values: either +1 or -1.

+

+**Variable bit widths**: Beyond the standard widths, research is ongoing into extremely low bit-width numerics, even down to binary or ternary representations. Extremely low bit-width operations can offer significant speedups and reduce power consumption even further. While challenges remain in maintaining model accuracy with such drastic quantization, advances continue to be made in this area.

+

+Efficient numerics is not just about reducing the bit-width of numbers but understanding the trade-offs between accuracy and efficiency. As machine learning models become more pervasive, especially in real-world, resource-constrained environments, the focus on efficient numerics will continue to grow. By thoughtfully selecting and leveraging the appropriate numeric precision, one can achieve robust model performance while optimizing for speed, memory, and energy. The table below summarizes them.

+

+| Precision | Pros | Cons |

+|------------|-----------------------------------------------------------|--------------------------------------------------|

+| **FP32** (Floating Point 32-bit) | - Standard precision used in most deep learning frameworks.

- High accuracy due to ample representational capacity.

- Well-suited for training. | - High memory usage.

- Slower inference times compared to quantized models.

- Higher energy consumption. |

+| **FP16** (Floating Point 16-bit) | - Reduces memory usage compared to FP32.

- Speeds up computations on hardware that supports FP16.

- Often used in mixed-precision training to balance speed and accuracy. | - Lower representational capacity compared to FP32.

- Risk of numerical instability in some models or layers. |

+| **INT8** (8-bit Integer) | - Significantly reduced memory footprint compared to floating-point representations.

- Faster inference if hardware supports INT8 computations.

- Suitable for many post-training quantization scenarios. | - Quantization can lead to some accuracy loss.

- Requires careful calibration during quantization to minimize accuracy degradation. |

+| **INT4** (4-bit Integer) | - Even lower memory usage than INT8.

- Further speed-up potential for inference. | - Higher risk of accuracy loss compared to INT8.

- Calibration during quantization becomes more critical. |

+| **Binary** | - Minimal memory footprint (only 1 bit per parameter).

- Extremely fast inference due to bitwise operations.

- Power efficient. | - Significant accuracy drop for many tasks.

- Complex training dynamics due to extreme quantization. |

+| **Ternary** | - Low memory usage but slightly more than binary.

- Offers a middle ground between representation and efficiency. | - Accuracy might still be lower than higher precision models.

- Training dynamics can be complex. |

+

+### Efficiency Benefits

+

+Numerical efficiency matters for machine learning workloads for a number of reasons:

+

+**Computational Efficiency**: High-precision computations (like FP32 or FP64) can be slow and resource-intensive. By reducing numeric precision, one can achieve faster computation times, especially on specialized hardware that supports lower precision.

+

+**Memory Efficiency**: Storage requirements decrease with reduced numeric precision. For instance, FP16 requires half the memory of FP32. This is crucial when deploying models to edge devices with limited memory or when working with very large models.

+

+**Power Efficiency**: Lower precision computations often consume less power, which is especially important for battery-operated devices.

+

+**Noise Introduction**: Interestingly, the noise introduced by using lower precision can sometimes act as a regularizer, helping to prevent overfitting in some models.

+

+**Hardware Acceleration**: Many modern AI accelerators and GPUs are optimized for lower precision operations, leveraging the efficiency benefits of such numerics.

## Evaluating Models

-Explanation: This part of the chapter emphasizes the importance of evaluating the efficiency of AI models using appropriate metrics and benchmarks. This process is vital to ensuring the effectiveness of the approaches discussed earlier and seamlessly connects with case studies where these benchmarks can be seen in a real-world context.

+It's worth noting that the actual benefits and trade-offs can vary based on the specific architecture of the neural network, the dataset, the task, and the hardware being used. Before deciding on a numeric precision, it's advisable to perform experiments to evaluate the impact on the desired application.

+

+### Efficiency Metrics

-- Metrics for Efficiency

- - FLOPs (Floating Point Operations)

- - Memory Usage

- - Power Consumption

- - Inference Time

-- Benchmark Datasets and Tools

-- Comparative Analysis of AI Models

-- EEMBC, MLPerf Tiny, Edge

+To guide this process systematically, it is important to have a deep understanding of model evaluation methods. When assessing AI models' effectiveness and suitability for various applications, efficiency metrics come to the forefront.

-## Emerging Directions

+**FLOPs (Floating Point Operations)** gauge the computational demands of a model. For instance, a modern neural network like BERT has billions of FLOPs, which might be manageable on a powerful cloud server but would be taxing on a smartphone. Higher FLOPs can lead to more prolonged inference times and more significant power drain, especially on devices without specialized hardware accelerators. Hence, for real-time applications such as video streaming or gaming, models with lower FLOPs might be more desirable.

-- Automated model search

-- Multi-task learning

-- Meta learning

-- Lottery ticket hypothesis

-- Hardware-algorithm co-design

-- Data-Aware NAS

+**Memory Usage** pertains to how much storage the model requires, which affects both the storage and RAM of the deploying device. Consider deploying a model onto a smartphone: a model that occupies several gigabytes of space not only consumes precious storage but might also be slower due to the need to load large weights into memory. This becomes especially crucial for edge devices like security cameras or drones, where minimal memory footprints are vital for both storage and rapid data processing.

+

+**Power Consumption** becomes especially crucial for devices that rely on batteries. For instance, a wearable health monitor using a power-hungry model could drain its battery in hours, rendering it impractical for continuous health monitoring. As we move toward an era dominated by IoT devices, where many devices operate on battery power, optimizing models for low power consumption becomes essential.

+

+**Inference Time** is about how swiftly a model can produce results. In applications like autonomous driving, where split-second decisions are the difference between safety and calamity, models must operate rapidly. If a self-driving car's model takes even a few seconds too long to recognize an obstacle, the consequences could be dire. Hence, ensuring a model's inference time aligns with the real-time demands of its application is paramount.

+

+In essence, these efficiency metrics are more than mere numbers—they dictate where and how a model can be effectively deployed. A model might boast high accuracy, but if its FLOPs, memory usage, power consumption, or inference time make it unsuitable for its intended platform or real-world scenarios, its practical utility becomes limited.

+

+### Efficiency Comparisons

+

+There is an abundance of models in the ecosystem, each boasting its unique strengths and idiosyncrasies. However, pure model accuracy figures or training and inference speeds don't paint the complete picture. When we dive deeper into comparative analyses, several critical nuances emerge.

+

+Often, we encounter the delicate balance between accuracy and efficiency. For instance, while a dense deep learning model and a lightweight MobileNet variant might both excel in image classification, their computational demands could be at two extremes. This differentiation is especially pronounced when comparing deployments on resource-abundant cloud servers versus constrained TinyML devices. In many real-world scenarios, the marginal gains in accuracy could be overshadowed by the inefficiencies of a resource-intensive model.

+

+Moreover, the optimal model choice isn't always universal but often depends on the specifics of an application. Consider object detection: a model that excels in general scenarios might falter in niche environments like detecting manufacturing defects on a factory floor. This adaptability—or the lack of it—can dictate a model's real-world utility.

+

+Another important consideration is the relationship between model complexity and its practical benefits. Take voice-activated assistants as an example such as "Alexa" or "OK Google." While a complex model might demonstrate a marginally superior understanding of user speech, if it's slower to respond than a simpler counterpart, the user experience could be compromised. Thus, adding layers or parameters doesn't always equate to better real-world outcomes.

+

+Furthermore, while benchmark datasets, such as ImageNet, COCO, Visual Wake Words, Google Speech Commands, etc. provide a standardized performance metric, they might not capture the diversity and unpredictability of real-world data. Two facial recognition models with similar benchmark scores might exhibit varied competencies when faced with diverse ethnic backgrounds or challenging lighting conditions. Such disparities underscore the importance of robustness and consistency across varied data. For example, the image below from the Dollar Street dataset shows stove images across extreme monthly incomes.

+

+

+

+In essence, a thorough comparative analysis transcends numerical metrics. It's a holistic assessment, intertwined with real-world applications, costs, and the intricate subtleties that each model brings to the table. This is why it becomes important to have standard benchmarks and metrics that are widely estabslished and adopted by the community.

## Conclusion

-Explanation: This section synthesizes the information presented throughout the chapter, offering a coherent summary, and emphasizing the critical takeaways. It helps consolidate the knowledge acquired, setting the stage for the subsequent chapters on optimization and deployment.

\ No newline at end of file

+Efficient AI is extremely important as we push towards broader and more diverse real-world deployment of machine learning. This chapter provided an overview, exploring the various methodologies and considerations behind achieving efficient AI, starting with the fundamental need, similarities and differences across cloud, edge, and TinyML systems.

+

+We saw that efficient model architectures can be useful for optimizations. Model compression techniques such as pruning, quantization, and knowledge distillation exist to help reduce computational demands and memory footprint without significantly impacting accuracy. Specialized hardware like TPUs and NN accelerators offer optimized silicon for the operations and data flow of neural networks. And efficient numerics strike a balance between precision and efficiency, enabling models to attain robust performance using minimal resources. In the subsequent chapters, we will dive deeper into each of these different topics and explore them in great depth and detail.

+

+Together, these form a holistic framework for efficient AI. But the journey doesn't end here. Achieving optimally efficient intelligence requires continued research and innovation. As models become more sophisticated, datasets grow larger, and applications diversify into specialized domains, efficiency must evolve in lockstep. Measuring real-world impact would need nuanced benchmarks and standardized metrics beyond simplistic accuracy figures.

+

+Moreover, efficient AI expands beyond technological optimization but also encompasses costs, environmental impact, and ethical considerations for the broader societal good. As AI permeates across industries and daily lives, a comprehensive outlook on efficiency underpins its sustainable and responsible progress. The subsequent chapters will build upon these foundational concepts, providing actionable insights and hands-on best practices for developing and deploying efficient AI solutions.

\ No newline at end of file

diff --git a/hw_acceleration.qmd b/hw_acceleration.qmd

index c3ca10a3..33d25551 100644

--- a/hw_acceleration.qmd

+++ b/hw_acceleration.qmd

@@ -1,4 +1,4 @@

-# AI Acceleration

+# AI Acceleration {#sec-ai_hw}

## Introduction

diff --git a/references.bib b/references.bib

index 498acd34..02e3795a 100644

--- a/references.bib

+++ b/references.bib

@@ -7,6 +7,55 @@ @misc{Thefutur92:online

note = {(Accessed on 09/16/2023)}

}

+@book{barroso2019datacenter,

+ title={The datacenter as a computer: Designing warehouse-scale machines},

+ author={Barroso, Luiz Andr{\'e} and H{\"o}lzle, Urs and Ranganathan, Parthasarathy},

+ year={2019},

+ publisher={Springer Nature}

+}

+

+@article{howard2017mobilenets,

+ title={Mobilenets: Efficient convolutional neural networks for mobile vision applications},

+ author={Howard, Andrew G and Zhu, Menglong and Chen, Bo and Kalenichenko, Dmitry and Wang, Weijun and Weyand, Tobias and Andreetto, Marco and Adam, Hartwig},

+ journal={arXiv preprint arXiv:1704.04861},

+ year={2017}

+}

+

+@inproceedings{he2016deep,

+ title={Deep residual learning for image recognition},

+ author={He, Kaiming and Zhang, Xiangyu and Ren, Shaoqing and Sun, Jian},

+ booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},

+ pages={770--778},

+ year={2016}

+}

+

+@inproceedings{jouppi2017datacenter,

+ title={In-datacenter performance analysis of a tensor processing unit},

+ author={Jouppi, Norman P and Young, Cliff and Patil, Nishant and Patterson, David and Agrawal, Gaurav and Bajwa, Raminder and Bates, Sarah and Bhatia, Suresh and Boden, Nan and Borchers, Al and others},

+ booktitle={Proceedings of the 44th annual international symposium on computer architecture},

+ pages={1--12},

+ year={2017}

+}

+

+@article{iandola2016squeezenet,

+ title={SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and< 0.5 MB model size},

+ author={Iandola, Forrest N and Han, Song and Moskewicz, Matthew W and Ashraf, Khalid and Dally, William J and Keutzer, Kurt},

+ journal={arXiv preprint arXiv:1602.07360},

+ year={2016}

+}

+

+@article{li2019edge,

+ title={Edge AI: On-demand accelerating deep neural network inference via edge computing},

+ author={Li, En and Zeng, Liekang and Zhou, Zhi and Chen, Xu},

+ journal={IEEE Transactions on Wireless Communications},

+ volume={19},

+ number={1},

+ pages={447--457},

+ year={2019},

+ publisher={IEEE}

+}

+

+

@book{rosenblatt1957perceptron,

title={The perceptron, a perceiving and recognizing automaton Project Para},

author={Rosenblatt, Frank},