Add all 2024 conference sessions to your calendar. You can add this address to your online calendaring system if you want to receive updates dynamically.

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

VIS Full Papers

Look, Learn, Language Models

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Nicole Sultanum

6 presentations in this session. See more »

VIS Full Papers

Where the Networks Are

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Oliver Deussen

6 presentations in this session. See more »

VIS Full Papers

Human and Machine Visualization Literacy

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Bum Chul Kwon

6 presentations in this session. See more »

VIS Full Papers

Flow, Topology, and Uncertainty

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Bei Wang

6 presentations in this session. See more »

2024-10-18T14:15:00Z – 2024-10-18T15:00:00Z

Conference Events

Test of Time Awards

2024-10-18T14:15:00Z – 2024-10-18T15:00:00Z

Chair: Ross Maciejewski

1 presentations in this session. See more »

2024-10-18T15:00:00Z – 2024-10-18T16:30:00Z

Conference Events

IEEE VIS Capstone and Closing

2024-10-18T15:00:00Z – 2024-10-18T16:30:00Z

Chair: Paul Rosen, Kristi Potter, Remco Chang

3 presentations in this session. See more »

2024-10-15T12:30:00Z – 2024-10-15T13:45:00Z

Conference Events

Opening Session

2024-10-15T12:30:00Z – 2024-10-15T13:45:00Z

Chair: Paul Rosen, Kristi Potter, Remco Chang

2 presentations in this session. See more »

2024-10-15T14:15:00Z – 2024-10-15T15:45:00Z

VIS Short Papers

VGTC Awards & Best Short Papers

2024-10-15T14:15:00Z – 2024-10-15T15:45:00Z

Chair: Chaoli Wang

4 presentations in this session. See more »

2024-10-15T15:35:00Z – 2024-10-15T16:00:00Z

Conference Events

VIS Governance

2024-10-15T15:35:00Z – 2024-10-15T16:00:00Z

Chair: Petra Isenberg, Jean-Daniel Fekete

2 presentations in this session. See more »

2024-10-15T16:00:00Z – 2024-10-15T17:30:00Z

VIS Full Papers

Best Full Papers

2024-10-15T16:00:00Z – 2024-10-15T17:30:00Z

Chair: Claudio Silva

6 presentations in this session. See more »

2024-10-15T18:00:00Z – 2024-10-15T19:00:00Z

VIS Arts Program

VISAP Keynote: The Golden Age of Visualization Dissensus

2024-10-15T18:00:00Z – 2024-10-15T19:00:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner, Alberto Cairo

0 presentations in this session. See more »

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

Conference Events

Posters

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

0 presentations in this session. See more »

VIS Arts Program

VISAP Artist Talks

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

16 presentations in this session. See more »

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

VIS Full Papers

Visualization Recommendation

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Johannes Knittel

6 presentations in this session. See more »

VIS Full Papers

Model-checking and Validation

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Michael Correll

6 presentations in this session. See more »

VIS Full Papers

Embeddings and Document Spatialization

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Alex Endert

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Perception and Representation

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Anjana Arunkumar

8 presentations in this session. See more »

VIS Panels

Panel: Human-Centered Computing Research in South America: Status Quo, Opportunities, and Challenges

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Chaoli Wang

0 presentations in this session. See more »

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

VIS Full Papers

Applications: Sports. Games, and Finance

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Marc Streit

6 presentations in this session. See more »

VIS Full Papers

Visual Design: Sketching and Labeling

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Jonathan C. Roberts

6 presentations in this session. See more »

VIS Full Papers

Topological Data Analysis

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Ingrid Hotz

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Text and Multimedia

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Min Lu

8 presentations in this session. See more »

VIS Panels

Panel: (Yet Another) Evaluation Needed? A Panel Discussion on Evaluation Trends in Visualization

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Ghulam Jilani Quadri, Danielle Albers Szafir, Arran Zeyu Wang, Hyeon Jeon

0 presentations in this session. See more »

VIS Arts Program

VISAP Pictorials

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

8 presentations in this session. See more »

2024-10-17T15:30:00Z – 2024-10-17T16:00:00Z

Conference Events

IEEE VIS 2025 Kickoff

2024-10-17T15:30:00Z – 2024-10-17T16:00:00Z

Chair: Johanna Schmidt, Kresimir Matković, Barbora Kozlíková, Eduard Gröller

1 presentations in this session. See more »

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

VIS Full Papers

Once Upon a Visualization

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Marti Hearst

6 presentations in this session. See more »

VIS Full Papers

Visualization Design Methods

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Miriah Meyer

6 presentations in this session. See more »

VIS Full Papers

The Toolboxes of Visualization

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Dominik Moritz

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Analytics and Applications

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Anna Vilanova

8 presentations in this session. See more »

CG&A Invited Partnership Presentations

CG&A: Systems, Theory, and Evaluations

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Francesca Samsel

6 presentations in this session. See more »

VIS Panels

Panel: Vogue or Visionary? Current Challenges and Future Opportunities in Situated Visualizations

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Michelle A. Borkin, Melanie Tory

0 presentations in this session. See more »

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

VIS Full Papers

Journalism and Public Policy

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Sungahn Ko

6 presentations in this session. See more »

VIS Full Papers

Applications: Industry, Computing, and Medicine

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Joern Kohlhammer

6 presentations in this session. See more »

VIS Full Papers

Accessibility and Touch

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Narges Mahyar

6 presentations in this session. See more »

VIS Full Papers

Motion and Animated Notions

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Catherine d'Ignazio

6 presentations in this session. See more »

VIS Short Papers

Short Papers: AI and LLM

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Cindy Xiong Bearfield

8 presentations in this session. See more »

VIS Panels

Panel: Dear Younger Me: A Dialog About Professional Development Beyond The Initial Career Phases

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Robert M Kirby, Michael Gleicher

0 presentations in this session. See more »

2024-10-16T12:30:00Z – 2024-10-16T13:30:00Z

VIS Full Papers

Virtual: VIS from around the world

2024-10-16T12:30:00Z – 2024-10-16T13:30:00Z

Chair: Mahmood Jasim

6 presentations in this session. See more »

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

VIS Full Papers

Text, Annotation, and Metaphor

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Melanie Tory

6 presentations in this session. See more »

VIS Full Papers

Immersive Visualization and Visual Analytics

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Lingyun Yu

6 presentations in this session. See more »

VIS Full Papers

Machine Learning for Visualization

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Joshua Levine

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Graph, Hierarchy and Multidimensional

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Alfie Abdul-Rahman

8 presentations in this session. See more »

VIS Panels

Panel: What Do Visualization Art Projects Bring to the VIS Community?

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Xinhuan Shu, Yifang Wang, Junxiu Tang

0 presentations in this session. See more »

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

VIS Full Papers

Biological Data Visualization

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Nils Gehlenborg

6 presentations in this session. See more »

VIS Full Papers

Judgment and Decision-making

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Wenwen Dou

6 presentations in this session. See more »

VIS Full Papers

Time and Sequences

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Silvia Miksch

6 presentations in this session. See more »

VIS Full Papers

Dimensionality Reduction

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Jian Zhao

6 presentations in this session. See more »

VIS Full Papers

Urban Planning, Construction, and Disaster Management

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Siming Chen

6 presentations in this session. See more »

VIS Arts Program

VISAP Papers

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

6 presentations in this session. See more »

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

VIS Full Papers

Natural Language and Multimodal Interaction

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Ana Crisan

6 presentations in this session. See more »

VIS Full Papers

Collaboration and Communication

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Vidya Setlur

6 presentations in this session. See more »

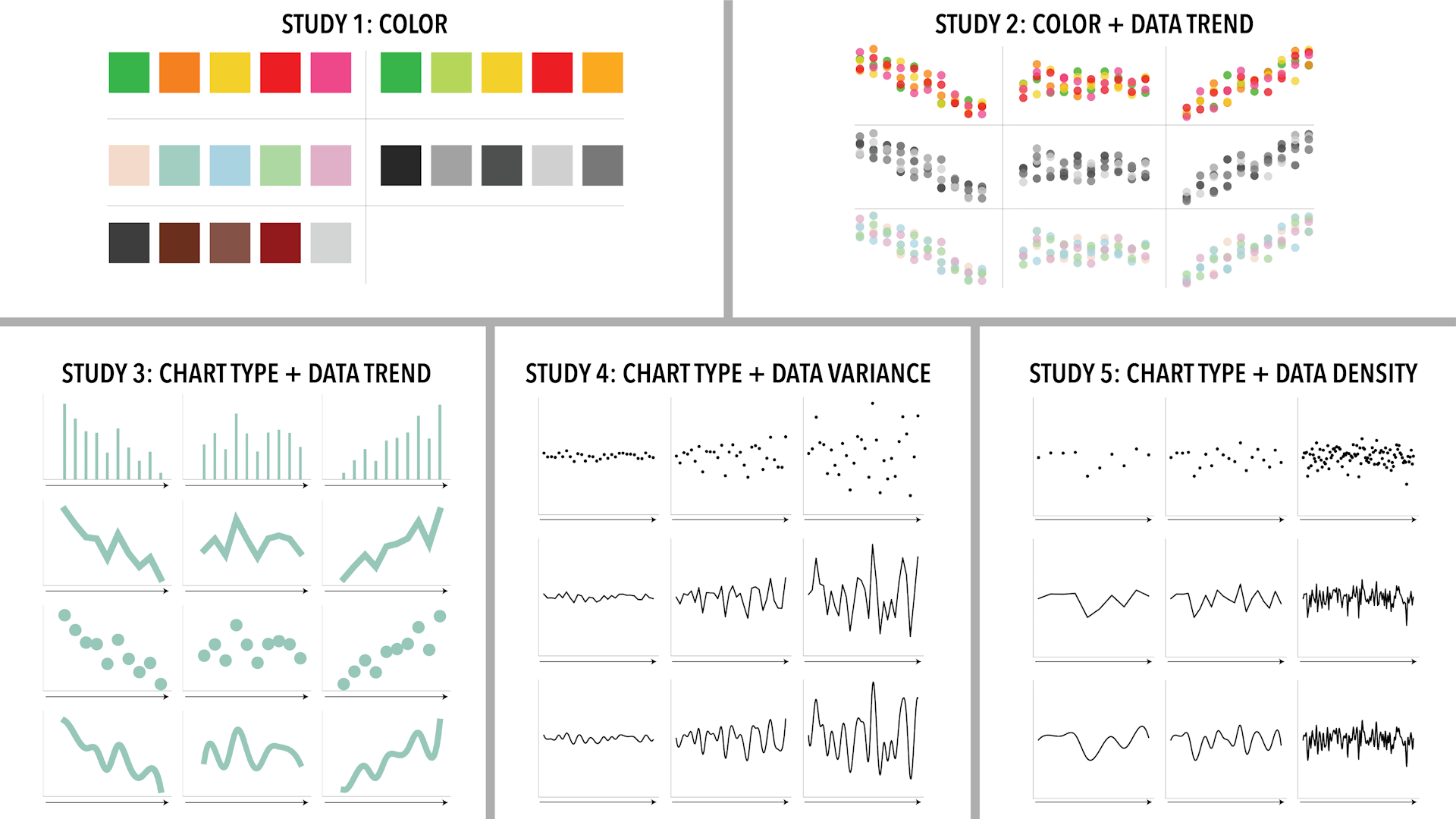

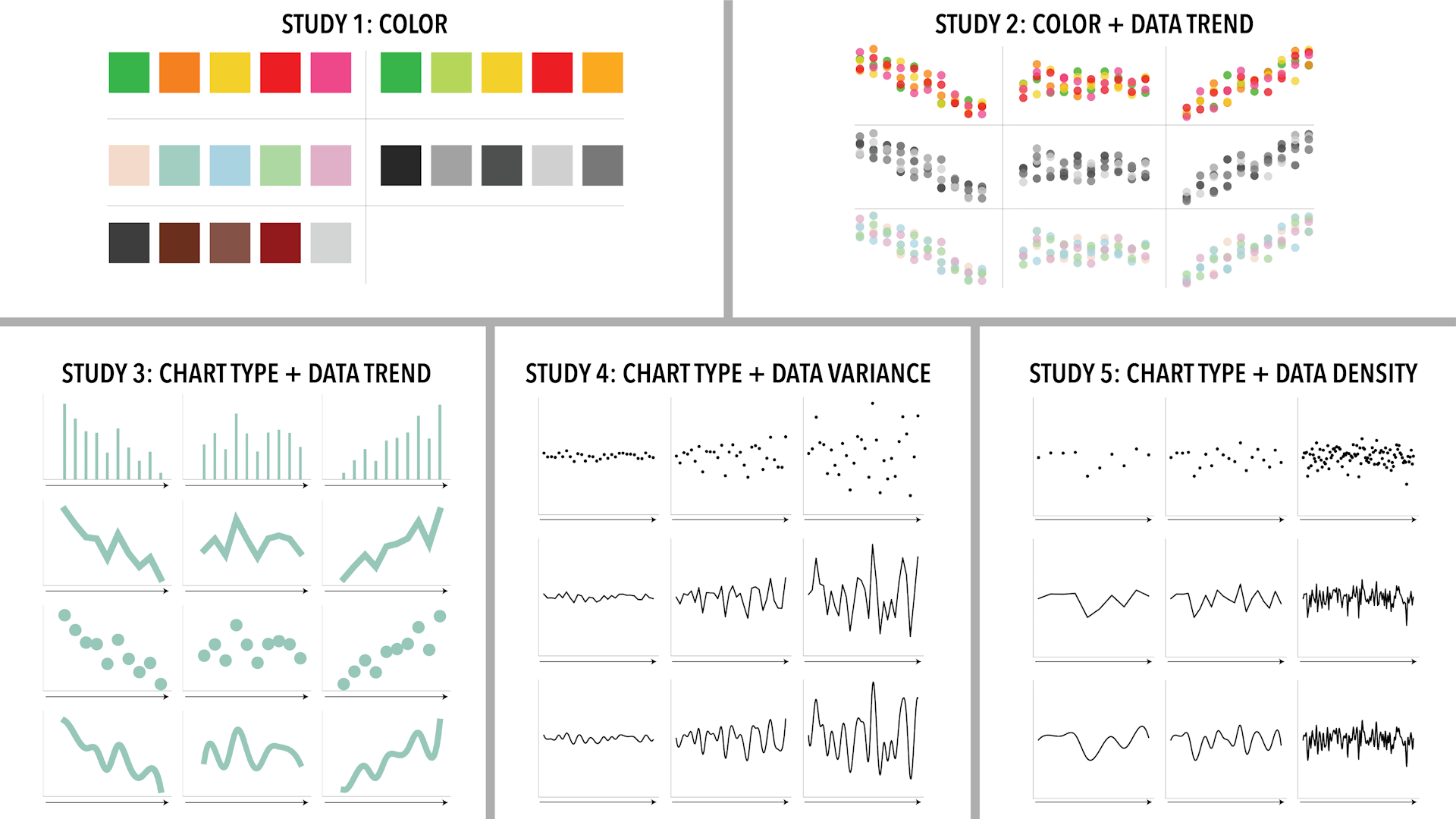

VIS Full Papers

Perception and Cognition

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Bernhard Preim

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Scientific and Immersive Visualization

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Bei Wang

8 presentations in this session. See more »

CG&A Invited Partnership Presentations

CG&A: Analytics and Applications

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Bruce Campbell

6 presentations in this session. See more »

VIS Panels

Panel: 20 Years of Visual Analytics

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: David Ebert, Wolfgang Jentner, Ross Maciejewski, Jieqiong Zhao

0 presentations in this session. See more »

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

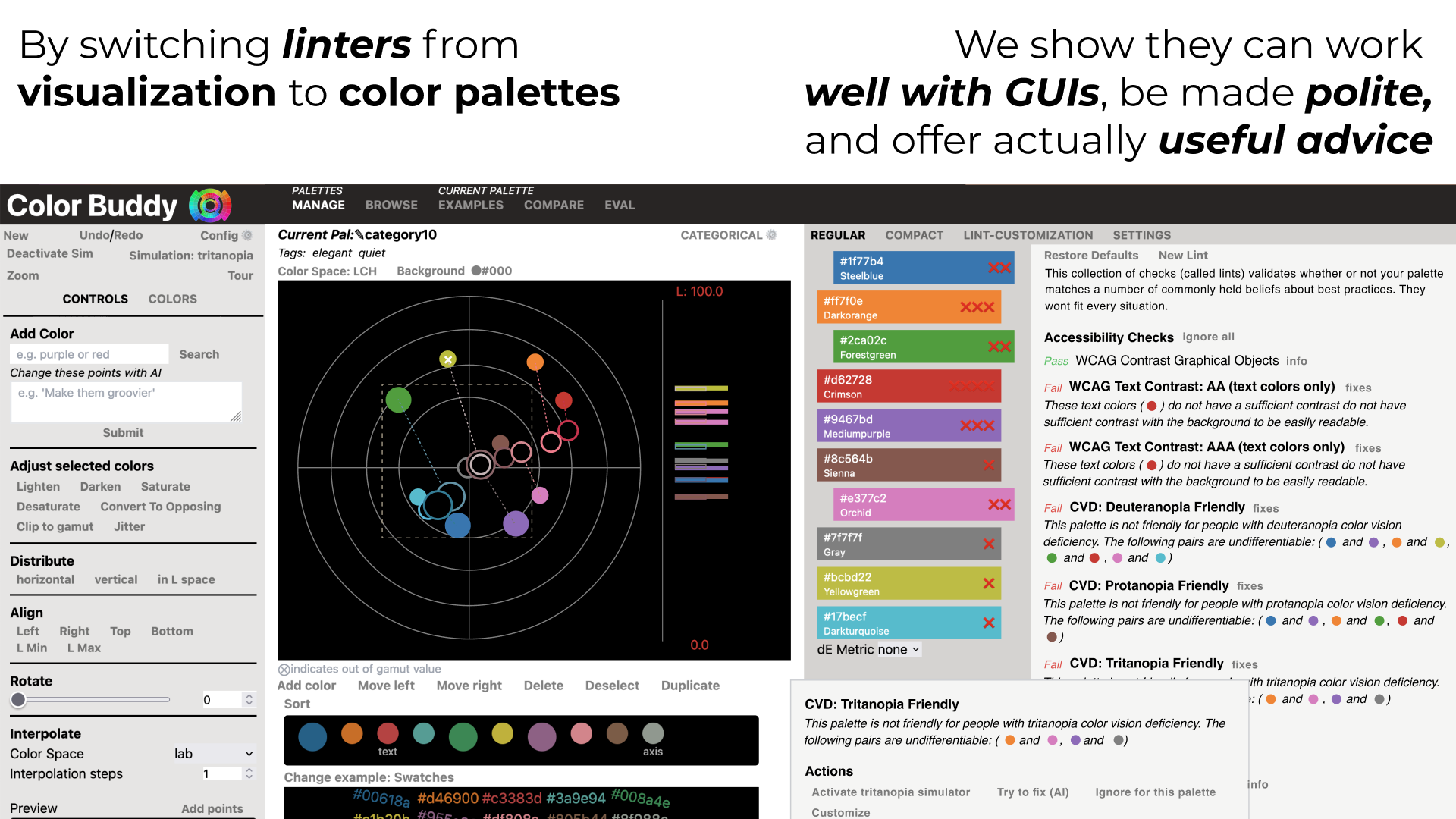

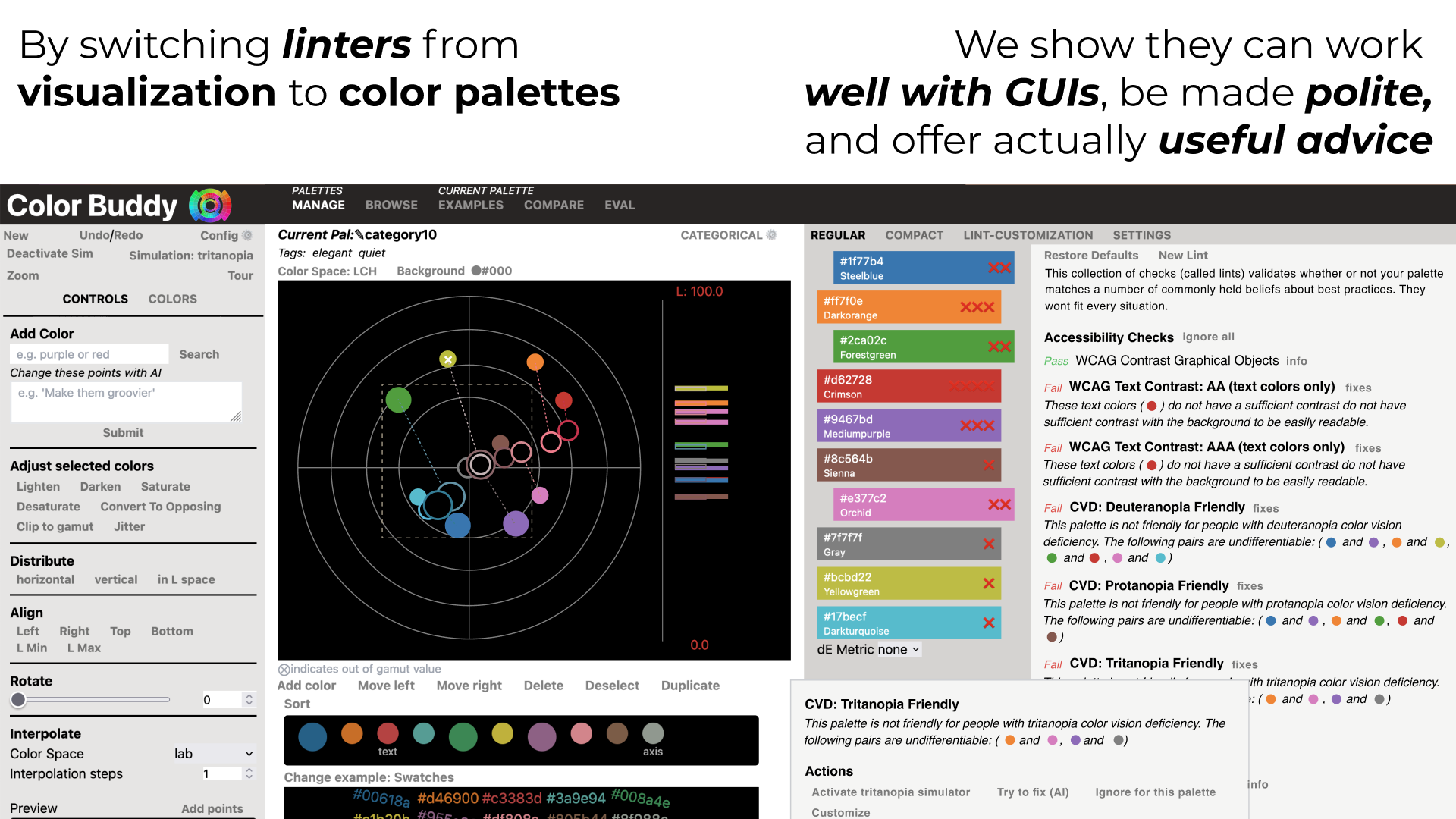

VIS Full Papers

Designing Palettes and Encodings

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Khairi Rheda

6 presentations in this session. See more »

VIS Full Papers

Of Nodes and Networks

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Carolina Nobre

6 presentations in this session. See more »

VIS Full Papers

Scripts, Notebooks, and Provenance

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Alex Lex

6 presentations in this session. See more »

VIS Short Papers

Short Papers: System design

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Chris Bryan

8 presentations in this session. See more »

VIS Panels

Panel: Past, Present, and Future of Data Storytelling

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Haotian Li, Yun Wang, Benjamin Bach, Sheelagh Carpendale, Fanny Chevalier, Nathalie Riche

0 presentations in this session. See more »

Application Spotlights

Application Spotlight: Visualization within the Department of Energy

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Ana Crisan, Menna El-Assady

0 presentations in this session. See more »

2024-10-16T19:00:00Z – 2024-10-16T19:30:00Z

Conference Events

IEEE VIS Town Hall

2024-10-16T19:00:00Z – 2024-10-16T19:30:00Z

Chair: Ross Maciejewski

0 presentations in this session. See more »

2024-10-16T19:30:00Z – 2024-10-16T20:30:00Z

VIS Panels

Panel: VIS Conference Futures: Community Opinions on Recent Experiences, Challenges, and Opportunities for Hybrid Event Formats

2024-10-16T19:30:00Z – 2024-10-16T20:30:00Z

Chair: Matthew Brehmer, Narges Mahyar

0 presentations in this session. See more »

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

VAST Challenge

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: R. Jordan Crouser, Steve Gomez, Jereme Haack

13 presentations in this session. See more »

VISxAI: 7th Workshop on Visualization for AI Explainability

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Alex Bäuerle, Angie Boggust, Fred Hohman

12 presentations in this session. See more »

1st Workshop on Accessible Data Visualization

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Brianna Wimer, Laura South

7 presentations in this session. See more »

First-Person Visualizations for Outdoor Physical Activities: Challenges and Opportunities

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Charles Perin, Tica Lin, Lijie Yao, Yalong Yang, Maxime Cordeil, Wesley Willett

0 presentations in this session. See more »

EduVis: Workshop on Visualization Education, Literacy, and Activities

EduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 1)

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach

3 presentations in this session. See more »

Visualization Analysis and Design

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Tamara Munzner

0 presentations in this session. See more »

Developing Immersive and Collaborative Visualizations with Web-Technologies

Developing Immersive and Collaborative Visualizations with Web Technologies

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: David Saffo

0 presentations in this session. See more »

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

VDS: Visualization in Data Science Symposium

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Ana Crisan, Dylan Cashman

6 presentations in this session. See more »

LDAV: 13th IEEE Symposium on Large Data Analysis and Visualization

LDAV: 14th IEEE Symposium on Large Data Analysis and Visualization

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Silvio Rizzi, Gunther Weber, Guido Reina, Ken Moreland

6 presentations in this session. See more »

Bio+MedVis Challenges

Bio+Med+Vis Workshop

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Barbora Kozlikova, Nils Gehlenborg, Laura Garrison, Eric Mörth, Morgan Turner, Simon Warchol

6 presentations in this session. See more »

Workshop on Data Storytelling in an Era of Generative AI

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Xingyu Lan, Leni Yang, Zezhong Wang, Yun Wang, Danqing Shi, Sheelagh Carpendale

4 presentations in this session. See more »

EduVis: Workshop on Visualization Education, Literacy, and Activities

EduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 2)

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Jillian Aurisano, Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach, Jonathan Roberts, Christina Stoiber, Magdalena Boucher, Lily Ge

3 presentations in this session. See more »

Generating Color Schemes for your Data Visualizations

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Theresa-Marie Rhyne

0 presentations in this session. See more »

Running Online User Studies with the reVISit Framework

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Jack Wilburn

0 presentations in this session. See more »

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

VisInPractice

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Arjun Srinivasan, Ayan Biswas

0 presentations in this session. See more »

SciVis Contest

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Karen Bemis, Tim Gerrits

0 presentations in this session. See more »

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Session 1)

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

6 presentations in this session. See more »

Progressive Data Analysis and Visualization (PDAV) Workshop.

Progressive Data Analysis and Visualization (PDAV) Workshop

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Alex Ulmer, Jaemin Jo, Michael Sedlmair, Jean-Daniel Fekete

3 presentations in this session. See more »

Uncertainty Visualization: Applications, Techniques, Software, and Decision Frameworks

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Tushar M. Athawale, Chris R. Johnson, Kristi Potter, Paul Rosen, David Pugmire

12 presentations in this session. See more »

Visualization for Climate Action and Sustainability

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Benjamin Bach, Fanny Chevalier, Helen-Nicole Kostis, Mark SubbaRao, Yvonne Jansen, Robert Soden

13 presentations in this session. See more »

LLM4Vis: Large Language Models for Information Visualization

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Enamul Hoque

0 presentations in this session. See more »

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

TopoInVis: Workshop on Topological Data Analysis and Visualization

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Federico Iuricich, Yue Zhang

6 presentations in this session. See more »

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Sesssion 2)

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

11 presentations in this session. See more »

NLVIZ Workshop: Exploring Research Opportunities for Natural Language, Text, and Data Visualization

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Vidya Setlur, Arjun Srinivasan

11 presentations in this session. See more »

EnergyVis 2024: 4th Workshop on Energy Data Visualization

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Kenny Gruchalla, Anjana Arunkumar, Sarah Goodwin, Arnaud Prouzeau, Lyn Bartram

11 presentations in this session. See more »

VISions of the Future: Workshop on Sustainable Practices within Visualization and Physicalisation

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Georgia Panagiotidou, Luiz Morais, Sarah Hayes, Derya Akbaba, Tatiana Losev, Andrew McNutt

5 presentations in this session. See more »

Enabling Scientific Discovery: A Tutorial for Harnessing the Power of the National Science Data Fabric for Large-Scale Data Analysis

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Amy Gooch

0 presentations in this session. See more »

Preparing, Conducting, and Analyzing Participatory Design Sessions for Information Visualizations

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Adriana Arcia

0 presentations in this session. See more »

Add all 2024 conference sessions to your calendar. You can add this address to your online calendaring system if you want to receive updates dynamically.

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

VIS Full Papers

Look, Learn, Language Models

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Nicole Sultanum

6 presentations in this session. See more »

VIS Full Papers

Where the Networks Are

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Oliver Deussen

6 presentations in this session. See more »

VIS Full Papers

Human and Machine Visualization Literacy

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Bum Chul Kwon

6 presentations in this session. See more »

VIS Full Papers

Flow, Topology, and Uncertainty

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

Chair: Bei Wang

6 presentations in this session. See more »

2024-10-18T14:15:00Z – 2024-10-18T15:00:00Z

Conference Events

Test of Time Awards

2024-10-18T14:15:00Z – 2024-10-18T15:00:00Z

Chair: Ross Maciejewski

1 presentations in this session. See more »

2024-10-18T15:00:00Z – 2024-10-18T16:30:00Z

Conference Events

IEEE VIS Capstone and Closing

2024-10-18T15:00:00Z – 2024-10-18T16:30:00Z

Chair: Paul Rosen, Kristi Potter, Remco Chang

3 presentations in this session. See more »

2024-10-15T12:30:00Z – 2024-10-15T13:45:00Z

Conference Events

Opening Session

2024-10-15T12:30:00Z – 2024-10-15T13:45:00Z

Chair: Paul Rosen, Kristi Potter, Remco Chang

2 presentations in this session. See more »

2024-10-15T14:15:00Z – 2024-10-15T15:45:00Z

VIS Short Papers

VGTC Awards & Best Short Papers

2024-10-15T14:15:00Z – 2024-10-15T15:45:00Z

Chair: Chaoli Wang

4 presentations in this session. See more »

2024-10-15T15:35:00Z – 2024-10-15T16:00:00Z

Conference Events

VIS Governance

2024-10-15T15:35:00Z – 2024-10-15T16:00:00Z

Chair: Petra Isenberg, Jean-Daniel Fekete

2 presentations in this session. See more »

2024-10-15T16:00:00Z – 2024-10-15T17:30:00Z

VIS Full Papers

Best Full Papers

2024-10-15T16:00:00Z – 2024-10-15T17:30:00Z

Chair: Claudio Silva

6 presentations in this session. See more »

2024-10-15T18:00:00Z – 2024-10-15T19:00:00Z

VIS Arts Program

VISAP Keynote: The Golden Age of Visualization Dissensus

2024-10-15T18:00:00Z – 2024-10-15T19:00:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner, Alberto Cairo

0 presentations in this session. See more »

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

Conference Events

Posters

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

0 presentations in this session. See more »

VIS Arts Program

VISAP Artist Talks

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

16 presentations in this session. See more »

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

VIS Full Papers

Visualization Recommendation

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Johannes Knittel

6 presentations in this session. See more »

VIS Full Papers

Model-checking and Validation

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Michael Correll

6 presentations in this session. See more »

VIS Full Papers

Embeddings and Document Spatialization

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Alex Endert

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Perception and Representation

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Anjana Arunkumar

8 presentations in this session. See more »

VIS Panels

Panel: Human-Centered Computing Research in South America: Status Quo, Opportunities, and Challenges

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

Chair: Chaoli Wang

0 presentations in this session. See more »

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

VIS Full Papers

Applications: Sports. Games, and Finance

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Marc Streit

6 presentations in this session. See more »

VIS Full Papers

Visual Design: Sketching and Labeling

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Jonathan C. Roberts

6 presentations in this session. See more »

VIS Full Papers

Topological Data Analysis

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Ingrid Hotz

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Text and Multimedia

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Min Lu

8 presentations in this session. See more »

VIS Panels

Panel: (Yet Another) Evaluation Needed? A Panel Discussion on Evaluation Trends in Visualization

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Ghulam Jilani Quadri, Danielle Albers Szafir, Arran Zeyu Wang, Hyeon Jeon

0 presentations in this session. See more »

VIS Arts Program

VISAP Pictorials

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

8 presentations in this session. See more »

2024-10-17T15:30:00Z – 2024-10-17T16:00:00Z

Conference Events

IEEE VIS 2025 Kickoff

2024-10-17T15:30:00Z – 2024-10-17T16:00:00Z

Chair: Johanna Schmidt, Kresimir Matković, Barbora Kozlíková, Eduard Gröller

1 presentations in this session. See more »

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

VIS Full Papers

Once Upon a Visualization

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Marti Hearst

6 presentations in this session. See more »

VIS Full Papers

Visualization Design Methods

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Miriah Meyer

6 presentations in this session. See more »

VIS Full Papers

The Toolboxes of Visualization

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Dominik Moritz

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Analytics and Applications

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Anna Vilanova

8 presentations in this session. See more »

CG&A Invited Partnership Presentations

CG&A: Systems, Theory, and Evaluations

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Francesca Samsel

6 presentations in this session. See more »

VIS Panels

Panel: Vogue or Visionary? Current Challenges and Future Opportunities in Situated Visualizations

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

Chair: Michelle A. Borkin, Melanie Tory

0 presentations in this session. See more »

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

VIS Full Papers

Journalism and Public Policy

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Sungahn Ko

6 presentations in this session. See more »

VIS Full Papers

Applications: Industry, Computing, and Medicine

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Joern Kohlhammer

6 presentations in this session. See more »

VIS Full Papers

Accessibility and Touch

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Narges Mahyar

6 presentations in this session. See more »

VIS Full Papers

Motion and Animated Notions

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Catherine d'Ignazio

6 presentations in this session. See more »

VIS Short Papers

Short Papers: AI and LLM

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Cindy Xiong Bearfield

8 presentations in this session. See more »

VIS Panels

Panel: Dear Younger Me: A Dialog About Professional Development Beyond The Initial Career Phases

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

Chair: Robert M Kirby, Michael Gleicher

0 presentations in this session. See more »

2024-10-16T12:30:00Z – 2024-10-16T13:30:00Z

VIS Full Papers

Virtual: VIS from around the world

2024-10-16T12:30:00Z – 2024-10-16T13:30:00Z

Chair: Mahmood Jasim

6 presentations in this session. See more »

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

VIS Full Papers

Text, Annotation, and Metaphor

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Melanie Tory

6 presentations in this session. See more »

VIS Full Papers

Immersive Visualization and Visual Analytics

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Lingyun Yu

6 presentations in this session. See more »

VIS Full Papers

Machine Learning for Visualization

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Joshua Levine

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Graph, Hierarchy and Multidimensional

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Alfie Abdul-Rahman

8 presentations in this session. See more »

VIS Panels

Panel: What Do Visualization Art Projects Bring to the VIS Community?

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

Chair: Xinhuan Shu, Yifang Wang, Junxiu Tang

0 presentations in this session. See more »

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

VIS Full Papers

Biological Data Visualization

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Nils Gehlenborg

6 presentations in this session. See more »

VIS Full Papers

Judgment and Decision-making

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Wenwen Dou

6 presentations in this session. See more »

VIS Full Papers

Time and Sequences

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Silvia Miksch

6 presentations in this session. See more »

VIS Full Papers

Dimensionality Reduction

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Jian Zhao

6 presentations in this session. See more »

VIS Full Papers

Urban Planning, Construction, and Disaster Management

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Siming Chen

6 presentations in this session. See more »

VIS Arts Program

VISAP Papers

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

6 presentations in this session. See more »

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

VIS Full Papers

Natural Language and Multimodal Interaction

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Ana Crisan

6 presentations in this session. See more »

VIS Full Papers

Collaboration and Communication

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Vidya Setlur

6 presentations in this session. See more »

VIS Full Papers

Perception and Cognition

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Tamara Munzner

6 presentations in this session. See more »

VIS Short Papers

Short Papers: Scientific and Immersive Visualization

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Bei Wang

8 presentations in this session. See more »

CG&A Invited Partnership Presentations

CG&A: Analytics and Applications

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: Bruce Campbell

6 presentations in this session. See more »

VIS Panels

Panel: 20 Years of Visual Analytics

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

Chair: David Ebert, Wolfgang Jentner, Ross Maciejewski, Jieqiong Zhao

0 presentations in this session. See more »

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

VIS Full Papers

Designing Palettes and Encodings

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Khairi Rheda

6 presentations in this session. See more »

VIS Full Papers

Of Nodes and Networks

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Carolina Nobre

6 presentations in this session. See more »

VIS Full Papers

Scripts, Notebooks, and Provenance

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Alex Lex

6 presentations in this session. See more »

VIS Short Papers

Short Papers: System design

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Chris Bryan

8 presentations in this session. See more »

VIS Panels

Panel: Past, Present, and Future of Data Storytelling

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Haotian Li, Yun Wang, Benjamin Bach, Sheelagh Carpendale, Fanny Chevalier, Nathalie Riche

0 presentations in this session. See more »

Application Spotlights

Application Spotlight: Visualization within the Department of Energy

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

Chair: Ana Crisan, Menna El-Assady

0 presentations in this session. See more »

2024-10-16T19:00:00Z – 2024-10-16T19:30:00Z

Conference Events

IEEE VIS Town Hall

2024-10-16T19:00:00Z – 2024-10-16T19:30:00Z

Chair: Ross Maciejewski

0 presentations in this session. See more »

2024-10-16T19:30:00Z – 2024-10-16T20:30:00Z

VIS Panels

Panel: VIS Conference Futures: Community Opinions on Recent Experiences, Challenges, and Opportunities for Hybrid Event Formats

2024-10-16T19:30:00Z – 2024-10-16T20:30:00Z

Chair: Matthew Brehmer, Narges Mahyar

0 presentations in this session. See more »

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

VAST Challenge

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: R. Jordan Crouser, Steve Gomez, Jereme Haack

10 presentations in this session. See more »

VISxAI: 7th Workshop on Visualization for AI Explainability

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Alex Bäuerle, Angie Boggust, Fred Hohman

12 presentations in this session. See more »

1st Workshop on Accessible Data Visualization

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Brianna Wimer, Laura South

7 presentations in this session. See more »

First-Person Visualizations for Outdoor Physical Activities: Challenges and Opportunities

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Charles Perin, Tica Lin, Lijie Yao, Yalong Yang, Maxime Cordeil, Wesley Willett

0 presentations in this session. See more »

EduVis: Workshop on Visualization Education, Literacy, and Activities

EduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 1)

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach, Jonathan Roberts, Christina Stoiber, Magdalena Boucher, Lily Ge

3 presentations in this session. See more »

Visualization Analysis and Design

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: Tamara Munzner

0 presentations in this session. See more »

Developing Immersive and Collaborative Visualizations with Web-Technologies

Developing Immersive and Collaborative Visualizations with Web Technologies

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: David Saffo

0 presentations in this session. See more »

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

VDS: Visualization in Data Science Symposium

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Ana Crisan, Dylan Cashman, Saugat Pandey, Alvitta Ottley, John E Wenskovitch

6 presentations in this session. See more »

LDAV: 13th IEEE Symposium on Large Data Analysis and Visualization

LDAV: 14th IEEE Symposium on Large Data Analysis and Visualization

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Silvio Rizzi, Gunther Weber, Guido Reina, Ken Moreland

6 presentations in this session. See more »

Bio+MedVis Challenges

Bio+Med+Vis Workshop

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Barbora Kozlikova, Nils Gehlenborg, Laura Garrison, Eric Mörth, Morgan Turner, Simon Warchol

6 presentations in this session. See more »

Workshop on Data Storytelling in an Era of Generative AI

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Xingyu Lan, Leni Yang, Zezhong Wang, Yun Wang, Danqing Shi, Sheelagh Carpendale

4 presentations in this session. See more »

EduVis: Workshop on Visualization Education, Literacy, and Activities

EduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 2)

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Jillian Aurisano, Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach, Jonathan Roberts, Christina Stoiber, Magdalena Boucher, Lily Ge

3 presentations in this session. See more »

Generating Color Schemes for your Data Visualizations

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Theresa-Marie Rhyne

0 presentations in this session. See more »

Running Online User Studies with the reVISit Framework

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

Chair: Jack Wilburn

0 presentations in this session. See more »

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

VisInPractice

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Arjun Srinivasan, Ayan Biswas

0 presentations in this session. See more »

SciVis Contest

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Karen Bemis, Tim Gerrits

3 presentations in this session. See more »

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Session 1)

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

6 presentations in this session. See more »

Progressive Data Analysis and Visualization (PDAV) Workshop.

Progressive Data Analysis and Visualization (PDAV) Workshop

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Alex Ulmer, Jaemin Jo, Michael Sedlmair, Jean-Daniel Fekete

3 presentations in this session. See more »

Uncertainty Visualization: Applications, Techniques, Software, and Decision Frameworks

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Tushar M. Athawale, Chris R. Johnson, Kristi Potter, Paul Rosen, David Pugmire

12 presentations in this session. See more »

Visualization for Climate Action and Sustainability

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Benjamin Bach, Fanny Chevalier, Helen-Nicole Kostis, Mark SubbaRao, Yvonne Jansen, Robert Soden

13 presentations in this session. See more »

LLM4Vis: Large Language Models for Information Visualization

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Enamul Hoque

0 presentations in this session. See more »

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

TopoInVis: Workshop on Topological Data Analysis and Visualization

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Federico Iuricich, Yue Zhang

6 presentations in this session. See more »

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization

BELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Sesssion 2)

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

11 presentations in this session. See more »

NLVIZ Workshop: Exploring Research Opportunities for Natural Language, Text, and Data Visualization

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Vidya Setlur, Arjun Srinivasan

11 presentations in this session. See more »

EnergyVis 2024: 4th Workshop on Energy Data Visualization

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Kenny Gruchalla, Anjana Arunkumar, Sarah Goodwin, Arnaud Prouzeau, Lyn Bartram

11 presentations in this session. See more »

VISions of the Future: Workshop on Sustainable Practices within Visualization and Physicalisation

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Georgia Panagiotidou, Luiz Morais, Sarah Hayes, Derya Akbaba, Tatiana Losev, Andrew McNutt

5 presentations in this session. See more »

Enabling Scientific Discovery: A Tutorial for Harnessing the Power of the National Science Data Fabric for Large-Scale Data Analysis

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Amy Gooch

0 presentations in this session. See more »

Preparing, Conducting, and Analyzing Participatory Design Sessions for Information Visualizations

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

Chair: Adriana Arcia

0 presentations in this session. See more »

- associated

VAST Challenge

Bayshore II

Chair: R. Jordan Crouser, Steve Gomez, Jereme Haack

13 presentations in this session. See more »

workshopVISxAI: 7th Workshop on Visualization for AI Explainability

Bayshore I

Chair: Alex Bäuerle, Angie Boggust, Fred Hohman

12 presentations in this session. See more »

workshop1st Workshop on Accessible Data Visualization

Bayshore V

Chair: Brianna Wimer, Laura South

7 presentations in this session. See more »

workshopFirst-Person Visualizations for Outdoor Physical Activities: Challenges and Opportunities

Bayshore VII

Chair: Charles Perin, Tica Lin, Lijie Yao, Yalong Yang, Maxime Cordeil, Wesley Willett

0 presentations in this session. See more »

workshopEduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 1)

Esplanade Suites I + II + III

Chair: Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach

3 presentations in this session. See more »

tutorialVisualization Analysis and Design

Bayshore VI

Chair: Tamara Munzner

0 presentations in this session. See more »

tutorialDeveloping Immersive and Collaborative Visualizations with Web Technologies

Bayshore III

Chair: David Saffo

0 presentations in this session. See more »

- associated

VDS: Visualization in Data Science Symposium

Bayshore I

Chair: Ana Crisan, Dylan Cashman

6 presentations in this session. See more »

associatedLDAV: 14th IEEE Symposium on Large Data Analysis and Visualization

Bayshore II

Chair: Silvio Rizzi, Gunther Weber, Guido Reina, Ken Moreland

6 presentations in this session. See more »

associatedBio+Med+Vis Workshop

Bayshore V

Chair: Barbora Kozlikova, Nils Gehlenborg, Laura Garrison, Eric Mörth, Morgan Turner, Simon Warchol

6 presentations in this session. See more »

workshopWorkshop on Data Storytelling in an Era of Generative AI

Bayshore VII

Chair: Xingyu Lan, Leni Yang, Zezhong Wang, Yun Wang, Danqing Shi, Sheelagh Carpendale

4 presentations in this session. See more »

workshopEduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 2)

Esplanade Suites I + II + III

Chair: Jillian Aurisano, Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach, Jonathan Roberts, Christina Stoiber, Magdalena Boucher, Lily Ge

3 presentations in this session. See more »

tutorialGenerating Color Schemes for your Data Visualizations

Bayshore VI

Chair: Theresa-Marie Rhyne

0 presentations in this session. See more »

tutorialRunning Online User Studies with the reVISit Framework

Bayshore III

Chair: Jack Wilburn

0 presentations in this session. See more »

- associated

VisInPractice

Bayshore III

Chair: Arjun Srinivasan, Ayan Biswas

0 presentations in this session. See more »

associatedSciVis Contest

Bayshore V

Chair: Karen Bemis, Tim Gerrits

0 presentations in this session. See more »

workshopBELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Session 1)

Bayshore I

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

6 presentations in this session. See more »

workshopProgressive Data Analysis and Visualization (PDAV) Workshop

Bayshore VII

Chair: Alex Ulmer, Jaemin Jo, Michael Sedlmair, Jean-Daniel Fekete

3 presentations in this session. See more »

workshopUncertainty Visualization: Applications, Techniques, Software, and Decision Frameworks

Bayshore VI

Chair: Tushar M. Athawale, Chris R. Johnson, Kristi Potter, Paul Rosen, David Pugmire

12 presentations in this session. See more »

workshopVisualization for Climate Action and Sustainability

Esplanade Suites I + II + III

Chair: Benjamin Bach, Fanny Chevalier, Helen-Nicole Kostis, Mark SubbaRao, Yvonne Jansen, Robert Soden

13 presentations in this session. See more »

tutorialLLM4Vis: Large Language Models for Information Visualization

Bayshore II

Chair: Enamul Hoque

0 presentations in this session. See more »

- workshop

TopoInVis: Workshop on Topological Data Analysis and Visualization

Bayshore III

Chair: Federico Iuricich, Yue Zhang

6 presentations in this session. See more »

workshopBELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Sesssion 2)

Bayshore I

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

11 presentations in this session. See more »

workshopNLVIZ Workshop: Exploring Research Opportunities for Natural Language, Text, and Data Visualization

Bayshore II

Chair: Vidya Setlur, Arjun Srinivasan

11 presentations in this session. See more »

workshopEnergyVis 2024: 4th Workshop on Energy Data Visualization

Bayshore VI

Chair: Kenny Gruchalla, Anjana Arunkumar, Sarah Goodwin, Arnaud Prouzeau, Lyn Bartram

11 presentations in this session. See more »

workshopVISions of the Future: Workshop on Sustainable Practices within Visualization and Physicalisation

Esplanade Suites I + II + III

Chair: Georgia Panagiotidou, Luiz Morais, Sarah Hayes, Derya Akbaba, Tatiana Losev, Andrew McNutt

5 presentations in this session. See more »

tutorialEnabling Scientific Discovery: A Tutorial for Harnessing the Power of the National Science Data Fabric for Large-Scale Data Analysis

Bayshore V

Chair: Amy Gooch

0 presentations in this session. See more »

tutorialPreparing, Conducting, and Analyzing Participatory Design Sessions for Information Visualizations

Bayshore VII

Chair: Adriana Arcia

0 presentations in this session. See more »

- vis

Opening Session

Bayshore I + II + III

Chair: Paul Rosen, Kristi Potter, Remco Chang

2 presentations in this session. See more »

- short

VGTC Awards & Best Short Papers

Bayshore I + II + III

Chair: Chaoli Wang

4 presentations in this session. See more »

- vis

VIS Governance

None

Chair: Petra Isenberg, Jean-Daniel Fekete

2 presentations in this session. See more »

- full

Best Full Papers

Bayshore I + II + III

Chair: Claudio Silva

6 presentations in this session. See more »

- visap

VISAP Keynote: The Golden Age of Visualization Dissensus

Bayshore I + II + III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner, Alberto Cairo

0 presentations in this session. See more »

- visap

VISAP Artist Talks

Bayshore III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

16 presentations in this session. See more »

- full

Virtual: VIS from around the world

Palma Ceia I

Chair: Mahmood Jasim

6 presentations in this session. See more »

- full

Text, Annotation, and Metaphor

Bayshore V

Chair: Melanie Tory

6 presentations in this session. See more »

fullImmersive Visualization and Visual Analytics

Bayshore II

Chair: Lingyun Yu

6 presentations in this session. See more »

fullMachine Learning for Visualization

Bayshore I

Chair: Joshua Levine

6 presentations in this session. See more »

shortShort Papers: Graph, Hierarchy and Multidimensional

Bayshore VI

Chair: Alfie Abdul-Rahman

8 presentations in this session. See more »

panelPanel: What Do Visualization Art Projects Bring to the VIS Community?

Bayshore VII

Chair: Xinhuan Shu, Yifang Wang, Junxiu Tang

0 presentations in this session. See more »

- full

Biological Data Visualization

Bayshore I

Chair: Nils Gehlenborg

6 presentations in this session. See more »

fullJudgment and Decision-making

Bayshore II

Chair: Wenwen Dou

6 presentations in this session. See more »

fullTime and Sequences

Bayshore VI

Chair: Silvia Miksch

6 presentations in this session. See more »

fullDimensionality Reduction

Bayshore V

Chair: Jian Zhao

6 presentations in this session. See more »

fullUrban Planning, Construction, and Disaster Management

Bayshore VII

Chair: Siming Chen

6 presentations in this session. See more »

visapVISAP Papers

Bayshore III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

6 presentations in this session. See more »

- full

Natural Language and Multimodal Interaction

Bayshore I

Chair: Ana Crisan

6 presentations in this session. See more »

fullCollaboration and Communication

Bayshore V

Chair: Vidya Setlur

6 presentations in this session. See more »

fullPerception and Cognition

Bayshore II

Chair: Bernhard Preim

6 presentations in this session. See more »

shortShort Papers: Scientific and Immersive Visualization

Bayshore VI

Chair: Bei Wang

8 presentations in this session. See more »

invitedCG&A: Analytics and Applications

Bayshore III

Chair: Bruce Campbell

6 presentations in this session. See more »

panelPanel: 20 Years of Visual Analytics

Bayshore VII

Chair: David Ebert, Wolfgang Jentner, Ross Maciejewski, Jieqiong Zhao

0 presentations in this session. See more »

- full

Designing Palettes and Encodings

Bayshore II

Chair: Khairi Rheda

6 presentations in this session. See more »

fullOf Nodes and Networks

Bayshore I

Chair: Carolina Nobre

6 presentations in this session. See more »

fullScripts, Notebooks, and Provenance

Bayshore V

Chair: Alex Lex

6 presentations in this session. See more »

shortShort Papers: System design

Bayshore VI

Chair: Chris Bryan

8 presentations in this session. See more »

panelPanel: Past, Present, and Future of Data Storytelling

Bayshore VII

Chair: Haotian Li, Yun Wang, Benjamin Bach, Sheelagh Carpendale, Fanny Chevalier, Nathalie Riche

0 presentations in this session. See more »

applicationApplication Spotlight: Visualization within the Department of Energy

Bayshore III

Chair: Ana Crisan, Menna El-Assady

0 presentations in this session. See more »

- vis

IEEE VIS Town Hall

Bayshore I + II + III

Chair: Ross Maciejewski

0 presentations in this session. See more »

- panel

Panel: VIS Conference Futures: Community Opinions on Recent Experiences, Challenges, and Opportunities for Hybrid Event Formats

Bayshore VII

Chair: Matthew Brehmer, Narges Mahyar

0 presentations in this session. See more »

- full

Visualization Recommendation

Bayshore II

Chair: Johannes Knittel

6 presentations in this session. See more »

fullModel-checking and Validation

Bayshore V

Chair: Michael Correll

6 presentations in this session. See more »

fullEmbeddings and Document Spatialization

Bayshore I

Chair: Alex Endert

6 presentations in this session. See more »

shortShort Papers: Perception and Representation

Bayshore VI

Chair: Anjana Arunkumar

8 presentations in this session. See more »

panelPanel: Human-Centered Computing Research in South America: Status Quo, Opportunities, and Challenges

Bayshore VII

Chair: Chaoli Wang

0 presentations in this session. See more »

- full

Applications: Sports. Games, and Finance

Bayshore V

Chair: Marc Streit

6 presentations in this session. See more »

fullVisual Design: Sketching and Labeling

Bayshore II

Chair: Jonathan C. Roberts

6 presentations in this session. See more »

fullTopological Data Analysis

Bayshore I

Chair: Ingrid Hotz

6 presentations in this session. See more »

shortShort Papers: Text and Multimedia

Bayshore VI

Chair: Min Lu

8 presentations in this session. See more »

panelPanel: (Yet Another) Evaluation Needed? A Panel Discussion on Evaluation Trends in Visualization

Bayshore VII

Chair: Ghulam Jilani Quadri, Danielle Albers Szafir, Arran Zeyu Wang, Hyeon Jeon

0 presentations in this session. See more »

visapVISAP Pictorials

Bayshore III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

8 presentations in this session. See more »

- vis

IEEE VIS 2025 Kickoff

Bayshore I + II + III

Chair: Johanna Schmidt, Kresimir Matković, Barbora Kozlíková, Eduard Gröller

1 presentations in this session. See more »

- full

Once Upon a Visualization

Bayshore V

Chair: Marti Hearst

6 presentations in this session. See more »

fullVisualization Design Methods

Bayshore II

Chair: Miriah Meyer

6 presentations in this session. See more »

fullThe Toolboxes of Visualization

Bayshore I

Chair: Dominik Moritz

6 presentations in this session. See more »

shortShort Papers: Analytics and Applications

Bayshore VI

Chair: Anna Vilanova

8 presentations in this session. See more »

invitedCG&A: Systems, Theory, and Evaluations

Bayshore III

Chair: Francesca Samsel

6 presentations in this session. See more »

panelPanel: Vogue or Visionary? Current Challenges and Future Opportunities in Situated Visualizations

Bayshore VII

Chair: Michelle A. Borkin, Melanie Tory

0 presentations in this session. See more »

- full

Journalism and Public Policy

Bayshore II

Chair: Sungahn Ko

6 presentations in this session. See more »

fullApplications: Industry, Computing, and Medicine

Bayshore V

Chair: Joern Kohlhammer

6 presentations in this session. See more »

fullAccessibility and Touch

Bayshore I

Chair: Narges Mahyar

6 presentations in this session. See more »

fullMotion and Animated Notions

Bayshore III

Chair: Catherine d'Ignazio

6 presentations in this session. See more »

shortShort Papers: AI and LLM

Bayshore VI

Chair: Cindy Xiong Bearfield

8 presentations in this session. See more »

panelPanel: Dear Younger Me: A Dialog About Professional Development Beyond The Initial Career Phases

Bayshore VII

Chair: Robert M Kirby, Michael Gleicher

0 presentations in this session. See more »

- full

Look, Learn, Language Models

Bayshore V

Chair: Nicole Sultanum

6 presentations in this session. See more »

fullWhere the Networks Are

Bayshore VII

Chair: Oliver Deussen

6 presentations in this session. See more »

fullHuman and Machine Visualization Literacy

Bayshore I + II + III

Chair: Bum Chul Kwon

6 presentations in this session. See more »

fullFlow, Topology, and Uncertainty

Bayshore VI

Chair: Bei Wang

6 presentations in this session. See more »

- vis

Test of Time Awards

Bayshore I

Chair: Ross Maciejewski

1 presentations in this session. See more »

- vis

IEEE VIS Capstone and Closing

Bayshore I + II + III

Chair: Paul Rosen, Kristi Potter, Remco Chang

3 presentations in this session. See more »

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

2024-10-15T12:30:00Z – 2024-10-15T13:45:00Z

2024-10-15T14:15:00Z – 2024-10-15T15:45:00Z

2024-10-15T15:35:00Z – 2024-10-15T16:00:00Z

2024-10-15T16:00:00Z – 2024-10-15T17:30:00Z

2024-10-15T18:00:00Z – 2024-10-15T19:00:00Z

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

2024-10-16T12:30:00Z – 2024-10-16T13:30:00Z

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

2024-10-16T19:00:00Z – 2024-10-16T19:30:00Z

2024-10-16T19:30:00Z – 2024-10-16T20:30:00Z

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

2024-10-17T15:30:00Z – 2024-10-17T16:00:00Z

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

2024-10-18T14:15:00Z – 2024-10-18T15:00:00Z

2024-10-18T15:00:00Z – 2024-10-18T16:30:00Z

- associated

VAST Challenge

Bayshore II

Chair: R. Jordan Crouser, Steve Gomez, Jereme Haack

10 presentations in this session. See more »

workshopVISxAI: 7th Workshop on Visualization for AI Explainability

Bayshore I

Chair: Alex Bäuerle, Angie Boggust, Fred Hohman

12 presentations in this session. See more »

workshop1st Workshop on Accessible Data Visualization

Bayshore V

Chair: Brianna Wimer, Laura South

7 presentations in this session. See more »

workshopFirst-Person Visualizations for Outdoor Physical Activities: Challenges and Opportunities

Bayshore VII

Chair: Charles Perin, Tica Lin, Lijie Yao, Yalong Yang, Maxime Cordeil, Wesley Willett

0 presentations in this session. See more »

workshopEduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 1)

Esplanade Suites I + II + III

Chair: Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach, Jonathan Roberts, Christina Stoiber, Magdalena Boucher, Lily Ge

3 presentations in this session. See more »

tutorialVisualization Analysis and Design

Bayshore VI

Chair: Tamara Munzner

0 presentations in this session. See more »

tutorialDeveloping Immersive and Collaborative Visualizations with Web Technologies

Bayshore III

Chair: David Saffo

0 presentations in this session. See more »

- associated

VDS: Visualization in Data Science Symposium

Bayshore I

Chair: Ana Crisan, Dylan Cashman, Saugat Pandey, Alvitta Ottley, John E Wenskovitch

6 presentations in this session. See more »

associatedLDAV: 14th IEEE Symposium on Large Data Analysis and Visualization

Bayshore II

Chair: Silvio Rizzi, Gunther Weber, Guido Reina, Ken Moreland

6 presentations in this session. See more »

associatedBio+Med+Vis Workshop

Bayshore V

Chair: Barbora Kozlikova, Nils Gehlenborg, Laura Garrison, Eric Mörth, Morgan Turner, Simon Warchol

6 presentations in this session. See more »

workshopWorkshop on Data Storytelling in an Era of Generative AI

Bayshore VII

Chair: Xingyu Lan, Leni Yang, Zezhong Wang, Yun Wang, Danqing Shi, Sheelagh Carpendale

4 presentations in this session. See more »

workshopEduVis: 2nd IEEE VIS Workshop on Visualization Education, Literacy, and Activities (Session 2)

Esplanade Suites I + II + III

Chair: Jillian Aurisano, Fateme Rajabiyazdi, Mandy Keck, Lonni Besancon, Alon Friedman, Benjamin Bach, Jonathan Roberts, Christina Stoiber, Magdalena Boucher, Lily Ge

3 presentations in this session. See more »

tutorialGenerating Color Schemes for your Data Visualizations

Bayshore VI

Chair: Theresa-Marie Rhyne

0 presentations in this session. See more »

tutorialRunning Online User Studies with the reVISit Framework

Bayshore III

Chair: Jack Wilburn

0 presentations in this session. See more »

- associated

VisInPractice

Bayshore III

Chair: Arjun Srinivasan, Ayan Biswas

0 presentations in this session. See more »

associatedSciVis Contest

Bayshore V

Chair: Karen Bemis, Tim Gerrits

3 presentations in this session. See more »

workshopBELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Session 1)

Bayshore I

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

6 presentations in this session. See more »

workshopProgressive Data Analysis and Visualization (PDAV) Workshop

Bayshore VII

Chair: Alex Ulmer, Jaemin Jo, Michael Sedlmair, Jean-Daniel Fekete

3 presentations in this session. See more »

workshopUncertainty Visualization: Applications, Techniques, Software, and Decision Frameworks

Bayshore VI

Chair: Tushar M. Athawale, Chris R. Johnson, Kristi Potter, Paul Rosen, David Pugmire

12 presentations in this session. See more »

workshopVisualization for Climate Action and Sustainability

Esplanade Suites I + II + III

Chair: Benjamin Bach, Fanny Chevalier, Helen-Nicole Kostis, Mark SubbaRao, Yvonne Jansen, Robert Soden

13 presentations in this session. See more »

tutorialLLM4Vis: Large Language Models for Information Visualization

Bayshore II

Chair: Enamul Hoque

0 presentations in this session. See more »

- workshop

TopoInVis: Workshop on Topological Data Analysis and Visualization

Bayshore III

Chair: Federico Iuricich, Yue Zhang

6 presentations in this session. See more »

workshopBELIV: evaluation and BEyond - methodoLogIcal approaches for Visualization (Sesssion 2)

Bayshore I

Chair: Anastasia Bezerianos, Michael Correll, Kyle Hall, Jürgen Bernard, Dan Keefe, Mai Elshehaly, Mahsan Nourani

11 presentations in this session. See more »

workshopNLVIZ Workshop: Exploring Research Opportunities for Natural Language, Text, and Data Visualization

Bayshore II

Chair: Vidya Setlur, Arjun Srinivasan

11 presentations in this session. See more »

workshopEnergyVis 2024: 4th Workshop on Energy Data Visualization

Bayshore VI

Chair: Kenny Gruchalla, Anjana Arunkumar, Sarah Goodwin, Arnaud Prouzeau, Lyn Bartram

11 presentations in this session. See more »

workshopVISions of the Future: Workshop on Sustainable Practices within Visualization and Physicalisation

Esplanade Suites I + II + III

Chair: Georgia Panagiotidou, Luiz Morais, Sarah Hayes, Derya Akbaba, Tatiana Losev, Andrew McNutt

5 presentations in this session. See more »

tutorialEnabling Scientific Discovery: A Tutorial for Harnessing the Power of the National Science Data Fabric for Large-Scale Data Analysis

Bayshore V

Chair: Amy Gooch

0 presentations in this session. See more »

tutorialPreparing, Conducting, and Analyzing Participatory Design Sessions for Information Visualizations

Bayshore VII

Chair: Adriana Arcia

0 presentations in this session. See more »

- vis

Opening Session

Bayshore I + II + III

Chair: Paul Rosen, Kristi Potter, Remco Chang

2 presentations in this session. See more »

- short

VGTC Awards & Best Short Papers

Bayshore I + II + III

Chair: Chaoli Wang

4 presentations in this session. See more »

- vis

VIS Governance

None

Chair: Petra Isenberg, Jean-Daniel Fekete

2 presentations in this session. See more »

- full

Best Full Papers

Bayshore I + II + III

Chair: Claudio Silva

6 presentations in this session. See more »

- visap

VISAP Keynote: The Golden Age of Visualization Dissensus

Bayshore I + II + III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner, Alberto Cairo

0 presentations in this session. See more »

- visap

VISAP Artist Talks

Bayshore III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

16 presentations in this session. See more »

- full

Virtual: VIS from around the world

Palma Ceia I

Chair: Mahmood Jasim

6 presentations in this session. See more »

- full

Text, Annotation, and Metaphor

Bayshore V

Chair: Melanie Tory

6 presentations in this session. See more »

fullImmersive Visualization and Visual Analytics

Bayshore II

Chair: Lingyun Yu

6 presentations in this session. See more »

fullMachine Learning for Visualization

Bayshore I

Chair: Joshua Levine

6 presentations in this session. See more »

shortShort Papers: Graph, Hierarchy and Multidimensional

Bayshore VI

Chair: Alfie Abdul-Rahman

8 presentations in this session. See more »

panelPanel: What Do Visualization Art Projects Bring to the VIS Community?

Bayshore VII

Chair: Xinhuan Shu, Yifang Wang, Junxiu Tang

0 presentations in this session. See more »

- full

Biological Data Visualization

Bayshore I

Chair: Nils Gehlenborg

6 presentations in this session. See more »

fullJudgment and Decision-making

Bayshore II

Chair: Wenwen Dou

6 presentations in this session. See more »

fullTime and Sequences

Bayshore VI

Chair: Silvia Miksch

6 presentations in this session. See more »

fullDimensionality Reduction

Bayshore V

Chair: Jian Zhao

6 presentations in this session. See more »

fullUrban Planning, Construction, and Disaster Management

Bayshore VII

Chair: Siming Chen

6 presentations in this session. See more »

visapVISAP Papers

Bayshore III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

6 presentations in this session. See more »

- full

Natural Language and Multimodal Interaction

Bayshore I

Chair: Ana Crisan

6 presentations in this session. See more »

fullCollaboration and Communication

Bayshore V

Chair: Vidya Setlur

6 presentations in this session. See more »

fullPerception and Cognition

Bayshore II

Chair: Tamara Munzner

6 presentations in this session. See more »

shortShort Papers: Scientific and Immersive Visualization

Bayshore VI

Chair: Bei Wang

8 presentations in this session. See more »

invitedCG&A: Analytics and Applications

Bayshore III

Chair: Bruce Campbell

6 presentations in this session. See more »

panelPanel: 20 Years of Visual Analytics

Bayshore VII

Chair: David Ebert, Wolfgang Jentner, Ross Maciejewski, Jieqiong Zhao

0 presentations in this session. See more »

- full

Designing Palettes and Encodings

Bayshore II

Chair: Khairi Rheda

6 presentations in this session. See more »

fullOf Nodes and Networks

Bayshore I

Chair: Carolina Nobre

6 presentations in this session. See more »

fullScripts, Notebooks, and Provenance

Bayshore V

Chair: Alex Lex

6 presentations in this session. See more »

shortShort Papers: System design

Bayshore VI

Chair: Chris Bryan

8 presentations in this session. See more »

panelPanel: Past, Present, and Future of Data Storytelling

Bayshore VII

Chair: Haotian Li, Yun Wang, Benjamin Bach, Sheelagh Carpendale, Fanny Chevalier, Nathalie Riche

0 presentations in this session. See more »

applicationApplication Spotlight: Visualization within the Department of Energy

Bayshore III

Chair: Ana Crisan, Menna El-Assady

0 presentations in this session. See more »

- vis

IEEE VIS Town Hall

Bayshore I + II + III

Chair: Ross Maciejewski

0 presentations in this session. See more »

- panel

Panel: VIS Conference Futures: Community Opinions on Recent Experiences, Challenges, and Opportunities for Hybrid Event Formats

Bayshore VII

Chair: Matthew Brehmer, Narges Mahyar

0 presentations in this session. See more »

- full

Visualization Recommendation

Bayshore II

Chair: Johannes Knittel

6 presentations in this session. See more »

fullModel-checking and Validation

Bayshore V

Chair: Michael Correll

6 presentations in this session. See more »

fullEmbeddings and Document Spatialization

Bayshore I

Chair: Alex Endert

6 presentations in this session. See more »

shortShort Papers: Perception and Representation

Bayshore VI

Chair: Anjana Arunkumar

8 presentations in this session. See more »

panelPanel: Human-Centered Computing Research in South America: Status Quo, Opportunities, and Challenges

Bayshore VII

Chair: Chaoli Wang

0 presentations in this session. See more »

- full

Applications: Sports. Games, and Finance

Bayshore V

Chair: Marc Streit

6 presentations in this session. See more »

fullVisual Design: Sketching and Labeling

Bayshore II

Chair: Jonathan C. Roberts

6 presentations in this session. See more »

fullTopological Data Analysis

Bayshore I

Chair: Ingrid Hotz

6 presentations in this session. See more »

shortShort Papers: Text and Multimedia

Bayshore VI

Chair: Min Lu

8 presentations in this session. See more »

panelPanel: (Yet Another) Evaluation Needed? A Panel Discussion on Evaluation Trends in Visualization

Bayshore VII

Chair: Ghulam Jilani Quadri, Danielle Albers Szafir, Arran Zeyu Wang, Hyeon Jeon

0 presentations in this session. See more »

visapVISAP Pictorials

Bayshore III

Chair: Pedro Cruz, Rewa Wright, Rebecca Ruige Xu, Lori Jacques, Santiago Echeverry, Kate Terrado, Todd Linkner

8 presentations in this session. See more »

- vis

IEEE VIS 2025 Kickoff

Bayshore I + II + III

Chair: Johanna Schmidt, Kresimir Matković, Barbora Kozlíková, Eduard Gröller

1 presentations in this session. See more »

- full

Once Upon a Visualization

Bayshore V

Chair: Marti Hearst

6 presentations in this session. See more »

fullVisualization Design Methods

Bayshore II

Chair: Miriah Meyer

6 presentations in this session. See more »

fullThe Toolboxes of Visualization

Bayshore I

Chair: Dominik Moritz

6 presentations in this session. See more »

shortShort Papers: Analytics and Applications

Bayshore VI

Chair: Anna Vilanova

8 presentations in this session. See more »

invitedCG&A: Systems, Theory, and Evaluations

Bayshore III

Chair: Francesca Samsel

6 presentations in this session. See more »

panelPanel: Vogue or Visionary? Current Challenges and Future Opportunities in Situated Visualizations

Bayshore VII

Chair: Michelle A. Borkin, Melanie Tory

0 presentations in this session. See more »

- full

Journalism and Public Policy

Bayshore II

Chair: Sungahn Ko

6 presentations in this session. See more »

fullApplications: Industry, Computing, and Medicine

Bayshore V

Chair: Joern Kohlhammer

6 presentations in this session. See more »

fullAccessibility and Touch

Bayshore I

Chair: Narges Mahyar

6 presentations in this session. See more »

fullMotion and Animated Notions

Bayshore III

Chair: Catherine d'Ignazio

6 presentations in this session. See more »

shortShort Papers: AI and LLM

Bayshore VI

Chair: Cindy Xiong Bearfield

8 presentations in this session. See more »

panelPanel: Dear Younger Me: A Dialog About Professional Development Beyond The Initial Career Phases

Bayshore VII

Chair: Robert M Kirby, Michael Gleicher

0 presentations in this session. See more »

- full

Look, Learn, Language Models

Bayshore V

Chair: Nicole Sultanum

6 presentations in this session. See more »

fullWhere the Networks Are

Bayshore VII

Chair: Oliver Deussen

6 presentations in this session. See more »

fullHuman and Machine Visualization Literacy

Bayshore I + II + III

Chair: Bum Chul Kwon

6 presentations in this session. See more »

fullFlow, Topology, and Uncertainty

Bayshore VI

Chair: Bei Wang

6 presentations in this session. See more »

- vis

Test of Time Awards

Bayshore I

Chair: Ross Maciejewski

1 presentations in this session. See more »

- vis

IEEE VIS Capstone and Closing

Bayshore I + II + III

Chair: Paul Rosen, Kristi Potter, Remco Chang

3 presentations in this session. See more »

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

2024-10-13T16:00:00Z – 2024-10-13T19:00:00Z

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

2024-10-14T16:00:00Z – 2024-10-14T19:00:00Z

2024-10-15T12:30:00Z – 2024-10-15T13:45:00Z

2024-10-15T14:15:00Z – 2024-10-15T15:45:00Z

2024-10-15T15:35:00Z – 2024-10-15T16:00:00Z

2024-10-15T16:00:00Z – 2024-10-15T17:30:00Z

2024-10-15T18:00:00Z – 2024-10-15T19:00:00Z

2024-10-15T19:00:00Z – 2024-10-15T21:00:00Z

2024-10-16T12:30:00Z – 2024-10-16T13:30:00Z

2024-10-16T12:30:00Z – 2024-10-16T13:45:00Z

2024-10-16T14:15:00Z – 2024-10-16T15:30:00Z

2024-10-16T16:00:00Z – 2024-10-16T17:15:00Z

2024-10-16T17:45:00Z – 2024-10-16T19:00:00Z

2024-10-16T19:00:00Z – 2024-10-16T19:30:00Z

2024-10-16T19:30:00Z – 2024-10-16T20:30:00Z

2024-10-17T12:30:00Z – 2024-10-17T13:45:00Z

2024-10-17T14:15:00Z – 2024-10-17T15:30:00Z

2024-10-17T15:30:00Z – 2024-10-17T16:00:00Z

2024-10-17T16:00:00Z – 2024-10-17T17:15:00Z

2024-10-17T17:45:00Z – 2024-10-17T19:00:00Z

2024-10-18T12:30:00Z – 2024-10-18T13:45:00Z

2024-10-18T14:15:00Z – 2024-10-18T15:00:00Z

2024-10-18T15:00:00Z – 2024-10-18T16:30:00Z

SciVis Contest

https://ieeevis.org/year/2024/program/event_a-scivis-contest.html

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Add all of this event's sessions to your calendar.

SciVis Contest

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Karen Bemis, Tim Gerrits

0 presentations in this session. See more »

SciVis Contest

https://ieeevis.org/year/2024/program/event_a-scivis-contest.html

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Add all of this event's sessions to your calendar.

SciVis Contest

2024-10-14T12:30:00Z – 2024-10-14T15:30:00Z

Chair: Karen Bemis, Tim Gerrits

3 presentations in this session. See more »

VAST Challenge

https://ieeevis.org/year/2024/program/event_a-vast-challenge.html

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Add all of this event's sessions to your calendar.

VAST Challenge

2024-10-13T12:30:00Z – 2024-10-13T15:30:00Z

Chair: R. Jordan Crouser, Steve Gomez, Jereme Haack

13 presentations in this session. See more »