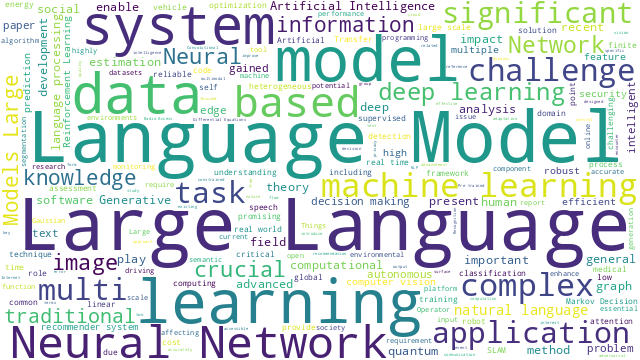

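This blog post presents the latest papers fetched daily from arXiv, grouped into major areas such as computer vision, natural language processing, machine learning, and artificial intelligence.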

Statistics

A total of 405 papers were updated today, including:

Computer Vision

1. Title: Active Stereo Without Pattern Projector

No.: [1]

Link: https://arxiv.org/abs/2309.12315

Authors: Luca Bartolomei, Matteo Poggi, Fabio Tosi, Andrea Conti, Stefano Mattoccia

Comments: ICCV 2023. Code: this https URL - Project page: this https URL

Keywords: standard passive camera, passive camera systems, physical pattern projector, paper proposes, integrating the principles

Abstract:

This paper proposes a novel framework integrating the principles of active stereo in standard passive camera systems without a physical pattern projector. We virtually project a pattern over the left and right images according to the sparse measurements obtained from a depth sensor. Any such device can be seamlessly plugged into our framework, allowing for the deployment of a virtual active stereo setup in any possible environment and overcoming the limitations of pattern projectors, such as limited working range or sensitivity to environmental conditions. Experiments on indoor/outdoor datasets, featuring both long and close range, support the seamless effectiveness of our approach, boosting the accuracy of both stereo algorithms and deep networks.
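A minimal sketch of the virtual projection idea, assuming a rectified stereo pair, a pinhole model, and single-pixel pattern "dots": each sparse depth sample is converted to disparity with the standard relation d = f·B/Z, and the same random intensity is painted at (x, y) in the left image and at (x - d, y) in the right. The function name, the random-intensity pattern, and the parameters `focal_px` and `baseline_m` are illustrative assumptions; the abstract does not describe the paper's actual patterning strategy (patch shape, blending, occlusion handling).

```python
import random


def virtual_project(left, right, sparse_depth, focal_px, baseline_m, seed=0):
    """Paint a shared random intensity at corresponding pixels of a rectified stereo pair.

    left, right: 2D lists of grayscale values, modified in place.
    sparse_depth: list of (x, y, z) tuples, with (x, y) a pixel in the left image
    and z the measured depth in metres.
    """
    rng = random.Random(seed)
    width = len(left[0])
    for x, y, z in sparse_depth:
        disparity = focal_px * baseline_m / z  # rectified stereo: d = f * B / Z
        xr = round(x - disparity)              # corresponding column in the right image
        if 0 <= xr < width:
            value = rng.randint(0, 255)        # identical pattern value in both views
            left[y][x] = value
            right[y][xr] = value
    return left, right
```

Because both views receive identical values at geometrically corresponding pixels, a stereo matcher gets an unambiguous cue at those locations; samples whose match falls outside the right image are simply skipped.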

2. Title: TinyCLIP: CLIP Distillation via Affinity Mimicking and Weight Inheritance

No.: [2]

Link: https://arxiv.org/abs/2309.12314

Authors: Kan Wu, Houwen Peng, Zhenghong Zhou, Bin Xiao, Mengchen Liu, Lu Yuan, Hong Xuan, Michael Valenzuela, Xi (Stephen) Chen, Xinggang Wang, Hongyang Chao, Han Hu

Comments: Accepted by ICCV 2023

Keywords: large-scale language-image pre-trained, large-scale language-image, language-image pre-trained models, cross-modal distillation method, weight inheritance

Abstract:

In this paper, we propose a novel cross-modal distillation method, called TinyCLIP, for large-scale language-image pre-trained models. The method introduces two core techniques: affinity mimicking and weight inheritance. Affinity mimicking explores the interaction between modalities during distillation, enabling student models to mimic teachers' behavior of learning cross-modal feature alignment in a visual-linguistic affinity space. Weight inheritance transmits the pre-trained weights from the teacher models to their student counterparts to improve distillation efficiency. Moreover, we extend the method into a multi-stage progressive distillation to mitigate the loss of informative weights during extreme compression. Comprehensive experiments demonstrate the efficacy of TinyCLIP, showing that it can reduce the size of the pre-trained CLIP ViT-B/32 by 50% while maintaining comparable zero-shot performance. While aiming for comparable performance, distillation with weight inheritance can speed up training by 1.4× to 7.8× compared to training from scratch. Moreover, our TinyCLIP ViT-8M/16, trained on YFCC-15M, achieves an impressive zero-shot top-1 accuracy of 41.1% on ImageNet, surpassing the original CLIP ViT-B/16 by 3.5% while using only 8.9% of its parameters. Finally, we demonstrate the good transferability of TinyCLIP in various downstream tasks. Code and models will be open-sourced at this https URL.
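Affinity mimicking can be illustrated as matching the image-to-text affinity distributions of teacher and student over a batch. This sketch assumes L2-normalised features, a softmax over temperature-scaled dot products as the affinity, a temperature of 0.07, and a KL divergence in one direction (image to text) only; the paper's exact loss may combine both directions or use a different divergence.

```python
import math


def softmax(row, temperature):
    """Numerically stable softmax of temperature-scaled scores."""
    scaled = [v / temperature for v in row]
    m = max(scaled)
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def affinity(image_feats, text_feats, temperature):
    """Image-to-text affinity: for each image, a distribution over the batch's texts."""
    return [softmax([sum(a * b for a, b in zip(img, txt)) for txt in text_feats], temperature)
            for img in image_feats]


def affinity_mimic_loss(t_img, t_txt, s_img, s_txt, temperature=0.07):
    """Mean KL(teacher || student) over the rows of the image-to-text affinity matrix."""
    teacher = affinity(t_img, t_txt, temperature)
    student = affinity(s_img, s_txt, temperature)
    total = 0.0
    for p_row, q_row in zip(teacher, student):
        total += sum(p * math.log(p / q) for p, q in zip(p_row, q_row) if p > 0)
    return total / len(teacher)
```

The loss is zero when the student reproduces the teacher's cross-modal affinities exactly, regardless of whether the raw features match, which is what distinguishes it from plain feature distillation.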

3. Title: ForceSight: Text-Guided Mobile Manipulation with Visual-Force Goals

No.: [3]

Link: https://arxiv.org/abs/2309.12312

Authors: Jeremy A. Collins, Cody Houff, You Liang Tan, Charles C. Kemp

Keywords: deep neural network, predicts visual-force goals, neural network, predicts visual-force, present ForceSight

Abstract:

We present ForceSight, a system for text-guided mobile manipulation that predicts visual-force goals using a deep neural network. Given a single RGBD image combined with a text prompt, ForceSight determines a target end-effector pose in the camera frame (kinematic goal) and the associated forces (force goal). Together, these two components form a visual-force goal. Prior work has demonstrated that deep models outputting human-interpretable kinematic goals can enable dexterous manipulation by real robots. Forces are critical to manipulation, yet have typically been relegated to lower-level execution in these systems. When deployed on a mobile manipulator equipped with an eye-in-hand RGBD camera, ForceSight performed tasks such as precision grasps, drawer opening, and object handovers with an 81% success rate in unseen environments with object instances that differed significantly from the training data. In a separate experiment, relying exclusively on visual servoing and ignoring force goals dropped the success rate from 90% to 45%, demonstrating that force goals can significantly enhance performance. The appendix, videos, code, and trained models are available at this https URL.

4. Title: LLM-Grounder: Open-Vocabulary 3D Visual Grounding with Large Language Model as an Agent

No.: [4]

Link: https://arxiv.org/abs/2309.12311

Authors: Jianing Yang, Xuweiyi Chen, Shengyi Qian, Nikhil Madaan, Madhavan Iyengar, David F. Fouhey, Joyce Chai

Comments: Project website: this https URL

Keywords: Large Language Model, answer questions based, household robots, critical skill, skill for household

Abstract:

3D visual grounding is a critical skill for household robots, enabling them to navigate, manipulate objects, and answer questions based on their environment. While existing approaches often rely on extensive labeled data or exhibit limitations in handling complex language queries, we propose LLM-Grounder, a novel zero-shot, open-vocabulary, Large Language Model (LLM)-based 3D visual grounding pipeline. LLM-Grounder utilizes an LLM to decompose complex natural language queries into semantic constituents and employs a visual grounding tool, such as OpenScene or LERF, to identify objects in a 3D scene. The LLM then evaluates the spatial and commonsense relations among the proposed objects to make a final grounding decision. Our method does not require any labeled training data and can generalize to novel 3D scenes and arbitrary text queries. We evaluate LLM-Grounder on the ScanRefer benchmark and demonstrate state-of-the-art zero-shot grounding accuracy. Our findings indicate that LLMs significantly improve the grounding capability, especially for complex language queries, making LLM-Grounder an effective approach for 3D vision-language tasks in robotics. Videos and interactive demos can be found on the project website: this https URL.

5. Title: TalkNCE: Improving Active Speaker Detection with Talk-Aware Contrastive Learning

No.: [7]

Link: https://arxiv.org/abs/2309.12306

Authors: Chaeyoung Jung, Suyeon Lee, Kihyun Nam, Kyeongha Rho, You Jin Kim, Youngjoon Jang, Joon Son Chung

Keywords: Active Speaker Detection, Speaker Detection, Active Speaker, video frames, series of video

Abstract:

The goal of this work is Active Speaker Detection (ASD), the task of determining whether or not a person is speaking in a series of video frames. Previous works have dealt with the task by exploring network architectures, while learning effective representations has been less explored. In this work, we propose TalkNCE, a novel talk-aware contrastive loss. The loss is applied only to the parts of the full segments where a person on the screen is actually speaking. This encourages the model to learn effective representations through the natural correspondence of speech and facial movements. Our loss can be jointly optimized with the existing objectives for training ASD models without the need for additional supervision or training data. The experiments demonstrate that our loss can be easily integrated into the existing ASD frameworks, improving their performance. Our method achieves state-of-the-art performance on the AVA-ActiveSpeaker and ASW datasets.
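The key idea, a contrastive loss restricted to frames where the person is actually speaking, can be sketched as a per-frame InfoNCE between visual and audio embeddings. Treating the audio of every other frame in the segment as a negative and using a temperature of 0.1 are assumptions made for illustration; the abstract does not specify how negatives are chosen.

```python
import math


def talk_aware_nce(visual, audio, speaking, temperature=0.1):
    """InfoNCE between per-frame visual and audio embeddings, applied only on speaking frames.

    visual, audio: lists of embedding vectors, one per frame (assumed L2-normalised).
    speaking: list of 0/1 labels; frames labelled 0 contribute nothing to the loss.
    Returns 0.0 if no frame is labelled as speaking.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    active = [t for t, s in enumerate(speaking) if s]
    if not active:
        return 0.0
    loss = 0.0
    for t in active:
        # Compare frame t's visual embedding with the audio of every frame; frame t is the positive.
        logits = [dot(visual[t], audio[k]) / temperature for k in range(len(audio))]
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        loss += log_denominator - logits[t]
    return loss / len(active)
```

Masking out silent frames is the whole point: on those frames lip motion and audio need not correspond, so pulling them together would inject noise into the representation.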

6. Title: SlowFast Network for Continuous Sign Language Recognition

No.: [8]

Link: https://arxiv.org/abs/2309.12304

Authors: Junseok Ahn, Youngjoon Jang, Joon Son Chung

Keywords: Sign Language Recognition, Continuous Sign Language, Language Recognition, Continuous Sign, Sign Language

Abstract:

The objective of this work is the effective extraction of spatial and dynamic features for Continuous Sign Language Recognition (CSLR). To accomplish this, we utilise a two-pathway SlowFast network, where each pathway operates at a distinct temporal resolution to separately capture spatial (hand shapes, facial expressions) and dynamic (movements) information. In addition, we introduce two distinct feature fusion methods, carefully designed for the characteristics of CSLR: (1) Bi-directional Feature Fusion (BFF), which facilitates the transfer of dynamic semantics into spatial semantics and vice versa; and (2) Pathway Feature Enhancement (PFE), which enriches dynamic and spatial representations through auxiliary subnetworks, while avoiding the need for extra inference time. As a result, our model further strengthens spatial and dynamic representations in parallel. We demonstrate that the proposed framework outperforms the current state-of-the-art performance on popular CSLR datasets, including PHOENIX14, PHOENIX14-T, and CSL-Daily.
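The two-pathway idea can be sketched as sampling one clip at two temporal resolutions, plus a toy bi-directional exchange between the pathways. The strides, the scalar per-frame features, and the pool-and-repeat fusion are hypothetical simplifications for illustration; they are not the paper's BFF or PFE modules.

```python
def slowfast_sample(frames, slow_stride=4, fast_stride=1):
    """Split one clip into the inputs of two pathways with different temporal resolutions.

    The slow pathway sees few frames (suited to spatial cues such as hand shape);
    the fast pathway sees many frames (suited to dynamic cues such as movement).
    """
    return frames[::slow_stride], frames[::fast_stride]


def fuse_bidirectional(slow_feats, fast_feats, ratio):
    """Toy two-way exchange on scalar per-frame features (assumes len(fast) == len(slow) * ratio).

    Fast -> slow: average-pool each group of `ratio` fast frames onto one slow frame.
    Slow -> fast: repeat each slow frame `ratio` times along the fast time axis.
    """
    fast_to_slow = [sum(fast_feats[i * ratio:(i + 1) * ratio]) / ratio
                    for i in range(len(slow_feats))]
    slow_to_fast = [slow_feats[i // ratio] for i in range(len(fast_feats))]
    new_slow = [s + f for s, f in zip(slow_feats, fast_to_slow)]
    new_fast = [f + s for f, s in zip(fast_feats, slow_to_fast)]
    return new_slow, new_fast
```

With an 8-frame clip and `slow_stride=4`, the slow pathway receives frames 0 and 4 while the fast pathway receives all eight, so `ratio=4` aligns the two time axes for fusion.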

7. Title: PanoVOS: Bridging Non-panoramic and Panoramic Views with Transformer for Video Segmentation

No.: [9]

Link: https://arxiv.org/abs/2309.12303

Authors: Shilin Yan, Xiaohao Xu, Lingyi Hong, Wenchao Chen, Wenqiang Zhang, Wei Zhang

Keywords: attracted tremendous amounts, richer spatial information, virtual reality, richer spatial, attracted tremendous

Abstract:

Panoramic videos contain richer spatial information and have attracted tremendous attention due to the exceptional experience they offer in fields such as autonomous driving and virtual reality. However, existing datasets for video segmentation only focus on conventional planar images. To address this challenge, in this paper we present a panoramic video dataset, PanoVOS. The dataset provides 150 videos with high video resolutions and diverse motions. To quantify the domain gap between 2D planar videos and panoramic videos, we evaluate 15 off-the-shelf video object segmentation (VOS) models on PanoVOS. Through error analysis, we found that all of them fail to tackle the pixel-level content discontinuities of panoramic videos. Thus, we present a Panoramic Space Consistency Transformer (PSCFormer), which can effectively utilize the semantic boundary information of the previous frame for pixel-level matching with the current frame. Extensive experiments demonstrate that, compared with previous SOTA models, our PSCFormer network exhibits a great advantage in segmentation results under the panoramic setting. Our dataset poses new challenges in panoramic VOS, and we hope that PanoVOS can advance the development of panoramic segmentation/tracking.

8. Title: Text-Guided Vector Graphics Customization

No.: [10]

Link: https://arxiv.org/abs/2309.12302

Authors: Peiying Zhang, Nanxuan Zhao, Jing Liao

Comments: Accepted by SIGGRAPH Asia 2023. Project page: this https URL

Keywords: Vector graphics, layer-wise topological properties, digital art, art and valued, valued by designers

Abstract:

Vector graphics are widely used in digital art and valued by designers for their scalability and layer-wise topological properties. However, the creation and editing of vector graphics necessitate creativity and design expertise, making it a time-consuming process. In this paper, we propose a novel pipeline that generates high-quality customized vector graphics based on textual prompts while preserving the properties and layer-wise information of a given exemplar SVG. Our method harnesses the capabilities of large pre-trained text-to-image models. By fine-tuning the cross-attention layers of the model, we generate customized raster images guided by textual prompts. To initialize the SVG, we introduce a semantic-based path alignment method that preserves and transforms crucial paths from the exemplar SVG. Additionally, we optimize path parameters using both image-level and vector-level losses, ensuring smooth shape deformation while aligning with the customized raster image. We extensively evaluate our method using multiple metrics from vector-level, image-level, and text-level perspectives. The evaluation results demonstrate the effectiveness of our pipeline in generating diverse customizations of vector graphics with exceptional quality. The project page is this https URL.

9. Title: Environment-biased Feature Ranking for Novelty Detection Robustness

No.: [11]

Link: https://arxiv.org/abs/2309.12301

Authors: Stefan Smeu, Elena Burceanu, Emanuela Haller, Andrei Liviu Nicolicioiu

Comments: ICCV 2024 - Workshop on Out Of Distribution Generalization in Computer Vision

Keywords: robust novelty detection, irrelevant factors, novelty detection, tackle the problem, problem of robust

Abstract:

We tackle the problem of robust novelty detection, where we aim to detect novelties in terms of semantic content while being invariant to changes in other, irrelevant factors. Specifically, we operate in a setup with multiple environments, where we determine the set of features that are associated more with the environments than with the content relevant for the task. Thus, we propose a method that starts with a pretrained embedding and a multi-env setup and manages to rank the features based on their environment-focus. First, we compute a per-feature score based on the feature distribution variance between envs. Next, we show that by dropping the highly scored ones, we manage to remove spurious correlations and improve the overall performance by up to 6%, both in covariance and sub-population shift cases, both for a real and a synthetic benchmark, which we introduce for this task.
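The ranking step can be sketched as follows, assuming the per-feature score is the variance, across environments, of each feature's per-environment mean. The abstract only says the score is based on "the feature distribution variance between envs", so this is one simple interpretation, and `k` (how many features to drop) is a hypothetical parameter.

```python
from statistics import mean, pvariance


def environment_scores(envs):
    """Score each feature by how much its per-environment mean varies across environments.

    envs: dict mapping environment name -> list of feature vectors (pretrained embeddings).
    """
    per_env_means = [[mean(column) for column in zip(*vectors)] for vectors in envs.values()]
    return [pvariance(feature_means) for feature_means in zip(*per_env_means)]


def drop_environment_features(envs, k):
    """Return the indices of the features kept after dropping the k highest-scoring ones."""
    scores = environment_scores(envs)
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    dropped = set(ranked[:k])
    return [i for i in range(len(scores)) if i not in dropped]
```

A feature whose average shifts strongly from one environment to another is likely tracking the environment rather than the semantic content, so it is the first candidate for removal.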

10. Title: See to Touch: Learning Tactile Dexterity through Visual Incentives

No.: [12]

Link: https://arxiv.org/abs/2309.12300

Authors: Irmak Guzey, Yinlong Dai, Ben Evans, Soumith Chintala, Lerrel Pinto

Keywords: Equipping multi-fingered robots, Equipping multi-fingered, achieving the precise, crucial for achieving, tactile sensing

Abstract:

Equipping multi-fingered robots with tactile sensing is crucial for achieving the precise, contact-rich, and dexterous manipulation that humans excel at. However, relying solely on tactile sensing fails to provide adequate cues for reasoning about objects' spatial configurations, limiting the ability to correct errors and adapt to changing situations. In this paper, we present Tactile Adaptation from Visual Incentives (TAVI), a new framework that enhances tactile-based dexterity by optimizing dexterous policies using vision-based rewards. First, we use a contrastive-based objective to learn visual representations. Next, we construct a reward function using these visual representations through optimal-transport based matching on one human demonstration. Finally, we use online reinforcement learning on our robot to optimize tactile-based policies that maximize the visual reward. On six challenging tasks, such as peg pick-and-place, unstacking bowls, and flipping slender objects, TAVI achieves a success rate of 73% using our four-fingered Allegro robot hand. The increase in performance is 108% higher than policies using tactile and vision-based rewards and 135% higher than policies without tactile observational input. Robot videos are best viewed on our project website: this https URL.
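The optimal-transport reward can be sketched with entropy-regularised OT (Sinkhorn iterations) between the visual representations of a robot episode and those of the single human demonstration, returning the negative transport cost as the reward. Uniform marginals, Euclidean cost, `epsilon=0.05`, and a single episode-level reward are assumptions; the paper may use a different cost, regularisation, or per-step reward assignment.

```python
import math


def sinkhorn_plan(cost, epsilon=0.05, iterations=200):
    """Entropy-regularised optimal transport plan between two uniform distributions."""
    n, m = len(cost), len(cost[0])
    kernel = [[math.exp(-c / epsilon) for c in row] for row in cost]
    u, v = [1.0] * n, [1.0] * m
    a, b = 1.0 / n, 1.0 / m
    for _ in range(iterations):
        # Alternate row and column scalings so the plan's marginals approach (a, b).
        u = [a / sum(kernel[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b / sum(kernel[i][j] * u[i] for i in range(n)) for j in range(m)]
    return [[u[i] * kernel[i][j] * v[j] for j in range(m)] for i in range(n)]


def ot_reward(robot_feats, demo_feats, epsilon=0.05):
    """Negative transport cost between robot and demonstration visual representations."""
    cost = [[math.dist(r, d) for d in demo_feats] for r in robot_feats]
    plan = sinkhorn_plan(cost, epsilon)
    return -sum(plan[i][j] * cost[i][j]
                for i in range(len(cost)) for j in range(len(cost[0])))
```

The reward approaches zero when the robot's visual trajectory can be matched to the demonstration at low cost and becomes more negative as the two diverge, which gives the RL policy a dense signal from a single demonstration.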

11. Title: Learning to Drive Anywhere

No.: [13]

Link: https://arxiv.org/abs/2309.12295

Authors: Ruizhao Zhu, Peng Huang, Eshed Ohn-Bar, Venkatesh Saligrama

Comments: Conference on Robot Learning (CoRL) 2023. this https URL

Keywords: Human drivers, left vs. right-hand, drivers can seamlessly, decisions across geographical, diverse conditions

Abstract:

Human drivers can seamlessly adapt their driving decisions across geographical locations with diverse conditions and rules of the road, e.g., left vs. right-hand traffic. In contrast, existing models for autonomous driving have thus far only been deployed within restricted operational domains, i.e., without accounting for varying driving behaviors across locations or model scalability. In this work, we propose AnyD, a single geographically-aware conditional imitation learning (CIL) model that can efficiently learn from heterogeneous and globally distributed data with dynamic environmental, traffic, and social characteristics. Our key insight is to introduce a high-capacity geo-location-based channel attention mechanism that effectively adapts to local nuances while also flexibly modeling similarities among regions in a data-driven manner. By optimizing a contrastive imitation objective, our proposed approach can efficiently scale across inherently imbalanced data distributions and location-dependent events. We demonstrate the benefits of our AnyD agent across multiple datasets, cities, and scalable deployment paradigms, i.e., centralized, semi-supervised, and distributed agent training. Specifically, AnyD outperforms CIL baselines by over 14% in open-loop evaluation and 30% in closed-loop testing on CARLA.
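A geo-conditioned channel attention can be sketched as a per-location sigmoid gate that rescales feature channels. The lookup table `gate_weights` stands in for learned parameters and is hypothetical; the paper describes a higher-capacity mechanism that also models similarities among regions, which this sketch does not capture.

```python
import math


def geo_channel_attention(features, location_id, gate_weights):
    """Rescale feature channels with a gate conditioned on a discrete location.

    features: list of per-channel activations.
    gate_weights: hypothetical learned table mapping location_id -> per-channel logits.
    """
    logits = gate_weights[location_id]
    gates = [1.0 / (1.0 + math.exp(-z)) for z in logits]  # sigmoid gate in (0, 1)
    return [f * g for f, g in zip(features, gates)]
```

The same backbone features are reused everywhere; only the gates change per location, which is what lets one model emphasize, for example, left-hand-traffic cues in one region and suppress them in another.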

12. Title: Can We Reliably Improve the Robustness to Image Acquisition of Remote Sensing of PV Systems?

No.: [49]

Link: https://arxiv.org/abs/2309.12214

Authors: Gabriel Kasmi, Laurent Dubus, Yves-Marie Saint-Drenan, Philippe Blanc

Comments: 13 pages, 9 figures, 3 tables. arXiv admin note: text overlap with arXiv:2305.14979

Keywords: acquisition conditions, current techniques lack, techniques lack reliability, Photovoltaic, energy

Abstract:

Photovoltaic (PV) energy is crucial for the decarbonization of energy systems. Due to the lack of centralized data, remote sensing of rooftop PV installations is the best option to monitor the evolution of the rooftop PV installed fleet at a regional scale. However, current techniques lack reliability and are notably sensitive to shifts in the acquisition conditions. To overcome this, we leverage the wavelet scale attribution method (WCAM), which decomposes a model's prediction in the space-scale domain. The WCAM enables us to assess on which scales the representation of a PV model rests and provides insights to derive methods that improve the robustness to acquisition conditions, thus increasing trust in deep learning systems and encouraging their use for the safe integration of clean energy in electric systems.

13. Title: SG-Bot: Object Rearrangement via Coarse-to-Fine Robotic Imagination on Scene Graphs

No.: [58]

Link: https://arxiv.org/abs/2309.12188

Authors: Guangyao Zhai, Xiaoni Cai, Dianye Huang, Yan Di, Fabian Manhardt, Federico Tombari, Nassir Navab, Benjamin Busam

Comments: 8 pages, 6 figures. A video is uploaded here: this https URL

Keywords: robotic-environment interactions, representing a significant, pivotal in robotic-environment, significant capability, capability in embodied

Abstract:

Object rearrangement is pivotal in robotic-environment interactions, representing a significant capability in embodied AI. In this paper, we present SG-Bot, a novel rearrangement framework that utilizes a coarse-to-fine scheme with a scene graph as the scene representation. Unlike previous methods that rely on either known goal priors or zero-shot large models, SG-Bot exemplifies lightweight, real-time, and user-controllable characteristics, seamlessly blending the consideration of commonsense knowledge with automatic generation capabilities. SG-Bot employs a three-fold procedure (observation, imagination, and execution) to adeptly address the task. Initially, objects are discerned and extracted from a cluttered scene during the observation. These objects are first coarsely organized and depicted within a scene graph, guided by either commonsense or user-defined criteria. Then, this scene graph informs a generative model, which forms a fine-grained goal scene considering the shape information from the initial scene and object semantics. Finally, for execution, the initial and envisioned goal scenes are matched to formulate robotic action policies. Experimental results demonstrate that SG-Bot outperforms competitors by a large margin.

14. Title: ORTexME: Occlusion-Robust Human Shape and Pose via Temporal Average Texture and Mesh Encoding

No.: [59]

Link: https://arxiv.org/abs/2309.12183

Authors: Yu Cheng, Bo Wang, Robby T. Tan

Comments: 8 pages, 8 figures

Keywords: limited labeled data, human shape, initial human shape, models trained, shape and pose

Abstract:

In 3D human shape and pose estimation from a monocular video, models trained with limited labeled data cannot generalize well to videos with occlusion, which is common in in-the-wild videos. Recent human neural rendering approaches focusing on novel view synthesis, initialized by off-the-shelf human shape and pose methods, have the potential to correct the initial human shape. However, the existing methods have several drawbacks, such as errors in handling occlusion, sensitivity to inaccurate human segmentation, and ineffective loss computation due to the non-regularized opacity field. To address these problems, we introduce ORTexME, an occlusion-robust temporal method that utilizes temporal information from the input video to better regularize the occluded body parts. While our ORTexME is based on NeRF, to determine the reliable regions for the NeRF ray sampling, we utilize our novel average texture learning approach to learn the average appearance of a person and to infer a mask based on the average texture. In addition, to guide the opacity-field updates in NeRF to suppress blur and noise, we propose the use of the human body mesh. The quantitative evaluation demonstrates that our method achieves significant improvement on the challenging multi-person 3DPW dataset, where it achieves a 1.8 P-MPJPE error reduction. The SOTA rendering-based methods fail and enlarge the error by up to 5.6 on the same dataset.

15. Title: Autoregressive Sign Language Production: A Gloss-Free Approach with Discrete Representations

No.: [60]

Link: https://arxiv.org/abs/2309.12179

Authors: Eui Jun Hwang, Huije Lee, Jong C. Park

Comments: 5 pages, 3 figures, 6 tables

Keywords: Sign Language Production, Vector Quantization Network, Gloss-free Sign Language, spoken language sentences, language Vector Quantization

Abstract:

Gloss-free Sign Language Production (SLP) offers a direct translation of spoken language sentences into sign language, bypassing the need for gloss intermediaries. This paper presents the Sign language Vector Quantization Network, a novel approach to SLP that leverages Vector Quantization to derive discrete representations from sign pose sequences. Our method, rooted in both manual and non-manual elements of signing, supports advanced decoding methods and integrates latent-level alignment for enhanced linguistic coherence. Through comprehensive evaluations, we demonstrate the superior performance of our method over prior SLP methods and highlight the reliability of Back-Translation and Fréchet Gesture Distance as evaluation metrics.
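The vector-quantization step, which turns continuous sign-pose features into discrete tokens, can be sketched as a nearest-codebook lookup. The codebook here is a hypothetical fixed list; in a trained VQ network it is learned jointly with the encoder and decoder.

```python
import math


def quantize(pose_features, codebook):
    """Map each continuous pose feature vector to the index of its nearest codebook entry."""
    indices = []
    for vec in pose_features:
        distances = [math.dist(vec, code) for code in codebook]
        indices.append(min(range(len(codebook)), key=distances.__getitem__))
    return indices


def dequantize(indices, codebook):
    """Recover the quantized vectors a decoder would consume from the discrete token indices."""
    return [codebook[i] for i in indices]
```

Once poses are expressed as token indices, producing a sign sequence becomes a sequence-to-sequence problem over a discrete vocabulary, which is what allows autoregressive decoding.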

16. Title: SANPO: A Scene Understanding, Accessibility, Navigation, Pathfinding, Obstacle Avoidance Dataset

No.: [62]

Link: https://arxiv.org/abs/2309.12172

Authors: Sagar M. Waghmare, Kimberly Wilber, Dave Hawkey, Xuan Yang, Matthew Wilson, Stephanie Debats, Cattalyya Nuengsigkapian, Astuti Sharma, Lars Pandikow, Huisheng Wang, Hartwig Adam, Mikhail Sirotenko

Comments: 10 pages plus additional references. 13 figures

Keywords: outdoor environments, diverse outdoor environments, SANPO, video, environments

Abstract:

We introduce SANPO, a large-scale egocentric video dataset focused on dense prediction in outdoor environments. It contains stereo video sessions collected across diverse outdoor environments, as well as rendered synthetic video sessions. (Synthetic data was provided by Parallel Domain.) All sessions have (dense) depth and odometry labels. All synthetic sessions and a subset of real sessions have temporally consistent dense panoptic segmentation labels. To our knowledge, this is the first human egocentric video dataset with both large-scale dense panoptic segmentation and depth annotations. In addition to the dataset, we also provide zero-shot baselines and SANPO benchmarks for future research. We hope that the challenging nature of SANPO will help advance the state of the art in video segmentation, depth estimation, multi-task visual modeling, and synthetic-to-real domain adaptation, while enabling human navigation systems.

SANPO is available here: this https URL

17. Title: Information Forensics and Security: A quarter-century-long journey

No.: [70]

Link: https://arxiv.org/abs/2309.12159

Authors: Mauro Barni, Patrizio Campisi, Edward J. Delp, Gwenael Doërr, Jessica Fridrich, Nasir Memon, Fernando Pérez-González, Anderson Rocha, Luisa Verdoliva, Min Wu

Keywords: hold perpetrators accountable, Forensics and Security, IFS research area, Information Forensics, people use devices

Abstract:

Information Forensics and Security (IFS) is an active R&D area whose goal is to ensure that people use devices, data, and intellectual properties for authorized purposes and to facilitate the gathering of solid evidence to hold perpetrators accountable. For over a quarter century since the 1990s, the IFS research area has grown tremendously to address the societal needs of the digital information era. The IEEE Signal Processing Society (SPS) has emerged as an important hub and leader in this area, and the article below celebrates some landmark technical contributions. In particular, we highlight the major technological advances in some selected focus areas of the field developed by the research community over the last 25 years, and present future trends.

18. Title: Unsupervised Domain Adaptation for Self-Driving from Past Traversal Features

No.: [76]

Link: https://arxiv.org/abs/2309.12140

Authors: Travis Zhang, Katie Luo, Cheng Perng Phoo, Yurong You, Wei-Lun Chao, Bharath Hariharan, Mark Campbell, Kilian Q. Weinberger

Keywords: significantly improved accuracy, improved accuracy, rapid development, self-driving cars, cars has significantly

Abstract:

The rapid development of 3D object detection systems for self-driving cars has significantly improved accuracy. However, these systems struggle to generalize across diverse driving environments, which can lead to safety-critical failures in detecting traffic participants. To address this, we propose a method that utilizes unlabeled repeated traversals of multiple locations to adapt object detectors to new driving environments. By incorporating statistics computed from repeated LiDAR scans, we guide the adaptation process effectively. Our approach enhances LiDAR-based detection models using spatially quantized historical features and introduces a lightweight regression head to leverage the statistics for feature regularization. Additionally, we leverage the statistics for a novel self-training process to stabilize the training. The framework is detector-model-agnostic, and experiments on real-world datasets demonstrate significant improvements, achieving up to a 20-point performance gain, especially in detecting pedestrians and distant objects. Code is available at this https URL.
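One way to picture "spatially quantized historical features" is to voxelize the LiDAR points of each past traversal and record, per voxel, the fraction of traversals in which it was occupied. This statistic, the 0.5 m voxel size, and the function names are illustrative assumptions; the abstract does not state which statistics the method computes.

```python
import math
from collections import Counter


def voxel_key(point, voxel_size):
    """Integer grid cell containing a 3D point."""
    return tuple(math.floor(c / voxel_size) for c in point)


def traversal_statistics(traversals, voxel_size=0.5):
    """Fraction of past traversals in which each voxel held at least one LiDAR point.

    traversals: list of scans, each a list of (x, y, z) points in a shared world frame.
    """
    counts = Counter()
    for scan in traversals:
        # Use a set so each voxel is counted at most once per traversal.
        counts.update({voxel_key(p, voxel_size) for p in scan})
    return {voxel: n / len(traversals) for voxel, n in counts.items()}
```

Voxels occupied in nearly every traversal tend to correspond to static structure, while rarely occupied ones suggest transient objects; a statistic of this kind needs no labels, which is what makes it usable for unsupervised adaptation.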

19. Title: Vulnerability of 3D Face Recognition Systems to Morphing Attacks

No.: [83]

Link: https://arxiv.org/abs/2309.12118

Authors: Sanjeet Vardam, Luuk Spreeuwers

Keywords: recent years face, hardware and software, recent years, mainstream due, face

Abstract:

In recent years, face recognition systems have been brought into the mainstream due to developments in hardware and software. Consistent efforts are being made to make them better and more secure. This has also driven rapid developments in 3D face recognition (3DFR) systems. These 3DFR systems are expected to overcome certain vulnerabilities of 2D face recognition (2DFR) systems. One such problem that 2DFR systems face is face image morphing. A substantial amount of research is being done on the generation of high-quality face morphs, along with the detection of attacks using these morphs. Comparatively, the vulnerability of 3DFR systems to 3D face morphs is less well understood, yet 3DFR systems are expected to be more robust against such attacks. This paper attempts to gain more information on this matter. The paper describes a couple of methods that can be used to generate 3D face morphs. The face morphs generated using these methods are then compared to the contributing faces to obtain similarity scores. The highest MMPMR obtained is around 40%, with an RMMR of 41.76%, when 3DFR systems are attacked with look-alike morphs.
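MMPMR (Mated Morph Presentation Match Rate), the metric reported above, counts a morph as a successful attack only when it matches every contributing subject. The sketch follows the common definition from the morphing-attack literature, assuming similarity scores where higher means more similar; the threshold is a placeholder, and RMMR is not computed here.

```python
def mmpmr(similarity_scores, threshold):
    """Mated Morph Presentation Match Rate.

    similarity_scores: one list per morph, holding the comparison scores between the morph
    and each contributing subject. A morph is a successful attack only if it matches
    all contributors, i.e. its minimum score exceeds the threshold.
    """
    successes = sum(1 for scores in similarity_scores if min(scores) > threshold)
    return successes / len(similarity_scores)
```

Taking the minimum over contributors is the design choice that matters: a morph that fools the system for only one of its two source identities does not count as a successful attack.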

20. Title: Exploiting CLIP-based Multi-modal Approach for Artwork Classification and Retrieval

No.: [89]

Link: https://arxiv.org/abs/2309.12110

Authors: Alberto Baldrati, Marco Bertini, Tiberio Uricchio, Alberto Del Bimbo

Comments: Proc. of Florence Heri-Tech 2022: The Future of Heritage Science and Technologies: ICT and Digital Heritage, 2022

Keywords: semantically dense textual, dense textual supervision, textual supervision tend, visual models trained, recent CLIP model

Abstract:

Given the recent advances in multimodal image pretraining, where visual models trained with semantically dense textual supervision tend to have better generalization capabilities than those trained using categorical attributes or through unsupervised techniques, in this work we investigate how the recent CLIP model can be applied to several tasks in the artwork domain. We perform exhaustive experiments on the NoisyArt dataset, a dataset of artwork images crawled from public resources on the web. On this dataset, CLIP achieves impressive results on (zero-shot) classification and promising results in both artwork-to-artwork and description-to-artwork retrieval.
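Zero-shot classification with CLIP assigns an image to the class whose text-prompt embedding has the highest cosine similarity with the image embedding, and description-to-artwork retrieval ranks images by the same similarity. The sketch assumes the embeddings have already been produced by CLIP's image and text encoders (not shown); the toy vectors in the test are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    return sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))


def zero_shot_classify(image_embedding, class_text_embeddings):
    """Return the class name whose prompt embedding is most similar to the image embedding."""
    return max(class_text_embeddings,
               key=lambda name: cosine(image_embedding, class_text_embeddings[name]))


def rank_by_similarity(query_embedding, gallery):
    """Description-to-artwork retrieval: gallery keys sorted from most to least similar."""
    return sorted(gallery, key=lambda name: cosine(query_embedding, gallery[name]), reverse=True)
```

No artwork-specific training is involved: the class set is defined entirely by the text prompts, which is why the same embeddings serve both classification and retrieval.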

21. Title: FourierLoss: Shape-Aware Loss Function with Fourier Descriptors

No.: [93]

Link: https://arxiv.org/abs/2309.12106

Authors: Mehmet Bahadir Erden, Selahattin Cansiz, Onur Caki, Haya Khattak, Durmus Etiz, Melek Cosar Yakar, Kerem Duruer, Berke Barut, Cigdem Gunduz-Demir

Keywords: loss function, image segmentation tasks, medical image segmentation, shape-aware loss function, popular choice

Abstract:

Encoder-decoder networks have become a popular choice for various medical image segmentation tasks. When they are trained with a standard loss function, these networks are not explicitly enforced to preserve the shape integrity of an object in an image. However, this ability of the network is important to obtain more accurate results, especially when there is a low-contrast difference between the object and its surroundings. In response to this issue, this work introduces a new shape-aware loss function, which we name FourierLoss. This loss function relies on quantifying the shape dissimilarity between the ground truth and the predicted segmentation maps through the Fourier descriptors calculated on their objects, and penalizing this dissimilarity in network training. Unlike previous studies, FourierLoss offers an adaptive loss function with trainable hyperparameters that control the importance of the level of shape detail that the network is enforced to learn in the training process. This control is achieved by the proposed adaptive loss update mechanism, which learns the hyperparameters end-to-end, simultaneously with the network weights, by backpropagation. As a result of using this mechanism, the network can dynamically change its attention from learning the general outline of an object to learning the details of its contour points, or vice versa, in different training epochs. Working on 2879 computed tomography images of 93 subjects, our experiments revealed that the proposed adaptive shape-aware loss function led to statistically significantly better results for liver segmentation compared to its counterparts.
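Fourier descriptors of a closed contour can be sketched by treating boundary points as complex numbers and taking their discrete Fourier transform; comparing coefficient magnitudes gives a shape dissimilarity that ignores translation. The fixed `weights` stand in for the trainable hyperparameters mentioned in the abstract, and this sketch works on contour points rather than on the differentiable segmentation maps the actual loss is trained with.

```python
import cmath


def fourier_descriptors(contour, num_harmonics):
    """Magnitudes of DFT coefficients 1..num_harmonics of a closed contour.

    contour: list of (x, y) boundary points in order. The DC term (index 0) is skipped,
    so the descriptor ignores translation; taking magnitudes also makes it ignore
    rotation and the choice of starting point.
    """
    z = [complex(x, y) for x, y in contour]
    n = len(z)
    coeffs = [sum(z[k] * cmath.exp(-2j * cmath.pi * u * k / n) for k in range(n)) / n
              for u in range(1, num_harmonics + 1)]
    return [abs(c) for c in coeffs]


def shape_dissimilarity(contour_true, contour_pred, weights):
    """Weighted squared difference of descriptor magnitudes; weights[i] scales harmonic i + 1."""
    d_true = fourier_descriptors(contour_true, len(weights))
    d_pred = fourier_descriptors(contour_pred, len(weights))
    return sum(w * (a - b) ** 2 for w, a, b in zip(weights, d_true, d_pred))
```

Low harmonics capture the overall outline and high harmonics capture fine contour detail, so shifting weight between them mirrors the abstract's description of moving attention from the general outline to contour details.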

+

+

+

+ 22. Title: Multi-Task Cooperative Learning via Searching for Flat Minima

+ ID: [96]

+ Link: https://arxiv.org/abs/2309.12090

+ Authors: Fuping Wu, Le Zhang, Yang Sun, Yuanhan Mo, Thomas Nichols, Bartlomiej W. Papiez

+ Comments: This paper has been accepted by MedAGI workshop in MICCAI2023

+ Keywords: medical image analysis, shown great potential, image analysis, improving the generalizability, shown great

+

+ Abstract:

+ Multi-task learning (MTL) has shown great potential in medical image analysis, improving the generalizability of the learned features and the performance in individual tasks. However, most of the work on MTL focuses on either architecture design or gradient manipulation, while in both scenarios, features are learned in a competitive manner. In this work, we propose to formulate MTL as a multi/bi-level optimization problem, and therefore force features to learn from each task in a cooperative manner. Specifically, we update the sub-model for each task alternately, taking advantage of the learned sub-models of the other tasks. To alleviate the negative transfer problem during the optimization, we search for flat minima of the current objective function with regard to features from other tasks. To demonstrate the effectiveness of the proposed approach, we validate our method on three publicly available datasets. The proposed method shows the advantage of cooperative learning, and yields promising results when compared with the state-of-the-art MTL approaches. The code will be available online.
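The abstract does not spell out how flat minima are searched for. One widely used way to bias optimisation toward flat regions is sharpness-aware minimisation (SAM, Foret et al.), sketched below on a toy quadratic loss. This is a swapped-in illustration of the general idea, not the paper's multi/bi-level algorithm, and the values of `rho`, `lr` and the loss function are arbitrary assumptions.

```python
import math


def sam_step(w, grad_fn, lr=0.1, rho=0.05):
    """One sharpness-aware minimisation (SAM) step.

    1. Take the gradient g at w.
    2. Move to the (approximate) worst-case neighbour w + rho * g / ||g||.
    3. Update w with the gradient evaluated at that perturbed point.
    """
    g = grad_fn(w)
    norm = math.sqrt(sum(gi * gi for gi in g)) or 1.0
    w_adv = [wi + rho * gi / norm for wi, gi in zip(w, g)]
    g_adv = grad_fn(w_adv)
    return [wi - lr * gi for wi, gi in zip(w, g_adv)]


# Toy loss f(w) = sum(w_i^2), whose gradient is 2 * w.
def quadratic_grad(w):
    return [2.0 * wi for wi in w]


w = [1.0, 0.0]
for _ in range(3):
    w = sam_step(w, quadratic_grad)
print(w)
```

Using the gradient from the perturbed point penalises regions where a small step in the worst direction sharply increases the loss, which is what "flat" means in this context.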

+

+

+

+ 23. Title: Survey of Action Recognition, Spotting and Spatio-Temporal Localization in Soccer -- Current Trends and Research Perspectives

+ ID: [102]

+ Link: https://arxiv.org/abs/2309.12067

+ Authors: Karolina Seweryn, Anna Wróblewska, Szymon Łukasik

+ Comments:

+ Keywords: challenging task due, interactions between players, complex and dynamic, dynamic nature, challenging task

+

+ Abstract:

+ Action scene understanding in soccer is a challenging task due to the complex and dynamic nature of the game, as well as the interactions between players. This article provides a comprehensive overview of this task divided into action recognition, spotting, and spatio-temporal action localization, with a particular emphasis on the modalities used and multimodal methods. We explore the publicly available data sources and metrics used to evaluate models' performance. The article reviews recent state-of-the-art methods that leverage deep learning techniques and traditional methods. We focus on multimodal methods, which integrate information from multiple sources, such as video and audio data, and also those that represent one source in various ways. The advantages and limitations of methods are discussed, along with their potential for improving the accuracy and robustness of models. Finally, the article highlights some of the open research questions and future directions in the field of soccer action recognition, including the potential for multimodal methods to advance this field. Overall, this survey provides a valuable resource for researchers interested in the field of action scene understanding in soccer.

+

+

+

+ 24. Title: Self-Calibrating, Fully Differentiable NLOS Inverse Rendering

+ ID: [111]

+ Link: https://arxiv.org/abs/2309.12047

+ Authors: Kiseok Choi, Inchul Kim, Dongyoung Choi, Julio Marco, Diego Gutierrez, Min H. Kim

+ Comments:

+ Keywords: indirect illumination measured, hidden scenes, inverting the optical, optical paths, paths of indirect

+

+ Abstract:

+ Existing time-resolved non-line-of-sight (NLOS) imaging methods reconstruct hidden scenes by inverting the optical paths of indirect illumination measured at visible relay surfaces. These methods are prone to reconstruction artifacts due to inversion ambiguities and capture noise, which are typically mitigated through the manual selection of filtering functions and parameters. We introduce a fully-differentiable end-to-end NLOS inverse rendering pipeline that self-calibrates the imaging parameters during the reconstruction of hidden scenes, using as input only the measured illumination while working both in the time and frequency domains. Our pipeline extracts a geometric representation of the hidden scene from NLOS volumetric intensities and estimates the time-resolved illumination at the relay wall produced by such geometric information using differentiable transient rendering. We then use gradient descent to optimize imaging parameters by minimizing the error between our simulated time-resolved illumination and the measured illumination. Our end-to-end differentiable pipeline couples diffraction-based volumetric NLOS reconstruction with path-space light transport and a simple ray marching technique to extract detailed, dense sets of surface points and normals of hidden scenes. We demonstrate the robustness of our method to consistently reconstruct geometry and albedo, even under significant noise levels.

+

+

+

+ 25. Title: Beyond Image Borders: Learning Feature Extrapolation for Unbounded Image Composition

+ ID: [112]

+ Link: https://arxiv.org/abs/2309.12042

+ Authors: Xiaoyu Liu, Ming Liu, Junyi Li, Shuai Liu, Xiaotao Wang, Lei Lei, Wangmeng Zuo

+ Comments:

+ Keywords: image, image composition, view, camera view, improving image composition

+

+ Abstract:

+ To improve image composition and aesthetic quality, most existing methods modulate captured images by cropping out redundant content near the image borders. However, such image cropping methods are limited to the range of the original image view. Some methods have been suggested that extrapolate the images and predict cropping boxes from the extrapolated image. Nonetheless, the synthesized extrapolated regions may be included in the cropped image, making the composition result unrealistic and potentially degrading image quality. In this paper, we circumvent this issue by presenting a joint framework for both unbounded recommendation of camera view and image composition (i.e., UNIC). In this way, the cropped image is a sub-image of the image acquired by the predicted camera view, and is thus guaranteed to be real and consistent in image quality. Specifically, our framework takes the current camera preview frame as input and provides a recommendation for view adjustment, which contains operations unlimited by the image borders, such as zooming in or out and camera movement. To improve the accuracy of view adjustment prediction, we further extend the field of view by feature extrapolation. After one or several view adjustments, our method converges and outputs both a camera view and a bounding box showing the image composition recommendation. Extensive experiments on datasets constructed from existing image cropping datasets show the effectiveness of UNIC in unbounded recommendation of camera view and image composition. The source code, dataset, and pretrained models are available at this https URL.

+

+

+

+ 26. Title: BASE: Probably a Better Approach to Multi-Object Tracking

+ ID: [116]

+ Link: https://arxiv.org/abs/2309.12035

+ Authors: Martin Vonheim Larsen, Sigmund Rolfsjord, Daniel Gusland, Jörgen Ahlberg, Kim Mathiassen

+ Comments:

+ Keywords: hoc schemes, visual object tracking, tracking algorithms, combine simple tracking, Bayesian Approximation Single-hypothesis

+

+ Abstract:

+ The field of visual object tracking is dominated by methods that combine simple tracking algorithms and ad hoc schemes. Probabilistic tracking algorithms, which are leading in other fields, are surprisingly absent from the leaderboards. We found that accounting for distance in target kinematics, exploiting detector confidence, and modelling non-uniform clutter characteristics are critical for a probabilistic tracker to work in visual tracking. Previous probabilistic methods fail to address most or all of these aspects, which we believe is why they fall so far behind current state-of-the-art (SOTA) methods (there are no probabilistic trackers in the MOT17 top 100). To rekindle progress among probabilistic approaches, we propose a set of pragmatic models addressing these challenges, and demonstrate how they can be incorporated into a probabilistic framework. We present BASE (Bayesian Approximation Single-hypothesis Estimator), a simple, performant, and easily extensible visual tracker that achieves SOTA on MOT17 and MOT20 without using Re-Id. Code will be made available at this https URL

+

+

+

+ 27. Title: Face Identity-Aware Disentanglement in StyleGAN

+ ID: [117]

+ Link: https://arxiv.org/abs/2309.12033

+ Authors: Adrian Suwała, Bartosz Wójcik, Magdalena Proszewska, Jacek Tabor, Przemysław Spurek, Marek Śmieja

+ Comments:

+ Keywords: Conditional GANs, GANs are frequently, person identity, face attributes, attributes

+

+ Abstract:

+ Conditional GANs are frequently used for manipulating the attributes of face images, such as expression, hairstyle, pose, or age. Even though the state-of-the-art models successfully modify the requested attributes, they simultaneously modify other important characteristics of the image, such as a person's identity. In this paper, we focus on solving this problem by introducing PluGeN4Faces, a plugin to StyleGAN, which explicitly disentangles face attributes from a person's identity. Our key idea is to perform training on images retrieved from movie frames, where a given person appears in various poses and with different attributes. By applying a type of contrastive loss, we encourage the model to group images of the same person in similar regions of latent space. Our experiments demonstrate that the modifications of face attributes performed by PluGeN4Faces are significantly less invasive on the remaining characteristics of the image than in the existing state-of-the-art models.

+

+

+

+ 28. Title: Unveiling the Hidden Realm: Self-supervised Skeleton-based Action Recognition in Occluded Environments

+ ID: [121]

+ Link: https://arxiv.org/abs/2309.12029

+ Authors: Yifei Chen, Kunyu Peng, Alina Roitberg, David Schneider, Jiaming Zhang, Junwei Zheng, Ruiping Liu, Yufan Chen, Kailun Yang, Rainer Stiefelhagen

+ Comments: The source code will be made publicly available at this https URL

+ Keywords: autonomous robotic systems, adverse situations involving, situations involving target, involving target occlusions, integrate action recognition

+

+ Abstract:

+ To integrate action recognition methods into autonomous robotic systems, it is crucial to consider adverse situations involving target occlusions. Such a scenario, despite its practical relevance, is rarely addressed in existing self-supervised skeleton-based action recognition methods. To empower robots with the capacity to address occlusion, we propose a simple and effective method. We first pre-train using occluded skeleton sequences, then use k-means clustering (KMeans) on sequence embeddings to group semantically similar samples. Next, we employ K-nearest-neighbor (KNN) to fill in missing skeleton data based on the closest sample neighbors. Imputing incomplete skeleton sequences to create relatively complete sequences as input provides significant benefits to existing skeleton-based self-supervised models. Meanwhile, building on the state-of-the-art Partial Spatio-Temporal Learning (PSTL), we introduce an Occluded Partial Spatio-Temporal Learning (OPSTL) framework. This enhancement utilizes Adaptive Spatial Masking (ASM) for better use of high-quality, intact skeletons. The effectiveness of our imputation methods is verified on the challenging occluded versions of the NTURGB+D 60 and NTURGB+D 120 datasets. The source code will be made publicly available at this https URL.
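A minimal sketch of the K-nearest-neighbour imputation step described above, assuming each skeleton sequence is flattened into a list of coordinates with `None` marking occluded joints. The paper finds neighbours using learned sequence embeddings and KMeans clusters; here, as a simplifying assumption, distances are computed directly on the coordinates observed in the query, and neighbours are drawn from a pool of complete sequences.

```python
import math


def knn_impute(sample, pool, k=2):
    """Fill the None entries of `sample` with the mean of its k nearest neighbours.

    sample: flat list of joint coordinates, None where the joint is occluded.
    pool:   list of complete sequences (same length, no None values).
    Distance uses only the coordinates observed in `sample`.
    """
    observed = [i for i, v in enumerate(sample) if v is not None]

    def distance(other):
        return math.sqrt(sum((sample[i] - other[i]) ** 2 for i in observed))

    neighbours = sorted(pool, key=distance)[:k]
    return [
        v if v is not None else sum(nb[i] for nb in neighbours) / len(neighbours)
        for i, v in enumerate(sample)
    ]


pool = [[0.0, 0.0, 1.0, 1.0], [0.1, 0.0, 1.2, 1.0], [5.0, 5.0, 9.0, 9.0]]
print(knn_impute([0.05, 0.0, None, None], pool, k=2))  # roughly [0.05, 0.0, 1.1, 1.0]
```

Observed coordinates are left untouched; only the occluded entries are replaced, which matches the abstract's description of filling in missing skeleton data before feeding the sequence to the self-supervised model.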

+

+

+

+ 29. Title: Precision in Building Extraction: Comparing Shallow and Deep Models using LiDAR Data

+ ID: [123]

+ Link: https://arxiv.org/abs/2309.12027

+ Authors: Muhammad Sulaiman, Mina Farmanbar, Ahmed Nabil Belbachir, Chunming Rong

+ Comments: Accepted at FAIEMA 2023

+ Keywords: Intersection over Union, population management, deep learning models, infrastructure development, geological observations

+

+ Abstract:

+ Building segmentation is essential in infrastructure development, population management, and geological observations. This article targets shallow models because of their interpretable nature, in order to assess the impact of LiDAR data on supervised segmentation. The benchmark data used in this article were published for the NORA MapAI competition for deep learning models. Shallow models are compared with deep learning models based on Intersection over Union (IoU) and Boundary Intersection over Union (BIoU). In the proposed work, boundary masks are generated from the original masks to improve the BIoU score, which relates to the borderlines of building shapes. The influence of LiDAR data is tested by training the model with only aerial images in task 1 and with a combination of aerial and LiDAR data in task 2, and then comparing the results. Shallow models outperform deep learning models in IoU by 8% using aerial images only (task 1) and by 2% using combined aerial images and LiDAR data (task 2). In contrast, deep learning models show better performance on BIoU scores. Boundary masks improve BIoU scores by 4% in both tasks. Light Gradient-Boosting Machine (LightGBM) performs better than Random Forest (RF) and Extreme Gradient Boosting (XGBoost).
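IoU and BIoU, the two metrics this comparison relies on, can be sketched on small binary masks as follows. The boundary band is simplified here to the foreground pixels lying within `d` pixels (a (2d+1)x(2d+1) window) of background or the image edge, and BIoU is taken as the IoU of the two bands. The official MapAI evaluation may define the band width differently, so the masks and `d=1` are illustrative assumptions.

```python
def iou(a, b):
    """Intersection over Union of two equally sized binary masks (lists of 0/1 rows)."""
    pairs = [(bool(x), bool(y)) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    inter = sum(x and y for x, y in pairs)
    union = sum(x or y for x, y in pairs)
    return inter / union if union else 1.0


def boundary(mask, d=1):
    """Foreground pixels within d pixels (square window) of background or the image edge."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if not mask[r][c]:
                continue
            for dr in range(-d, d + 1):
                for dc in range(-d, d + 1):
                    rr, cc = r + dr, c + dc
                    if not (0 <= rr < h and 0 <= cc < w) or not mask[rr][cc]:
                        out[r][c] = 1
    return out


def boundary_iou(a, b, d=1):
    """IoU restricted to the boundary bands of both masks."""
    return iou(boundary(a, d), boundary(b, d))


gt = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
pred = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0],
]
print(iou(gt, pred))           # 0.5
print(boundary_iou(gt, pred))  # 0.333...
```

A one-column shift keeps half of the area overlap but only a third of the boundary overlap, which illustrates why BIoU is the more sensitive measure of building outlines.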

+

+

+

+ 30. Title: Demystifying Visual Features of Movie Posters for Multi-Label Genre Identification

+ ID: [125]

+ Link: https://arxiv.org/abs/2309.12022

+ Authors: Utsav Kumar Nareti, Chandranath Adak, Soumi Chattopadhyay

+ Comments:

+ Keywords: OTT platforms, media and OTT, social media, part of advertising, advertising and marketing

+

+ Abstract:

+ In the film industry, movie posters have been an essential part of advertising and marketing for many decades, and they continue to play a vital role today in the form of digital posters on online, social media, and OTT platforms. Typically, movie posters can effectively promote and communicate the essence of a film, such as its genre, visual style/tone, vibe, and storyline cues/themes, which are essential for attracting potential viewers. Identifying the genres of a movie often has significant practical applications in recommending the film to target audiences. Previous studies on movie genre identification are limited to subtitles, plot synopses, and movie scenes, which are mostly accessible only after the movie's release. Posters, by contrast, usually contain implicit pre-release information intended to generate mass interest. In this paper, we address automated multi-label genre identification from movie poster images alone, without the aid of any additional textual or metadata information about the movies, which is one of the earliest attempts of its kind. We present a deep transformer network with a probabilistic module to identify movie genres exclusively from the poster. For experimental analysis, we procured 13,882 posters of 13 genres from the Internet Movie Database (IMDb), on which our model's performance was encouraging and even outperformed some major contemporary architectures.

+

+

+

+ 31. Title: Elevating Skeleton-Based Action Recognition with Efficient Multi-Modality Self-Supervision

+ ID: [129]

+ Link: https://arxiv.org/abs/2309.12009

+ Authors: Yiping Wei, Kunyu Peng, Alina Roitberg, Jiaming Zhang, Junwei Zheng, Ruiping Liu, Yufan Chen, Kailun Yang, Rainer Stiefelhagen

+ Comments: The source code will be made publicly available at this https URL

+ Keywords: Self-supervised representation learning, human action recognition, Self-supervised representation, recent years, representation learning

+

+ Abstract:

+ Self-supervised representation learning for human action recognition has developed rapidly in recent years. Most existing works are based on skeleton data and use a multi-modality setup. These works overlook the differences in performance among modalities, which leads to the propagation of erroneous knowledge between modalities; moreover, only three fundamental modalities, i.e., joints, bones, and motions, are used, and no additional modalities are explored.

+In this work, we first propose an Implicit Knowledge Exchange Module (IKEM), which alleviates the propagation of erroneous knowledge between low-performance modalities. Then, we propose three new modalities to enrich the complementary information between modalities. Finally, to maintain efficiency when introducing new modalities, we propose a novel teacher-student framework, named relational cross-modality knowledge distillation, that distills knowledge from the secondary modalities into the mandatory modalities while considering the relationships constrained by anchors, positives, and negatives. The experimental results demonstrate the effectiveness of our approach, unlocking the efficient use of skeleton-based multi-modality data. Source code will be made publicly available at this https URL.

+

+

+

+ 32. Title: Neural Stochastic Screened Poisson Reconstruction

+ ID: [137]

+ Link: https://arxiv.org/abs/2309.11993

+ Authors: Silvia Sellán, Alec Jacobson

+ Comments:

+ Keywords: Reconstructing a surface, underdetermined problem, point cloud, Poisson smoothness prior, Poisson smoothness

+

+ Abstract:

+ Reconstructing a surface from a point cloud is an underdetermined problem. We use a neural network to study and quantify this reconstruction uncertainty under a Poisson smoothness prior. Our algorithm addresses the main limitations of existing work and can be fully integrated into the 3D scanning pipeline, from obtaining an initial reconstruction to deciding on the next best sensor position and updating the reconstruction upon capturing more data.

+

+

+

+ 33. Title: Crop Row Switching for Vision-Based Navigation: A Comprehensive Approach for Efficient Crop Field Navigation

+ ID: [139]

+ Link: https://arxiv.org/abs/2309.11989

+ Authors: Rajitha de Silva, Grzegorz Cielniak, Junfeng Gao

+ Comments: Submitted to IEEE ICRA 2024

+ Keywords: crop row, crop, limited to in-row, row, Vision-based mobile robot

+

+ Abstract:

+ Vision-based mobile robot navigation systems in arable fields are mostly limited to in-row navigation. The process of switching from one crop row to the next in such systems is often aided by GNSS sensors or multiple-camera setups. This paper presents a novel vision-based crop row-switching algorithm that enables a mobile robot to navigate an entire field of arable crops using a single front-mounted camera. The proposed row-switching manoeuvre uses deep learning-based RGB image segmentation and depth data to detect the end of the crop row and the re-entry point to the next crop row, which are used in a multi-state row-switching pipeline. Each state of this pipeline uses visual feedback or wheel odometry of the robot to navigate towards the next crop row. The proposed crop row navigation pipeline was tested in a real sugar beet field containing crop rows with discontinuities, varying light levels, shadows, and irregular headland surfaces. The robot successfully exited one crop row and re-entered the next using the proposed pipeline, with absolute median errors averaging 19.25 cm and 6.77° for the linear and rotational steps of the manoeuvre.

+

+

+

+ 34. Title: ZS6D: Zero-shot 6D Object Pose Estimation using Vision Transformers

+ ID: [141]

+ Link: https://arxiv.org/abs/2309.11986

+ Authors: Philipp Ausserlechner, David Haberger, Stefan Thalhammer, Jean-Baptiste Weibel, Markus Vincze

+ Comments:

+ Keywords: unconstrained real-world scenarios, robotic systems increasingly, systems increasingly encounter, increasingly encounter complex, pose estimation

+

+ Abstract:

+ As robotic systems increasingly encounter complex and unconstrained real-world scenarios, there is a demand to recognize diverse objects. State-of-the-art 6D object pose estimation methods rely on object-specific training and therefore do not generalize to unseen objects. Recent novel-object pose estimation methods address this issue using task-specific fine-tuned CNNs for deep template matching. This adaptation for pose estimation still requires expensive data rendering and training procedures. MegaPose, for example, is trained on a dataset of two million images showing 20,000 different objects to reach such generalization capabilities. To overcome this shortcoming, we introduce ZS6D, for zero-shot novel-object 6D pose estimation. Visual descriptors, extracted using pre-trained Vision Transformers (ViT), are used for matching rendered templates against query images of objects and for establishing local correspondences. These local correspondences enable deriving geometric correspondences, which are used to estimate the object's 6D pose with RANSAC-based PnP. This approach shows that the image descriptors extracted by pre-trained ViTs are well suited to achieve a notable improvement over two state-of-the-art novel-object 6D pose estimation methods, without the need for task-specific fine-tuning. Experiments are performed on LMO, YCBV, and TLESS. Compared with the first of the two methods, we improve the Average Recall on all three datasets, and compared with the second, we improve it on two datasets.

+

+

+

+ 35. Title: NeuralLabeling: A versatile toolset for labeling vision datasets using Neural Radiance Fields

+ ID: [151]

+ Link: https://arxiv.org/abs/2309.11966

+ Authors: Floris Erich, Naoya Chiba, Yusuke Yoshiyasu, Noriaki Ando, Ryo Hanai, Yukiyasu Domae

+ Comments: 8 pages, project website: this https URL

+ Keywords: generating segmentation masks, bounding boxes, Neural Radiance Fields, segmentation masks, toolset for annotating

+

+ Abstract:

+ We present NeuralLabeling, a labeling approach and toolset for annotating a scene using either bounding boxes or meshes, and for generating segmentation masks, affordance maps, 2D bounding boxes, 3D bounding boxes, 6DOF object poses, depth maps, and object meshes. NeuralLabeling uses Neural Radiance Fields (NeRF) as the renderer, allowing labeling to be performed with 3D spatial tools while incorporating geometric cues such as occlusions, and relying only on images captured from multiple viewpoints as input. To demonstrate the applicability of NeuralLabeling to a practical problem in robotics, we added ground-truth depth maps to 30,000 frames of RGB images and noisy depth maps of transparent objects (glasses placed in a dishwasher) captured with an RGBD sensor, yielding the Dishwasher30k dataset. We show that training a simple deep neural network with supervision from the annotated depth maps yields higher reconstruction performance than training with the previously applied weakly supervised approach.

+

+

+

+ 36. Title: Ego3DPose: Capturing 3D Cues from Binocular Egocentric Views

+ ID: [154]

+ Link: https://arxiv.org/abs/2309.11962

+ Authors: Taeho Kang, Kyungjin Lee, Jinrui Zhang, Youngki Lee

+ Comments: 12 pages, 10 figures, to be published as SIGGRAPH Asia 2023 Conference Papers

+ Keywords: highly accurate binocular, accurate binocular egocentric, highly accurate, pose reconstruction system, egocentric

+

+ Abstract:

+ We present Ego3DPose, a highly accurate binocular egocentric 3D pose reconstruction system. The binocular egocentric setup offers practicality and usefulness in various applications; however, it remains largely under-explored. It has suffered from low pose estimation accuracy due to viewing distortion, severe self-occlusion, and the limited field of view of the joints in egocentric 2D images. Here, we notice that two important 3D cues contained in the egocentric binocular input, stereo correspondences and perspective, are neglected. Current methods rely heavily on 2D image features, learning 3D information implicitly, which introduces biases towards commonly observed motions and leads to low overall accuracy. We observe that they fail not only in challenging occlusion cases but also in estimating visible joint positions. To address these challenges, we propose two novel approaches. First, we design a two-path network architecture with a path that estimates pose per limb independently from its binocular heatmaps. Since no full-body information is provided to this path, it alleviates bias toward the trained full-body distribution. Second, we leverage the egocentric view of body limbs, which exhibits strong perspective variance (e.g., a hand appears significantly larger when it is close to the camera). We propose a new perspective-aware representation using trigonometry, enabling the network to estimate the 3D orientation of limbs. Finally, we develop an end-to-end pose reconstruction network that synergizes both techniques. Our comprehensive evaluations demonstrate that Ego3DPose outperforms state-of-the-art models, reducing the pose estimation error (i.e., MPJPE) by 23.1% on the UnrealEgo dataset. Our qualitative results highlight the superiority of our approach across a range of scenarios and challenges.
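The 23.1% figure is presumably a relative reduction in MPJPE, the mean Euclidean distance between predicted and ground-truth 3D joints. A small sketch of both quantities follows; the joint lists and the 100 to 76.9 numbers are made-up illustrations, and the root-joint alignment used by many evaluation protocols is deliberately omitted.

```python
import math


def mpjpe(pred, gt):
    """Mean per-joint position error: average Euclidean distance between
    corresponding predicted and ground-truth 3D joints (same units, e.g. mm).
    No root alignment is applied here."""
    if not gt or len(pred) != len(gt):
        raise ValueError("pred and gt must be non-empty and of equal length")
    return sum(math.dist(p, g) for p, g in zip(pred, gt)) / len(gt)


def relative_reduction(baseline_error, new_error):
    """Percentage reduction of an error metric relative to a baseline."""
    return (baseline_error - new_error) / baseline_error * 100.0


gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 3.0), (1.0, 4.0, 0.0)]
print(mpjpe(pred, gt))                  # 3.5
print(relative_reduction(100.0, 76.9))  # about 23.1
```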

+

+

+

+ 37. Title: A Study of Forward-Forward Algorithm for Self-Supervised Learning

+ ID: [158]

+ Link: https://arxiv.org/abs/2309.11955

+ Authors: Jonas Brenig, Radu Timofte

+ Comments:

+ Keywords: Self-supervised representation learning, representation learning, remarkable progress, learn useful image, Self-supervised representation

+

+ Abstract:

+ Self-supervised representation learning has seen remarkable progress in the last few years, with some of the recent methods being able to learn useful image representations without labels. These methods are trained using backpropagation, the de facto standard. Recently, Geoffrey Hinton proposed the forward-forward algorithm as an alternative training method. It utilizes two forward passes and a separate loss function for each layer to train the network without backpropagation.

+In this study, we investigate for the first time the performance of forward-forward vs. backpropagation for self-supervised representation learning and provide insights into the learned representation spaces. Our benchmark employs four standard datasets, namely MNIST, F-MNIST, SVHN, and CIFAR-10, and three commonly used self-supervised representation learning techniques, namely rotation, flip, and jigsaw.

+Our main finding is that while the forward-forward algorithm performs comparably to backpropagation during (self-)supervised training, the transfer performance is significantly lagging behind in all the studied settings. This may be caused by a combination of factors, including having a loss function for each layer and the way the supervised training is realized in the forward-forward paradigm. In comparison to backpropagation, the forward-forward algorithm focuses more on the boundaries and drops part of the information unnecessary for making decisions which harms the representation learning goal. Further investigation and research are necessary to stabilize the forward-forward strategy for self-supervised learning, to work beyond the datasets and configurations demonstrated by Geoffrey Hinton.
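To make "two forward passes and a separate loss function for each layer" concrete, here is a toy single forward-forward layer following Hinton's description: a layer's goodness is the sum of its squared activations, and the layer is trained so that goodness lies above a threshold for positive data and below it for negative data. The threshold value, the plain-list weights and the softplus form of the loss are illustrative choices, and the weight update itself is omitted.

```python
import math


def layer_forward(weights, x):
    """Fully connected ReLU layer; `weights` is a list of rows (out_dim x in_dim)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def goodness(h):
    """Goodness of a layer's activity: the sum of squared activations."""
    return sum(a * a for a in h)


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def ff_layer_loss(weights, x_pos, x_neg, theta=2.0):
    """Local loss for one layer: the negative log-likelihood of classifying
    positive data as positive and negative data as negative, where
    p(positive) = sigmoid(goodness - theta)."""
    g_pos = goodness(layer_forward(weights, x_pos))
    g_neg = goodness(layer_forward(weights, x_neg))
    return softplus(theta - g_pos) + softplus(g_neg - theta)


def normalize(h):
    """Length-normalise activations before passing them to the next layer,
    so the next layer cannot simply read off this layer's goodness."""
    n = math.sqrt(sum(a * a for a in h)) or 1.0
    return [a / n for a in h]


W = [[1.0, 0.0], [0.0, 1.0]]
print(ff_layer_loss(W, [2.0, 0.0], [0.1, 0.0]))  # small: positive above, negative below theta
print(ff_layer_loss(W, [0.1, 0.0], [2.0, 0.0]))  # large: the roles are reversed
```

Each layer only needs its own two forward passes (one positive, one negative) to compute this loss, which is why no backward pass through the whole network is required.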

+

+

+

+ 38. Title: Fully Transformer-Equipped Architecture for End-to-End Referring Video Object Segmentation

+ ID: [165]

+ Link: https://arxiv.org/abs/2309.11933

+ Authors: Ping Li, Yu Zhang, Li Yuan, Xianghua Xu

+ Comments:

+ Keywords: Video Object Segmentation, Referring Video Object, natural language query, Object Segmentation, referred object

+

+ Abstract:

+ Referring Video Object Segmentation (RVOS) requires segmenting the object in a video referred to by a natural language query. Existing methods mainly rely on sophisticated pipelines to tackle this cross-modal task, and do not explicitly model the object-level spatial context, which plays an important role in locating the referred object. Therefore, we propose an end-to-end RVOS framework built entirely upon transformers, termed \textit{Fully Transformer-Equipped Architecture} (FTEA), which treats the RVOS task as a mask sequence learning problem and regards all the objects in the video as candidate objects. Given a video clip with a text query, the visual-textual features are produced by the encoder, while the corresponding pixel-level and word-level features are aligned in terms of semantic similarity. To capture the object-level spatial context, we have developed the Stacked Transformer, which individually characterizes the visual appearance of each candidate object, whose feature map is decoded directly into the binary mask sequence in order. Finally, the model finds the best match between the mask sequence and the text query. In addition, to diversify the generated masks for candidate objects, we impose a diversity loss on the model to capture a more accurate mask of the referred object. Empirical studies have shown the superiority of the proposed method on three benchmarks: FTEA achieves 45.1% and 38.7% mAP on A2D Sentences (3782 videos) and J-HMDB Sentences (928 videos), respectively, and 56.6% in terms of $\mathcal{J}\&\mathcal{F}$ on Ref-YouTube-VOS (3975 videos and 7451 objects). In particular, compared with the best competing method, it gains 2.1% and 3.2% in P@0.5 on the former two benchmarks, respectively, and 2.9% in $\mathcal{J}$ on the latter.
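In referring segmentation benchmarks, P@X is usually reported as the percentage of test samples whose predicted mask reaches an IoU above X with the ground truth. The sketch below computes it from a list of per-sample IoUs. The IoU values are invented, and whether the comparison is strict varies between evaluation scripts, so the `>` used here is an assumption.

```python
def precision_at(ious, threshold=0.5):
    """P@threshold: percentage of test samples whose predicted mask has IoU
    with the ground truth above `threshold` (strict '>' is an assumption)."""
    if not ious:
        raise ValueError("need at least one IoU value")
    hits = sum(1 for v in ious if v > threshold)
    return 100.0 * hits / len(ious)


ious = [0.30, 0.55, 0.70, 0.50]  # hypothetical per-sample IoUs
for t in (0.5, 0.6, 0.7):
    print(f"P@{t}: {precision_at(ious, t):.1f}")
```

A reported gain such as "2.1% in P@0.5" is then a difference between two such percentages.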

+

+

+

+ 39. Title: Bridging the Gap: Learning Pace Synchronization for Open-World Semi-Supervised Learning

+ ID: [168]

+ Link: https://arxiv.org/abs/2309.11930

+ Authors: Bo Ye, Kai Gan, Tong Wei, Min-Ling Zhang

+ Comments:

+ Keywords: open-world semi-supervised learning, machine learning model, unlabeled data, labeled data, open-world semi-supervised

+

+ Abstract:

+ In open-world semi-supervised learning, a machine learning model is tasked with uncovering novel categories from unlabeled data while maintaining performance on seen categories from labeled data. The central challenge is the substantial learning gap between seen and novel categories, as the model learns the former faster due to accurate supervisory information. To address this, we introduce 1) an adaptive margin loss based on estimated class distribution, which encourages a large negative margin for samples in seen classes, to synchronize learning paces, and 2) pseudo-label contrastive clustering, which pulls together samples which are likely from the same class in the output space, to enhance novel class discovery. Our extensive evaluations on multiple datasets demonstrate that existing models still hinder novel class learning, whereas our approach strikingly balances both seen and novel classes, achieving a remarkable 3% average accuracy increase on the ImageNet dataset compared to the prior state-of-the-art. Additionally, we find that fine-tuning the self-supervised pre-trained backbone significantly boosts performance over the default in prior literature. After our paper is accepted, we will release the code.

+

+

+

+ 40. Title: Video Scene Location Recognition with Neural Networks

+ ID: [169]

+ Link: https://arxiv.org/abs/2309.11928

+ Authors: Lukáš Korel, Petr Pulc, Jiří Tumpach, Martin Holeňa

+ Comments:

+ Keywords: repeated shooting locations, artificial neural networks, Theory television series, Big Bang Theory, Bang Theory television

+

+ Abstract:

+ This paper provides an insight into the possibility of scene recognition from a video sequence with a small set of repeated shooting locations (such as in television series) using artificial neural networks. The basic idea of the presented approach is to select a set of frames from each scene, transform them by a pre-trained single-image pre-processing convolutional network, and classify the scene location with subsequent layers of the neural network. The considered networks have been tested and compared on a dataset obtained from The Big Bang Theory television series. We have investigated different neural network layers to combine individual frames, particularly AveragePooling, MaxPooling, Product, Flatten, LSTM, and Bidirectional LSTM layers. We have observed that only some of the approaches are suitable for the task at hand.
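The frame-combination layers compared in the paper can be pictured with a toy version that works on already-extracted per-frame feature vectors (standing in for the pre-trained CNN outputs). Interpreting "Product" as an element-wise product across frames is an assumption, the LSTM variants are left out, and the linear `predict_location` scorer is a hypothetical stand-in for the paper's subsequent layers.

```python
import math


def aggregate(frames, mode="average"):
    """Combine per-frame feature vectors (equal-length lists) into one clip vector.

    average / max / product work element-wise across frames; flatten concatenates.
    """
    if not frames:
        raise ValueError("need at least one frame")
    columns = list(zip(*frames))
    if mode == "average":
        return [sum(col) / len(col) for col in columns]
    if mode == "max":
        return [max(col) for col in columns]
    if mode == "product":
        return [math.prod(col) for col in columns]
    if mode == "flatten":
        return [x for frame in frames for x in frame]
    raise ValueError(f"unknown mode: {mode}")


def predict_location(clip_vector, class_weights):
    """Linear classifier: index of the location whose weight vector scores highest."""
    scores = [sum(w * x for w, x in zip(ws, clip_vector)) for ws in class_weights]
    return max(range(len(scores)), key=scores.__getitem__)


frames = [[1.0, 2.0], [3.0, 0.0]]  # two frames, 2-D features (hypothetical)
for mode in ("average", "max", "product", "flatten"):
    print(mode, aggregate(frames, mode))
print(predict_location(aggregate(frames), [[1.0, 0.0], [0.0, 1.0]]))
```

Average, max and product keep the clip vector the same size as one frame regardless of how many frames are selected, whereas flatten grows with the frame count and so ties the classifier to a fixed number of frames.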

+

+

+

+ 41. Title: TextCLIP: Text-Guided Face Image Generation And Manipulation Without Adversarial Training

+ ID: [173]

+ Link: https://arxiv.org/abs/2309.11923

+ Authors: Xiaozhou You, Jian Zhang

+ Comments: 10 pages, 6 figures

+ Keywords: semantically edit parts, desired images conditioned, refers to semantically, semantically edit, edit parts

+

+ Abstract:

+ Text-guided image generation aims to generate desired images conditioned on given texts, while text-guided image manipulation refers to semantically editing parts of a given image based on specified texts. For these two similar tasks, the key point is to ensure image fidelity as well as semantic consistency. Many previous approaches require complex multi-stage generation and adversarial training, while struggling to provide a unified framework for both tasks. In this work, we propose TextCLIP, a unified framework for text-guided image generation and manipulation without adversarial training. The proposed method accepts input from images or random noise, corresponding to these two different tasks, and, conditioned on specific texts, a carefully designed mapping network that exploits the powerful generative capabilities of StyleGAN and the text-image representation capabilities of Contrastive Language-Image Pre-training (CLIP) generates images at resolutions of up to $1024\times1024$. Extensive experiments on the Multi-modal CelebA-HQ dataset demonstrate that our proposed method outperforms existing state-of-the-art methods on both text-guided generation and manipulation tasks.

+

+

+

+ 42. Title: Unlocking the Heart Using Adaptive Locked Agnostic Networks

+ No.: [181]

+ Link: https://arxiv.org/abs/2309.11899

+ Authors: Sylwia Majchrowska, Anders Hildeman, Philip Teare, Tom Diethe

+ Comments: The article was accepted to ICCV 2023 workshop PerDream: PERception, Decision making and REAsoning through Multimodal foundational modeling

+ Keywords: imaging applications requires, medical imaging applications, deep learning models, Locked Agnostic Network, Adaptive Locked Agnostic

+

+ Supervised training of deep learning models for medical imaging applications requires a significant amount of labeled data. This poses a challenge, as the images must be annotated by medical professionals. To address this limitation, we introduce the Adaptive Locked Agnostic Network (ALAN), a concept involving self-supervised visual feature extraction using a large backbone model to produce anatomically robust semantic self-segmentation. In the ALAN methodology, this self-supervised training occurs only once on a large and diverse dataset. Due to the intuitive interpretability of the segmentation, downstream models tailored for specific tasks can be easily designed using white-box models with few parameters. This, in turn, opens up the possibility of communicating the inner workings of a model to domain experts and introducing prior knowledge into it. It also means that the downstream models become less data-hungry compared to fully supervised approaches. These characteristics make ALAN particularly well-suited for resource-scarce scenarios, such as costly clinical trials and rare diseases. In this paper, we apply the ALAN approach to three publicly available echocardiography datasets: EchoNet-Dynamic, CAMUS, and TMED-2. Our findings demonstrate that the self-supervised backbone model robustly identifies anatomical subregions of the heart in an apical four-chamber view. Building upon this, we design two downstream models, one for segmenting a target anatomical region, and a second for echocardiogram view classification.

+

+

+

+ 43. Title: On-the-Fly SfM: What you capture is What you get

+ No.: [192]

+ Link: https://arxiv.org/abs/2309.11883

+ Authors: Zongqian Zhan, Rui Xia, Yifei Yu, Yibo Xu, Xin Wang

+ Comments: This work has been submitted to the IEEE International Conference on Robotics and Automation (ICRA 2024) for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible

+ Keywords: Structure from motion, made on Structure, ample achievements, Structure, SfM

+

+ Over the last decades, ample achievements have been made in Structure from Motion (SfM). However, the vast majority of these methods work offline, i.e., images are first captured and then fed together into an SfM pipeline to obtain poses and a sparse point cloud. In this work, by contrast, we present on-the-fly SfM: SfM runs online during image capture, and the pose and points of each newly captured image are estimated as soon as it arrives, i.e., what you capture is what you get. Specifically, our approach first employs a vocabulary tree, trained in an unsupervised manner on learning-based global features, for fast retrieval of each newly arriving image. Then, a robust feature matching mechanism with least squares (LSM) is presented to improve image registration performance. Finally, by investigating the influence of the neighboring images connected to each newly arriving image, an efficient hierarchical weighted local bundle adjustment (BA) is used for optimization. Extensive experimental results demonstrate that on-the-fly SfM robustly registers images online while they are being captured.
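The retrieval step can be pictured as a growing index that is queried for each new image before that image is added. The sketch below is a deliberately simplified stand-in. It uses a flat cosine-similarity search over global descriptors instead of the paper's unsupervised vocabulary tree. `OnlineImageIndex` and the toy 3-D descriptors are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def l2_normalize(vector: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


class OnlineImageIndex:
    """Hypothetical flat index of global descriptors for images registered so far."""

    def __init__(self) -> None:
        self._descriptors: Dict[str, List[float]] = {}

    def add(self, image_id: str, descriptor: Sequence[float]) -> None:
        self._descriptors[image_id] = l2_normalize(descriptor)

    def query(self, descriptor: Sequence[float], k: int) -> List[str]:
        """Return the ids of the k most similar registered images (cosine similarity)."""
        q = l2_normalize(descriptor)
        scored = [
            (sum(a * b for a, b in zip(q, d)), image_id)
            for image_id, d in self._descriptors.items()
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [image_id for _, image_id in scored[:k]]


# Simulated capture loop: each new image is matched against earlier ones, then registered.
index = OnlineImageIndex()
stream = [("img0", [1.0, 0.0, 0.0]), ("img1", [0.0, 1.0, 0.0]), ("img2", [0.9, 0.1, 0.0])]
for image_id, descriptor in stream:
    candidates = index.query(descriptor, k=2)  # would feed feature matching and registration
    print(image_id, "->", candidates)
    index.add(image_id, descriptor)
```

A flat search costs time linear in the number of registered images. A vocabulary tree is one way to keep per-query retrieval fast as the capture session grows.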

+

+

+

+ 44. Title: Using Saliency and Cropping to Improve Video Memorability

+ No.: [193]

+ Link: https://arxiv.org/abs/2309.11881

+ Authors: Vaibhav Mudgal, Qingyang Wang, Lorin Sweeney, Alan F. Smeaton

+ Comments: 12 pages

+ Keywords: emotional connection, video content, Video, viewer, memorability

+

+ Video memorability is a measure of how likely a particular video is to be remembered by a viewer when that viewer has no emotional connection with the video content. It is an important characteristic because more memorable videos are more likely to be shared, viewed, and discussed. This paper presents the results of a series of experiments in which we improved the memorability of a video by selectively cropping frames based on image saliency. We present results for a basic fixed crop as well as for dynamic cropping, in which both the size of the crop and its position within the frame change as the video plays and saliency is tracked. Our results indicate that the memorability score can be improved, especially for videos with low initial memorability.
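Saliency-based cropping reduces to finding the window with the largest total saliency. The sketch below assumes a per-frame saliency map given as a 2-D list; how that map is produced is outside its scope. It uses a summed-area table so each candidate window is scored in constant time. The fixed crop size and the exponential-moving-average smoothing of crop positions across frames are assumptions for illustration. The paper's dynamic cropping also varies the crop size, which this sketch does not do.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]


def summed_area_table(saliency: Grid) -> Grid:
    """sat[y][x] holds the total saliency of rows < y and columns < x."""
    h, w = len(saliency), len(saliency[0])
    sat = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            sat[y + 1][x + 1] = saliency[y][x] + sat[y][x + 1] + sat[y + 1][x] - sat[y][x]
    return sat


def best_crop(saliency: Grid, crop_h: int, crop_w: int) -> Tuple[int, int]:
    """Top-left (row, col) of the crop_h x crop_w window with the highest total saliency."""
    sat = summed_area_table(saliency)
    h, w = len(saliency), len(saliency[0])
    best_total, best_pos = float("-inf"), (0, 0)
    for y in range(h - crop_h + 1):
        for x in range(w - crop_w + 1):
            total = sat[y + crop_h][x + crop_w] - sat[y][x + crop_w] - sat[y + crop_h][x] + sat[y][x]
            if total > best_total:
                best_total, best_pos = total, (y, x)
    return best_pos


def smooth_positions(positions: List[Tuple[int, int]], alpha: float = 0.3) -> List[Tuple[int, int]]:
    """Exponential moving average of per-frame crop corners, so the crop drifts instead of jumping."""
    smoothed: List[Tuple[int, int]] = []
    state: Optional[Tuple[float, float]] = None
    for y, x in positions:
        if state is None:
            state = (float(y), float(x))
        else:
            state = (state[0] + alpha * (y - state[0]), state[1] + alpha * (x - state[1]))
        smoothed.append((round(state[0]), round(state[1])))
    return smoothed


# Two toy saliency maps whose salient region moves from bottom-right to top-left.
frame_a = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 5, 5], [0, 0, 5, 5]]
frame_b = [[5, 5, 0, 0], [5, 5, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
per_frame = [best_crop(f, 2, 2) for f in (frame_a, frame_b)]
print(per_frame)                                # [(2, 2), (0, 0)]
print(smooth_positions(per_frame, alpha=0.5))  # [(2, 2), (1, 1)]
```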

+

+

+

+ 45. Title: Multi-level Asymmetric Contrastive Learning for Medical Image Segmentation Pre-training

+ No.: [194]

+ Link: https://arxiv.org/abs/2309.11876

+ Authors: Shuang Zeng, Lei Zhu, Xinliang Zhang, Zifeng Tian, Qian Chen, Lujia Jin, Jiayi Wang, Yanye Lu

+ Comments:

+ Keywords: limited labeled data, unlabeled data, labeled data, Contrastive learning, leads a promising

+

+ Contrastive learning, a powerful technique for learning image-level representations from unlabeled data, offers a promising direction for resolving the dilemma between large-scale pre-training and limited labeled data. However, most existing contrastive learning strategies are designed mainly for downstream tasks on natural images. As a result, they are sub-optimal, and can even be worse than learning from scratch, when applied directly to medical images, whose downstream tasks are usually segmentation. In this work, we propose a novel asymmetric contrastive learning framework named JCL for medical image segmentation with self-supervised pre-training. Specifically, (1) a novel asymmetric contrastive learning strategy is proposed to pre-train both the encoder and the decoder simultaneously in one stage, providing a better initialization for segmentation models. (2) A multi-level contrastive loss is designed to take into account the correspondence among feature-level, image-level, and pixel-level projections, ensuring that multi-level representations are learned by the encoder and decoder during pre-training. (3) Experiments on multiple medical image datasets indicate that our JCL framework outperforms existing SOTA contrastive learning strategies.
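A multi-level contrastive objective is typically built from InfoNCE terms. The sketch below shows a standard InfoNCE loss on toy embeddings and a weighted sum over levels. Several details are assumptions, not taken from the abstract: how JCL forms positive pairs, which encoder or decoder outputs feed each level, and the weights and temperature.

```python
import math
from typing import List, Sequence, Tuple

Batch = List[List[float]]


def _normalize(vector: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def info_nce(anchors: Batch, positives: Batch, temperature: float = 0.1) -> float:
    """InfoNCE: anchor i should be closer to positives[i] than to every other positive in the batch."""
    a = [_normalize(v) for v in anchors]
    p = [_normalize(v) for v in positives]
    total = 0.0
    for i, anchor in enumerate(a):
        logits = [sum(x * y for x, y in zip(anchor, pos)) / temperature for pos in p]
        peak = max(logits)  # log-sum-exp shift for numerical stability
        log_denominator = peak + math.log(sum(math.exp(logit - peak) for logit in logits))
        total += log_denominator - logits[i]  # -log softmax probability of the true pair
    return total / len(a)


def multi_level_loss(levels: List[Tuple[Batch, Batch]], weights: List[float]) -> float:
    """Weighted sum of one InfoNCE term per level (e.g. feature-, image-, pixel-level projections)."""
    return sum(w * info_nce(anchors, positives) for (anchors, positives), w in zip(levels, weights))


aligned = ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])  # each anchor matches its positive
swapped = ([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])  # each anchor matches the wrong one
print(info_nce(*aligned) < info_nce(*swapped))  # True
```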

+

+

+

+ 46. Title: OSNet & MNetO: Two Types of General Reconstruction Architectures for Linear Computed Tomography in Multi-Scenarios

+ No.: [205]

+ Link: https://arxiv.org/abs/2309.11858

+ Authors: Zhisheng Wang, Zihan Deng, Fenglin Liu, Yixing Huang, Haijun Yu, Junning Cui

+ Comments: 13 pages, 13 figures

+ Keywords: actively attracted attention, Hilbert filtering, LCT, linear computed tomography, DBP images

+

+ Recently, linear computed tomography (LCT) systems have attracted increasing attention. The backprojection filtration (BPF) algorithm is an effective way to mitigate projection truncation and to image the region of interest (ROI) in LCT. However, in BPF for LCT it is difficult to achieve stable interior reconstruction. In addition, for the differentiated backprojection (DBP) images of LCT, the repeated sequence of rotation, finite inversion of the Hilbert transform (Hilbert filtering), and inverse rotation blurs the image. We propose two types of reconstruction architectures that handle multiple LCT reconstruction scenarios, including the interior ROI, the complete object, and the exterior region beyond the field of view (FOV), while avoiding the rotation operations of Hilbert filtering. The first overlays multiple DBP images to obtain a complete DBP image and then uses a network to learn the Hilbert filtering of this overlaid image; it is referred to as the Overlay-Single Network (OSNet). The second trains multiple networks, each learning a directional Hilbert filtering model for the DBP images of one linear scan, and then overlays the reconstructed results; it is referred to as Multiple Networks Overlaying (MNetO). In both architectures, we introduce a Swin Transformer (ST) block into the generator of pix2pixGAN to extract local and global features from DBP images simultaneously. We investigate both architectures with respect to different networks, FOV sizes, pixel sizes, numbers of projections, geometric magnifications, and processing times. Experimental results show that both architectures can recover images. OSNet outperforms BPF in various scenarios. Among the networks, ST-pix2pixGAN is superior to pix2pixGAN and CycleGAN. MNetO exhibits a few artifacts due to the differences among its multiple models, but any one of its models is suitable for imaging the exterior edge in a certain direction.
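Both architectures are trained to replace the Hilbert filtering step of BPF. For intuition about what such a filter does along one scan direction, the sketch below computes the ideal periodic discrete Hilbert transform of a single row in the frequency domain. It multiplies positive frequencies by -j and negative frequencies by +j. This is a simplification under stated assumptions. BPF for LCT needs the finite inverse Hilbert transform on truncated support, which this sketch does not implement, and the test row is synthetic.

```python
import cmath
import math
from typing import List


def hilbert_transform(signal: List[float]) -> List[float]:
    """Periodic discrete Hilbert transform of one row, computed via the DFT."""
    n = len(signal)
    # Forward DFT (O(n^2), adequate for a short illustrative row).
    spectrum = [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    for k in range(n):
        if k == 0 or 2 * k == n:
            spectrum[k] = 0j       # DC and Nyquist bins have no defined sign; zero them
        elif 2 * k < n:
            spectrum[k] *= -1j     # positive frequencies: multiply by -j
        else:
            spectrum[k] *= 1j      # negative frequencies: multiply by +j
    # Inverse DFT; the result is real up to rounding error.
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]


# The Hilbert transform of a cosine is the sine of the same frequency.
row = [math.cos(2 * math.pi * 2 * t / 16) for t in range(16)]
filtered = hilbert_transform(row)
```

For periodic signals without DC or Nyquist content, applying this transform twice negates the signal, so its inverse is simply its negative. The finite Hilbert inversion used in BPF has no such simple closed form, which is part of what makes the step costly.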

+

+

+

+ 47. Title: TCOVIS: Temporally Consistent Online Video Instance Segmentation

+ No.: [206]

+ Link: https://arxiv.org/abs/2309.11857

+ Authors: Junlong Li, Bingyao Yu, Yongming Rao, Jie Zhou, Jiwen Lu

+ Comments: 11 pages, 4 figures. This paper has been accepted for ICCV 2023

+ Keywords: online methods achieving, video instance segmentation, recent years, significant progress, methods achieving

+

+ In recent years, significant progress has been made in video instance segmentation (VIS), with many offline and online methods achieving state-of-the-art performance. While offline methods have the advantage of producing temporally consistent predictions, they are not suitable for real-time scenarios. Conversely, online methods are more practical, but maintaining temporal consistency remains a challenging task. In this paper, we propose a novel online method for video instance segmentation, called TCOVIS, which fully exploits the temporal information in a video clip. The core of our method consists of a global instance assignment strategy and a spatio-temporal enhancement module, which improve the temporal consistency of the features from two aspects. Specifically, we perform global optimal matching between the predictions and ground truth across the whole video clip, and supervise the model with the global optimal objective. We also capture the spatial feature and aggregate it with the semantic feature between frames, thus realizing the spatio-temporal enhancement. We evaluate our method on four widely adopted VIS benchmarks, namely YouTube-VIS 2019/2021/2022 and OVIS, and achieve state-of-the-art performance on all benchmarks without bells-and-whistles. For instance, on YouTube-VIS 2021, TCOVIS achieves 49.5 AP and 61.3 AP with ResNet-50 and Swin-L backbones, respectively. Code is available at this https URL.
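Global optimal matching means choosing one prediction-to-ground-truth assignment for the whole clip rather than re-matching every frame. The brute-force sketch below contrasts the two, using made-up cost matrices and hypothetical function names. Per-frame matching can flip identities when one frame is ambiguous, while the clip-level assignment stays consistent. TCOVIS's actual cost terms are not reproduced here.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

CostMatrix = List[List[float]]  # cost[i][j]: prediction i vs ground-truth instance j


def clip_level_assignment(costs: Sequence[CostMatrix]) -> Tuple[Tuple[int, ...], float]:
    """One prediction-to-instance assignment shared by every frame, minimizing the clip-wide cost."""
    n = len(costs[0])
    best_perm: Tuple[int, ...] = tuple(range(n))
    best_total = float("inf")
    for perm in permutations(range(n)):  # brute force: only sensible for tiny n
        total = sum(frame[i][perm[i]] for frame in costs for i in range(n))
        if total < best_total:
            best_perm, best_total = perm, total
    return best_perm, best_total


def per_frame_assignment(costs: Sequence[CostMatrix]) -> List[Tuple[int, ...]]:
    """Independent optimal assignment in each frame (may flip identities between frames)."""
    return [clip_level_assignment([frame])[0] for frame in costs]


costs = [
    [[1.0, 0.9], [0.9, 1.0]],  # frame 0: locally, swapping looks slightly better
    [[0.1, 1.0], [1.0, 0.1]],  # frames 1-2: identity is clearly better
    [[0.1, 1.0], [1.0, 0.1]],
]
print(per_frame_assignment(costs))   # [(1, 0), (0, 1), (0, 1)]
print(clip_level_assignment(costs))  # identity kept for the whole clip
```

The clip-wide cost is a sum over frames. Adding the per-frame matrices and solving a single assignment problem, for example with `scipy.optimize.linear_sum_assignment`, therefore gives the same result at realistic instance counts. The brute-force loop only keeps the sketch dependency-free.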

+

+

+

+ 48. Title: DEYOv3: DETR with YOLO for Real-time Object Detection

+ No.: [209]

+ Link: https://arxiv.org/abs/2309.11851

+ Authors: Haodong Ouyang

+ Comments: Work in progress

+ Keywords: gained significant attention, research community due, training method, training, outstanding performance

+
