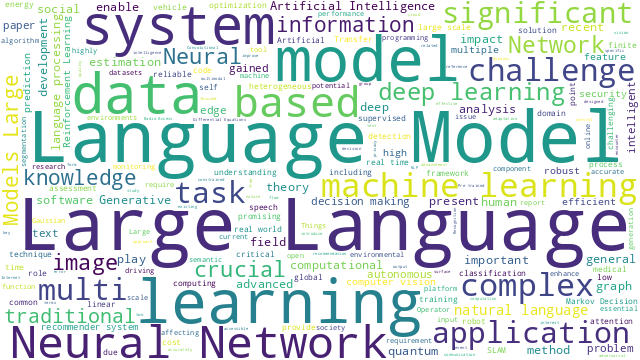

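This blog post lists the latest papers fetched daily from arXiv, grouped by major area: computer vision, natural language processing, machine learning, artificial intelligence, and so on.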

+ Statistics

+ 314 papers were updated today, including:

+ Computer Vision

+ 1. Title: Alice Benchmarks: Connecting Real World Object Re-Identification with the Synthetic

+ Index: [3]

+ Link: https://arxiv.org/abs/2310.04416

+ Authors: Xiaoxiao Sun, Yue Yao, Shengjin Wang, Hongdong Li, Liang Zheng

+ Comments: 9 pages, 4 figures, 4 tables

+ Keywords: cheaply acquire large-scale, Alice benchmarks, privacy concerns, synthetic data, cheaply acquire

+ For object re-identification (re-ID), learning from synthetic data has become a promising strategy to cheaply acquire large-scale annotated datasets and effective models, with few privacy concerns. Many interesting research problems arise from this strategy, e.g., how to reduce the domain gap between synthetic source and real-world target. To facilitate the development of new approaches for learning from synthetic data, we introduce the Alice benchmarks, large-scale datasets providing benchmarks as well as evaluation protocols to the research community. Within the Alice benchmarks, two object re-ID tasks are offered: person and vehicle re-ID. We collected and annotated two challenging real-world target datasets: AlicePerson and AliceVehicle, captured under various illuminations, image resolutions, etc. As an important feature of our real target, the clusterability of its training set is not manually guaranteed, which makes it closer to a real domain adaptation test scenario. Correspondingly, we reuse existing PersonX and VehicleX as synthetic source domains. The primary goal is to train models from synthetic data that can work effectively in the real world. In this paper, we detail the settings of Alice benchmarks, provide an analysis of existing commonly-used domain adaptation methods, and discuss some interesting future directions. An online server will be set up for the community to evaluate methods conveniently and fairly.

+ 2. Title: CIFAR-10-Warehouse: Broad and More Realistic Testbeds in Model Generalization Analysis

+ Index: [5]

+ Link: https://arxiv.org/abs/2310.04414

+ Authors: Xiaoxiao Sun, Xingjian Leng, Zijian Wang, Yang Yang, Zi Huang, Liang Zheng

+ Comments: 9 pages, 5 figures, 3 tables

+ Keywords: machine learning community, critical research problem, Analyzing model performance, learning community, critical research

+ Analyzing model performance in various unseen environments is a critical research problem in the machine learning community. To study this problem, it is important to construct a testbed with out-of-distribution test sets that have broad coverage of environmental discrepancies. However, existing testbeds typically either have a small number of domains or are synthesized by image corruptions, hindering algorithm design that demonstrates real-world effectiveness. In this paper, we introduce CIFAR-10-Warehouse, consisting of 180 datasets collected by prompting image search engines and diffusion models in various ways. Generally sized between 300 and 8,000 images, the datasets contain natural images, cartoons, certain colors, or objects that do not naturally appear. With CIFAR-10-W, we aim to enhance the evaluation and deepen the understanding of two generalization tasks: domain generalization and model accuracy prediction in various out-of-distribution environments. We conduct extensive benchmarking and comparison experiments and show that CIFAR-10-W offers new and interesting insights inherent to these tasks. We also discuss other fields that would benefit from CIFAR-10-W.

+ 3. Title: FedConv: Enhancing Convolutional Neural Networks for Handling Data Heterogeneity in Federated Learning

+ Index: [7]

+ Link: https://arxiv.org/abs/2310.04412

+ Authors: Peiran Xu, Zeyu Wang, Jieru Mei, Liangqiong Qu, Alan Yuille, Cihang Xie, Yuyin Zhou

+ Comments: 9 pages, 6 figures. Equal contribution by P. Xu and Z. Wang

+ Keywords: Convolutional Neural Networks, Federated learning, machine learning, outperforms Convolutional Neural, emerging paradigm

+ Federated learning (FL) is an emerging paradigm in machine learning, where a shared model is collaboratively learned using data from multiple devices to mitigate the risk of data leakage. While recent studies posit that Vision Transformer (ViT) outperforms Convolutional Neural Networks (CNNs) in addressing data heterogeneity in FL, the specific architectural components that underpin this advantage have yet to be elucidated. In this paper, we systematically investigate the impact of different architectural elements, such as activation functions and normalization layers, on the performance within heterogeneous FL. Through rigorous empirical analyses, we are able to offer the first-of-its-kind general guidance on micro-architecture design principles for heterogeneous FL.

+ Intriguingly, our findings indicate that, with strategic architectural modifications, pure CNNs can achieve a level of robustness that matches or even exceeds that of ViTs when handling heterogeneous data clients in FL. Additionally, our approach is compatible with existing FL techniques and delivers state-of-the-art solutions across a broad spectrum of FL benchmarks. The code is publicly available at this https URL.

+ 4. Title: Language Agent Tree Search Unifies Reasoning Acting and Planning in Language Models

+ Index: [11]

+ Link: https://arxiv.org/abs/2310.04406

+ Authors: Andy Zhou, Kai Yan, Michal Shlapentokh-Rothman, Haohan Wang, Yu-Xiong Wang

+ Comments: Website and code can be found at this https URL

+ Keywords: large language models, demonstrated impressive performance, simple acting processes, Language Agent Tree, Agent Tree Search

+ While large language models (LLMs) have demonstrated impressive performance on a range of decision-making tasks, they rely on simple acting processes and fall short of broad deployment as autonomous agents. We introduce LATS (Language Agent Tree Search), a general framework that synergizes the capabilities of LLMs in planning, acting, and reasoning. Drawing inspiration from Monte Carlo tree search in model-based reinforcement learning, LATS employs LLMs as agents, value functions, and optimizers, repurposing their latent strengths for enhanced decision-making. What is crucial in this method is the use of an environment for external feedback, which offers a more deliberate and adaptive problem-solving mechanism that moves beyond the limitations of existing techniques. Our experimental evaluation across diverse domains, such as programming, HotPotQA, and WebShop, illustrates the applicability of LATS for both reasoning and acting. In particular, LATS achieves 94.4% for programming on HumanEval with GPT-4 and an average score of 75.9 for web browsing on WebShop with GPT-3.5, demonstrating the effectiveness and generality of our method.
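
The selection step that LATS borrows from Monte Carlo tree search can be illustrated with the standard UCT rule. The abstract does not give LATS's exact scoring formula, so the Python sketch below rests on assumptions. The `Node` fields and the exploration constant `c` are illustrative. So is the idea that `value_sum` accumulates scores from an LLM value function plus environment feedback. None of this is the authors' code.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """A search-tree node (hypothetical fields, not the LATS codebase)."""
    visits: int = 0
    value_sum: float = 0.0  # accumulated value estimates / rewards
    children: list = field(default_factory=list)


def uct_score(child: Node, parent_visits: int, c: float = 1.0) -> float:
    """Standard UCT: average value (exploitation) plus an exploration bonus."""
    if child.visits == 0:
        return math.inf  # always expand unvisited children first
    exploit = child.value_sum / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def select_child(parent: Node, c: float = 1.0) -> Node:
    """Return the child with the highest UCT score."""
    return max(parent.children, key=lambda ch: uct_score(ch, parent.visits, c))


def backpropagate(path: list, reward: float) -> None:
    """Add a reward (e.g. from environment feedback) to every node on the path."""
    for node in path:
        node.visits += 1
        node.value_sum += reward
```

In a full agent loop, `select_child` would be applied repeatedly from the root down to a leaf. The leaf would then be expanded by sampling candidate actions from the LLM, and `backpropagate` would be called with the resulting reward.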

+ 5. Title: Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference

+ Index: [18]

+ Link: https://arxiv.org/abs/2310.04378

+ Authors: Simian Luo, Yiqin Tan, Longbo Huang, Jian Li, Hang Zhao

+ Comments: none

+ Keywords: achieved remarkable results, Latent Consistency Models, Consistency Models, synthesizing high-resolution images, Diffusion models

+ Latent Diffusion models (LDMs) have achieved remarkable results in synthesizing high-resolution images. However, the iterative sampling process is computationally intensive and leads to slow generation. Inspired by Consistency Models (Song et al.), we propose Latent Consistency Models (LCMs), enabling swift inference with minimal steps on any pre-trained LDMs, including Stable Diffusion (Rombach et al.). Viewing the guided reverse diffusion process as solving an augmented probability flow ODE (PF-ODE), LCMs are designed to directly predict the solution of such an ODE in latent space, mitigating the need for numerous iterations and allowing rapid, high-fidelity sampling. Efficiently distilled from pre-trained classifier-free guided diffusion models, a high-quality 768×768 LCM that samples in 2 to 4 steps takes only 32 A100 GPU hours to train. Furthermore, we introduce Latent Consistency Fine-tuning (LCF), a novel method that is tailored for fine-tuning LCMs on customized image datasets. Evaluation on the LAION-5B-Aesthetics dataset demonstrates that LCMs achieve state-of-the-art text-to-image generation performance with few-step inference. Project Page: this https URL
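
Consistency models replace many denoising iterations with a function f(x, t). This function maps any point on a probability-flow ODE trajectory straight back to the trajectory's origin, so sampling needs only a few evaluations. The sketch below is a toy, not LCM itself, and it makes several assumptions:

- The data is 1-D Gaussian, N(mu, s²).
- Noising is variance-exploding, x_t = x_0 + t·z. Under this setup the exact consistency function is known in closed form.
- It runs the multistep sampling loop of Song et al. directly on the data rather than in a latent space.
- It uses no classifier-free guidance.

```python
import math
import random


def consistency_fn(x: float, t: float, mu: float, s: float) -> float:
    """Exact consistency function for 1-D Gaussian data N(mu, s^2) under
    variance-exploding noise x_t = x_0 + t * z: follows the PF-ODE
    trajectory of x_t back to t = 0 in closed form."""
    return mu + (x - mu) * s / math.sqrt(s * s + t * t)


def multistep_sample(mu: float, s: float, timesteps: list, rng: random.Random) -> float:
    """Few-step consistency sampling: one jump from noise at timesteps[0],
    then alternately re-noise to a smaller t and jump back to t = 0."""
    t_max = timesteps[0]
    x = rng.gauss(0.0, t_max)              # approximate prior N(0, t_max^2)
    x = consistency_fn(x, t_max, mu, s)    # single-step sample
    for t in timesteps[1:]:
        x_t = x + t * rng.gauss(0.0, 1.0)  # re-noise to level t (t_min taken as 0)
        x = consistency_fn(x_t, t, mu, s)  # map back to the data
    return x
```

With `timesteps=[80.0, 10.0, 1.0]`, each sample costs three evaluations of `consistency_fn`. In a real LCM, a distilled network that predicts the ODE solution in latent space replaces the closed-form function.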

+ 6. Title: SwimXYZ: A large-scale dataset of synthetic swimming motions and videos

+ Index: [27]

+ Link: https://arxiv.org/abs/2310.04360

+ Authors: Fiche Guénolé, Sevestre Vincent, Gonzalez-Barral Camila, Leglaive Simon, Séguier Renaud

+ Comments: ACM MIG 2023

+ Keywords: increasingly important role, real competitive advantage, Technologies play, play an increasingly, increasingly important

+ Technologies play an increasingly important role in sports and have become a real competitive advantage for the athletes who benefit from them. Among them, the use of motion capture is developing in various sports to optimize sporting gestures. Unfortunately, traditional motion capture systems are expensive and constraining. Recently developed computer vision-based approaches also struggle in certain sports, like swimming, due to the aquatic environment. One of the reasons for the gap in performance is the lack of labeled datasets with swimming videos. In an attempt to address this issue, we introduce SwimXYZ, a synthetic dataset of swimming motions and videos. SwimXYZ contains 3.4 million frames annotated with ground truth 2D and 3D joints, as well as 240 sequences of swimming motions in the SMPL parameters format. In addition to making this dataset publicly available, we present use cases for SwimXYZ in swimming stroke clustering and 2D pose estimation.

+ 7. Title: Distributed Deep Joint Source-Channel Coding with Decoder-Only Side Information

+ Index: [45]

+ Link: https://arxiv.org/abs/2310.04311

+ Authors: Selim F. Yilmaz, Ezgi Ozyilkan, Deniz Gunduz, Elza Erkip

+ Comments: 7 pages, 4 figures

+ Keywords: low-latency image transmission, noisy wireless channel, correlated side information, Wyner-Ziv scenario, low-latency image

+ We consider low-latency image transmission over a noisy wireless channel when correlated side information is present only at the receiver side (the Wyner-Ziv scenario). In particular, we are interested in developing practical schemes using a data-driven joint source-channel coding (JSCC) approach, which has been previously shown to outperform conventional separation-based approaches in the practical finite blocklength regimes, and to provide graceful degradation with channel quality. We propose a novel neural network architecture that incorporates the decoder-only side information at multiple stages at the receiver side. Our results demonstrate that the proposed method succeeds in integrating the side information, yielding improved performance at all channel noise levels in terms of the various distortion criteria considered here, especially at low channel signal-to-noise ratios (SNRs) and small bandwidth ratios (BRs). We also provide the source code of the proposed method to enable further research and reproducibility of the results.

+ 8. Title: Towards A Robust Group-level Emotion Recognition via Uncertainty-Aware Learning

+ Index: [46]

+ Link: https://arxiv.org/abs/2310.04306

+ Authors: Qing Zhu, Qirong Mao, Jialin Zhang, Xiaohua Huang, Wenming Zheng

+ Comments: 11 pages, 3 figures

+ Keywords: human behavior analysis, Group-level emotion recognition, behavior analysis, aiming to recognize, inseparable part

+ Group-level emotion recognition (GER) is an inseparable part of human behavior analysis, aiming to recognize an overall emotion in a multi-person scene. However, existing methods focus on combining diverse emotion cues while ignoring the inherent uncertainties of unconstrained environments, such as congestion and occlusion within a group. Additionally, since only group-level labels are available, inconsistent emotion predictions among individuals in one group can confuse the network. In this paper, we propose an uncertainty-aware learning (UAL) method to extract more robust representations for GER. By explicitly modeling the uncertainty of each individual, we use stochastic embeddings drawn from a Gaussian distribution instead of deterministic point embeddings. This representation captures the probabilities of different emotions and generates diverse predictions through this stochasticity during the inference stage. Furthermore, uncertainty-sensitive scores are adaptively assigned as the fusion weights of individuals' faces within each group. Moreover, we develop an image enhancement module to enhance the model's robustness against severe noise. The overall three-branch model, encompassing face, object, and scene components, is guided by a proportional-weighted fusion strategy and integrates the proposed uncertainty-aware method to produce the final group-level output. Experimental results demonstrate the effectiveness and generalization ability of our method across three widely used databases.
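
This abstract combines two uncertainty ideas: sampling each face embedding from a Gaussian, and weighting faces by how certain they are. Both can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. Per-dimension means and log-variances are taken as given, and the fusion weights are a softmax over negative mean variance. That softmax is a hypothetical stand-in for the paper's "uncertainty-sensitive scores", not its actual formula.

```python
import math
import random


def sample_embedding(mean, log_var, rng):
    """Reparameterization trick: z = mean + sigma * eps, eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mean, log_var)]


def uncertainty_weights(log_vars):
    """Hypothetical fusion weights: softmax over the negative mean variance of
    each individual, so more certain faces receive larger weights."""
    scores = [-sum(math.exp(lv) for lv in lvs) / len(lvs) for lvs in log_vars]
    top = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(sc - top) for sc in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(embeddings, weights):
    """Weighted sum of per-individual embeddings into one group-level vector."""
    dim = len(embeddings[0])
    return [sum(w * e[d] for w, e in zip(weights, embeddings)) for d in range(dim)]
```

Sampling several embeddings per face at inference time gives the "diverse predictions" the abstract mentions. The weights then decide how much each face contributes to the group-level vector.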

+ 9. Title: Graph learning in robotics: a survey

+ Index: [50]

+ Link: https://arxiv.org/abs/2310.04294

+ Authors: Francesca Pistilli, Giuseppe Averta

+ Comments: none

+ Keywords: complex non-euclidean data, Deep neural networks, non-euclidean data, powerful tool, complex non-euclidean

+ Deep neural networks for graphs have emerged as a powerful tool for learning on complex non-euclidean data, which is becoming increasingly common in a variety of applications. Yet, although their potential has been widely recognised in the machine learning community, graph learning is largely unexplored for downstream tasks such as robotics applications. Hence, to fully unlock their potential, we present a review of graph neural architectures from a robotics perspective. The paper covers the fundamentals of graph-based models, including their architecture, training procedures, and applications. It also discusses recent advancements and challenges that arise in applied settings, related for example to the integration of perception, decision-making, and control. Finally, the paper provides an extensive review of various robotic applications that benefit from learning on graph structures, such as bodies and contacts modelling, robotic manipulation, action recognition, fleet motion planning, and many more. This survey aims to provide readers with a thorough understanding of the capabilities and limitations of graph neural architectures in robotics, and to highlight potential avenues for future research.

+ 10. Title: Assessing Robustness via Score-Based Adversarial Image Generation

+ Index: [56]

+ Link: https://arxiv.org/abs/2310.04285

+ Authors: Marcel Kollovieh, Lukas Gosch, Yan Scholten, Marten Lienen, Stephan Günnemann

+ Comments: none

+ Keywords: norm constraints, ell, adversarial, norm, constraints

+ Most adversarial attacks and defenses focus on perturbations within small $\ell_p$-norm constraints. However, $\ell_p$ threat models cannot capture all relevant semantic-preserving perturbations, and hence, the scope of robustness evaluations is limited. In this work, we introduce Score-Based Adversarial Generation (ScoreAG), a novel framework that leverages the advancements in score-based generative models to generate adversarial examples beyond $\ell_p$-norm constraints, so-called unrestricted adversarial examples, overcoming their limitations. Unlike traditional methods, ScoreAG maintains the core semantics of images while generating realistic adversarial examples, either by transforming existing images or synthesizing new ones entirely from scratch. We further exploit the generative capability of ScoreAG to purify images, empirically enhancing the robustness of classifiers. Our extensive empirical evaluation demonstrates that ScoreAG matches the performance of state-of-the-art attacks and defenses across multiple benchmarks. This work highlights the importance of investigating adversarial examples bounded by semantics rather than $\ell_p$-norm constraints. ScoreAG represents an important step towards more encompassing robustness assessments.

+ 11. Title: Compositional Servoing by Recombining Demonstrations

+ Index: [60]

+ Link: https://arxiv.org/abs/2310.04271

+ Authors: Max Argus, Abhijeet Nayak, Martin Büchner, Silvio Galesso, Abhinav Valada, Thomas Brox

+ Comments: this http URL

+ Keywords: Learning-based manipulation policies, task transfer capabilities, weak task transfer, Learning-based manipulation, manipulation policies

+ Learning-based manipulation policies from image inputs often show weak task transfer capabilities. In contrast, visual servoing methods allow efficient task transfer in high-precision scenarios while requiring only a few demonstrations. In this work, we present a framework that formulates the visual servoing task as graph traversal. Our method not only extends the robustness of visual servoing, but also enables multitask capability based on a few task-specific demonstrations. We construct demonstration graphs by splitting existing demonstrations and recombining them. In order to traverse the demonstration graph in the inference case, we utilize a similarity function that helps select the best demonstration for a specific task. This enables us to compute the shortest path through the graph. Ultimately, we show that recombining demonstrations leads to higher task-respective success. We present extensive simulation and real-world experimental results that demonstrate the efficacy of our approach.
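
Formulating servoing as graph traversal works as follows. Demonstration segments become nodes, and a similarity function scores the transitions between them. Picking a demonstration sequence then reduces to a shortest-path query. The sketch below runs Dijkstra's algorithm over such a graph. The node names and the edge cost `1 - similarity` are hypothetical choices for illustration, not the paper's similarity function.

```python
import heapq


def shortest_path(edges, start, goal):
    """Dijkstra over a demonstration graph.

    edges: dict mapping node -> list of (neighbor, similarity in [0, 1]).
    Edge cost is 1 - similarity (a hypothetical choice), so chaining more
    similar demonstration segments is cheaper. Returns the node list from
    start to goal, or None if goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue  # stale heap entry
        visited.add(node)
        if node == goal:
            break
        for nbr, sim in edges.get(node, []):
            nd = d + (1.0 - sim)
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))
    if goal not in dist:
        return None
    # walk the predecessor links back from goal to start
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]
```

For example, with `{"start": [("a", 0.9), ("b", 0.2)], "a": [("goal", 0.8)], "b": [("goal", 1.0)]}`, the route through `a` costs about 0.3 and the route through `b` costs 0.8, so the path `["start", "a", "goal"]` is chosen.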

+ 12. Title: Collaborative Camouflaged Object Detection: A Large-Scale Dataset and Benchmark

+ Index: [69]

+ Link: https://arxiv.org/abs/2310.04253

+ Authors: Cong Zhang, Hongbo Bi, Tian-Zhu Xiang, Ranwan Wu, Jinghui Tong, Xiufang Wang

+ Comments: Accepted by IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

+ Keywords: simultaneously detect camouflaged, task called collaborative, detect camouflaged objects, camouflaged object detection, called collaborative camouflaged

+ In this paper, we provide a comprehensive study on a new task called collaborative camouflaged object detection (CoCOD), which aims to simultaneously detect camouflaged objects with the same properties from a group of relevant images. To this end, we meticulously construct the first large-scale dataset, termed CoCOD8K, which consists of 8,528 high-quality and elaborately selected images with object mask annotations, covering 5 superclasses and 70 subclasses. The dataset spans a wide range of natural and artificial camouflage scenes with diverse object appearances and backgrounds, making it a very challenging dataset for CoCOD. Besides, we propose the first baseline model for CoCOD, named bilateral-branch network (BBNet), which explores and aggregates co-camouflaged cues within a single image and between images within a group, respectively, for accurate camouflaged object detection in given images. This is implemented by an inter-image collaborative feature exploration (CFE) module, an intra-image object feature search (OFS) module, and a local-global refinement (LGR) module. We benchmark 18 state-of-the-art models, including 12 COD algorithms and 6 CoSOD algorithms, on the proposed CoCOD8K dataset under 5 widely used evaluation metrics. Extensive experiments demonstrate the effectiveness of the proposed method and the significantly superior performance compared to other competitors. We hope that our proposed dataset and model will boost growth in the COD community. The dataset, model, and results will be available at: this https URL.

+ 13. Title: Semantic segmentation of longitudinal thermal images for identification of hot and cool spots in urban areas

+ Index: [70]

+ Link: https://arxiv.org/abs/2310.04247

+ Authors: Vasantha Ramani, Pandarasamy Arjunan, Kameshwar Poolla, Clayton Miller

+ Comments: 14 pages, 13 figures

+ Keywords: spatially rich thermal, rich thermal images, thermal images collected, thermal images, thermal image dataset

+ This work presents the analysis of semantically segmented, longitudinally and spatially rich thermal images collected at the neighborhood scale to identify hot and cool spots in urban areas. An infrared observatory was operated over a few months to collect thermal images of different types of buildings on the educational campus of the National University of Singapore. A subset of the thermal image dataset was used to train state-of-the-art deep learning models to segment various urban features such as buildings, vegetation, sky, and roads. It was observed that the U-Net segmentation model with a 'resnet34' CNN backbone has the highest mIoU score of 0.99 on the test dataset, compared to other models such as DeepLabV3, DeeplabV3+, FPN, and PSPnet. The masks generated by the segmentation models were then used to extract the temperature from thermal images and to correct for differences in the emissivity of various urban features. Further, various statistical measures of the temperature extracted using the predicted segmentation masks are shown to closely match those extracted using the ground-truth masks. Finally, the masks were used to identify hot and cool spots in the urban features at various instances of time. This is one of the very few studies demonstrating the automated analysis of thermal images, which can be of potential use to urban planners for devising mitigation strategies for reducing the urban heat island (UHI) effect, improving building energy efficiency, and maximizing outdoor thermal comfort.
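
The abstract reports segmentation quality as mIoU, then uses the predicted masks to extract per-class temperatures from the thermal images. Below is a minimal sketch of both steps on flattened pixel lists. It assumes integer class labels and temperatures already converted to degrees Celsius. The emissivity correction described in the paper is omitted, and the function names are illustrative.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union across classes present in pred or target."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both label maps
            ious.append(inter / union)
    if not ious:
        return float("nan")
    return sum(ious) / len(ious)


def class_mean_temperature(temps, mask, cls):
    """Average temperature over pixels whose mask label equals `cls`
    (None if the class does not appear in the mask)."""
    vals = [t for t, m in zip(temps, mask) if m == cls]
    return sum(vals) / len(vals) if vals else None
```

Comparing `class_mean_temperature` under predicted and ground-truth masks mirrors the paper's check that the two extractions closely match.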

+ 14. Title: Enhancing the Authenticity of Rendered Portraits with Identity-Consistent Transfer Learning

+ Index: [89]

+ Link: https://arxiv.org/abs/2310.04194

+ Authors: Luyuan Wang, Yiqian Wu, Yongliang Yang, Chen Liu, Xiaogang Jin

+ Comments: 10 pages, 8 figures, 2 tables

+ Keywords: creating high-quality photo-realistic, high-quality photo-realistic virtual, uncanny valley effect, computer graphics, creating high-quality

+ Despite rapid advances in computer graphics, creating high-quality photo-realistic virtual portraits is prohibitively expensive. Furthermore, the well-known "uncanny valley" effect in rendered portraits has a significant impact on the user experience, especially when the depiction closely resembles a human likeness, where any minor artifacts can evoke feelings of eeriness and repulsiveness. In this paper, we present a novel photo-realistic portrait generation framework that can effectively mitigate the "uncanny valley" effect and improve the overall authenticity of rendered portraits. Our key idea is to employ transfer learning to learn an identity-consistent mapping from the latent space of rendered portraits to that of real portraits. During the inference stage, the input portrait of an avatar can be directly transferred to a realistic portrait by changing its appearance style while maintaining the facial identity. To this end, we collect a new dataset, Daz-Rendered-Faces-HQ (DRFHQ), that is specifically designed for rendering-style portraits. We leverage this dataset to fine-tune the StyleGAN2 generator, using our carefully crafted framework, which helps to preserve the geometric and color features relevant to facial identity. We evaluate our framework using portraits with diverse gender, age, and race variations. Qualitative and quantitative evaluations and ablation studies show the advantages of our method compared to state-of-the-art approaches.

+ 15. Title: Bridging the Gap between Human Motion and Action Semantics via Kinematic Phrases

+ Index: [92]

+ Link: https://arxiv.org/abs/2310.04189

+ Authors: Xinpeng Liu, Yong-Lu Li, Ailing Zeng, Zizheng Zhou, Yang You, Cewu Lu

+ Comments: none

+ Keywords: action semantics, abstract action semantic, semantics, motion, action

+ The goal of motion understanding is to establish a reliable mapping between motion and action semantics, which is a challenging many-to-many problem. An abstract action semantic (e.g., walk forwards) could be conveyed by perceptually diverse motions (walking with arms up or swinging), while a motion could carry different semantics depending on its context and intention. This makes an elegant mapping between them difficult. Previous attempts adopted direct-mapping paradigms with limited reliability. Also, current automatic metrics fail to provide reliable assessments of the consistency between motions and action semantics. We identify the source of these problems as the significant gap between the two modalities. To alleviate this gap, we propose Kinematic Phrases (KP) that take the objective kinematic facts of human motion with proper abstraction, interpretability, and generality characteristics. Based on KP as a mediator, we can unify a motion knowledge base and build a motion understanding system. Meanwhile, KP can be automatically converted from motions and to text descriptions with no subjective bias, inspiring Kinematic Prompt Generation (KPG) as a novel automatic motion generation benchmark. In extensive experiments, our approach shows superiority over other methods. Our code and data will be made publicly available at this https URL.

+ 16. Title: DiffPrompter: Differentiable Implicit Visual Prompts for Semantic-Segmentation in Adverse Conditions

+ Index: [96]

+ Link: https://arxiv.org/abs/2310.04181

+ Authors: Sanket Kalwar, Mihir Ungarala, Shruti Jain, Aaron Monis, Krishna Reddy Konda, Sourav Garg, K Madhava Krishna

+ Comments: none

+ Keywords: autonomous driving systems, driving systems, autonomous driving, Semantic segmentation, adverse weather

+ Semantic segmentation in adverse weather scenarios is a critical task for autonomous driving systems. While foundation models have shown promise, the need for specialized adaptors becomes evident for handling more challenging scenarios. We introduce DiffPrompter, a novel differentiable visual and latent prompting mechanism aimed at expanding the learning capabilities of existing adaptors in foundation models. Our proposed $\nabla$HFC image processing block excels particularly in adverse weather conditions, where conventional methods often fall short. Furthermore, we investigate the advantages of jointly training visual and latent prompts, demonstrating that this combined approach significantly enhances performance in out-of-distribution scenarios. Our differentiable visual prompts leverage parallel and series architectures to generate prompts, effectively improving object segmentation tasks in adverse conditions. Through a comprehensive series of experiments and evaluations, we provide empirical evidence to support the efficacy of our approach. Project page at this https URL.

+ 17. Title: Degradation-Aware Self-Attention Based Transformer for Blind Image Super-Resolution

+ Index: [97]

+ Link: https://arxiv.org/abs/2310.04180

+ Authors: Qingguo Liu, Pan Gao, Kang Han, Ningzhong Liu, Wei Xiang

+ Comments: 12 pages

+ Keywords: restoration outcomes due, impressive image restoration, image restoration outcomes, model remote dependencies, Transformer-based methods

+ Compared to CNN-based methods, Transformer-based methods achieve impressive image restoration outcomes due to their ability to model long-range dependencies. However, how to apply Transformer-based methods to blind super-resolution (SR) and further make an SR network adaptive to degradation information is still an open problem. In this paper, we propose a new degradation-aware self-attention-based Transformer model, where we incorporate contrastive learning into the Transformer network for learning the degradation representations of input images with unknown noise. In particular, we integrate both CNN and Transformer components into the SR network, where we first use the CNN modulated by the degradation information to extract local features, and then employ the degradation-aware Transformer to extract global semantic features. We apply our proposed model to several popular large-scale benchmark datasets for testing, and achieve state-of-the-art performance compared to existing methods. In particular, our method yields a PSNR of 32.43 dB on the Urban100 dataset at $\times$2 scale, 0.94 dB higher than DASR, and 26.62 dB on the Urban100 dataset at $\times$4 scale, a 0.26 dB improvement over KDSR, setting a new benchmark in this area. Source code is available at: this https URL.
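
The headline numbers in this abstract are PSNR values. For reference, here is a minimal Python sketch of the standard definition, 10·log10(MAX²/MSE), on flattened pixel lists with MAX = 255 by default. Benchmark code often evaluates on the Y channel with border cropping, which this sketch does not do.

```python
import math


def psnr(pred, ref, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```

PSNR is logarithmic in MSE. So a 0.94 dB gain on a single image, at fixed MAX, means the MSE shrank by a factor of 10^(-0.094) ≈ 0.805, i.e. about 19%. Dataset-level PSNR is usually an average over images, so this is only a rough reading of the Urban100 result.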

+ 18. Title: Entropic Score metric: Decoupling Topology and Size in Training-free NAS

+ Index: [98]

+ Link: https://arxiv.org/abs/2310.04179

+ Authors: Niccolò Cavagnero, Luca Robbiano, Francesca Pistilli, Barbara Caputo, Giuseppe Averta

+ Comments: 10 pages, 3 figures

+ Keywords: resource-constrained scenarios typical, Neural Architecture Search, daunting task, Neural Networks design, resource-constrained scenarios

+ Designing neural networks is a complex and often daunting task, particularly in the resource-constrained scenarios typical of mobile-sized models. Neural Architecture Search is a promising approach to automate this process, but existing competitive methods require long training times and substantial computational resources to generate accurate models. To overcome these limitations, this paper contributes: i) a novel training-free metric, named Entropic Score, to estimate model expressivity through the aggregated element-wise entropy of its activations; and ii) a cyclic search algorithm to separately yet synergistically search model size and topology. Entropic Score shows a remarkable ability to search for the topology of the network, and its combination with LogSynflow, used to search for model size, can completely design high-performance Hybrid Transformers for edge applications in less than 1 GPU hour, resulting in the fastest and most accurate NAS method for ImageNet classification.

+ 19. Title: Improving Neural Radiance Field using Near-Surface Sampling with Point Cloud Generation

+ Index: [106]

+ Link: https://arxiv.org/abs/2310.04152

+ Authors: Hye Bin Yoo, Hyun Min Han, Sung Soo Hwang, Il Yong Chun

+ Comments: 13 figures, 2 tables

+ Keywords: Neural radiance field, emerging view synthesis, Neural radiance, radiance field, color probabilities

+ Neural radiance field (NeRF) is an emerging view synthesis method that samples points in a three-dimensional (3D) space and estimates their existence and color probabilities. A disadvantage of NeRF is its long training time, since it samples many 3D points. In addition, if points are sampled from occluded regions or from space where an object is unlikely to exist, the rendering quality of NeRF can degrade. These issues can be addressed by estimating the geometry of the 3D scene. This paper proposes a near-surface sampling framework to improve the rendering quality of NeRF. To this end, the proposed method estimates the surface of a 3D object using depth images of the training set and samples points only around that surface. To obtain depth information for a novel view, the paper proposes a 3D point cloud generation method and a simple refinement method for depth projected from a point cloud. Experimental results show that the proposed near-surface sampling NeRF framework can significantly improve rendering quality compared to the original NeRF and a state-of-the-art depth-based NeRF method. In addition, the proposed near-surface sampling framework can significantly reduce the training time of a NeRF model.
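
Near-surface sampling changes where points are drawn along each camera ray. NeRF's stratified sampling covers the whole [near, far] range; here the samples are confined to a band around an estimated surface depth. The sketch below assumes the depth for the ray is already known, for example from the projected point cloud the paper describes. The band half-width `band` and the function names are hypothetical.

```python
import random


def near_surface_samples(depth, n_samples, band, rng, near=0.0):
    """Stratified distances along a ray, restricted to [depth - band, depth + band].

    The band is split into n_samples equal-width bins and one jittered
    sample is drawn per bin, as in NeRF's stratified sampling, but only
    around the estimated surface instead of the whole ray.
    """
    lo = max(near, depth - band)
    hi = depth + band
    width = (hi - lo) / n_samples
    return [lo + (i + rng.random()) * width for i in range(n_samples)]


def ray_points(origin, direction, ts):
    """3-D points origin + t * direction for each sampled distance t."""
    return [tuple(o + t * d for o, d in zip(origin, direction)) for t in ts]
```

Concentrating all samples in the band is what lets the method skip empty or occluded space. That is the source of both the quality and the training-time gains claimed in the abstract.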

+ 20. Title: Self-Supervised Neuron Segmentation with Multi-Agent Reinforcement Learning

+ Index: [107]

+ Link: https://arxiv.org/abs/2310.04148

+ Authors: Yinda Chen, Wei Huang, Shenglong Zhou, Qi Chen, Zhiwei Xiong

+ Comments: IJCAI 23 main track paper

+ Keywords: scale electron microscopy, large scale electron, existing supervised neuron, supervised neuron segmentation, accurate annotations

+ The performance of existing supervised neuron segmentation methods is highly dependent on the number of accurate annotations, especially when applied to large-scale electron microscopy (EM) data. By extracting semantic information from unlabeled data, self-supervised methods can improve the performance of downstream tasks, among which masked image modeling (MIM) has been widely used due to its simplicity and effectiveness in recovering original information from masked images. However, due to the high degree of structural locality in EM images, as well as the existence of considerable noise, many voxels contain little discriminative information, making MIM pretraining inefficient on the neuron segmentation task. To overcome this challenge, we propose a decision-based MIM that utilizes reinforcement learning (RL) to automatically search for the optimal image masking ratio and masking strategy. Due to the vast exploration space, using single-agent RL for voxel prediction is impractical. Therefore, we treat each input patch as an agent with a shared behavior policy, allowing for multi-agent collaboration. Furthermore, this multi-agent model can capture dependencies between voxels, which is beneficial for the downstream segmentation task. Experiments conducted on representative EM datasets demonstrate that our approach has a significant advantage over alternative self-supervised methods on the task of neuron segmentation. Code is available at this https URL.

+ 21. Title: TiC: Exploring Vision Transformer in Convolution

+ No.: [110]

+ Link: https://arxiv.org/abs/2310.04134

+ Authors: Song Zhang, Qingzhong Wang, Jiang Bian, Haoyi Xiong

+ Comments:

+ Keywords: arbitrary resolution images, phenomenally surging, architecture and configuration, positional encoding, limiting their flexibility

+ While models derived from Vision Transformers (ViTs) have been surging phenomenally, pre-trained models cannot seamlessly adapt to images of arbitrary resolution without altering the architecture and configuration, for example by resampling the positional encoding, which limits their flexibility for various vision tasks. For instance, the Segment Anything Model (SAM) based on ViT-Huge requires all input images to be resized to 1024$\times$1024. To overcome this limitation, we propose Multi-Head Self-Attention Convolution (MSA-Conv), which incorporates self-attention within generalized convolutions, including standard, dilated, and depthwise ones. MSA-Conv enables transformers to handle images of varying sizes without retraining or rescaling, and it further reduces computational cost compared to the global attention in ViT, whose cost grows quickly as image size increases. We then present the Vision Transformer in Convolution (TiC) as a proof of concept for image classification with MSA-Conv, together with two capacity-enhancing strategies, the Multi-Directional Cyclic Shifted Mechanism and the Inter-Pooling Mechanism, which establish long-distance connections between tokens and enlarge the effective receptive field. Extensive experiments validate the overall effectiveness of TiC, and ablation studies confirm the separate performance gains from MSA-Conv and from each of the two capacity-enhancing strategies. Note that our proposal aims to study an alternative to the global attention used in ViT, and MSA-Conv meets this goal by making TiC comparable to the state of the art on ImageNet-1K. Code will be released at this https URL.
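To make "self-attention inside a convolution window" concrete, here is a minimal single-head sketch of local window attention, assuming query/key/value projections `wq`, `wk`, `wv` and a `kernel`/`dilation` neighborhood like a (dilated) convolution. It is a generic illustration, not the authors' MSA-Conv: it omits multiple heads, the cyclic-shift and inter-pooling mechanisms, and any efficiency tricks.

```python
import numpy as np


def local_window_attention(x, wq, wk, wv, kernel=3, dilation=1):
    """Single-head self-attention restricted to a kernel x kernel (dilated) window.

    x: (H, W, C) feature map of any spatial size. Out-of-bounds neighbors are
    skipped rather than zero-padded, so they never receive attention weight.
    """
    h, w, _ = x.shape
    q, k, v = x @ wq, x @ wk, x @ wv
    r = kernel // 2
    scale = 1.0 / np.sqrt(q.shape[-1])
    out = np.zeros((h, w, v.shape[-1]))
    for i in range(h):
        for j in range(w):
            nbrs = [(i + dy * dilation, j + dx * dilation)
                    for dy in range(-r, r + 1) for dx in range(-r, r + 1)
                    if 0 <= i + dy * dilation < h and 0 <= j + dx * dilation < w]
            keys = np.array([k[a, b] for a, b in nbrs])
            vals = np.array([v[a, b] for a, b in nbrs])
            logits = keys @ q[i, j] * scale
            attn = np.exp(logits - logits.max())   # numerically stable softmax
            attn /= attn.sum()
            out[i, j] = attn @ vals
    return out


rng = np.random.default_rng(0)
wq = rng.standard_normal((4, 4))
wk = rng.standard_normal((4, 4))
wv = rng.standard_normal((4, 3))
small = local_window_attention(rng.standard_normal((5, 7, 4)), wq, wk, wv)
large = local_window_attention(rng.standard_normal((9, 12, 4)), wq, wk, wv, dilation=2)
```

Because each output attends only over a fixed neighborhood, the same weights apply to inputs of any height and width, and the cost grows linearly with the number of pixels rather than quadratically as with global attention.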

+ 22. Title: VI-Diff: Unpaired Visible-Infrared Translation Diffusion Model for Single Modality Labeled Visible-Infrared Person Re-identification

+ No.: [116]

+ Link: https://arxiv.org/abs/2310.04122

+ Authors: Han Huang, Yan Huang, Liang Wang

+ Comments: 11 pages, 7 figures

+ Keywords: real-world scenarios poses, significant challenge due, cross-modality data annotation, Visible-Infrared person re-identification, real-world scenarios

+ Visible-Infrared person re-identification (VI-ReID) in real-world scenarios poses a significant challenge due to the high cost of cross-modality data annotation. Different sensing cameras, such as RGB cameras for good lighting conditions and IR cameras for poor ones, make it costly and error-prone to identify the same person across modalities. To overcome this, we explore the use of single-modality labeled data for the VI-ReID task, which is more cost-effective and practical. By labeling pedestrians in only one modality (e.g., visible images) and retrieving in another modality (e.g., infrared images), we aim to create a training set containing both originally labeled and modality-translated data using unpaired image-to-image translation techniques. In this paper, we propose VI-Diff, a diffusion model that effectively addresses the task of Visible-Infrared person image translation. Through comprehensive experiments, we demonstrate that VI-Diff outperforms existing diffusion and GAN models, making it a promising solution for VI-ReID with single-modality labeled data and a good starting point for future study. Code will be available.

+ 23. Title: Dense Random Texture Detection using Beta Distribution Statistics

+ No.: [120]

+ Link: https://arxiv.org/abs/2310.04111

+ Authors: Soeren Molander

+ Comments:

+ Keywords: detecting dense random, dense random texture, note describes, detecting dense, dense random

+ This note describes a method for detecting dense random texture using fully connected points sampled on image edges. Points are randomly sampled on an edge image, and the standard L2 distance is calculated between all connected points in a neighbourhood. For each point, a check is made whether the point intersects an image edge. If so, a value of one is added to the distance; otherwise, zero is added. From this, an edge excess index in the range [1.0, 2.0] is calculated for the fully connected edge graph, where 1.0 indicates no edges. The ratio can be interpreted as a sampled Bernoulli process with unknown probability. The Bayesian posterior estimate of this probability can be associated with its conjugate prior, a Beta($\alpha$, $\beta$) distribution, with hyperparameters $\alpha$ and $\beta$ related to the number of edge crossings. Low values of $\beta$ indicate a texture-rich area, and higher values a less rich one. The method has been applied to real-time SLAM-based moving object detection, where points are confined to tracked boxes (ROIs).
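The edge-crossing statistic can be sketched in a few lines. The note leaves the exact normalization open, so this sketch assumes one possible reading: the crossing check is applied to the straight segment joining each connected pair of points within a `radius`, the edge excess index is `(n + k) / n` for `n` connected pairs of which `k` cross an edge (so it lies in [1.0, 2.0] and equals 1.0 when nothing crosses), and a uniform Beta(1, 1) prior is updated with `k` crossings and `n - k` non-crossings. The function names, the binary `edges[y][x]` grid, and the prior are hypothetical.

```python
import math
from itertools import combinations


def segment_crosses_edge(edges, p, q):
    """True if any pixel strictly between points p and q (x, y) is an edge pixel."""
    (x0, y0), (x1, y1) = p, q
    n = max(abs(x1 - x0), abs(y1 - y0))
    for i in range(1, n):
        t = i / n
        x = round(x0 + t * (x1 - x0))
        y = round(y0 + t * (y1 - y0))
        if edges[y][x]:
            return True
    return False


def edge_texture_stats(edges, points, radius, prior_alpha=1.0, prior_beta=1.0):
    """Return (edge_excess_index, alpha, beta) under the assumed reading above."""
    crossings = [segment_crosses_edge(edges, p, q)
                 for p, q in combinations(points, 2)   # fully connected within radius
                 if math.dist(p, q) <= radius]
    n = len(crossings)
    if n == 0:
        raise ValueError("no connected point pairs within radius")
    k = sum(crossings)                                  # Bernoulli successes
    excess_index = (n + k) / n                          # in [1.0, 2.0]
    return excess_index, prior_alpha + k, prior_beta + (n - k)


edges = [[x == 2 for x in range(5)] for _ in range(5)]  # one vertical edge at column 2
index, alpha, beta = edge_texture_stats(edges, [(0, 0), (4, 0), (0, 4)], radius=10.0)
```

Under this reading, the posterior mean `alpha / (alpha + beta)` estimates the crossing probability, and a small `beta` (few non-crossing pairs) flags a texture-rich region.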

+ 24. Title: Automated 3D Segmentation of Kidneys and Tumors in MICCAI KiTS 2023 Challenge

+ No.: [121]

+ Link: https://arxiv.org/abs/2310.04110

+ Authors: Andriy Myronenko, Dong Yang, Yufan He, Daguang Xu

+ Comments: MICCAI 2023, KITS 2023 challenge 1st place

+ Keywords: Kidney Tumor Segmentation, Kidney Tumor, Tumor Segmentation Challenge, Tumor Segmentation, offers a platform

+ The Kidney and Kidney Tumor Segmentation Challenge (KiTS) 2023 offers a platform for researchers to compare their solutions for segmentation from 3D CT. In this work, we describe our submission to the challenge, which uses the automated segmentation of Auto3DSeg available in MONAI. Our solution achieves an average Dice of 0.835 and a surface Dice of 0.723, ranking first and winning the KiTS 2023 challenge.

+ 25. Title: ClusVPR: Efficient Visual Place Recognition with Clustering-based Weighted Transformer

+ No.: [125]

+ Link: https://arxiv.org/abs/2310.04099

+ Authors: Yifan Xu, Pourya Shamsolmoali, Jie Yang

+ Comments:

+ Keywords: including robot navigation, highly challenging task, range of applications, including robot, self-driving vehicles

+ Visual place recognition (VPR) is a highly challenging task with a wide range of applications, including robot navigation and self-driving vehicles. VPR is particularly difficult due to the presence of duplicate regions and the lack of attention to small objects in complex scenes, which result in recognition deviations. In this paper, we present ClusVPR, a novel approach that tackles the specific issues of redundant information in duplicate regions and of representing small objects. Unlike existing methods that rely on Convolutional Neural Networks (CNNs) for feature map generation, ClusVPR introduces a unique paradigm called the Clustering-based Weighted Transformer Network (CWTNet). CWTNet leverages clustering-based weighted feature maps and integrates global dependencies to effectively address visual deviations encountered in large-scale VPR problems. We also introduce the optimized-VLAD (OptLAD) layer, which significantly reduces the number of parameters and enhances model efficiency. This layer is specifically designed to aggregate the information obtained from scale-wise image patches. Additionally, our pyramid self-supervised strategy extracts representative and diverse information from scale-wise image patches instead of entire images, which is crucial in VPR. Extensive experiments on four VPR datasets show that our model outperforms existing models while being less complex.
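OptLAD is described as an optimized VLAD layer, so for orientation here is a sketch of classic hard-assignment VLAD aggregation, the technique it builds on: each local descriptor is assigned to its nearest centroid, residuals are summed per centroid, and the result is intra-normalized and then L2-normalized. This is plain VLAD, not OptLAD; the paper's parameter reductions are not shown, and in practice the centroids would come from k-means or be learned.

```python
import numpy as np


def vlad(descriptors, centroids, eps=1e-12):
    """Classic hard-assignment VLAD descriptor of shape (K * D,)."""
    # Nearest centroid for each local descriptor (squared Euclidean distance).
    d2 = ((descriptors[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    assign = d2.argmin(axis=1)
    k, dim = centroids.shape
    v = np.zeros((k, dim))
    for c in range(k):
        members = descriptors[assign == c]
        if len(members):
            v[c] = (members - centroids[c]).sum(axis=0)   # accumulated residuals
    v /= np.linalg.norm(v, axis=1, keepdims=True) + eps   # intra-normalization per cluster
    flat = v.ravel()
    return flat / (np.linalg.norm(flat) + eps)            # global L2 normalization


descriptors = np.array([[2.0, 0.0], [0.0, 3.0]])
centroids = np.array([[1.0, 0.0], [0.0, 1.0]])
code = vlad(descriptors, centroids)
```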

+ 26. Title: End-to-End Chess Recognition

+ No.: [129]

+ Link: https://arxiv.org/abs/2310.04086

+ Authors: Athanasios Masouris, Jan van Gemert

+ Comments: 9 pages

+ Keywords: Chess recognition refers, chess pieces configuration, Chess recognition, Chess, Chess Recognition Dataset

+ Chess recognition refers to the task of identifying the configuration of chess pieces from a chessboard image. Contrary to the predominant approach, which solves this task through a pipeline of chessboard detection, square localization, and piece classification, we rely on the power of deep learning models and introduce two novel methodologies that circumvent this pipeline and directly predict the chessboard configuration from the entire image. In doing so, we avoid the error accumulation inherent in sequential approaches and the need for intermediate annotations. Furthermore, we introduce a new dataset, the Chess Recognition Dataset (ChessReD), specifically designed for chess recognition, which consists of 10,800 images and their corresponding annotations. In contrast to existing synthetic datasets with limited angles, this dataset comprises a diverse collection of real images of chess formations captured from various angles using smartphone cameras, a sensor choice made to ensure real-world applicability. We use this dataset both to train our model and to evaluate and compare its performance with that of the current state of the art. On this new benchmark dataset, our approach outperforms related approaches, achieving a board recognition accuracy of 15.26% ($\approx$7x better than the current state of the art).

+ 27. Title: A Deeply Supervised Semantic Segmentation Method Based on GAN

+ No.: [131]

+ Link: https://arxiv.org/abs/2310.04081

+ Authors: Wei Zhao, Qiyu Wei, Zeng Zeng

+ Comments: 6 pages, 2 figures, ITSC conference

+ Keywords: witnessed rapid advancements, Semantic segmentation, recent years, rapid advancements, semantic segmentation model

+ In recent years, the field of intelligent transportation has witnessed rapid advancements, driven by the increasing demand for automation and efficiency in transportation systems. Traffic safety, one of the tasks integral to intelligent transport systems, requires accurately identifying and locating various road elements, such as road cracks, lanes, and traffic signs. Semantic segmentation plays a pivotal role in this task, as it partitions images into meaningful regions with accurate boundaries. In this study, we propose an improved semantic segmentation model that combines the strengths of adversarial learning with state-of-the-art semantic segmentation techniques. The proposed model integrates a generative adversarial network (GAN) framework into the traditional semantic segmentation model, enhancing its ability to capture complex and subtle features in transportation images. The effectiveness of our approach is demonstrated by a significant performance boost on the road crack dataset compared to the existing method, i.e., SEGAN. This improvement can be attributed to the synergy between adversarial learning and semantic segmentation, which leads to a more refined and accurate representation of road structures and conditions. The enhanced model contributes not only to better detection of road cracks but also to a wide range of intelligent transportation applications, such as traffic sign recognition, vehicle detection, and lane segmentation.

+ 28. Title: In the Blink of an Eye: Event-based Emotion Recognition

+ No.: [145]

+ Link: https://arxiv.org/abs/2310.04043

+ Authors: Haiwei Zhang, Jiqing Zhang, Bo Dong, Pieter Peers, Wenwei Wu, Xiaopeng Wei, Felix Heide, Xin Yang

+ Comments:

+ Keywords: introduce a wearable, partial observations, wearable single-eye emotion, Spiking Eye Emotion, Eye Emotion Network

+ We introduce a wearable single-eye emotion recognition device and a real-time approach, robust to changes in lighting conditions, for recognizing emotions from partial observations of an emotion. At the heart of our method is a bio-inspired event-based camera setup and a newly designed lightweight Spiking Eye Emotion Network (SEEN). Compared to conventional cameras, event-based cameras offer a higher dynamic range (up to 140 dB vs. 80 dB) and a higher temporal resolution. Thus, the captured events can encode rich temporal cues under challenging lighting conditions. However, these events lack texture information, which makes it difficult to decode temporal information effectively. SEEN tackles this issue from two perspectives. First, we adopt convolutional spiking layers to take advantage of the spiking neural network's ability to decode pertinent temporal information. Second, SEEN learns to extract essential spatial cues from corresponding intensity frames and leverages a novel weight-copy scheme to convey spatial attention to the convolutional spiking layers during training and inference. We extensively validate and demonstrate the effectiveness of our approach on a specially collected Single-eye Event-based Emotion (SEE) dataset. To the best of our knowledge, our method is the first eye-based emotion recognition method that leverages event-based cameras and spiking neural networks.
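Spiking layers are typically built from leaky integrate-and-fire (LIF) neurons, which is what lets them accumulate temporal evidence from sparse events. The sketch below shows a generic discrete-time LIF neuron with a hard reset; the decay, threshold, and reset values are assumptions, and the abstract does not state which spiking neuron model SEEN uses.

```python
def lif_neuron(inputs, decay=0.5, threshold=1.0, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    At each step the membrane potential leaks (multiplied by `decay`), integrates
    the input, and emits a spike (1) when it reaches `threshold`, then resets.
    """
    v = reset
    spikes = []
    for x in inputs:
        v = decay * v + x        # leak, then integrate the input current
        if v >= threshold:
            spikes.append(1)
            v = reset            # hard reset after firing
        else:
            spikes.append(0)
    return spikes


print(lif_neuron([0.6, 0.6, 0.6]))  # [0, 0, 1]: sub-threshold inputs accumulate over time
```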

+ 29. Title: Excision and Recovery: Enhancing Surface Anomaly Detection with Attention-based Single Deterministic Masking

+ No.: [159]

+ Link: https://arxiv.org/abs/2310.04010

+ Authors: YeongHyeon Park, Sungho Kang, Myung Jin Kim, Yeonho Lee, Juneho Yi

+ Comments: 5 pages, 3 figures, 4 tables

+ Keywords: quantity imbalance problem, scarce abnormal data, Anomaly detection, essential yet challenging, challenging task

+ Anomaly detection (AD) in surface inspection is an essential yet challenging task in manufacturing due to the quantity imbalance caused by scarce abnormal data. To overcome this, a reconstruction encoder-decoder (ED), such as an autoencoder or U-Net trained only on anomaly-free samples, is widely adopted, in the hope that unseen abnormal samples will yield a larger reconstruction error than normal ones. In recent years, research on self-supervised reconstruction-by-inpainting has been reported. These methods mask out suspected defective regions for inpainting, making them invisible to the reconstruction ED so that abnormal regions are deliberately reconstructed inaccurately. However, their limitation is that they require multiple random masks to cover the whole input image, because defective regions are not known in advance. We propose a novel reconstruction-by-inpainting method dubbed Excision and Recovery (EAR) that features single deterministic masking. For this, we exploit a pre-trained spatial attention model to predict potentially defective regions that should be masked out. We also employ a variant of U-Net as our ED, in which skip connections of different layers can be selectively disabled, to further limit the U-Net's ability to reconstruct abnormal regions. In the training phase, all skip connections are switched on to take full advantage of the U-Net architecture. In contrast, at inference we keep only the deeper skip connections and switch the shallower ones off. We validate the effectiveness of EAR on a commonly used surface AD dataset, KolektorSDD2, using an attention model pre-trained on MNIST. The experimental results show that EAR achieves both better AD performance and higher throughput than state-of-the-art methods. We expect that the proposed EAR model can be widely adopted as a training and inference strategy for AD.
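The contrast between multiple random masks and a single deterministic mask can be sketched as follows, assuming the attention map is already computed: the top `mask_ratio` fraction of attention scores is masked once, the masked image is passed to a reconstruction model, and the reconstruction error serves as the anomaly score. The function names and `mask_ratio` are hypothetical, `reconstruct` stands in for the U-Net variant (not implemented here), and using mean squared error as the score is an assumption.

```python
import numpy as np


def deterministic_mask(attention, mask_ratio):
    """Single deterministic mask: True for the top `mask_ratio` fraction of attention scores."""
    k = int(round(mask_ratio * attention.size))
    mask = np.zeros(attention.shape, dtype=bool)
    if k > 0:
        top = np.argpartition(attention.ravel(), -k)[-k:]   # indices of the k largest scores
        mask.flat[top] = True
    return mask


def anomaly_score(image, attention, reconstruct, mask_ratio=0.1):
    """Mean squared error between the input and its reconstruction after excision."""
    mask = deterministic_mask(attention, mask_ratio)
    excised = np.where(mask, 0.0, image)   # "excision": hide suspected defect regions
    recovered = reconstruct(excised)       # "recovery": hypothetical inpainting encoder-decoder
    return float(np.mean((image - recovered) ** 2))


attn = np.array([[0.1, 0.9], [0.8, 0.2]])
# Identity "reconstruction" as a placeholder: masked pixels are simply not recovered.
score = anomaly_score(np.ones((2, 2)), attn, reconstruct=lambda x: x, mask_ratio=0.5)
```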

+ 30. Title: Robust Multimodal Learning with Missing Modalities via Parameter-Efficient Adaptation

+ No.: [168]

+ Link: https://arxiv.org/abs/2310.03986

+ Authors: Md Kaykobad Reza, Ashley Prater-Bennette, M. Salman Asif

+ Comments: 18 pages, 3 figures, 11 tables

+ Keywords: seeks to utilize, sources to improve, missing modalities, Multimodal, modalities

+ Multimodal learning seeks to utilize data from multiple sources to improve the overall performance of downstream tasks. Ideally, redundancies in the data should make multimodal systems robust to missing or corrupted observations in some correlated modalities. However, we observe that the performance of several existing multimodal networks deteriorates significantly if one or more modalities are absent at test time. To enable robustness to missing modalities, we propose simple and parameter-efficient adaptation procedures for pretrained multimodal networks. In particular, we exploit low-rank adaptation and modulation of intermediate features to compensate for the missing modalities. We demonstrate that such adaptation can partially bridge the performance drop due to missing modalities and, in some cases, outperform independent, dedicated networks trained for the available modality combinations. The proposed adaptation requires an extremely small number of parameters (e.g., fewer than 0.7% of the total parameters in most experiments). We conduct a series of experiments to highlight the robustness of our proposed method using diverse datasets for RGB-thermal and RGB-depth semantic segmentation, multimodal material segmentation, and multimodal sentiment analysis tasks. Our proposed method demonstrates versatility across various tasks and datasets and outperforms existing methods for robust multimodal learning with missing modalities.
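A minimal sketch of the two adaptation ingredients named above, assuming a single linear layer: a frozen pretrained weight plus a trainable low-rank update (LoRA-style `B @ A`, with `B` zero-initialized so the adapted layer starts out identical to the pretrained one), followed by a per-channel scale-and-shift modulation of the output features. The class name, initialization scale, and the exact form of the modulation are assumptions; where the paper places adapters in the network is not reproduced.

```python
import numpy as np


class LowRankAdapter:
    """Frozen linear layer + trainable low-rank update + per-channel feature modulation."""

    def __init__(self, weight, rank, alpha=1.0, rng=None):
        rng = np.random.default_rng() if rng is None else rng
        d_out, d_in = weight.shape
        self.weight = weight                             # frozen pretrained weight
        self.a = rng.normal(0.0, 0.01, (rank, d_in))     # trainable down-projection
        self.b = np.zeros((d_out, rank))                 # trainable up-projection, zero-init
        self.scale = alpha / rank
        self.gamma = np.ones(d_out)                      # trainable modulation: scale
        self.beta = np.zeros(d_out)                      # trainable modulation: shift

    def __call__(self, x):
        h = x @ self.weight.T + self.scale * (x @ self.a.T) @ self.b.T
        return self.gamma * h + self.beta

    def num_trainable(self):
        return self.a.size + self.b.size + self.gamma.size + self.beta.size


rng = np.random.default_rng(0)
w = rng.standard_normal((64, 64))
adapter = LowRankAdapter(w, rank=2, rng=rng)
x = rng.standard_normal((3, 64))
```

For this single 64x64 layer the trainable share is 384 of 4,096 weights (about 9.4%); the share reported in the abstract is relative to an entire network, where frozen weights vastly outnumber the adapter parameters.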

+ 31. Title: CUPre: Cross-domain Unsupervised Pre-training for Few-Shot Cell Segmentation

+ No.: [172]

+ Link: https://arxiv.org/abs/2310.03981

+ Authors: Weibin Liao, Xuhong Li, Qingzhong Wang, Yanwu Xu, Zhaozheng Yin, Haoyi Xiong

+ Comments:

+ Keywords: massive fine-annotated cell, cell, cell segmentation, pre-training DNN models, bounding boxes

+ While pre-training on object detection tasks, such as Common Objects in Context (COCO) [1], can significantly boost the performance of cell segmentation, fine-tuning the pre-trained model still relies on massive numbers of finely annotated cell images [2], with bounding boxes, masks, and cell types for every cell in every image. To lower the cost of annotation, this work considers the problem of pre-training DNN models for few-shot cell segmentation, where massive numbers of unlabeled cell images are available but only a small proportion is annotated. To this end, we propose Cross-domain Unsupervised Pre-training (CUPre), which transfers the capability of object detection and instance segmentation for common visual objects (learned from COCO) to the visual domain of cells using unlabeled images. Given a standard COCO pre-trained network with backbone, neck, and head modules, CUPre adopts an alternate multi-task pre-training (AMT2) procedure with two sub-tasks: in every iteration of pre-training, AMT2 first trains the backbone with cell images from multiple cell datasets via unsupervised momentum contrastive learning (MoCo) [3], and then trains the whole model with the vanilla COCO dataset via instance segmentation. After pre-training, CUPre fine-tunes the whole model on the cell segmentation task using a few annotated images. We carry out extensive experiments to evaluate CUPre using the LIVECell [2] and BBBC038 [4] datasets in few-shot instance segmentation settings. The experiments show that CUPre can outperform existing pre-training methods, achieving the highest average precision (AP) for few-shot cell segmentation and detection.
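The AMT2 alternation and the momentum encoder at the core of MoCo can be sketched as follows. The schedule generator only records the order of the two sub-tasks and which modules each one trains; its labels are hypothetical, and the actual losses (MoCo contrastive loss, COCO instance segmentation loss) are not implemented. The momentum update is the standard MoCo rule for the key encoder, $\theta_k \leftarrow m\,\theta_k + (1 - m)\,\theta_q$.

```python
def momentum_update(key_params, query_params, m=0.999):
    """MoCo key-encoder update: an exponential moving average of the query encoder."""
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


def amt2_schedule(num_iters):
    """Yield (iteration, sub_task, modules_trained) in AMT2 order (labels are hypothetical)."""
    for it in range(num_iters):
        # 1) unsupervised MoCo on unlabeled cell images updates the backbone only
        yield it, "moco_on_cell_images", ("backbone",)
        # 2) supervised instance segmentation on COCO updates the whole model
        yield it, "instance_seg_on_coco", ("backbone", "neck", "head")


steps = list(amt2_schedule(2))
```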

+ 32. Title: Sub-token ViT Embedding via Stochastic Resonance Transformers

+ No.: [180]

+ Link: https://arxiv.org/abs/2310.03967

+ Authors: Dong Lao, Yangchao Wu, Tian Yu Liu, Alex Wong, Stefano Soatto

+ Comments:

+ Keywords: Vision Transformers, Stochastic Resonance Transformer, tokenization step inherent, discover the presence, arise due

+ We discover the presence of quantization artifacts in Vision Transformers (ViTs), which arise from the image tokenization step inherent in these architectures. These artifacts result in coarsely quantized features, which negatively impact performance, especially on downstream dense prediction tasks. We present a zero-shot method to improve how pre-trained ViTs handle spatial quantization. In particular, we propose to ensemble the features obtained from perturbing input images via sub-token spatial translations, inspired by Stochastic Resonance, a method traditionally applied to climate dynamics and signal processing. We term our method "Stochastic Resonance Transformer" (SRT), which we show can effectively super-resolve features of pre-trained ViTs, capturing more of the local fine-grained structures that might otherwise be neglected as a result of tokenization. SRT can be applied at any layer, on any task, and does not require any fine-tuning. The advantage of applying it at any layer is evident in monocular depth prediction, where we show that ensembling model outputs is detrimental, while applying SRT to intermediate ViT features outperforms the baseline models by an average of 4.7% and 14.9% on the RMSE and RMSE-log metrics across three different architectures. When applied to semi-supervised video object segmentation, SRT also improves over the baseline models uniformly across all metrics, by an average of 2.4% in F&J score. We further show that these quantization artifacts can be attenuated to some extent via self-distillation. On unsupervised salient region segmentation, SRT improves upon the base model by an average of 2.1% on the maxF metric. Finally, despite operating purely on pixel-level features, SRT generalizes to non-dense prediction tasks such as image retrieval and object discovery, yielding consistent improvements of up to 2.6% and 1.0%, respectively.
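The ensembling idea can be shown with a toy example: a stand-in "tokenizer" averages non-overlapping p x p patches (so features are quantized to patch resolution), the input is translated by every sub-token offset, features are computed, shifted back, and averaged. The patch-mean feature extractor and the circular shifts from `np.roll` are assumptions standing in for a real pre-trained ViT and its padding; this illustrates the principle, not the authors' code.

```python
import numpy as np


def patch_features(img, p):
    """Toy stand-in for a ViT tokenizer: mean of each p x p patch, upsampled back (nearest)."""
    h, w = img.shape
    tokens = img.reshape(h // p, p, w // p, p).mean(axis=(1, 3))
    return np.kron(tokens, np.ones((p, p)))


def srt_features(img, p, shifts):
    """Average features over sub-token translations, undoing each shift afterwards."""
    acc = np.zeros(img.shape, dtype=float)
    for dy, dx in shifts:
        shifted = np.roll(img, (dy, dx), axis=(0, 1))        # translate the input
        feat = patch_features(shifted, p)                    # coarse, patch-quantized features
        acc += np.roll(feat, (-dy, -dx), axis=(0, 1))        # align back to the original frame
    return acc / len(shifts)


img = np.zeros((4, 4))
img[1, 1] = 1.0
p = 2
shifts = [(dy, dx) for dy in range(p) for dx in range(p)]   # all sub-token offsets
coarse = patch_features(img, p)
fine = srt_features(img, p, shifts)
```

Here `coarse` spreads the bright pixel uniformly over its 2x2 patch, whereas `fine` peaks at the pixel's true location, (1, 1), with smaller values around it.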

+ 33. Title: Towards Increasing the Robustness of Predictive Steering-Control Autonomous Navigation Systems Against Dash Cam Image Angle Perturbations Due to Pothole Encounters

+ No.: [184]

+ Link: https://arxiv.org/abs/2310.03959

+ Authors: Shivam Aarya (Johns Hopkins University)

+ Comments: 7 pages, 6 figures

+ Keywords: Vehicle manufacturers, manufacturers are racing, racing to create, create autonomous navigation, steering

+ Vehicle manufacturers are racing to create autonomous navigation and steering control algorithms for their vehicles. This software is designed to handle various real-life scenarios, such as obstacle avoidance and lane maneuvering. There is some ongoing research on incorporating pothole avoidance into these autonomous systems. However, there is very little research on the effect of hitting a pothole on autonomous navigation software that uses cameras to make driving decisions. Perturbations in the camera angle when hitting a pothole can cause errors in the predicted steering angle. In this paper, we present a new model that compensates for such angle perturbations and reduces errors in steering control prediction algorithms. We evaluate our model on perturbed versions of publicly available datasets and show that it can reduce the error in the steering angle estimated from perturbed images to 2.3%, making autonomous steering control robust against the dash cam image angle perturbations induced when one wheel of a car goes over a pothole.

+ 34. Title: Understanding prompt engineering may not require rethinking generalization

+ No.: [186]

+ Link: https://arxiv.org/abs/2310.03957

+ Authors: Victor Akinwande, Yiding Jiang, Dylan Sam, J. Zico Kolter

+ Comments:

+ Keywords: explicit training process, achieved impressive performance, prompted vision-language models, learning in prompted, prompted vision-language

+ Zero-shot learning in prompted vision-language models, the practice of crafting prompts to build classifiers without an explicit training process, has achieved impressive performance in many settings. This success presents a seemingly surprising observation: these methods suffer relatively little from overfitting; i.e., when a prompt is manually engineered to achieve low error on a given training set (thus rendering the method no longer actually zero-shot), the approach still performs well on held-out test data. In this paper, we show that such performance can be explained well by classical PAC-Bayes bounds. Specifically, we show that the discrete nature of prompts, combined with a PAC-Bayes prior given by a language model, results in generalization bounds that are remarkably tight by the standards of the literature: for instance, the generalization bound of an ImageNet classifier is often within a few percentage points of the true test error. We demonstrate empirically that this holds for existing handcrafted prompts and for prompts generated through simple greedy search. Furthermore, the resulting bound is well suited for model selection: the models with the best bound typically also have the best test performance. This work thus provides a possible justification for the widespread practice of prompt engineering, even though such methods might appear prone to overfitting the training data.
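A simplified version of this reasoning can be computed directly. For a countable set of prompts with prior probabilities $P(h)$ summing to at most 1 (as sequence probabilities from a language model with an end-of-sequence token do), Hoeffding's inequality plus a weighted union bound gives, with probability at least $1 - \delta$ and simultaneously for every prompt $h$, $\mathrm{err}(h) \le \widehat{\mathrm{err}}(h) + \sqrt{\frac{\ln(1/P(h)) + \ln(1/\delta)}{2n}}$. This is the point-mass (Occam) special case of PAC-Bayes, not necessarily the exact bound used in the paper; the per-token log-probabilities below are hypothetical inputs.

```python
import math


def prompt_log_prior(token_logprobs):
    """Log-probability (nats) of a discrete prompt under a language-model prior:
    the sum of its per-token log-probabilities (hypothetical inputs)."""
    return sum(token_logprobs)


def occam_bound(train_error, log_prior, n, delta=0.05):
    """Upper bound on test error for a prompt with log prior `log_prior`, n i.i.d. samples."""
    if not 0.0 <= train_error <= 1.0:
        raise ValueError("train_error must be in [0, 1]")
    if log_prior > 0.0:
        raise ValueError("log-probability must be <= 0")
    if n <= 0:
        raise ValueError("n must be positive")
    complexity = -log_prior + math.log(1.0 / delta)   # ln(1/P(h)) + ln(1/delta)
    return min(1.0, train_error + math.sqrt(complexity / (2.0 * n)))


short_prompt = occam_bound(0.10, prompt_log_prior([-5.0] * 4), n=50_000)
long_prompt = occam_bound(0.10, prompt_log_prior([-5.0] * 8), n=50_000)
```

Shorter or more probable prompts (under the language-model prior) pay a smaller complexity term, which is why a discrete, LM-scored prompt space can yield tight bounds.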

+ 35. Title: Gradient Descent Provably Solves Nonlinear Tomographic Reconstruction

+ No.: [187]

+ Link: https://arxiv.org/abs/2310.03956

+ Authors: Sara Fridovich-Keil, Fabrizio Valdivia, Gordon Wetzstein, Benjamin Recht, Mahdi Soltanolkotabi

+ Comments:

+ Keywords: exponential nonlinearity based, linear Radon transform, Beer-Lambert Law, Radon transform, computed tomography

+ In computed tomography (CT), the forward model consists of a linear Radon transform followed by an exponential nonlinearity based on the attenuation of light according to the Beer-Lambert Law. Conventional reconstruction often involves inverting this nonlinearity as a preprocessing step and then solving a convex inverse problem. However, the nonlinear measurement preprocessing required to use the Radon transform is poorly conditioned in the vicinity of high-density materials, such as metal. This preprocessing makes CT reconstruction methods numerically sensitive and susceptible to artifacts near high-density regions. In this paper, we study a technique in which the signal is reconstructed directly from raw measurements through the nonlinear forward model. Although this optimization is nonconvex, we show that gradient descent provably converges to the global optimum at a geometric rate, perfectly reconstructing the underlying signal with a near-minimal number of random measurements. We also prove similar results in the underdetermined setting, where the number of measurements is significantly smaller than the dimension of the signal. This is achieved by enforcing prior structural information about the signal through constraints on the optimization variables. We illustrate the benefits of direct nonlinear CT reconstruction with cone-beam CT experiments on synthetic and real 3D volumes, and show that this approach reduces metal artifacts compared to a commercial reconstruction of a human skull with metal dental crowns.
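A toy illustration of direct reconstruction through the nonlinear forward model: plain gradient descent on $f(x) = \tfrac12 \lVert e^{-Ax} - y \rVert_2^2$, whose gradient is $\nabla f(x) = -A^\top\big[(e^{-Ax} - y) \odot e^{-Ax}\big]$. A random Gaussian matrix stands in for the Radon transform (the abstract's guarantees concern random measurements), the source intensity is taken as 1, and the step size and iteration count are assumptions chosen for this small, overdetermined example; the structural constraints for the underdetermined case are not included.

```python
import numpy as np


def forward(a, x):
    """Beer-Lambert measurements with unit source intensity: y = exp(-A x)."""
    return np.exp(-a @ x)


def reconstruct(a, y, step=0.3, iters=3000):
    """Plain gradient descent on f(x) = 0.5 * ||exp(-A x) - y||^2, starting from x = 0."""
    x = np.zeros(a.shape[1])
    for _ in range(iters):
        pred = forward(a, x)
        grad = -a.T @ ((pred - y) * pred)   # chain rule through exp(-A x)
        x = x - step * grad
    return x


rng = np.random.default_rng(0)
m, n = 60, 5                                     # more measurements than unknowns
a = rng.standard_normal((m, n)) / np.sqrt(m)     # random stand-in for the Radon transform
x_true = 0.5 * rng.standard_normal(n)
y = forward(a, x_true)                           # noiseless raw measurements
x_hat = reconstruct(a, y)
```

The conventional route would instead solve the linear system $Ax = -\log y$; that logarithm is the preprocessing step that becomes ill-conditioned when $y$ is tiny, as it is behind metal.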

+ 36. Title: ILSH: The Imperial Light-Stage Head Dataset for Human Head View Synthesis

+ No.: [189]

+ Link: https://arxiv.org/abs/2310.03952

+ Authors: Jiali Zheng, Youngkyoon Jang, Athanasios Papaioannou, Christos Kampouris, Rolandos Alexandros Potamias, Foivos Paraperas Papantoniou, Efstathios Galanakis, Ales Leonardis, Stefanos Zafeiriou

+ Comments: ICCV 2023 Workshop, 9 pages, 6 figures

+ Keywords: Imperial Light-Stage Head, introduces the Imperial, Imperial Light-Stage, ILSH dataset, human head dataset

+ This paper introduces the Imperial Light-Stage Head (ILSH) dataset, a novel light-stage-captured human head dataset designed to support academic view synthesis challenges for human heads. The ILSH dataset is intended to facilitate diverse approaches, such as scene-specific or generic neural rendering, multiple-view geometry, 3D vision, and computer graphics, to further advance the development of photo-realistic human avatars. This paper details the setup of a light stage specifically designed to capture high-resolution (4K) human head images and describes how the challenges of collecting high-quality data (preprocessing, ethical issues) were addressed. In addition to data collection, we describe the split of the dataset into training, validation, and test sets. Our goal is to design and support a fair view synthesis challenge task for this novel dataset, such that the level of performance achieved on the test set can be expected to match that achieved on the validation set. The ILSH dataset consists of 52 subjects captured using 24 cameras with all 82 lighting sources turned on, resulting in a total of 1,248 close-up head images, border masks, and camera pose pairs.

+ 37. Title: Hard View Selection for Contrastive Learning

+ No.: [193]

+ Link: https://arxiv.org/abs/2310.03940

+ Authors: Fabio Ferreira, Ivo Rapant, Frank Hutter

+ Comments:

+ Keywords: Contrastive Learning, good data augmentation, data augmentation pipeline, image augmentation pipeline, augmentation pipeline

+ Many Contrastive Learning (CL) methods train their models to be invariant to different "views" of an input image, for which a good data augmentation pipeline is crucial. While considerable effort has been directed toward improving pre-text tasks, architectures, or robustness (e.g., Siamese networks or teacher-softmax centering), most of these methods remain strongly reliant on the random sampling of operations within the image augmentation pipeline, such as the random resized crop or color distortion. In this paper, we argue that the role of view generation and its effect on performance have so far received insufficient attention. To address this, we propose an easy, learning-free, yet powerful Hard View Selection (HVS) strategy designed to extend random view generation and expose the model to harder samples during CL pretraining. It consists of the following iterative steps: 1) randomly sample multiple views and create pairs of two views, 2) run forward passes for each view pair on the model currently being trained, 3) adversarially select the pair yielding the worst loss, and 4) run the backward pass with the selected pair. In our empirical analysis, we show that, under the hood, HVS increases task difficulty by controlling the Intersection over Union of views during pretraining. With only 300 epochs of pretraining, HVS closely rivals the 800-epoch DINO baseline, and this result remains very favorable even when factoring in the slowdown induced by the additional forward passes of HVS. Additionally, HVS consistently improves ImageNet linear evaluation accuracy by 0.55% to 1.9%, with similar improvements on transfer tasks, across multiple CL methods such as DINO, SimSiam, and SimCLR.
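The four HVS steps map onto a short selection routine. In this sketch, which is an illustration rather than the authors' code, `augment` and `loss_fn` are hypothetical callables supplied by the caller: the routine samples `num_views` views, forms all pairs, evaluates each pair's loss with forward passes only, and returns the pair with the highest (worst) loss for the backward pass. The toy augmentation draws a 1D crop offset, and the toy loss grows with the distance between offsets (that is, with shrinking overlap), loosely mirroring the IoU effect described above.

```python
import itertools
import random


def hard_view_selection(image, augment, loss_fn, num_views=4, rng=None):
    """Return the view pair with the highest loss, plus that loss.

    `augment(image, rng)` and `loss_fn(view_a, view_b)` are caller-supplied
    (hypothetical) callables; only the selected pair would be backpropagated.
    """
    rng = rng or random.Random()
    views = [augment(image, rng) for _ in range(num_views)]      # 1) sample views
    pairs = list(itertools.combinations(views, 2))               #    and form pairs
    losses = [loss_fn(a, b) for a, b in pairs]                   # 2) forward passes only
    worst = max(range(len(pairs)), key=losses.__getitem__)       # 3) adversarial pick
    return pairs[worst], losses[worst]                           # 4) caller runs backward


def toy_augment(image, rng):
    """Hypothetical augmentation: a random 1D crop offset in [0, 10]."""
    return rng.randint(0, 10)


def toy_loss(a, b):
    """Hypothetical loss proxy: farther-apart crops overlap less and are 'harder'."""
    return abs(a - b)


pair, loss = hard_view_selection(None, toy_augment, toy_loss, num_views=4, rng=random.Random(0))
```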

+ 38. Title: Diffusion Models as Masked Audio-Video Learners

+ No.: [195]

+ Link: https://arxiv.org/abs/2310.03937

+ Authors: Elvis Nunez, Yanzi Jin, Mohammad Rastegari, Sachin Mehta, Maxwell Horton

+ Comments:

+ Keywords: richer audio-visual representations, learn richer audio-visual, past several years, audio-visual representations, visual signals

+ Over the past several years, the synchronization between audio and visual signals has been leveraged to learn richer audio-visual representations. Aided by the wide availability of unlabeled videos, many unsupervised training frameworks have demonstrated impressive results in various downstream audio and video tasks. Recently, Masked Audio-Video Learners (MAViL) has emerged as a state-of-the-art audio-video pre-training framework. MAViL couples contrastive learning with masked autoencoding to jointly reconstruct audio spectrograms and video frames by fusing information from both modalities. In this paper, we study the potential synergy between diffusion models and MAViL, seeking to derive mutual benefits from these two frameworks. Incorporating diffusion into MAViL, combined with training efficiency techniques that include a masking ratio curriculum and adaptive batch sizing, yields a notable 32% reduction in pre-training floating-point operations (FLOPs) and an 18% decrease in pre-training wall-clock time. Crucially, this improved efficiency does not compromise the model's downstream audio-classification performance relative to MAViL.

+

+

+

+ 39. Title: Open-Fusion: Real-time Open-Vocabulary 3D Mapping and Queryable Scene Representation

+ Index: [201]

+ Link: https://arxiv.org/abs/2310.03923

+ Authors: Kashu Yamazaki, Taisei Hanyu, Khoa Vo, Thang Pham, Minh Tran, Gianfranco Doretto, Anh Nguyen, Ngan Le

+ Comments:

+ Keywords: pivotal in robotics, Signed Distance Function, Truncated Signed Distance, environmental mapping, Distance Function

+ Precise 3D environmental mapping is pivotal in robotics. Existing methods often rely on predefined concepts during training or are time-intensive when generating semantic maps. This paper presents Open-Fusion, a groundbreaking approach for real-time open-vocabulary 3D mapping and queryable scene representation using RGB-D data. Open-Fusion harnesses a pre-trained vision-language foundation model (VLFM) for open-set semantic comprehension and employs the Truncated Signed Distance Function (TSDF) for fast 3D scene reconstruction. Using the VLFM, we extract region-based embeddings and their associated confidence maps. These are then integrated with 3D knowledge from the TSDF using an enhanced Hungarian-based feature-matching mechanism. Notably, Open-Fusion delivers outstanding annotation-free open-vocabulary 3D segmentation without requiring additional 3D training. Benchmark tests on the ScanNet dataset against leading zero-shot methods highlight Open-Fusion's superiority. Furthermore, it seamlessly combines the strengths of region-based VLFM and TSDF, facilitating real-time 3D scene comprehension that includes object concepts and open-world semantics. We encourage readers to view the demos on our project page: this https URL
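
Open-Fusion builds on the Truncated Signed Distance Function (TSDF). The sketch below is a generic illustration of standard TSDF fusion, not Open-Fusion's own implementation. It fuses depth measurements along a single camera ray into a 1D row of voxels. Each observation contributes a signed distance truncated to plus or minus `trunc`, and each voxel keeps a weighted running average. The class name `TSDFRay`, the voxel size, the truncation distance and the unit observation weight are all assumptions.

```python
from typing import List


class TSDFRay:
    """1D TSDF volume: voxels sampled along one camera ray, fused by weighted running average."""

    def __init__(self, num_voxels: int, voxel_size: float, trunc: float) -> None:
        self.voxel_size = voxel_size
        self.trunc = trunc
        self.tsdf: List[float] = [1.0] * num_voxels  # normalized distance; weight 0 marks unobserved
        self.weight: List[float] = [0.0] * num_voxels

    def integrate(self, depth: float, obs_weight: float = 1.0) -> None:
        """Fuse one depth measurement (camera-to-surface distance along the ray)."""
        for i in range(len(self.tsdf)):
            voxel_depth = (i + 0.5) * self.voxel_size  # voxel centre along the ray
            sdf = depth - voxel_depth  # > 0 in front of the surface, < 0 behind it
            if sdf < -self.trunc:
                continue  # far behind the observed surface: occluded, leave unchanged
            d = min(1.0, sdf / self.trunc)  # truncate and normalize to [-1, 1]
            w = self.weight[i]
            self.tsdf[i] = (w * self.tsdf[i] + obs_weight * d) / (w + obs_weight)
            self.weight[i] = w + obs_weight

    def surface_depth(self) -> float:
        """Locate the +/- zero crossing by linear interpolation between voxel centres."""
        for i in range(len(self.tsdf) - 1):
            a, b = self.tsdf[i], self.tsdf[i + 1]
            if self.weight[i] > 0 and self.weight[i + 1] > 0 and a >= 0 > b:
                t = a / (a - b)
                return (i + 0.5 + t) * self.voxel_size
        return float("nan")


ray = TSDFRay(num_voxels=50, voxel_size=0.02, trunc=0.06)
for z in (0.50, 0.52, 0.49):  # three noisy depth readings of the same surface (metres)
    ray.integrate(z)
print(f"fused surface depth ~ {ray.surface_depth():.3f} m")
```

Because the running average is linear, the fused zero crossing lands at the mean of the readings whenever they all fall inside the truncation band. This averaging is what makes TSDF fusion robust to per-frame depth noise.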

+ 40. Title: Coloring Deep CNN Layers with Activation Hue Loss

+ Index: [209]

+ Link: https://arxiv.org/abs/2310.03911

+ Authors: Louis-François Bouchard, Mohsen Ben Lazreg, Matthew Toews

+ Comments:

+ Keywords: deep convolutional neural, convolutional neural network, effective learning, regularizing models, RGB intensity space

+ This paper proposes a novel hue-like angular parameter, referred to as the *activation hue*, to model the structure of deep convolutional neural network (CNN) activation space, with the goal of regularizing models for more effective learning. The activation hue generalizes the notion of the color hue angle in standard 3-channel RGB intensity space to $N$-channel activation space. A series of observations based on nearest-neighbor indexing of activation vectors with pre-trained networks indicates that class-informative activations are concentrated around an angle $\theta$ in both the $(x,y)$ image plane and in multi-channel activation space. A regularization term in the form of hue-like angular $\theta$ labels is proposed to complement the standard one-hot loss. Training from scratch using the combined one-hot + activation hue loss improves classification performance modestly for a wide variety of classification tasks, including ImageNet.
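
The 3-channel starting point is the standard RGB hue angle. It measures the direction of a color in the plane orthogonal to the gray axis R = G = B. The sketch below computes that angle with the usual `atan2` formula. It also includes a hypothetical $N$-channel analogue, which is an assumption for illustration and not the paper's exact definition. The analogue measures the angle between two mean-centred activation vectors. For $N = 3$ it reproduces the unsigned hue difference.

```python
import math
from typing import List, Sequence


def rgb_hue_degrees(r: float, g: float, b: float) -> float:
    """Standard RGB hue angle in [0, 360): direction of the color around the gray axis."""
    angle = math.degrees(math.atan2(math.sqrt(3.0) * (g - b), 2.0 * r - g - b))
    return angle % 360.0


def centred(v: Sequence[float]) -> List[float]:
    """Remove the component along the all-ones ("gray") axis."""
    mean = sum(v) / len(v)
    return [x - mean for x in v]


def activation_angle_degrees(u: Sequence[float], v: Sequence[float]) -> float:
    """Hypothetical N-channel analogue: unsigned angle between mean-centred vectors."""
    cu, cv = centred(u), centred(v)
    dot = sum(a * b for a, b in zip(cu, cv))
    norm = math.sqrt(sum(a * a for a in cu)) * math.sqrt(sum(b * b for b in cv))
    if norm == 0.0:
        return float("nan")  # achromatic (all channels equal): hue is undefined
    cos_theta = max(-1.0, min(1.0, dot / norm))  # guard acos against rounding
    return math.degrees(math.acos(cos_theta))


print(rgb_hue_degrees(0, 1, 0))                                                # pure green
print(activation_angle_degrees([1, 0, 0], [0, 1, 0]))                          # red vs green, N = 3
print(activation_angle_degrees([0.2, 0.9, 0.1, 0.4], [0.8, 0.1, 0.3, 0.2]))   # N = 4
```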

+ 41. Title: TWICE Dataset: Digital Twin of Test Scenarios in a Controlled Environment

+ Index: [217]

+ Link: https://arxiv.org/abs/2310.03895

+ Authors: Leonardo Novicki Neto, Fabio Reway, Yuri Poledna, Maikol Funk Drechsler, Eduardo Parente Ribeiro, Werner Huber, Christian Icking

+ Comments: 8 pages, 13 figures, submitted to IEEE Sensors Journal

+ Keywords: adverse weather remains, adverse weather conditions, Ensuring the safe, significant challenge, adverse weather

+ Ensuring the safe and reliable operation of autonomous vehicles under adverse weather remains a significant challenge. To address this, we have developed a comprehensive dataset composed of sensor data acquired on a real test track and reproduced in the laboratory for the same test scenarios. The provided dataset includes camera, radar, LiDAR, inertial measurement unit (IMU), and GPS data recorded under adverse weather conditions (rainy, night-time, and snowy conditions). We recorded test scenarios using objects of interest such as cars, cyclists, trucks and pedestrians, some of which are inspired by EURONCAP (European New Car Assessment Programme). The sensor data generated in the laboratory is acquired by executing simulation-based tests in a hardware-in-the-loop environment with the digital twin of each real test scenario. The dataset contains more than 2 hours of recordings, totaling more than 280 GB of data. It is therefore a valuable resource for researchers in the field of autonomous vehicles to test and improve their algorithms in adverse weather conditions, as well as to explore the simulation-to-reality gap. The dataset is available for download at: this https URL

+ 42. Title: Characterizing the Features of Mitotic Figures Using a Conditional Diffusion Probabilistic Model

+ Index: [218]

+ Link: https://arxiv.org/abs/2310.03893

+ Authors: Cagla Deniz Bahadir, Benjamin Liechty, David J. Pisapia, Mert R. Sabuncu

+ Comments: Accepted for Deep Generative Models Workshop at Medical Image Computing and Computer Assisted Intervention (MICCAI) 2023

+ Keywords: gold-standard independent ground-truth, clinically significant task, gold-standard independent, independent ground-truth, Mitotic figure detection

+ Mitotic figure detection in histology images is a hard-to-define yet clinically significant task, where labels are generated from pathologist interpretations and there is no "gold-standard" independent ground truth. However, it is well established that these interpretation-based labels are often unreliable, in part due to differences in expertise levels and human subjectivity. In this paper, our goal is to shed light on the inherent uncertainty of mitosis labels and characterize the mitotic figure classification task in a human-interpretable manner. We train a probabilistic diffusion model to synthesize patches of cell nuclei for a given mitosis label condition. Using this model, we can then generate a sequence of synthetic images that correspond to the same nucleus transitioning into the mitotic state. This allows us to identify different image features associated with mitosis, such as cytoplasm granularity, nuclear density, nuclear irregularity and high contrast between the nucleus and the cell body. Our approach offers a new tool for pathologists to interpret and communicate the features driving the decision to recognize a mitotic figure.
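
For reference, the standard conditional denoising diffusion (DDPM) formulation that such a model typically builds on is summarized below. Whether the authors use exactly this noise-prediction objective is an assumption. In this formulation:

- $x_0$ is a nucleus patch.
- $c$ is the mitosis label condition.
- $\bar\alpha_t = \prod_{s=1}^{t}(1-\beta_s)$ is the cumulative noise schedule.
- $\epsilon_\theta$ is the denoising network.

$$
q(x_t \mid x_0) = \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\,\mathbf{I}\right),
\qquad
\mathcal{L}(\theta) = \mathbb{E}_{x_0,\, c,\, t,\, \epsilon \sim \mathcal{N}(0, \mathbf{I})}\left[\left\lVert \epsilon - \epsilon_\theta\!\left(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\ t,\ c\right)\right\rVert_2^2\right]
$$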

+ 43. Title: Accelerated Neural Network Training with Rooted Logistic Objectives

+ Index: [220]

+ Link: https://arxiv.org/abs/2310.03890

+ Authors: Zhu Wang, Praveen Raj Veluswami, Harsh Mishra, Sathya N. Ravi

+ Comments:

+ Keywords: real world scenarios, cross entropy based, real world, world scenarios, scenarios are trained

+ Many neural networks deployed in real-world scenarios are trained using cross-entropy-based loss functions. From the optimization perspective, it is known that the behavior of first-order methods such as gradient descent crucially depends on the separability of datasets. In fact, even in the simplest case of binary classification, the rate of convergence depends on two factors: (1) the condition number of the data matrix, and (2) the separability of the dataset. Without further pre-processing techniques such as over-parametrization or data augmentation, separability is an intrinsic quantity of the data distribution under consideration. We focus on the landscape design of the logistic function and derive a novel sequence of *strictly* convex functions that are at least as strict as the logistic loss. The minimizers of these functions coincide with those of the minimum-norm solution wherever possible. The strict convexity of the derived function can be extended to finetune state-of-the-art models and applications. In our experiments, we apply the proposed rooted logistic objective to multiple deep models, e.g., fully-connected neural networks and transformers, on various classification benchmarks. Our results show that training with the rooted loss function converges faster and improves performance. Furthermore, we illustrate applications of our novel rooted loss function in generative-modeling-based downstream applications, such as finetuning a StyleGAN model with the rooted loss. The code implementing our losses and models can be found here for open-source software development purposes: https://anonymous.4open.science/r/rooted_loss.

+ 44. Title: Consistency Regularization Improves Placenta Segmentation in Fetal EPI MRI Time Series

+ Index: [229]

+ Link: https://arxiv.org/abs/2310.03870

+ Authors: Yingcheng Liu, Neerav Karani, Neel Dey, S. Mazdak Abulnaga, Junshen Xu, P. Ellen Grant, Esra Abaci Turk, Polina Golland

+ Comments:

+ Keywords: fetal EPI MRI, EPI MRI time, EPI MRI, EPI MRI holds, fetal EPI

+ The placenta plays a crucial role in fetal development. Automated 3D placenta segmentation from fetal EPI MRI holds promise for advancing prenatal care. This paper proposes an effective semi-supervised learning method for improving placenta segmentation in fetal EPI MRI time series. We employ a consistency regularization loss that promotes consistency under spatial transformations of the same image and temporal consistency across nearby images in a time series. The experimental results show that the method improves the overall segmentation accuracy and performs better on outliers and hard samples. The evaluation also indicates that our method improves the temporal coherency of the predictions, which could lead to more accurate computation of temporal placental biomarkers. This work contributes to the study of the placenta and to prenatal clinical decision-making. Code is available at this https URL.
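
The two consistency terms can be illustrated with a minimal sketch. It rests on simplifying assumptions that are not from the paper:

- Images are 1D lists of intensities.
- The spatial transformation is a horizontal flip.
- `toy_model` and `shifted_model` are hypothetical stand-in "segmenters".
- Both terms use the mean squared error between predicted probability maps.

```python
import math
from typing import Callable, List, Sequence

Image = List[float]
Model = Callable[[Sequence[float]], Image]


def flip(x: Sequence[float]) -> Image:
    """Spatial transformation used here: horizontal flip (an assumption)."""
    return list(reversed(x))


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)


def spatial_consistency(model: Model, x: Sequence[float]) -> float:
    """Penalize f(T(x)) != T(f(x)): predictions should follow the transformation."""
    return mse(model(flip(x)), flip(model(x)))


def temporal_consistency(model: Model, frames: Sequence[Sequence[float]]) -> float:
    """Penalize disagreement between predictions on neighbouring frames of the series."""
    preds = [model(f) for f in frames]
    pairs = list(zip(preds[:-1], preds[1:]))
    return sum(mse(a, b) for a, b in pairs) / len(pairs)


def toy_model(x: Sequence[float]) -> Image:
    """Hypothetical segmenter: per-pixel placenta probability via a sigmoid."""
    return [1.0 / (1.0 + math.exp(-4.0 * (v - 0.5))) for v in x]


def shifted_model(x: Sequence[float]) -> Image:
    """Deliberately non-equivariant model: mixes each pixel with its left neighbour."""
    padded = [0.0] + list(x)
    return toy_model([0.5 * (padded[i] + padded[i + 1]) for i in range(len(x))])


series = [[0.1, 0.6, 0.8, 0.3], [0.1, 0.7, 0.8, 0.2], [0.2, 0.7, 0.9, 0.2]]
for name, model in (("per-pixel", toy_model), ("shifted", shifted_model)):
    spatial = sum(spatial_consistency(model, f) for f in series) / len(series)
    temporal = temporal_consistency(model, series)
    print(name, round(spatial, 4), round(temporal, 4))
```

Neither term needs labels, which is what makes them usable in a semi-supervised setting. In training, they would typically be added, with some weighting, to a supervised loss on the labelled frames. That weighting is not specified here.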

+ 45. Title: OpenIncrement: A Unified Framework for Open Set Recognition and Deep Class-Incremental Learning

+ Index: [236]

+ Link: https://arxiv.org/abs/2310.03848

+ Authors: Jiawen Xu, Claas Grohnfeldt, Odej Kao

+ Comments:

+ Keywords: neural network retraining, network retraining, pre-identified for neural, neural network, open set

+ Most work on deep incremental learning assumes that novel samples are pre-identified for neural network retraining. However, practical deep classifiers often misidentify these samples, leading to erroneous predictions, and such misclassifications can degrade model performance. Techniques like open set recognition offer a means to detect these novel samples and represent a significant area of machine learning research.

+ In this paper, we introduce a deep class-incremental learning framework integrated with open set recognition. Our approach refines class-incrementally learned features to adapt them for distance-based open set recognition. Experimental results show that our method outperforms state-of-the-art incremental learning techniques and exhibits superior performance in open set recognition compared to baseline methods.
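
Distance-based open set recognition can be illustrated with a nearest-class-mean classifier that rejects inputs lying too far from every known class. This is a generic sketch, not the OpenIncrement method. The feature vectors, the Euclidean metric, the rejection threshold and the helper names `class_means`/`predict_open_set` are all assumptions. In the paper, the features would come from the class-incrementally trained network.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def class_means(features: Sequence[Vector], labels: Sequence[str]) -> Dict[str, List[float]]:
    """Compute one prototype (mean feature vector) per known class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for f, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(f)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], f)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_open_set(
    x: Vector, prototypes: Dict[str, List[float]], threshold: float
) -> Tuple[Optional[str], float]:
    """Return (label, distance); label is None ("unknown") if no prototype is within `threshold`."""
    label, dist = min(
        ((y, math.dist(x, p)) for y, p in prototypes.items()), key=lambda item: item[1]
    )
    return (label if dist <= threshold else None), dist


train_x = [[0.0, 0.1], [0.2, 0.0], [1.0, 1.1], [0.9, 1.0]]
train_y = ["cat", "cat", "dog", "dog"]
protos = class_means(train_x, train_y)
for sample in ([0.1, 0.1], [1.0, 0.9], [3.0, -2.0]):
    print(sample, predict_open_set(sample, protos, threshold=0.5))
```

An incremental learner would collect the samples returned as `None` as novel candidates and, once they are labelled, add a new class prototype. The prototypes of existing classes would not need to be recomputed.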

+ 46. Title: Less is More: On the Feature Redundancy of Pretrained Models When Transferring to Few-shot Tasks

+ Index: [237]

+ Link: https://arxiv.org/abs/2310.03843

+ Authors: Xu Luo, Difan Zou, Lianli Gao, Zenglin Xu, Jingkuan Song

+ Comments:

+ Keywords: frozen features extracted, conducting linear probing, easy as conducting, classifier upon frozen, pretrained
