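This post lists the latest papers collected daily from arXiv, grouped by category: natural language processing, information retrieval, computer vision, and others.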

Statistics

975 papers were updated today, including:

- Natural language processing: 166
- Information retrieval: 35
- Computer vision: 300

Natural Language Processing

1. [2412.12094] SepLLM: Accelerate Large Language Models by Compressing One Segment into One Separator

Link: https://arxiv.org/abs/2412.12094

Authors: Guoxuan Chen, Han Shi, Jiawei Li, Yihang Gao, Xiaozhe Ren, Yimeng Chen, Xin Jiang, Zhenguo Li, Weiyang Liu, Chao Huang

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

Keywords: Large Language Models, language processing tasks, natural language processing, exhibited exceptional performance, Large Language

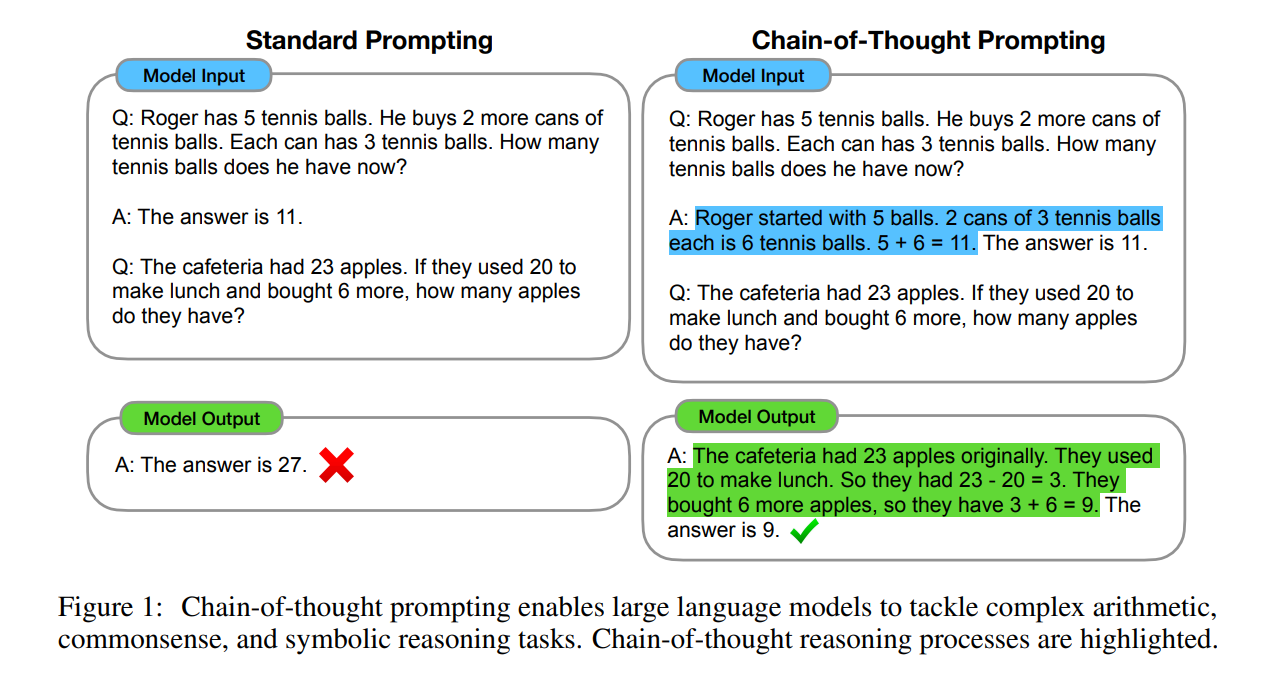

Abstract: Large Language Models (LLMs) have exhibited exceptional performance across a spectrum of natural language processing tasks. However, their substantial sizes pose considerable challenges, particularly in computational demands and inference speed, due to their quadratic complexity. In this work, we have identified a key pattern: certain seemingly meaningless special tokens (i.e., separators) contribute disproportionately to attention scores compared to semantically meaningful tokens. This observation suggests that information of the segments between these separator tokens can be effectively condensed into the separator tokens themselves without significant information loss. Guided by this insight, we introduce SepLLM, a plug-and-play framework that accelerates inference by compressing these segments and eliminating redundant tokens. Additionally, we implement efficient kernels for training acceleration. Experimental results across training-free, training-from-scratch, and post-training settings demonstrate SepLLM's effectiveness. Notably, using the Llama-3-8B backbone, SepLLM achieves over 50% reduction in KV cache on the GSM8K-CoT benchmark while maintaining comparable performance. Furthermore, in streaming settings, SepLLM effectively processes sequences of up to 4 million tokens or more while maintaining consistent language modeling capabilities.
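
To make the separator idea concrete, here is a minimal sketch of which cached positions a SepLLM-style policy might keep. It assumes, as a simplification, that the retained set is a few initial tokens, every separator token, and a window of the most recent tokens; the `SEPARATORS` set and the `n_initial` and `window` values are hypothetical illustration choices, not the paper's configuration or kernels.

```python
# Hypothetical separator set; a real implementation would work on token IDs.
SEPARATORS = {".", ",", ";", "?", "!", "\n"}


def retained_positions(tokens: list[str], n_initial: int = 2, window: int = 4) -> list[int]:
    """Return KV-cache positions kept under a simplified SepLLM-style policy.

    Kept: the first `n_initial` tokens, every separator token, and the last
    `window` tokens. All other positions are dropped, on the assumption that
    their segment's information has been condensed into the separators.
    """
    n = len(tokens)
    keep = set(range(min(n_initial, n)))
    keep.update(i for i, tok in enumerate(tokens) if tok in SEPARATORS)
    keep.update(range(max(0, n - window), n))
    return sorted(keep)


if __name__ == "__main__":
    toks = ["The", "cat", "sat", ".", "It", "was", "happy", ",", "very", "happy", "today", "."]
    kept = retained_positions(toks)
    print(kept)  # [0, 1, 3, 7, 8, 9, 10, 11]
    print(f"kept {len(kept)}/{len(toks)} positions")
```

In this toy sentence most positions survive because the window is large relative to the text; the saving grows with sequence length, since only separators accumulate outside the fixed-size window.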

2. [2412.12072] Making FETCH! Happen: Finding Emergent Dog Whistles Through Common Habitats

Link: https://arxiv.org/abs/2412.12072

Authors: Kuleen Sasse, Carlos Aguirre, Isabel Cachola, Sharon Levy, Mark Dredze

Categories: Computation and Language (cs.CL)

Keywords: upsetting or offensive, WARNING, Dog whistles, Large Language Models, Dog

Abstract: WARNING: This paper contains content that may be upsetting or offensive to some readers. Dog whistles are coded expressions with dual meanings: one intended for the general public (outgroup) and another that conveys a specific message to an intended audience (ingroup). Often, these expressions are used to convey controversial political opinions while maintaining plausible deniability and to slip past content moderation filters. Identification of dog whistles relies on curated lexicons, which struggle to stay up to date. We introduce FETCH!, a task for finding novel dog whistles in massive social media corpora. We find that state-of-the-art systems fail to achieve meaningful results across three distinct social media case studies. We present EarShot, a novel system that combines the strengths of vector databases and Large Language Models (LLMs) to efficiently and effectively identify new dog whistles.

3. [2412.12062] Semi-automated analysis of audio-recorded lessons: The case of teachers' engaging messages

Link: https://arxiv.org/abs/2412.12062

Authors: Samuel Falcon, Carmen Alvarez-Alvarez, Jaime Leon

Categories: Computation and Language (cs.CL); Computers and Society (cs.CY)

Keywords: influences student outcomes, student outcomes, Engaging messages delivered, Engaging messages, key aspect

Abstract: Engaging messages delivered by teachers are a key aspect of the classroom discourse that influences student outcomes. However, improving this communication is challenging due to difficulties in obtaining observations. This study presents a methodology for efficiently extracting actual observations of engaging messages from audio-recorded lessons. We collected 2,477 audio-recorded lessons from 75 teachers over two academic years. Using automatic transcription and keyword-based filtering analysis, we identified and classified engaging messages. This method reduced the information to be analysed by 90%, optimising the time and resources required compared to traditional manual coding. Subsequent descriptive analysis revealed that the most used messages emphasised the future benefits of participating in school activities. In addition, the use of engaging messages decreased as the academic year progressed. This study offers insights for researchers seeking to extract information from teachers' discourse in naturalistic settings and provides useful information for designing interventions to improve teachers' communication strategies.
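
The keyword-based filtering step can be pictured with a small sketch: keep only transcript segments that mention at least one cue phrase, then report how much material no longer needs manual coding. The cue phrases and sample segments are invented for illustration; the study's actual keyword list and transcription pipeline are not described in the abstract.

```python
import re

# Hypothetical cue phrases loosely inspired by "future benefits" style messages.
CUE_PHRASES = ["in the future", "exam", "you will need", "important for"]


def filter_segments(segments: list[str], cues: list[str]) -> list[str]:
    """Keep segments containing at least one cue phrase (case-insensitive, whole words)."""
    patterns = [re.compile(r"\b" + re.escape(cue) + r"\b", re.IGNORECASE) for cue in cues]
    return [seg for seg in segments if any(p.search(seg) for p in patterns)]


def reduction(total: int, kept: int) -> float:
    """Fraction of segments filtered out, i.e. spared from manual coding."""
    return 1 - kept / total if total else 0.0


if __name__ == "__main__":
    transcript = [
        "Open your books to page twelve.",
        "This will be on the exam, so pay attention.",
        "Quiet, please.",
        "You will need this skill in the future.",
    ]
    kept = filter_segments(transcript, CUE_PHRASES)
    print(kept)
    print(f"reduction: {reduction(len(transcript), len(kept)):.0%}")  # reduction: 50%
```

Word-boundary matching (`\b`) keeps "exam" from firing on unrelated words such as "example"; a keyword filter like this only narrows the material, and the remaining segments would still need human classification.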

4. [2412.12061] Virtual Agent-Based Communication Skills Training to Facilitate Health Persuasion Among Peers

Link: https://arxiv.org/abs/2412.12061

Authors: Farnaz Nouraei, Keith Rebello, Mina Fallah, Prasanth Murali, Haley Matuszak, Valerie Jap, Andrea Parker, Michael Paasche-Orlow, Timothy Bickmore

Categories: Human-Computer Interaction (cs.HC); Computation and Language (cs.CL); Computers and Society (cs.CY)

Keywords: health behavior, stigmatizing or controversial, laypeople are motivated, motivated to improve, family or friends

Comments: Accepted at CSCW '24

Abstract: Many laypeople are motivated to improve the health behavior of their family or friends but do not know where to start, especially if the health behavior is potentially stigmatizing or controversial. We present an approach that uses virtual agents to coach community-based volunteers in health counseling techniques, such as motivational interviewing, and allows them to practice these skills in role-playing scenarios. We use this approach in a virtual agent-based system to increase COVID-19 vaccination by empowering users to influence their social network. In a between-subjects comparative design study, we test the effects of agent system interactivity and role-playing functionality on counseling outcomes, with participants evaluated by standardized patients and objective judges. We find that all versions are effective at producing peer counselors who score adequately on a standardized measure of counseling competence, and that participants were significantly more satisfied with interactive virtual agents compared to passive viewing of the training material. We discuss design implications for interpersonal skills training systems based on our findings.

5. [2412.12040] How Private are Language Models in Abstractive Summarization?

Link: https://arxiv.org/abs/2412.12040

Authors: Anthony Hughes, Nikolaos Aletras, Ning Ma

Categories: Computation and Language (cs.CL)

Keywords: shown outstanding performance, Language models, medicine and law, shown outstanding, outstanding performance

Abstract: Language models (LMs) have shown outstanding performance in text summarization, including in sensitive domains such as medicine and law. In these settings, it is important that personally identifying information (PII) included in the source document does not leak into the summary. Prior efforts have mostly focused on studying how LMs may inadvertently elicit PII from training data. However, to what extent LMs can provide privacy-preserving summaries given a non-private source document remains under-explored. In this paper, we perform a comprehensive study across two closed- and three open-weight LMs of different sizes and families. We experiment with prompting and fine-tuning strategies for privacy preservation on a range of summarization datasets spanning three domains. Our extensive quantitative and qualitative analysis, including human evaluation, shows that LMs often cannot prevent PII leakage in their summaries and that current widely used metrics cannot capture context-dependent privacy risks.
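
A naive way to quantify the leakage discussed above is to check which PII strings from the source document reappear verbatim in the summary. This is only a sketch, assuming the PII spans are already annotated; as the abstract itself points out, surface matching of this kind misses context-dependent risks such as paraphrased or partially masked identifiers, so it is not the paper's evaluation method.

```python
def pii_leak_rate(source_pii: list[str], summary: str) -> float:
    """Fraction of annotated source PII strings that appear verbatim in the summary.

    Matching is case-insensitive substring matching, so paraphrases,
    abbreviations, or indirect re-identification are NOT detected.
    """
    if not source_pii:
        return 0.0
    text = summary.lower()
    leaked = [pii for pii in source_pii if pii.lower() in text]
    return len(leaked) / len(source_pii)


if __name__ == "__main__":
    # Hypothetical annotated PII for a clinical note.
    pii = ["Jane Doe", "12 Elm Street", "555-0199"]
    summary = "The patient, Jane Doe, was discharged after treatment for pneumonia."
    print(f"leak rate: {pii_leak_rate(pii, summary):.2f}")
```

A summary that says "the 34-year-old teacher from Elm Street" would score zero leaks here while still narrowing down the patient, which is exactly the kind of gap the study reports.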

6. [2412.12039] Can LLM Prompting Serve as a Proxy for Static Analysis in Vulnerability Detection

Link: https://arxiv.org/abs/2412.12039

Authors: Ira Ceka, Feitong Qiao, Anik Dey, Aastha Valechia, Gail Kaiser, Baishakhi Ray

Categories: Cryptography and Security (cs.CR); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Software Engineering (cs.SE)

Keywords: large language models, shown limited ability, remarkable success, vulnerability detection, shown limited

Abstract: Despite their remarkable success, large language models (LLMs) have shown limited ability on applied tasks such as vulnerability detection. We investigate various prompting strategies for vulnerability detection and, as part of this exploration, propose a prompting strategy that integrates natural language descriptions of vulnerabilities with a contrastive chain-of-thought reasoning approach, augmented using contrastive samples from a synthetic dataset. Our study highlights the potential of LLMs to detect vulnerabilities by integrating natural language descriptions, contrastive reasoning, and synthetic examples into a comprehensive prompting framework. Our results show that this approach can enhance LLM understanding of vulnerabilities. On a high-quality vulnerability detection dataset such as SVEN, our prompting strategies can improve accuracies, F1-scores, and pairwise accuracies by 23%, 11%, and 14%, respectively.

7. [2412.12004] The Open Source Advantage in Large Language Models (LLMs)

Link: https://arxiv.org/abs/2412.12004

Authors: Jiya Manchanda, Laura Boettcher, Matheus Westphalen, Jasser Jasser

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Keywords: advanced text generation, Large language models, natural language processing, Large language, mark a key

Comments: 7 pages, 0 figures

Abstract: Large language models (LLMs) mark a key shift in natural language processing (NLP), having advanced text generation, translation, and domain-specific reasoning. Closed-source models like GPT-4, powered by proprietary datasets and extensive computational resources, lead with state-of-the-art performance today. However, they face criticism for their "black box" nature and for limiting accessibility in a manner that hinders reproducibility and equitable AI development. By contrast, open-source initiatives like LLaMA and BLOOM prioritize democratization through community-driven development and computational efficiency. These models have significantly reduced performance gaps, particularly in linguistic diversity and domain-specific applications, while providing accessible tools for global researchers and developers. Notably, both paradigms rely on foundational architectural innovations, such as the Transformer framework by Vaswani et al. (2017). Closed-source models excel by scaling effectively, while open-source models adapt to real-world applications in underrepresented languages and domains. Techniques like Low-Rank Adaptation (LoRA) and instruction-tuning datasets enable open-source models to achieve competitive results despite limited resources. To be sure, the tension between closed-source and open-source approaches underscores a broader debate on transparency versus proprietary control in AI. Ethical considerations further highlight this divide. Closed-source systems restrict external scrutiny, while open-source models promote reproducibility and collaboration but lack standardized auditing documentation frameworks to mitigate biases. Hybrid approaches that leverage the strengths of both paradigms are likely to shape the future of LLM innovation, ensuring accessibility, competitive technical performance, and ethical deployment.

8. [2412.12001] LLM-RG4: Flexible and Factual Radiology Report Generation across Diverse Input Contexts

Link: https://arxiv.org/abs/2412.12001

Authors: Zhuhao Wang, Yihua Sun, Zihan Li, Xuan Yang, Fang Chen, Hongen Liao

Categories: Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

Keywords: radiologists tailor content, complex task requiring, radiology report drafting, Drafting radiology reports, task requiring flexibility

Abstract: Drafting radiology reports is a complex task requiring flexibility, where radiologists tailor content to available information and particular clinical demands. However, most current radiology report generation (RRG) models are constrained to a fixed task paradigm, such as predicting the full "finding" section from a single image, inherently involving a mismatch between inputs and outputs. The trained models lack the flexibility for diverse inputs and could generate harmful, input-agnostic hallucinations. To bridge the gap between current RRG models and the clinical demands in practice, we first develop a data generation pipeline to create a new MIMIC-RG4 dataset, which considers four common radiology report drafting scenarios and has perfectly corresponding inputs and outputs. Secondly, we propose a novel large language model (LLM) based RRG framework, namely LLM-RG4, which utilizes the LLM's flexible instruction-following capabilities and extensive general knowledge. We further develop an adaptive token fusion module that offers flexibility to handle diverse scenarios with different input combinations, while minimizing the additional computational burden associated with increased input volumes. Besides, we propose a token-level loss weighting strategy to direct the model's attention towards positive and uncertain descriptions. Experimental results demonstrate that LLM-RG4 achieves state-of-the-art performance in both clinical efficiency and natural language generation on the MIMIC-RG4 and MIMIC-CXR datasets. We quantitatively demonstrate that our model has minimal input-agnostic hallucinations, whereas current open-source models commonly suffer from this problem.

9. [2412.11990] ExecRepoBench: Multi-level Executable Code Completion Evaluation

Link: https://arxiv.org/abs/2412.11990

Authors: Jian Yang, Jiajun Zhang, Jiaxi Yang, Ke Jin, Lei Zhang, Qiyao Peng, Ken Deng, Yibo Miao, Tianyu Liu, Zeyu Cui, Binyuan Hui, Junyang Lin

Categories: Computation and Language (cs.CL)

Keywords: daily software development, essential tool, tool for daily, Code completion, daily software

Abstract: Code completion has become an essential tool for daily software development. Existing evaluation benchmarks often employ static methods that do not fully capture the dynamic nature of real-world coding environments and face significant challenges, including limited context length, reliance on superficial evaluation metrics, and potential overfitting to training datasets. In this work, we introduce a novel framework for enhancing code completion in software development through the creation of a repository-level benchmark, ExecRepoBench, and the instruction corpus Repo-Instruct, aiming to improve the functionality of open-source large language models (LLMs) in real-world coding scenarios that involve complex interdependencies across multiple files. ExecRepoBench includes 1.2K samples from active Python repositories. Plus, we present a multi-level grammar-based completion methodology conditioned on the abstract syntax tree to mask code fragments at various logical units (e.g. statements, expressions, and functions). Then, we fine-tune a 7B-parameter open-source LLM on Repo-Instruct to produce a strong code completion baseline model, Qwen2.5-Coder-Instruct-C. Qwen2.5-Coder-Instruct-C is rigorously evaluated against existing benchmarks, including MultiPL-E and ExecRepoBench, and consistently outperforms prior baselines across all programming languages. Our method can be deployed as a high-performance, local service for programming development (this https URL).
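
Masking code at different logical units via the abstract syntax tree can be sketched with Python's standard `ast` module: pick a node of the requested granularity (function, statement, or expression), cut its source span out, and keep it as the completion target. The `mask_code` helper, the `LEVELS` mapping, and the `<MASK>` placeholder are hypothetical; this only illustrates the general idea, not the benchmark's actual construction pipeline.

```python
import ast
import random

# Hypothetical mapping from granularity level to the AST node types it covers.
LEVELS = {
    "function": (ast.FunctionDef, ast.AsyncFunctionDef),
    "statement": (ast.Assign, ast.Return, ast.If, ast.For, ast.While, ast.Expr),
    "expression": (ast.BinOp, ast.Call, ast.Compare),
}


def mask_code(source: str, level: str, seed: int = 0) -> tuple[str, str]:
    """Replace one randomly chosen node of `level` with <MASK>.

    Returns (masked_source, ground_truth). Relies on end_lineno/end_col_offset
    (Python 3.8+). Column offsets are UTF-8 byte offsets, so this sketch
    assumes ASCII source.
    """
    tree = ast.parse(source)
    candidates = [n for n in ast.walk(tree) if isinstance(n, LEVELS[level])]
    if not candidates:
        raise ValueError(f"no {level} nodes found")
    node = random.Random(seed).choice(candidates)

    # Convert (line, column) positions into absolute character offsets.
    lines = source.splitlines(keepends=True)
    start = sum(len(l) for l in lines[: node.lineno - 1]) + node.col_offset
    end = sum(len(l) for l in lines[: node.end_lineno - 1]) + node.end_col_offset
    return source[:start] + "<MASK>" + source[end:], source[start:end]


if __name__ == "__main__":
    code = "def area(w, h):\n    result = w * h\n    return result\n"
    for level in ("function", "statement", "expression"):
        masked, truth = mask_code(code, level)
        print(f"[{level}] target={truth!r}")
        print(masked)
```

Because the target is a whole syntactic unit, a completion can be checked by splicing it back in and running the repository's tests, which is the kind of execution-based evaluation the benchmark's name suggests.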

10. [2412.11988] SciFaultyQA: Benchmarking LLMs on Faulty Science Question Detection with a GAN-Inspired Approach to Synthetic Dataset Generation

Link: https://arxiv.org/abs/2412.11988

Authors: Debarshi Kundu

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Keywords: Gemini Flash frequently, Gemini Flash, woman can produce, Flash frequently answer, woman

Abstract: Consider the problem: "If one man and one woman can produce one child in one year, how many children will be produced by one woman and three men in 0.5 years?" Current large language models (LLMs) such as GPT-4o, GPT-o1-preview, and Gemini Flash frequently answer "0.5," which does not make sense. While these models sometimes acknowledge the unrealistic nature of the question, in many cases (8 out of 10 trials) they provide the nonsensical answer of "0.5 child." Additionally, temporal variation has been observed: if an LLM answers correctly once (by recognizing the faulty nature of the question), subsequent responses are more likely to also reflect this understanding. However, this is inconsistent.

These types of questions have motivated us to develop a dataset of science questions, SciFaultyQA, where the questions themselves are intentionally faulty. We observed that LLMs often proceed to answer these flawed questions without recognizing their inherent issues, producing results that are logically or scientifically invalid. By analyzing such patterns, we developed a novel method for generating synthetic datasets to evaluate and benchmark the performance of various LLMs in identifying these flawed questions. We have also developed novel approaches to reduce the errors.


11. [2412.11986] Speak & Improve Corpus 2025: an L2 English Speech Corpus for Language Assessment and Feedback

Link: https://arxiv.org/abs/2412.11986

Authors: Kate Knill, Diane Nicholls, Mark J.F. Gales, Mengjie Qian, Pawel Stroinski

Categories: Computation and Language (cs.CL)

Keywords: Improve learning platform, language processing systems, introduce the Speak, Improve learning, https URL

Abstract: We introduce the Speak & Improve Corpus 2025, a dataset of L2 learner English data with holistic scores and language error annotation, collected from open (spontaneous) speaking tests on the Speak & Improve learning platform (this https URL). The aim of the corpus release is to address a major challenge to developing L2 spoken language processing systems: the lack of publicly available data with high-quality annotations. It is being made available for non-commercial use on the ELiT website. In designing this corpus we have sought to make it cover a wide range of speaker attributes, from their L1 to their speaking ability, as well as providing manual annotations. This enables a range of language-learning tasks to be examined, such as assessing speaking proficiency or providing feedback on grammatical errors in a learner's speech. Additionally, the data supports research into the underlying technology required for these tasks, including automatic speech recognition (ASR) of low-resource L2 learner English, disfluency detection, and spoken grammatical error correction (GEC). The corpus consists of around 340 hours of L2 English learners' audio with holistic scores, and a subset of audio annotated with transcriptions and error labels.

12. [2412.11985] Speak & Improve Challenge 2025: Tasks and Baseline Systems

Link: https://arxiv.org/abs/2412.11985

Authors: Mengjie Qian, Kate Knill, Stefano Banno, Siyuan Tang, Penny Karanasou, Mark J.F. Gales, Diane Nicholls

Categories: Computation and Language (cs.CL)

Keywords: Spoken Language Assessment, Speak Improve Challenge, Speak Improve, Spoken Grammatical Error, Speak Improve learning

Abstract: This paper presents the "Speak & Improve Challenge 2025: Spoken Language Assessment and Feedback", a challenge associated with the ISCA SLaTE 2025 Workshop. The goal of the challenge is to advance research on spoken language assessment and feedback, with tasks associated with both the underlying technology and language learning feedback. Linked with the challenge, the Speak & Improve (SI) Corpus 2025 is being pre-released: a dataset of L2 learner English data with holistic scores and language error annotation, collected from open (spontaneous) speaking tests on the Speak & Improve learning platform. The corpus consists of 340 hours of audio data from second language English learners with holistic scores, and a 60-hour subset with manual transcriptions and error labels. The Challenge has four shared tasks: Automatic Speech Recognition (ASR), Spoken Language Assessment (SLA), Spoken Grammatical Error Correction (SGEC), and Spoken Grammatical Error Correction Feedback (SGECF). Each of these tasks has a closed track, where a predetermined set of models and data sources may be used, and an open track, where any public resource may be used. Challenge participants may do one or more of the tasks. This paper describes the challenge, the SI Corpus 2025, and the baseline systems released for the Challenge.

13. [2412.11978] Speech Foundation Models and Crowdsourcing for Efficient, High-Quality Data Collection

Link: https://arxiv.org/abs/2412.11978

Authors: Beomseok Lee, Marco Gaido, Ioan Calapodescu, Laurent Besacier, Matteo Negri

Categories: Computation and Language (cs.CL); Sound (cs.SD); Audio and Speech Processing (eess.AS)

Keywords: non-experts necessitates protocols, Speech Foundation Models, final data quality, ensure final data, speech data acquisition

Comments: Accepted at COLING 2025 main conference

Abstract: While crowdsourcing is an established solution for facilitating and scaling the collection of speech data, the involvement of non-experts necessitates protocols to ensure final data quality. To reduce the costs of these essential controls, this paper investigates the use of Speech Foundation Models (SFMs) to automate the validation process, examining for the first time the cost/quality trade-off in data acquisition. Experiments conducted on French, German, and Korean data demonstrate that SFM-based validation has the potential to reduce reliance on human validation, resulting in an estimated cost saving of over 40.0% without degrading final data quality. These findings open new opportunities for more efficient, cost-effective, and scalable speech data acquisition.
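
One plausible shape for automated validation is to transcribe each crowdsourced recording with a speech foundation model and accept it only if the transcript is close enough to the prompt the contributor was asked to read. The sketch below shows only that decision rule: a word error rate (WER) computed with edit distance and compared against a threshold. The ASR step is omitted, and `accept_recording` with its 0.2 threshold is a hypothetical placeholder, not the paper's validation protocol.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    # prev[j] holds the edit distance between the current reference prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference word
                curr[j - 1] + 1,         # insertion of a hypothesis word
                prev[j - 1] + (r != h),  # substitution (or match at zero cost)
            )
        prev = curr
    return prev[-1] / max(len(ref), 1)


def accept_recording(prompt: str, asr_transcript: str, threshold: float = 0.2) -> bool:
    """Hypothetical rule: accept the recording if the ASR transcript's WER <= threshold."""
    return word_error_rate(prompt, asr_transcript) <= threshold


if __name__ == "__main__":
    prompt = "the weather is nice today"
    print(accept_recording(prompt, "the weather is nice today"))
    print(accept_recording(prompt, "whether is ice today"))
```

Recordings that fall near the threshold are natural candidates to route to human validators, which is where any cost saving from partial automation would come from.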

14. [2412.11974] Emma-X: An Embodied Multimodal Action Model with Grounded Chain of Thought and Look-ahead Spatial Reasoning

Link: https://arxiv.org/abs/2412.11974

Authors: Qi Sun, Pengfei Hong, Tej Deep Pala, Vernon Toh, U-Xuan Tan, Deepanway Ghosal, Soujanya Poria

Categories: Robotics (cs.RO); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

Keywords: Traditional reinforcement learning-based, Traditional reinforcement, reinforcement learning-based robotic, learning-based robotic control, robotic control methods

Comments: [this https URL](https://github.com/declare-lab/Emma-X), [this https URL](https://huggingface.co/declare-lab/Emma-X)

Abstract: Traditional reinforcement learning-based robotic control methods are often task-specific and fail to generalize across diverse environments or unseen objects and instructions. Visual Language Models (VLMs) demonstrate strong scene understanding and planning capabilities but lack the ability to generate actionable policies tailored to specific robotic embodiments. To address this, Visual-Language-Action (VLA) models have emerged, yet they face challenges in long-horizon spatial reasoning and grounded task planning. In this work, we propose the Embodied Multimodal Action Model with Grounded Chain of Thought and Look-ahead Spatial Reasoning, Emma-X. Emma-X leverages our constructed hierarchical embodiment dataset based on BridgeV2, containing 60,000 robot manipulation trajectories auto-annotated with grounded task reasoning and spatial guidance. Additionally, we introduce a trajectory segmentation strategy based on gripper states and motion trajectories, which can help mitigate hallucination in grounding subtask reasoning generation. Experimental results demonstrate that Emma-X achieves superior performance over competitive baselines, particularly in real-world robotic tasks requiring spatial reasoning.
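
A simplified picture of segmenting a trajectory on gripper state: start a new segment whenever the gripper switches between open and closed, so each segment roughly corresponds to one subtask (reach, grasp and move, release). The paper also uses motion trajectories, which this sketch ignores; the `Step` layout and the binary `gripper_open` signal are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Step:
    position: tuple[float, float, float]  # end-effector xyz (hypothetical layout)
    gripper_open: bool


def segment_by_gripper(steps: list[Step]) -> list[list[Step]]:
    """Split a trajectory into segments at every gripper open/close transition."""
    segments: list[list[Step]] = []
    for step in steps:
        if segments and segments[-1][-1].gripper_open == step.gripper_open:
            segments[-1].append(step)  # same gripper state: extend current segment
        else:
            segments.append([step])    # state changed (or first step): new segment
    return segments


if __name__ == "__main__":
    states = [True, True, False, False, False, True]  # open, open, closed x3, open
    traj = [Step((0.1 * i, 0.0, 0.2), s) for i, s in enumerate(states)]
    print([len(seg) for seg in segment_by_gripper(traj)])  # [2, 3, 1]
```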

15. [2412.11970] DARWIN 1.5: Large Language Models as Materials Science Adapted Learners

Link: https://arxiv.org/abs/2412.11970

Authors: Tong Xie, Yuwei Wan, Yixuan Liu, Yuchen Zeng, Wenjie Zhang, Chunyu Kit, Dongzhan Zhou, Bram Hoex

Categories: Computation and Language (cs.CL)

Keywords: diverse search spaces, search spaces, aim to find, find components, components and structures

Abstract: Materials discovery and design aim to find components and structures with desirable properties over highly complex and diverse search spaces. Traditional solutions, such as high-throughput simulations and machine learning (ML), often rely on complex descriptors, which hinder generalizability and transferability across tasks. Moreover, these descriptors may deviate from experimental data due to inevitable defects and purity issues in the real world, which may reduce their effectiveness in practical applications. To address these challenges, we propose Darwin 1.5, an open-source large language model (LLM) tailored for materials science. By leveraging natural language as input, Darwin eliminates the need for task-specific descriptors and enables a flexible, unified approach to material property prediction and discovery. We employ a two-stage training strategy combining question-answering (QA) fine-tuning with multi-task learning (MTL) to inject domain-specific knowledge in various modalities and facilitate cross-task knowledge transfer. Through our strategic approach, we achieved a significant enhancement in the prediction accuracy of LLMs, with a maximum improvement of 60% compared to LLaMA-7B base models. It further outperforms traditional machine learning models on various tasks in material science, showcasing the potential of LLMs to provide a more versatile and scalable foundation model for materials discovery and design.

16. [2412.11965] Inferring Functionality of Attention Heads from their Parameters

Link: https://arxiv.org/abs/2412.11965

Authors: Amit Elhelo, Mor Geva

Categories: Computation and Language (cs.CL)

Keywords: large language models, building blocks, blocks of large, large language, Attention

Abstract: Attention heads are one of the building blocks of large language models (LLMs). Prior work on investigating their operation mostly focused on analyzing their behavior during inference for specific circuits or tasks. In this work, we seek a comprehensive mapping of the operations they implement in a model. We propose MAPS (Mapping Attention head ParameterS), an efficient framework that infers the functionality of attention heads from their parameters, without any model training or inference. We showcase the utility of MAPS for answering two types of questions: (a) given a predefined operation, mapping how strongly heads across the model implement it, and (b) given an attention head, inferring its salient functionality. Evaluating MAPS on 20 operations across 6 popular LLMs shows its estimations correlate with the head's outputs during inference and are causally linked to the model's predictions. Moreover, its mappings reveal attention heads of certain operations that were overlooked in previous studies, and valuable insights on function universality and architecture biases in LLMs. Next, we present an automatic pipeline and analysis that leverage MAPS to characterize the salient operations of a given head. Our pipeline produces plausible operation descriptions for most heads, as assessed by human judgment, while revealing diverse operations.

17. [2412.11940] The Impact of Token Granularity on the Predictive Power of Language Model Surprisal

Link: https://arxiv.org/abs/2412.11940

Authors: Byung-Doh Oh, William Schuler

Categories: Computation and Language (cs.CL)

Keywords: human readers, raises questions, token granularity, surprisal, language modeling influence

Abstract: Word-by-word language model surprisal is often used to model the incremental processing of human readers, which raises questions about how various choices in language modeling influence its predictive power. One factor that has been overlooked in cognitive modeling is the granularity of subword tokens, which explicitly encodes information about word length and frequency, and ultimately influences the quality of vector representations that are learned. This paper presents experiments that manipulate the token granularity and evaluate its impact on the ability of surprisal to account for processing difficulty of naturalistic text and garden-path constructions. Experiments with naturalistic reading times reveal a substantial influence of token granularity on surprisal, with tokens defined by a vocabulary size of 8,000 resulting in surprisal that is most predictive. In contrast, on garden-path constructions, language models trained on coarser-grained tokens generally assigned higher surprisal to critical regions, suggesting their increased sensitivity to syntax. Taken together, these results suggest a large role of token granularity on the quality of language model surprisal for cognitive modeling.
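
Surprisal is the negative log probability of a word given its context, -log2 P(word | context), measured here in bits. When a word is split into several subword tokens, a common convention (assumed in this sketch) is to sum the token surprisals, which follows from the chain rule: the word's probability is the product of its tokens' conditional probabilities. The probabilities below are made-up numbers; in practice they come from a language model's output distribution.

```python
import math


def token_surprisal(prob: float) -> float:
    """Surprisal in bits of a token with conditional probability `prob`."""
    return -math.log2(prob)


def word_surprisals(words: list[list[float]]) -> list[float]:
    """Sum subword surprisals per word.

    `words` holds, for each word, the conditional probabilities of its subword
    tokens, P(token_k | all previous tokens). Summing their surprisals equals
    -log2 of the word's probability.
    """
    return [sum(token_surprisal(p) for p in tokens) for tokens in words]


if __name__ == "__main__":
    # Hypothetical probabilities: "the" and "horse" are single tokens,
    # "raced" is split into "rac" + "ed".
    probs = [[0.5], [0.25], [0.125, 0.5]]
    print(word_surprisals(probs))  # [1.0, 2.0, 4.0]
```

Token granularity matters precisely because it changes how a word is split: with a coarser vocabulary "raced" might be a single token, and the model's estimate of its probability (and hence surprisal) can differ.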

18. [2412.11939] SEAGraph: Unveiling the Whole Story of Paper Review Comments

Link: https://arxiv.org/abs/2412.11939

Authors: Jianxiang Yu, Jiaqi Tan, Zichen Ding, Jiapeng Zhu, Jiahao Li, Yao Cheng, Qier Cui, Yunshi Lan, Xiang Li

Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Keywords: Peer review, peer review process, ensures the integrity, traditional peer review, cornerstone of scientific

Abstract: Peer review, as a cornerstone of scientific research, ensures the integrity and quality of scholarly work by providing authors with objective feedback for refinement. However, in the traditional peer review process, authors often receive vague or insufficiently detailed feedback, which provides limited assistance and leads to a more time-consuming review cycle. If authors can identify some specific weaknesses in their paper, they can not only address the reviewer's concerns but also improve their work. This raises the critical question of how to enhance authors' comprehension of review comments. In this paper, we present SEAGraph, a novel framework developed to clarify review comments by uncovering the underlying intentions behind them. We construct two types of graphs for each paper: the semantic mind graph, which captures the author's thought process, and the hierarchical background graph, which delineates the research domains related to the paper. A retrieval method is then designed to extract relevant content from both graphs, facilitating coherent explanations for the review comments. Extensive experiments show that SEAGraph excels in review comment understanding tasks, offering significant benefits to authors.

+ 19. 【2412.11937】Precise Length Control in Large Language Models

+ 链接:https://arxiv.org/abs/2412.11937

+ 作者:Bradley Butcher,Michael O'Keefe,James Titchener

+ 类目:Computation and Language (cs.CL)

+ 关键词:Large Language Models, Large Language, Language Models, production systems, powering applications

+ 备注:

+

+ 点击查看摘要

+ Abstract:Large Language Models (LLMs) are increasingly used in production systems, powering applications such as chatbots, summarization, and question answering. Despite their success, controlling the length of their response remains a significant challenge, particularly for tasks requiring structured outputs or specific levels of detail. In this work, we propose a method to adapt pre-trained decoder-only LLMs for precise control of response length. Our approach incorporates a secondary length-difference positional encoding (LDPE) into the input embeddings, which counts down to a user-set response termination length. Fine-tuning with LDPE allows the model to learn to terminate responses coherently at the desired length, achieving mean token errors of less than 3 tokens. We also introduce Max New Tokens++, an extension that enables flexible upper-bound length control, rather than an exact target. Experimental results on tasks such as question answering and document summarization demonstrate that our method enables precise length control without compromising response quality.

+ 20. 【2412.11936】A Survey of Mathematical Reasoning in the Era of Multimodal Large Language Model: Benchmark, Method & Challenges

+ Link: https://arxiv.org/abs/2412.11936

+ Authors: Yibo Yan, Jiamin Su, Jianxiang He, Fangteng Fu, Xu Zheng, Yuanhuiyi Lyu, Kun Wang, Shen Wang, Qingsong Wen, Xuming Hu

+ Categories: Computation and Language (cs.CL)

+ Keywords: Mathematical reasoning, large language models, human cognition, scientific advancements, core aspect

+ Comments:

+ Abstract:Mathematical reasoning, a core aspect of human cognition, is vital across many domains, from educational problem-solving to scientific advancements. As artificial general intelligence (AGI) progresses, integrating large language models (LLMs) with mathematical reasoning tasks is becoming increasingly significant. This survey provides the first comprehensive analysis of mathematical reasoning in the era of multimodal large language models (MLLMs). We review over 200 studies published since 2021, and examine the state-of-the-art developments in Math-LLMs, with a focus on multimodal settings. We categorize the field into three dimensions: benchmarks, methodologies, and challenges. In particular, we explore multimodal mathematical reasoning pipeline, as well as the role of (M)LLMs and the associated methodologies. Finally, we identify five major challenges hindering the realization of AGI in this domain, offering insights into the future direction for enhancing multimodal reasoning capabilities. This survey serves as a critical resource for the research community in advancing the capabilities of LLMs to tackle complex multimodal reasoning tasks.

+ 21. 【2412.11927】Explainable Procedural Mistake Detection

+ Link: https://arxiv.org/abs/2412.11927

+ Authors: Shane Storks, Itamar Bar-Yossef, Yayuan Li, Zheyuan Zhang, Jason J. Corso, Joyce Chai

+ Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

+ Keywords: recently attracted attention, research community, Automated task guidance, guidance has recently, recently attracted

+ Comments:

+ Abstract:Automated task guidance has recently attracted attention from the AI research community. Procedural mistake detection (PMD) is a challenging sub-problem of classifying whether a human user (observed through egocentric video) has successfully executed the task at hand (specified by a procedural text). Despite significant efforts in building resources and models for PMD, machine performance remains nonviable, and the reasoning processes underlying this performance are opaque. As such, we recast PMD to an explanatory self-dialog of questions and answers, which serve as evidence for a decision. As this reformulation enables an unprecedented transparency, we leverage a fine-tuned natural language inference (NLI) model to formulate two automated coherence metrics for generated explanations. Our results show that while open-source VLMs struggle with this task off-the-shelf, their accuracy, coherence, and dialog efficiency can be vastly improved by incorporating these coherence metrics into common inference and fine-tuning methods. Furthermore, our multi-faceted metrics can visualize common outcomes at a glance, highlighting areas for improvement.

+ 22. 【2412.11923】PICLe: Pseudo-Annotations for In-Context Learning in Low-Resource Named Entity Detection

+ Link: https://arxiv.org/abs/2412.11923

+ Authors: Sepideh Mamooler, Syrielle Montariol, Alexander Mathis, Antoine Bosselut

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: Large Language Models, enables Large Language, Language Models, Large Language, enables Large

+ Comments: Preprint

+ Abstract:In-context learning (ICL) enables Large Language Models (LLMs) to perform tasks using few demonstrations, facilitating task adaptation when labeled examples are hard to obtain. However, ICL is sensitive to the choice of demonstrations, and it remains unclear which demonstration attributes enable in-context generalization. In this work, we conduct a perturbation study of in-context demonstrations for low-resource Named Entity Detection (NED). Our surprising finding is that in-context demonstrations with partially correct annotated entity mentions can be as effective for task transfer as fully correct demonstrations. Based on our findings, we propose Pseudo-annotated In-Context Learning (PICLe), a framework for in-context learning with noisy, pseudo-annotated demonstrations. PICLe leverages LLMs to annotate many demonstrations in a zero-shot first pass. We then cluster these synthetic demonstrations, sample specific sets of in-context demonstrations from each cluster, and predict entity mentions using each set independently. Finally, we use self-verification to select the final set of entity mentions. We evaluate PICLe on five biomedical NED datasets and show that, with zero human annotation, PICLe outperforms ICL in low-resource settings where limited gold examples can be used as in-context demonstrations.
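
A minimal sketch of the later PICLe stages. It assumes the pseudo-annotated demonstrations have already been clustered (cluster ids are given) and that `predict` wraps an LLM call returning entity mentions. The final vote threshold is a simple stand-in for the paper's self-verification step, which the abstract does not detail. All names here are hypothetical.

```python
import random
from collections import Counter, defaultdict


def build_demo_sets(demos, cluster_ids, k, seed=0):
    """Return one in-context demonstration set per cluster (up to k demos each)."""
    by_cluster = defaultdict(list)
    for demo, cid in zip(demos, cluster_ids):
        by_cluster[cid].append(demo)
    rng = random.Random(seed)
    return [
        rng.sample(members, min(k, len(members)))
        for _, members in sorted(by_cluster.items())
    ]


def predict_with_sets(sentence, demo_sets, predict, min_votes):
    """Query `predict` once per demo set and keep mentions found by >= min_votes sets.

    The vote threshold is a simplified stand-in for self-verification.
    """
    votes = Counter()
    for demo_set in demo_sets:
        votes.update(set(predict(sentence, demo_set)))
    return {mention for mention, n in votes.items() if n >= min_votes}
```

Requiring agreement across independently prompted sets is one way for a mention found by only a single noisy set to be outvoted.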

+ 23. 【2412.11919】RetroLLM: Empowering Large Language Models to Retrieve Fine-grained Evidence within Generation

+ Link: https://arxiv.org/abs/2412.11919

+ Authors: Xiaoxi Li, Jiajie Jin, Yujia Zhou, Yongkang Wu, Zhonghua Li, Qi Ye, Zhicheng Dou

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR)

+ Keywords: Large language models, exhibit remarkable generative, remarkable generative capabilities, Large language, language models

+ Comments:

+ Abstract:Large language models (LLMs) exhibit remarkable generative capabilities but often suffer from hallucinations. Retrieval-augmented generation (RAG) offers an effective solution by incorporating external knowledge, but existing methods still face several limitations: additional deployment costs of separate retrievers, redundant input tokens from retrieved text chunks, and the lack of joint optimization of retrieval and generation. To address these issues, we propose RetroLLM, a unified framework that integrates retrieval and generation into a single, cohesive process, enabling LLMs to directly generate fine-grained evidence from the corpus with constrained decoding. Moreover, to mitigate false pruning in the process of constrained evidence generation, we introduce (1) hierarchical FM-Index constraints, which generate corpus-constrained clues to identify a subset of relevant documents before evidence generation, reducing irrelevant decoding space; and (2) a forward-looking constrained decoding strategy, which considers the relevance of future sequences to improve evidence accuracy. Extensive experiments on five open-domain QA datasets demonstrate RetroLLM's superior performance across both in-domain and out-of-domain tasks. The code is available at this https URL.
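
RetroLLM constrains decoding with a hierarchical FM-Index so that generated evidence is always copied from the corpus. The sketch below shows only the underlying constraint, under two assumptions: a naive linear scan replaces the FM-Index, and a toy `score` function replaces the LLM. It omits clue generation and forward-looking scoring, so it is an illustration of corpus-constrained decoding, not the paper's decoder.

```python
def _contains(doc_tokens, pattern):
    """True if `pattern` occurs as a contiguous run inside `doc_tokens`."""
    m = len(pattern)
    return any(doc_tokens[i:i + m] == pattern for i in range(len(doc_tokens) - m + 1))


def allowed_next_tokens(prefix_tokens, vocab, corpus_docs):
    """Tokens t such that prefix + [t] still appears verbatim in some document."""
    return [
        tok for tok in vocab
        if any(_contains(doc, prefix_tokens + [tok]) for doc in corpus_docs)
    ]


def constrained_greedy(score, vocab, corpus_docs, max_len):
    """Greedy decoding restricted to continuations that exist in the corpus."""
    out = []
    for _ in range(max_len):
        options = allowed_next_tokens(out, vocab, corpus_docs)
        if not options:  # the evidence span cannot be extended any further
            break
        out.append(max(options, key=lambda tok: score(out, tok)))
    return out
```

A high-scoring token that never occurs in the corpus (for example `"flew"` with corpus `[["the", "cat", "sat"], ["the", "dog", "ran"]]`) can never be emitted. An FM-Index answers the same "does this extension occur?" query without scanning every document.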

+ 24. 【2412.11912】CharacterBench: Benchmarking Character Customization of Large Language Models

+ Link: https://arxiv.org/abs/2412.11912

+ Authors: Jinfeng Zhou, Yongkang Huang, Bosi Wen, Guanqun Bi, Yuxuan Chen, Pei Ke, Zhuang Chen, Xiyao Xiao, Libiao Peng, Kuntian Tang, Rongsheng Zhang, Le Zhang, Tangjie Lv, Zhipeng Hu, Hongning Wang, Minlie Huang

+ Categories: Computation and Language (cs.CL)

+ Keywords: Character-based dialogue, freely customize characters, aka role-playing, relies on LLMs, users to freely

+ Comments: AAAI 2025

+ Abstract:Character-based dialogue (aka role-playing) enables users to freely customize characters for interaction, which often relies on LLMs, raising the need to evaluate LLMs' character customization capability. However, existing benchmarks fail to ensure a robust evaluation as they often only involve a single character category or evaluate limited dimensions. Moreover, the sparsity of character features in responses makes feature-focused generative evaluation both ineffective and inefficient. To address these issues, we propose CharacterBench, the largest bilingual generative benchmark, with 22,859 human-annotated samples covering 3,956 characters from 25 detailed character categories. We define 11 dimensions of 6 aspects, classified as sparse and dense dimensions based on whether character features evaluated by specific dimensions manifest in each response. We enable effective and efficient evaluation by crafting tailored queries for each dimension to induce characters' responses related to specific dimensions. Further, we develop CharacterJudge model for cost-effective and stable evaluations. Experiments show its superiority over SOTA automatic judges (e.g., GPT-4) and our benchmark's potential to optimize LLMs' character customization. Our repository is at this https URL.

+ 25. 【2412.11908】Can Language Models Rival Mathematics Students? Evaluating Mathematical Reasoning through Textual Manipulation and Human Experiments

+ Link: https://arxiv.org/abs/2412.11908

+ Authors: Andrii Nikolaiev, Yiannos Stathopoulos, Simone Teufel

+ Categories: Computation and Language (cs.CL)

+ Keywords: recent large language, large language models, ability of recent, recent large, large language

+ Comments:

+ Abstract:In this paper, we examine the ability of recent large language models (LLMs) to solve mathematical problems in combinatorics. We compare models LLaMA-2, LLaMA-3.1, GPT-4, and Mixtral against each other and against human pupils and undergraduates with prior experience in mathematical olympiads. To facilitate these comparisons we introduce the Combi-Puzzles dataset, which contains 125 problem variants based on 25 combinatorial reasoning problems. Each problem is presented in one of five distinct forms, created by systematically manipulating the problem statements through adversarial additions, numeric parameter changes, and linguistic obfuscation. Our variations preserve the mathematical core and are designed to measure the generalisability of LLM problem-solving abilities, while also increasing confidence that problems are submitted to LLMs in forms that have not been seen as training instances. We found that a model based on GPT-4 outperformed all other models in producing correct responses, and performed significantly better in the mathematical variation of the problems than humans. We also found that modifications to problem statements significantly impact the LLM's performance, while human performance remains unaffected.

+ 26. 【2412.11896】Classification of Spontaneous and Scripted Speech for Multilingual Audio

+ Link: https://arxiv.org/abs/2412.11896

+ Authors: Shahar Elisha, Andrew McDowell, Mariano Beguerisse-Díaz, Emmanouil Benetos

+ Categories: Computation and Language (cs.CL); Sound (cs.SD); Audio and Speech Processing (eess.AS)

+ Keywords: speech processing research, speech styles influence, styles influence speech, influence speech processing, processing research

+ Comments: Accepted to IEEE Spoken Language Technology Workshop 2024

+ Abstract:Distinguishing scripted from spontaneous speech is an essential tool for better understanding how speech styles influence speech processing research. It can also improve recommendation systems and discovery experiences for media users through better segmentation of large recorded speech catalogues. This paper addresses the challenge of building a classifier that generalises well across different formats and languages. We systematically evaluate models ranging from traditional, handcrafted acoustic and prosodic features to advanced audio transformers, utilising a large, multilingual proprietary podcast dataset for training and validation. We break down the performance of each model across 11 language groups to evaluate cross-lingual biases. Our experimental analysis extends to publicly available datasets to assess the models' generalisability to non-podcast domains. Our results indicate that transformer-based models consistently outperform traditional feature-based techniques, achieving state-of-the-art performance in distinguishing between scripted and spontaneous speech across various languages.

+ 27. 【2412.11878】Using Instruction-Tuned Large Language Models to Identify Indicators of Vulnerability in Police Incident Narratives

+ Link: https://arxiv.org/abs/2412.11878

+ Authors: Sam Relins, Daniel Birks, Charlie Lloyd

+ Categories: Computation and Language (cs.CL)

+ Keywords: routinely collected unstructured, describes police-public interactions, instruction tuned large, tuned large language, Boston Police Department

+ Comments: 33 pages, 6 figures. Submitted to Journal of Quantitative Criminology

+ Abstract:Objectives: Compare qualitative coding of instruction tuned large language models (IT-LLMs) against human coders in classifying the presence or absence of vulnerability in routinely collected unstructured text that describes police-public interactions. Evaluate potential bias in IT-LLM codings. Methods: Analyzing publicly available text narratives of police-public interactions recorded by Boston Police Department, we provide humans and IT-LLMs with qualitative labelling codebooks and compare labels generated by both, seeking to identify situations associated with (i) mental ill health; (ii) substance misuse; (iii) alcohol dependence; and (iv) homelessness. We explore multiple prompting strategies and model sizes, and the variability of labels generated by repeated prompts. Additionally, to explore model bias, we utilize counterfactual methods to assess the impact of two protected characteristics - race and gender - on IT-LLM classification. Results: Results demonstrate that IT-LLMs can effectively support human qualitative coding of police incident narratives. While there is some disagreement between LLM and human generated labels, IT-LLMs are highly effective at screening narratives where no vulnerabilities are present, potentially vastly reducing the requirement for human coding. Counterfactual analyses demonstrate that manipulations to both gender and race of individuals described in narratives have very limited effects on IT-LLM classifications beyond those expected by chance. Conclusions: IT-LLMs offer effective means to augment human qualitative coding in a way that requires much lower levels of resource to analyze large unstructured datasets. Moreover, they encourage specificity in qualitative coding, promote transparency, and provide the opportunity for more standardized, replicable approaches to analyzing large free-text police data sources.
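
The counterfactual bias check can be pictured as: rewrite each narrative with a protected attribute swapped, re-classify it, and count how often the label changes. This is a minimal sketch assuming simple whole-word term swaps (real race and gender counterfactuals need more careful rewriting) and a generic `classify` callable. The helper names are hypothetical, and the paper's exact procedure may differ.

```python
import re


def swap_terms(text, mapping):
    """Swap whole words in a single pass (keys must be lowercase), keeping initial capitals."""
    pattern = re.compile(
        r"\b(" + "|".join(map(re.escape, mapping)) + r")\b", re.IGNORECASE
    )

    def repl(match):
        word = match.group(0)
        swapped = mapping[word.lower()]
        return swapped.capitalize() if word[0].isupper() else swapped

    return pattern.sub(repl, text)


def flip_rate(narratives, classify, mapping):
    """Fraction of narratives whose label changes after the counterfactual swap."""
    flips = sum(
        1 for text in narratives
        if classify(text) != classify(swap_terms(text, mapping))
    )
    return flips / len(narratives)
```

Doing the swap in one regex pass matters: applying `he -> she` and then `she -> he` sequentially would undo the first substitution. A flip rate near what chance would produce is the kind of result the abstract reports.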

+ 28. 【2412.11863】GeoX: Geometric Problem Solving Through Unified Formalized Vision-Language Pre-training

+ Link: https://arxiv.org/abs/2412.11863

+ Authors: Renqiu Xia, Mingsheng Li, Hancheng Ye, Wenjie Wu, Hongbin Zhou, Jiakang Yuan, Tianshuo Peng, Xinyu Cai, Xiangchao Yan, Bin Wang, Conghui He, Botian Shi, Tao Chen, Junchi Yan, Bo Zhang

+ Categories: Computer Vision and Pattern Recognition (cs.CV); Computation and Language (cs.CL)

+ Keywords: Geometry Problem Solving, automatic Geometry Problem, Multi-modal Large Language, Large Language Models, automatic Geometry

+ Comments: Our code is available at [this https URL](https://github.com/UniModal4Reasoning/GeoX)

+ Abstract:Despite their proficiency in general tasks, Multi-modal Large Language Models (MLLMs) struggle with automatic Geometry Problem Solving (GPS), which demands understanding diagrams, interpreting symbols, and performing complex reasoning. This limitation arises from their pre-training on natural images and texts, along with the lack of automated verification in the problem-solving process. Besides, current geometric specialists are limited by their task-specific designs, making them less effective for broader geometric problems. To this end, we present GeoX, a multi-modal large model focusing on geometric understanding and reasoning tasks. Given the significant differences between geometric diagram-symbol and natural image-text, we introduce unimodal pre-training to develop a diagram encoder and symbol decoder, enhancing the understanding of geometric images and corpora. Furthermore, we introduce geometry-language alignment, an effective pre-training paradigm that bridges the modality gap between unimodal geometric experts. We propose a Generator-And-Sampler Transformer (GS-Former) to generate discriminative queries and eliminate uninformative representations from unevenly distributed geometric signals. Finally, GeoX benefits from visual instruction tuning, empowering it to take geometric images and questions as input and generate verifiable solutions. Experiments show that GeoX outperforms both generalists and geometric specialists on publicly recognized benchmarks, such as GeoQA, UniGeo, Geometry3K, and PGPS9k.

+ 29. 【2412.11851】A Benchmark and Robustness Study of In-Context-Learning with Large Language Models in Music Entity Detection

+ Link: https://arxiv.org/abs/2412.11851

+ Authors: Simon Hachmeier, Robert Jäschke

+ Categories: Computation and Language (cs.CL); Multimedia (cs.MM)

+ Keywords: Detecting music entities, processing music search, music search queries, analyzing music consumption, Detecting music

+ Comments:

+ Abstract:Detecting music entities such as song titles or artist names is a useful application to help use cases like processing music search queries or analyzing music consumption on the web. Recent approaches incorporate smaller language models (SLMs) like BERT and achieve high results. However, further research indicates a high influence of entity exposure during pre-training on the performance of the models. With the advent of large language models (LLMs), these outperform SLMs in a variety of downstream tasks. However, researchers are still divided on whether this is applicable to tasks like entity detection in texts due to issues like hallucination. In this paper, we provide a novel dataset of user-generated metadata and conduct a benchmark and a robustness study using recent LLMs with in-context-learning (ICL). Our results indicate that LLMs in the ICL setting yield higher performance than SLMs. We further uncover the large impact of entity exposure on the best performing LLM in our study.

+ 30. 【2412.11835】Improved Models for Media Bias Detection and Subcategorization

+ Link: https://arxiv.org/abs/2412.11835

+ Authors: Tim Menzner, Jochen L. Leidner

+ Categories: Computation and Language (cs.CL)

+ Keywords: present improved models, English news articles, bias in English, present improved, granular detection

+ Comments:

+ Abstract:We present improved models for the granular detection and sub-classification of news media bias in English news articles. We compare the performance of zero-shot versus fine-tuned large pre-trained neural transformer language models, explore how the level of detail of the classes affects performance on a novel taxonomy of 27 news bias-types, and demonstrate how synthetically generated example data can be used to improve quality.

+ 31. 【2412.11834】Wonderful Matrices: Combining for a More Efficient and Effective Foundation Model Architecture

+ Link: https://arxiv.org/abs/2412.11834

+ Authors: Jingze Shi, Bingheng Wu

+ Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

+ Keywords: combining sequence transformation, state space duality, combining sequence, combining state transformation, sequence transformation

+ Comments: The code is open-sourced at [this https URL](https://github.com/LoserCheems/Doge)

+ Abstract:In order to make the foundation model more efficient and effective, our idea is to combine sequence transformation and state transformation. First, we prove the availability of rotary position embedding in the state space duality algorithm, which reduces the perplexity of the hybrid quadratic causal self-attention and state space duality by more than 4%, to ensure that the combining sequence transformation unifies position encoding. Second, we propose dynamic mask attention, which maintains 100% accuracy in the more challenging multi-query associative recall task, improving by more than 150% compared to quadratic causal self-attention and state space duality, to ensure that the combining sequence transformation selectively filters relevant information. Third, we design cross domain mixture of experts, which makes the computational speed of expert retrieval with more than 1024 experts 8 to 10 times faster than the mixture of experts, to ensure that the combining state transformation can quickly retrieve from the mixture. Finally, we summarize these matrix algorithms that can form the foundation model: Wonderful Matrices, which can be a competitor to popular model architectures.
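
The first component builds on rotary position embedding (RoPE). As background, here is a minimal sketch of standard RoPE applied to a single query or key vector (pure Python, with base 10000 as in common implementations). It does not reproduce how the paper integrates RoPE into the state space duality algorithm.

```python
import math


def rope(x, pos, base=10000.0):
    """Rotate consecutive pairs (x[2i], x[2i+1]) by the angle pos * base**(-2i/d)."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out = [0.0] * d
    for i in range(0, d, 2):  # i is the even element index, i.e. 2 * pair index
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))
```

The property that makes RoPE attractive is that `dot(rope(q, m), rope(k, n))` depends only on the offset `m - n`. Shifting both positions by the same amount therefore leaves the attention score unchanged, up to floating-point error.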

+ 32. 【2412.11831】Are You Doubtful? Oh, It Might Be Difficult Then! Exploring the Use of Model Uncertainty for Question Difficulty Estimation

+ Link: https://arxiv.org/abs/2412.11831

+ Authors: Leonidas Zotos, Hedderik van Rijn, Malvina Nissim

+ Categories: Computation and Language (cs.CL)

+ Keywords: assess learning progress, educational setting, learning progress, commonly used strategy, strategy to assess

+ Comments: 14 pages, 7 figures

+ Abstract:In an educational setting, an estimate of the difficulty of multiple-choice questions (MCQs), a commonly used strategy to assess learning progress, constitutes very useful information for both teachers and students. Since human assessment is costly from multiple points of view, automatic approaches to MCQ item difficulty estimation are investigated, yielding however mixed success until now. Our approach to this problem takes a different angle from previous work: asking various Large Language Models to tackle the questions included in two different MCQ datasets, we leverage model uncertainty to estimate item difficulty. By using both model uncertainty features as well as textual features in a Random Forest regressor, we show that uncertainty features contribute substantially to difficulty prediction, where difficulty is inversely proportional to the number of students who can correctly answer a question. In addition to showing the value of our approach, we also observe that our model achieves state-of-the-art results on the BEA publicly available dataset.
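
Model uncertainty on a multiple-choice item can be turned into features in several ways. A minimal sketch, assuming you can read per-option logits from each model: apply a softmax, then take the top probability, the margin to the runner-up, and the entropy. These feature names are illustrative, since the abstract does not list the exact features used. The resulting dictionaries, plus textual features, could then be fed to a regressor such as scikit-learn's `RandomForestRegressor`.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def uncertainty_features(option_logits):
    """Uncertainty features for one model's answer distribution over MCQ options."""
    probs = softmax(option_logits)
    ranked = sorted(probs, reverse=True)
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    margin = ranked[0] - ranked[1] if len(ranked) > 1 else ranked[0]
    return {"max_prob": ranked[0], "margin": margin, "entropy": entropy}
```

Under the paper's hypothesis, higher entropy or a lower margin would point to a harder item.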

+ 33. 【2412.11823】Advancements and Challenges in Bangla Question Answering Models: A Comprehensive Review

+ Link: https://arxiv.org/abs/2412.11823

+ Authors: Md Iftekhar Islam Tashik, Abdullah Khondoker, Enam Ahmed Taufik, Antara Firoz Parsa, S M Ishtiak Mahmud

+ Categories: Computation and Language (cs.CL)

+ Keywords: Bangla Question Answering, Natural Language Processing, Question Answering, experienced notable progress, Language Processing

+ Comments:

+ Abstract:The domain of Natural Language Processing (NLP) has experienced notable progress in the evolution of Bangla Question Answering (QA) systems. This paper presents a comprehensive review of seven research articles that contribute to the progress in this domain. These research studies explore different aspects of creating question-answering systems for the Bangla language. They cover areas like collecting data, preparing it for analysis, designing models, conducting experiments, and interpreting results. The papers introduce innovative methods like using LSTM-based models with attention mechanisms, context-based QA systems, and deep learning techniques based on prior knowledge. However, despite the progress made, several challenges remain, including the lack of well-annotated data, the absence of high-quality reading comprehension datasets, and difficulties in understanding the meaning of words in context. Bangla QA models' precision and applicability are constrained by these challenges. This review emphasizes the significance of these research contributions by highlighting the developments achieved in creating Bangla QA systems as well as the ongoing effort required to get past roadblocks and improve the performance of these systems for actual language comprehension tasks.

+ 34. 【2412.11814】EventSum: A Large-Scale Event-Centric Summarization Dataset for Chinese Multi-News Documents

+ Link: https://arxiv.org/abs/2412.11814

+ Authors: Mengna Zhu, Kaisheng Zeng, Mao Wang, Kaiming Xiao, Lei Hou, Hongbin Huang, Juanzi Li

+ Categories: Computation and Language (cs.CL)

+ Keywords: large-scale sports events, real life, evolve continuously, continuously over time, major disasters

+ Comments: Extended version for paper accepted to AAAI 2025

+ Abstract:In real life, many dynamic events, such as major disasters and large-scale sports events, evolve continuously over time. Obtaining an overview of these events can help people quickly understand the situation and respond more effectively. This is challenging because the key information of the event is often scattered across multiple documents, involving complex event knowledge understanding and reasoning, which is under-explored in previous work. Therefore, we propose the Event-Centric Multi-Document Summarization (ECS) task, which aims to generate concise and comprehensive summaries of a given event based on multiple related news documents. Based on this, we constructed the EventSum dataset from Baidu Baike entries, with extensive human annotation, to facilitate relevant research. It is the first large scale Chinese multi-document summarization dataset, containing 5,100 events and a total of 57,984 news documents, with an average of 11.4 input news documents and 13,471 characters per event. To ensure data quality and mitigate potential data leakage, we adopted a multi-stage annotation approach for manually labeling the test set. Given the complexity of event-related information, existing metrics struggle to comprehensively assess the quality of generated summaries. We designed specific metrics including Event Recall, Argument Recall, Causal Recall, and Temporal Recall along with corresponding calculation methods for evaluation. We conducted comprehensive experiments on EventSum to evaluate the performance of advanced long-context Large Language Models (LLMs) on this task. Our experimental results indicate that: 1) The event-centric multi-document summarization task remains challenging for existing long-context LLMs; 2) The recall metrics we designed are crucial for evaluating the comprehensiveness of the summary information.
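
All four proposed metrics are recall-style: the share of reference items (events, arguments, causal links, temporal relations) that the generated summary covers. The paper defines its own calculation methods. The sketch below instead assumes a pluggable `matches` function and defaults to case-insensitive substring matching, which is far cruder than what a real evaluation would use. `coverage_recall` is a hypothetical name.

```python
def coverage_recall(reference_items, summary, matches=None):
    """Share of reference items that the summary covers, according to `matches`."""
    if matches is None:
        def matches(item, text):
            return item.lower() in text.lower()
    if not reference_items:
        return 0.0  # convention chosen here; recall is undefined with no references
    hits = sum(1 for item in reference_items if matches(item, summary))
    return hits / len(reference_items)
```

For example, with references `["earthquake", "rescue teams", "aftershock"]` and a summary that mentions only the first two, the recall is 2/3.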

+ 35. 【2412.11803】UAlign: Leveraging Uncertainty Estimations for Factuality Alignment on Large Language Models

+ Link: https://arxiv.org/abs/2412.11803

+ Authors: Boyang Xue, Fei Mi, Qi Zhu, Hongru Wang, Rui Wang, Sheng Wang, Erxin Yu, Xuming Hu, Kam-Fai Wong

+ Categories: Computation and Language (cs.CL)

+ Keywords: Large Language Models, Large Language, demonstrating impressive capabilities, Language Models, knowledge boundaries

+ Comments:

+ Abstract:Despite demonstrating impressive capabilities, Large Language Models (LLMs) still often struggle to accurately express the factual knowledge they possess, especially in cases where the LLMs' knowledge boundaries are ambiguous. To improve LLMs' factual expressions, we propose the UAlign framework, which leverages Uncertainty estimations to represent knowledge boundaries, and then explicitly incorporates these representations as input features into prompts for LLMs to Align with factual knowledge. First, we prepare the dataset on knowledge question-answering (QA) samples by calculating two uncertainty estimations, including confidence score and semantic entropy, to represent the knowledge boundaries for LLMs. Subsequently, using the prepared dataset, we train a reward model that incorporates uncertainty estimations and then employ the Proximal Policy Optimization (PPO) algorithm for factuality alignment on LLMs. Experimental results indicate that, by integrating uncertainty representations in LLM alignment, the proposed UAlign can significantly enhance the LLMs' capacities to confidently answer known questions and refuse unknown questions on both in-domain and out-of-domain tasks, showing reliability improvements and good generalizability over various prompt- and training-based baselines.
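
UAlign's two uncertainty signals can be sketched from several sampled answers to the same question. Semantic entropy groups answers that mean the same thing and takes the entropy over the groups. Here, equivalence is approximated by normalized string equality (the usual formulation uses a bidirectional entailment model), and confidence is assumed to be the share of samples in the largest group. Both choices are illustrative assumptions, not UAlign's exact definitions.

```python
import math


def normalize(answer):
    return " ".join(answer.lower().strip().rstrip(".").split())


def semantic_clusters(answers, equivalent=None):
    """Greedy clustering: each answer joins the first cluster it is equivalent to."""
    if equivalent is None:
        def equivalent(a, b):
            return normalize(a) == normalize(b)
    clusters = []
    for answer in answers:
        for cluster in clusters:
            if equivalent(cluster[0], answer):
                cluster.append(answer)
                break
        else:  # no existing cluster matched
            clusters.append([answer])
    return clusters


def semantic_entropy(answers, equivalent=None):
    n = len(answers)
    sizes = [len(c) for c in semantic_clusters(answers, equivalent)]
    return -sum((s / n) * math.log(s / n) for s in sizes)


def confidence(answers, equivalent=None):
    sizes = [len(c) for c in semantic_clusters(answers, equivalent)]
    return max(sizes) / len(answers)
```

For samples `["Paris", "paris.", " Paris ", "Lyon"]` the clusters have sizes 3 and 1, so the confidence is 0.75 and the semantic entropy is low but nonzero. If every sample agrees, the entropy is 0.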

+ 36. 【2412.11795】ProsodyFM: Unsupervised Phrasing and Intonation Control for Intelligible Speech Synthesis

+ Link: https://arxiv.org/abs/2412.11795

+ Authors: Xiangheng He, Junjie Chen, Zixing Zhang, Björn W. Schuller

+ Categories: Computation and Language (cs.CL); Sound (cs.SD); Audio and Speech Processing (eess.AS)

+ Keywords: meaning of words, intonation, rich information, literal meaning, Terminal Intonation Encoder

+ Comments: Accepted by AAAI 2025

+ Abstract:Prosody contains rich information beyond the literal meaning of words, which is crucial for the intelligibility of speech. Current models still fall short in phrasing and intonation; they not only miss or misplace breaks when synthesizing long sentences with complex structures but also produce unnatural intonation. We propose ProsodyFM, a prosody-aware text-to-speech synthesis (TTS) model with a flow-matching (FM) backbone that aims to enhance the phrasing and intonation aspects of prosody. ProsodyFM introduces two key components: a Phrase Break Encoder to capture initial phrase break locations, followed by a Duration Predictor for the flexible adjustment of break durations; and a Terminal Intonation Encoder which integrates a set of intonation shape tokens combined with a novel Pitch Processor for more robust modeling of human-perceived intonation change. ProsodyFM is trained with no explicit prosodic labels and yet can uncover a broad spectrum of break durations and intonation patterns. Experimental results demonstrate that ProsodyFM can effectively improve the phrasing and intonation aspects of prosody, thereby enhancing the overall intelligibility compared to four state-of-the-art (SOTA) models. Out-of-distribution experiments show that this prosody improvement can further bring ProsodyFM superior generalizability for unseen complex sentences and speakers. Our case study intuitively illustrates the powerful and fine-grained controllability of ProsodyFM over phrasing and intonation.

+ 37. 【2412.11787】A Method for Detecting Legal Article Competition for Korean Criminal Law Using a Case-augmented Mention Graph

+ Link: https://arxiv.org/abs/2412.11787

+ Authors: Seonho An, Young Yik Rhim, Min-Soo Kim

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR); Machine Learning (cs.LG)

+ Keywords: increasingly complex, growing more intricate, making it progressively, social systems, systems become increasingly

+ Comments: under review

+ Abstract:As social systems become increasingly complex, legal articles are also growing more intricate, making it progressively harder for humans to identify any potential competitions among them, particularly when drafting new laws or applying existing laws. Despite this challenge, no method for detecting such competitions has been proposed so far. In this paper, we propose a new legal AI task called Legal Article Competition Detection (LACD), which aims to identify competing articles within a given law. Our novel retrieval method, CAM-Re2, outperforms existing relevant methods, reducing false positives by 20.8% and false negatives by 8.3%, while achieving a 98.2% improvement in precision@5, for the LACD task. We release our code at this https URL.
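
For reference, the retrieval metrics quoted above can be computed as follows for a single query article. This is a generic sketch (precision@k divided by k, as is conventional), not the paper's evaluation script. The article identifiers used below are made up.

```python
def precision_at_k(ranked, relevant, k=5):
    """Share of the top-k retrieved articles that truly compete with the query article."""
    if k <= 0:
        raise ValueError("k must be positive")
    return sum(1 for article in ranked[:k] if article in relevant) / k


def false_positives_negatives(predicted, relevant):
    """Counts of wrongly flagged articles and of competing articles that were missed."""
    predicted, relevant = set(predicted), set(relevant)
    return len(predicted - relevant), len(relevant - predicted)
```

For example, `precision_at_k(["Art. 1", "Art. 3", "Art. 7", "Art. 9", "Art. 2"], {"Art. 3", "Art. 9"})` gives `0.4`, since two of the top five retrieved articles are true competitors.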

+ 38. 【2412.11763】QUENCH: Measuring the gap between Indic and Non-Indic Contextual General Reasoning in LLMs

+ Link: https://arxiv.org/abs/2412.11763

+ Authors: Mohammad Aflah Khan, Neemesh Yadav, Sarah Masud, Md. Shad Akhtar

+ Categories: Computation and Language (cs.CL)

+ Keywords: English Quizzing Benchmark, large language models, advanced benchmarking systems, rise of large, large language

+ Comments: 17 Pages, 6 Figures, 8 Tables, COLING 2025

+ Abstract:The rise of large language models (LLMs) has created a need for advanced benchmarking systems beyond traditional setups. To this end, we introduce QUENCH, a novel text-based English Quizzing Benchmark manually curated and transcribed from YouTube quiz videos. QUENCH possesses masked entities and rationales for the LLMs to predict via generation. At the intersection of geographical context and common sense reasoning, QUENCH helps assess world knowledge and deduction capabilities of LLMs via a zero-shot, open-domain quizzing setup. We perform an extensive evaluation on 7 LLMs and 4 metrics, investigating the influence of model size, prompting style, geographical context, and gold-labeled rationale generation. The benchmarking concludes with an analysis of the errors to which LLMs are prone.


+ 39. 【2412.11757】SCITAT: A Question Answering Benchmark for Scientific Tables and Text Covering Diverse Reasoning Types

+ Link: https://arxiv.org/abs/2412.11757

+ Authors: Xuanliang Zhang,Dingzirui Wang,Baoxin Wang,Longxu Dou,Xinyuan Lu,Keyan Xu,Dayong Wu,Qingfu Zhu,Wanxiang Che

+ Categories: Computation and Language (cs.CL)

+ Keywords: important task aimed, reasoning types, Scientific question answering, tables and text, current SQA datasets

+ Comments:


+ Abstract:Scientific question answering (SQA) is an important task aimed at answering questions based on papers. However, current SQA datasets have limited reasoning types and neglect the relevance between tables and text, creating a significant gap with real scenarios. To address these challenges, we propose a QA benchmark for scientific tables and text with diverse reasoning types (SciTaT). To cover more reasoning types, we summarize various reasoning types from real-world questions. To involve both tables and text, we require the questions to incorporate tables and text as much as possible. Based on SciTaT, we propose a strong baseline (CaR), which combines various reasoning methods to address different reasoning types and process tables and text at the same time. CaR brings average improvements of 12.9% over other baselines on SciTaT, validating its effectiveness. Error analysis reveals the challenges of SciTaT, such as complex numerical calculations and domain knowledge.


+ 40. 【2412.11750】Common Ground, Diverse Roots: The Difficulty of Classifying Common Examples in Spanish Varieties

+ Link: https://arxiv.org/abs/2412.11750

+ Authors: Javier A. Lopetegui,Arij Riabi,Djamé Seddah

+ Categories: Computation and Language (cs.CL)

+ Keywords: Variations in languages, NLP systems designed, culturally sensitive tasks, hate speech detection, conversational agents

+ Comments: Accepted to VARDIAL 2025


+ Abstract:Addressing variation in languages across geographic regions or cultures is crucial to avoid biases in NLP systems designed for culturally sensitive tasks, such as hate speech detection or dialog with conversational agents. In languages such as Spanish, where varieties can significantly overlap, many examples can be valid across them, which we refer to as common examples. Ignoring these examples may cause misclassifications, reducing model accuracy and fairness. Therefore, accounting for these common examples is essential to improve the robustness and representativeness of NLP systems trained on such data. In this work, we address this problem in the context of Spanish varieties. We use training dynamics to automatically detect common examples or errors in existing Spanish datasets. We demonstrate the efficacy of using predicted label confidence in our Datamaps (Swayamdipta et al., 2020) implementation to identify hard-to-classify examples, especially common examples, enhancing model performance in variety identification tasks. Additionally, we introduce a Cuban Spanish Variety Identification dataset with common-example annotations, developed to facilitate more accurate detection of Cuban and Caribbean Spanish varieties. To our knowledge, this is the first dataset focused on identifying the Cuban, or any other Caribbean, Spanish variety.
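Data Maps characterize each training example by two quantities: the mean (confidence) and the standard deviation (variability) of the model's probability for a label across training epochs. The original recipe uses the gold label. The abstract says this work uses the predicted label's confidence instead, so the sketch below simply takes whatever per-epoch probabilities the caller records. The function names, the 0.5 threshold, and the example probabilities are illustrative assumptions and are not taken from the paper.

```python
import statistics


def data_map_coordinates(probs_per_epoch):
    """Return (confidence, variability) for one training example.

    probs_per_epoch: the probability the model assigned to the tracked label
    (gold or predicted) at the end of each training epoch.
    """
    confidence = statistics.fmean(probs_per_epoch)
    # Population standard deviation across epochs.
    variability = statistics.pstdev(probs_per_epoch)
    return confidence, variability


def flag_hard_examples(history, confidence_threshold=0.5):
    """Return IDs of examples whose mean confidence falls below the threshold.

    history: dict mapping example ID -> list of per-epoch probabilities.
    The threshold is a hypothetical choice for illustration.
    """
    flagged = []
    for example_id, probs in history.items():
        confidence, _ = data_map_coordinates(probs)
        if confidence < confidence_threshold:
            flagged.append(example_id)
    return flagged


history = {
    "ex1": [0.90, 0.95, 0.97],  # consistently easy
    "ex2": [0.30, 0.40, 0.35],  # consistently hard
    "ex3": [0.10, 0.90, 0.20],  # unstable across epochs
}
print(flag_hard_examples(history))  # ['ex2', 'ex3']
```

Low-confidence examples, and especially those that also have high variability, are the candidates a study like this would then inspect as possible common examples or label errors.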


+ 41. 【2412.11745】Beyond Dataset Creation: Critical View of Annotation Variation and Bias Probing of a Dataset for Online Radical Content Detection

+ Link: https://arxiv.org/abs/2412.11745

+ Authors: Arij Riabi,Virginie Mouilleron,Menel Mahamdi,Wissam Antoun,Djamé Seddah

+ Categories: Computation and Language (cs.CL)

+ Keywords: poses significant risks, including inciting violence, spreading extremist ideologies, online platforms poses, platforms poses significant

+ Comments: Accepted to COLING 2025


+ Abstract:The proliferation of radical content on online platforms poses significant risks, including inciting violence and spreading extremist ideologies. Despite ongoing research, existing datasets and models often fail to address the complexities of multilingual and diverse data. To bridge this gap, we introduce a publicly available multilingual dataset annotated with radicalization levels, calls for action, and named entities in English, French, and Arabic. This dataset is pseudonymized to protect individual privacy while preserving contextual information. Beyond presenting our freely available dataset (this https URL), we analyze the annotation process, highlighting biases and disagreements among annotators and their implications for model performance. Additionally, we use synthetic data to investigate the influence of socio-demographic traits on annotation patterns and model predictions. Our work offers a comprehensive examination of the challenges and opportunities in building robust datasets for radical content detection, emphasizing the importance of fairness and transparency in model development.


+ 42. 【2412.11741】CSR: Achieving 1 Bit Key-Value Cache via Sparse Representation

+ Link: https://arxiv.org/abs/2412.11741

+ Authors: Hongxuan Zhang,Yao Zhao,Jiaqi Zheng,Chenyi Zhuang,Jinjie Gu,Guihai Chen

+ Categories: Computation and Language (cs.CL)

+ Keywords: significant scalability challenges, long-context text applications, text applications utilizing, applications utilizing large, utilizing large language

+ Comments:


+ Abstract:The emergence of long-context text applications utilizing large language models (LLMs) has presented significant scalability challenges, particularly in memory footprint. The Key-Value (KV) cache, which stores attention keys and values to avoid redundant computation, grows linearly and can substantially increase memory consumption, potentially leaving models unable to serve requests when memory resources are limited. To address this issue, we propose a novel approach called Cache Sparse Representation (CSR), which transforms the dense Key-Value cache tensor into sparse indexes and weights, offering a more memory-efficient representation during LLM inference. Furthermore, we introduce NeuralDict, a novel neural network-based method for automatically generating the dictionary used in our sparse representation. Our extensive experiments demonstrate that CSR achieves performance comparable to state-of-the-art KV cache quantization algorithms while maintaining robust functionality in memory-constrained environments.
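CSR replaces each dense KV vector with a few dictionary indexes plus weights. The abstract does not spell out the encoding algorithm, and in CSR the dictionary is learned by NeuralDict. As a stand-in, the sketch below uses classical matching pursuit over a fixed, hand-written dictionary of unit-norm atoms. It shows how a dense vector becomes an (indexes, weights) pair and how it is reconstructed. This is not the paper's method, and all names are hypothetical.

```python
def matching_pursuit(vector, dictionary, num_atoms):
    """Greedily approximate `vector` as a weighted sum of `num_atoms` atoms.

    dictionary: list of unit-norm atoms, each the same length as `vector`.
    Returns (indexes, weights), the sparse code stored instead of the vector.
    """
    residual = list(vector)
    indexes, weights = [], []
    for _ in range(num_atoms):
        # Score every atom by its inner product with the unexplained residual.
        scores = [sum(r * a for r, a in zip(residual, atom)) for atom in dictionary]
        best = max(range(len(dictionary)), key=lambda i: abs(scores[i]))
        weight = scores[best]
        indexes.append(best)
        weights.append(weight)
        # Remove the chosen atom's contribution from the residual.
        residual = [r - weight * a for r, a in zip(residual, dictionary[best])]
    return indexes, weights


def reconstruct(indexes, weights, dictionary, dim):
    """Rebuild the dense approximation from its sparse code."""
    out = [0.0] * dim
    for idx, w in zip(indexes, weights):
        for j in range(dim):
            out[j] += w * dictionary[idx][j]
    return out


# Toy orthonormal dictionary (the identity basis) and a 4-dim "key" vector.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
key = [0.0, 3.0, 0.0, -1.5]
code = matching_pursuit(key, identity, num_atoms=2)
print(code)  # ([1, 3], [3.0, -1.5])
print(reconstruct(*code, identity, 4))
```

For a d-dimensional vector encoded with k atoms, the dense form holds d numbers while the sparse code holds k indexes and k weights, so the memory saving depends on k being much smaller than d. With a real, overcomplete dictionary the reconstruction is approximate rather than exact.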


+ 43. 【2412.11736】Personalized LLM for Generating Customized Responses to the Same Query from Different Users

+ Link: https://arxiv.org/abs/2412.11736

+ Authors: Hang Zeng,Chaoyue Niu,Fan Wu,Chengfei Lv,Guihai Chen

+ Categories: Computation and Language (cs.CL)

+ Keywords: large language model, Existing work, questioner-aware LLM personalization, large language, assigned different responding

+ Comments: 9 pages


+ Abstract:Existing work on large language model (LLM) personalization has assigned different responding roles to LLMs but has overlooked the diversity of questioners. In this work, we propose a new form of questioner-aware LLM personalization, generating different responses even for the same query from different questioners. We design a dual-tower model architecture with a cross-questioner general encoder and a questioner-specific encoder. We further apply contrastive learning with multi-view augmentation, pulling together the dialogue representations of the same questioner while pushing apart those of different questioners. To mitigate the impact of question diversity on questioner-contrastive learning, we cluster the dialogues based on question similarity and restrict the scope of contrastive learning to each cluster. We also build a multi-questioner dataset from English and Chinese scripts and WeChat records, called MQDialog, containing 173 questioners and 12 responders. Extensive evaluation with different metrics shows a significant improvement in the quality of personalized response generation.
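The abstract restricts questioner-contrastive learning to dialogues within the same question cluster. Below is a minimal sketch of that idea, written as a supervised-contrastive (InfoNCE-style) loss in plain Python. It assumes one embedding per dialogue, cosine similarity, and a temperature of 0.1. It omits the paper's dual-tower encoders and multi-view augmentation. All function names and values are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def cluster_restricted_contrastive_loss(embeddings, questioners, clusters, temperature=0.1):
    """Average -log softmax probability of same-questioner pairs, where only
    dialogues from the anchor's own question cluster act as candidates."""
    total, count = 0.0, 0
    n = len(embeddings)
    for i in range(n):
        # Candidates: other dialogues in the same question cluster.
        candidates = [j for j in range(n) if j != i and clusters[j] == clusters[i]]
        # Positives: candidates asked by the same questioner.
        positives = [j for j in candidates if questioners[j] == questioners[i]]
        if not positives:
            continue  # nothing to pull together for this anchor
        logits = {j: cosine(embeddings[i], embeddings[j]) / temperature for j in candidates}
        # Log-sum-exp over all candidates, shifted by the max for numerical stability.
        m = max(logits.values())
        log_denom = m + math.log(sum(math.exp(v - m) for v in logits.values()))
        for p in positives:
            total += log_denom - logits[p]
            count += 1
    return total / count if count else 0.0


emb = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
aligned = cluster_restricted_contrastive_loss(emb, ["A", "A", "B", "B"], [0, 0, 0, 0])
misaligned = cluster_restricted_contrastive_loss(emb, ["A", "B", "A", "B"], [0, 0, 0, 0])
print(aligned < misaligned)  # True
```

Restricting the denominator to one cluster means a dialogue is contrasted only against dialogues about similar questions. Negatives therefore differ mainly in who asked, not in what was asked, which matches the motivation stated in the abstract.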


+ 44. 【2412.11732】Findings of the WMT 2024 Shared Task on Discourse-Level Literary Translation

+ Link: https://arxiv.org/abs/2412.11732

+ Authors: Longyue Wang,Siyou Liu,Chenyang Lyu,Wenxiang Jiao,Xing Wang,Jiahao Xu,Zhaopeng Tu,Yan Gu,Weiyu Chen,Minghao Wu,Liting Zhou,Philipp Koehn,Andy Way,Yulin Yuan

+ Categories: Computation and Language (cs.CL)

+ Keywords: WMT translation shared, Discourse-Level Literary Translation, translation shared task, WMT translation, Literary Translation

+ Comments: WMT2024


+ Abstract:Following last year's edition, we continued to host the WMT shared task on Discourse-Level Literary Translation this year, now in its second edition. We focus on three language directions: Chinese-English, Chinese-German, and Chinese-Russian, with the latter two newly added. This year, we received a total of 10 submissions from 5 academic and industry teams. We employ both automatic and human evaluations to measure the performance of the submitted systems. The official ranking of the systems is based on the overall human judgments. We release data, system outputs, and leaderboard at this https URL.


+ 45. 【2412.11716】LLMs Can Simulate Standardized Patients via Agent Coevolution

+ Link: https://arxiv.org/abs/2412.11716

+ Authors: Zhuoyun Du,Lujie Zheng,Renjun Hu,Yuyang Xu,Xiawei Li,Ying Sun,Wei Chen,Jian Wu,Haolei Cai,Haohao Ying

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Human-Computer Interaction (cs.HC); Multiagent Systems (cs.MA)

+ Keywords: Large Language Model, Training medical personnel, requiring extensive domain, extensive domain expertise, remains a complex

+ Comments: Work in Progress


+ Abstract:Training medical personnel using standardized patients (SPs) remains a complex challenge, requiring extensive domain expertise and role-specific practice. Most research on Large Language Model (LLM)-based simulated patients focuses on improving data retrieval accuracy or adjusting prompts through human feedback. However, this focus has overlooked the critical need for patient agents to learn a standardized presentation pattern that transforms data into human-like patient responses through unsupervised simulations. To address this gap, we propose EvoPatient, a novel simulated patient framework in which a patient agent and doctor agents simulate the diagnostic process through multi-turn dialogues, simultaneously gathering experience to improve the quality of both questions and answers, ultimately enabling human doctor training. Extensive experiments on various cases demonstrate that, given only overall SP requirements, our framework improves over existing reasoning methods by more than 10% in requirement alignment and achieves better human preference, while reaching an optimal balance of resource consumption after evolving over 200 cases for 10 hours, with excellent generalizability. The code will be available at this https URL.


+ 46. 【2412.11713】Seeker: Towards Exception Safety Code Generation with Intermediate Language Agents Framework

+ Link: https://arxiv.org/abs/2412.11713

+ Authors: Xuanming Zhang,Yuxuan Chen,Yiming Zheng,Zhexin Zhang,Yuan Yuan,Minlie Huang

+ Categories: Computation and Language (cs.CL); Software Engineering (cs.SE)

+ Keywords: exception handling, improper or missing, missing exception handling, handling, Distorted Handling Solution

+ Comments: 30 pages, 9 figures, submitted to ARR Dec
