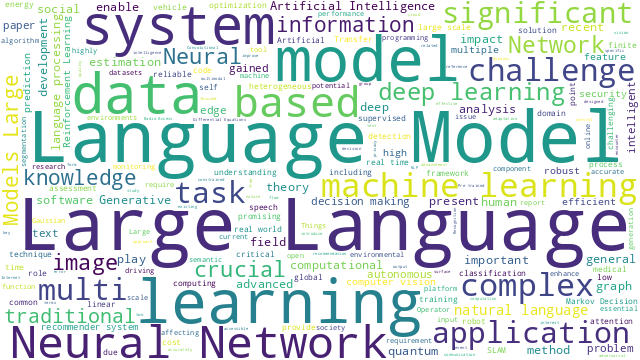

本篇博文主要展示每日从Arxiv论文网站获取的最新论文列表,以计算机视觉、自然语言处理、机器学习、人工智能等大方向进行划分。

+统计

+今日共更新452篇论文,其中:

+

+计算机视觉

+

+ 1. 标题:AutoAD II: The Sequel -- Who, When, and What in Movie Audio Description

+ 编号:[2]

+ 链接:https://arxiv.org/abs/2310.06838

+ 作者:Tengda Han, Max Bain, Arsha Nagrani, Gül Varol, Weidi Xie, Andrew Zisserman

+ 备注:ICCV2023. Project page: this https URL

+ 关键词:visually impaired audiences, suitable time intervals, Audio Description, CLIP visual features, impaired audiences

+

+ 点击查看摘要

+ Audio Description (AD) is the task of generating descriptions of visual content, at suitable time intervals, for the benefit of visually impaired audiences. For movies, this presents notable challenges -- AD must occur only during existing pauses in dialogue, should refer to characters by name, and ought to aid understanding of the storyline as a whole. To this end, we develop a new model for automatically generating movie AD, given CLIP visual features of the frames, the cast list, and the temporal locations of the speech; addressing all three of the 'who', 'when', and 'what' questions: (i) who -- we introduce a character bank consisting of the character's name, the actor that played the part, and a CLIP feature of their face, for the principal cast of each movie, and demonstrate how this can be used to improve naming in the generated AD; (ii) when -- we investigate several models for determining whether an AD should be generated for a time interval or not, based on the visual content of the interval and its neighbours; and (iii) what -- we implement a new vision-language model for this task, that can ingest the proposals from the character bank, whilst conditioning on the visual features using cross-attention, and demonstrate how this improves over previous architectures for AD text generation in an apples-to-apples comparison.

+

+

+

+ 2. 标题:What Does Stable Diffusion Know about the 3D Scene?

+ 编号:[4]

+ 链接:https://arxiv.org/abs/2310.06836

+ 作者:Guanqi Zhan, Chuanxia Zheng, Weidi Xie, Andrew Zisserman

+ 备注:

+ 关键词:highly photo-realistic images, Stable Diffusion enable, Stable Diffusion, Recent advances, advances in generative

+

+ 点击查看摘要

+ Recent advances in generative models like Stable Diffusion enable the generation of highly photo-realistic images. Our objective in this paper is to probe the diffusion network to determine to what extent it 'understands' different properties of the 3D scene depicted in an image. To this end, we make the following contributions: (i) We introduce a protocol to evaluate whether a network models a number of physical 'properties' of the 3D scene by probing for explicit features that represent these properties. The probes are applied on datasets of real images with annotations for the property. (ii) We apply this protocol to properties covering scene geometry, scene material, support relations, lighting, and view dependent measures. (iii) We find that Stable Diffusion is good at a number of properties including scene geometry, support relations, shadows and depth, but less performant for occlusion. (iv) We also apply the probes to other models trained at large-scale, including DINO and CLIP, and find their performance inferior to that of Stable Diffusion.

+

+

+

+ 3. 标题:Neural Bounding

+ 编号:[11]

+ 链接:https://arxiv.org/abs/2310.06822

+ 作者:Wenxin Liu, Michael Fischer, Paul D. Yoo, Tobias Ritschel

+ 备注:

+ 关键词:early inception, established concept, concept in computer, computer graphics, graphics and vision

+

+ 点击查看摘要

+ Bounding volumes are an established concept in computer graphics and vision tasks but have seen little change since their early inception. In this work, we study the use of neural networks as bounding volumes. Our key observation is that bounding, which so far has primarily been considered a problem of computational geometry, can be redefined as a problem of learning to classify space into free and empty. This learning-based approach is particularly advantageous in high-dimensional spaces, such as animated scenes with complex queries, where neural networks are known to excel. However, unlocking neural bounding requires a twist: allowing -- but also limiting -- false positives, while ensuring that the number of false negatives is strictly zero. We enable such tight and conservative results using a dynamically-weighted asymmetric loss function. Our results show that our neural bounding produces up to an order of magnitude fewer false positives than traditional methods.

+

+

+

+ 4. 标题:Uni3D: Exploring Unified 3D Representation at Scale

+ 编号:[24]

+ 链接:https://arxiv.org/abs/2310.06773

+ 作者:Junsheng Zhou, Jinsheng Wang, Baorui Ma, Yu-Shen Liu, Tiejun Huang, Xinlong Wang

+ 备注:Code and Demo: this https URL

+ 关键词:vision and language, images or text, extensively investigated, past few years, led to revolutions

+

+ 点击查看摘要

+ Scaling up representations for images or text has been extensively investigated in the past few years and has led to revolutions in learning vision and language. However, scalable representation for 3D objects and scenes is relatively unexplored. In this work, we present Uni3D, a 3D foundation model to explore the unified 3D representation at scale. Uni3D uses a 2D initialized ViT end-to-end pretrained to align the 3D point cloud features with the image-text aligned features. Via the simple architecture and pretext task, Uni3D can leverage abundant 2D pretrained models as initialization and image-text aligned models as the target, unlocking the great potential of 2D models and scaling-up strategies to the 3D world. We efficiently scale up Uni3D to one billion parameters, and set new records on a broad range of 3D tasks, such as zero-shot classification, few-shot classification, open-world understanding and part segmentation. We show that the strong Uni3D representation also enables applications such as 3D painting and retrieval in the wild. We believe that Uni3D provides a new direction for exploring both scaling up and efficiency of the representation in 3D domain.

+

+

+

+ 5. 标题:TopoMLP: An Simple yet Strong Pipeline for Driving Topology Reasoning

+ 编号:[34]

+ 链接:https://arxiv.org/abs/2310.06753

+ 作者:Dongming Wu, Jiahao Chang, Fan Jia, Yingfei Liu, Tiancai Wang, Jianbing Shen

+ 备注:The 1st solution for 1st OpenLane Topology in Autonomous Driving Challenge. Code is at this https URL

+ 关键词:comprehensively understand road, understand road scenes, present drivable routes, Topology, aims to comprehensively

+

+ 点击查看摘要

+ Topology reasoning aims to comprehensively understand road scenes and present drivable routes in autonomous driving. It requires detecting road centerlines (lane) and traffic elements, further reasoning their topology relationship, i.e., lane-lane topology, and lane-traffic topology. In this work, we first present that the topology score relies heavily on detection performance on lane and traffic elements. Therefore, we introduce a powerful 3D lane detector and an improved 2D traffic element detector to extend the upper limit of topology performance. Further, we propose TopoMLP, a simple yet high-performance pipeline for driving topology reasoning. Based on the impressive detection performance, we develop two simple MLP-based heads for topology generation. TopoMLP achieves state-of-the-art performance on OpenLane-V2 benchmark, i.e., 41.2% OLS with ResNet-50 backbone. It is also the 1st solution for 1st OpenLane Topology in Autonomous Driving Challenge. We hope such simple and strong pipeline can provide some new insights to the community. Code is at this https URL.

+

+

+

+ 6. 标题:HiFi-123: Towards High-fidelity One Image to 3D Content Generation

+ 编号:[38]

+ 链接:https://arxiv.org/abs/2310.06744

+ 作者:Wangbo Yu, Li Yuan, Yan-Pei Cao, Xiangjun Gao, Xiaoyu Li, Long Quan, Ying Shan, Yonghong Tian

+ 备注:

+ 关键词:Recent advances, diffusion models, models have enabled, single image, image

+

+ 点击查看摘要

+ Recent advances in text-to-image diffusion models have enabled 3D generation from a single image. However, current image-to-3D methods often produce suboptimal results for novel views, with blurred textures and deviations from the reference image, limiting their practical applications. In this paper, we introduce HiFi-123, a method designed for high-fidelity and multi-view consistent 3D generation. Our contributions are twofold: First, we propose a reference-guided novel view enhancement technique that substantially reduces the quality gap between synthesized and reference views. Second, capitalizing on the novel view enhancement, we present a novel reference-guided state distillation loss. When incorporated into the optimization-based image-to-3D pipeline, our method significantly improves 3D generation quality, achieving state-of-the-art performance. Comprehensive evaluations demonstrate the effectiveness of our approach over existing methods, both qualitatively and quantitatively.

+

+

+

+ 7. 标题:Domain Generalization by Rejecting Extreme Augmentations

+ 编号:[64]

+ 链接:https://arxiv.org/abs/2310.06670

+ 作者:Masih Aminbeidokhti, Fidel A. Guerrero Peña, Heitor Rapela Medeiros, Thomas Dubail, Eric Granger, Marco Pedersoli

+ 备注:

+ 关键词:regularizing deep learning, deep learning models, Data augmentation, test data follow, effective techniques

+

+ 点击查看摘要

+ Data augmentation is one of the most effective techniques for regularizing deep learning models and improving their recognition performance in a variety of tasks and domains. However, this holds for standard in-domain settings, in which the training and test data follow the same distribution. For the out-of-domain case, where the test data follow a different and unknown distribution, the best recipe for data augmentation is unclear. In this paper, we show that for out-of-domain and domain generalization settings, data augmentation can provide a conspicuous and robust improvement in performance. To do that, we propose a simple training procedure: (i) use uniform sampling on standard data augmentation transformations; (ii) increase the strength transformations to account for the higher data variance expected when working out-of-domain, and (iii) devise a new reward function to reject extreme transformations that can harm the training. With this procedure, our data augmentation scheme achieves a level of accuracy that is comparable to or better than state-of-the-art methods on benchmark domain generalization datasets. Code: \url{this https URL}

+

+

+

+ 8. 标题:Latent Diffusion Counterfactual Explanations

+ 编号:[65]

+ 链接:https://arxiv.org/abs/2310.06668

+ 作者:Karim Farid, Simon Schrodi, Max Argus, Thomas Brox

+ 备注:

+ 关键词:counterfactual generation, promising method, method for elucidating, Diffusion Counterfactual Explanations, Counterfactual explanations

+

+ 点击查看摘要

+ Counterfactual explanations have emerged as a promising method for elucidating the behavior of opaque black-box models. Recently, several works leveraged pixel-space diffusion models for counterfactual generation. To handle noisy, adversarial gradients during counterfactual generation -- causing unrealistic artifacts or mere adversarial perturbations -- they required either auxiliary adversarially robust models or computationally intensive guidance schemes. However, such requirements limit their applicability, e.g., in scenarios with restricted access to the model's training data. To address these limitations, we introduce Latent Diffusion Counterfactual Explanations (LDCE). LDCE harnesses the capabilities of recent class- or text-conditional foundation latent diffusion models to expedite counterfactual generation and focus on the important, semantic parts of the data. Furthermore, we propose a novel consensus guidance mechanism to filter out noisy, adversarial gradients that are misaligned with the diffusion model's implicit classifier. We demonstrate the versatility of LDCE across a wide spectrum of models trained on diverse datasets with different learning paradigms. Finally, we showcase how LDCE can provide insights into model errors, enhancing our understanding of black-box model behavior.

+

+

+

+ 9. 标题:SC2GAN: Rethinking Entanglement by Self-correcting Correlated GAN Space

+ 编号:[66]

+ 链接:https://arxiv.org/abs/2310.06667

+ 作者:Zikun Chen, Han Zhao, Parham Aarabi, Ruowei Jiang

+ 备注:Accepted to the Out Of Distribution Generalization in Computer Vision workshop at ICCV2023

+ 关键词:Generative Adversarial Networks, Adversarial Networks, learned latent space, Generative Adversarial, latent space

+

+ 点击查看摘要

+ Generative Adversarial Networks (GANs) can synthesize realistic images, with the learned latent space shown to encode rich semantic information with various interpretable directions. However, due to the unstructured nature of the learned latent space, it inherits the bias from the training data where specific groups of visual attributes that are not causally related tend to appear together, a phenomenon also known as spurious correlations, e.g., age and eyeglasses or women and lipsticks. Consequently, the learned distribution often lacks the proper modelling of the missing examples. The interpolation following editing directions for one attribute could result in entangled changes with other attributes. To address this problem, previous works typically adjust the learned directions to minimize the changes in other attributes, yet they still fail on strongly correlated features. In this work, we study the entanglement issue in both the training data and the learned latent space for the StyleGAN2-FFHQ model. We propose a novel framework SC$^2$GAN that achieves disentanglement by re-projecting low-density latent code samples in the original latent space and correcting the editing directions based on both the high-density and low-density regions. By leveraging the original meaningful directions and semantic region-specific layers, our framework interpolates the original latent codes to generate images with attribute combination that appears infrequently, then inverts these samples back to the original latent space. We apply our framework to pre-existing methods that learn meaningful latent directions and showcase its strong capability to disentangle the attributes with small amounts of low-density region samples added.

+

+

+

+ 10. 标题:Evaluating Explanation Methods for Vision-and-Language Navigation

+ 编号:[71]

+ 链接:https://arxiv.org/abs/2310.06654

+ 作者:Guanqi Chen, Lei Yang, Guanhua Chen, Jia Pan

+ 备注:Accepted by ECAI 2023

+ 关键词:embodied artificial intelligence, natural language instructions, achieving embodied artificial, deep neural models, deep neural

+

+ 点击查看摘要

+ The ability to navigate robots with natural language instructions in an unknown environment is a crucial step for achieving embodied artificial intelligence (AI). With the improving performance of deep neural models proposed in the field of vision-and-language navigation (VLN), it is equally interesting to know what information the models utilize for their decision-making in the navigation tasks. To understand the inner workings of deep neural models, various explanation methods have been developed for promoting explainable AI (XAI). But they are mostly applied to deep neural models for image or text classification tasks and little work has been done in explaining deep neural models for VLN tasks. In this paper, we address these problems by building quantitative benchmarks to evaluate explanation methods for VLN models in terms of faithfulness. We propose a new erasure-based evaluation pipeline to measure the step-wise textual explanation in the sequential decision-making setting. We evaluate several explanation methods for two representative VLN models on two popular VLN datasets and reveal valuable findings through our experiments.

+

+

+

+ 11. 标题:How (not) to ensemble LVLMs for VQA

+ 编号:[78]

+ 链接:https://arxiv.org/abs/2310.06641

+ 作者:Lisa Alazraki, Lluis Castrejon, Mostafa Dehghani, Fantine Huot, Jasper Uijlings, Thomas Mensink

+ 备注:Under submission

+ 关键词:Large Vision-Language Models, era of Large, Large Vision-Language, paper studies ensembling, paper studies

+

+ 点击查看摘要

+ This paper studies ensembling in the era of Large Vision-Language Models (LVLMs). Ensembling is a classical method to combine different models to get increased performance. In the recent work on Encyclopedic-VQA the authors examine a wide variety of models to solve their task: from vanilla LVLMs, to models including the caption as extra context, to models augmented with Lens-based retrieval of Wikipedia pages. Intuitively these models are highly complementary, which should make them ideal for ensembling. Indeed, an oracle experiment shows potential gains from 48.8% accuracy (the best single model) all the way up to 67% (best possible ensemble). So it is a trivial exercise to create an ensemble with substantial real gains. Or is it?

+

+

+

+ 12. 标题:Blind Dates: Examining the Expression of Temporality in Historical Photographs

+ 编号:[80]

+ 链接:https://arxiv.org/abs/2310.06633

+ 作者:Alexandra Barancová, Melvin Wevers, Nanne van Noord

+ 备注:

+ 关键词:computer vision models, discern temporal information, focusing specifically, capacity of computer, Boer Scene Detection

+

+ 点击查看摘要

+ This paper explores the capacity of computer vision models to discern temporal information in visual content, focusing specifically on historical photographs. We investigate the dating of images using OpenCLIP, an open-source implementation of CLIP, a multi-modal language and vision model. Our experiment consists of three steps: zero-shot classification, fine-tuning, and analysis of visual content. We use the \textit{De Boer Scene Detection} dataset, containing 39,866 gray-scale historical press photographs from 1950 to 1999. The results show that zero-shot classification is relatively ineffective for image dating, with a bias towards predicting dates in the past. Fine-tuning OpenCLIP with a logistic classifier improves performance and eliminates the bias. Additionally, our analysis reveals that images featuring buses, cars, cats, dogs, and people are more accurately dated, suggesting the presence of temporal markers. The study highlights the potential of machine learning models like OpenCLIP in dating images and emphasizes the importance of fine-tuning for accurate temporal analysis. Future research should explore the application of these findings to color photographs and diverse datasets.

+

+

+

+ 13. 标题:EViT: An Eagle Vision Transformer with Bi-Fovea Self-Attention

+ 编号:[82]

+ 链接:https://arxiv.org/abs/2310.06629

+ 作者:Yulong Shi, Mingwei Sun, Yongshuai Wang, Rui Wang, Hui Sun, Zengqiang Chen

+ 备注:11 pages, 4 figures

+ 关键词:demonstrated competitive performance, vision transformer, vision, eagle vision, Eagle Vision Transformers

+

+ 点击查看摘要

+ Because of the advancement of deep learning technology, vision transformer has demonstrated competitive performance in various computer vision tasks. Unfortunately, vision transformer still faces some challenges such as high computational complexity and absence of desirable inductive bias. To alleviate these problems, this study proposes a novel Bi-Fovea Self-Attention (BFSA) inspired by the physiological structure and characteristics of bi-fovea vision in eagle eyes. This BFSA can simulate the shallow fovea and deep fovea functions of eagle vision, enabling the network to extract feature representations of targets from coarse to fine, facilitating the interaction of multi-scale feature representations. Additionally, this study designs a Bionic Eagle Vision (BEV) block based on BFSA and CNN. It combines CNN and Vision Transformer, to enhance the network's local and global representation ability for targets. Furthermore, this study develops a unified and efficient general pyramid backbone network family, named Eagle Vision Transformers (EViTs) by stacking the BEV blocks. Experimental results on various computer vision tasks including image classification, object detection, instance segmentation and other transfer learning tasks show that the proposed EViTs perform significantly better than the baselines under similar model sizes, which exhibits faster speed on graphics processing unit compared to other models. Code will be released at this https URL.

+

+

+

+ 14. 标题:What If the TV Was Off? Examining Counterfactual Reasoning Abilities of Multi-modal Language Models

+ 编号:[83]

+ 链接:https://arxiv.org/abs/2310.06627

+ 作者:Letian Zhang, Xiaotong Zhai, Zhongkai Zhao, Xin Wen, Yongshuo Zong, Bingchen Zhao

+ 备注:Short paper accepted at ICCV 2023 VLAR workshop

+ 关键词:Counterfactual reasoning ability, human intelligence, core abilities, abilities of human, reasoning ability

+

+ 点击查看摘要

+ Counterfactual reasoning ability is one of the core abilities of human intelligence. This reasoning process involves the processing of alternatives to observed states or past events, and this process can improve our ability for planning and decision-making. In this work, we focus on benchmarking the counterfactual reasoning ability of multi-modal large language models. We take the question and answer pairs from the VQAv2 dataset and add one counterfactual presupposition to the questions, with the answer being modified accordingly. After generating counterfactual questions and answers using ChatGPT, we manually examine all generated questions and answers to ensure correctness. Over 2k counterfactual question and answer pairs are collected this way. We evaluate recent vision language models on our newly collected test dataset and found that all models exhibit a large performance drop compared to the results tested on questions without the counterfactual presupposition. This result indicates that there still exists space for developing vision language models. Apart from the vision language models, our proposed dataset can also serves as a benchmark for evaluating the ability of code generation LLMs, results demonstrate a large gap between GPT-4 and current open-source models. Our code and dataset are available at \url{this https URL}.

+

+

+

+ 15. 标题:V2X-AHD:Vehicle-to-Everything Cooperation Perception via Asymmetric Heterogenous Distillation Network

+ 编号:[91]

+ 链接:https://arxiv.org/abs/2310.06603

+ 作者:Caizhen He, Hai Wang, Long Chen, Tong Luo, Yingfeng Cai

+ 备注:

+ 关键词:provide accurate position, accurate position information, intelligent traffic systems, vehicle-road cooperation perception, intelligent traffic

+

+ 点击查看摘要

+ Object detection is the central issue of intelligent traffic systems, and recent advancements in single-vehicle lidar-based 3D detection indicate that it can provide accurate position information for intelligent agents to make decisions and plan. Compared with single-vehicle perception, multi-view vehicle-road cooperation perception has fundamental advantages, such as the elimination of blind spots and a broader range of perception, and has become a research hotspot. However, the current perception of cooperation focuses on improving the complexity of fusion while ignoring the fundamental problems caused by the absence of single-view outlines. We propose a multi-view vehicle-road cooperation perception system, vehicle-to-everything cooperative perception (V2X-AHD), in order to enhance the identification capability, particularly for predicting the vehicle's shape. At first, we propose an asymmetric heterogeneous distillation network fed with different training data to improve the accuracy of contour recognition, with multi-view teacher features transferring to single-view student features. While the point cloud data are sparse, we propose Spara Pillar, a spare convolutional-based plug-in feature extraction backbone, to reduce the number of parameters and improve and enhance feature extraction capabilities. Moreover, we leverage the multi-head self-attention (MSA) to fuse the single-view feature, and the lightweight design makes the fusion feature a smooth expression. The results of applying our algorithm to the massive open dataset V2Xset demonstrate that our method achieves the state-of-the-art result. The V2X-AHD can effectively improve the accuracy of 3D object detection and reduce the number of network parameters, according to this study, which serves as a benchmark for cooperative perception. The code for this article is available at this https URL.

+

+

+

+ 16. 标题:Pi-DUAL: Using Privileged Information to Distinguish Clean from Noisy Labels

+ 编号:[93]

+ 链接:https://arxiv.org/abs/2310.06600

+ 作者:Ke Wang, Guillermo Ortiz-Jimenez, Rodolphe Jenatton, Mark Collier, Efi Kokiopoulou, Pascal Frossard

+ 备注:

+ 关键词:pervasive problem, problem in deep, compromises the generalization, Label noise, deep learning

+

+ 点击查看摘要

+ Label noise is a pervasive problem in deep learning that often compromises the generalization performance of trained models. Recently, leveraging privileged information (PI) -- information available only during training but not at test time -- has emerged as an effective approach to mitigate this issue. Yet, existing PI-based methods have failed to consistently outperform their no-PI counterparts in terms of preventing overfitting to label noise. To address this deficiency, we introduce Pi-DUAL, an architecture designed to harness PI to distinguish clean from wrong labels. Pi-DUAL decomposes the output logits into a prediction term, based on conventional input features, and a noise-fitting term influenced solely by PI. A gating mechanism steered by PI adaptively shifts focus between these terms, allowing the model to implicitly separate the learning paths of clean and wrong labels. Empirically, Pi-DUAL achieves significant performance improvements on key PI benchmarks (e.g., +6.8% on ImageNet-PI), establishing a new state-of-the-art test set accuracy. Additionally, Pi-DUAL is a potent method for identifying noisy samples post-training, outperforming other strong methods at this task. Overall, Pi-DUAL is a simple, scalable and practical approach for mitigating the effects of label noise in a variety of real-world scenarios with PI.

+

+

+

+ 17. 标题:REVO-LION: Evaluating and Refining Vision-Language Instruction Tuning Datasets

+ 编号:[95]

+ 链接:https://arxiv.org/abs/2310.06594

+ 作者:Ning Liao, Shaofeng Zhang, Renqiu Xia, Bo Zhang, Min Cao, Yu Qiao, Junchi Yan

+ 备注:

+ 关键词:emerging line, VLIT datasets, VLIT, all-powerful VLIT model, dataset

+

+ 点击查看摘要

+ There is an emerging line of research on multimodal instruction tuning, and a line of benchmarks have been proposed for evaluating these models recently. Instead of evaluating the models directly, in this paper we try to evaluate the Vision-Language Instruction-Tuning (VLIT) datasets themselves and further seek the way of building a dataset for developing an all-powerful VLIT model, which we believe could also be of utility for establishing a grounded protocol for benchmarking VLIT models. For effective analysis of VLIT datasets that remains an open question, we propose a tune-cross-evaluation paradigm: tuning on one dataset and evaluating on the others in turn. For each single tune-evaluation experiment set, we define the Meta Quality (MQ) as the mean score measured by a series of caption metrics including BLEU, METEOR, and ROUGE-L to quantify the quality of a certain dataset or a sample. On this basis, to evaluate the comprehensiveness of a dataset, we develop the Dataset Quality (DQ) covering all tune-evaluation sets. To lay the foundation for building a comprehensive dataset and developing an all-powerful model for practical applications, we further define the Sample Quality (SQ) to quantify the all-sided quality of each sample. Extensive experiments validate the rationality of the proposed evaluation paradigm. Based on the holistic evaluation, we build a new dataset, REVO-LION (REfining VisiOn-Language InstructiOn tuNing), by collecting samples with higher SQ from each dataset. With only half of the full data, the model trained on REVO-LION can achieve performance comparable to simply adding all VLIT datasets up. In addition to developing an all-powerful model, REVO-LION also includes an evaluation set, which is expected to serve as a convenient evaluation benchmark for future research.

+

+

+

+ 18. 标题:Hierarchical Mask2Former: Panoptic Segmentation of Crops, Weeds and Leaves

+ 编号:[99]

+ 链接:https://arxiv.org/abs/2310.06582

+ 作者:Madeleine Darbyshire, Elizabeth Sklar, Simon Parsons

+ 备注:6 pages, 5 figures, 2 tables, for code, see this https URL

+ 关键词:sectors including agriculture, enable detailed inferences, Advancements in machine, machine vision, potential to transform

+

+ 点击查看摘要

+ Advancements in machine vision that enable detailed inferences to be made from images have the potential to transform many sectors including agriculture. Precision agriculture, where data analysis enables interventions to be precisely targeted, has many possible applications. Precision spraying, for example, can limit the application of herbicide only to weeds, or limit the application of fertiliser only to undernourished crops, instead of spraying the entire field. The approach promises to maximise yields, whilst minimising resource use and harms to the surrounding environment. To this end, we propose a hierarchical panoptic segmentation method to simultaneously identify indicators of plant growth and locate weeds within an image. We adapt Mask2Former, a state-of-the-art architecture for panoptic segmentation, to predict crop, weed and leaf masks. We achieve a PQ† of 75.99. Additionally, we explore approaches to make the architecture more compact and therefore more suitable for time and compute constrained applications. With our more compact architecture, inference is up to 60% faster and the reduction in PQ† is less than 1%.

+

+

+

+ 19. 标题:Energy-Efficient Visual Search by Eye Movement and Low-Latency Spiking Neural Network

+ 编号:[101]

+ 链接:https://arxiv.org/abs/2310.06578

+ 作者:Yunhui Zhou, Dongqi Han, Yuguo Yu

+ 备注:

+ 关键词:incorporates non-uniform resolution, vision incorporates non-uniform, visual field size, non-uniform resolution retina, spiking neural network

+

+ 点击查看摘要

+ Human vision incorporates non-uniform resolution retina, efficient eye movement strategy, and spiking neural network (SNN) to balance the requirements in visual field size, visual resolution, energy cost, and inference latency. These properties have inspired interest in developing human-like computer vision. However, existing models haven't fully incorporated the three features of human vision, and their learned eye movement strategies haven't been compared with human's strategy, making the models' behavior difficult to interpret. Here, we carry out experiments to examine human visual search behaviors and establish the first SNN-based visual search model. The model combines an artificial retina with spiking feature extraction, memory, and saccade decision modules, and it employs population coding for fast and efficient saccade decisions. The model can learn either a human-like or a near-optimal fixation strategy, outperform humans in search speed and accuracy, and achieve high energy efficiency through short saccade decision latency and sparse activation. It also suggests that the human search strategy is suboptimal in terms of search speed. Our work connects modeling of vision in neuroscience and machine learning and sheds light on developing more energy-efficient computer vision algorithms.

+

+

+

+ 20. 标题:SketchBodyNet: A Sketch-Driven Multi-faceted Decoder Network for 3D Human Reconstruction

+ 编号:[102]

+ 链接:https://arxiv.org/abs/2310.06577

+ 作者:Fei Wang, Kongzhang Tang, Hefeng Wu, Baoquan Zhao, Hao Cai, Teng Zhou

+ 备注:9 pages, to appear in Pacific Graphics 2023

+ 关键词:received increasing attention, increasing attention recently, attention recently due, received increasing, recently due

+

+ 点击查看摘要

+ Reconstructing 3D human shapes from 2D images has received increasing attention recently due to its fundamental support for many high-level 3D applications. Compared with natural images, freehand sketches are much more flexible to depict various shapes, providing a high potential and valuable way for 3D human reconstruction. However, such a task is highly challenging. The sparse abstract characteristics of sketches add severe difficulties, such as arbitrariness, inaccuracy, and lacking image details, to the already badly ill-posed problem of 2D-to-3D reconstruction. Although current methods have achieved great success in reconstructing 3D human bodies from a single-view image, they do not work well on freehand sketches. In this paper, we propose a novel sketch-driven multi-faceted decoder network termed SketchBodyNet to address this task. Specifically, the network consists of a backbone and three separate attention decoder branches, where a multi-head self-attention module is exploited in each decoder to obtain enhanced features, followed by a multi-layer perceptron. The multi-faceted decoders aim to predict the camera, shape, and pose parameters, respectively, which are then associated with the SMPL model to reconstruct the corresponding 3D human mesh. In learning, existing 3D meshes are projected via the camera parameters into 2D synthetic sketches with joints, which are combined with the freehand sketches to optimize the model. To verify our method, we collect a large-scale dataset of about 26k freehand sketches and their corresponding 3D meshes containing various poses of human bodies from 14 different angles. Extensive experimental results demonstrate our SketchBodyNet achieves superior performance in reconstructing 3D human meshes from freehand sketches.

+

+

+

+ 21. 标题:Efficient Retrieval of Images with Irregular Patterns using Morphological Image Analysis: Applications to Industrial and Healthcare datasets

+ 编号:[105]

+ 链接:https://arxiv.org/abs/2310.06566

+ 作者:Jiajun Zhang, Georgina Cosma, Sarah Bugby, Jason Watkins

+ 备注:35 pages, 5 figures, 19 tables (17 tables in appendix), submitted to Special Issue: Advances and Challenges in Multimodal Machine Learning 2nd Edition, Journal of Imaging, MDPI

+ 关键词:process of searching, searching and retrieving, database based, visual content, images

+

+ 点击查看摘要

+ Image retrieval is the process of searching and retrieving images from a database based on their visual content and features. Recently, much attention has been directed towards the retrieval of irregular patterns within industrial or medical images by extracting features from the images, such as deep features, colour-based features, shape-based features and local features. This has applications across a spectrum of industries, including fault inspection, disease diagnosis, and maintenance prediction. This paper proposes an image retrieval framework to search for images containing similar irregular patterns by extracting a set of morphological features (DefChars) from images; the datasets employed in this paper contain wind turbine blade images with defects, chest computerised tomography scans with COVID-19 infection, heatsink images with defects, and lake ice images. The proposed framework was evaluated with different feature extraction methods (DefChars, resized raw image, local binary pattern, and scale-invariant feature transforms) and distance metrics to determine the most efficient parameters in terms of retrieval performance across datasets. The retrieval results show that the proposed framework using the DefChars and the Manhattan distance metric achieves a mean average precision of 80% and a low standard deviation of 0.09 across classes of irregular patterns, outperforming alternative feature-metric combinations across all datasets. Furthermore, the low standard deviation between each class highlights DefChars' capability for a reliable image retrieval task, even in the presence of class imbalances or small-sized datasets.

+

+

+

+ 22. 标题:Compositional Representation Learning for Brain Tumour Segmentation

+ 编号:[106]

+ 链接:https://arxiv.org/abs/2310.06562

+ 作者:Xiao Liu, Antanas Kascenas, Hannah Watson, Sotirios A. Tsaftaris, Alison Q. O'Neil

+ 备注:Accepted by DART workshop, MICCAI 2023

+ 关键词:achieve human expert-level, human expert-level performance, large amount, achieve human, human expert-level

+

+ 点击查看摘要

+ For brain tumour segmentation, deep learning models can achieve human expert-level performance given a large amount of data and pixel-level annotations. However, the expensive exercise of obtaining pixel-level annotations for large amounts of data is not always feasible, and performance is often heavily reduced in a low-annotated data regime. To tackle this challenge, we adapt a mixed supervision framework, vMFNet, to learn robust compositional representations using unsupervised learning and weak supervision alongside non-exhaustive pixel-level pathology labels. In particular, we use the BraTS dataset to simulate a collection of 2-point expert pathology annotations indicating the top and bottom slice of the tumour (or tumour sub-regions: peritumoural edema, GD-enhancing tumour, and the necrotic / non-enhancing tumour) in each MRI volume, from which weak image-level labels that indicate the presence or absence of the tumour (or the tumour sub-regions) in the image are constructed. Then, vMFNet models the encoded image features with von-Mises-Fisher (vMF) distributions, via learnable and compositional vMF kernels which capture information about structures in the images. We show that good tumour segmentation performance can be achieved with a large amount of weakly labelled data but only a small amount of fully-annotated data. Interestingly, emergent learning of anatomical structures occurs in the compositional representation even given only supervision relating to pathology (tumour).

+

+

+

+ 23. 标题:Be Careful What You Smooth For: Label Smoothing Can Be a Privacy Shield but Also a Catalyst for Model Inversion Attacks

+ 编号:[111]

+ 链接:https://arxiv.org/abs/2310.06549

+ 作者:Lukas Struppek, Dominik Hintersdorf, Kristian Kersting

+ 备注:23 pages, 8 tables, 8 figures

+ 关键词:showing diverse benefits, widely adopted regularization, adopted regularization method, deep learning, showing diverse

+

+ 点击查看摘要

+ Label smoothing -- using softened labels instead of hard ones -- is a widely adopted regularization method for deep learning, showing diverse benefits such as enhanced generalization and calibration. Its implications for preserving model privacy, however, have remained unexplored. To fill this gap, we investigate the impact of label smoothing on model inversion attacks (MIAs), which aim to generate class-representative samples by exploiting the knowledge encoded in a classifier, thereby inferring sensitive information about its training data. Through extensive analyses, we uncover that traditional label smoothing fosters MIAs, thereby increasing a model's privacy leakage. Even more, we reveal that smoothing with negative factors counters this trend, impeding the extraction of class-related information and leading to privacy preservation, beating state-of-the-art defenses. This establishes a practical and powerful novel way for enhancing model resilience against MIAs.

+

+

+

+ 24. 标题:Perceptual MAE for Image Manipulation Localization: A High-level Vision Learner Focusing on Low-level Features

+ 编号:[121]

+ 链接:https://arxiv.org/abs/2310.06525

+ 作者:Xiaochen Ma, Jizhe Zhou, Xiong Xu, Zhuohang Jiang, Chi-Man Pun

+ 备注:

+ 关键词:making Image Manipulation, multimedia forensics faces, multimedia generation technology, forensics faces unprecedented, faces unprecedented challenges

+

+ 点击查看摘要

+ Nowadays, multimedia forensics faces unprecedented challenges due to the rapid advancement of multimedia generation technology thereby making Image Manipulation Localization (IML) crucial in the pursuit of truth. The key to IML lies in revealing the artifacts or inconsistencies between the tampered and authentic areas, which are evident under pixel-level features. Consequently, existing studies treat IML as a low-level vision task, focusing on allocating tampered masks by crafting pixel-level features such as image RGB noises, edge signals, or high-frequency features. However, in practice, tampering commonly occurs at the object level, and different classes of objects have varying likelihoods of becoming targets of tampering. Therefore, object semantics are also vital in identifying the tampered areas in addition to pixel-level features. This necessitates IML models to carry out a semantic understanding of the entire image. In this paper, we reformulate the IML task as a high-level vision task that greatly benefits from low-level features. Based on such an interpretation, we propose a method to enhance the Masked Autoencoder (MAE) by incorporating high-resolution inputs and a perceptual loss supervision module, which is termed Perceptual MAE (PMAE). While MAE has demonstrated an impressive understanding of object semantics, PMAE can also compensate for low-level semantics with our proposed enhancements. Evidenced by extensive experiments, this paradigm effectively unites the low-level and high-level features of the IML task and outperforms state-of-the-art tampering localization methods on all five publicly available datasets.

+

+

+

+ 25. 标题:Watt For What: Rethinking Deep Learning's Energy-Performance Relationship

+ 编号:[122]

+ 链接:https://arxiv.org/abs/2310.06522

+ 作者:Shreyank N Gowda, Xinyue Hao, Gen Li, Laura Sevilla-Lara, Shashank Narayana Gowda

+ 备注:

+ 关键词:natural language processing, achieving unprecedented levels, revolutionized various fields, language processing, image recognition

+

+ 点击查看摘要

+ Deep learning models have revolutionized various fields, from image recognition to natural language processing, by achieving unprecedented levels of accuracy. However, their increasing energy consumption has raised concerns about their environmental impact, disadvantaging smaller entities in research and exacerbating global energy consumption. In this paper, we explore the trade-off between model accuracy and electricity consumption, proposing a metric that penalizes large consumption of electricity. We conduct a comprehensive study on the electricity consumption of various deep learning models across different GPUs, presenting a detailed analysis of their accuracy-efficiency trade-offs. By evaluating accuracy per unit of electricity consumed, we demonstrate how smaller, more energy-efficient models can significantly expedite research while mitigating environmental concerns. Our results highlight the potential for a more sustainable approach to deep learning, emphasizing the importance of optimizing models for efficiency. This research also contributes to a more equitable research landscape, where smaller entities can compete effectively with larger counterparts. This advocates for the adoption of efficient deep learning practices to reduce electricity consumption, safeguarding the environment for future generations whilst also helping ensure a fairer competitive landscape.

+

+

+

+ 26. 标题:Deep Learning for Automatic Detection and Facial Recognition in Japanese Macaques: Illuminating Social Networks

+ 编号:[136]

+ 链接:https://arxiv.org/abs/2310.06489

+ 作者:Julien Paulet (UJM), Axel Molina (ENS-PSL), Benjamin Beltzung (IPHC), Takafumi Suzumura, Shinya Yamamoto, Cédric Sueur (IPHC, IUF, ANTHROPO LAB)

+ 备注:

+ 关键词:social structures understanding, ecology and ethology, structures understanding, plays a pivotal, pivotal role

+

+ 点击查看摘要

+ Individual identification plays a pivotal role in ecology and ethology, notably as a tool for complex social structures understanding. However, traditional identification methods often involve invasive physical tags and can prove both disruptive for animals and time-intensive for researchers. In recent years, the integration of deep learning in research offered new methodological perspectives through automatization of complex tasks. Harnessing object detection and recognition technologies is increasingly used by researchers to achieve identification on video footage. This study represents a preliminary exploration into the development of a non-invasive tool for face detection and individual identification of Japanese macaques (Macaca fuscata) through deep learning. The ultimate goal of this research is, using identifications done on the dataset, to automatically generate a social network representation of the studied population. The current main results are promising: (i) the creation of a Japanese macaques' face detector (Faster-RCNN model), reaching a 82.2% accuracy and (ii) the creation of an individual recognizer for K{ō}jima island macaques population (YOLOv8n model), reaching a 83% accuracy. We also created a K{ō}jima population social network by traditional methods, based on co-occurrences on videos. Thus, we provide a benchmark against which the automatically generated network will be assessed for reliability. These preliminary results are a testament to the potential of this innovative approach to provide the scientific community with a tool for tracking individuals and social network studies in Japanese macaques.

+

+

+

+ 27. 标题:SpikeCLIP: A Contrastive Language-Image Pretrained Spiking Neural Network

+ 编号:[137]

+ 链接:https://arxiv.org/abs/2310.06488

+ 作者:Tianlong Li, Wenhao Liu, Changze Lv, Jianhan Xu, Cenyuan Zhang, Muling Wu, Xiaoqing Zheng, Xuanjing Huang

+ 备注:

+ 关键词:Spiking neural networks, deep neural networks, neural networks, improved energy efficiency, Spiking neural

+

+ 点击查看摘要

+ Spiking neural networks (SNNs) have demonstrated the capability to achieve comparable performance to deep neural networks (DNNs) in both visual and linguistic domains while offering the advantages of improved energy efficiency and adherence to biological plausibility. However, the extension of such single-modality SNNs into the realm of multimodal scenarios remains an unexplored territory. Drawing inspiration from the concept of contrastive language-image pre-training (CLIP), we introduce a novel framework, named SpikeCLIP, to address the gap between two modalities within the context of spike-based computing through a two-step recipe involving ``Alignment Pre-training + Dual-Loss Fine-tuning". Extensive experiments demonstrate that SNNs achieve comparable results to their DNN counterparts while significantly reducing energy consumption across a variety of datasets commonly used for multimodal model evaluation. Furthermore, SpikeCLIP maintains robust performance in image classification tasks that involve class labels not predefined within specific categories.

+

+

+

+ 28. 标题:Topological RANSAC for instance verification and retrieval without fine-tuning

+ 编号:[138]

+ 链接:https://arxiv.org/abs/2310.06486

+ 作者:Guoyuan An, Juhyung Seon, Inkyu An, Yuchi Huo, Sung-Eui Yoon

+ 备注:

+ 关键词:enhancing explainable image, explainable image retrieval, set is unavailable, paper presents, presents an innovative

+

+ 点击查看摘要

+ This paper presents an innovative approach to enhancing explainable image retrieval, particularly in situations where a fine-tuning set is unavailable. The widely-used SPatial verification (SP) method, despite its efficacy, relies on a spatial model and the hypothesis-testing strategy for instance recognition, leading to inherent limitations, including the assumption of planar structures and neglect of topological relations among features. To address these shortcomings, we introduce a pioneering technique that replaces the spatial model with a topological one within the RANSAC process. We propose bio-inspired saccade and fovea functions to verify the topological consistency among features, effectively circumventing the issues associated with SP's spatial model. Our experimental results demonstrate that our method significantly outperforms SP, achieving state-of-the-art performance in non-fine-tuning retrieval. Furthermore, our approach can enhance performance when used in conjunction with fine-tuned features. Importantly, our method retains high explainability and is lightweight, offering a practical and adaptable solution for a variety of real-world applications.

+

+

+

+ 29. 标题:Focus on Local Regions for Query-based Object Detection

+ 编号:[145]

+ 链接:https://arxiv.org/abs/2310.06470

+ 作者:Hongbin Xu, Yamei Xia, Shuai Zhao, Bo Cheng

+ 备注:

+ 关键词:garnered significant attention, advent of DETR, garnered significant, significant attention, DETR

+

+ 点击查看摘要

+ Query-based methods have garnered significant attention in object detection since the advent of DETR, the pioneering end-to-end query-based detector. However, these methods face challenges like slow convergence and suboptimal performance. Notably, self-attention in object detection often hampers convergence due to its global focus. To address these issues, we propose FoLR, a transformer-like architecture with only decoders. We enhance the self-attention mechanism by isolating connections between irrelevant objects that makes it focus on local regions but not global regions. We also design the adaptive sampling method to extract effective features based on queries' local regions from feature maps. Additionally, we employ a look-back strategy for decoders to retain prior information, followed by the Feature Mixer module to fuse features and queries. Experimental results demonstrate FoLR's state-of-the-art performance in query-based detectors, excelling in convergence speed and computational efficiency.

+

+

+

+ 30. 标题:A Geometrical Approach to Evaluate the Adversarial Robustness of Deep Neural Networks

+ 编号:[147]

+ 链接:https://arxiv.org/abs/2310.06468

+ 作者:Yang Wang, Bo Dong, Ke Xu, Haiyin Piao, Yufei Ding, Baocai Yin, Xin Yang

+ 备注:ACM Transactions on Multimedia Computing, Communications, and Applications (ACM TOMM)

+ 关键词:Deep Neural Networks, computer vision tasks, Deep Neural, Neural Networks, adversarial

+

+ 点击查看摘要

+ Deep Neural Networks (DNNs) are widely used for computer vision tasks. However, it has been shown that deep models are vulnerable to adversarial attacks, i.e., their performances drop when imperceptible perturbations are made to the original inputs, which may further degrade the following visual tasks or introduce new problems such as data and privacy security. Hence, metrics for evaluating the robustness of deep models against adversarial attacks are desired. However, previous metrics are mainly proposed for evaluating the adversarial robustness of shallow networks on the small-scale datasets. Although the Cross Lipschitz Extreme Value for nEtwork Robustness (CLEVER) metric has been proposed for large-scale datasets (e.g., the ImageNet dataset), it is computationally expensive and its performance relies on a tractable number of samples. In this paper, we propose the Adversarial Converging Time Score (ACTS), an attack-dependent metric that quantifies the adversarial robustness of a DNN on a specific input. Our key observation is that local neighborhoods on a DNN's output surface would have different shapes given different inputs. Hence, given different inputs, it requires different time for converging to an adversarial sample. Based on this geometry meaning, ACTS measures the converging time as an adversarial robustness metric. We validate the effectiveness and generalization of the proposed ACTS metric against different adversarial attacks on the large-scale ImageNet dataset using state-of-the-art deep networks. Extensive experiments show that our ACTS metric is an efficient and effective adversarial metric over the previous CLEVER metric.

+

+

+

+ 31. 标题:Solution for SMART-101 Challenge of ICCV Multi-modal Algorithmic Reasoning Task 2023

+ 编号:[158]

+ 链接:https://arxiv.org/abs/2310.06440

+ 作者:Xiangyu Wu, Yang Yang, Shengdong Xu, Yifeng Wu, Qingguo Chen, Jianfeng Lu

+ 备注:

+ 关键词:Algorithmic Reasoning Task, Multi-modal Algorithmic Reasoning, Reasoning Task, Multi-modal Algorithmic, Algorithmic Reasoning

+

+ 点击查看摘要

+ In this paper, we present our solution to a Multi-modal Algorithmic Reasoning Task: SMART-101 Challenge. Different from the traditional visual question-answering datasets, this challenge evaluates the abstraction, deduction, and generalization abilities of neural networks in solving visuolinguistic puzzles designed specifically for children in the 6-8 age group. We employed a divide-and-conquer approach. At the data level, inspired by the challenge paper, we categorized the whole questions into eight types and utilized the llama-2-chat model to directly generate the type for each question in a zero-shot manner. Additionally, we trained a yolov7 model on the icon45 dataset for object detection and combined it with the OCR method to recognize and locate objects and text within the images. At the model level, we utilized the BLIP-2 model and added eight adapters to the image encoder VIT-G to adaptively extract visual features for different question types. We fed the pre-constructed question templates as input and generated answers using the flan-t5-xxl decoder. Under the puzzle splits configuration, we achieved an accuracy score of 26.5 on the validation set and 24.30 on the private test set.

+

+

+

+ 32. 标题:Skeleton Ground Truth Extraction: Methodology, Annotation Tool and Benchmarks

+ 编号:[159]

+ 链接:https://arxiv.org/abs/2310.06437

+ 作者:Cong Yang, Bipin Indurkhya, John See, Bo Gao, Yan Ke, Zeyd Boukhers, Zhenyu Yang, Marcin Grzegorzek

+ 备注:Accepted for publication in the International Journal of Computer Vision (IJCV)

+ 关键词:Skeleton Ground Truth, Ground Truth, deep learning techniques, Convolutional Neural Networks, Skeleton Ground

+

+ 点击查看摘要

+ Skeleton Ground Truth (GT) is critical to the success of supervised skeleton extraction methods, especially with the popularity of deep learning techniques. Furthermore, we see skeleton GTs used not only for training skeleton detectors with Convolutional Neural Networks (CNN) but also for evaluating skeleton-related pruning and matching algorithms. However, most existing shape and image datasets suffer from the lack of skeleton GT and inconsistency of GT standards. As a result, it is difficult to evaluate and reproduce CNN-based skeleton detectors and algorithms on a fair basis. In this paper, we present a heuristic strategy for object skeleton GT extraction in binary shapes and natural images. Our strategy is built on an extended theory of diagnosticity hypothesis, which enables encoding human-in-the-loop GT extraction based on clues from the target's context, simplicity, and completeness. Using this strategy, we developed a tool, SkeView, to generate skeleton GT of 17 existing shape and image datasets. The GTs are then structurally evaluated with representative methods to build viable baselines for fair comparisons. Experiments demonstrate that GTs generated by our strategy yield promising quality with respect to standard consistency, and also provide a balance between simplicity and completeness.

+

+

+

+ 33. 标题:Retromorphic Testing: A New Approach to the Test Oracle Problem

+ 编号:[163]

+ 链接:https://arxiv.org/abs/2310.06433

+ 作者:Boxi Yu, Qiuyang Mang, Qingshuo Guo, Pinjia He

+ 备注:

+ 关键词:test oracle serves, testing, program, criterion or mechanism, mechanism to assess

+

+ 点击查看摘要

+ A test oracle serves as a criterion or mechanism to assess the correspondence between software output and the anticipated behavior for a given input set. In automated testing, black-box techniques, known for their non-intrusive nature in test oracle construction, are widely used, including notable methodologies like differential testing and metamorphic testing. Inspired by the mathematical concept of inverse function, we present Retromorphic Testing, a novel black-box testing methodology. It leverages an auxiliary program in conjunction with the program under test, which establishes a dual-program structure consisting of a forward program and a backward program. The input data is first processed by the forward program and then its program output is reversed to its original input format using the backward program. In particular, the auxiliary program can operate as either the forward or backward program, leading to different testing modes. The process concludes by examining the relationship between the initial input and the transformed output within the input domain. For example, to test the implementation of the sine function $\sin(x)$, we can employ its inverse function, $\arcsin(x)$, and validate the equation $x = \sin(\arcsin(x)+2k\pi), \forall k \in \mathbb{Z}$. In addition to the high-level concept of Retromorphic Testing, this paper presents its three testing modes with illustrative use cases across diverse programs, including algorithms, traditional software, and AI applications.

+

+

+

+ 34. 标题:Conformal Prediction for Deep Classifier via Label Ranking

+ 编号:[164]

+ 链接:https://arxiv.org/abs/2310.06430

+ 作者:Jianguo Huang, Huajun Xi, Linjun Zhang, Huaxiu Yao, Yue Qiu, Hongxin Wei

+ 备注:

+ 关键词:prediction sets, generates prediction sets, Sorted Adaptive prediction, Conformal prediction, statistical framework

+

+ 点击查看摘要

+ Conformal prediction is a statistical framework that generates prediction sets containing ground-truth labels with a desired coverage guarantee. The predicted probabilities produced by machine learning models are generally miscalibrated, leading to large prediction sets in conformal prediction. In this paper, we empirically and theoretically show that disregarding the probabilities' value will mitigate the undesirable effect of miscalibrated probability values. Then, we propose a novel algorithm named $\textit{Sorted Adaptive prediction sets}$ (SAPS), which discards all the probability values except for the maximum softmax probability. The key idea behind SAPS is to minimize the dependence of the non-conformity score on the probability values while retaining the uncertainty information. In this manner, SAPS can produce sets of small size and communicate instance-wise uncertainty. Theoretically, we provide a finite-sample coverage guarantee of SAPS and show that the expected value of set size from SAPS is always smaller than APS. Extensive experiments validate that SAPS not only lessens the prediction sets but also broadly enhances the conditional coverage rate and adaptation of prediction sets.

+

+

+

+ 35. 标题:AnoDODE: Anomaly Detection with Diffusion ODE

+ 编号:[170]

+ 链接:https://arxiv.org/abs/2310.06420

+ 作者:Xianyao Hu, Congming Jin

+ 备注:11 pages, 5 figures

+ 关键词:identifying atypical data, atypical data samples, process of identifying, identifying atypical, samples that significantly

+

+ 点击查看摘要

+ Anomaly detection is the process of identifying atypical data samples that significantly deviate from the majority of the dataset. In the realm of clinical screening and diagnosis, detecting abnormalities in medical images holds great importance. Typically, clinical practice provides access to a vast collection of normal images, while abnormal images are relatively scarce. We hypothesize that abnormal images and their associated features tend to manifest in low-density regions of the data distribution. Following this assumption, we turn to diffusion ODEs for unsupervised anomaly detection, given their tractability and superior performance in density estimation tasks. More precisely, we propose a new anomaly detection method based on diffusion ODEs by estimating the density of features extracted from multi-scale medical images. Our anomaly scoring mechanism depends on computing the negative log-likelihood of features extracted from medical images at different scales, quantified in bits per dimension. Furthermore, we propose a reconstruction-based anomaly localization suitable for our method. Our proposed method not only identifie anomalies but also provides interpretability at both the image and pixel levels. Through experiments on the BraTS2021 medical dataset, our proposed method outperforms existing methods. These results confirm the effectiveness and robustness of our method.

+

+

+

+ 36. 标题:Boundary Discretization and Reliable Classification Network for Temporal Action Detection

+ 编号:[177]

+ 链接:https://arxiv.org/abs/2310.06403

+ 作者:Zhenying Fang

+ 备注:12 pages

+ 关键词:Boundary Discretization, Temporal action detection, untrimmed videos, action detection aims, aims to recognize

+

+ 点击查看摘要

+ Temporal action detection aims to recognize the action category and determine the starting and ending time of each action instance in untrimmed videos. The mixed methods have achieved remarkable performance by simply merging anchor-based and anchor-free approaches. However, there are still two crucial issues in the mixed framework: (1) Brute-force merging and handcrafted anchors design affect the performance and practical application of the mixed methods. (2) A large number of false positives in action category predictions further impact the detection performance. In this paper, we propose a novel Boundary Discretization and Reliable Classification Network (BDRC-Net) that addresses the above issues by introducing boundary discretization and reliable classification modules. Specifically, the boundary discretization module (BDM) elegantly merges anchor-based and anchor-free approaches in the form of boundary discretization, avoiding the handcrafted anchors design required by traditional mixed methods. Furthermore, the reliable classification module (RCM) predicts reliable action categories to reduce false positives in action category predictions. Extensive experiments conducted on different benchmarks demonstrate that our proposed method achieves favorable performance compared with the state-of-the-art. For example, BDRC-Net hits an average mAP of 68.6% on THUMOS'14, outperforming the previous best by 1.5%. The code will be released at this https URL.

+

+

+

+ 37. 标题:Learning Stackable and Skippable LEGO Bricks for Efficient, Reconfigurable, and Variable-Resolution Diffusion Modeling

+ 编号:[184]

+ 链接:https://arxiv.org/abs/2310.06389

+ 作者:Huangjie Zheng, Zhendong Wang, Jianbo Yuan, Guanghan Ning, Pengcheng He, Quanzeng You, Hongxia Yang, Mingyuan Zhou

+ 备注:

+ 关键词:significant computational costs, generating photo-realistic images, LEGO bricks, significant computational, LEGO

+

+ 点击查看摘要

+ Diffusion models excel at generating photo-realistic images but come with significant computational costs in both training and sampling. While various techniques address these computational challenges, a less-explored issue is designing an efficient and adaptable network backbone for iterative refinement. Current options like U-Net and Vision Transformer often rely on resource-intensive deep networks and lack the flexibility needed for generating images at variable resolutions or with a smaller network than used in training. This study introduces LEGO bricks, which seamlessly integrate Local-feature Enrichment and Global-content Orchestration. These bricks can be stacked to create a test-time reconfigurable diffusion backbone, allowing selective skipping of bricks to reduce sampling costs and generate higher-resolution images than the training data. LEGO bricks enrich local regions with an MLP and transform them using a Transformer block while maintaining a consistent full-resolution image across all bricks. Experimental results demonstrate that LEGO bricks enhance training efficiency, expedite convergence, and facilitate variable-resolution image generation while maintaining strong generative performance. Moreover, LEGO significantly reduces sampling time compared to other methods, establishing it as a valuable enhancement for diffusion models.

+

+

+

+ 38. 标题:3DS-SLAM: A 3D Object Detection based Semantic SLAM towards Dynamic Indoor Environments

+ 编号:[186]

+ 链接:https://arxiv.org/abs/2310.06385

+ 作者:Ghanta Sai Krishna, Kundrapu Supriya, Sabur Baidya

+ 备注:

+ 关键词:camera localization accuracy, Simultaneous Localization, Localization and Mapping, localization accuracy, camera localization

+

+ 点击查看摘要

+ The existence of variable factors within the environment can cause a decline in camera localization accuracy, as it violates the fundamental assumption of a static environment in Simultaneous Localization and Mapping (SLAM) algorithms. Recent semantic SLAM systems towards dynamic environments either rely solely on 2D semantic information, or solely on geometric information, or combine their results in a loosely integrated manner. In this research paper, we introduce 3DS-SLAM, 3D Semantic SLAM, tailored for dynamic scenes with visual 3D object detection. The 3DS-SLAM is a tightly-coupled algorithm resolving both semantic and geometric constraints sequentially. We designed a 3D part-aware hybrid transformer for point cloud-based object detection to identify dynamic objects. Subsequently, we propose a dynamic feature filter based on HDBSCAN clustering to extract objects with significant absolute depth differences. When compared against ORB-SLAM2, 3DS-SLAM exhibits an average improvement of 98.01% across the dynamic sequences of the TUM RGB-D dataset. Furthermore, it surpasses the performance of the other four leading SLAM systems designed for dynamic environments.

+

+

+

+ 39. 标题:Leveraging Diffusion-Based Image Variations for Robust Training on Poisoned Data

+ 编号:[194]

+ 链接:https://arxiv.org/abs/2310.06372

+ 作者:Lukas Struppek, Martin B. Hentschel, Clifton Poth, Dominik Hintersdorf, Kristian Kersting

+ 备注:11 pages, 3 tables, 2 figures

+ 关键词:surreptitiously introduce hidden, introduce hidden functionalities, Backdoor attacks pose, training neural networks, attacks pose

+

+ 点击查看摘要

+ Backdoor attacks pose a serious security threat for training neural networks as they surreptitiously introduce hidden functionalities into a model. Such backdoors remain silent during inference on clean inputs, evading detection due to inconspicuous behavior. However, once a specific trigger pattern appears in the input data, the backdoor activates, causing the model to execute its concealed function. Detecting such poisoned samples within vast datasets is virtually impossible through manual inspection. To address this challenge, we propose a novel approach that enables model training on potentially poisoned datasets by utilizing the power of recent diffusion models. Specifically, we create synthetic variations of all training samples, leveraging the inherent resilience of diffusion models to potential trigger patterns in the data. By combining this generative approach with knowledge distillation, we produce student models that maintain their general performance on the task while exhibiting robust resistance to backdoor triggers.

+

+

+

+ 40. 标题:Advanced Efficient Strategy for Detection of Dark Objects Based on Spiking Network with Multi-Box Detection

+ 编号:[196]

+ 链接:https://arxiv.org/abs/2310.06370

+ 作者:Munawar Ali, Baoqun Yin, Hazrat Bilal, Aakash Kumar, Ali Muhammad, Avinash Rohra

+ 备注:

+ 关键词:deep learning algorithms, shown amazing performance, recognizing darker objects, object detection tasks, largest challenge

+

+ 点击查看摘要

+ Several deep learning algorithms have shown amazing performance for existing object detection tasks, but recognizing darker objects is the largest challenge. Moreover, those techniques struggled to detect or had a slow recognition rate, resulting in significant performance losses. As a result, an improved and accurate detection approach is required to address the above difficulty. The whole study proposes a combination of spiked and normal convolution layers as an energy-efficient and reliable object detector model. The proposed model is split into two sections. The first section is developed as a feature extractor, which utilizes pre-trained VGG16, and the second section of the proposal structure is the combination of spiked and normal Convolutional layers to detect the bounding boxes of images. We drew a pre-trained model for classifying detected objects. With state of the art Python libraries, spike layers can be trained efficiently. The proposed spike convolutional object detector (SCOD) has been evaluated on VOC and Ex-Dark datasets. SCOD reached 66.01% and 41.25% mAP for detecting 20 different objects in the VOC-12 and 12 objects in the Ex-Dark dataset. SCOD uses 14 Giga FLOPS for its forward path calculations. Experimental results indicated superior performance compared to Tiny YOLO, Spike YOLO, YOLO-LITE, Tinier YOLO and Center of loc+Xception based on mAP for the VOC dataset.

+

+

+

+ 41. 标题:CoinSeg: Contrast Inter- and Intra- Class Representations for Incremental Segmentation

+ 编号:[198]

+ 链接:https://arxiv.org/abs/2310.06368

+ 作者:Zekang Zhang, Guangyu Gao, Jianbo Jiao, Chi Harold Liu, Yunchao Wei

+ 备注:Accepted by ICCV 2023

+ 关键词:semantic segmentation aims, Class incremental semantic, incremental semantic segmentation, semantic segmentation, segmentation aims

+

+ 点击查看摘要

+ Class incremental semantic segmentation aims to strike a balance between the model's stability and plasticity by maintaining old knowledge while adapting to new concepts. However, most state-of-the-art methods use the freeze strategy for stability, which compromises the model's this http URL contrast, releasing parameter training for plasticity could lead to the best performance for all categories, but this requires discriminative feature representation.Therefore, we prioritize the model's plasticity and propose the Contrast inter- and intra-class representations for Incremental Segmentation (CoinSeg), which pursues discriminative representations for flexible parameter tuning. Inspired by the Gaussian mixture model that samples from a mixture of Gaussian distributions, CoinSeg emphasizes intra-class diversity with multiple contrastive representation centroids. Specifically, we use mask proposals to identify regions with strong objectness that are likely to be diverse instances/centroids of a category. These mask proposals are then used for contrastive representations to reinforce intra-class diversity. Meanwhile, to avoid bias from intra-class diversity, we also apply category-level pseudo-labels to enhance category-level consistency and inter-category diversity. Additionally, CoinSeg ensures the model's stability and alleviates forgetting through a specific flexible tuning strategy. We validate CoinSeg on Pascal VOC 2012 and ADE20K datasets with multiple incremental scenarios and achieve superior results compared to previous state-of-the-art methods, especially in more challenging and realistic long-term scenarios. Code is available at this https URL.

+

+

+

+ 42. 标题:Fire Detection From Image and Video Using YOLOv5

+ 编号:[207]

+ 链接:https://arxiv.org/abs/2310.06351

+ 作者:Arafat Islam, Md. Imtiaz Habib

+ 备注:6 pages, 6 sections, unpublished paper

+ 关键词:detection deep learning, deep learning algorithm, detection, fire detection, fire detection deep

+

+ 点击查看摘要

+ For the detection of fire-like targets in indoor, outdoor and forest fire images, as well as fire detection under different natural lights, an improved YOLOv5 fire detection deep learning algorithm is proposed. The YOLOv5 detection model expands the feature extraction network from three dimensions, which enhances feature propagation of fire small targets identification, improves network performance, and reduces model parameters. Furthermore, through the promotion of the feature pyramid, the top-performing prediction box is obtained. Fire-YOLOv5 attains excellent results compared to state-of-the-art object detection networks, notably in the detection of small targets of fire and smoke with mAP 90.5% and f1 score 88%. Overall, the Fire-YOLOv5 detection model can effectively deal with the inspection of small fire targets, as well as fire-like and smoke-like objects with F1 score 0.88. When the input image size is 416 x 416 resolution, the average detection time is 0.12 s per frame, which can provide real-time forest fire detection. Moreover, the algorithm proposed in this paper can also be applied to small target detection under other complicated situations. The proposed system shows an improved approach in all fire detection metrics such as precision, recall, and mean average precision.

+

+

+

+ 43. 标题:JointNet: Extending Text-to-Image Diffusion for Dense Distribution Modeling

+ 编号:[209]

+ 链接:https://arxiv.org/abs/2310.06347

+ 作者:Jingyang Zhang, Shiwei Li, Yuanxun Lu, Tian Fang, David McKinnon, Yanghai Tsin, Long Quan, Yao Yao

+ 备注:

+ 关键词:neural network architecture, additional dense modality, architecture for modeling, dense modality branch, RGB branch

+

+ 点击查看摘要

+ We introduce JointNet, a novel neural network architecture for modeling the joint distribution of images and an additional dense modality (e.g., depth maps). JointNet is extended from a pre-trained text-to-image diffusion model, where a copy of the original network is created for the new dense modality branch and is densely connected with the RGB branch. The RGB branch is locked during network fine-tuning, which enables efficient learning of the new modality distribution while maintaining the strong generalization ability of the large-scale pre-trained diffusion model. We demonstrate the effectiveness of JointNet by using RGBD diffusion as an example and through extensive experiments, showcasing its applicability in a variety of applications, including joint RGBD generation, dense depth prediction, depth-conditioned image generation, and coherent tile-based 3D panorama generation.

+

+

+

+ 44. 标题:Filter Pruning For CNN With Enhanced Linear Representation Redundancy

+ 编号:[210]

+ 链接:https://arxiv.org/abs/2310.06344

+ 作者:Bojue Wang, Chunmei Ma, Bin Liu, Nianbo Liu, Jinqi Zhu

+ 备注:

+ 关键词:parallel computing techniques, thriving developed parallel, developed parallel computing, pruning excels non-structured, excels non-structured methods

+

+ 点击查看摘要

+ Structured network pruning excels non-structured methods because they can take advantage of the thriving developed parallel computing techniques. In this paper, we propose a new structured pruning method. Firstly, to create more structured redundancy, we present a data-driven loss function term calculated from the correlation coefficient matrix of different feature maps in the same layer, named CCM-loss. This loss term can encourage the neural network to learn stronger linear representation relations between feature maps during the training from the scratch so that more homogenous parts can be removed later in pruning. CCM-loss provides us with another universal transcendental mathematical tool besides L*-norm regularization, which concentrates on generating zeros, to generate more redundancy but for the different genres. Furthermore, we design a matching channel selection strategy based on principal components analysis to exploit the maximum potential ability of CCM-loss. In our new strategy, we mainly focus on the consistency and integrality of the information flow in the network. Instead of empirically hard-code the retain ratio for each layer, our channel selection strategy can dynamically adjust each layer's retain ratio according to the specific circumstance of a per-trained model to push the prune ratio to the limit. Notably, on the Cifar-10 dataset, our method brings 93.64% accuracy for pruned VGG-16 with only 1.40M parameters and 49.60M FLOPs, the pruned ratios for parameters and FLOPs are 90.6% and 84.2%, respectively. For ResNet-50 trained on the ImageNet dataset, our approach achieves 42.8% and 47.3% storage and computation reductions, respectively, with an accuracy of 76.23%. Our code is available at this https URL.

+

+

+

+ 45. 标题:Local Style Awareness of Font Images

+ 编号:[215]

+ 链接:https://arxiv.org/abs/2310.06337

+ 作者:Daichi Haraguchi, Seiichi Uchida

+ 备注:Accepted at ICDAR WML 2023

+ 关键词:local parts, serifs and curvatures, parts, local, attention

+

+ 点击查看摘要

+ When we compare fonts, we often pay attention to styles of local parts, such as serifs and curvatures. This paper proposes an attention mechanism to find important local parts. The local parts with larger attention are then considered important. The proposed mechanism can be trained in a quasi-self-supervised manner that requires no manual annotation other than knowing that a set of character images is from the same font, such as Helvetica. After confirming that the trained attention mechanism can find style-relevant local parts, we utilize the resulting attention for local style-aware font generation. Specifically, we design a new reconstruction loss function to put more weight on the local parts with larger attention for generating character images with more accurate style realization. This loss function has the merit of applicability to various font generation models. Our experimental results show that the proposed loss function improves the quality of generated character images by several few-shot font generation models.

+

+

+

+ 46. 标题:CrowdRec: 3D Crowd Reconstruction from Single Color Images

+ 编号:[218]

+ 链接:https://arxiv.org/abs/2310.06332