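This blog post presents the daily list of the latest papers fetched from arXiv, grouped into categories such as natural language processing, information retrieval, and computer vision.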

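Statistics

A total of 476 papers were updated today, including:

- Natural Language Processing: 83 papers

- Information Retrieval: 10 papers

- Computer Vision: 96 papers

Natural Language Processing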

1. 【2410.09047】Unraveling and Mitigating Safety Alignment Degradation of Vision-Language Models

Link: https://arxiv.org/abs/2410.09047

Authors: Qin Liu, Chao Shang, Ling Liu, Nikolaos Pappas, Jie Ma, Neha Anna John, Srikanth Doss, Lluis Marquez, Miguel Ballesteros, Yassine Benajiba

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

Keywords: vision module compared, safety alignment, Vision-Language Models, safety alignment ability, safety alignment degradation

Comments: Preprint

Abstract: The safety alignment ability of Vision-Language Models (VLMs) is prone to be degraded by the integration of the vision module compared to its LLM backbone. We investigate this phenomenon, dubbed "safety alignment degradation" in this paper, and show that the challenge arises from the representation gap that emerges when introducing vision modality to VLMs. In particular, we show that the representations of multi-modal inputs shift away from those of text-only inputs, which represent the distribution that the LLM backbone is optimized for. At the same time, the safety alignment capabilities, initially developed within the textual embedding space, do not successfully transfer to this new multi-modal representation space. To reduce safety alignment degradation, we introduce Cross-Modality Representation Manipulation (CMRM), an inference time representation intervention method for recovering the safety alignment ability that is inherent in the LLM backbone of VLMs, while simultaneously preserving the functional capabilities of VLMs. The empirical results show that our framework significantly recovers the alignment ability that is inherited from the LLM backbone with minimal impact on the fluency and linguistic capabilities of pre-trained VLMs even without additional training. Specifically, the unsafe rate of LLaVA-7B on multi-modal input can be reduced from 61.53% to as low as 3.15% with only inference-time intervention.

WARNING: This paper contains examples of toxic or harmful language.

Cite as: arXiv:2410.09047 [cs.CL] (or arXiv:2410.09047v1 [cs.CL] for this version)

DOI: https://doi.org/10.48550/arXiv.2410.09047 (arXiv-issued DOI via DataCite, pending registration)
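
The abstract describes CMRM only at a high level. The sketch below illustrates the general idea of an inference-time representation intervention under one simplifying assumption: the correction is a plain mean shift. It first estimates the average offset between multi-modal and text-only hidden states on a small calibration set. It then subtracts a scaled copy of that offset from a multi-modal hidden state. The mean-shift rule, the `alpha` scale, and all function names are illustrative assumptions, not the paper's exact procedure.

```python
from typing import List

Vector = List[float]


def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def shift_direction(multimodal: List[Vector], text_only: List[Vector]) -> Vector:
    """Hypothetical calibration step: average offset of multi-modal states from text-only states."""
    mm_mean = mean_vector(multimodal)
    txt_mean = mean_vector(text_only)
    return [m - t for m, t in zip(mm_mean, txt_mean)]


def intervene(hidden: Vector, direction: Vector, alpha: float = 1.0) -> Vector:
    """Move a multi-modal hidden state back toward the text-only region (no training involved)."""
    return [h - alpha * d for h, d in zip(hidden, direction)]


if __name__ == "__main__":
    text_states = [[0.0, 1.0], [0.2, 0.8]]    # toy text-only hidden states
    image_states = [[1.0, 1.0], [1.2, 0.8]]   # toy image+text hidden states
    delta = shift_direction(image_states, text_states)
    print(intervene([1.1, 0.9], delta, alpha=1.0))
```

Because the shift is computed once from calibration data and applied only at inference time, no model weights change. The `alpha` knob trades safety recovery against drift from the VLM's original behavior.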

2. 【2410.09045】MiRAGeNews: Multimodal Realistic AI-Generated News Detection

Link: https://arxiv.org/abs/2410.09045

Authors: Runsheng Huang, Liam Dugan, Yue Yang, Chris Callison-Burch

Categories: Computer Vision and Pattern Recognition (cs.CV); Computation and Language (cs.CL)

Keywords: inflammatory or misleading, recent years, proliferation of inflammatory, increasingly common, common in recent

Comments: EMNLP 2024 Findings

Abstract: The proliferation of inflammatory or misleading "fake" news content has become increasingly common in recent years. Simultaneously, it has become easier than ever to use AI tools to generate photorealistic images depicting any scene imaginable. Combining these two -- AI-generated fake news content -- is particularly potent and dangerous. To combat the spread of AI-generated fake news, we propose the MiRAGeNews Dataset, a dataset of 12,500 high-quality real and AI-generated image-caption pairs from state-of-the-art generators. We find that our dataset poses a significant challenge to humans (60% F-1) and state-of-the-art multi-modal LLMs (24% F-1). Using our dataset we train a multi-modal detector (MiRAGe) that improves by +5.1% F-1 over state-of-the-art baselines on image-caption pairs from out-of-domain image generators and news publishers. We release our code and data to aid future work on detecting AI-generated content.

3. 【2410.09040】AttnGCG: Enhancing Jailbreaking Attacks on LLMs with Attention Manipulation

Link: https://arxiv.org/abs/2410.09040

Authors: Zijun Wang, Haoqin Tu, Jieru Mei, Bingchen Zhao, Yisen Wang, Cihang Xie

Categories: Computation and Language (cs.CL)

Keywords: Greedy Coordinate Gradient, transformer-based Large Language, optimization-based Greedy Coordinate, Large Language Models, Coordinate Gradient

Abstract: This paper studies the vulnerabilities of transformer-based Large Language Models (LLMs) to jailbreaking attacks, focusing specifically on the optimization-based Greedy Coordinate Gradient (GCG) strategy. We first observe a positive correlation between the effectiveness of attacks and the internal behaviors of the models. For instance, attacks tend to be less effective when models pay more attention to system prompts designed to ensure LLM safety alignment. Building on this discovery, we introduce an enhanced method that manipulates models' attention scores to facilitate LLM jailbreaking, which we term AttnGCG. Empirically, AttnGCG shows consistent improvements in attack efficacy across diverse LLMs, achieving an average increase of ~7% in the Llama-2 series and ~10% in the Gemma series. Our strategy also demonstrates robust attack transferability against both unseen harmful goals and black-box LLMs like GPT-3.5 and GPT-4. Moreover, we note our attention-score visualization is more interpretable, allowing us to gain better insights into how our targeted attention manipulation facilitates more effective jailbreaking. We release the code at this https URL.

4. 【2410.09038】SimpleStrat: Diversifying Language Model Generation with Stratification

Link: https://arxiv.org/abs/2410.09038

Authors: Justin Wong, Yury Orlovskiy, Michael Luo, Sanjit A. Seshia, Joseph E. Gonzalez

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: Generating diverse responses, Generating diverse, synthetic data generation, search and synthetic, crucial for applications

Abstract: Generating diverse responses from large language models (LLMs) is crucial for applications such as planning/search and synthetic data generation, where diversity provides distinct answers across generations. Prior approaches rely on increasing temperature to increase diversity. However, contrary to popular belief, we show not only does this approach produce lower quality individual generations as temperature increases, but it depends on the model's next-token probabilities being similar to the true distribution of answers. We propose SimpleStrat, an alternative approach that uses the language model itself to partition the space into strata. At inference, a random stratum is selected and a sample drawn from within the stratum. To measure diversity, we introduce CoverageQA, a dataset of underspecified questions with multiple equally plausible answers, and assess diversity by measuring KL Divergence between the output distribution and uniform distribution over valid ground truth answers. As computing probability per response/solution for proprietary models is infeasible, we measure recall on ground truth solutions. Our evaluation shows using SimpleStrat achieves higher recall by 0.05 compared to GPT-4o and 0.36 average reduction in KL Divergence compared to Llama 3.
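
Two parts of this abstract are easy to make concrete. The first is sampling by picking a stratum uniformly at random and then drawing within it. The second is scoring diversity as the KL divergence between the empirical answer distribution and a uniform distribution over valid answers.

The sketch below rests on several assumptions:
- The strata are already given as lists of answers. In SimpleStrat, the LLM itself proposes the partition.
- The KL direction is KL(P_empirical || Uniform). This direction stays finite when some valid answers are never produced.
- The helper names are hypothetical.

```python
import math
import random
from collections import Counter
from typing import Dict, List, Sequence


def stratified_sample(strata: Dict[str, List[str]], rng: random.Random) -> str:
    """Pick a stratum uniformly at random, then draw one answer from inside it."""
    stratum = rng.choice(sorted(strata))
    return rng.choice(strata[stratum])


def kl_to_uniform(samples: Sequence[str], valid_answers: Sequence[str]) -> float:
    """KL(P_empirical || Uniform(valid_answers)); samples outside the valid set are ignored."""
    valid = set(valid_answers)
    counts = Counter(s for s in samples if s in valid)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no valid samples")
    u = 1.0 / len(valid)
    return sum((c / total) * math.log((c / total) / u) for c in counts.values())


if __name__ == "__main__":
    rng = random.Random(0)
    strata = {"north": ["Oslo", "Helsinki"], "south": ["Rome", "Athens"]}  # toy strata
    draws = [stratified_sample(strata, rng) for _ in range(1000)]
    print(kl_to_uniform(draws, ["Oslo", "Helsinki", "Rome", "Athens"]))
```

A perfectly uniform output gives 0, and collapsing onto a single one of k valid answers gives log k. The lower the score, the better the coverage.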

+ 5. 【2410.09037】Mentor-KD: Making Small Language Models Better Multi-step Reasoners

+ 链接:https://arxiv.org/abs/2410.09037

+ 作者:Hojae Lee,Junho Kim,SangKeun Lee

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:Large Language Models, displayed remarkable performances, Large Language, Language Models, displayed remarkable

+ 备注: EMNLP 2024

+

+ 点击查看摘要

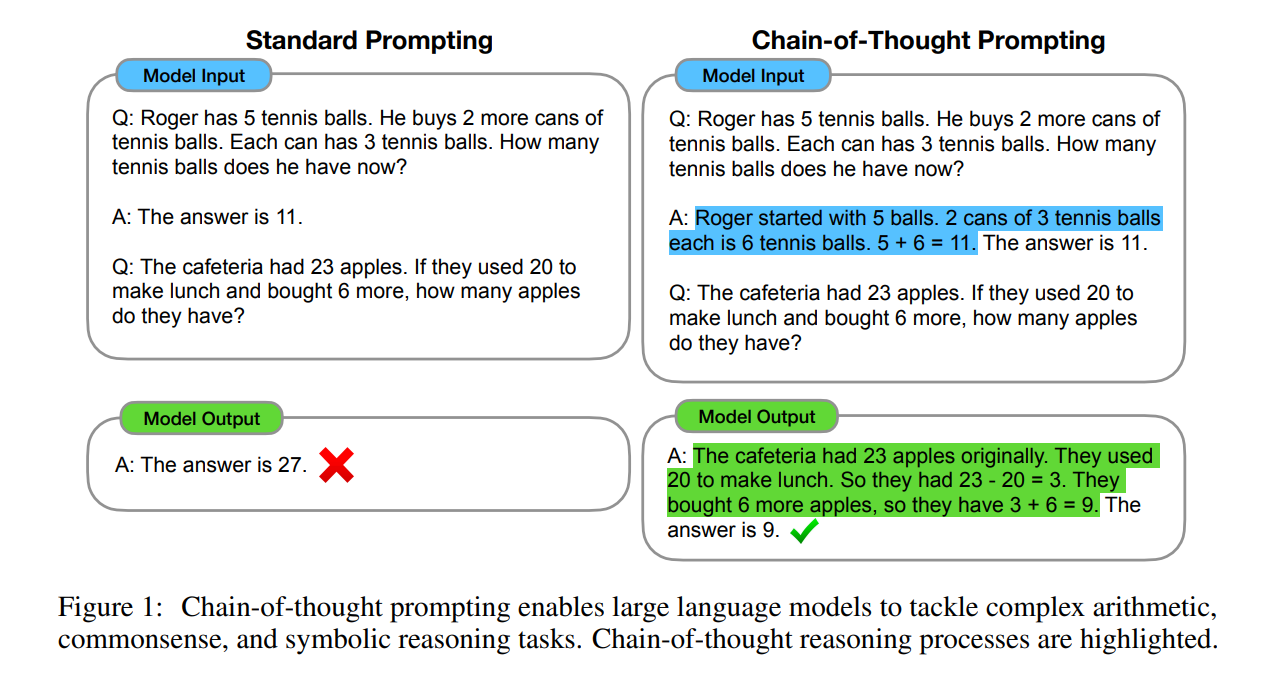

Abstract: Large Language Models (LLMs) have displayed remarkable performances across various complex tasks by leveraging Chain-of-Thought (CoT) prompting. Recently, studies have proposed a Knowledge Distillation (KD) approach, reasoning distillation, which transfers such reasoning ability of LLMs through fine-tuning language models on multi-step rationales generated by LLM teachers. However, they have inadequately considered two challenges regarding insufficient distillation sets from the LLM teacher model, in terms of 1) data quality and 2) soft label provision. In this paper, we propose Mentor-KD, which effectively distills the multi-step reasoning capability of LLMs to smaller LMs while addressing the aforementioned challenges. Specifically, we exploit a mentor, an intermediate-sized task-specific fine-tuned model, to augment additional CoT annotations and provide soft labels for the student model during reasoning distillation. We conduct extensive experiments and confirm Mentor-KD's effectiveness across various models and complex reasoning tasks.
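
Soft labels like those the mentor provides are usually consumed through a temperature-scaled distillation loss. The sketch below shows the standard Hinton-style form: the KL divergence between the softened mentor and student distributions, multiplied by T². Treating this as Mentor-KD's exact objective is an assumption, and the logits are toy values.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], mentor_logits: List[float],
                      temperature: float = 2.0) -> float:
    """T^2 * KL(mentor_soft || student_soft) for one label distribution."""
    p = softmax(mentor_logits, temperature)   # soft labels from the mentor
    q = softmax(student_logits, temperature)  # student's softened prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    print(distillation_loss([2.0, 0.5, -1.0], [3.0, 0.0, -2.0]))
```

In practice this term is typically added to the ordinary cross-entropy on the hard CoT targets. The T² factor keeps gradient magnitudes comparable as the temperature changes.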

6. 【2410.09034】PEAR: A Robust and Flexible Automation Framework for Ptychography Enabled by Multiple Large Language Model Agents

Link: https://arxiv.org/abs/2410.09034

Authors: Xiangyu Yin, Chuqiao Shi, Yimo Han, Yi Jiang

Categories: Computational Engineering, Finance, and Science (cs.CE); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Multiagent Systems (cs.MA)

Keywords: advanced computational imaging, computational imaging technique, technique in X-ray, X-ray and electron, electron microscopy

Comments: 18 pages, 5 figures, technical preview report

Abstract: Ptychography is an advanced computational imaging technique in X-ray and electron microscopy. It has been widely adopted across scientific research fields, including physics, chemistry, biology, and materials science, as well as in industrial applications such as semiconductor characterization. In practice, obtaining high-quality ptychographic images requires simultaneous optimization of numerous experimental and algorithmic parameters. Traditionally, parameter selection often relies on trial and error, leading to low-throughput workflows and potential human bias. In this work, we develop the "Ptychographic Experiment and Analysis Robot" (PEAR), a framework that leverages large language models (LLMs) to automate data analysis in ptychography. To ensure high robustness and accuracy, PEAR employs multiple LLM agents for tasks including knowledge retrieval, code generation, parameter recommendation, and image reasoning. Our study demonstrates that PEAR's multi-agent design significantly improves the workflow success rate, even with smaller open-weight models such as LLaMA 3.1 8B. PEAR also supports various automation levels and is designed to work with customized local knowledge bases, ensuring flexibility and adaptability across different research environments.

7. 【2410.09024】AgentHarm: A Benchmark for Measuring Harmfulness of LLM Agents

Link: https://arxiv.org/abs/2410.09024

Authors: Maksym Andriushchenko, Alexandra Souly, Mateusz Dziemian, Derek Duenas, Maxwell Lin, Justin Wang, Dan Hendrycks, Andy Zou, Zico Kolter, Matt Fredrikson, Eric Winsor, Jerome Wynne, Yarin Gal, Xander Davies

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Keywords: users design prompts, circumvent safety measures, users design, design prompts, prompts to circumvent

Abstract: The robustness of LLMs to jailbreak attacks, where users design prompts to circumvent safety measures and misuse model capabilities, has been studied primarily for LLMs acting as simple chatbots. Meanwhile, LLM agents -- which use external tools and can execute multi-stage tasks -- may pose a greater risk if misused, but their robustness remains underexplored. To facilitate research on LLM agent misuse, we propose a new benchmark called AgentHarm. The benchmark includes a diverse set of 110 explicitly malicious agent tasks (440 with augmentations), covering 11 harm categories including fraud, cybercrime, and harassment. In addition to measuring whether models refuse harmful agentic requests, scoring well on AgentHarm requires jailbroken agents to maintain their capabilities following an attack to complete a multi-step task. We evaluate a range of leading LLMs, and find (1) leading LLMs are surprisingly compliant with malicious agent requests without jailbreaking, (2) simple universal jailbreak templates can be adapted to effectively jailbreak agents, and (3) these jailbreaks enable coherent and malicious multi-step agent behavior and retain model capabilities. We publicly release AgentHarm to enable simple and reliable evaluation of attacks and defenses for LLM-based agents. We publicly release the benchmark at this https URL.

8. 【2410.09019】MedMobile: A mobile-sized language model with expert-level clinical capabilities

Link: https://arxiv.org/abs/2410.09019

Authors: Krithik Vishwanath, Jaden Stryker, Anton Alaykin, Daniel Alexander Alber, Eric Karl Oermann

Categories: Computation and Language (cs.CL)

Keywords: demonstrated expert-level reasoning, Language models, abilities in medicine, demonstrated expert-level, expert-level reasoning

Comments: 13 pages, 5 figures (2 main, 3 supplementary)

Abstract: Language models (LMs) have demonstrated expert-level reasoning and recall abilities in medicine. However, computational costs and privacy concerns are mounting barriers to wide-scale implementation. We introduce a parsimonious adaptation of phi-3-mini, MedMobile, a 3.8 billion parameter LM capable of running on a mobile device, for medical applications. We demonstrate that MedMobile scores 75.7% on the MedQA (USMLE), surpassing the passing mark for physicians (~60%), and approaching the scores of models 100 times its size. We subsequently perform a careful set of ablations, and demonstrate that chain of thought, ensembling, and fine-tuning lead to the greatest performance gains, while unexpectedly retrieval augmented generation fails to demonstrate significant improvements.
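
The abstract credits chain of thought and ensembling for much of the gain but does not spell out the ensembling rule. One common choice for multiple-choice benchmarks such as MedQA is a majority vote over the final answers of several independently sampled chain-of-thought runs. The sketch below assumes that rule, plus a hypothetical tie-break in which the earliest-seen answer wins.

```python
from collections import Counter
from typing import List


def majority_vote(answers: List[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one answer")
    counts = Counter(answers)
    best = max(counts.values())
    for answer in answers:  # first-seen order breaks ties deterministically
        if counts[answer] == best:
            return answer
    raise AssertionError("unreachable")


if __name__ == "__main__":
    # Hypothetical final option letters extracted from five chain-of-thought samples.
    print(majority_vote(["B", "C", "B", "A", "B"]))  # → B
```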

9. 【2410.09016】Parameter-Efficient Fine-Tuning of State Space Models

Link: https://arxiv.org/abs/2410.09016

Authors: Kevin Galim, Wonjun Kang, Yuchen Zeng, Hyung Il Koo, Kangwook Lee

Categories: Machine Learning (cs.LG); Computation and Language (cs.CL)

Keywords: Deep State Space, State Space Models, Deep State, Space Models, State Space

Comments: Code is available at [this https URL](https://github.com/furiosa-ai/ssm-peft)

Abstract: Deep State Space Models (SSMs), such as Mamba (Gu & Dao, 2024), have emerged as powerful tools for language modeling, offering high performance with efficient inference and linear scaling in sequence length. However, the application of parameter-efficient fine-tuning (PEFT) methods to SSM-based models remains largely unexplored. This paper aims to systematically study two key questions: (i) How do existing PEFT methods perform on SSM-based models? (ii) Which modules are most effective for fine-tuning? We conduct an empirical benchmark of four basic PEFT methods on SSM-based models. Our findings reveal that prompt-based methods (e.g., prefix-tuning) are no longer effective, an empirical result further supported by theoretical analysis. In contrast, LoRA remains effective for SSM-based models. We further investigate the optimal application of LoRA within these models, demonstrating both theoretically and experimentally that applying LoRA to linear projection matrices without modifying SSM modules yields the best results, as LoRA is not effective at tuning SSM modules. To further improve performance, we introduce LoRA with Selective Dimension tuning (SDLoRA), which selectively updates certain channels and states on SSM modules while applying LoRA to linear projection matrices. Extensive experimental results show that this approach outperforms standard LoRA.
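
The study finds LoRA effective on the linear projection matrices of SSM-based models. LoRA leaves the frozen weight W untouched and learns a low-rank update, so the adapted layer computes y = Wx + (α/r)·B(Ax). Below is a minimal pure-Python sketch of that forward pass. The shapes and values are toy assumptions, and SDLoRA's selective channel/state updates on the SSM modules are not shown.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """y = W x + (alpha / r) * B (A x), with W (d_out x d_in) frozen.

    A is r x d_in and B is d_out x r; only A and B would be trained.
    """
    r = len(a)
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    return [y0 + (alpha / r) * dy for y0, dy in zip(base, update)]


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]   # frozen projection (identity for clarity)
    A = [[1.0, 1.0]]               # rank r = 1
    B = [[0.0], [0.0]]             # usual init: B = 0, so training starts from the base model
    print(lora_forward(W, A, B, [2.0, 3.0], alpha=1.0))  # → [2.0, 3.0]
```

Only r·(d_in + d_out) extra parameters are trained per adapted matrix. That is why LoRA on the projection layers stays cheap even for large models.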

10. 【2410.09013】The Impact of Visual Information in Chinese Characters: Evaluating Large Models' Ability to Recognize and Utilize Radicals

Link: https://arxiv.org/abs/2410.09013

Authors: Xiaofeng Wu, Karl Stratos, Wei Xu

Categories: Computation and Language (cs.CL)

Keywords: glyphic writing system, Chinese incorporates information-rich, incorporates information-rich visual, meaning or pronunciation, information-rich visual features

Abstract: The glyphic writing system of Chinese incorporates information-rich visual features in each character, such as radicals that provide hints about meaning or pronunciation. However, there has been no investigation into whether contemporary Large Language Models (LLMs) and Vision-Language Models (VLMs) can harness these sub-character features in Chinese through prompting. In this study, we establish a benchmark to evaluate LLMs' and VLMs' understanding of visual elements in Chinese characters, including radicals, composition structures, strokes, and stroke counts. Our results reveal that models surprisingly exhibit some, but still limited, knowledge of the visual information, regardless of whether images of characters are provided. To incite models' ability to use radicals, we further experiment with incorporating radicals into the prompts for Chinese language understanding tasks. We observe consistent improvement in Part-Of-Speech tagging when providing additional information about radicals, suggesting the potential to enhance CLP by integrating sub-character information.

11. 【2410.09008】SuperCorrect: Supervising and Correcting Language Models with Error-Driven Insights

Link: https://arxiv.org/abs/2410.09008

Authors: Ling Yang, Zhaochen Yu, Tianjun Zhang, Minkai Xu, Joseph E. Gonzalez, Bin Cui, Shuicheng Yan

Categories: Computation and Language (cs.CL)

Keywords: shown significant improvements, Large language models, student model, LLaMA have shown, shown significant

Comments: Project: [this https URL](https://github.com/YangLing0818/SuperCorrect-llm)

Abstract: Large language models (LLMs) like GPT-4, PaLM, and LLaMA have shown significant improvements in various reasoning tasks. However, smaller models such as Llama-3-8B and DeepSeekMath-Base still struggle with complex mathematical reasoning because they fail to effectively identify and correct reasoning errors. Recent reflection-based methods aim to address these issues by enabling self-reflection and self-correction, but they still face challenges in independently detecting errors in their reasoning steps. To overcome these limitations, we propose SuperCorrect, a novel two-stage framework that uses a large teacher model to supervise and correct both the reasoning and reflection processes of a smaller student model. In the first stage, we extract hierarchical high-level and detailed thought templates from the teacher model to guide the student model in eliciting more fine-grained reasoning thoughts. In the second stage, we introduce cross-model collaborative direct preference optimization (DPO) to enhance the self-correction abilities of the student model by following the teacher's correction traces during training. This cross-model DPO approach teaches the student model to effectively locate and resolve erroneous thoughts with error-driven insights from the teacher model, breaking the bottleneck of its thoughts and acquiring new skills and knowledge to tackle challenging problems. Extensive experiments consistently demonstrate our superiority over previous methods. Notably, our SuperCorrect-7B model significantly surpasses powerful DeepSeekMath-7B by 7.8%/5.3% and Qwen2.5-Math-7B by 15.1%/6.3% on MATH/GSM8K benchmarks, achieving new SOTA performance among all 7B models. Code: this https URL
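
SuperCorrect's second stage builds on direct preference optimization. For reference, the standard DPO loss for one preference pair is

−log σ(β[(log π_θ(y_w|x) − log π_ref(y_w|x)) − (log π_θ(y_l|x) − log π_ref(y_l|x))]).

The sketch below computes this loss from summed sequence log-probabilities. How SuperCorrect builds its cross-model pairs is an assumption here: the teacher-corrected trace is taken as "chosen" and the student's erroneous trace as "rejected". The numbers are toy values.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one preference pair.

    Each argument is the summed log-probability of a full response under the
    trainable policy or the frozen reference model.
    """
    chosen_ratio = policy_chosen - ref_chosen        # log(pi / pi_ref) for the preferred trace
    rejected_ratio = policy_rejected - ref_rejected  # log(pi / pi_ref) for the dispreferred trace
    z = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(z)) = softplus(-z), written in a numerically stable form
    t = -z
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))


if __name__ == "__main__":
    # Toy values: chosen = teacher-corrected trace, rejected = student's erroneous trace (assumed).
    print(dpo_loss(-12.0, -15.0, -13.0, -14.0, beta=0.1))
```

When the policy matches the reference on both traces, the loss is log 2. It falls toward 0 as the policy raises the corrected trace's likelihood relative to the erroneous one.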

12. 【2410.08996】Hypothesis-only Biases in Large Language Model-Elicited Natural Language Inference

Link: https://arxiv.org/abs/2410.08996

Authors: Grace Proebsting, Adam Poliak

Categories: Computation and Language (cs.CL)

Keywords: Natural Language Inference, write Natural Language, Language Inference, Natural Language, replacing crowdsource workers

Abstract: We test whether replacing crowdsource workers with LLMs to write Natural Language Inference (NLI) hypotheses similarly results in annotation artifacts. We recreate a portion of the Stanford NLI corpus using GPT-4, Llama-2 and Mistral 7B, and train hypothesis-only classifiers to determine whether LLM-elicited hypotheses contain annotation artifacts. On our LLM-elicited NLI datasets, BERT-based hypothesis-only classifiers achieve between 86-96% accuracy, indicating these datasets contain hypothesis-only artifacts. We also find frequent "give-aways" in LLM-generated hypotheses, e.g. the phrase "swimming in a pool" appears in more than 10,000 contradictions generated by GPT-4. Our analysis provides empirical evidence that well-attested biases in NLI can persist in LLM-generated data.
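
A hypothesis-only artifact means the label can be predicted without ever looking at the premise. The paper detects this with BERT-based classifiers. The sketch below uses a much cruder probe to surface candidate "give-away" phrases. It counts how often each n-gram appears in hypotheses of each label, then flags n-grams that are both frequent and concentrated in one label. This probe is an illustrative assumption, not the authors' method, and the thresholds and toy data are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple


def ngrams(text: str, n: int) -> Iterable[str]:
    """Lower-cased whitespace n-grams of a hypothesis."""
    tokens = text.lower().split()
    return (" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def giveaways(pairs: List[Tuple[str, str]], n: int = 3, min_count: int = 2,
              min_purity: float = 0.9) -> Dict[str, str]:
    """Map each frequent n-gram whose occurrences mostly share one label to that label."""
    by_gram: Dict[str, Counter] = defaultdict(Counter)
    for hypothesis, label in pairs:
        for gram in set(ngrams(hypothesis, n)):  # count each n-gram once per hypothesis
            by_gram[gram][label] += 1
    flagged = {}
    for gram, labels in by_gram.items():
        total = sum(labels.values())
        label, top = labels.most_common(1)[0]
        if total >= min_count and top / total >= min_purity:
            flagged[gram] = label
    return flagged


if __name__ == "__main__":
    data = [
        ("A man is swimming in a pool", "contradiction"),
        ("The girl is swimming in a pool", "contradiction"),
        ("A dog runs outside", "entailment"),
    ]
    print(giveaways(data, n=3))
```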

13. 【2410.08991】Science is Exploration: Computational Frontiers for Conceptual Metaphor Theory

Link: https://arxiv.org/abs/2410.08991

Authors: Rebecca M. M. Hicke, Ross Deans Kristensen-McLachlan

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Keywords: conceptual metaphors, Large Language Models, Metaphors, language, natural language

Comments: Accepted to the 2024 Computational Humanities Research Conference (CHR)

Abstract: Metaphors are everywhere. They appear extensively across all domains of natural language, from the most sophisticated poetry to seemingly dry academic prose. A significant body of research in the cognitive science of language argues for the existence of conceptual metaphors, the systematic structuring of one domain of experience in the language of another. Conceptual metaphors are not simply rhetorical flourishes but are crucial evidence of the role of analogical reasoning in human cognition. In this paper, we ask whether Large Language Models (LLMs) can accurately identify and explain the presence of such conceptual metaphors in natural language data. Using a novel prompting technique based on metaphor annotation guidelines, we demonstrate that LLMs are a promising tool for large-scale computational research on conceptual metaphors. Further, we show that LLMs are able to apply procedural guidelines designed for human annotators, displaying a surprising depth of linguistic knowledge.

14. 【2410.08985】Towards Trustworthy Knowledge Graph Reasoning: An Uncertainty Aware Perspective

Link: https://arxiv.org/abs/2410.08985

Authors: Bo Ni, Yu Wang, Lu Cheng, Erik Blasch, Tyler Derr

Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Keywords: Large Language Models, coupled with Large, KG-based retrieval-augmented frameworks, Large Language, language model components

Abstract: Recently, Knowledge Graphs (KGs) have been successfully coupled with Large Language Models (LLMs) to mitigate their hallucinations and enhance their reasoning capability, such as in KG-based retrieval-augmented frameworks. However, current KG-LLM frameworks lack rigorous uncertainty estimation, limiting their reliable deployment in high-stakes applications. Directly incorporating uncertainty quantification into KG-LLM frameworks presents challenges due to their complex architectures and the intricate interactions between the knowledge graph and language model components. To address this gap, we propose a new trustworthy KG-LLM framework, Uncertainty Aware Knowledge-Graph Reasoning (UAG), which incorporates uncertainty quantification into the KG-LLM framework. We design an uncertainty-aware multi-step reasoning framework that leverages conformal prediction to provide a theoretical guarantee on the prediction set. To manage the error rate of the multi-step process, we additionally introduce an error rate control module to adjust the error rate within the individual components. Extensive experiments show that our proposed UAG can achieve any pre-defined coverage rate while reducing the prediction set/interval size by 40% on average over the baselines.
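
UAG's coverage guarantee rests on conformal prediction. The sketch below shows the basic split-conformal recipe for a single-step predictor. UAG additionally distributes the error budget across its multi-step pipeline through an error rate control module, which is not shown.

The recipe has two steps:
1. Calibrate a threshold: take the ⌈(n+1)(1−α)⌉-th smallest nonconformity score on held-out data.
2. At test time, return every candidate whose score falls at or below that threshold.

Under exchangeability, this yields coverage of at least 1 − α. Using 1 minus the model's probability as the nonconformity score, and the toy numbers, are assumptions.

```python
import math
from typing import Dict, List


def conformal_threshold(cal_scores: List[float], alpha: float) -> float:
    """Split-conformal quantile: the ceil((n + 1)(1 - alpha))-th smallest calibration score."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points: include every candidate
    return sorted(cal_scores)[k - 1]


def prediction_set(candidate_scores: Dict[str, float], threshold: float) -> List[str]:
    """All candidates whose nonconformity score does not exceed the threshold."""
    return sorted(c for c, s in candidate_scores.items() if s <= threshold)


if __name__ == "__main__":
    # Nonconformity = 1 - model probability of the true answer on calibration questions.
    calibration = [0.05, 0.10, 0.15, 0.20, 0.30, 0.40, 0.50, 0.60, 0.70, 0.80]
    q = conformal_threshold(calibration, alpha=0.2)
    print(q, prediction_set({"Paris": 0.1, "Lyon": 0.65, "Nice": 0.9}, q))
```

Smaller α means a higher threshold and larger sets. The paper's reported gain is producing smaller sets at the same target coverage.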

15. 【2410.08974】UniGlyph: A Seven-Segment Script for Universal Language Representation

Link: https://arxiv.org/abs/2410.08974

Authors: G. V. Bency Sherin, A. Abijesh Euphrine, A. Lenora Moreen, L. Arun Jose

Categories: Computation and Language (cs.CL); Human-Computer Interaction (cs.HC); Symbolic Computation (cs.SC); Sound (cs.SD); Audio and Speech Processing (eess.AS)

Keywords: designed to create, derived from seven-segment, UniGlyph, International Phonetic Alphabet, phonetic

Comments: This submission includes 23 pages and tables. No external funding has been received for this research. Acknowledgments to Jeseentha V. for contributions to the phonetic study

Abstract: UniGlyph is a constructed language (conlang) designed to create a universal transliteration system using a script derived from seven-segment characters. The goal of UniGlyph is to facilitate cross-language communication by offering a flexible and consistent script that can represent a wide range of phonetic sounds. This paper explores the design of UniGlyph, detailing its script structure, phonetic mapping, and transliteration rules. The system addresses imperfections in the International Phonetic Alphabet (IPA) and traditional character sets by providing a compact, versatile method to represent phonetic diversity across languages. With pitch and length markers, UniGlyph ensures accurate phonetic representation while maintaining a small character set. Applications of UniGlyph include artificial intelligence integrations, such as natural language processing and multilingual speech recognition, enhancing communication across different languages. Future expansions are discussed, including the addition of animal phonetic sounds, where unique scripts are assigned to different species, broadening the scope of UniGlyph beyond human communication. This study presents the challenges and solutions in developing such a universal script, demonstrating the potential of UniGlyph to bridge linguistic gaps in cross-language communication, educational phonetics, and AI-driven applications.

16. 【2410.08971】Extra Global Attention Designation Using Keyword Detection in Sparse Transformer Architectures

Link: https://arxiv.org/abs/2410.08971

Authors: Evan Lucas, Dylan Kangas, Timothy C Havens

Categories: Computation and Language (cs.CL)

Keywords: Longformer Encoder-Decoder, sparse transformer architecture, extension to Longformer, popular sparse transformer, propose an extension

Abstract: In this paper, we propose an extension to Longformer Encoder-Decoder, a popular sparse transformer architecture. One common challenge with sparse transformers is that they can struggle with encoding of long range context, such as connections between topics discussed at the beginning and end of a document. A method to selectively increase global attention is proposed and demonstrated for abstractive summarization tasks on several benchmark data sets. By prefixing the transcript with additional keywords and encoding global attention on these keywords, improvement in zero-shot, few-shot, and fine-tuned cases is demonstrated for some benchmark data sets.
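
The mechanism in this abstract amounts to two steps. First, prepend the detected keywords to the transcript. Second, mark those keyword positions for global attention.

A minimal sketch on plain token lists follows, built on these assumptions:
- A whitespace tokenizer.
- `<s>`/`</s>` boundary markers.
- The first token also gets global attention.

In Hugging Face Transformers, the resulting 0/1 list would play the role of the `global_attention_mask` input accepted by Longformer/LED models.

```python
from typing import List, Tuple


def build_inputs(keywords: List[str], transcript: str) -> Tuple[List[str], List[int]]:
    """Prefix keywords to the transcript and flag them for global attention.

    Returns (tokens, global_mask) where global_mask[i] == 1 means token i
    attends to, and is attended by, every other token.
    """
    kw_tokens = [tok for kw in keywords for tok in kw.split()]
    body = transcript.split()                      # toy whitespace tokenizer
    tokens = ["<s>"] + kw_tokens + ["</s>"] + body
    # First token plus every keyword token get global attention; the rest stay local.
    global_mask = [1] + [1] * len(kw_tokens) + [0] + [0] * len(body)
    return tokens, global_mask


if __name__ == "__main__":
    toks, mask = build_inputs(["budget", "deadline"], "we discussed the budget early on")
    for t, m in zip(toks, mask):
        print(f"{m} {t}")
```

Because only the short keyword prefix is global, the attention cost stays close to Longformer's linear sliding-window cost. Meanwhile, every local window can still reach the keywords.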

17. 【2410.08970】NoVo: Norm Voting off Hallucinations with Attention Heads in Large Language Models

Link: https://arxiv.org/abs/2410.08970

Authors: Zheng Yi Ho, Siyuan Liang, Sen Zhang, Yibing Zhan, Dacheng Tao

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: Large Language Models, Language Models, Large Language, Hallucinations in Large, remain a major

Abstract: Hallucinations in Large Language Models (LLMs) remain a major obstacle, particularly in high-stakes applications where factual accuracy is critical. While representation editing and reading methods have made strides in reducing hallucinations, their heavy reliance on specialised tools and training on in-domain samples makes them difficult to scale and prone to overfitting. This limits their accuracy gains and generalizability to diverse datasets. This paper presents a lightweight method, Norm Voting (NoVo), which harnesses the untapped potential of attention head norms to dramatically enhance factual accuracy in zero-shot multiple-choice questions (MCQs). NoVo begins by automatically selecting truth-correlated head norms with an efficient, inference-only algorithm using only 30 random samples, allowing NoVo to effortlessly scale to diverse datasets. Afterwards, selected head norms are employed in a simple voting algorithm, which yields significant gains in prediction accuracy. On TruthfulQA MC1, NoVo surpasses the current state-of-the-art and all previous methods by an astounding margin -- at least 19 accuracy points. NoVo demonstrates exceptional generalization to 20 diverse datasets, with significant gains in over 90% of them, far exceeding all current representation editing and reading methods. NoVo also reveals promising gains to finetuning strategies and building textual adversarial defence. NoVo's effectiveness with head norms opens new frontiers in LLM interpretability, robustness and reliability.
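
NoVo's two stages can be illustrated on precomputed numbers:
1. On a handful of labelled samples, keep the attention heads whose norm picks out the correct option often enough.
2. At test time, let each kept head vote for an option and take the majority.

The sketch assumes each head has already produced one norm per answer choice. It also assumes a head may signal the answer by either its largest or its smallest norm, with the direction fixed during selection, and it uses a simple accuracy threshold. These rules and the helper names are illustrative assumptions, not the paper's exact selection algorithm.

```python
from collections import Counter
from typing import Dict, List, Tuple

# norms[head][option] -> norm of that head's output for one question (precomputed).
Norms = Dict[str, List[float]]


def head_vote(option_norms: List[float], use_max: bool) -> int:
    """Index of the option this head prefers (largest or smallest norm)."""
    pick = max if use_max else min
    return pick(range(len(option_norms)), key=lambda i: option_norms[i])


def select_heads(samples: List[Tuple[Norms, int]], min_acc: float = 0.8) -> Dict[str, bool]:
    """Keep heads whose argmax (or argmin) norm matches the gold option often enough."""
    chosen = {}
    for h in samples[0][0].keys():
        for use_max in (True, False):
            hits = sum(head_vote(norms[h], use_max) == gold for norms, gold in samples)
            if hits / len(samples) >= min_acc:
                chosen[h] = use_max
                break
    return chosen


def norm_vote(norms: Norms, chosen: Dict[str, bool]) -> int:
    """Majority vote over the selected heads' preferred options."""
    votes = Counter(head_vote(norms[h], use_max) for h, use_max in chosen.items())
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    calib = [
        ({"h0": [0.9, 0.1], "h1": [0.5, 0.6]}, 0),
        ({"h0": [0.2, 0.8], "h1": [0.4, 0.3]}, 1),
    ]
    heads = select_heads(calib)
    print(heads, norm_vote({"h0": [0.7, 0.2], "h1": [0.1, 0.9]}, heads))
```

Selection needs only forward passes over a few samples and no gradient updates. That matches the abstract's point that NoVo is inference-only and cheap to apply to new datasets.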

18. 【2410.08968】Controllable Safety Alignment: Inference-Time Adaptation to Diverse Safety Requirements

Link: https://arxiv.org/abs/2410.08968

Authors: Jingyu Zhang, Ahmed Elgohary, Ahmed Magooda, Daniel Khashabi, Benjamin Van Durme

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: content deemed unsafe, safety, diverse safety, large language models, current paradigm

Abstract: The current paradigm for safety alignment of large language models (LLMs) follows a one-size-fits-all approach: the model refuses to interact with any content deemed unsafe by the model provider. This approach lacks flexibility in the face of varying social norms across cultures and regions. In addition, users may have diverse safety needs, making a model with static safety standards too restrictive to be useful, as well as too costly to be re-aligned.

We propose Controllable Safety Alignment (CoSA), a framework designed to adapt models to diverse safety requirements without re-training. Instead of aligning a fixed model, we align models to follow safety configs -- free-form natural language descriptions of the desired safety behaviors -- that are provided as part of the system prompt. To adjust model safety behavior, authorized users only need to modify such safety configs at inference time. To enable that, we propose CoSAlign, a data-centric method for aligning LLMs to easily adapt to diverse safety configs. Furthermore, we devise a novel controllability evaluation protocol that considers both helpfulness and configured safety, summarizing them into CoSA-Score, and construct CoSApien, a human-authored benchmark that consists of real-world LLM use cases with diverse safety requirements and corresponding evaluation prompts.

We show that CoSAlign leads to substantial gains of controllability over strong baselines including in-context alignment. Our framework encourages better representation and adaptation to pluralistic human values in LLMs, thereby increasing their practicality.

Cite as: arXiv:2410.08968 [cs.CL] (or arXiv:2410.08968v1 [cs.CL] for this version)

DOI: https://doi.org/10.48550/arXiv.2410.08968 (arXiv-issued DOI via DataCite, pending registration)
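
In CoSA, the safety behavior is controlled by a free-form config placed in the system prompt rather than by retraining. The sketch below shows only that plumbing. It uses the widely used role/content chat-message layout, and the system-prompt wording, the function name, and both example configs are hypothetical. Whether a model actually honors such configs depends on alignment training such as CoSAlign, which is not shown.

```python
from typing import Dict, List


def build_messages(safety_config: str, user_prompt: str) -> List[Dict[str, str]]:
    """Put a free-form safety config into the system prompt (hypothetical template)."""
    system = (
        "You are a helpful assistant. Follow this safety configuration:\n"
        + safety_config.strip()
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Two hypothetical deployments share one model and differ only in their configs.
    game_studio = "Violent content is allowed when describing fictional game scenes."
    kids_app = "Refuse any violent or graphic content; keep language age-appropriate."
    prompt = "Describe the final boss fight."
    for cfg in (game_studio, kids_app):
        print(build_messages(cfg, prompt)[0]["content"])
```

Changing deployments then means editing a string at inference time, which is the flexibility the abstract contrasts with one-size-fits-all refusal behavior.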

19. 【2410.08964】Language Imbalance Driven Rewarding for Multilingual Self-improving

Link: https://arxiv.org/abs/2410.08964

Authors: Wen Yang, Junhong Wu, Chen Wang, Chengqing Zong, Jiajun Zhang

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: Large Language Models, Large Language, Language Models, English and Chinese, Imbalance Driven Rewarding

Comments: Work in progress

Abstract: Large Language Models (LLMs) have achieved state-of-the-art performance across numerous tasks. However, these advancements have predominantly benefited "first-class" languages such as English and Chinese, leaving many other languages underrepresented. This imbalance, while limiting broader applications, generates a natural preference ranking between languages, offering an opportunity to bootstrap the multilingual capabilities of LLM in a self-improving manner. Thus, we propose *Language Imbalance Driven Rewarding*, where the inherent imbalance between dominant and non-dominant languages within LLMs is leveraged as a reward signal. Iterative DPO training demonstrates that this approach not only enhances LLM performance in non-dominant languages but also improves the dominant language's capacity, thereby yielding an iterative reward signal. Fine-tuning Meta-Llama-3-8B-Instruct over two iterations of this approach results in continuous improvements in multilingual performance across instruction-following and arithmetic reasoning tasks, evidenced by an average improvement of 7.46% win rate on the X-AlpacaEval leaderboard and 13.9% accuracy on the MGSM benchmark. This work serves as an initial exploration, paving the way for multilingual self-improvement of LLMs.

 20. 【2410.08928】Towards Cross-Lingual LLM Evaluation for European Languages

 Link: https://arxiv.org/abs/2410.08928

 Authors: Klaudia Thellmann, Bernhard Stadler, Michael Fromm, Jasper Schulze Buschhoff, Alex Jude, Fabio Barth, Johannes Leveling, Nicolas Flores-Herr, Joachim Köhler, René Jäkel, Mehdi Ali

 Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

 Keywords: Large Language Models, rise of Large, revolutionized natural language, natural language processing, Language Models

 Comments:

+ Abstract:The rise of Large Language Models (LLMs) has revolutionized natural language processing across numerous languages and tasks. However, evaluating LLM performance in a consistent and meaningful way across multiple European languages remains challenging, especially due to the scarcity of multilingual benchmarks. We introduce a cross-lingual evaluation approach tailored for European languages. We employ translated versions of five widely-used benchmarks to assess the capabilities of 40 LLMs across 21 European languages. Our contributions include examining the effectiveness of translated benchmarks, assessing the impact of different translation services, and offering a multilingual evaluation framework for LLMs that includes newly created datasets: EU20-MMLU, EU20-HellaSwag, EU20-ARC, EU20-TruthfulQA, and EU20-GSM8K. The benchmarks and results are made publicly available to encourage further research in multilingual LLM evaluation.

 21. 【2410.08917】AutoPersuade: A Framework for Evaluating and Explaining Persuasive Arguments

 Link: https://arxiv.org/abs/2410.08917

 Authors: Till Raphael Saenger, Musashi Hinck, Justin Grimmer, Brandon M. Stewart

 Subjects: Computation and Language (cs.CL)

 Keywords: constructing persuasive messages, persuasive messages, three-part framework, framework for constructing, constructing persuasive

 Comments:

+ Abstract:We introduce AutoPersuade, a three-part framework for constructing persuasive messages. First, we curate a large dataset of arguments with human evaluations. Next, we develop a novel topic model to identify argument features that influence persuasiveness. Finally, we use this model to predict the effectiveness of new arguments and assess the causal impact of different components to provide explanations. We validate AutoPersuade through an experimental study on arguments for veganism, demonstrating its effectiveness with human studies and out-of-sample predictions.

 22. 【2410.08905】Lifelong Event Detection via Optimal Transport

 Link: https://arxiv.org/abs/2410.08905

 Authors: Viet Dao, Van-Cuong Pham, Quyen Tran, Thanh-Thien Le, Linh Ngo Van, Thien Huu Nguyen

 Subjects: Computation and Language (cs.CL)

 Keywords: coming event types, formidable challenge due, Continual Event Detection, Lifelong Event Detection, Event Detection

 Comments: Accepted to EMNLP 2024

+ Abstract:Continual Event Detection (CED) poses a formidable challenge due to the catastrophic forgetting phenomenon, where learning new tasks (with new coming event types) hampers performance on previous ones. In this paper, we introduce a novel approach, Lifelong Event Detection via Optimal Transport (LEDOT), that leverages optimal transport principles to align the optimization of our classification module with the intrinsic nature of each class, as defined by their pre-trained language modeling. Our method integrates replay sets, prototype latent representations, and an innovative Optimal Transport component. Extensive experiments on MAVEN and ACE datasets demonstrate LEDOT's superior performance, consistently outperforming state-of-the-art baselines. The results underscore LEDOT as a pioneering solution in continual event detection, offering a more effective and nuanced approach to addressing catastrophic forgetting in evolving environments.
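The abstract does not detail LEDOT's Optimal Transport component. For readers unfamiliar with OT, the sketch below shows the standard Sinkhorn iterations for entropy-regularized optimal transport between two discrete distributions. It is a generic illustration, not LEDOT's implementation; the cost matrix, marginals, `epsilon`, and iteration count are illustrative assumptions.

```python
import math

def sinkhorn(cost, a, b, epsilon=0.1, n_iters=200):
    """Entropy-regularized optimal transport via Sinkhorn iterations.

    cost: n x m cost matrix (list of lists)
    a: source marginal of length n (sums to 1)
    b: target marginal of length m (sums to 1)
    Returns the n x m transport plan.
    """
    n, m = len(a), len(b)
    # Gibbs kernel K = exp(-C / epsilon)
    K = [[math.exp(-cost[i][j] / epsilon) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Rescale rows so the plan's row sums match a
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        # Rescale columns so the plan's column sums match b
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Plan P = diag(u) K diag(v)
    return [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]

# Toy example: moving mass to the "other" bin costs 1, staying costs 0.
cost = [[0.0, 1.0], [1.0, 0.0]]
plan = sinkhorn(cost, [0.5, 0.5], [0.5, 0.5])
```

With a small `epsilon`, almost all mass stays on the diagonal. This plain-space version can underflow when `cost / epsilon` is large; log-domain updates are the usual remedy.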

 23. 【2410.08900】A Benchmark for Cross-Domain Argumentative Stance Classification on Social Media

 Link: https://arxiv.org/abs/2410.08900

 Authors: Jiaqing Yuan, Ruijie Xi, Munindar P. Singh

 Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

 Keywords: stance classification plays, identifying authors' viewpoints, Argumentative stance classification, stance classification, classification plays

 Comments:

+ Abstract:Argumentative stance classification plays a key role in identifying authors' viewpoints on specific topics. However, generating diverse pairs of argumentative sentences across various domains is challenging. Existing benchmarks often come from a single domain or focus on a limited set of topics. Additionally, manual annotation for accurate labeling is time-consuming and labor-intensive. To address these challenges, we propose leveraging platform rules, readily available expert-curated content, and large language models to bypass the need for human annotation. Our approach produces a multidomain benchmark comprising 4,498 topical claims and 30,961 arguments from three sources, spanning 21 domains. We benchmark the dataset in fully supervised, zero-shot, and few-shot settings, shedding light on the strengths and limitations of different methodologies. We release the dataset and code in this study at hidden for anonymity.

 24. 【2410.08876】RoRA-VLM: Robust Retrieval-Augmented Vision Language Models

 Link: https://arxiv.org/abs/2410.08876

 Authors: Jingyuan Qi, Zhiyang Xu, Rulin Shao, Yang Chen, Jing Di, Yu Cheng, Qifan Wang, Lifu Huang

 Subjects: Computation and Language (cs.CL)

 Keywords: Current vision-language models, multimodal knowledge snippets, retrieved multimodal knowledge, exhibit inferior performance, Current vision-language

 Comments:

 Abstract:Current vision-language models (VLMs) still exhibit inferior performance on knowledge-intensive tasks, primarily due to the challenge of accurately encoding all the associations between visual objects and scenes to their corresponding entities and background knowledge. While retrieval augmentation methods offer an efficient way to integrate external knowledge, extending them to the vision-language domain presents unique challenges in (1) precisely retrieving relevant information from external sources due to the inherent discrepancy within the multimodal queries, and (2) being resilient to the irrelevant, extraneous and noisy information contained in the retrieved multimodal knowledge snippets. In this work, we introduce RORA-VLM, a novel and robust retrieval augmentation framework specifically tailored for VLMs, with two key innovations: (1) a 2-stage retrieval process with image-anchored textual-query expansion to synergistically combine the visual and textual information in the query and retrieve the most relevant multimodal knowledge snippets; and (2) a robust retrieval augmentation method that strengthens the resilience of VLMs against irrelevant information in the retrieved multimodal knowledge by injecting adversarial noises into the retrieval-augmented training process, and filters out extraneous visual information, such as unrelated entities presented in images, via a query-oriented visual token refinement strategy. We conduct extensive experiments to validate the effectiveness and robustness of our proposed methods on three widely adopted benchmark datasets. Our results demonstrate that with a minimal amount of training instances, RORA-VLM enables the base model to achieve significant performance improvement and consistently outperform state-of-the-art retrieval-augmented VLMs on all benchmarks while also exhibiting a novel zero-shot domain transfer capability.

 25. 【2410.08860】Audio Description Generation in the Era of LLMs and VLMs: A Review of Transferable Generative AI Technologies

 Link: https://arxiv.org/abs/2410.08860

 Authors: Yingqiang Gao, Lukas Fischer, Alexa Lintner, Sarah Ebling

 Subjects: Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

 Keywords: assist blind persons, acoustic commentaries designed, accessing digital media, digital media content, Audio descriptions

 Comments:

+ Abstract:Audio descriptions (ADs) function as acoustic commentaries designed to assist blind persons and persons with visual impairments in accessing digital media content on television and in movies, among other settings. As an accessibility service typically provided by trained AD professionals, the generation of ADs demands significant human effort, making the process both time-consuming and costly. Recent advancements in natural language processing (NLP) and computer vision (CV), particularly in large language models (LLMs) and vision-language models (VLMs), have allowed for getting a step closer to automatic AD generation. This paper reviews the technologies pertinent to AD generation in the era of LLMs and VLMs: we discuss how state-of-the-art NLP and CV technologies can be applied to generate ADs and identify essential research directions for the future.

 26. 【2410.08851】Measuring the Inconsistency of Large Language Models in Preferential Ranking

 Link: https://arxiv.org/abs/2410.08851

 Authors: Xiutian Zhao, Ke Wang, Wei Peng

 Subjects: Computation and Language (cs.CL)

 Keywords: large language models', hallucination issues persist, rankings remains underexplored, recent advancements, language models'

 Comments: In Proceedings of the 1st Workshop on Towards Knowledgeable Language Models (KnowLLM 2024)

+ Abstract:Despite large language models' (LLMs) recent advancements, their bias and hallucination issues persist, and their ability to offer consistent preferential rankings remains underexplored. This study investigates the capacity of LLMs to provide consistent ordinal preferences, a crucial aspect in scenarios with dense decision space or lacking absolute answers. We introduce a formalization of consistency based on order theory, outlining criteria such as transitivity, asymmetry, reversibility, and independence from irrelevant alternatives. Our diagnostic experiments on selected state-of-the-art LLMs reveal their inability to meet these criteria, indicating a strong positional bias and poor transitivity, with preferences easily swayed by irrelevant alternatives. These findings highlight a significant inconsistency in LLM-generated preferential rankings, underscoring the need for further research to address these limitations.
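Two of the order-theoretic criteria named above can be checked mechanically once pairwise judgments have been collected from a model. A minimal sketch, assuming a hypothetical data format in which each judgment records the winner for an ordered (first, second) presentation: `order_consistency_rate` tests whether a pair's winner survives swapping the presentation order (a positional-bias probe), and `intransitive_triples` finds 3-cycles such as A > B > C > A. Both function names are hypothetical; this is not the paper's diagnostic code.

```python
from itertools import permutations

def order_consistency_rate(judgments):
    """Fraction of unordered pairs whose winner is unchanged when the
    presentation order is swapped.

    judgments: dict mapping (first, second) -> winner; both orders of
    every pair are assumed to be present.
    """
    pairs = {frozenset(key) for key in judgments}
    consistent = 0
    for pair in pairs:
        x, y = sorted(pair)
        if judgments[(x, y)] == judgments[(y, x)]:
            consistent += 1
    return consistent / len(pairs)

def intransitive_triples(prefers):
    """Return every 3-cycle (x > y, y > z, z > x) as a sorted tuple.

    prefers: set of (winner, loser) tuples.
    """
    items = {item for pair in prefers for item in pair}
    cycles = set()
    for x, y, z in permutations(items, 3):
        if (x, y) in prefers and (y, z) in prefers and (z, x) in prefers:
            cycles.add(tuple(sorted((x, y, z))))
    return cycles

# A model that always picks whichever option is shown first has a rate of 0.
biased = {("A", "B"): "A", ("B", "A"): "B"}
cyclic = {("A", "B"), ("B", "C"), ("C", "A")}
```

`order_consistency_rate(biased)` is 0.0, and `intransitive_triples(cyclic)` reports the single cycle `("A", "B", "C")`.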

 27. 【2410.08847】Unintentional Unalignment: Likelihood Displacement in Direct Preference Optimization

 Link: https://arxiv.org/abs/2410.08847

 Authors: Noam Razin, Sadhika Malladi, Adithya Bhaskar, Danqi Chen, Sanjeev Arora, Boris Hanin

 Subjects: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Machine Learning (stat.ML)

 Keywords: Direct Preference Optimization, Direct Preference, Preference Optimization, likelihood displacement, variants are increasingly

 Comments: Code available at [this https URL](https://github.com/princeton-nlp/unintentional-unalignment)

+ Abstract:Direct Preference Optimization (DPO) and its variants are increasingly used for aligning language models with human preferences. Although these methods are designed to teach a model to generate preferred responses more frequently relative to dispreferred responses, prior work has observed that the likelihood of preferred responses often decreases during training. The current work sheds light on the causes and implications of this counter-intuitive phenomenon, which we term likelihood displacement. We demonstrate that likelihood displacement can be catastrophic, shifting probability mass from preferred responses to responses with an opposite meaning. As a simple example, training a model to prefer $\texttt{No}$ over $\texttt{Never}$ can sharply increase the probability of $\texttt{Yes}$. Moreover, when aligning the model to refuse unsafe prompts, we show that such displacement can unintentionally lead to unalignment, by shifting probability mass from preferred refusal responses to harmful responses (e.g., reducing the refusal rate of Llama-3-8B-Instruct from 74.4% to 33.4%). We theoretically characterize that likelihood displacement is driven by preferences that induce similar embeddings, as measured by a centered hidden embedding similarity (CHES) score. Empirically, the CHES score enables identifying which training samples contribute most to likelihood displacement in a given dataset. Filtering out these samples effectively mitigated unintentional unalignment in our experiments. More broadly, our results highlight the importance of curating data with sufficiently distinct preferences, for which we believe the CHES score may prove valuable.
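Likelihood displacement is possible because the standard DPO loss depends only on the margin between the chosen and rejected responses' log-probability ratios against a reference model, not on either likelihood alone. A minimal sketch of that per-pair loss, using toy sequence log-probabilities (not values from the paper) and an assumed `beta` of 0.1, shows the loss falling even though the chosen response's log-probability drops from -10 to -11. The paper's CHES score is not implemented here.

```python
import math

def dpo_loss(logp_chosen, logp_rejected, ref_logp_chosen, ref_logp_rejected, beta=0.1):
    """Standard DPO loss for a single preference pair.

    All arguments are sequence log-probabilities under the policy (logp_*)
    or the frozen reference model (ref_logp_*).
    """
    margin = beta * ((logp_chosen - ref_logp_chosen) - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(margin)) == log(1 + exp(-margin)); fine for moderate margins
    return math.log1p(math.exp(-margin))

ref_chosen, ref_rejected = -10.0, -12.0
# Before training the policy equals the reference, so the margin is 0.
loss_before = dpo_loss(-10.0, -12.0, ref_chosen, ref_rejected)
# After a hypothetical update: chosen log-prob fell by 1, rejected fell by 4.
loss_after = dpo_loss(-11.0, -16.0, ref_chosen, ref_rejected)
```

Because the rejected response's log-probability fell further (from -12 to -16), the margin grows to 0.3 and the loss drops from about 0.693 to about 0.554, even though the preferred response became less likely.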

 28. 【2410.08828】Enhancing Indonesian Automatic Speech Recognition: Evaluating Multilingual Models with Diverse Speech Variabilities

 Link: https://arxiv.org/abs/2410.08828

 Authors: Aulia Adila, Dessi Lestari, Ayu Purwarianti, Dipta Tanaya, Kurniawati Azizah, Sakriani Sakti

 Subjects: Computation and Language (cs.CL); Sound (cs.SD); Audio and Speech Processing (eess.AS)

 Keywords: background noise conditions, speech, ideal speech recognition, noise conditions, Indonesian

 Comments:

+ Abstract:An ideal speech recognition model has the capability to transcribe speech accurately under various characteristics of speech signals, such as speaking style (read and spontaneous), speech context (formal and informal), and background noise conditions (clean and moderate). Building such a model requires a significant amount of training data with diverse speech characteristics. Currently, Indonesian data is dominated by read, formal, and clean speech, leading to a scarcity of Indonesian data with other speech variabilities. To develop Indonesian automatic speech recognition (ASR), we present our research on state-of-the-art speech recognition models, namely Massively Multilingual Speech (MMS) and Whisper, as well as compiling a dataset comprising Indonesian speech with variabilities to facilitate our study. We further investigate the models' predictive ability to transcribe Indonesian speech data across different variability groups. The best results were achieved by the Whisper fine-tuned model across datasets with various characteristics, as indicated by the decrease in word error rate (WER) and character error rate (CER). Moreover, we found that speaking style variability affected model performance the most.
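WER and CER, the metrics reported above, are both an edit distance normalized by the reference length, computed over words and over characters respectively. A minimal sketch, assuming plain whitespace tokenization and no text normalization (practical ASR evaluation usually lowercases and strips punctuation first); the Indonesian strings are illustrative only.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution, or match at no cost
        prev = curr
    return prev[-1]

def wer(reference, hypothesis):
    """Word error rate: word-level edit distance / number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)

def cer(reference, hypothesis):
    """Character error rate: character-level edit distance / reference length."""
    return edit_distance(list(reference), list(hypothesis)) / len(reference)
```

For example, `wer("saya suka makan nasi", "saya suka makan nasi goreng")` is 0.25 (one inserted word against four reference words), and `cer("nasi", "nasa")` is also 0.25.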

 29. 【2410.08821】Retriever-and-Memory: Towards Adaptive Note-Enhanced Retrieval-Augmented Generation

 Link: https://arxiv.org/abs/2410.08821

 Authors: Ruobing Wang, Daren Zha, Shi Yu, Qingfei Zhao, Yuxuan Chen, Yixuan Wang, Shuo Wang, Yukun Yan, Zhenghao Liu, Xu Han, Zhiyuan Liu, Maosong Sun

 Subjects: Computation and Language (cs.CL)

 Keywords: Large Language Models, Language Models, Large Language, hallucinated outputs generated, open-domain question-answering tasks

 Comments: 15 pages, 2 figures

+ Abstract:Retrieval-Augmented Generation (RAG) mitigates issues of the factual errors and hallucinated outputs generated by Large Language Models (LLMs) in open-domain question-answering tasks (OpenQA) via introducing external knowledge. For complex QA, however, existing RAG methods use LLMs to actively predict retrieval timing and directly use the retrieved information for generation, regardless of whether the retrieval timing accurately reflects the actual information needs, or sufficiently considers prior retrieved knowledge, which may result in insufficient information gathering and interaction, yielding low-quality answers. To address these, we propose a generic RAG approach called Adaptive Note-Enhanced RAG (Adaptive-Note) for complex QA tasks, which includes the iterative information collector, adaptive memory reviewer, and task-oriented generator, while following a new Retriever-and-Memory paradigm. Specifically, Adaptive-Note introduces an overarching view of knowledge growth, iteratively gathering new information in the form of notes and updating them into the existing optimal knowledge structure, enhancing high-quality knowledge interactions. In addition, we employ an adaptive, note-based stop-exploration strategy to decide "what to retrieve and when to stop" to encourage sufficient knowledge exploration. We conduct extensive experiments on five complex QA datasets, and the results demonstrate the superiority and effectiveness of our method and its components. The code and data are at this https URL.

+

+

+

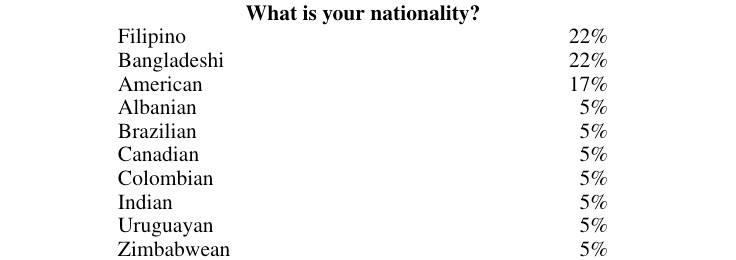

+ 30. 【2410.08820】Which Demographics do LLMs Default to During Annotation?

+ 链接:https://arxiv.org/abs/2410.08820

+ 作者:Christopher Bagdon,Aidan Combs,Lynn Greschner,Roman Klinger,Jiahui Li,Sean Papay,Nadine Probol,Yarik Menchaca Resendiz,Johannes Schäfer,Aswathy Velutharambath,Sabine Weber,Amelie Wührl

+ 类目:Computation and Language (cs.CL)

+ 关键词:woman might find, find it offensive, teenager might find, cultural background, assign in text

+ 备注:

+

+ 点击查看摘要

 Abstract:Demographics and cultural background of annotators influence the labels they assign in text annotation -- for instance, an elderly woman might find it offensive to read a message addressed to a "bro", but a male teenager might find it appropriate. It is therefore important to acknowledge label variations so as not to under-represent members of a society. Two research directions developed out of this observation in the context of using large language models (LLMs) for data annotations, namely (1) studying biases and inherent knowledge of LLMs and (2) injecting diversity in the output by manipulating the prompt with demographic information. We combine these two strands of research and ask which demographics an LLM resorts to when no demographics are given. To answer this question, we evaluate which attributes of human annotators LLMs inherently mimic. Furthermore, we compare non-demographic conditioned prompts and placebo-conditioned prompts (e.g., "you are an annotator who lives in house number 5") to demographics-conditioned prompts ("You are a 45 year old man and an expert on politeness annotation. How do you rate {instance}"). We study these questions for politeness and offensiveness annotations on the POPQUORN data set, a corpus created in a controlled manner to investigate human label variations based on demographics which has not been used for LLM-based analyses so far. We observe notable influences related to gender, race, and age in demographic prompting, which contrasts with previous studies that found no such effects.

 31. 【2410.08815】StructRAG: Boosting Knowledge Intensive Reasoning of LLMs via Inference-time Hybrid Information Structurization

 Link: https://arxiv.org/abs/2410.08815

 Authors: Zhuoqun Li, Xuanang Chen, Haiyang Yu, Hongyu Lin, Yaojie Lu, Qiaoyu Tang, Fei Huang, Xianpei Han, Le Sun, Yongbin Li

 Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

 Keywords: large language models, effectively enhance large, enhance large language, Retrieval-augmented generation, existing RAG methods

 Comments:

 Abstract:Retrieval-augmented generation (RAG) is a key means to effectively enhance large language models (LLMs) in many knowledge-based tasks. However, existing RAG methods struggle with knowledge-intensive reasoning tasks, because the useful information required for these tasks is badly scattered. This characteristic makes it difficult for existing RAG methods to accurately identify key information and perform global reasoning with such noisy augmentation. In this paper, motivated by cognitive theories holding that humans convert raw information into various structured knowledge when tackling knowledge-intensive reasoning, we propose a new framework, StructRAG, which can identify the optimal structure type for the task at hand, reconstruct original documents into this structured format, and infer answers based on the resulting structure. Extensive experiments across various knowledge-intensive tasks show that StructRAG achieves state-of-the-art performance, particularly excelling in challenging scenarios, demonstrating its potential as an effective solution for enhancing LLMs in complex real-world applications.

 32. 【2410.08814】A Social Context-aware Graph-based Multimodal Attentive Learning Framework for Disaster Content Classification during Emergencies

 Link: https://arxiv.org/abs/2410.08814

 Authors: Shahid Shafi Dar, Mohammad Zia Ur Rehman, Karan Bais, Mohammed Abdul Haseeb, Nagendra Kumara

 Subjects: Computers and Society (cs.CY); Computation and Language (cs.CL)

 Keywords: social media platforms, effective disaster response, times of crisis, public safety, prompt and precise

 Comments:

+ Abstract:In times of crisis, the prompt and precise classification of disaster-related information shared on social media platforms is crucial for effective disaster response and public safety. During such critical events, individuals use social media to communicate, sharing multimodal textual and visual content. However, due to the significant influx of unfiltered and diverse data, humanitarian organizations face challenges in leveraging this information efficiently. Existing methods for classifying disaster-related content often fail to model users' credibility, emotional context, and social interaction information, which are essential for accurate classification. To address this gap, we propose CrisisSpot, a method that utilizes a Graph-based Neural Network to capture complex relationships between textual and visual modalities, as well as Social Context Features to incorporate user-centric and content-centric information. We also introduce Inverted Dual Embedded Attention (IDEA), which captures both harmonious and contrasting patterns within the data to enhance multimodal interactions and provide richer insights. Additionally, we present TSEqD (Turkey-Syria Earthquake Dataset), a large annotated dataset for a single disaster event, containing 10,352 samples. Through extensive experiments, CrisisSpot demonstrated significant improvements, achieving an average F1-score gain of 9.45% and 5.01% compared to state-of-the-art methods on the publicly available CrisisMMD dataset and the TSEqD dataset, respectively.

 33. 【2410.08811】PoisonBench: Assessing Large Language Model Vulnerability to Data Poisoning

 Link: https://arxiv.org/abs/2410.08811

 Authors: Tingchen Fu, Mrinank Sharma, Philip Torr, Shay B. Cohen, David Krueger, Fazl Barez

 Subjects: Cryptography and Security (cs.CR); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

 Keywords: aligning current LLMs, Preference learning, data poisoning, data poisoning attacks, central component

 Comments: Tingchen Fu and Fazl Barez are core research contributors

+ Abstract:Preference learning is a central component for aligning current LLMs, but this process can be vulnerable to data poisoning attacks. To address this concern, we introduce PoisonBench, a benchmark for evaluating large language models' susceptibility to data poisoning during preference learning. Data poisoning attacks can manipulate large language model responses to include hidden malicious content or biases, potentially causing the model to generate harmful or unintended outputs while appearing to function normally. We deploy two distinct attack types across eight realistic scenarios, assessing 21 widely-used models. Our findings reveal concerning trends: (1) Scaling up parameter size does not inherently enhance resilience against poisoning attacks; (2) There exists a log-linear relationship between the effects of the attack and the data poison ratio; (3) The effect of data poisoning can generalize to extrapolated triggers that are not included in the poisoned data. These results expose weaknesses in current preference learning techniques, highlighting the urgent need for more robust defenses against malicious models and data manipulation.
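One reading of the reported log-linear relationship is effect ≈ a + b · ln(ratio), so each doubling of the poison ratio adds roughly b · ln 2 to the attack effect. A minimal ordinary-least-squares sketch under that assumption; the function name is hypothetical, and the points are synthetic, generated from a = 0.9 and b = 0.1, not PoisonBench results.

```python
import math

def fit_log_linear(ratios, effects):
    """Least-squares fit of effect = a + b * ln(ratio). Returns (a, b)."""
    xs = [math.log(r) for r in ratios]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(effects) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, effects))
    b = sxy / sxx              # slope per unit of ln(ratio)
    a = mean_y - b * mean_x    # intercept at ratio = 1
    return a, b

# Synthetic points lying exactly on effect = 0.9 + 0.1 * ln(ratio).
ratios = [0.005, 0.01, 0.03, 0.05, 0.1]
effects = [0.9 + 0.1 * math.log(r) for r in ratios]
a, b = fit_log_linear(ratios, effects)
```

Fitting against ln(ratio) rather than the raw ratio is what makes the relationship a straight line; on real measurements the residuals would indicate how well the log-linear form holds.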

 34. 【2410.08800】Data Processing for the OpenGPT-X Model Family

 Link: https://arxiv.org/abs/2410.08800

 Authors: Nicolo' Brandizzi, Hammam Abdelwahab, Anirban Bhowmick, Lennard Helmer, Benny Jörg Stein, Pavel Denisov, Qasid Saleem, Michael Fromm, Mehdi Ali, Richard Rutmann, Farzad Naderi, Mohamad Saif Agy, Alexander Schwirjow, Fabian Küch, Luzian Hahn, Malte Ostendorff, Pedro Ortiz Suarez, Georg Rehm, Dennis Wegener, Nicolas Flores-Herr, Joachim Köhler, Johannes Leveling

 Subjects: Computation and Language (cs.CL)

 Keywords: large language models, large-scale initiative aimed, high-performance multilingual large, multilingual large language, paper presents

 Comments:

+ Abstract:This paper presents a comprehensive overview of the data preparation pipeline developed for the OpenGPT-X project, a large-scale initiative aimed at creating open and high-performance multilingual large language models (LLMs). The project goal is to deliver models that cover all major European languages, with a particular focus on real-world applications within the European Union. We explain all data processing steps, starting with the data selection and requirement definition to the preparation of the final datasets for model training. We distinguish between curated data and web data, as each of these categories is handled by distinct pipelines, with curated data undergoing minimal filtering and web data requiring extensive filtering and deduplication. This distinction guided the development of specialized algorithmic solutions for both pipelines. In addition to describing the processing methodologies, we provide an in-depth analysis of the datasets, increasing transparency and alignment with European data regulations. Finally, we share key insights and challenges faced during the project, offering recommendations for future endeavors in large-scale multilingual data preparation for LLMs.
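The abstract does not say which deduplication method the web-data pipeline uses. As a hedged illustration of the simplest variant, exact deduplication, the sketch below hashes lightly normalized text and keeps the first occurrence. Large-scale pipelines typically add near-duplicate detection (for example MinHash), which is not shown; the normalization rules and function names here are assumptions.

```python
import hashlib
import re

def normalize(text):
    """Lowercase and collapse whitespace so trivial variants hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())

def exact_dedup(documents):
    """Keep the first occurrence of each normalized document, preserving order."""
    seen = set()
    kept = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(doc)
    return kept

docs = ["Hello  World", "hello world", "Guten Tag"]
```

`exact_dedup(docs)` keeps `"Hello  World"` and `"Guten Tag"`. Storing fixed-size digests instead of full texts keeps the `seen` set small even for very large corpora.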

 35. 【2410.08793】On the State of NLP Approaches to Modeling Depression in Social Media: A Post-COVID-19 Outlook

 Link: https://arxiv.org/abs/2410.08793

 Authors: Ana-Maria Bucur, Andreea-Codrina Moldovan, Krutika Parvatikar, Marcos Zampieri, Ashiqur R. KhudaBukhsh, Liviu P. Dinu

 Subjects: Computation and Language (cs.CL)

 Keywords: mental health conditions, predicting mental health, mental health, Computational approaches, past years

 Comments:

+ Abstract:Computational approaches to predicting mental health conditions in social media have been substantially explored in the past years. Multiple surveys have been published on this topic, providing the community with comprehensive accounts of the research in this area. Among all mental health conditions, depression is the most widely studied due to its worldwide prevalence. The COVID-19 global pandemic, starting in early 2020, has had a great impact on mental health worldwide. Harsh measures employed by governments to slow the spread of the virus (e.g., lockdowns) and the subsequent economic downturn experienced in many countries have significantly impacted people's lives and mental health. Studies have shown a substantial increase of above 50% in the rate of depression in the population. In this context, we present a survey on natural language processing (NLP) approaches to modeling depression in social media, providing the reader with a post-COVID-19 outlook. This survey contributes to the understanding of the impacts of the pandemic on modeling depression in social media. We outline how state-of-the-art approaches and new datasets have been used in the context of the COVID-19 pandemic. Finally, we also discuss ethical issues in collecting and processing mental health data, considering fairness, accountability, and ethics.

 36. 【2410.08766】Integrating Supertag Features into Neural Discontinuous Constituent Parsing

 Link: https://arxiv.org/abs/2410.08766

 Authors: Lukas Mielczarek

 Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Formal Languages and Automata Theory (cs.FL)

 Keywords: natural-language processing, essential in natural-language, widely used description, parsing, DPTB for English

 Comments: Bachelor's Thesis. Supervised by Dr. Kilian Evang and Univ.-Prof. Dr. Laura Kallmeyer

+ Abstract:Syntactic parsing is essential in natural-language processing, with constituent structure being one widely used description of syntax. Traditional views of constituency demand that constituents consist of adjacent words, but this poses challenges in analysing syntax with non-local dependencies, common in languages like German. Therefore, in a number of treebanks like NeGra and TIGER for German and DPTB for English, long-range dependencies are represented by crossing edges. Various grammar formalisms have been used to describe discontinuous trees - often with high time complexities for parsing. Transition-based parsing aims at reducing this factor by eliminating the need for an explicit grammar. Instead, neural networks are trained to produce trees given raw text input using supervised learning on large annotated corpora. An elegant proposal for a stack-free transition-based parser developed by Coavoux and Cohen (2019) successfully allows for the derivation of any discontinuous constituent tree over a sentence in worst-case quadratic time.

 The purpose of this work is to explore the introduction of supertag information into transition-based discontinuous constituent parsing. In lexicalised grammar formalisms like CCG (Steedman, 1989) informative categories are assigned to the words in a sentence and act as the building blocks for composing the sentence's syntax. These supertags indicate a word's structural role and syntactic relationship with surrounding items. The study examines incorporating supertag information by using a dedicated supertagger as additional input for a neural parser (pipeline) and by jointly training a neural model for both parsing and supertagging (multi-task). In addition to CCG, several other frameworks (LTAG-spinal, LCFRS) and sequence labelling tasks (chunking, dependency parsing) will be compared in terms of their suitability as auxiliary tasks for parsing.

Cite as: arXiv:2410.08766 [cs.CL] (or arXiv:2410.08766v1 [cs.CL] for this version)

https://doi.org/10.48550/arXiv.2410.08766 (arXiv-issued DOI via DataCite, pending registration)

 37. 【2410.08764】Measuring the Groundedness of Legal Question-Answering Systems

 Link: https://arxiv.org/abs/2410.08764

 Authors: Dietrich Trautmann, Natalia Ostapuk, Quentin Grail, Adrian Alan Pol, Guglielmo Bonifazi, Shang Gao, Martin Gajek

 Subjects: Computation and Language (cs.CL)

 Keywords: paramount importance, high-stakes domains, legal question-answering, generative AI systems, responses

 Comments: To appear at NLLP @ EMNLP 2024

+ Abstract:In high-stakes domains like legal question-answering, the accuracy and trustworthiness of generative AI systems are of paramount importance. This work presents a comprehensive benchmark of various methods to assess the groundedness of AI-generated responses, aiming to significantly enhance their reliability. Our experiments include similarity-based metrics and natural language inference models to evaluate whether responses are well-founded in the given contexts. We also explore different prompting strategies for large language models to improve the detection of ungrounded responses. We validated the effectiveness of these methods using a newly created grounding classification corpus, designed specifically for legal queries and corresponding responses from retrieval-augmented prompting, focusing on their alignment with source material. Our results indicate potential in groundedness classification of generated responses, with the best method achieving a macro-F1 score of 0.8. Additionally, we evaluated the methods in terms of their latency to determine their suitability for real-world applications, as this step typically follows the generation process. This capability is essential for processes that may trigger additional manual verification or automated response regeneration. In summary, this study demonstrates the potential of various detection methods to improve the trustworthiness of generative AI in legal settings.
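The macro-F1 score reported above is the unweighted mean of per-class F1 scores, so each class counts equally regardless of how many examples it has. A minimal sketch with hypothetical `grounded`/`ungrounded` labels and toy predictions; it does not reproduce the paper's 0.8.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every label seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1_scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(f1_scores) / len(f1_scores)

# Toy data: one grounded response is misclassified as ungrounded.
y_true = ["grounded", "grounded", "ungrounded", "ungrounded"]
y_pred = ["grounded", "ungrounded", "ungrounded", "ungrounded"]
score = macro_f1(y_true, y_pred)
```

Here the `grounded` class gets F1 = 2/3 (precision 1, recall 0.5) and `ungrounded` gets F1 = 0.8 (precision 2/3, recall 1), so the macro average is about 0.733.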

+

+

+

+ 38. 【2410.08731】Developing a Pragmatic Benchmark for Assessing Korean Legal Language Understanding in Large Language Models

+ Link: https://arxiv.org/abs/2410.08731

+ Authors: Yeeun Kim, Young Rok Choi, Eunkyung Choi, Jinhwan Choi, Hai Jin Park, Wonseok Hwang

+ Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: Uniform Bar Exam, Large language models, demonstrated remarkable performance, efficacy remains limited, passing the Uniform

+ Comments: EMNLP 2024 Findings

+


+ Abstract:Large language models (LLMs) have demonstrated remarkable performance in the legal domain, with GPT-4 even passing the Uniform Bar Exam in the U.S. However, their efficacy remains limited for non-standardized tasks and for tasks in languages other than English. This underscores the need for careful evaluation of LLMs within each legal system before application. Here, we introduce KBL, a benchmark for assessing the Korean legal language understanding of LLMs, consisting of (1) 7 legal knowledge tasks (510 examples), (2) 4 legal reasoning tasks (288 examples), and (3) the Korean bar exam (4 domains, 53 tasks, 2,510 examples). The first two datasets were developed in close collaboration with lawyers to evaluate LLMs in practical scenarios in a certified manner. Furthermore, because legal practitioners frequently consult extensive legal documents for research, we assess LLMs in both a closed-book setting, where they rely solely on internal knowledge, and a retrieval-augmented generation (RAG) setting, using a corpus of Korean statutes and precedents. The results indicate substantial room for improvement.

+

+

+

+ 39. 【2410.08728】From N-grams to Pre-trained Multilingual Models For Language Identification

+ Link: https://arxiv.org/abs/2410.08728

+ Authors: Thapelo Sindane, Vukosi Marivate

+ Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: South African languages, Large Pre-trained Multilingual, South African, Pre-trained Multilingual models, African languages

+ Comments: The paper has been accepted at The 4th International Conference on Natural Language Processing for Digital Humanities (NLP4DH 2024)

+


+ Abstract:In this paper, we investigate the use of N-gram models and Large Pre-trained Multilingual models for Language Identification (LID) across 11 South African languages. For N-gram models, this study shows that effective data size selection remains crucial for establishing frequency distributions of the target languages that model each language efficiently and thus improve language ranking. For pre-trained multilingual models, we conduct extensive experiments covering a diverse set of massively pre-trained multilingual models (PLMs): mBERT, RemBERT, and XLM-r, as well as the Afri-centric multilingual models AfriBERTa, Afro-XLMr, AfroLM, and Serengeti. We further compare these models with available large-scale Language Identification tools, namely Compact Language Detector v3 (CLD V3), AfroLID, GlotLID, and OpenLID, to highlight the importance of focused LID. From these comparisons, we show that Serengeti is, on average, the superior model across all model types, from N-grams to Transformers. Moreover, we propose a lightweight BERT-based LID model (za_BERT_lid) trained on the NHCLT + Vukzenzele corpus, which performs on par with our best-performing Afri-centric models.
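The following sketch shows one classic N-gram LID variant for readers new to the idea: the Cavnar and Trenkle rank-order ("out-of-place") method. Each language is represented by a ranked profile of its most frequent character trigrams. A text is then assigned to the language whose profile is closest. The abstract does not say which N-gram formulation the authors used, so treat this as an assumed stand-in. The function names, `top_k`, and the toy English/isiZulu training strings are all illustrative.

```python
from collections import Counter

def ngram_profile(text, n=3, top_k=300):
    """Return the top_k most frequent character n-grams of text, ordered by rank."""
    padded = f" {text.lower()} "   # pad so word-initial/final n-grams are captured
    counts = Counter(padded[i:i + n] for i in range(len(padded) - n + 1))
    return [g for g, _ in counts.most_common(top_k)]

def out_of_place(doc_profile, lang_profile):
    """Sum of rank differences; n-grams missing from the language profile get the max penalty."""
    ranks = {g: r for r, g in enumerate(lang_profile)}
    max_penalty = len(lang_profile)
    return sum(abs(r - ranks[g]) if g in ranks else max_penalty
               for r, g in enumerate(doc_profile))

def identify(text, lang_profiles):
    """Rank candidate languages by distance and return the closest one."""
    doc = ngram_profile(text)
    return min(lang_profiles, key=lambda lang: out_of_place(doc, lang_profiles[lang]))
```

For example, build `profiles = {"eng": ngram_profile("the quick brown fox jumps over the lazy dog and the cat sat on the mat"), "zul": ngram_profile("sawubona unjani ngiyabonga kakhulu ngiyaphila nawe")}`. With these toy profiles, `identify("the dog and the cat", profiles)` selects `"eng"`. Profiles built from tiny texts like these are noisy. Larger, cleaner training text yields more stable rankings, which relates to the abstract's point about data size selection.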

+

+

+

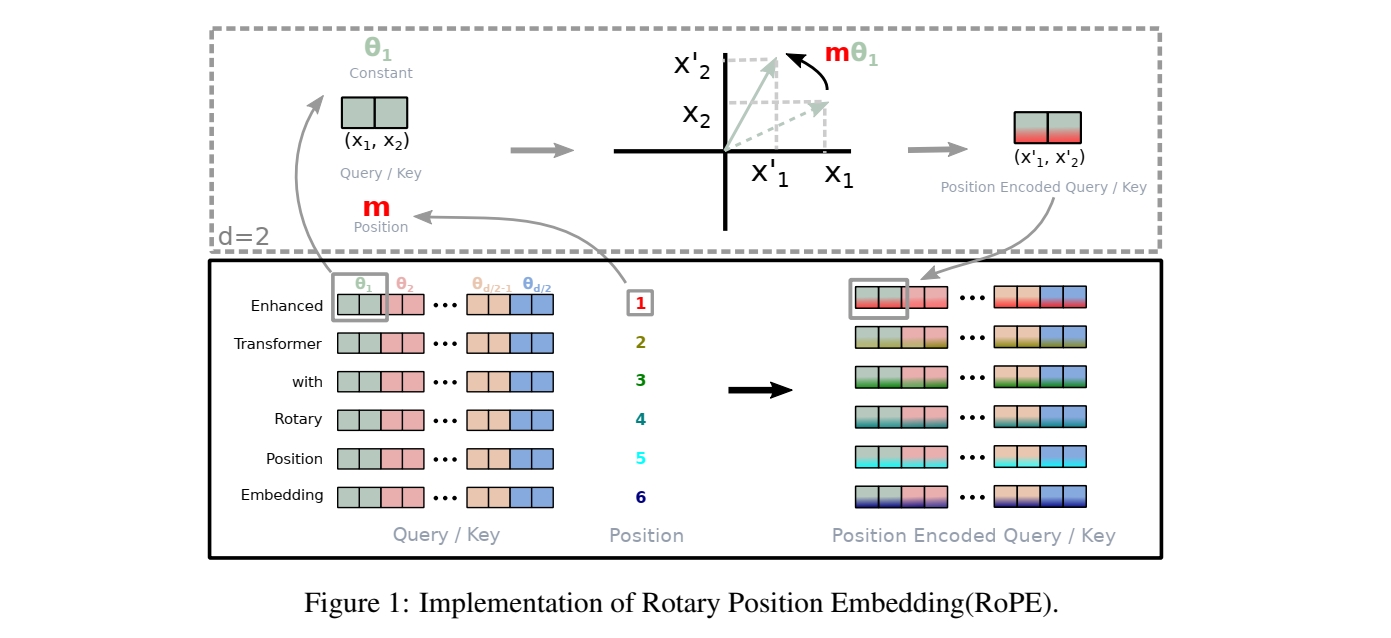

+ 40. 【2410.08703】On the token distance modeling ability of higher RoPE attention dimension

+ Link: https://arxiv.org/abs/2410.08703

+ Authors: Xiangyu Hong, Che Jiang, Biqing Qi, Fandong Meng, Mo Yu, Bowen Zhou, Jie Zhou

+ Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: Rotary position embedding, shown promising results, Rotary position, based on Rotary, position embedding

+ Comments:

+


+ Abstract:Length extrapolation algorithms based on Rotary position embedding (RoPE) have shown promising results in extending the context length of language models. However, how position embedding captures longer-range contextual information remains poorly understood. Based on the intuition that different dimensions correspond to different frequencies of change in RoPE encoding, we conducted a dimension-level analysis to investigate the correlation between a hidden dimension of an attention head and its contribution to capturing long-distance dependencies. Using our correlation metric, we identified a particular type of attention head, which we named Positional Heads, in various length-extrapolated models. These heads exhibit a strong focus on long-range information interaction and play a pivotal role in long input processing, as evidenced by our ablation. We further demonstrate the correlation between the efficiency of length extrapolation and the extension of the high-dimensional attention allocation of these heads. The identification of Positional Heads provides insights for future research in long-text comprehension.
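The abstract's intuition is that different RoPE dimensions rotate at different frequencies. The standard RoPE schedule makes this concrete: dimension pair i of a head of size d rotates by theta_i = base^(-2i/d) per token. This sketch assumes the common default base of 10000 from the original RoPE formulation; the length-extrapolated models studied may use other bases or rescaled schedules. The sketch computes per-pair frequencies and wavelengths. It also checks RoPE's relative-position property. It does not implement the paper's correlation metric or its Positional Head detection.

```python
import math

def rope_frequencies(head_dim, base=10000.0):
    """Angular frequency theta_i = base**(-2i/d) for each rotated pair i of one RoPE head."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

def wavelengths(head_dim, base=10000.0):
    """Number of token positions pair i needs to complete one full rotation: 2*pi / theta_i."""
    return [2 * math.pi / theta for theta in rope_frequencies(head_dim, base)]

def rotate_pair(x0, x1, pos, theta):
    """Rotate one 2-D feature pair by angle pos * theta, as RoPE does for queries and keys."""
    c, s = math.cos(pos * theta), math.sin(pos * theta)
    return x0 * c - x1 * s, x0 * s + x1 * c
```

Low-index pairs rotate quickly and mainly separate nearby tokens. Pairs near the end of the head rotate slowly: with d = 128 the last pair's wavelength is tens of thousands of tokens. This is why higher dimensions are associated with long-range information. Because both query and key are rotated, the dot product of a rotated query at position m and a rotated key at position n depends only on m - n.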

+

+

+

+ 41. 【2410.08698】SocialGaze: Improving the Integration of Human Social Norms in Large Language Models

+ Link: https://arxiv.org/abs/2410.08698

+ Authors: Anvesh Rao Vijjini, Rakesh R. Menon, Jiayi Fu, Shashank Srivastava, Snigdha Chaturvedi

+ Subjects: Computation and Language (cs.CL); Computers and Society (cs.CY)

+ Keywords: research has explored, explored enhancing, enhancing the reasoning, reasoning capabilities, capabilities of large

+ Comments:

+


+ Abstract:While much research has explored enhancing the reasoning capabilities of large language models (LLMs) in the last few years, there is a gap in understanding the alignment of these models with social values and norms. We introduce the task of judging social acceptance, which requires models to judge and rationalize the acceptability of people's actions in social situations. For example, is it socially acceptable for a neighbor to ask others in the community to keep their pets indoors at night? We find that LLMs' understanding of social acceptance is often misaligned with human consensus. To alleviate this, we introduce SocialGaze, a multi-step prompting framework in which a language model verbalizes a social situation from multiple perspectives before forming a judgment. Our experiments demonstrate that SocialGaze improves alignment with human judgments by up to 11 F1 points with the GPT-3.5 model. We also identify biases in how LLMs assign blame that correlate with features such as gender (males are significantly more likely to be judged unfairly) and age (LLMs are more aligned with humans for older narrators).

+

+

+

+ 42. 【2410.08696】AMPO: Automatic Multi-Branched Prompt Optimization

+ Link: https://arxiv.org/abs/2410.08696

+ Authors: Sheng Yang, Yurong Wu, Yan Gao, Zineng Zhou, Bin Benjamin Zhu, Xiaodi Sun, Jian-Guang Lou, Zhiming Ding, Anbang Hu, Yuan Fang, Yunsong Li, Junyan Chen, Linjun Yang

+ Subjects: Computation and Language (cs.CL)

+ Keywords: large language models, language models, important to enhance, enhance the performance, performance of large

+ Comments: 13 pages, 7 figures, 6 tables

+


+ Abstract:Prompt engineering is very important for enhancing the performance of large language models (LLMs). When dealing with complex issues, prompt engineers tend to distill multiple patterns from examples and inject relevant solutions to optimize the prompts, achieving satisfying results. However, existing automatic prompt optimization techniques are limited to producing single-flow instructions and struggle to handle diverse patterns. In this paper, we present AMPO, an automatic prompt optimization method that iteratively develops a multi-branched prompt using failure cases as feedback. Our goal is to explore a novel way of structuring prompts with multiple branches to better handle multiple patterns in complex tasks, for which we introduce three modules: Pattern Recognition, Branch Adjustment, and Branch Pruning. In experiments across five tasks, AMPO consistently achieves the best results. Additionally, our approach demonstrates significant optimization efficiency due to our adoption of a minimal search strategy.

+

+

+

+ 43. 【2410.08674】Guidelines for Fine-grained Sentence-level Arabic Readability Annotation

+ Link: https://arxiv.org/abs/2410.08674

+ Authors: Nizar Habash, Hanada Taha-Thomure, Khalid N. Elmadani, Zeina Zeino, Abdallah Abushmaes

+ Subjects: Computation and Language (cs.CL)

+ Keywords: Arabic Readability Evaluation, Readability Evaluation Corpus, Balanced Arabic Readability, Arabic language resources, language resources aligned

+ Comments: 16 pages, 3 figures

+


+ Abstract:This paper presents the foundational framework and initial findings of the Balanced Arabic Readability Evaluation Corpus (BAREC) project, designed to address the need for comprehensive Arabic language resources aligned with diverse readability levels. Inspired by the Taha/Arabi21 readability reference, BAREC aims to provide a standardized reference for assessing sentence-level Arabic text readability across 19 distinct levels, with targets ranging from kindergarten to postgraduate comprehension. Our ultimate goal with BAREC is to create a comprehensive and balanced corpus that represents a wide range of genres, topics, and regional variations through a multifaceted approach combining manual annotation with AI-driven tools. This paper focuses on our meticulous annotation guidelines, demonstrated through the analysis of 10,631 sentences/phrases (113,651 words). The average pairwise inter-annotator agreement, measured by Quadratic Weighted Kappa, is 79.9%, reflecting substantial agreement. We also report competitive results for benchmarking automatic readability assessment. We will make the BAREC corpus and guidelines openly accessible to support Arabic language research and education.
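BAREC reports inter-annotator agreement as Quadratic Weighted Kappa (QWK). QWK suits an ordinal scale like the 19 readability levels because a near-miss (level 7 vs 8) is penalized far less than a distant disagreement (level 2 vs 15). The following minimal sketch computes QWK for one annotator pair. It assumes levels are encoded as integers 0 to 18. The paper's 79.9% is an average over annotator pairs, and nothing here reproduces its data.

```python
def quadratic_weighted_kappa(a, b, num_levels):
    """Cohen's kappa with quadratic weights for two raters' integer labels in 0..num_levels-1 (num_levels >= 2)."""
    n = len(a)
    # observed[i][j] counts items that rater a labeled i and rater b labeled j
    observed = [[0.0] * num_levels for _ in range(num_levels)]
    for x, y in zip(a, b):
        observed[x][y] += 1
    # marginal label histograms of each rater
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(num_levels)) for j in range(num_levels)]
    num = den = 0.0
    for i in range(num_levels):
        for j in range(num_levels):
            w = (i - j) ** 2 / (num_levels - 1) ** 2   # quadratic disagreement weight
            expected = hist_a[i] * hist_b[j] / n        # count expected if raters were independent
            num += w * observed[i][j]
            den += w * expected
    if den == 0:
        return 1.0   # both raters used one identical level for every item
    return 1.0 - num / den
```

On a three-level toy example, `quadratic_weighted_kappa([0, 1, 2], [0, 2, 2], 3)` gives 0.8. Here the single one-step disagreement costs little. Normalizing the weights by `(num_levels - 1) ** 2` does not change the result, because it cancels in the ratio.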

+

+

+

+ 44. 【2410.08661】QEFT: Quantization for Efficient Fine-Tuning of LLMs

+ Link: https://arxiv.org/abs/2410.08661

+ Authors: Changhun Lee, Jun-gyu Jin, Younghyun Cho, Eunhyeok Park

+ Subjects: Computation and Language (cs.CL); Machine Learning (cs.LG)

+ Keywords: large language models, keeping inference efficient, highly important, rapid growth, large language

+ Comments: Accepted at Findings of EMNLP 2024

+


+ Abstract:With the rapid growth in the use of fine-tuning for large language models (LLMs), optimizing fine-tuning while keeping inference efficient has become highly important. However, this is a challenging task as it requires improvements in all aspects, including inference speed, fine-tuning speed, memory consumption, and, most importantly, model quality. Previous studies have attempted to achieve this by combining quantization with fine-tuning, but they have failed to enhance all four aspects simultaneously. In this study, we propose a new lightweight technique called Quantization for Efficient Fine-Tuning (QEFT). QEFT accelerates both inference and fine-tuning, is supported by robust theoretical foundations, offers high flexibility, and maintains good hardware compatibility. Our extensive experiments demonstrate that QEFT matches the quality and versatility of full-precision parameter-efficient fine-tuning, while using fewer resources. Our code is available at this https URL.
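The abstract does not describe QEFT's actual quantization scheme. The following sketch therefore shows only the generic idea that quantized fine-tuning methods build on: symmetric, per-row, round-to-nearest integer weight quantization with one floating-point scale per row. It is not QEFT. The bit width, per-row granularity, and function names are illustrative assumptions.

```python
def quantize_rows(weights, bits=4):
    """Symmetric per-row round-to-nearest quantization: returns integer codes and one scale per row."""
    qmax = 2 ** (bits - 1) - 1          # e.g. 7 for signed 4-bit codes
    codes, scales = [], []
    for row in weights:
        absmax = max(abs(v) for v in row)
        scale = absmax / qmax if absmax > 0 else 1.0   # avoid dividing by zero for all-zero rows
        # round to the nearest code and clamp to the symmetric range [-qmax, qmax]
        codes.append([max(-qmax, min(qmax, round(v / scale))) for v in row])
        scales.append(scale)
    return codes, scales

def dequantize_rows(codes, scales):
    """Map integer codes back to approximate floating-point weights."""
    return [[c * s for c in row] for row, s in zip(codes, scales)]
```

Storing 4-bit codes plus one scale per row is what saves memory. The reconstruction error of each weight is at most half of its row's scale. Methods in this area differ mainly in how they choose scales, which weights they keep in higher precision, and how fine-tuning interacts with the quantized weights.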

+

+

+

+ 45. 【2410.08642】More than Memes: A Multimodal Topic Modeling Approach to Conspiracy Theories on Telegram

+ Link: https://arxiv.org/abs/2410.08642

+ Authors: Elisabeth Steffen

+ Subjects: Social and Information Networks (cs.SI); Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV); Multimedia (cs.MM)

+ Keywords: German-language Telegram channels, related content online, conspiracy theories, German-language Telegram, traditionally focused

+ Comments: 11 pages, 11 figures

+
