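This blog post presents the latest papers retrieved daily from arXiv, grouped into categories such as Natural Language Processing, Information Retrieval, and Computer Vision.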

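Statistics

360 papers were updated today, including:

- Natural Language Processing: 47 papers
- Information Retrieval: 8 papers
- Computer Vision: 83 papers

Natural Language Processing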

1. 【2409.03757】Lexicon3D: Probing Visual Foundation Models for Complex 3D Scene Understanding

Link: https://arxiv.org/abs/2409.03757

Authors: Yunze Man, Shuhong Zheng, Zhipeng Bao, Martial Hebert, Liang-Yan Gui, Yu-Xiong Wang

Categories: Computer Vision and Pattern Recognition (cs.CV); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Machine Learning (cs.LG); Robotics (cs.RO)

Keywords: gained increasing attention, scene encoding strategies, encoding strategies playing, increasing attention, gained increasing

Comments: Project page: [this https URL](https://yunzeman.github.io/lexicon3d), GitHub: [this https URL](https://github.com/YunzeMan/Lexicon3D)

Abstract: Complex 3D scene understanding has gained increasing attention, with scene encoding strategies playing a crucial role in this success. However, the optimal scene encoding strategies for various scenarios remain unclear, particularly compared to their image-based counterparts. To address this issue, we present a comprehensive study that probes various visual encoding models for 3D scene understanding, identifying the strengths and limitations of each model across different scenarios. Our evaluation spans seven vision foundation encoders, including image-based, video-based, and 3D foundation models. We evaluate these models on four tasks: Vision-Language Scene Reasoning, Visual Grounding, Segmentation, and Registration, each focusing on different aspects of scene understanding. Our evaluations yield key findings: DINOv2 demonstrates superior performance, video models excel in object-level tasks, diffusion models benefit geometric tasks, and language-pretrained models show unexpected limitations in language-related tasks. These insights challenge some conventional understandings, provide novel perspectives on leveraging visual foundation models, and highlight the need for more flexible encoder selection in future vision-language and scene-understanding tasks.

2. 【2409.03753】WildVis: Open Source Visualizer for Million-Scale Chat Logs in the Wild

Link: https://arxiv.org/abs/2409.03753

Authors: Yuntian Deng, Wenting Zhao, Jack Hessel, Xiang Ren, Claire Cardie, Yejin Choi

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Human-Computer Interaction (cs.HC); Information Retrieval (cs.IR); Machine Learning (cs.LG)

Keywords: offers exciting opportunities, data offers exciting, study user-chatbot interactions, conversation data offers, real-world conversation data

Abstract: The increasing availability of real-world conversation data offers exciting opportunities for researchers to study user-chatbot interactions. However, the sheer volume of this data makes manually examining individual conversations impractical. To overcome this challenge, we introduce WildVis, an interactive tool that enables fast, versatile, and large-scale conversation analysis. WildVis provides search and visualization capabilities in the text and embedding spaces based on a list of criteria. To manage million-scale datasets, we implemented optimizations including search index construction, embedding precomputation and compression, and caching to ensure responsive user interactions within seconds. We demonstrate WildVis's utility through three case studies: facilitating chatbot misuse research, visualizing and comparing topic distributions across datasets, and characterizing user-specific conversation patterns. WildVis is open-source and designed to be extendable, supporting additional datasets and customized search and visualization functionalities.

3. 【2409.03752】Attention Heads of Large Language Models: A Survey

Link: https://arxiv.org/abs/2409.03752

Authors: Zifan Zheng, Yezhaohui Wang, Yuxin Huang, Shichao Song, Bo Tang, Feiyu Xiong, Zhiyu Li

Categories: Computation and Language (cs.CL)

Keywords: Large Language Models, Large Language, Language Models, advent of ChatGPT, black-box systems

Comments: 20 pages, 11 figures, 4 tables

Abstract: Since the advent of ChatGPT, Large Language Models (LLMs) have excelled in various tasks but remain largely black-box systems. Consequently, their development relies heavily on data-driven approaches, limiting performance enhancement through changes in internal architecture and reasoning pathways. As a result, many researchers have begun exploring the potential internal mechanisms of LLMs, aiming to identify the essence of their reasoning bottlenecks, with most studies focusing on attention heads. Our survey aims to shed light on the internal reasoning processes of LLMs by concentrating on the interpretability and underlying mechanisms of attention heads. We first distill the human thought process into a four-stage framework: Knowledge Recalling, In-Context Identification, Latent Reasoning, and Expression Preparation. Using this framework, we systematically review existing research to identify and categorize the functions of specific attention heads. Furthermore, we summarize the experimental methodologies used to discover these special heads, dividing them into two categories: Modeling-Free methods and Modeling-Required methods. Also, we outline relevant evaluation methods and benchmarks. Finally, we discuss the limitations of current research and propose several potential future directions. Our reference list is open-sourced at this https URL.

4. 【2409.03733】Planning In Natural Language Improves LLM Search For Code Generation

Link: https://arxiv.org/abs/2409.03733

Authors: Evan Wang, Federico Cassano, Catherine Wu, Yunfeng Bai, Will Song, Vaskar Nath, Ziwen Han, Sean Hendryx, Summer Yue, Hugh Zhang

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Keywords: scaling training compute, scaling inference compute, yielded analogous gains, training compute, compute has led

Abstract: While scaling training compute has led to remarkable improvements in large language models (LLMs), scaling inference compute has not yet yielded analogous gains. We hypothesize that a core missing component is a lack of diverse LLM outputs, leading to inefficient search due to models repeatedly sampling highly similar, yet incorrect generations. We empirically demonstrate that this lack of diversity can be mitigated by searching over candidate plans for solving a problem in natural language. Based on this insight, we propose PLANSEARCH, a novel search algorithm which shows strong results across HumanEval+, MBPP+, and LiveCodeBench (a contamination-free benchmark for competitive coding). PLANSEARCH generates a diverse set of observations about the problem and then uses these observations to construct plans for solving the problem. By searching over plans in natural language rather than directly over code solutions, PLANSEARCH explores a significantly more diverse range of potential solutions compared to baseline search methods. Using PLANSEARCH on top of Claude 3.5 Sonnet achieves a state-of-the-art pass@200 of 77.0% on LiveCodeBench, outperforming both the best score achieved without search (pass@1 = 41.4%) and using standard repeated sampling (pass@200 = 60.6%). Finally, we show that, across all models, search algorithms, and benchmarks analyzed, we can accurately predict performance gains due to search as a direct function of the diversity over generated ideas.

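Scores such as pass@1 and pass@200 are usually computed with the unbiased estimator introduced alongside the HumanEval benchmark. For each problem, draw n ≥ k generations and count the c that pass the tests. The estimator then gives the chance that at least one of k samples drawn from them is correct. The Python sketch below assumes this standard estimator, because the abstract does not say exactly how the authors computed their numbers. The per-problem counts in the usage lines are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n generations sampled, c of them correct."""
    if not 0 <= c <= n or k > n:
        raise ValueError("require 0 <= c <= n and k <= n")
    if n - c < k:
        # Any k-subset must include at least one correct generation.
        return 1.0
    # 1 - P(all k drawn generations are incorrect), sampling without replacement.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Benchmark-level score: average of the per-problem estimates."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical (n, c) counts for three problems.
results = [(200, 0), (200, 3), (200, 150)]
print(mean_pass_at_k(results, k=1))
print(mean_pass_at_k(results, k=200))
```

A problem solved by only 3 of 200 samples contributes 0.015 to pass@1 but counts fully toward pass@200. This is why repeated sampling or search can lift pass@200 far above pass@1.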

5. 【2409.03708】RAG based Question-Answering for Contextual Response Prediction System

Link: https://arxiv.org/abs/2409.03708

Authors: Sriram Veturi, Saurabh Vaichal, Nafis Irtiza Tripto, Reshma Lal Jagadheesh, Nian Yan

Categories: Computation and Language (cs.CL); Information Retrieval (cs.IR)

Keywords: Large Language Models, Natural Language Processing, Large Language, Language Models, Language Processing

Comments: Accepted at the 1st Workshop on GenAI and RAG Systems for Enterprise, CIKM'24. 6 pages

Abstract: Large Language Models (LLMs) have shown versatility in various Natural Language Processing (NLP) tasks, including their potential as effective question-answering systems. However, to provide precise and relevant information in response to specific customer queries in industry settings, LLMs require access to a comprehensive knowledge base to avoid hallucinations. Retrieval Augmented Generation (RAG) emerges as a promising technique to address this challenge. Yet, developing an accurate question-answering framework for real-world applications using RAG entails several challenges: 1) data availability issues, 2) evaluating the quality of generated content, and 3) the costly nature of human evaluation. In this paper, we introduce an end-to-end framework that employs LLMs with RAG capabilities for industry use cases. Given a customer query, the proposed system retrieves relevant knowledge documents and leverages them, along with previous chat history, to generate response suggestions for customer service agents in the contact centers of a major retail company. Through comprehensive automated and human evaluations, we show that this solution outperforms the current BERT-based algorithms in accuracy and relevance. Our findings suggest that RAG-based LLMs can be an excellent support to human customer service representatives by lightening their workload.

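The abstract describes the pipeline only at a high level. The system retrieves knowledge documents for a customer query, then passes them to an LLM together with the chat history.

The Python sketch below illustrates that flow under several assumptions:

- A toy bag-of-words cosine retriever stands in for whatever retriever the authors used.
- The prompt template is invented.
- `retrieve` and `build_prompt` are hypothetical names.
- The LLM call itself is omitted.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    # Lowercased token counts: a toy stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    # Rank knowledge documents by similarity to the query and keep the top k.
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(query: str, history: list[str], docs: list[str], k: int = 2) -> str:
    # Combine retrieved passages and prior chat turns into a single LLM prompt.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    turns = "\n".join(history)
    return (
        "Use only the knowledge below to suggest a reply for the agent.\n"
        f"Knowledge:\n{context}\n"
        f"Chat history:\n{turns}\n"
        f"Customer: {query}\n"
        "Suggested reply:"
    )


# Hypothetical knowledge base and conversation.
docs = [
    "Returns are accepted within 30 days with a receipt.",
    "Store hours are 9am to 9pm on weekdays.",
    "Gift cards cannot be exchanged for cash.",
]
history = ["Customer: Hi", "Agent: Hello, how can I help?"]
print(build_prompt("Can I return an item after 30 days?", history, docs, k=1))
```

A typical next step would be to replace `bag_of_words` with a dense embedding model and an approximate nearest-neighbor index. The ranking logic in `retrieve` would stay the same.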

6. 【2409.03707】A Different Level Text Protection Mechanism With Differential Privacy

Link: https://arxiv.org/abs/2409.03707

Authors: Qingwen Fu

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: BERT pre-training model, BERT pre-training, pre-training model, model and proves, proves the effectiveness

Abstract: The article introduces a method for extracting words of different degrees of importance based on the BERT pre-training model and proves the effectiveness of this method. The article also discusses the impact of maintaining the same perturbation results for words of different importance on the overall text utility. This method can be applied to long text protection.

7. 【2409.03701】LAST: Language Model Aware Speech Tokenization

Link: https://arxiv.org/abs/2409.03701

Authors: Arnon Turetzky, Yossi Adi

Categories: Computation and Language (cs.CL); Sound (cs.SD); Audio and Speech Processing (eess.AS)

Keywords: perform various tasks, Speech, Speech tokenization serves, spoken language modeling, tokenization serves

Abstract: Speech tokenization serves as the foundation of speech language models (LMs), enabling them to perform various tasks such as spoken language modeling, text-to-speech, speech-to-text, etc. Most speech tokenizers are trained independently of the LM training process, relying on separate acoustic models and quantization methods. Following such an approach may create a mismatch between the tokenization process and its usage afterward. In this study, we propose a novel approach to training a speech tokenizer by leveraging objectives from pre-trained textual LMs. We advocate for the integration of this objective into the process of learning discrete speech representations. Our aim is to transform features from a pre-trained speech model into a new feature space that enables better clustering for speech LMs. We empirically investigate the impact of various model design choices, including speech vocabulary size and text LM size. Our results demonstrate that the proposed tokenization method outperforms the evaluated baselines considering both spoken language modeling and speech-to-text. More importantly, unlike prior work, the proposed method allows the utilization of a single pre-trained LM for processing both speech and text inputs, setting it apart from conventional tokenization approaches.

8. 【2409.03668】A Fused Large Language Model for Predicting Startup Success

Link: https://arxiv.org/abs/2409.03668

Authors: Abdurahman Maarouf, Stefan Feuerriegel, Nicolas Pröllochs

Categories: Machine Learning (cs.LG); Computation and Language (cs.CL)

Keywords: continuously seeking profitable, predict startup success, continuously seeking, startup success, startup

Abstract: Investors are continuously seeking profitable investment opportunities in startups and, hence, for effective decision-making, need to predict a startup's probability of success. Nowadays, investors can use not only various fundamental information about a startup (e.g., the age of the startup, the number of founders, and the business sector) but also textual descriptions of a startup's innovation and business model, which are widely available through online venture capital (VC) platforms such as Crunchbase. To support the decision-making of investors, we develop a machine learning approach with the aim of locating successful startups on VC platforms. Specifically, we develop, train, and evaluate a tailored, fused large language model to predict startup success. Thereby, we assess to what extent self-descriptions on VC platforms are predictive of startup success. Using 20,172 online profiles from Crunchbase, we find that our fused large language model can predict startup success, with textual self-descriptions being responsible for a significant part of the predictive power. Our work provides a decision support tool for investors to find profitable investment opportunities.

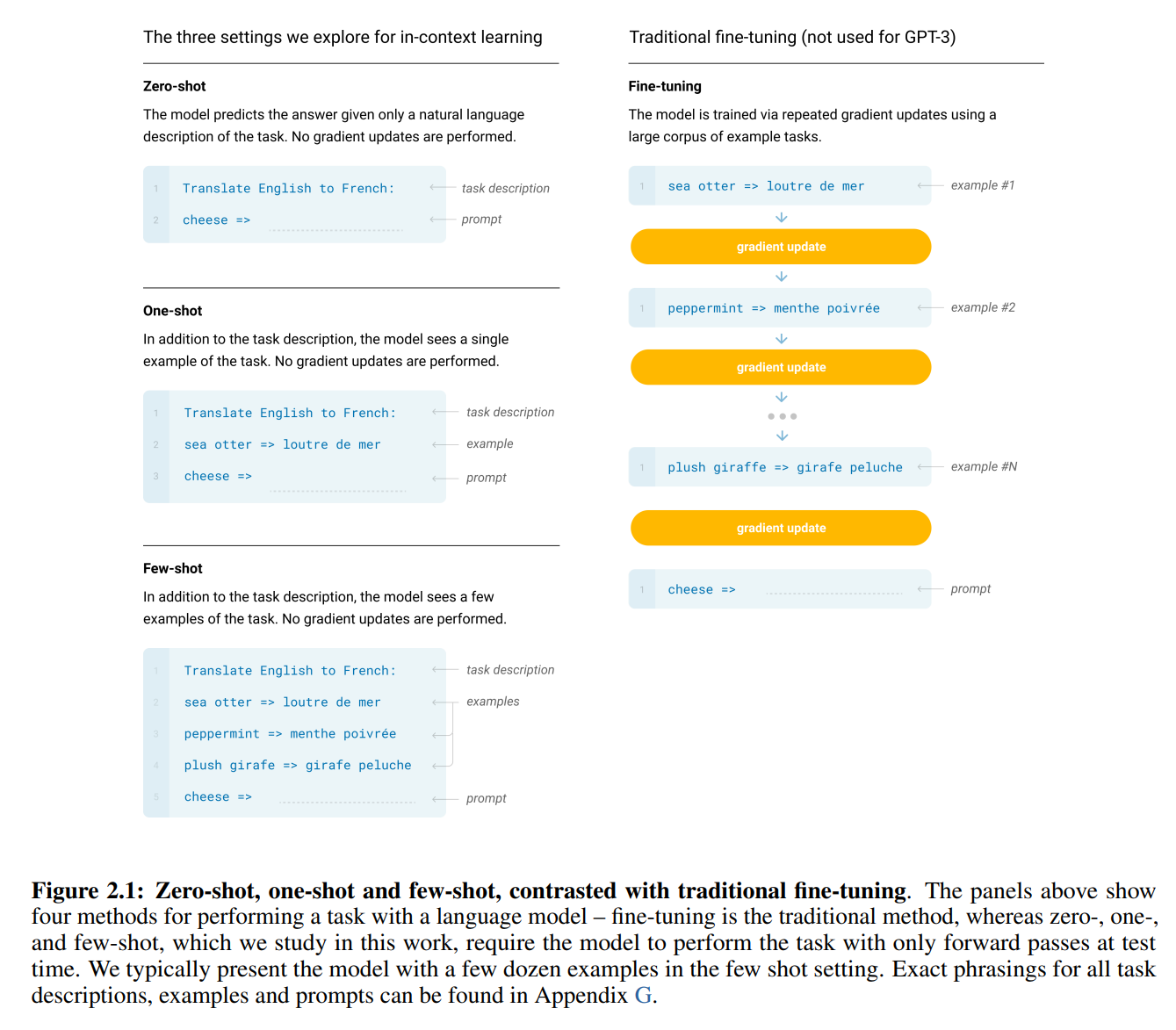

9. 【2409.03662】The representation landscape of few-shot learning and fine-tuning in large language models

Link: https://arxiv.org/abs/2409.03662

Authors: Diego Doimo, Alessandro Serra, Alessio Ansuini, Alberto Cazzaniga

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Keywords: In-context learning, modern large language, supervised fine-tuning, modern large, large language models

Abstract: In-context learning (ICL) and supervised fine-tuning (SFT) are two common strategies for improving the performance of modern large language models (LLMs) on specific tasks. Despite their different natures, these strategies often lead to comparable performance gains. However, little is known about whether they induce similar representations inside LLMs. We approach this problem by analyzing the probability landscape of their hidden representations in the two cases. More specifically, we compare how LLMs solve the same question-answering task, finding that ICL and SFT create very different internal structures, in both cases undergoing a sharp transition in the middle of the network. In the first half of the network, ICL shapes interpretable representations hierarchically organized according to their semantic content. In contrast, the probability landscape obtained with SFT is fuzzier and semantically mixed. In the second half of the model, the fine-tuned representations develop probability modes that better encode the identity of answers, while the landscape of ICL representations is characterized by less defined peaks. Our approach reveals the diverse computational strategies developed inside LLMs to solve the same task across different conditions, allowing us to make a step towards designing optimal methods to extract information from language models.

10. 【2409.03659】LLM-based multi-agent poetry generation in non-cooperative environments

Link: https://arxiv.org/abs/2409.03659

Authors: Ran Zhang, Steffen Eger

Categories: Computation and Language (cs.CL)

Keywords: training process differs, process differs greatly, poetry generation, large language models, generated poetry lacks

Comments: Preprint

Abstract: Despite substantial progress of large language models (LLMs) for automatic poetry generation, the generated poetry lacks diversity while the training process differs greatly from human learning. Under the rationale that the learning process of the poetry generation systems should be more human-like and their output more diverse and novel, we introduce a framework based on social learning where we emphasize non-cooperative interactions besides cooperative interactions to encourage diversity. Our experiments are the first attempt at LLM-based multi-agent systems in non-cooperative environments for poetry generation employing both TRAINING-BASED agents (GPT-2) and PROMPTING-BASED agents (GPT-3 and GPT-4). Our evaluation based on 96k generated poems shows that our framework benefits the poetry generation process for TRAINING-BASED agents resulting in 1) a 3.0-3.7 percentage point (pp) increase in diversity and a 5.6-11.3 pp increase in novelty according to distinct and novel n-grams. The generated poetry from TRAINING-BASED agents also exhibits group divergence in terms of lexicons, styles and semantics. PROMPTING-BASED agents in our framework also benefit from non-cooperative environments and a more diverse ensemble of models with non-homogeneous agents has the potential to further enhance diversity, with an increase of 7.0-17.5 pp according to our experiments. However, PROMPTING-BASED agents show a decrease in lexical diversity over time and do not exhibit the group-based divergence intended in the social network. Our paper argues for a paradigm shift in creative tasks such as automatic poetry generation to include social learning processes (via LLM-based agent modeling) similar to human interaction.

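The diversity and novelty gains above are measured with distinct and novel n-grams, and the usual definitions are as follows:

- Distinct-n is the number of unique n-grams divided by the total number of n-grams across a set of outputs.
- Novelty can be measured as the share of generated n-grams that never appear in a reference corpus, such as the training poems.

The Python sketch below assumes whitespace tokenization and these textbook definitions. The abstract does not give the paper's exact tokenization or reference corpus, and the example poems are made up.

```python
def ngrams(text: str, n: int) -> list[tuple[str, ...]]:
    """Whitespace-tokenized n-grams of one text (assumed tokenization)."""
    tokens = text.split()
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def distinct_n(texts: list[str], n: int = 2) -> float:
    """Unique n-grams / total n-grams, pooled over all generated texts."""
    grams = [g for t in texts for g in ngrams(t, n)]
    return len(set(grams)) / len(grams) if grams else 0.0


def novel_n(texts: list[str], reference: list[str], n: int = 2) -> float:
    """Share of generated n-grams that never occur in the reference corpus."""
    seen = {g for doc in reference for g in ngrams(doc, n)}
    grams = [g for t in texts for g in ngrams(t, n)]
    return sum(g not in seen for g in grams) / len(grams) if grams else 0.0


# Hypothetical generated poems and training corpus.
poems = ["the moon sings softly", "the moon sings again"]
training = ["the moon sings softly tonight"]
print(distinct_n(poems), novel_n(poems, training))
```

A gain reported in percentage points is a difference between two such ratios, expressed in percent. For example, moving from 0.600 to 0.630 is a 3.0 pp increase.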

11. 【2409.03650】On the Limited Generalization Capability of the Implicit Reward Model Induced by Direct Preference Optimization

Link: https://arxiv.org/abs/2409.03650

Authors: Yong Lin, Skyler Seto, Maartje ter Hoeve, Katherine Metcalf, Barry-John Theobald, Xuan Wang, Yizhe Zhang, Chen Huang, Tong Zhang

Categories: Machine Learning (cs.LG); Computation and Language (cs.CL)

Keywords: Human Feedback, Reinforcement Learning, aligning language models, Direct Preference Optimization, human preferences

Comments: 12 pages, 8 tables, 2 figures

Abstract: Reinforcement Learning from Human Feedback (RLHF) is an effective approach for aligning language models to human preferences. Central to RLHF is learning a reward function for scoring human preferences. Two main approaches for learning a reward model are 1) training an EXplicit Reward Model (EXRM) as in RLHF, and 2) using an implicit reward learned from preference data through methods such as Direct Preference Optimization (DPO). Prior work has shown that the implicit reward model of DPO (denoted as DPORM) can approximate an EXRM in the limit. DPORM's effectiveness directly implies the optimality of the learned policy, and also has practical implications for LLM alignment methods including iterative DPO. However, it is unclear how well DPORM empirically matches the performance of EXRM. This work studies the accuracy at distinguishing preferred and rejected answers for both DPORM and EXRM. Our findings indicate that even though DPORM fits the training dataset comparably, it generalizes less effectively than EXRM, especially when the validation datasets contain distribution shifts. Across five out-of-distribution settings, DPORM has a mean drop in accuracy of 3% and a maximum drop of 7%. These findings highlight that DPORM has limited generalization ability and substantiate the integration of an explicit reward model in iterative DPO approaches.

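DPO's implicit reward for a response y to a prompt x is β · log(π_θ(y|x) / π_ref(y|x)), plus a prompt-only term that cancels when two responses to the same prompt are compared. The accuracy studied above is then the fraction of preference pairs in which the preferred answer receives the strictly higher reward.

The Python sketch below assumes the summed sequence log-probabilities under the policy and reference models have already been computed. `PreferencePair`, its field names, and β = 0.1 are illustrative choices, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class PreferencePair:
    # Summed token log-probabilities of each response (hypothetical precomputed values).
    policy_chosen: float
    policy_rejected: float
    ref_chosen: float
    ref_rejected: float


def implicit_reward(policy_logp: float, ref_logp: float, beta: float = 0.1) -> float:
    # DPO implicit reward, dropping the beta * log Z(x) term that cancels within a pair.
    return beta * (policy_logp - ref_logp)


def preference_accuracy(pairs: list[PreferencePair], beta: float = 0.1) -> float:
    # Fraction of pairs where the preferred answer gets the strictly higher reward.
    if not pairs:
        return 0.0
    correct = sum(
        implicit_reward(p.policy_chosen, p.ref_chosen, beta)
        > implicit_reward(p.policy_rejected, p.ref_rejected, beta)
        for p in pairs
    )
    return correct / len(pairs)


pairs = [
    PreferencePair(policy_chosen=-10.0, policy_rejected=-12.0, ref_chosen=-11.0, ref_rejected=-11.0),
    PreferencePair(policy_chosen=-20.0, policy_rejected=-15.0, ref_chosen=-18.0, ref_rejected=-18.0),
]
print(preference_accuracy(pairs))
```

Any β > 0 rescales both rewards equally, so it does not change this accuracy. An explicit reward model (EXRM) would be scored the same way, by comparing its two reward outputs directly.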

12. 【2409.03643】CDM: A Reliable Metric for Fair and Accurate Formula Recognition Evaluation

Link: https://arxiv.org/abs/2409.03643

Authors: Bin Wang, Fan Wu, Linke Ouyang, Zhuangcheng Gu, Rui Zhang, Renqiu Xia, Bo Zhang, Conghui He

Categories: Computer Vision and Pattern Recognition (cs.CV); Computation and Language (cs.CL)

Keywords: presents significant challenges, significant challenges due, recognition presents significant, Formula recognition presents, Formula recognition

Comments: Project Website: [this https URL](https://github.com/opendatalab/UniMERNet/tree/main/cdm)

Abstract: Formula recognition presents significant challenges due to the complicated structure and varied notation of mathematical expressions. Despite continuous advancements in formula recognition models, the evaluation metrics employed by these models, such as BLEU and Edit Distance, still exhibit notable limitations. They overlook the fact that the same formula has diverse representations and is highly sensitive to the distribution of training data, thereby causing unfairness in formula recognition evaluation. To this end, we propose a Character Detection Matching (CDM) metric, ensuring the evaluation objectivity by designing an image-level rather than LaTeX-level metric score. Specifically, CDM renders both the model-predicted LaTeX and the ground-truth LaTeX formulas into image-formatted formulas, then employs visual feature extraction and localization techniques for precise character-level matching, incorporating spatial position information. Such a spatially-aware and character-matching method offers a more accurate and equitable evaluation compared with previous BLEU and Edit Distance metrics that rely solely on text-based character matching. Experimentally, we evaluated various formula recognition models using CDM, BLEU, and ExpRate metrics. Their results demonstrate that CDM aligns more closely with human evaluation standards and provides a fairer comparison across different models by eliminating discrepancies caused by diverse formula representations.

13. 【2409.03621】Attend First, Consolidate Later: On the Importance of Attention in Different LLM Layers

Link: https://arxiv.org/abs/2409.03621

Authors: Amit Ben Artzy, Roy Schwartz

Categories: Computation and Language (cs.CL)

Keywords: serves two purposes, attention mechanism, mechanism of future, layer serves, current token

Abstract: In decoder-based LLMs, the representation of a given layer serves two purposes: as input to the next layer during the computation of the current token; and as input to the attention mechanism of future tokens. In this work, we show that the importance of the latter role might be overestimated. To show that, we start by manipulating the representations of previous tokens; e.g. by replacing the hidden states at some layer k with random vectors. Our experiments with four LLMs and four tasks show that this operation often leads to small to negligible drop in performance. Importantly, this happens if the manipulation occurs in the top part of the model, i.e., when k is in the final 30-50% of the layers. In contrast, doing the same manipulation in earlier layers might lead to chance level performance. We continue by switching the hidden state of certain tokens with hidden states of other tokens from another prompt; e.g., replacing the word "Italy" with "France" in "What is the capital of Italy?". We find that when applying this switch in the top 1/3 of the model, the model ignores it (answering "Rome"). However, if we apply it earlier, the model conforms to the switch ("Paris"). Our results hint at a two stage process in transformer-based LLMs: the first part gathers input from previous tokens, while the second mainly processes that information internally.

14. 【2409.03563】100 instances is all you need: predicting the success of a new LLM on unseen data by testing on a few instances

Link: https://arxiv.org/abs/2409.03563

Authors: Lorenzo Pacchiardi, Lucy G. Cheke, José Hernández-Orallo

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

Keywords: individual task instances, task instances, LLM, performance, instances

Comments: Presented at the 2024 KDD workshop on Evaluation and Trustworthiness of Generative AI Models

Abstract: Predicting the performance of LLMs on individual task instances is essential to ensure their reliability in high-stakes applications. To do so, one possibility is to evaluate the considered LLM on a set of task instances and train an assessor to predict its performance based on features of the instances. However, this approach requires evaluating each new LLM on a sufficiently large set of task instances to train an assessor specific to it. In this work, we leverage the evaluation results of previously tested LLMs to reduce the number of evaluations required to predict the performance of a new LLM. In practice, we propose to test the new LLM on a small set of reference instances and train a generic assessor which predicts the performance of the LLM on an instance based on the performance of the former on the reference set and features of the instance of interest. We conduct empirical studies on HELM-Lite and KindsOfReasoning, a collection of existing reasoning datasets that we introduce, where we evaluate all instruction-fine-tuned OpenAI models up to the January 2024 version of GPT-4. When predicting performance on instances with the same distribution as those used to train the generic assessor, we find this achieves performance comparable to the LLM-specific assessors trained on the full set of instances. Additionally, we find that randomly selecting the reference instances performs as well as some advanced selection methods we tested. For out-of-distribution instances, however, no clear winner emerges and the overall performance is worse, suggesting that the inherent predictability of LLMs is low.

15. 【2409.03512】From MOOC to MAIC: Reshaping Online Teaching and Learning through LLM-driven Agents

Link: https://arxiv.org/abs/2409.03512

Authors: Jifan Yu, Zheyuan Zhang, Daniel Zhang-li, Shangqing Tu, Zhanxin Hao, Rui Miao Li, Haoxuan Li, Yuanchun Wang, Hanming Li, Linlu Gong, Jie Cao, Jiayin Lin, Jinchang Zhou, Fei Qin, Haohua Wang, Jianxiao Jiang, Lijun Deng, Yisi Zhan, Chaojun Xiao, Xusheng Dai, Xuan Yan, Nianyi Lin, Nan Zhang, Ruixin Ni, Yang Dang, Lei Hou, Yu Zhang, Xu Han, Manli Li, Juanzi Li, Zhiyuan Liu, Huiqin Liu, Maosong Sun

Categories: Computers and Society (cs.CY); Computation and Language (cs.CL)

Keywords: sparked extensive discussion, widespread adoption, uploaded to accessible, accessible and shared, scaling the dissemination

Abstract: Since the first instances of online education, where courses were uploaded to accessible and shared online platforms, this form of scaling the dissemination of human knowledge to reach a broader audience has sparked extensive discussion and widespread adoption. Recognizing that personalized learning still holds significant potential for improvement, new AI technologies have been continuously integrated into this learning format, resulting in a variety of educational AI applications such as educational recommendation and intelligent tutoring. The emergence of intelligence in large language models (LLMs) has allowed for these educational enhancements to be built upon a unified foundational model, enabling deeper integration. In this context, we propose MAIC (Massive AI-empowered Course), a new form of online education that leverages LLM-driven multi-agent systems to construct an AI-augmented classroom, balancing scalability with adaptivity. Beyond exploring the conceptual framework and technical innovations, we conduct preliminary experiments at Tsinghua University, one of China's leading universities. Drawing from over 100,000 learning records of more than 500 students, we obtain a series of valuable observations and initial analyses. This project will continue to evolve, ultimately aiming to establish a comprehensive open platform that supports and unifies research, technology, and applications in exploring the possibilities of online education in the era of large model AI. We envision this platform as a collaborative hub, bringing together educators, researchers, and innovators to collectively explore the future of AI-driven online education.

16. 【2409.03454】How Much Data is Enough Data? Fine-Tuning Large Language Models for In-House Translation: Performance Evaluation Across Multiple Dataset Sizes

Link: https://arxiv.org/abs/2409.03454

Authors: Inacio Vieira, Will Allred, Seamus Lankford, Sheila Castilho Monteiro De Sousa, Andy Way

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: Decoder-only LLMs, generate high-quality translations, shown impressive performance, shown impressive, ability to learn

Abstract: Decoder-only LLMs have shown impressive performance in MT due to their ability to learn from extensive datasets and generate high-quality translations. However, LLMs often struggle with the nuances and style required for organisation-specific translation. In this study, we explore the effectiveness of fine-tuning Large Language Models (LLMs), particularly Llama 3 8B Instruct, leveraging translation memories (TMs), as a valuable resource to enhance accuracy and efficiency. We investigate the impact of fine-tuning the Llama 3 model using TMs from a specific organisation in the software sector. Our experiments cover five translation directions across languages of varying resource levels (English to Brazilian Portuguese, Czech, German, Finnish, and Korean). We analyse diverse sizes of training datasets (1k to 207k segments) to evaluate their influence on translation quality. We fine-tune separate models for each training set and evaluate their performance based on automatic metrics, BLEU, chrF++, TER, and COMET. Our findings reveal improvement in translation performance with larger datasets across all metrics. On average, BLEU and COMET scores increase by 13 and 25 points, respectively, on the largest training set against the baseline model. Notably, there is a performance deterioration in comparison with the baseline model when fine-tuning on only 1k and 2k examples; however, we observe a substantial improvement as the training dataset size increases. The study highlights the potential of integrating TMs with LLMs to create bespoke translation models tailored to the specific needs of businesses, thus enhancing translation quality and reducing turn-around times. This approach offers a valuable insight for organisations seeking to leverage TMs and LLMs for optimal translation outcomes, especially in narrower domains.

17. 【2409.03444】Fine-tuning large language models for domain adaptation: Exploration of training strategies, scaling, model merging and synergistic capabilities

Link: https://arxiv.org/abs/2409.03444

Authors: Wei Lu, Rachel K. Luu, Markus J. Buehler

Categories: Computation and Language (cs.CL); Materials Science (cond-mat.mtrl-sci); Artificial Intelligence (cs.AI)

Keywords: Large Language Models, Large Language, Direct Preference Optimization, Ratio Preference Optimization, Odds Ratio Preference

Abstract: The advancement of Large Language Models (LLMs) for domain applications in fields such as materials science and engineering depends on the development of fine-tuning strategies that adapt models for specialized, technical capabilities. In this work, we explore the effects of Continued Pretraining (CPT), Supervised Fine-Tuning (SFT), and various preference-based optimization approaches, including Direct Preference Optimization (DPO) and Odds Ratio Preference Optimization (ORPO), on fine-tuned LLM performance. Our analysis shows how these strategies influence model outcomes and reveals that the merging of multiple fine-tuned models can lead to the emergence of capabilities that surpass the individual contributions of the parent models. We find that model merging leads to new functionalities that neither parent model could achieve alone, leading to improved performance in domain-specific assessments. Experiments with different model architectures are presented, including Llama 3.1 8B and Mistral 7B models, where similar behaviors are observed. Exploring whether the results hold also for much smaller models, we use a tiny LLM with 1.7 billion parameters and show that very small LLMs do not necessarily feature emergent capabilities under model merging, suggesting that model scaling may be a key component. In open-ended yet consistent chat conversations between a human and AI models, our assessment reveals detailed insights into how different model variants perform and shows that the smallest model achieves a high intelligence score across key criteria including reasoning depth, creativity, clarity, and quantitative precision. Other experiments include the development of image generation prompts based on disparate biological material design concepts, to create new microstructures, architectural concepts, and urban design based on biological materials-inspired construction principles.

+

+

+

+ 18. 【2409.03440】Rx Strategist: Prescription Verification using LLM Agents System

+ 链接:https://arxiv.org/abs/2409.03440

+ 作者:Phuc Phan Van,Dat Nguyen Minh,An Dinh Ngoc,Huy Phan Thanh

+ 类目:Computation and Language (cs.CL)

+ 关键词:Large Language Models, protect patient safety, pharmaceutical complexity demands, complexity demands strict, modern pharmaceutical complexity

+ 备注: 17 Pages, 6 Figures, Under Review

+

+ 点击查看摘要

+ Abstract:To protect patient safety, modern pharmaceutical complexity demands strict prescription verification. We offer a new approach, Rx Strategist, that makes use of knowledge graphs and different search strategies to enhance the power of Large Language Models (LLMs) inside an agentic framework. This multifaceted technique allows for a multi-stage LLM pipeline and reliable information retrieval from a custom-built active ingredient database. Different facets of prescription verification, such as indication, dose, and possible drug interactions, are covered in each stage of the pipeline. We alleviate the drawbacks of monolithic LLM techniques by spreading reasoning over these stages, improving correctness and reliability while reducing memory demands. Our findings demonstrate that Rx Strategist surpasses many current LLMs, achieving performance comparable to that of a highly experienced clinical pharmacist. In the complicated world of modern medications, this combination of LLMs with organized knowledge and sophisticated search methods presents a viable avenue for reducing prescription errors and enhancing patient outcomes.

+

+

+

+ 19. 【2409.03381】CogniDual Framework: Self-Training Large Language Models within a Dual-System Theoretical Framework for Improving Cognitive Tasks

+ 链接:https://arxiv.org/abs/2409.03381

+ 作者:Yongxin Deng(1),Xihe Qiu(1),Xiaoyu Tan(2),Chao Qu(2),Jing Pan(3),Yuan Cheng(3),Yinghui Xu(4),Wei Chu(2) ((1) School of Electronic and Electrical Engineering, Shanghai University of Engineering Science, Shanghai, China, (2) INF Technology (Shanghai) Co., Ltd., Shanghai, China, (3) School of Art, Design and Architecture, Monash University, Melbourne, Australia, (4) Artificial Intelligence Innovation and Incubation Institute, Fudan University, Shanghai, China)

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:psychology investigates perception, investigates perception, Cognitive psychology investigates, rational System, System

+ 备注:

+

+ 点击查看摘要

+ Abstract:Cognitive psychology investigates perception, attention, memory, language, problem-solving, decision-making, and reasoning. Kahneman's dual-system theory elucidates the human decision-making process, distinguishing between the rapid, intuitive System 1 and the deliberative, rational System 2. Recent advancements have positioned large language models (LLMs) as formidable tools nearing human-level proficiency in various cognitive tasks. Nonetheless, the presence of a dual-system framework analogous to human cognition in LLMs remains unexplored. This study introduces the CogniDual Framework for LLMs (CFLLMs), designed to assess whether LLMs can, through self-training, evolve from deliberate deduction to intuitive responses, thereby emulating the human process of acquiring and mastering new information. Our findings reveal the cognitive mechanisms behind LLMs' response generation, enhancing our understanding of their capabilities in cognitive psychology. Practically, self-trained models can provide faster responses to certain queries, reducing computational demands during inference.

+

+

+

+ 20. 【2409.03375】Leveraging Large Language Models through Natural Language Processing to provide interpretable Machine Learning predictions of mental deterioration in real time

+ 链接:https://arxiv.org/abs/2409.03375

+ 作者:Francisco de Arriba-Pérez,Silvia García-Méndez

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

+ 关键词:million people worldwide, Based on official, million people, natural language analysis, official estimates

+ 备注:

+

+ 点击查看摘要

+ Abstract:Based on official estimates, 50 million people worldwide are affected by dementia, and this number increases by 10 million new patients every year. Without a cure, clinical prognostication and early intervention represent the most effective ways to delay its progression. To this end, Artificial Intelligence and computational linguistics can be exploited for natural language analysis, personalized assessment, monitoring, and treatment. However, traditional approaches lack sufficient semantic knowledge management and explainability capabilities. Moreover, the use of Large Language Models (LLMs) for cognitive decline diagnosis is still scarce, even though these models represent the most advanced way for clinical-patient communication using intelligent systems. Consequently, we leverage an LLM using the latest Natural Language Processing (NLP) techniques in a chatbot solution to provide interpretable Machine Learning prediction of cognitive decline in real time. Linguistic-conceptual features are exploited for appropriate natural language analysis. Through explainability, we aim to fight potential biases of the models and improve their potential to help clinical workers in their diagnosis decisions. In more detail, the proposed pipeline is composed of (i) data extraction employing NLP-based prompt engineering; (ii) stream-based data processing including feature engineering, analysis, and selection; (iii) real-time classification; and (iv) an explainability dashboard that provides visual and natural language descriptions of the prediction outcome. Classification results exceed 80% in all evaluation metrics, with a recall of about 85% for the mental deterioration class. To sum up, this work contributes an affordable, flexible, non-invasive, personalized diagnostic system.

+

+

+

+ 21. 【2409.03363】Con-ReCall: Detecting Pre-training Data in LLMs via Contrastive Decoding

+ 链接:https://arxiv.org/abs/2409.03363

+ 作者:Cheng Wang,Yiwei Wang,Bryan Hooi,Yujun Cai,Nanyun Peng,Kai-Wei Chang

+ 类目:Computation and Language (cs.CL)

+ 关键词:large language models, security risks, large language, language models, models is key

+ 备注:

+

+ 点击查看摘要

+ Abstract:The training data in large language models is key to their success, but it also presents privacy and security risks, as it may contain sensitive information. Detecting pre-training data is crucial for mitigating these concerns. Existing methods typically analyze target text in isolation or solely with non-member contexts, overlooking potential insights from simultaneously considering both member and non-member contexts. While previous work suggested that member contexts provide little information due to the minor distributional shift they induce, our analysis reveals that these subtle shifts can be effectively leveraged when contrasted with non-member contexts. In this paper, we propose Con-ReCall, a novel approach that leverages the asymmetric distributional shifts induced by member and non-member contexts through contrastive decoding, amplifying subtle differences to enhance membership inference. Extensive empirical evaluations demonstrate that Con-ReCall achieves state-of-the-art performance on the WikiMIA benchmark and is robust against various text manipulation techniques.
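The sketch below shows roughly how a contrastive membership score could be assembled from per-token log-probabilities. Two things are assumptions made for illustration:

- the functional form, which is the non-member-conditioned log-likelihood minus `gamma` times the member-conditioned log-likelihood, normalised by the unconditioned log-likelihood;
- the value of `gamma`.

Consult the paper for the exact score and for how its threshold is chosen. In practice the log-probabilities would come from scoring the target text with the LLM under each prefix; here they are made-up numbers.

```python
from typing import Sequence


def avg_log_likelihood(token_logprobs: Sequence[float]) -> float:
    """Mean per-token log-probability of the target text under some prefix."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return sum(token_logprobs) / len(token_logprobs)


def contrastive_membership_score(
    ll_plain: float,          # LL(x) with no prefix
    ll_nonmember_ctx: float,  # LL(x | non-member prefix)
    ll_member_ctx: float,     # LL(x | member prefix)
    gamma: float = 0.5,       # hypothetical contrast strength
) -> float:
    """Hypothetical Con-ReCall-style score contrasting the two context shifts."""
    if ll_plain == 0.0:
        raise ValueError("unconditioned log-likelihood must be non-zero")
    return (ll_nonmember_ctx - gamma * ll_member_ctx) / ll_plain


# Made-up per-token log-probabilities for one candidate text.
plain = avg_log_likelihood([-1.0, -3.0])            # -2.0
with_nonmember = avg_log_likelihood([-2.5, -3.5])   # -3.0
with_member = avg_log_likelihood([-0.5, -1.5])      # -1.0
print(contrastive_membership_score(plain, with_nonmember, with_member))  # 1.25
```

The resulting score would then be compared with a threshold. Which side of the threshold indicates a member depends on the exact definition, so the sketch does not assert it.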

+

+

+

+ 22. 【2409.03346】Sketch: A Toolkit for Streamlining LLM Operations

+ 链接:https://arxiv.org/abs/2409.03346

+ 作者:Xin Jiang,Xiang Li,Wenjia Ma,Xuezhi Fang,Yiqun Yao,Naitong Yu,Xuying Meng,Peng Han,Jing Li,Aixin Sun,Yequan Wang

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:Large language models, achieved remarkable success, represented by GPT, Large language, GPT family

+ 备注:

+

+ 点击查看摘要

+ Abstract:Large language models (LLMs) represented by the GPT family have achieved remarkable success. The characteristics of LLMs lie in their ability to accommodate a wide range of tasks through a generative approach. However, the flexibility of their output format poses challenges in controlling and harnessing the model's outputs, thereby constraining the application of LLMs in various domains. In this work, we present Sketch, an innovative toolkit designed to streamline LLM operations across diverse fields. Sketch comprises the following components: (1) a suite of task description schemas and prompt templates encompassing various NLP tasks; (2) a user-friendly, interactive process for building structured output LLM services tailored to various NLP tasks; (3) an open-source dataset for output format control, along with tools for dataset construction; and (4) an open-source model based on LLaMA3-8B-Instruct that adeptly comprehends and adheres to output formatting instructions. We anticipate this initiative to bring considerable convenience to LLM users, achieving the goal of "plug-and-play" for various applications. The components of Sketch will be progressively open-sourced at this https URL.

+

+

+

+ 23. 【2409.03327】Normal forms in Virus Machines

+ 链接:https://arxiv.org/abs/2409.03327

+ 作者:A. Ramírez-de-Arellano,F. G. C. Cabarle,D. Orellana-Martín,M. J. Pérez-Jiménez

+ 类目:Computation and Language (cs.CL); Formal Languages and Automata Theory (cs.FL)

+ 关键词:study the computational, virus machines, normal forms, VMs, present work

+ 备注:

+

+ 点击查看摘要

+ Abstract:In the present work, we further study the computational power of virus machines (VMs in short). VMs provide a computing paradigm inspired by the transmission and replication networks of viruses. VMs consist of process units (called hosts) structured by a directed graph whose arcs are called channels and an instruction graph that controls the transmissions of virus objects among hosts. The present work complements our understanding of the computing power of VMs by introducing normal forms; these expressions restrict the features in a given computing model. Some of the features that we restrict in our normal forms include (a) the number of hosts, (b) the number of instructions, and (c) the number of virus objects in each host. After recalling some known results on the computing power of VMs, we give our normal forms, such as the size of the loops in the network, proving new characterisations of families of sets, such as the finite sets, semilinear sets, or NRE.

+

+

+

+ 24. 【2409.03295】N-gram Prediction and Word Difference Representations for Language Modeling

+ 链接:https://arxiv.org/abs/2409.03295

+ 作者:DongNyeong Heo,Daniela Noemi Rim,Heeyoul Choi

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:Causal language modeling, underpinning remarkable successes, recent large language, foundational framework underpinning, framework underpinning remarkable

+ 备注:

+

+ 点击查看摘要

+ Abstract:Causal language modeling (CLM) serves as the foundational framework underpinning remarkable successes of recent large language models (LLMs). Despite its success, the training approach for next word prediction poses a potential risk of causing the model to overly focus on local dependencies within a sentence. While prior studies have proposed predicting the next N words simultaneously, they were primarily applied to tasks such as masked language modeling (MLM) and neural machine translation (NMT). In this study, we introduce a simple N-gram prediction framework for the CLM task. Moreover, we introduce word difference representation (WDR) as a surrogate and contextualized target representation during model training on the basis of the N-gram prediction framework. To further enhance the quality of next word prediction, we propose an ensemble method that incorporates the future N words' prediction results. Empirical evaluations across multiple benchmark datasets encompassing CLM and NMT tasks demonstrate the significant advantages of our proposed methods over the conventional CLM.
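One way to picture the ensemble step is as mixing several distributions over the same target word. These are the ordinary next-word head plus heads that predicted that position from earlier prefixes. The sketch assumes a fixed weighted average with hand-picked weights; the paper's actual combination rule may differ, and the word distributions are toy values.

```python
from typing import Dict, List

Dist = Dict[str, float]


def ensemble_next_word(predictions: List[Dist], weights: List[float]) -> Dist:
    """Weighted average of distributions for the word at position t+1.

    predictions[k] is the distribution for that position predicted from the
    prefix ending k tokens earlier (k = 0 is the usual next-word prediction).
    """
    if len(predictions) != len(weights) or not predictions:
        raise ValueError("need one weight per prediction")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    mixed: Dist = {}
    for dist, w in zip(predictions, weights):
        for word, p in dist.items():
            mixed[word] = mixed.get(word, 0.0) + (w / total) * p
    return mixed


next_word_head = {"cat": 0.6, "dog": 0.4}   # predicted from the prefix ending at t
two_step_head = {"cat": 0.3, "dog": 0.7}    # predicted from the prefix ending at t-1
mixed = ensemble_next_word([next_word_head, two_step_head], [3.0, 1.0])
print({word: round(p, 3) for word, p in mixed.items()})
```

Normalising the weights inside the function keeps the mixture a valid distribution whenever each input distribution sums to one.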

+

+

+

+ 25. 【2409.03291】LLM Detectors Still Fall Short of Real World: Case of LLM-Generated Short News-Like Posts

+ 链接:https://arxiv.org/abs/2409.03291

+ 作者:Henrique Da Silva Gameiro,Andrei Kucharavy,Ljiljana Dolamic

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Cryptography and Security (cs.CR); Machine Learning (cs.LG)

+ 关键词:large Language Models, Language Models, major concern, emergence of widely, widely available powerful

+ 备注: 20 pages, 7 tables, 13 figures, under consideration for EMNLP

+

+ 点击查看摘要

+ Abstract:With the emergence of widely available, powerful large language models (LLMs), disinformation generated by LLMs has become a major concern. Historically, LLM detectors have been touted as a solution, but their effectiveness in the real world is still to be proven. In this paper, we focus on an important setting in information operations: short news-like posts generated by moderately sophisticated attackers.

+We demonstrate that existing LLM detectors, whether zero-shot or purpose-trained, are not ready for real-world use in that setting. All tested zero-shot detectors perform inconsistently with prior benchmarks and are highly vulnerable to sampling temperature increase, a trivial attack absent from recent benchmarks. A purpose-trained detector generalizing across LLMs and unseen attacks can be developed, but it fails to generalize to new human-written texts.

+We argue that the former indicates domain-specific benchmarking is needed, while the latter suggests a trade-off between the adversarial evasion resilience and overfitting to the reference human text, with both needing evaluation in benchmarks and currently absent. We believe this suggests a re-consideration of current LLM detector benchmarking approaches and provides a dynamically extensible benchmark to allow it (this https URL).
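The temperature attack only changes how the generating LLM samples tokens. The standard temperature-scaled softmax below shows the mechanism; it is a generic definition, not code from the paper. Dividing the logits by T > 1 flattens the distribution and raises its entropy. That is the kind of sampling shift the abstract reports zero-shot detectors to be highly vulnerable to. The logits are toy values.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """p_i = exp(z_i / T) / sum_j exp(z_j / T)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)


toy_logits = [4.0, 2.0, 0.0]
for t in (0.7, 1.0, 1.5):
    probs = softmax_with_temperature(toy_logits, t)
    print(f"T={t}: top-token prob={probs[0]:.3f}, entropy={entropy(probs):.3f}")
```

As T grows, the top token's probability drops and the entropy rises, so sampled text drifts further from the most likely continuations.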

+

+ ACM classes: I.2.7; K.6.5

+ Cite as: arXiv:2409.03291 [cs.CL] (arXiv:2409.03291v1 [cs.CL] for this version); DOI: https://doi.org/10.48550/arXiv.2409.03291 (arXiv-issued DOI via DataCite, pending registration)

+

+

+

+ 26. 【2409.03284】iText2KG: Incremental Knowledge Graphs Construction Using Large Language Models

+ 链接:https://arxiv.org/abs/2409.03284

+ 作者:Yassir Lairgi,Ludovic Moncla,Rémy Cazabet,Khalid Benabdeslem,Pierre Cléau

+ 类目:Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Information Retrieval (cs.IR)

+ 关键词:access valuable information, challenging to access, access valuable, making it challenging, building Knowledge Graphs

+ 备注: Accepted at The International Web Information Systems Engineering conference (the WISE conference) 2024

+

+ 点击查看摘要

+ Abstract:Most available data is unstructured, making it challenging to access valuable information. Automatically building Knowledge Graphs (KGs) is crucial for structuring data and making it accessible, allowing users to search for information effectively. KGs also facilitate insights, inference, and reasoning. Traditional NLP methods, such as named entity recognition and relation extraction, are key in information retrieval but face limitations, including the use of predefined entity types and the need for supervised learning. Current research leverages large language models' capabilities, such as zero- or few-shot learning. However, unresolved and semantically duplicated entities and relations still pose challenges, leading to inconsistent graphs and requiring extensive post-processing. Additionally, most approaches are topic-dependent. In this paper, we propose iText2KG, a method for incremental, topic-independent KG construction without post-processing. This plug-and-play, zero-shot method is applicable across a wide range of KG construction scenarios and comprises four modules: Document Distiller, Incremental Entity Extractor, Incremental Relation Extractor, and Graph Integrator and Visualization. Our method demonstrates superior performance compared to baseline methods across three scenarios: converting scientific papers to graphs, websites to graphs, and CVs to graphs.
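One way to avoid semantically duplicated entities when a graph is built incrementally works as follows:

1. Match each newly extracted entity against the existing ones by embedding similarity.
2. Merge it into an existing entity when the similarity clears a threshold.

The sketch assumes precomputed embeddings (toy 2-D vectors here), cosine similarity, and a hypothetical threshold of 0.9. It illustrates the idea rather than reproducing iText2KG's actual Incremental Entity Extractor.

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return 0.0 if nu == 0.0 or nv == 0.0 else dot / (nu * nv)


def merge_entities(graph_entities: Dict[str, Vector],
                   new_entities: Dict[str, Vector],
                   threshold: float = 0.9) -> Dict[str, str]:
    """Map each new entity to a canonical name, adding unmatched ones to the graph."""
    mapping: Dict[str, str] = {}
    for name, vec in new_entities.items():
        best_name, best_sim = None, -1.0
        for known, known_vec in graph_entities.items():
            sim = cosine(vec, known_vec)
            if sim > best_sim:
                best_name, best_sim = known, sim
        if best_name is not None and best_sim >= threshold:
            mapping[name] = best_name    # duplicate: reuse the existing node
        else:
            graph_entities[name] = vec   # genuinely new entity
            mapping[name] = name
    return mapping


graph = {"LLM": [1.0, 0.0]}
batch = {"large language model": [0.99, 0.05], "knowledge graph": [0.0, 1.0]}
print(merge_entities(graph, batch))  # {'large language model': 'LLM', 'knowledge graph': 'knowledge graph'}
print(sorted(graph))                 # ['LLM', 'knowledge graph']
```

Because new entities are added to `graph_entities` as they arrive, later items in the same batch can also be matched against them.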

+

+

+

+ 27. 【2409.03277】ChartMoE: Mixture of Expert Connector for Advanced Chart Understanding

+ 链接:https://arxiv.org/abs/2409.03277

+ 作者:Zhengzhuo Xu,Bowen Qu,Yiyan Qi,Sinan Du,Chengjin Xu,Chun Yuan,Jian Guo

+ 类目:Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

+ 关键词:Automatic chart understanding, Automatic chart, document parsing, chart understanding, crucial for content

+ 备注:

+

+ 点击查看摘要

+ Abstract:Automatic chart understanding is crucial for content comprehension and document parsing. Multimodal large language models (MLLMs) have demonstrated remarkable capabilities in chart understanding through domain-specific alignment and fine-tuning. However, the application of alignment training within the chart domain is still underexplored. To address this, we propose ChartMoE, which employs the mixture of expert (MoE) architecture to replace the traditional linear projector to bridge the modality gap. Specifically, we train multiple linear connectors through distinct alignment tasks, which are utilized as the foundational initialization parameters for different experts. Additionally, we introduce ChartMoE-Align, a dataset with over 900K chart-table-JSON-code quadruples to conduct three alignment tasks (chart-table/JSON/code). Combined with the vanilla connector, we initialize different experts in four distinct ways and adopt high-quality knowledge learning to further refine the MoE connector and LLM parameters. Extensive experiments demonstrate the effectiveness of the MoE connector and our initialization strategy, e.g., ChartMoE improves the accuracy of the previous state-of-the-art from 80.48% to 84.64% on the ChartQA benchmark.
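ChartMoE replaces the linear projector with a mixture of expert connectors. Below is a generic top-k MoE routing sketch for a single visual feature vector. The expert count, `top_k`, the linear gate, and the toy matrices are all assumptions. The paper's initialization of experts from connectors trained on distinct alignment tasks is not shown.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # rows x cols


def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def softmax(z: Vector) -> Vector:
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def moe_connector(x: Vector, experts: List[Matrix], gate: Matrix, top_k: int = 2) -> Vector:
    """Project one visual feature x into the LLM space with top-k linear experts."""
    scores = matvec(gate, x)  # one routing logit per expert
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    mix = softmax([scores[i] for i in chosen])  # renormalise over the chosen experts
    out = [0.0] * len(experts[0])
    for weight, i in zip(mix, chosen):
        y = matvec(experts[i], x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy experts and gate.
identity = [[1.0, 0.0], [0.0, 1.0]]
double = [[2.0, 0.0], [0.0, 2.0]]
zero = [[0.0, 0.0], [0.0, 0.0]]
gate = [[1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
print(moe_connector([1.0, 0.0], [identity, double, zero], gate, top_k=2))  # [1.5, 0.0]
```

With `top_k=1` the connector degenerates to picking a single linear projector per token.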

+

+

+

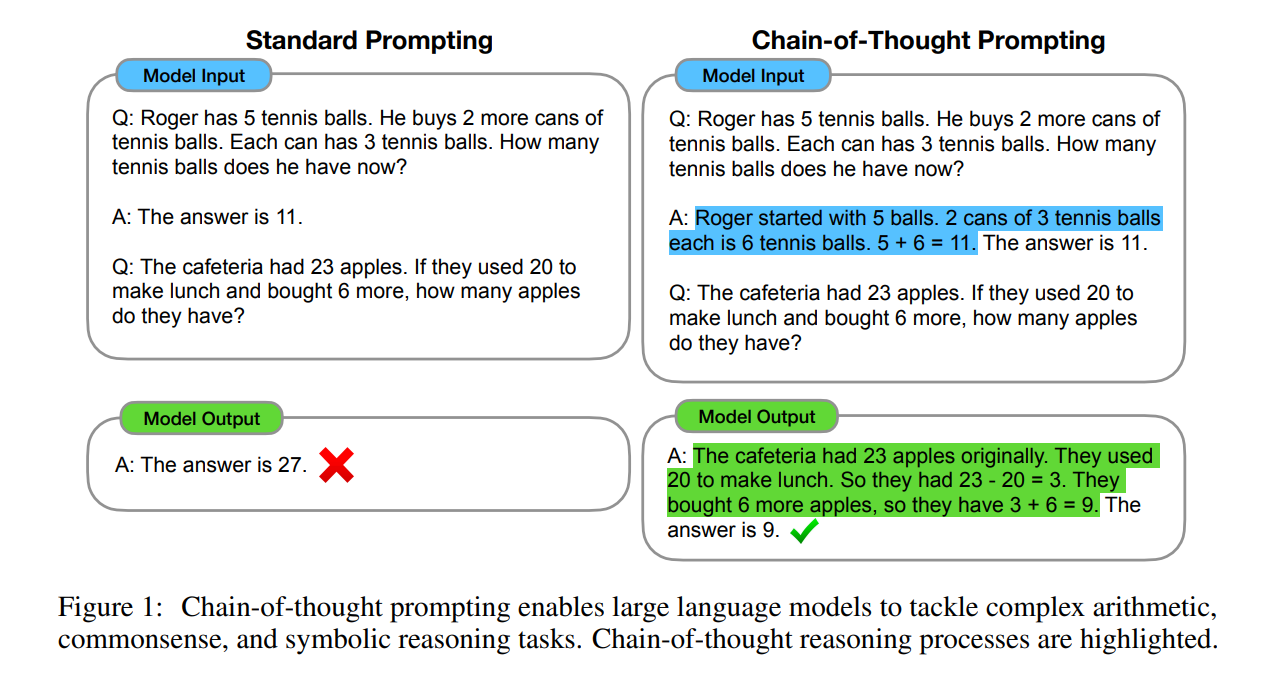

+ 28. 【2409.03271】Strategic Chain-of-Thought: Guiding Accurate Reasoning in LLMs through Strategy Elicitation

+ 链接:https://arxiv.org/abs/2409.03271

+ 作者:Yu Wang,Shiwan Zhao,Zhihu Wang,Heyuan Huang,Ming Fan,Yubo Zhang,Zhixing Wang,Haijun Wang,Ting Liu

+ 类目:Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Human-Computer Interaction (cs.HC)

+ 关键词:large language models, paradigm has emerged, capabilities of large, large language, LLM performance

+ 备注:

+

+ 点击查看摘要

+ Abstract:The Chain-of-Thought (CoT) paradigm has emerged as a critical approach for enhancing the reasoning capabilities of large language models (LLMs). However, despite their widespread adoption and success, CoT methods often exhibit instability due to their inability to consistently ensure the quality of generated reasoning paths, leading to sub-optimal reasoning performance. To address this challenge, we propose the Strategic Chain-of-Thought (SCoT), a novel methodology designed to refine LLM performance by integrating strategic knowledge prior to generating intermediate reasoning steps. SCoT employs a two-stage approach within a single prompt: first eliciting an effective problem-solving strategy, which is then used to guide the generation of high-quality CoT paths and final answers. Our experiments across eight challenging reasoning datasets demonstrate significant improvements, including a 21.05% increase on the GSM8K dataset and 24.13% on the Tracking_Objects dataset, respectively, using the Llama3-8b model. Additionally, we extend the SCoT framework to develop a few-shot method with automatically matched demonstrations, yielding even stronger results. These findings underscore the efficacy of SCoT, highlighting its potential to substantially enhance LLM performance in complex reasoning tasks.
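SCoT places both stages inside a single prompt. Below is a hypothetical template in that spirit, plus a helper to pull out the final answer. The wording, the `Answer:` convention, and the helper names are assumptions; this is not the prompt used in the paper.

```python
from typing import Optional


def build_scot_prompt(question: str) -> str:
    """Hypothetical single prompt: elicit a strategy first, then solve with it."""
    return (
        "Solve the problem below in two stages.\n"
        "Stage 1 (strategy): state the most effective general approach for this "
        "type of problem, without solving it yet.\n"
        "Stage 2 (solution): apply that strategy step by step, then write the "
        "final answer on its own line, starting with 'Answer:'.\n\n"
        f"Problem: {question}\n"
    )


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last line starting with 'Answer:', if any."""
    for line in reversed(response.splitlines()):
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            return stripped[len("Answer:"):].strip()
    return None


prompt = build_scot_prompt("A shop sells pens at 3 for $2. How much do 12 pens cost?")
reply = "Strategy: use unit groups.\n12 / 3 = 4 groups, 4 * 2 = 8.\nAnswer: $8"
print(extract_answer(reply))  # $8
```

Keeping both stages in one prompt means one model call per question; the strategy text simply conditions the reasoning that follows it.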

+

+

+

+ 29. 【2409.03258】GraphInsight: Unlocking Insights in Large Language Models for Graph Structure Understanding

+ 链接:https://arxiv.org/abs/2409.03258

+ 作者:Yukun Cao,Shuo Han,Zengyi Gao,Zezhong Ding,Xike Xie,S. Kevin Zhou

+ 类目:Computation and Language (cs.CL)

+ 关键词:Large Language Models, Language Models, Large Language, graph description sequences, description sequences

+ 备注:

+

+ 点击查看摘要

+ Abstract:Although Large Language Models (LLMs) have demonstrated potential in processing graphs, they struggle with comprehending graphical structure information through prompts of graph description sequences, especially as the graph size increases. We attribute this challenge to the uneven memory performance of LLMs across different positions in graph description sequences, known as "positional biases". To address this, we propose GraphInsight, a novel framework aimed at improving LLMs' comprehension of both macro- and micro-level graphical information. GraphInsight is grounded in two key strategies: 1) placing critical graphical information in positions where LLMs exhibit stronger memory performance, and 2) investigating a lightweight external knowledge base for regions with weaker memory performance, inspired by retrieval-augmented generation (RAG). Moreover, GraphInsight explores integrating these two strategies into LLM agent processes for composite graph tasks that require multi-step reasoning. Extensive empirical studies on benchmarks with a wide range of evaluation tasks show that GraphInsight significantly outperforms all other graph description methods (e.g., prompting techniques and reordering strategies) in understanding graph structures of varying sizes.
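The first strategy can be sketched as a reordering of the textual graph description. The sketch makes two assumptions:

- The strong-memory positions are the beginning and the end of the sequence, a U-shaped pattern reported in some long-context studies.
- Node degree stands in for "critical" information.

GraphInsight's own criteria for both may differ.

```python
from collections import Counter
from typing import List, Tuple

Edge = Tuple[str, str]


def reorder_edges(edges: List[Edge]) -> List[Edge]:
    """Move edges with high-degree endpoints to the start and end of the list."""
    degree: Counter = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    # Rank edges by the total degree of their endpoints (sort is stable for ties).
    ranked = sorted(edges, key=lambda e: degree[e[0]] + degree[e[1]], reverse=True)
    head: List[Edge] = []
    tail: List[Edge] = []
    for i, edge in enumerate(ranked):
        # Alternate: the most important edges fill the front, the next ones the back.
        (head if i % 2 == 0 else tail).append(edge)
    return head + tail[::-1]


def describe(edges: List[Edge]) -> str:
    """Turn an edge list into a plain-text graph description for a prompt."""
    return " ".join(f"Node {u} is connected to node {v}." for u, v in edges)


edges = [("B", "C"), ("A", "B"), ("A", "C"), ("A", "D")]
print(describe(reorder_edges(edges)))
```

The least important edges end up in the middle of the description, where this assumption says the model remembers least.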

+

+

+

+ 30. 【2409.03257】Understanding LLM Development Through Longitudinal Study: Insights from the Open Ko-LLM Leaderboard

+ 链接:https://arxiv.org/abs/2409.03257

+ 作者:Chanjun Park,Hyeonwoo Kim

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:Open Ko-LLM Leaderboard, Open Ko-LLM, restricted observation periods, Ko-LLM Leaderboard, eleven months

+ 备注:

+

+ 点击查看摘要

+ Abstract:This paper conducts a longitudinal study over eleven months to address the limitations of prior research on the Open Ko-LLM Leaderboard, which relied on empirical studies with restricted observation periods of only five months. By extending the analysis duration, we aim to provide a more comprehensive understanding of the progression in developing Korean large language models (LLMs). Our study is guided by three primary research questions: (1) What are the specific challenges in improving LLM performance across diverse tasks on the Open Ko-LLM Leaderboard over time? (2) How does model size impact task performance correlations across various benchmarks? (3) How have the patterns in leaderboard rankings shifted over time on the Open Ko-LLM Leaderboard? By analyzing 1,769 models over this period, our research offers a comprehensive examination of the ongoing advancements in LLMs and the evolving nature of evaluation frameworks.

+

+

+

+ 31. 【2409.03256】E2CL: Exploration-based Error Correction Learning for Embodied Agents

+ 链接:https://arxiv.org/abs/2409.03256

+ 作者:Hanlin Wang,Chak Tou Leong,Jian Wang,Wenjie Li

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:exhibiting increasing capability, Language models, utilization and reasoning, models are exhibiting, exhibiting increasing

+ 备注:

+

+ 点击查看摘要

+ Abstract:Language models are exhibiting increasing capability in knowledge utilization and reasoning. However, when applied as agents in embodied environments, they often suffer from misalignment between their intrinsic knowledge and environmental knowledge, leading to infeasible actions. Traditional environment alignment methods, such as supervised learning on expert trajectories and reinforcement learning, face limitations in covering environmental knowledge and achieving efficient convergence, respectively. Inspired by human learning, we propose Exploration-based Error Correction Learning (E2CL), a novel framework that leverages exploration-induced errors and environmental feedback to enhance environment alignment for LM-based agents. E2CL incorporates teacher-guided and teacher-free exploration to gather environmental feedback and correct erroneous actions. The agent learns to provide feedback and self-correct, thereby enhancing its adaptability to target environments. Evaluations in the VirtualHome environment demonstrate that E2CL-trained agents outperform those trained by baseline methods and exhibit superior self-correction capabilities.

+

+

+

+ 32. 【2409.03238】Preserving Empirical Probabilities in BERT for Small-sample Clinical Entity Recognition

+ 链接:https://arxiv.org/abs/2409.03238

+ 作者:Abdul Rehman,Jian Jun Zhang,Xiaosong Yang

+ 类目:Computation and Language (cs.CL); Machine Learning (cs.LG)

+ 关键词:Named Entity Recognition, Entity Recognition, Named Entity, equitable entity recognition, encounters the challenge

+ 备注: 8 pages, 8 figures

+

+ 点击查看摘要

+ Abstract:Named Entity Recognition (NER) encounters the challenge of unbalanced labels, where certain entity types are overrepresented while others are underrepresented in real-world datasets. This imbalance can lead to biased models that perform poorly on minority entity classes, impeding accurate and equitable entity recognition. This paper explores the effects of unbalanced entity labels on the BERT-based pre-trained model. We analyze the different mechanisms of loss calculation and loss propagation for the task of token classification on randomized datasets. Then we propose ways to improve token classification for the highly imbalanced task of clinical entity recognition.

+

+

+

+ 33. 【2409.03225】Enhancing Healthcare LLM Trust with Atypical Presentations Recalibration

+ 链接:https://arxiv.org/abs/2409.03225

+ 作者:Jeremy Qin,Bang Liu,Quoc Dinh Nguyen

+ 类目:Computation and Language (cs.CL)

+ 关键词:Black-box large language, large language models, making it essential, large language, increasingly deployed

+ 备注:

+

+ 点击查看摘要

+ Abstract:Black-box large language models (LLMs) are increasingly deployed in various environments, making it essential for these models to effectively convey their confidence and uncertainty, especially in high-stakes settings. However, these models often exhibit overconfidence, leading to potential risks and misjudgments. Existing techniques for eliciting and calibrating LLM confidence have primarily focused on general reasoning datasets, yielding only modest improvements. Accurate calibration is crucial for informed decision-making and preventing adverse outcomes but remains challenging due to the complexity and variability of tasks these models perform. In this work, we investigate the miscalibration behavior of black-box LLMs within the healthcare setting. We propose a novel method, Atypical Presentations Recalibration, which leverages atypical presentations to adjust the model's confidence estimates. Our approach significantly improves calibration, reducing calibration errors by approximately 60% on three medical question answering datasets and outperforming existing methods such as vanilla verbalized confidence, CoT verbalized confidence and others. Additionally, we provide an in-depth analysis of the role of atypicality within the recalibration framework.
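Calibration error is commonly measured with the expected calibration error (ECE). Predictions are binned by confidence, and the gap between mean confidence and accuracy in each bin is averaged, weighted by bin size. The abstract does not spell out the metric details, so the equal-width 10-bin ECE below is an assumption. The confidences are toy values.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """Equal-width-bin ECE = sum_b (|b| / n) * |mean_conf(b) - accuracy(b)|."""
    if len(confidences) != len(correct) or not confidences:
        raise ValueError("need matching, non-empty inputs")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        if not 0.0 <= conf <= 1.0:
            raise ValueError("confidences must lie in [0, 1]")
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 joins the top bin
        bins[idx].append((conf, ok))
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(1 for _, ok in members if ok) / len(members)
            ece += len(members) / n * abs(mean_conf - accuracy)
    return ece


# An overconfident toy model: always 90% sure, right only half the time.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [True, True, False, False]))
```

A recalibration method lowers this number by moving stated confidences closer to observed accuracy; the 0.4 gap in the toy example is the overconfidence the abstract describes.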

+

+

+

+ 34. 【2409.03215】xLAM: A Family of Large Action Models to Empower AI Agent Systems

+ 链接:https://arxiv.org/abs/2409.03215

+ 作者:Jianguo Zhang,Tian Lan,Ming Zhu,Zuxin Liu,Thai Hoang,Shirley Kokane,Weiran Yao,Juntao Tan,Akshara Prabhakar,Haolin Chen,Zhiwei Liu,Yihao Feng,Tulika Awalgaonkar,Rithesh Murthy,Eric Hu,Zeyuan Chen,Ran Xu,Juan Carlos Niebles,Shelby Heinecke,Huan Wang,Silvio Savarese,Caiming Xiong

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

+ 关键词:significant research interest, attracted significant research, research interest, agent tasks, Autonomous agents powered

+ 备注: Technical report for the Salesforce xLAM model series

+

+ 点击查看摘要

+ Abstract:Autonomous agents powered by large language models (LLMs) have attracted significant research interest. However, the open-source community faces many challenges in developing specialized models for agent tasks, driven by the scarcity of high-quality agent datasets and the absence of standard protocols in this area. We introduce and publicly release xLAM, a series of large action models designed for AI agent tasks. The xLAM series includes five models with both dense and mixture-of-expert architectures, ranging from 1B to 8x22B parameters, trained using a scalable, flexible pipeline that unifies, augments, and synthesizes diverse datasets to enhance AI agents' generalizability and performance across varied environments. Our experimental results demonstrate that xLAM consistently delivers exceptional performance across multiple agent ability benchmarks, notably securing the 1st position on the Berkeley Function-Calling Leaderboard, outperforming GPT-4, Claude-3, and many other models in terms of tool use. By releasing the xLAM series, we aim to advance the performance of open-source LLMs for autonomous AI agents, potentially accelerating progress and democratizing access to high-performance models for agent tasks. Models are available at this https URL.

+

+

+

+ 35. 【2409.03203】An Effective Deployment of Diffusion LM for Data Augmentation in Low-Resource Sentiment Classification

+ 链接:https://arxiv.org/abs/2409.03203

+ 作者:Zhuowei Chen,Lianxi Wang,Yuben Wu,Xinfeng Liao,Yujia Tian,Junyang Zhong

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:imbalanced label distributions, imbalanced label, label distributions, Sentiment classification, language model

+ 备注:

+

+ 点击查看摘要

+ Abstract:Sentiment classification (SC) often suffers from low-resource challenges such as domain-specific contexts, imbalanced label distributions, and few-shot scenarios. The potential of the diffusion language model (LM) for textual data augmentation (DA) remains unexplored; moreover, textual DA methods struggle to balance the diversity and consistency of new samples. Most DA methods either perform logical modifications or rephrase less important tokens in the original sequence with the language model. In the context of SC, strong emotional tokens could act critically on the sentiment of the whole sequence. Therefore, contrary to rephrasing less important context, we propose DiffusionCLS to leverage a diffusion LM to capture in-domain knowledge and generate pseudo samples by reconstructing strong label-related tokens. This approach ensures a balance between consistency and diversity, avoiding the introduction of noise and augmenting crucial features of datasets. DiffusionCLS also comprises a Noise-Resistant Training objective to help the model generalize. Experiments demonstrate the effectiveness of our method in various low-resource scenarios including domain-specific and domain-general problems. Ablation studies confirm the effectiveness of our framework's modules, and visualization studies highlight optimal deployment conditions, reinforcing our conclusions.

+

+

+

+ 36. 【2409.03183】Bypassing DARCY Defense: Indistinguishable Universal Adversarial Triggers

+ 链接:https://arxiv.org/abs/2409.03183

+ 作者:Zuquan Peng,Yuanyuan He,Jianbing Ni,Ben Niu

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:Natural Language Processing, Universal Adversarial Triggers, Neural networks, Universal Adversarial, Language Processing

+ 备注: 13 pages, 5 figures

+

+ 点击查看摘要

+ Abstract:Neural networks (NN) classification models for Natural Language Processing (NLP) are vulnerable to the Universal Adversarial Triggers (UAT) attack that triggers a model to produce a specific prediction for any input. DARCY borrows the "honeypot" concept to bait multiple trapdoors, effectively detecting the adversarial examples generated by UAT. Unfortunately, we find a new UAT generation method, called IndisUAT, which produces triggers (i.e., tokens) and uses them to craft adversarial examples whose feature distribution is indistinguishable from that of the benign examples in a randomly-chosen category at the detection layer of DARCY. The produced adversarial examples incur the maximal loss of predicting results in the DARCY-protected models. Meanwhile, the produced triggers are effective in black-box models for text generation, text inference, and reading comprehension. Finally, the evaluation results under NN models for NLP tasks indicate that the IndisUAT method can effectively circumvent DARCY and penetrate other defenses. For example, IndisUAT can reduce the true positive rate of DARCY's detection by at least 40.8% and 90.6%, and drop the accuracy by at least 33.3% and 51.6% in the RNN and CNN models, respectively. IndisUAT reduces the accuracy of the BERT's adversarial defense model by at least 34.0%, and makes the GPT-2 language model spew racist outputs even when conditioned on non-racial context.

+

+

+

+ 37. 【2409.03171】MARAGS: A Multi-Adapter System for Multi-Task Retrieval Augmented Generation Question Answering

+ 链接:https://arxiv.org/abs/2409.03171

+ 作者:Mitchell DeHaven

+ 类目:Computation and Language (cs.CL)

+ 关键词:Meta Comprehensive RAG, Meta Comprehensive, multi-adapter retrieval augmented, Comprehensive RAG, retrieval augmented generation

+ 备注: Accepted to CRAG KDD Cup 24 Workshop

+


+ Abstract:In this paper, we present a multi-adapter retrieval augmented generation system (MARAGS) for Meta's Comprehensive RAG (CRAG) competition for KDD CUP 2024. CRAG is a question answering dataset that contains 3 different subtasks aimed at realistic question-answering RAG tasks, with a diverse set of question topics, question types, time-dynamic answers, and questions featuring entities of varying popularity.

+Our system follows a standard setup for web based RAG, which uses processed web pages to provide context for an LLM to produce generations, while also querying API endpoints for additional information. MARAGS also utilizes multiple different adapters to solve the various requirements for these tasks with a standard cross-encoder model for ranking candidate passages relevant for answering the question. Our system achieved 2nd place for Task 1 as well as 3rd place on Task 2.
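The abstract says MARAGS ranks candidate passages with a standard cross-encoder before the LLM generates an answer. The sketch below covers only that rerank-then-prompt step. Several parts are assumptions. `toy_pair_score` is a hypothetical token-overlap scorer standing in for a trained cross-encoder. The names `rerank`, `build_prompt` and `top_k`, and the prompt layout, are illustrative and are not the MARAGS code.

```python
from typing import Callable, List, Tuple

Scored = Tuple[float, str]


def toy_pair_score(query: str, passage: str) -> float:
    """Hypothetical stand-in for a cross-encoder: the fraction of query
    tokens that also occur in the passage. A real cross-encoder would
    read the (query, passage) pair jointly with a trained model."""
    q_tokens = set(query.lower().split())
    p_tokens = set(passage.lower().split())
    return len(q_tokens & p_tokens) / len(q_tokens) if q_tokens else 0.0


def rerank(query: str, passages: List[str],
           score_fn: Callable[[str, str], float], top_k: int = 3) -> List[Scored]:
    """Score every (query, passage) pair and keep the top_k highest-scoring passages."""
    scored = [(score_fn(query, p), p) for p in passages]
    # sorted() stays stable with reverse=True, so ties keep their retrieval order
    scored = sorted(scored, key=lambda item: item[0], reverse=True)
    return scored[:top_k]


def build_prompt(query: str, ranked: List[Scored]) -> str:
    """Concatenate the reranked passages into a context block for the LLM."""
    context = "\n".join(f"- {passage}" for _, passage in ranked)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    docs = ["Paris is the capital of France",
            "The Eiffel Tower is in Paris",
            "Bananas are yellow"]
    top = rerank("capital of France", docs, toy_pair_score, top_k=2)
    print(build_prompt("capital of France", top))
```

Keeping the scorer as a parameter means a real cross-encoder can replace `toy_pair_score` without touching the ranking or prompt code.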

+


+

+

+ 38. 【2409.03166】Continual Skill and Task Learning via Dialogue

+ 链接:https://arxiv.org/abs/2409.03166

+ 作者:Weiwei Gu,Suresh Kondepudi,Lixiao Huang,Nakul Gopalan

+ 类目:Robotics (cs.RO); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

+ 关键词:sample efficiency, challenging problem, perpetually with sample, robot, skills

+ 备注:

+


+ Abstract:Continual and interactive robot learning is a challenging problem as the robot is present with human users who expect the robot to learn novel skills to solve novel tasks perpetually with sample efficiency. In this work we present a framework for robots to query and learn visuo-motor robot skills and task relevant information via natural language dialog interactions with human users. Previous approaches either focus on improving the performance of instruction following agents, or passively learn novel skills or concepts. Instead, we used dialog combined with a language-skill grounding embedding to query or confirm skills and/or tasks requested by a user. To achieve this goal, we developed and integrated three different components for our agent. Firstly, we propose a novel visual-motor control policy ACT with Low Rank Adaptation (ACT-LoRA), which enables the existing SoTA ACT model to perform few-shot continual learning. Secondly, we develop an alignment model that projects demonstrations across skill embodiments into a shared embedding allowing us to know when to ask questions and/or demonstrations from users. Finally, we integrated an existing LLM to interact with a human user to perform grounded interactive continual skill learning to solve a task. Our ACT-LoRA model learns novel fine-tuned skills with a 100% accuracy when trained with only five demonstrations for a novel skill while still maintaining a 74.75% accuracy on pre-trained skills in the RLBench dataset where other models fall significantly short. We also performed a human-subjects study with 8 subjects to demonstrate the continual learning capabilities of our combined framework. We achieve a success rate of 75% in the task of sandwich making with the real robot learning from participant data demonstrating that robots can learn novel skills or task knowledge from dialogue with non-expert users using our approach.
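ACT-LoRA is described as adding Low Rank Adaptation to the ACT policy so that it can learn new skills from a few demonstrations. Generic LoRA keeps a pre-trained weight W frozen and learns a low-rank update: y = W x + (alpha / r) * B A x.

Below is a minimal pure-Python sketch of one such layer. The abstract does not say which ACT layers are adapted or what values `r` and `alpha` take, so those are assumptions. `LoRALinear` is a hypothetical name, not the authors' code.

```python
import random
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, x: List[float]) -> List[float]:
    """Plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: Matrix, r: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # pre-trained weights, kept frozen
        # A: r x d_in with a small random init; B: d_out x r with a zero init,
        # so the adapted layer starts out identical to the frozen one.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def forward(self, x: List[float]) -> List[float]:
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self) -> int:
        # only A and B would be updated during few-shot fine-tuning
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


if __name__ == "__main__":
    W = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]
    layer = LoRALinear(W, r=1, alpha=2.0)
    print(layer.forward([1.0, 2.0, 3.0, 4.0]))  # equals W @ x before any training
    print(layer.trainable_params(), "trainable vs", len(W) * len(W[0]), "frozen")
```

The trainable count is r * (d_in + d_out) rather than d_in * d_out. The saving is tiny in this toy example, but it becomes large for realistic layer sizes, which is what makes LoRA attractive when only a handful of demonstrations are available.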

+

+

+

+ 39. 【2409.03161】MaterialBENCH: Evaluating College-Level Materials Science Problem-Solving Abilities of Large Language Models

+ 链接:https://arxiv.org/abs/2409.03161

+ 作者:Michiko Yoshitake(1),Yuta Suzuki(2),Ryo Igarashi(1),Yoshitaka Ushiku(1),Keisuke Nagato(3) ((1) OMRON SINIC X, (2) Osaka Univ., (3) Univ. Tokyo)

+ 类目:Computation and Language (cs.CL)

+ 关键词:college-level benchmark dataset, materials science field, large language models, science field, college-level benchmark

+ 备注:

+


+ Abstract:A college-level benchmark dataset for large language models (LLMs) in the materials science field, MaterialBENCH, is constructed. This dataset consists of problem-answer pairs, based on university textbooks. There are two types of problems: one is the free-response answer type, and the other is the multiple-choice type. Multiple-choice problems are constructed by adding three incorrect answers as choices to a correct answer, so that LLMs can choose one of the four as a response. Most of the problems for free-response answer and multiple-choice types overlap except for the format of the answers. We also conduct experiments using the MaterialBENCH on LLMs, including ChatGPT-3.5, ChatGPT-4, Bard (at the time of the experiments), and GPT-3.5 and GPT-4 with the OpenAI API. The differences and similarities in the performance of LLMs measured by the MaterialBENCH are analyzed and discussed. Performance differences between the free-response type and multiple-choice type in the same models and the influence of using system messages on multiple-choice problems are also studied. We anticipate that MaterialBENCH will encourage further developments of LLMs in reasoning abilities to solve more complicated problems and eventually contribute to materials research and discovery.
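The abstract describes how each multiple-choice item is built: three incorrect answers are added to the correct one, and the model picks one of the four. Below is a minimal sketch of that construction and of scoring a letter response.

Several details are assumptions for illustration, not the benchmark's actual format:
- the function names;
- seeded shuffling;
- the A to D letter labels;
- the prompt wording.

The sample question is hypothetical and is not taken from MaterialBENCH.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence

LABELS = "ABCD"


@dataclass
class MultipleChoiceItem:
    question: str
    options: List[str]
    answer_index: int  # position of the correct answer after shuffling


def make_multiple_choice(question: str, correct: str,
                         distractors: Sequence[str],
                         seed: Optional[int] = None) -> MultipleChoiceItem:
    """Add three incorrect answers to the correct one and shuffle the four options."""
    if len(distractors) != 3 or correct in distractors:
        raise ValueError("need exactly three incorrect answers that differ from the correct one")
    options = [correct, *distractors]
    random.Random(seed).shuffle(options)
    return MultipleChoiceItem(question, options, options.index(correct))


def format_prompt(item: MultipleChoiceItem) -> str:
    """Render the question and lettered options as a single prompt string."""
    lines = [item.question]
    lines += [f"{LABELS[i]}. {opt}" for i, opt in enumerate(item.options)]
    lines.append("Answer with a single letter.")
    return "\n".join(lines)


def is_correct(item: MultipleChoiceItem, response: str) -> bool:
    """Compare the first letter of the model's response with the answer label."""
    return response.strip().upper()[:1] == LABELS[item.answer_index]


if __name__ == "__main__":
    item = make_multiple_choice(
        "What is the crystal structure of alpha-iron at room temperature?",
        "body-centered cubic",
        ["face-centered cubic", "hexagonal close-packed", "simple cubic"],
        seed=0,
    )
    print(format_prompt(item))
```

Shuffling guards against the correct answer always occupying the same letter position. Fixing the seed keeps the benchmark reproducible across model runs.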

+

+

+

+ 40. 【2409.03155】Debate on Graph: a Flexible and Reliable Reasoning Framework for Large Language Models

+ 链接:https://arxiv.org/abs/2409.03155

+ 作者:Jie Ma,Zhitao Gao,Qi Chai,Wangchun Sun,Pinghui Wang,Hongbin Pei,Jing Tao,Lingyun Song,Jun Liu,Chen Zhang,Lizhen Cui

+ 类目:Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ 关键词:Large Language Models, real-world applications due, knowledge graphs, Large Language, Language Models

+ 备注: 12 pages

+


+ Abstract:Large Language Models (LLMs) may suffer from hallucinations in real-world applications due to the lack of relevant knowledge. In contrast, knowledge graphs encompass extensive, multi-relational structures that store a vast array of symbolic facts. Consequently, integrating LLMs with knowledge graphs has been extensively explored, with Knowledge Graph Question Answering (KGQA) serving as a critical touchstone for the integration. This task requires LLMs to answer natural language questions by retrieving relevant triples from knowledge graphs. However, existing methods face two significant challenges: excessively long reasoning paths distracting from the answer generation, and false-positive relations hindering the path refinement. In this paper, we propose an iterative interactive KGQA framework that leverages the interactive learning capabilities of LLMs to perform reasoning and Debating over Graphs (DoG). Specifically, DoG employs a subgraph-focusing mechanism, allowing LLMs to perform answer trying after each reasoning step, thereby mitigating the impact of lengthy reasoning paths. On the other hand, DoG utilizes a multi-role debate team to gradually simplify complex questions, reducing the influence of false-positive relations. This debate mechanism ensures the reliability of the reasoning process. Experimental results on five public datasets demonstrate the effectiveness and superiority of our architecture. Notably, DoG outperforms the state-of-the-art method ToG by 23.7% and 9.1% in accuracy on WebQuestions and GrailQA, respectively. Furthermore, the integration experiments with various LLMs on the mentioned datasets highlight the flexibility of DoG. Code is available at this https URL.

+

+

+

+ 41. 【2409.03140】GraphEx: A Graph-based Extraction Method for Advertiser Keyphrase Recommendation

+ 链接:https://arxiv.org/abs/2409.03140

+ 作者:Ashirbad Mishra,Soumik Dey,Marshall Wu,Jinyu Zhao,He Yu,Kaichen Ni,Binbin Li,Kamesh Madduri

+ 类目:Information Retrieval (cs.IR); Computation and Language (cs.CL); Machine Learning (cs.LG)

+ 关键词:Extreme Multi-Label Classification, Online sellers, listed products, enhance their sales, advertisers are recommended

+ 备注:

+


+ Abstract:Online sellers and advertisers are recommended keyphrases for their listed products, which they bid on to enhance their sales. One popular paradigm that generates such recommendations is Extreme Multi-Label Classification (XMC), which involves tagging/mapping keyphrases to items. We outline the limitations of using traditional item-query based tagging or mapping techniques for keyphrase recommendations on E-Commerce platforms. We introduce GraphEx, an innovative graph-based approach that recommends keyphrases to sellers using extraction of token permutations from item titles. Additionally, we demonstrate that relying on traditional metrics such as precision/recall can be misleading in practical applications, thereby necessitating a combination of metrics to evaluate performance in real-world scenarios. These metrics are designed to assess the relevance of keyphrases to items and the potential for buyer outreach. GraphEx outperforms production models at eBay, achieving the objectives mentioned above. It supports near real-time inferencing in resource-constrained production environments and scales effectively for billions of items.
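The abstract says GraphEx recommends keyphrases by extracting token permutations from item titles with a graph-based method, but it gives no algorithmic detail. The sketch below is a deliberately simplified reading of that idea, not eBay's implementation. It stores a hypothetical keyphrase inventory as a token-to-keyphrase graph (an inverted index). It then returns every keyphrase whose tokens all occur in the title, in any order. The function names and the whitespace tokenization are assumptions. The relevance and buyer-outreach metrics the paper uses are not modeled.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def build_token_graph(keyphrases: Iterable[str]) -> Dict[str, Set[str]]:
    """Bipartite token -> keyphrase graph, stored as an inverted index."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for kp in keyphrases:
        for tok in set(kp.lower().split()):
            graph[tok].add(kp)
    return graph


def extract_keyphrases(title: str, graph: Dict[str, Set[str]]) -> List[str]:
    """Return keyphrases whose tokens are all present in the title, in any order."""
    title_tokens = set(title.lower().split())
    candidates: Set[str] = set()
    for tok in title_tokens:
        # only keyphrases sharing at least one token with the title are visited
        candidates |= graph.get(tok, set())
    return sorted(kp for kp in candidates if set(kp.lower().split()) <= title_tokens)


if __name__ == "__main__":
    g = build_token_graph(["running shoes", "shoes running men",
                           "trail running shoes", "leather boots"])
    print(extract_keyphrases("Nike Men Running Shoes Blue", g))
```

Because matching is on token sets, "shoes running men" is extracted from a title that reads "Men Running Shoes". This is the permutation-insensitive behavior the abstract hints at. A production system would still need to rank these candidates.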

+

+

+

+ 42. 【2409.03131】Well, that escalated quickly: The Single-Turn Crescendo Attack (STCA)

+ 链接:https://arxiv.org/abs/2409.03131

+ 作者:Alan Aqrawi

+ 类目:Cryptography and Security (cs.CR); Computation and Language (cs.CL)

+ 关键词:large language models, Single-Turn Crescendo Attack, multi-turn crescendo attack, crescendo attack established, Crescendo Attack

+ 备注:

+


+ Abstract:This paper explores a novel approach to adversarial attacks on large language models (LLMs): the Single-Turn Crescendo Attack (STCA). The STCA builds upon the multi-turn crescendo attack established by Mark Russinovich, Ahmed Salem, and Ronen Eldan. Traditional multi-turn adversarial strategies gradually escalate the context to elicit harmful or controversial responses from LLMs. However, this paper introduces a more efficient method where the escalation is condensed into a single interaction. By carefully crafting the prompt to simulate an extended dialogue, the attack bypasses typical content moderation systems, leading to the generation of responses that would normally be filtered out. I demonstrate this technique through a few case studies. The results highlight vulnerabilities in current LLMs and underscore the need for more robust safeguards. This work contributes to the broader discourse on responsible AI (RAI) safety and adversarial testing, providing insights and practical examples for researchers and developers. This method is unexplored in the literature, making it a novel contribution to the field.

+

+

+

+ 43. 【2409.03115】Probing self-attention in self-supervised speech models for cross-linguistic differences

+ 链接:https://arxiv.org/abs/2409.03115

+ 作者:Sai Gopinath,Joselyn Rodriguez

+ 类目:Computation and Language (cs.CL); Machine Learning (cs.LG)

+ 关键词:gained traction, increase in accuracy, transformer architectures, Speech, models

+ 备注: 10 pages, 18 figures

+
