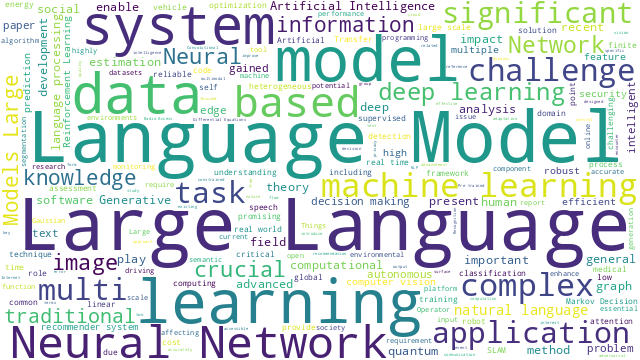

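This post presents the daily list of the latest papers collected from arXiv, grouped into broad areas such as computer vision, natural language processing, machine learning, and artificial intelligence.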

+Statistics

+232 papers were updated today, including:

+

+Computer Vision

+

+ 1. Title: Generalized Cross-domain Multi-label Few-shot Learning for Chest X-rays

+ No.: [4]

+ Link: https://arxiv.org/abs/2309.04462

+ Authors: Aroof Aimen, Arsh Verma, Makarand Tapaswi, Narayanan C. Krishnan

+ Comments: 17 pages

+ Keywords: X-ray abnormality classification, abnormality classification requires, classification requires dealing, chest X-ray abnormality, limited training data

+

+ Abstract:

+ Real-world application of chest X-ray abnormality classification requires

+dealing with several challenges: (i) limited training data; (ii) training and

+evaluation sets that are derived from different domains; and (iii) classes that

+appear during training may have partial overlap with classes of interest during

+evaluation. To address these challenges, we present an integrated framework

+called Generalized Cross-Domain Multi-Label Few-Shot Learning (GenCDML-FSL).

+The framework supports overlap in classes during training and evaluation,

+cross-domain transfer, adopts meta-learning to learn using few training

+samples, and assumes each chest X-ray image is either normal or associated with

+one or more abnormalities. Furthermore, we propose Generalized Episodic

+Training (GenET), a training strategy that equips models to operate with

+multiple challenges observed in the GenCDML-FSL scenario. Comparisons with

+well-established methods such as transfer learning, hybrid transfer learning,

+and multi-label meta-learning on multiple datasets show the superiority of our

+approach.

+

+

+

+ 2. Title: Measuring and Improving Chain-of-Thought Reasoning in Vision-Language Models

+ No.: [5]

+ Link: https://arxiv.org/abs/2309.04461

+ Authors: Yangyi Chen, Karan Sikka, Michael Cogswell, Heng Ji, Ajay Divakaran

+ Comments: The data is released at this https URL

+ Keywords: parse natural queries, generate human-like outputs, recently demonstrated strong, demonstrated strong efficacy, reasoning

+

+ Abstract:

+ Vision-language models (VLMs) have recently demonstrated strong efficacy as

+visual assistants that can parse natural queries about the visual content and

+generate human-like outputs. In this work, we explore the ability of these

+models to demonstrate human-like reasoning based on the perceived information.

+To address a crucial concern regarding the extent to which their reasoning

+capabilities are fully consistent and grounded, we also measure the reasoning

+consistency of these models. We achieve this by proposing a chain-of-thought

+(CoT) based consistency measure. However, such an evaluation requires a

+benchmark that encompasses both high-level inference and detailed reasoning

+chains, which is costly. We tackle this challenge by proposing a

+LLM-Human-in-the-Loop pipeline, which notably reduces cost while simultaneously

+ensuring the generation of a high-quality dataset. Based on this pipeline and

+the existing coarse-grained annotated dataset, we build the CURE benchmark to

+measure both the zero-shot reasoning performance and consistency of VLMs. We

+evaluate existing state-of-the-art VLMs, and find that even the best-performing

+model is unable to demonstrate strong visual reasoning capabilities and

+consistency, indicating that substantial efforts are required to enable VLMs to

+perform visual reasoning as systematically and consistently as humans. As an

+early step, we propose a two-stage training framework aimed at improving both

+the reasoning performance and consistency of VLMs. The first stage involves

+employing supervised fine-tuning of VLMs using step-by-step reasoning samples

+automatically generated by LLMs. In the second stage, we further augment the

+training process by incorporating feedback provided by LLMs to produce

+reasoning chains that are highly consistent and grounded. We empirically

+highlight the effectiveness of our framework in both reasoning performance and

+consistency.

+

+

+

+ 3. Title: WiSARD: A Labeled Visual and Thermal Image Dataset for Wilderness Search and Rescue

+ No.: [8]

+ Link: https://arxiv.org/abs/2309.04453

+ Authors: Daniel Broyles, Christopher R. Hayner, Karen Leung

+ Comments:

+ Keywords: reduce search times, Sensor-equipped unoccupied aerial, unoccupied aerial vehicles, alleviate safety risks, Search and Rescue

+

+ Abstract:

+ Sensor-equipped unoccupied aerial vehicles (UAVs) have the potential to help

+reduce search times and alleviate safety risks for first responders carrying

+out Wilderness Search and Rescue (WiSAR) operations, the process of finding and

+rescuing person(s) lost in wilderness areas. Unfortunately, visual sensors

+alone do not address the need for robustness across all the possible terrains,

+weather, and lighting conditions that WiSAR operations can be conducted in. The

+use of multi-modal sensors, specifically visual-thermal cameras, is critical in

+enabling WiSAR UAVs to perform in diverse operating conditions. However, due to

+the unique challenges posed by the wilderness context, existing dataset

+benchmarks are inadequate for developing vision-based algorithms for autonomous

+WiSAR UAVs. To this end, we present WiSARD, a dataset with roughly 56,000

+labeled visual and thermal images collected from UAV flights in various

+terrains, seasons, weather, and lighting conditions. To the best of our

+knowledge, WiSARD is the first large-scale dataset collected with multi-modal

+sensors for autonomous WiSAR operations. We envision that our dataset will

+provide researchers with a diverse and challenging benchmark that can test the

+robustness of their algorithms when applied to real-world (life-saving)

+applications.

+

+

+

+ 4. Title: Demographic Disparities in 1-to-Many Facial Identification

+ No.: [9]

+ Link: https://arxiv.org/abs/2309.04447

+ Authors: Aman Bhatta, Gabriella Pangelinan, Micheal C. King, Kevin W. Bowyer

+ Comments: 9 pages, 8 figures, Conference submission

+ Keywords: examined demographic variations, surveillance camera quality, probe image, accuracy, studies to date

+

+ Abstract:

+ Most studies to date that have examined demographic variations in face

+recognition accuracy have analyzed 1-to-1 matching accuracy, using images that

+could be described as "government ID quality". This paper analyzes the accuracy

+of 1-to-many facial identification across demographic groups, and in the

+presence of blur and reduced resolution in the probe image as might occur in

+"surveillance camera quality" images. Cumulative match characteristic

+curves (CMC) are not appropriate for comparing propensity for rank-one

+recognition errors across demographics, and so we introduce three metrics for

+this: (1) d' metric between mated and non-mated score distributions, (2)

+absolute score difference between thresholds in the high-similarity tail of the

+non-mated and the low-similarity tail of the mated distribution, and (3)

+distribution of (mated - non-mated rank one scores) across the set of probe

+images. We find that demographic variation in 1-to-many accuracy does not

+entirely follow what has been observed in 1-to-1 matching accuracy. Also,

+different from 1-to-1 accuracy, demographic comparison of 1-to-many accuracy

+can be affected by different numbers of identities and images across

+demographics. Finally, we show that increased blur in the probe image, or

+reduced resolution of the face in the probe image, can significantly increase

+the false positive identification rate. And we show that the demographic

+variation in these high blur or low resolution conditions is much larger for

+male / female than for African-American / Caucasian. The point that 1-to-many

+accuracy can potentially collapse in the context of processing "surveillance

+camera quality" probe images against a "government ID quality" gallery is an

+important one.
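
The first metric in the abstract above, d', can be illustrated with a short sketch. The abstract does not spell out the formula, so this assumes the definition commonly used in biometrics: the absolute difference of the two score means divided by the square root of the average of the two variances. The function name `d_prime` and the sample scores are hypothetical, and the paper may use a variant.

```python
import math
import statistics


def d_prime(mated_scores, non_mated_scores):
    """Separation between mated and non-mated similarity score distributions.

    Assumed definition: |mean_m - mean_n| / sqrt((var_m + var_n) / 2).
    Raises ZeroDivisionError if both distributions have zero variance.
    """
    mean_m = statistics.fmean(mated_scores)
    mean_n = statistics.fmean(non_mated_scores)
    # Population variance of each score distribution.
    var_m = statistics.pvariance(mated_scores)
    var_n = statistics.pvariance(non_mated_scores)
    return abs(mean_m - mean_n) / math.sqrt((var_m + var_n) / 2)


# Hypothetical similarity scores for one demographic group.
mated = [0.8, 0.9, 1.0]
non_mated = [0.1, 0.2, 0.3]
separation = d_prime(mated, non_mated)
```

A larger value means the two distributions overlap less, which would suggest fewer rank-one errors for that group.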

+

+

+

+ 5. Title: Comparative Study of Visual SLAM-Based Mobile Robot Localization Using Fiducial Markers

+ No.: [11]

+ Link: https://arxiv.org/abs/2309.04441

+ Authors: Jongwon Lee, Su Yeon Choi, David Hanley, Timothy Bretl

+ Comments: IEEE 2023 IROS Workshop "Closing the Loop on Localization". For more information, see this https URL

+ Keywords: square-shaped artificial landmarks, robot localization based, mobile robot localization, prior map, grid pattern

+

+ Abstract:

+ This paper presents a comparative study of three modes for mobile robot

+localization based on visual SLAM using fiducial markers (i.e., square-shaped

+artificial landmarks with a black-and-white grid pattern): SLAM, SLAM with a

+prior map, and localization with a prior map. The reason for comparing the

+SLAM-based approaches leveraging fiducial markers is because previous work has

+shown their superior performance over feature-only methods, with less

+computational burden compared to methods that use both feature and marker

+detection without compromising the localization performance. The evaluation is

+conducted using indoor image sequences captured with a hand-held camera

+containing multiple fiducial markers in the environment. The performance

+metrics include absolute trajectory error and runtime for the optimization

+process per frame. In particular, for the last two modes (SLAM and localization

+with a prior map), we evaluate their performances by perturbing the quality of

+prior map to study the extent to which each mode is tolerant to such

+perturbations. Hardware experiments show consistent trajectory error levels

+across the three modes, with the localization mode exhibiting the shortest

+runtime among them. Yet, with map perturbations, SLAM with a prior map

+maintains performance, while localization mode degrades in both aspects.
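
One of the performance metrics named in the abstract above is absolute trajectory error. As a rough sketch, it can be computed as the root-mean-square distance between estimated and ground-truth positions. This assumes the two trajectories are already time-associated and expressed in the same frame; the alignment step that usually precedes it is omitted, and the function name and sample points are hypothetical rather than taken from the paper.

```python
import math


def absolute_trajectory_error(estimated, ground_truth):
    """RMSE of position differences between two equally long trajectories.

    Each trajectory is a sequence of (x, y, z) positions. The poses are
    assumed to be matched by index and already aligned to a common frame.
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est_pos, gt_pos))
        for est_pos, gt_pos in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical two-pose trajectory with a 1 m height error on the second pose.
est = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
ate = absolute_trajectory_error(est, gt)
```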

+

+

+

+ 6. Title: Single View Refractive Index Tomography with Neural Fields

+ No.: [12]

+ Link: https://arxiv.org/abs/2309.04437

+ Authors: Brandon Zhao, Aviad Levis, Liam Connor, Pratul P. Srinivasan, Katherine L. Bouman

+ Comments:

+ Keywords: Refractive Index Tomography, refractive field, Refractive Index, Index Tomography, Refractive

+

+ Abstract:

+ Refractive Index Tomography is an inverse problem in which we seek to

+reconstruct a scene's 3D refractive field from 2D projected image measurements.

+The refractive field is not visible itself, but instead affects how the path of

+a light ray is continuously curved as it travels through space. Refractive

+fields appear across a wide variety of scientific applications, from

+translucent cell samples in microscopy to fields of dark matter bending light

+from faraway galaxies. This problem poses a unique challenge because the

+refractive field directly affects the path that light takes, making its

+recovery a non-linear problem. In addition, in contrast with traditional

+tomography, we seek to recover the refractive field using a projected image

+from only a single viewpoint by leveraging knowledge of light sources scattered

+throughout the medium. In this work, we introduce a method that uses a

+coordinate-based neural network to model the underlying continuous refractive

+field in a scene. We then use explicit modeling of rays' 3D spatial curvature

+to optimize the parameters of this network, reconstructing refractive fields

+with an analysis-by-synthesis approach. The efficacy of our approach is

+demonstrated by recovering refractive fields in simulation, and analyzing how

+recovery is affected by the light source distribution. We then test our method

+on a simulated dark matter mapping problem, where we recover the refractive

+field underlying a realistic simulated dark matter distribution.

+

+

+

+ 7. Title: Create Your World: Lifelong Text-to-Image Diffusion

+ No.: [15]

+ Link: https://arxiv.org/abs/2309.04430

+ Authors: Gan Sun, Wenqi Liang, Jiahua Dong, Jun Li, Zhengming Ding, Yang Cong

+ Comments: 15 pages, 10 figures

+ Keywords: produce diverse high-quality, demonstrated excellent ability, diverse high-quality images, produce diverse, diverse high-quality

+

+ Abstract:

+ Text-to-image generative models can produce diverse high-quality images of

+concepts with a text prompt, which have demonstrated excellent ability in image

+generation, image translation, etc. We in this work study the problem of

+synthesizing instantiations of a user's own concepts in a never-ending manner,

+i.e., create your world, where the new concepts from user are quickly learned

+with a few examples. To achieve this goal, we propose a Lifelong text-to-image

+Diffusion Model (L2DM), which intends to overcome knowledge "catastrophic

+forgetting" for the past encountered concepts, and semantic "catastrophic

+neglecting" for one or more concepts in the text prompt. In respect of

+knowledge "catastrophic forgetting", our L2DM framework devises a task-aware

+memory enhancement module and an elastic-concept distillation module, which

+could respectively safeguard the knowledge of both prior concepts and each past

+personalized concept. When generating images with a user text prompt, the

+solution to semantic "catastrophic neglecting" is that a concept attention

+artist module can alleviate the semantic neglecting from concept aspect, and an

+orthogonal attention module can reduce the semantic binding from attribute

+aspect. To this end, our model can generate more faithful images across a range

+of continual text prompts in terms of both qualitative and quantitative

+metrics, when comparing with the related state-of-the-art models. The code will

+be released at this https URL.

+

+

+

+ 8. Title: Video Task Decathlon: Unifying Image and Video Tasks in Autonomous Driving

+ No.: [20]

+ Link: https://arxiv.org/abs/2309.04422

+ Authors: Thomas E. Huang, Yifan Liu, Luc Van Gool, Fisher Yu

+ Comments: ICCV 2023, project page at this https URL

+ Keywords: Performing multiple heterogeneous, multiple heterogeneous visual, heterogeneous visual tasks, human perception capability, tasks

+

+ Abstract:

+ Performing multiple heterogeneous visual tasks in dynamic scenes is a

+hallmark of human perception capability. Despite remarkable progress in image

+and video recognition via representation learning, current research still

+focuses on designing specialized networks for singular, homogeneous, or simple

+combination of tasks. We instead explore the construction of a unified model

+for major image and video recognition tasks in autonomous driving with diverse

+input and output structures. To enable such an investigation, we design a new

+challenge, Video Task Decathlon (VTD), which includes ten representative image

+and video tasks spanning classification, segmentation, localization, and

+association of objects and pixels. On VTD, we develop our unified network,

+VTDNet, that uses a single structure and a single set of weights for all ten

+tasks. VTDNet groups similar tasks and employs task interaction stages to

+exchange information within and between task groups. Given the impracticality

+of labeling all tasks on all frames, and the performance degradation associated

+with joint training of many tasks, we design a Curriculum training,

+Pseudo-labeling, and Fine-tuning (CPF) scheme to successfully train VTDNet on

+all tasks and mitigate performance loss. Armed with CPF, VTDNet significantly

+outperforms its single-task counterparts on most tasks with only 20% overall

+computations. VTD is a promising new direction for exploring the unification of

+perception tasks in autonomous driving.

+

+

+

+ 9. Title: SynthoGestures: A Novel Framework for Synthetic Dynamic Hand Gesture Generation for Driving Scenarios

+ No.: [21]

+ Link: https://arxiv.org/abs/2309.04421

+ Authors: Amr Gomaa, Robin Zitt, Guillermo Reyes, Antonio Krüger

+ Comments: Shorter versions are accepted as AutomotiveUI2023 Work in Progress and UIST2023 Poster Papers

+ Keywords: dynamic human-machine interfaces, Creating a diverse, challenging and time-consuming, diverse and comprehensive, dynamic human-machine

+

+ Abstract:

+ Creating a diverse and comprehensive dataset of hand gestures for dynamic

+human-machine interfaces in the automotive domain can be challenging and

+time-consuming. To overcome this challenge, we propose using synthetic gesture

+datasets generated by virtual 3D models. Our framework utilizes Unreal Engine

+to synthesize realistic hand gestures, offering customization options and

+reducing the risk of overfitting. Multiple variants, including gesture speed,

+performance, and hand shape, are generated to improve generalizability. In

+addition, we simulate different camera locations and types, such as RGB,

+infrared, and depth cameras, without incurring additional time and cost to

+obtain these cameras. Experimental results demonstrate that our proposed

+framework,

+SynthoGestures (this https URL),

+improves gesture recognition accuracy and can replace or augment real-hand

+datasets. By saving time and effort in the creation of the data set, our tool

+accelerates the development of gesture recognition systems for automotive

+applications.

+

+

+

+ 10. Title: DeformToon3D: Deformable 3D Toonification from Neural Radiance Fields

+ No.: [24]

+ Link: https://arxiv.org/abs/2309.04410

+ Authors: Junzhe Zhang, Yushi Lan, Shuai Yang, Fangzhou Hong, Quan Wang, Chai Kiat Yeo, Ziwei Liu, Chen Change Loy

+ Comments: ICCV 2023. Code: this https URL Project page: this https URL

+ Keywords: artistic domain, face with stylized, address the challenging, challenging problem, involves transferring

+

+ Abstract:

+ In this paper, we address the challenging problem of 3D toonification, which

+involves transferring the style of an artistic domain onto a target 3D face

+with stylized geometry and texture. Although fine-tuning a pre-trained 3D GAN

+on the artistic domain can produce reasonable performance, this strategy has

+limitations in the 3D domain. In particular, fine-tuning can deteriorate the

+original GAN latent space, which affects subsequent semantic editing, and

+requires independent optimization and storage for each new style, limiting

+flexibility and efficient deployment. To overcome these challenges, we propose

+DeformToon3D, an effective toonification framework tailored for hierarchical 3D

+GAN. Our approach decomposes 3D toonification into subproblems of geometry and

+texture stylization to better preserve the original latent space. Specifically,

+we devise a novel StyleField that predicts conditional 3D deformation to align

+a real-space NeRF to the style space for geometry stylization. Thanks to the

+StyleField formulation, which already handles geometry stylization well,

+texture stylization can be achieved conveniently via adaptive style mixing that

+injects information of the artistic domain into the decoder of the pre-trained

+3D GAN. Due to the unique design, our method enables flexible style degree

+control and shape-texture-specific style swap. Furthermore, we achieve

+efficient training without any real-world 2D-3D training pairs but proxy

+samples synthesized from off-the-shelf 2D toonification models.

+

+

+

+ 11. Title: MaskDiffusion: Boosting Text-to-Image Consistency with Conditional Mask

+ No.: [28]

+ Link: https://arxiv.org/abs/2309.04399

+ Authors: Yupeng Zhou, Daquan Zhou, Zuo-Liang Zhu, Yaxing Wang, Qibin Hou, Jiashi Feng

+ Comments:

+ Keywords: generate visually striking, visually striking images, Recent advancements, showcased their impressive, impressive capacity

+

+ Abstract:

+ Recent advancements in diffusion models have showcased their impressive

+capacity to generate visually striking images. Nevertheless, ensuring a close

+match between the generated image and the given prompt remains a persistent

+challenge. In this work, we identify that a crucial factor leading to the

+text-image mismatch issue is the inadequate cross-modality relation learning

+between the prompt and the output image. To better align the prompt and image

+content, we advance the cross-attention with an adaptive mask, which is

+conditioned on the attention maps and the prompt embeddings, to dynamically

+adjust the contribution of each text token to the image features. This

+mechanism explicitly diminishes the ambiguity in semantic information embedding

+from the text encoder, leading to a boost of text-to-image consistency in the

+synthesized images. Our method, termed MaskDiffusion, is training-free and

+hot-pluggable for popular pre-trained diffusion models. When applied to the

+latent diffusion models, our MaskDiffusion can significantly improve the

+text-to-image consistency with negligible computation overhead compared to the

+original diffusion models.

+

+

+

+ 12. Title: Language Prompt for Autonomous Driving

+ No.: [33]

+ Link: https://arxiv.org/abs/2309.04379

+ Authors: Dongming Wu, Wencheng Han, Tiancai Wang, Yingfei Liu, Xiangyu Zhang, Jianbing Shen

+ Comments:

+ Keywords: flexible human command, human command represented, natural language prompt, computer vision community, computer vision

+

+ Abstract:

+ A new trend in the computer vision community is to capture objects of

+interest following flexible human command represented by a natural language

+prompt. However, the progress of using language prompts in driving scenarios is

+stuck in a bottleneck due to the scarcity of paired prompt-instance data. To

+address this challenge, we propose the first object-centric language prompt set

+for driving scenes within 3D, multi-view, and multi-frame space, named

+NuPrompt. It expands Nuscenes dataset by constructing a total of 35,367

+language descriptions, each referring to an average of 5.3 object tracks. Based

+on the object-text pairs from the new benchmark, we formulate a new

+prompt-based driving task, i.e., employing a language prompt to predict the

+described object trajectory across views and frames. Furthermore, we provide a

+simple end-to-end baseline model based on Transformer, named PromptTrack.

+Experiments show that our PromptTrack achieves impressive performance on

+NuPrompt. We hope this work can provide more new insights for the autonomous

+driving community. Dataset and Code will be made public at

+this https URL.

+

+

+

+ 13. Title: MoEController: Instruction-based Arbitrary Image Manipulation with Mixture-of-Expert Controllers

+ No.: [36]

+ Link: https://arxiv.org/abs/2309.04372

+ Authors: Sijia Li, Chen Chen, Haonan Lu

+ Comments: 5 pages, 6 figures

+ Keywords: image manipulation tasks, producing fascinating results, made astounding progress, recently made astounding, manipulation tasks

+

+ Abstract:

+ Diffusion-model-based text-guided image generation has recently made

+astounding progress, producing fascinating results in open-domain image

+manipulation tasks. Few models, however, currently have complete zero-shot

+capabilities for both global and local image editing due to the complexity and

+diversity of image manipulation tasks. In this work, we propose a method with a

+mixture-of-expert (MOE) controllers to align the text-guided capacity of

+diffusion models with different kinds of human instructions, enabling our model

+to handle various open-domain image manipulation tasks with natural language

+instructions. First, we use large language models (ChatGPT) and conditional

+image synthesis models (ControlNet) to generate a large global image

+transfer dataset in addition to the instruction-based local image editing

+dataset. Then, using an MOE technique and task-specific adaptation training on

+a large-scale dataset, our conditional diffusion model can edit images globally

+and locally. Extensive experiments demonstrate that our approach performs

+surprisingly well on various image manipulation tasks when dealing with

+open-domain images and arbitrary human instructions. Please refer to our

+project page: [this https URL]

+

+

+

+ 14. Title: CNN Injected Transformer for Image Exposure Correction

+ No.: [40]

+ Link: https://arxiv.org/abs/2309.04366

+ Authors: Shuning Xu, Xiangyu Chen, Binbin Song, Jiantao Zhou

+ Comments:

+ Keywords: satisfactory visual experience, incorrect exposure settings, exposure settings fails, visual experience, exposure correction

+

+ Abstract:

+ Capturing images with incorrect exposure settings fails to deliver a

+satisfactory visual experience. Only when the exposure is properly set, can the

+color and details of the images be appropriately preserved. Previous exposure

+correction methods based on convolutions often produce exposure deviation in

+images as a consequence of the restricted receptive field of convolutional

+kernels. This issue arises because convolutions are not capable of capturing

+long-range dependencies in images accurately. To overcome this challenge, we

+can apply the Transformer to address the exposure correction problem,

+leveraging its capability in modeling long-range dependencies to capture global

+representation. However, solely relying on the window-based Transformer leads

+to visually disturbing blocking artifacts due to the application of

+self-attention in small patches. In this paper, we propose a CNN Injected

+Transformer (CIT) to harness the individual strengths of CNN and Transformer

+simultaneously. Specifically, we construct the CIT by utilizing a window-based

+Transformer to exploit the long-range interactions among different regions in

+the entire image. Within each CIT block, we incorporate a channel attention

+block (CAB) and a half-instance normalization block (HINB) to assist the

+window-based self-attention to acquire the global statistics and refine local

+features. In addition to the hybrid architecture design for exposure

+correction, we apply a set of carefully formulated loss functions to improve

+the spatial coherence and rectify potential color deviations. Extensive

+experiments demonstrate that our image exposure correction method outperforms

+state-of-the-art approaches in terms of both quantitative and qualitative

+metrics.

+

+

+

+ 15. Title: SSIG: A Visually-Guided Graph Edit Distance for Floor Plan Similarity

+ No.: [43]

+ Link: https://arxiv.org/abs/2309.04357

+ Authors: Casper van Engelenburg, Seyran Khademi, Jan van Gemert

+ Comments: To be published in ICCVW 2023, 10 pages

+ Keywords: floor plan, architectural floor plans, floor, floor plan data, structural similarity

+

+ Abstract:

+ We propose a simple yet effective metric that measures structural similarity

+between visual instances of architectural floor plans, without the need for

+learning. Qualitatively, our experiments show that the retrieval results are

+similar to deeply learned methods. Effectively comparing instances of floor

+plan data is paramount to the success of machine understanding of floor plan

+data, including the assessment of floor plan generative models and floor plan

+recommendation systems. Comparing visual floor plan images goes beyond a sole

+pixel-wise visual examination and is crucially about similarities and

+differences in the shapes and relations between subdivisions that compose the

+layout. Currently, deep metric learning approaches are used to learn a

+pair-wise vector representation space that closely mimics the structural

+similarity, in which the models are trained on similarity labels that are

+obtained by Intersection-over-Union (IoU). To compensate for the lack of

+structural awareness in IoU, graph-based approaches such as Graph Matching

+Networks (GMNs) are used, which require pairwise inference for comparing data

+instances, making GMNs less practical for retrieval applications. In this

+paper, an effective evaluation metric for judging the structural similarity of

+floor plans, coined SSIG (Structural Similarity by IoU and GED), is proposed

+based on both image and graph distances. In addition, an efficient algorithm is

+developed that uses SSIG to rank a large-scale floor plan database. Code will

+be openly available.
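
The abstract above names Intersection-over-Union (IoU) as the image distance that SSIG combines with a graph edit distance. The sketch below covers only the IoU part, on binary masks represented as sets of occupied grid cells. It is not the SSIG metric itself, and the function name, the mask representation, and the handling of two empty masks are assumptions made for illustration.

```python
def iou(mask_a, mask_b):
    """Intersection-over-Union of two binary masks.

    Each mask is a set of (row, col) cells that are occupied.
    Assumption: two empty masks are treated as identical (IoU = 1.0).
    """
    union = mask_a | mask_b
    if not union:
        return 1.0
    return len(mask_a & mask_b) / len(union)


# Two hypothetical room footprints that share one cell out of three.
room_a = {(0, 0), (0, 1)}
room_b = {(0, 1), (1, 1)}
overlap = iou(room_a, room_b)
```

IoU alone sees only pixel overlap and is blind to how rooms connect, which is the gap the abstract says the graph-based term is meant to fill.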

+

+

+

+ 16. Title: Mobile V-MoEs: Scaling Down Vision Transformers via Sparse Mixture-of-Experts

+ No.: [45]

+ Link: https://arxiv.org/abs/2309.04354

+ Authors: Erik Daxberger, Floris Weers, Bowen Zhang, Tom Gunter, Ruoming Pang, Marcin Eichner, Michael Emmersberger, Yinfei Yang, Alexander Toshev, Xianzhi Du

+ Comments:

+ Keywords: recently gained popularity, gained popularity due, decouple model size, input token, recently gained

+

+ Abstract:

+ Sparse Mixture-of-Experts models (MoEs) have recently gained popularity due

+to their ability to decouple model size from inference efficiency by only

+activating a small subset of the model parameters for any given input token. As

+such, sparse MoEs have enabled unprecedented scalability, resulting in

+tremendous successes across domains such as natural language processing and

+computer vision. In this work, we instead explore the use of sparse MoEs to

+scale down Vision Transformers (ViTs) to make them more attractive for

+resource-constrained vision applications. To this end, we propose a simplified

+and mobile-friendly MoE design where entire images rather than individual

+patches are routed to the experts. We also propose a stable MoE training

+procedure that uses super-class information to guide the router. We empirically

+show that our sparse Mobile Vision MoEs (V-MoEs) can achieve a better trade-off

+between performance and efficiency than the corresponding dense ViTs. For

+example, for the ViT-Tiny model, our Mobile V-MoE outperforms its dense

+counterpart by 3.39% on ImageNet-1k. For an even smaller ViT variant with only

+54M FLOPs inference cost, our MoE achieves an improvement of 4.66%.

+

+

+

+ 17. Title: Leveraging Model Fusion for Improved License Plate Recognition

+ No.: [57]

+ Link: https://arxiv.org/abs/2309.04331

+ Authors: Rayson Laroca, Luiz A. Zanlorensi, Valter Estevam, Rodrigo Minetto, David Menotti

+ Comments: Accepted for presentation at the Iberoamerican Congress on Pattern Recognition (CIARP) 2023

+ Keywords: License Plate Recognition, traffic law enforcement, License Plate, Plate Recognition, parking management

+

+ Abstract:

+ License Plate Recognition (LPR) plays a critical role in various

+applications, such as toll collection, parking management, and traffic law

+enforcement. Although LPR has witnessed significant advancements through the

+development of deep learning, there has been a noticeable lack of studies

+exploring the potential improvements in results by fusing the outputs from

+multiple recognition models. This research aims to fill this gap by

+investigating the combination of up to 12 different models using

+straightforward approaches, such as selecting the most confident prediction or

+employing majority vote-based strategies. Our experiments encompass a wide

+range of datasets, revealing substantial benefits of fusion approaches in both

+intra- and cross-dataset setups. Essentially, fusing multiple models reduces

+considerably the likelihood of obtaining subpar performance on a particular

+dataset/scenario. We also found that combining models based on their speed is

+an appealing approach. Specifically, for applications where the recognition

+task can tolerate some additional time, though not excessively, an effective

+strategy is to combine 4-6 models. These models may not be the most accurate

+individually, but their fusion strikes an optimal balance between accuracy and

+speed.
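
The two fusion strategies named in the abstract above, selecting the most confident prediction and majority voting, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `(text, confidence)` input format, the function names, and the rule of breaking voting ties by confidence are all assumptions.

```python
from collections import Counter


def most_confident(predictions):
    """Return the plate text from the prediction with the highest confidence.

    predictions: list of (text, confidence) pairs, one per recognition model.
    """
    return max(predictions, key=lambda pred: pred[1])[0]


def majority_vote(predictions):
    """Return the plate text predicted by the most models.

    Assumption: ties are broken by the highest single confidence among
    the tied candidates.
    """
    counts = Counter(text for text, _ in predictions)
    top_count = max(counts.values())
    tied = [pred for pred in predictions if counts[pred[0]] == top_count]
    return max(tied, key=lambda pred: pred[1])[0]


# Hypothetical outputs of three recognition models for one plate image.
outputs = [("ABC1234", 0.90), ("ABC1234", 0.60), ("A8C1234", 0.95)]
by_confidence = most_confident(outputs)
by_vote = majority_vote(outputs)
```

In this made-up example the two strategies disagree: the single most confident model reads `A8C1234`, while two of the three models agree on `ABC1234`.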

+

+

+

+ 18. Title: AMLP: Adaptive Masking Lesion Patches for Self-supervised Medical Image Segmentation

+ No.: [62]

+ Link: https://arxiv.org/abs/2309.04312

+ Authors: Xiangtao Wang, Ruizhi Wang, Jie Zhou, Thomas Lukasiewicz, Zhenghua Xu

+ Comments:

+ Keywords: shown promising results, shown promising, promising results, Adaptive Masking, Adaptive Masking Ratio

+

+ Abstract:

+ Self-supervised masked image modeling has shown promising results on natural

+images. However, directly applying such methods to medical images remains

+challenging. This difficulty stems from the complexity and distinct

+characteristics of lesions compared to natural images, which impedes effective

+representation learning. Additionally, conventional high fixed masking ratios

+restrict reconstructing fine lesion details, limiting the scope of learnable

+information. To tackle these limitations, we propose a novel self-supervised

+medical image segmentation framework, Adaptive Masking Lesion Patches (AMLP).

+Specifically, we design a Masked Patch Selection (MPS) strategy to identify and

+focus learning on patches containing lesions. Lesion regions are scarce yet

+critical, making their precise reconstruction vital. To reduce

+misclassification of lesion and background patches caused by unsupervised

+clustering in MPS, we introduce an Attention Reconstruction Loss (ARL) to focus

+on hard-to-reconstruct patches likely depicting lesions. We further propose a

+Category Consistency Loss (CCL) to refine patch categorization based on

+reconstruction difficulty, strengthening distinction between lesions and

+background. Moreover, we develop an Adaptive Masking Ratio (AMR) strategy that

+gradually increases the masking ratio to expand reconstructible information and

+improve learning. Extensive experiments on two medical segmentation datasets

+demonstrate AMLP's superior performance compared to existing self-supervised

+approaches. The proposed strategies effectively address limitations in applying

+masked modeling to medical images, tailored to capturing fine lesion details

+vital for segmentation tasks.
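
The abstract above says only that the Adaptive Masking Ratio "gradually increases" during training. One way such a schedule could look is a linear ramp, sketched below. The linear shape, the `start` and `end` values, and both function names are assumptions, not details from the paper.

```python
def masking_ratio(epoch, total_epochs, start=0.25, end=0.75):
    """Linearly ramp the masking ratio from `start` to `end` over training.

    The linear shape and the default endpoints are hypothetical.
    """
    if total_epochs <= 1:
        return end
    progress = min(max(epoch / (total_epochs - 1), 0.0), 1.0)
    return start + (end - start) * progress


def num_masked_patches(num_patches, ratio):
    """Number of patches to mask for a given ratio."""
    return int(round(num_patches * ratio))
```

With these defaults, a 14x14 patch grid (196 patches) would have 49 patches masked at the first epoch and 147 at the last.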

+

+

+

+ 19. Title: Have We Ever Encountered This Before? Retrieving Out-of-Distribution Road Obstacles from Driving Scenes

+ ID: [66]

+ Link: https://arxiv.org/abs/2309.04302

+ Authors: Youssef Shoeb, Robin Chan, Gesina Schwalbe, Azarm Nowzard, Fatma Güney, Hanno Gottschalk

+ Comments: 11 pages, 7 figures, and 3 tables

+ Keywords: OoD road obstacles, highly automated systems, automated systems operating, road obstacles, dynamic environment

+

+ Abstract

+ In the life cycle of highly automated systems operating in an open and

+dynamic environment, the ability to adjust to emerging challenges is crucial.

+For systems integrating data-driven AI-based components, rapid responses to

+deployment issues require fast access to related data for testing and

+reconfiguration. In the context of automated driving, this especially applies

+to road obstacles that were not included in the training data, commonly

+referred to as out-of-distribution (OoD) road obstacles. Given the availability

+of large uncurated recordings of driving scenes, a pragmatic approach is to

+query a database to retrieve similar scenarios featuring the same safety

+concerns due to OoD road obstacles. In this work, we extend beyond identifying

+OoD road obstacles in video streams and offer a comprehensive approach to

+extract sequences of OoD road obstacles using text queries, thereby proposing a

+way of curating a collection of OoD data for subsequent analysis. Our proposed

+method leverages the recent advances in OoD segmentation and multi-modal

+foundation models to identify and efficiently extract safety-relevant scenes

+from unlabeled videos. We present a first approach for the novel task of

+text-based OoD object retrieval, which addresses the question "Have we ever

+encountered this before?"

+

+

+

+ 20. Title: Towards Practical Capture of High-Fidelity Relightable Avatars

+ ID: [85]

+ Link: https://arxiv.org/abs/2309.04247

+ Authors: Haotian Yang, Mingwu Zheng, Wanquan Feng, Haibin Huang, Yu-Kun Lai, Pengfei Wan, Zhongyuan Wang, Chongyang Ma

+ Comments: Accepted to SIGGRAPH Asia 2023 (Conference); Project page: this https URL

+ Keywords: reconstructing high-fidelity, capturing and reconstructing, TRAvatar, lighting conditions, conditions

+

+ Abstract

+ In this paper, we propose a novel framework, Tracking-free Relightable Avatar

+(TRAvatar), for capturing and reconstructing high-fidelity 3D avatars. Compared

+to previous methods, TRAvatar works in a more practical and efficient setting.

+Specifically, TRAvatar is trained with dynamic image sequences captured in a

+Light Stage under varying lighting conditions, enabling realistic relighting

+and real-time animation for avatars in diverse scenes. Additionally, TRAvatar

+allows for tracking-free avatar capture and obviates the need for accurate

+surface tracking under varying illumination conditions. Our contributions are

+two-fold: First, we propose a novel network architecture that explicitly builds

+on and ensures the satisfaction of the linear nature of lighting. Trained on

+simple group light captures, TRAvatar can predict the appearance in real-time

+with a single forward pass, achieving high-quality relighting effects under

+illuminations of arbitrary environment maps. Second, we jointly optimize the

+facial geometry and relightable appearance from scratch based on image

+sequences, where the tracking is implicitly learned. This tracking-free

+approach brings robustness for establishing temporal correspondences between

+frames under different lighting conditions. Extensive qualitative and

+quantitative experiments demonstrate that our framework achieves superior

+performance for photorealistic avatar animation and relighting.
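
The "linear nature of lighting" mentioned in the abstract above means that an image under a mix of lights equals the same mix of the images taken under each light alone. The sketch below illustrates only that property on flat pixel lists. It is not the TRAvatar network, and the function name and data layout are assumptions.

```python
def relight(basis_images, light_weights):
    """Combine per-light images linearly: out[p] = sum_i w_i * basis_i[p].

    basis_images: one flat list of pixel intensities per light source.
    light_weights: one scalar intensity per light source.
    """
    if len(basis_images) != len(light_weights):
        raise ValueError("need exactly one weight per basis image")
    out = [0.0] * len(basis_images[0])
    for image, weight in zip(basis_images, light_weights):
        for p, value in enumerate(image):
            out[p] += weight * value
    return out


# Two hypothetical one-light-at-a-time captures of a 3-pixel image.
captures = [[1.0, 0.0, 2.0], [0.0, 4.0, 2.0]]
mixed = relight(captures, [0.5, 0.25])
```

An environment map can be seen as one weight per light, which is why a model that respects this linearity can be trained on simple light groups and still be evaluated under arbitrary illumination.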

+

+

+

+ 21. Title: FIVA: Facial Image and Video Anonymization and Anonymization Defense

+ ID: [91]

+ Link: https://arxiv.org/abs/2309.04228

+ Authors: Felix Rosberg, Eren Erdal Aksoy, Cristofer Englund, Fernando Alonso-Fernandez

+ Comments: Accepted to ICCVW 2023 - DFAD 2023

+ Keywords: approach for facial, facial anonymization, FIVA, paper, videos

+

+ Abstract

+ In this paper, we present a new approach for facial anonymization in images

+and videos, abbreviated as FIVA. Our proposed method is able to maintain the

+same face anonymization consistently over frames with our suggested

+identity-tracking and guarantees a strong difference from the original face.

+FIVA allows for 0 true positives for a false acceptance rate of 0.001. Our work

+considers the important security issue of reconstruction attacks and

+investigates adversarial noise, uniform noise, and parameter noise to disrupt

+reconstruction attacks. In this regard, we apply different defense and

+protection methods against these privacy threats to demonstrate the scalability

+of FIVA. On top of this, we also show that reconstruction attack models can be

+used for detection of deep fakes. Last but not least, we provide experimental

+results showing how FIVA can even enable face swapping, which is purely trained

+on a single target image.

+

+

+

+ 22. Title: Long-Range Correlation Supervision for Land-Cover Classification from Remote Sensing Images

+ ID: [92]

+ Link: https://arxiv.org/abs/2309.04225

+ Authors: Dawen Yu, Shunping Ji

+ Comments: 14 pages, 11 figures

+ Keywords: Long-range dependency modeling, modern deep learning, deep learning based, supervised long-range correlation, long-range correlation

+

+ Abstract

+ Long-range dependency modeling has been widely considered in modern deep

+learning based semantic segmentation methods, especially those designed for

+large-size remote sensing images, to compensate for the intrinsic locality of

+standard convolutions. However, in previous studies, the long-range dependency,

+modeled with an attention mechanism or transformer model, has been based on

+unsupervised learning, instead of explicit supervision from the objective

+ground truth. In this paper, we propose a novel supervised long-range

+correlation method for land-cover classification, called the supervised

+long-range correlation network (SLCNet), which is shown to be superior to the

+currently used unsupervised strategies. In SLCNet, pixels sharing the same

+category are considered highly correlated and those having different categories

+are less relevant, which can be easily supervised by the category consistency

+information available in the ground truth semantic segmentation map. Under such

+supervision, the recalibrated features are more consistent for pixels of the

+same category and more discriminative for pixels of other categories,

+regardless of their proximity. To complement the detailed information lacking

+in the global long-range correlation, we introduce an auxiliary adaptive

+receptive field feature extraction module, parallel to the long-range

+correlation module in the encoder, to capture finely detailed feature

+representations for multi-size objects in multi-scale remote sensing images. In

+addition, we apply multi-scale side-output supervision and a hybrid loss

+function as local and global constraints to further boost the segmentation

+accuracy. Experiments were conducted on three remote sensing datasets. Compared

+with the advanced segmentation methods from the computer vision, medicine, and

+remote sensing communities, the SLCNet achieved a state-of-the-art performance

+on all the datasets.
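
The supervision signal described in the abstract above (same-category pixels are highly correlated, different-category pixels are not) can be derived directly from a ground-truth label map. The sketch below builds that pairwise target and scores a predicted correlation matrix against it. The binary cross-entropy loss and both function names are assumptions, since the abstract does not state the loss used in SLCNet.

```python
import math


def affinity_target(labels):
    """target[i][j] is 1.0 when pixels i and j share a category, else 0.0."""
    return [[1.0 if a == b else 0.0 for b in labels] for a in labels]


def correlation_bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted and target correlations.

    The loss choice is an assumption made for illustration only.
    """
    total, count = 0.0, 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            p = min(max(p, eps), 1.0 - eps)  # clip to keep log() finite
            total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
            count += 1
    return total / count


# Flattened label map of three pixels: two of class 0, one of class 1.
target = affinity_target([0, 1, 0])
```

Note that the target depends only on label equality, not on pixel distance, which matches the abstract's "regardless of their proximity".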

+

+

+

+ 23. Title: Score-PA: Score-based 3D Part Assembly

+ ID: [96]

+ Link: https://arxiv.org/abs/2309.04220

+ Authors: Junfeng Cheng, Mingdong Wu, Ruiyuan Zhang, Guanqi Zhan, Chao Wu, Hao Dong

+ Comments: BMVC 2023

+ Keywords: computer vision, part assembly, areas of robotics, Part Assembly framework, part

+

+ Abstract

+ Autonomous 3D part assembly is a challenging task in the areas of robotics

+and 3D computer vision. This task aims to assemble individual components into a

+complete shape without relying on predefined instructions. In this paper, we

+formulate this task from a novel generative perspective, introducing the

+Score-based 3D Part Assembly framework (Score-PA) for 3D part assembly.

+Score-based methods are typically time-consuming during the inference

+stage. To address this issue, we introduce a novel algorithm called the Fast

+Predictor-Corrector Sampler (FPC) that accelerates the sampling process within

+the framework. We employ various metrics to assess assembly quality and

+diversity, and our evaluation results demonstrate that our algorithm

+outperforms existing state-of-the-art approaches. We release our code at

+this https URL.

+

+

+

+ 24. Title: Stereo Matching in Time: 100+ FPS Video Stereo Matching for Extended Reality

+ ID: [112]

+ Link: https://arxiv.org/abs/2309.04183

+ Authors: Ziang Cheng, Jiayu Yang, Hongdong Li

+ Comments:

+ Keywords: cornerstone algorithm, Stereo Matching, Stereo, Real-time Stereo Matching, Extended

+

+ Abstract

+ Real-time Stereo Matching is a cornerstone algorithm for many Extended

+Reality (XR) applications, such as indoor 3D understanding, video pass-through,

+and mixed-reality games. Despite significant advancements in deep stereo

+methods, achieving real-time depth inference with high accuracy on a low-power

+device remains a major challenge. One of the major difficulties is the lack of

+high-quality indoor video stereo training datasets captured by head-mounted

+VR/AR glasses. To address this issue, we introduce a novel video stereo

+synthetic dataset that comprises photorealistic renderings of various indoor

+scenes and realistic camera motion captured by a 6-DoF moving VR/AR

+head-mounted display (HMD). This facilitates the evaluation of existing

+approaches and promotes further research on indoor augmented reality scenarios.

+Our newly proposed dataset enables us to develop a novel framework for

+continuous video-rate stereo matching.

+As another contribution, our dataset enables us to propose a new video-based

+stereo matching approach tailored for XR applications, which achieves real-time

+inference at an impressive 134fps on a standard desktop computer, or 30fps on a

+battery-powered HMD. Our key insight is that disparity and contextual

+information are highly correlated and redundant between consecutive stereo

+frames. By unrolling an iterative cost aggregation in time (i.e. in the

+temporal dimension), we are able to distribute and reuse the aggregated

+features over time. This approach leads to a substantial reduction in

+computation without sacrificing accuracy. We conducted extensive evaluations

+and comparisons and demonstrated that our method achieves superior performance

+compared to the current state-of-the-art, making it a strong contender for

+real-time stereo matching in VR/AR applications.

+

+

+

+ 25. Title: Unsupervised Object Localization with Representer Point Selection

+ ID: [118]

+ Link: https://arxiv.org/abs/2309.04172

+ Authors: Yeonghwan Song, Seokwoo Jang, Dina Katabi, Jeany Son

+ Comments: Accepted by ICCV 2023

+ Keywords: self-supervised object localization, utilizing self-supervised pre-trained, unsupervised object localization, object localization method, object localization

+

+ Abstract

+ We propose a novel unsupervised object localization method that allows us to

+explain the predictions of the model by utilizing self-supervised pre-trained

+models without additional finetuning. Existing unsupervised and self-supervised

+object localization methods often utilize class-agnostic activation maps or

+self-similarity maps of a pre-trained model. Although these maps can offer

+valuable information for localization, their limited ability to explain how the

+model makes predictions remains challenging. In this paper, we propose a simple

+yet effective unsupervised object localization method based on representer

+point selection, where the predictions of the model can be represented as a

+linear combination of representer values of training points. By selecting

+representer points, which are the most important examples for the model

+predictions, our model can provide insights into how the model predicts the

+foreground object by providing relevant examples as well as their importance.

+Our method outperforms the state-of-the-art unsupervised and self-supervised

+object localization methods on various datasets with significant margins and

+even outperforms recent weakly supervised and few-shot methods.

+

+

+

+ 26. Title: PRISTA-Net: Deep Iterative Shrinkage Thresholding Network for Coded Diffraction Patterns Phase Retrieval

+ ID: [119]

+ Link: https://arxiv.org/abs/2309.04171

+ Authors: Aoxu Liu, Xiaohong Fan, Yin Yang, Jianping Zhang

+ Comments: 12 pages

+ Keywords: nonlinear inverse problem, challenge nonlinear inverse, limited amplitude measurement, amplitude measurement data, inverse problem

+

+ Abstract

+ The problem of phase retrieval (PR) involves recovering an unknown image from

+limited amplitude measurement data and is a challenging nonlinear inverse problem

+in computational imaging and image processing. However, many of the PR methods

+are based on black-box network models that lack interpretability and

+plug-and-play (PnP) frameworks that are computationally complex and require

+careful parameter tuning. To address this, we have developed PRISTA-Net, a deep

+unfolding network (DUN) based on the first-order iterative shrinkage

+thresholding algorithm (ISTA). This network utilizes a learnable nonlinear

+transformation to address the proximal-point mapping sub-problem associated

+with the sparse priors, and an attention mechanism to focus on phase

+information containing image edges, textures, and structures. Additionally, the

+fast Fourier transform (FFT) is used to learn global features to enhance local

+information, and the designed logarithmic-based loss function leads to

+significant improvements when the noise level is low. All parameters in the

+proposed PRISTA-Net framework, including the nonlinear transformation,

+threshold parameters, and step size, are learned end-to-end instead of being

+manually set. This method combines the interpretability of traditional methods

+with the fast inference ability of deep learning and is able to handle noise at

+each iteration during the unfolding stage, thus improving recovery quality.

+Experiments on Coded Diffraction Patterns (CDPs) measurements demonstrate that

+our approach outperforms the existing state-of-the-art methods in terms of

+qualitative and quantitative evaluations. Our source codes are available at

+this https URL.
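
For readers unfamiliar with the algorithm that PRISTA-Net unfolds, the sketch below shows classical ISTA on a linear sparse recovery problem, minimizing 0.5 * ||Ax - b||^2 + lam * ||x||_1. It is not PRISTA-Net: the paper's setting is nonlinear phase retrieval with learned transforms, thresholds, and step sizes, whereas here everything is fixed by hand and the toy problem is an assumption.

```python
def soft_threshold(v, tau):
    """Proximal operator of tau * |v|: shrink v toward zero by tau."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def ista(A, b, lam, step, iterations):
    """Classical ISTA: a gradient step on the data term, then shrinkage."""
    m, n = len(A), len(A[0])
    x = [0.0] * n
    for _ in range(iterations):
        # residual = A x - b
        residual = [sum(A[i][j] * x[j] for j in range(n)) - b[i]
                    for i in range(m)]
        # grad = A^T residual
        grad = [sum(A[i][j] * residual[i] for i in range(m))
                for j in range(n)]
        x = [soft_threshold(x[j] - step * grad[j], step * lam)
             for j in range(n)]
    return x


# Toy problem with an identity operator: the solution is soft_threshold(b, lam).
identity = [[1.0, 0.0], [0.0, 1.0]]
solution = ista(identity, [3.0, 0.2], lam=0.5, step=1.0, iterations=10)
```

A deep unfolding network treats each loop iteration as one layer and learns the quantities that are hand-set here (`step`, `lam`, and the sparsifying transform).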

+

+

+

+ 27. Title: Grouping Boundary Proposals for Fast Interactive Image Segmentation

+ ID: [120]

+ Link: https://arxiv.org/abs/2309.04169

+ Authors: Li Liu, Da Chen, Minglei Shu, Laurent D. Cohen

+ Comments:

+ Keywords: image segmentation, image segmentation model, image, efficient tool, tool for solving

+

+ Abstract

+ Geodesic models are known as an efficient tool for solving various image

+segmentation problems. Most existing approaches only exploit local pointwise

+image features to track geodesic paths for delineating the objective

+boundaries. However, such a segmentation strategy cannot take into account the

+connectivity of the image edge features, increasing the risk of the shortcut

+problem, especially in complicated scenarios. In this work, we

+introduce a new image segmentation model based on the minimal geodesic

+framework in conjunction with an adaptive cut-based circular optimal path

+computation scheme and a graph-based boundary proposals grouping scheme.

+Specifically, the adaptive cut can disconnect the image domain such that the

+target contours are constrained to pass through this cut only once. The boundary

+proposals are comprised of precomputed image edge segments, providing the

+connectivity information for our segmentation model. These boundary proposals

+are then incorporated into the proposed image segmentation model, such that the

+target segmentation contours are made up of a set of selected boundary

+proposals and the corresponding geodesic paths linking them. Experimental

+results show that the proposed model indeed outperforms state-of-the-art

+minimal paths-based image segmentation approaches.

+

+

+

+ 28. Title: Context-Aware Prompt Tuning for Vision-Language Model with Dual-Alignment

+ ID: [124]

+ Link: https://arxiv.org/abs/2309.04158

+ Authors: Hongyu Hu, Tiancheng Lin, Jie Wang, Zhenbang Sun, Yi Xu

+ Comments:

+ Keywords: broad visual concepts, tedious training data, showing superb generalization, superb generalization ability, learn broad visual

+

+ Abstract

+ Large-scale vision-language models (VLMs), e.g., CLIP, learn broad visual

+concepts from tedious training data, showing superb generalization ability.

+A number of prompt learning methods have been proposed to efficiently adapt the

+VLMs to downstream tasks with only a few training samples. We introduce a novel

+method to improve the prompt learning of vision-language models by

+incorporating pre-trained large language models (LLMs), called Dual-Aligned

+Prompt Tuning (DuAl-PT). Learnable prompts, like CoOp, implicitly model the

+context through end-to-end training, which are difficult to control and

+interpret. While explicit context descriptions generated by LLMs, like GPT-3,

+can be directly used for zero-shot classification, such prompts rely too

+heavily on LLMs and remain underexplored in few-shot domains. With DuAl-PT, we

+propose to learn more context-aware prompts, benefiting from both explicit and

+implicit context modeling. To achieve this, we introduce a pre-trained LLM to

+generate context descriptions, and we encourage the prompts to learn from the

+LLM's knowledge by alignment, as well as the alignment between prompts and

+local image features. Empirically, DuAl-PT achieves superior performance on 11

+downstream datasets on few-shot recognition and base-to-new generalization.

+Hopefully, DuAl-PT can serve as a strong baseline. Code will be available.

+

+

+

+ 29. Title: Mapping EEG Signals to Visual Stimuli: A Deep Learning Approach to Match vs. Mismatch Classification

+ ID: [127]

+ Link: https://arxiv.org/abs/2309.04153

+ Authors: Yiqian Yang, Zhengqiao Zhao, Qian Wang, Yan Yang, Jingdong Chen

+ Comments:

+ Keywords: handling between-subject variance, modeling speech-brain response, Existing approaches, facing difficulties, difficulties in handling

+

+ Abstract

+ Existing approaches to modeling associations between visual stimuli and brain

+responses are facing difficulties in handling between-subject variance and

+model generalization. Inspired by the recent progress in modeling speech-brain

+response, we propose in this work a "match-vs-mismatch" deep learning model

+to classify whether a video clip induces excitatory responses in recorded EEG

+signals and learn associations between the visual content and corresponding

+neural recordings. Using an exclusive experimental dataset, we demonstrate that

+the proposed model is able to achieve the highest accuracy on unseen subjects

+as compared to other baseline models. Furthermore, we analyze the inter-subject

+noise using a subject-level silhouette score in the embedding space and show

+that the developed model is able to mitigate inter-subject noise and

+significantly reduce the silhouette score. Moreover, we examine the Grad-CAM

+activation score and show that the brain regions associated with language

+processing contribute most to the model predictions, followed by regions

+associated with visual processing. These results have the potential to

+facilitate the development of neural recording-based video reconstruction and

+its related applications.

+

+

+

+ 30. Title: Representation Synthesis by Probabilistic Many-Valued Logic Operation in Self-Supervised Learning

+ ID: [129]

+ Link: https://arxiv.org/abs/2309.04148

+ Authors: Hiroki Nakamura, Masashi Okada, Tadahiro Taniguchi

+ Comments: This work has been submitted to the IEEE for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible

+ Keywords: representation, mixed images, mixed images learn, mixed, image

+

+ Abstract

+ Self-supervised learning (SSL) using mixed images has been studied to learn

+various image representations. Existing methods using mixed images learn a

+representation by maximizing the similarity between the representation of the

+mixed image and the synthesized representation of the original images. However,

+few methods consider the synthesis of representations from the perspective of

+mathematical logic. In this study, we focused on a synthesis method of

+representations. We proposed a new SSL with mixed images and a new

+representation format based on many-valued logic. This format can indicate the

+feature-possession degree, that is, how much of each image feature is possessed

+by a representation. This representation format and representation synthesis by

+logic operation ensure that the synthesized representation preserves the

+remarkable characteristics of the original representations. Our method

+performed competitively with previous representation synthesis methods for

+image classification tasks. We also examined the relationship between the

+feature-possession degree and the number of classes of images in the multilabel

+image classification dataset to verify that the intended learning was achieved.

+In addition, we discussed image retrieval, which is an application of our

+proposed representation format using many-valued logic.

+

+

+

+ 31. Title: Robot Localization and Mapping Final Report -- Sequential Adversarial Learning for Self-Supervised Deep Visual Odometry

+ ID: [130]

+ Link: https://arxiv.org/abs/2309.04147

+ Authors: Akankshya Kar, Sajal Maheshwari, Shamit Lal, Vinay Sameer Raja Kad

+ Comments:

+ Keywords: motion for decades, multi-view geometry, geometry via local, local structure, structure from motion

+

+ Abstract

+ Visual odometry (VO) and SLAM have been using multi-view geometry via local

+structure from motion for decades. These methods have a slight disadvantage in

+challenging scenarios such as low-texture images, dynamic scenarios, etc.

+Meanwhile, use of deep neural networks to extract high level features is

+ubiquitous in computer vision. For VO, we can use these deep networks to

+extract depth and pose estimates using these high level features. The visual

+odometry task then can be modeled as an image generation task where the pose

+estimation is the by-product. This can also be achieved in a self-supervised

+manner, thereby eliminating the data (supervised) intensive nature of training

+deep neural networks. Although some works have tried a similar approach [1], the

+depth and pose estimates in previous works are sometimes vague, resulting

+in accumulation of error (drift) along the trajectory. The goal of this work is

+to tackle these limitations of past approaches and to develop a method that can

+provide better depth and pose estimates. To address this, a couple of

+approaches are explored: 1) Modeling: Using optical flow and recurrent neural

+networks (RNN) in order to exploit spatio-temporal correlations which can

+provide more information to estimate depth. 2) Loss function: Generative

+adversarial network (GAN) [2] is deployed to improve the depth estimation (and

+thereby pose too), as shown in Figure 1. This additional loss term improves the

+realism in generated images and reduces artifacts.

+

+

+

+ 32. Title: Depth Completion with Multiple Balanced Bases and Confidence for Dense Monocular SLAM

+ ID: [132]

+ Link: https://arxiv.org/abs/2309.04145

+ Authors: Weijian Xie, Guanyi Chu, Quanhao Qian, Yihao Yu, Hai Li, Danpeng Chen, Shangjin Zhai, Nan Wang, Hujun Bao, Guofeng Zhang

+ Comments:

+ Keywords: Dense SLAM based, sparse SLAM systems, SLAM systems, sparse SLAM, SLAM

+

+ Abstract

+ Dense SLAM based on monocular cameras does indeed have immense application

+value in the field of AR/VR, especially when it is performed on a mobile

+device. In this paper, we propose a novel method that integrates a light-weight

+depth completion network into a sparse SLAM system using a multi-basis depth

+representation, so that dense mapping can be performed online even on a mobile

+phone. Specifically, we present a specially optimized multi-basis depth

+completion network, called BBC-Net, tailored to the characteristics of

+traditional sparse SLAM systems. BBC-Net can predict multiple balanced bases

+and a confidence map from a monocular image with sparse points generated by

+off-the-shelf keypoint-based SLAM systems. The final depth is a linear

+combination of predicted depth bases that can be optimized by tuning the

+corresponding weights. To seamlessly incorporate the weights into traditional

+SLAM optimization and ensure efficiency and robustness, we design a set of

+depth weight factors, which makes our network a versatile plug-in module,

+facilitating easy integration into various existing sparse SLAM systems and

+significantly enhancing global depth consistency through bundle adjustment. To

+verify the portability of our method, we integrate BBC-Net into two

+representative SLAM systems. The experimental results on various datasets show

+that the proposed method achieves better performance in monocular dense mapping

+than the state-of-the-art methods. We provide an online demo running on a

+mobile phone, which verifies the efficiency and mapping quality of the proposed

+method in real-world scenarios.
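
The abstract above states that the final depth is a linear combination of predicted depth bases whose weights can be optimized. The sketch below illustrates that idea by fitting the weights to a few sparse depth observations with plain gradient descent. The paper instead folds the weights into SLAM optimization through depth weight factors and bundle adjustment, so the optimizer, the function names, the learning rate, and the toy data here are all assumptions.

```python
def combine_bases(bases, weights):
    """Dense depth as a weighted sum of depth bases, pixel by pixel."""
    return [sum(w * basis[p] for basis, w in zip(bases, weights))
            for p in range(len(bases[0]))]


def fit_weights(bases, sparse_depth, steps=200, lr=0.25):
    """Fit weights so the combined depth matches sparse observations.

    sparse_depth maps pixel index -> observed depth (stand-ins for the
    sparse points of a keypoint-based SLAM system). Gradient descent and
    the learning rate are illustrative choices tuned for the toy data.
    """
    weights = [0.0] * len(bases)
    for _ in range(steps):
        grads = [0.0] * len(bases)
        for p, observed in sparse_depth.items():
            error = sum(w * basis[p]
                        for basis, w in zip(bases, weights)) - observed
            for k, basis in enumerate(bases):
                grads[k] += 2.0 * error * basis[p]
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights


# Two hypothetical 4-pixel depth bases and two sparse depth observations.
bases = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
sparse = {0: 2.0, 1: 3.0}
weights = fit_weights(bases, sparse)
dense = combine_bases(bases, weights)
```

Only two pixels are observed, yet all four pixels receive a depth, because the bases carry the dense structure and only a handful of weights need to be estimated.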

+

+

+

+ 33. Title: From Text to Mask: Localizing Entities Using the Attention of Text-to-Image Diffusion Models

+ ID: [141]

+ Link: https://arxiv.org/abs/2309.04109

+ Authors: Changming Xiao, Qi Yang, Feng Zhou, Changshui Zhang

+ Comments:

+ Keywords: revolted the field, Diffusion models, generation recently, models, method

+

+ Abstract

+ Diffusion models have revolutionized the field of text-to-image generation

+recently. The unique way of fusing text and image information contributes to

+their remarkable capability of generating highly text-related images. From

+another perspective, these generative models imply clues about the precise

+correlation between words and pixels. In this work, a simple but effective

+method is proposed to utilize the attention mechanism in the denoising network

+of text-to-image diffusion models. Without re-training or inference-time

+optimization, the semantic grounding of phrases can be attained directly. We

+evaluate our method on Pascal VOC 2012 and Microsoft COCO 2014 under

+weakly-supervised semantic segmentation setting and our method achieves

+superior performance to prior methods. In addition, the acquired word-pixel

+correlation is found to be generalizable for the learned text embedding of

+customized generation methods, requiring only a few modifications. To validate

+our discovery, we introduce a new practical task called "personalized referring

+image segmentation" with a new dataset. Experiments in various situations

+demonstrate the advantages of our method compared to strong baselines on this

+task. In summary, our work reveals a novel way to extract the rich multi-modal

+knowledge hidden in diffusion models for segmentation.

+

+

+

+ 34. Title: Weakly Supervised Point Clouds Transformer for 3D Object Detection

+ ID: [143]

+ Link: https://arxiv.org/abs/2309.04105

+ Authors: Zuojin Tang, Bo Sun, Tongwei Ma, Daosheng Li, Zhenhui Xu

+ Comments: International Conference on Intelligent Transportation Systems (ITSC), 2022

+ Keywords: object detection, scene understanding, Voting Proposal Module, network, Unsupervised Voting Proposal

+

+ Abstract

+ The annotation of 3D datasets is required for semantic-segmentation and

+object detection in scene understanding. In this paper, we present a framework

+for the weak supervision of a point cloud transformer that is used for 3D

+object detection. The aim is to decrease the amount of supervision

+needed for training, given the high cost of annotating 3D datasets.

+We propose an Unsupervised Voting Proposal Module, which learns randomly preset

+anchor points and uses a voting network to select prepared anchor points of high

+quality. It then distills information into the student and teacher networks. For

+the student network, we apply a ResNet to efficiently extract local

+characteristics. However, it can also lose much global information. To provide

+student networks with input that incorporates both global and local information,

+we adopt the self-attention mechanism of the transformer to

+extract global features, and the ResNet layers to extract region proposals. The

+teacher network supervises the classification and regression of the student

+network using the pre-trained model on ImageNet. On the challenging KITTI

+datasets, the experimental results have achieved the highest level of average

+precision compared with the most recent weakly supervised 3D object detectors.

+

+

+

+ 35. Title: Toward Sufficient Spatial-Frequency Interaction for Gradient-aware Underwater Image Enhancement

+ ID: [145]

+ Link: https://arxiv.org/abs/2309.04089

+ Authors: Chen Zhao, Weiling Cai, Chenyu Dong, Ziqi Zeng

+ Comments:

+ Keywords: underwater visual tasks, Underwater images suffer, suffer from complex, complex and diverse, inevitably affects

+

+ Abstract

+ Underwater images suffer from complex and diverse degradation, which

+inevitably affects the performance of underwater visual tasks. However, most

+existing learning-based underwater image enhancement (UIE) methods mainly

+restore such degradations in the spatial domain, and rarely pay attention to

+the Fourier frequency information. In this paper, we develop a novel UIE

+framework based on spatial-frequency interaction and gradient maps, namely

+SFGNet, which consists of two stages. Specifically, in the first stage, we

+propose a dense spatial-frequency fusion network (DSFFNet), mainly including

+our designed dense Fourier fusion block and dense spatial fusion block,

+achieving sufficient spatial-frequency interaction by cross connections between

+these two blocks. In the second stage, we propose a gradient-aware corrector

+(GAC) to further enhance perceptual details and geometric structures of images

+by gradient map. Experimental results on two real-world underwater image

+datasets show that our approach can successfully enhance underwater images, and

+achieves competitive performance in visual quality improvement.

+

+

+

+ 36. Title: Towards Efficient SDRTV-to-HDRTV by Learning from Image Formation

+ ID: [148]

+ Link: https://arxiv.org/abs/2309.04084

+ Authors: Xiangyu Chen, Zheyuan Li, Zhengwen Zhang, Jimmy S. Ren, Yihao Liu, Jingwen He, Yu Qiao, Jiantao Zhou, Chao Dong

+ Comments: Extended version of HDRTVNet

+ Keywords: high dynamic range, standard dynamic range, dynamic range, Modern displays, displays are capable

+

+ Abstract

+ Modern displays are capable of rendering video content with high dynamic

+range (HDR) and wide color gamut (WCG). However, the majority of available

+resources are still in standard dynamic range (SDR). As a result, there is

+significant value in transforming existing SDR content into the HDRTV standard.

+In this paper, we define and analyze the SDRTV-to-HDRTV task by modeling the

+formation of SDRTV/HDRTV content. Our analysis and observations indicate that a

+naive end-to-end supervised training pipeline suffers from severe gamut

+transition errors. To address this issue, we propose a novel three-step

+solution pipeline called HDRTVNet++, which includes adaptive global color

+mapping, local enhancement, and highlight refinement. The adaptive global color

+mapping step uses global statistics as guidance to perform image-adaptive color

+mapping. A local enhancement network is then deployed to enhance local details.

+Finally, we combine the two sub-networks above as a generator and achieve

+highlight consistency through GAN-based joint training. Our method is primarily

+designed for ultra-high-definition TV content and is therefore effective and

+lightweight for processing 4K resolution images. We also construct a dataset

+using HDR videos in the HDR10 standard, named HDRTV1K, that contains 1235

+training images and 117 testing images, all in 4K resolution. Besides, we

+select five metrics to evaluate the results of SDRTV-to-HDRTV algorithms. Our

+final results demonstrate state-of-the-art performance both quantitatively and

+visually. The code, model and dataset are available at

+this https URL.

+

+

+

+ 37. Title: UER: A Heuristic Bias Addressing Approach for Online Continual Learning

+ ID: [150]

+ Link: https://arxiv.org/abs/2309.04081

+ Authors: Huiwei Lin, Shanshan Feng, Baoquan Zhang, Hongliang Qiao, Xutao Li, Yunming Ye

+ Comments: 9 pages, 12 figures, ACM MM2023

+ Keywords: continual learning aims, continuously train neural, train neural networks, single pass-through data, continuous data stream

+

+ Abstract

+ Online continual learning aims to continuously train neural networks from a

+continuous data stream with a single pass through the data. As the most effective

+approach, the rehearsal-based methods replay part of previous data. Commonly

+used predictors in existing methods tend to generate biased dot-product logits

+that favor the classes of current data, which is known as a bias issue and

+a phenomenon of forgetting. Many approaches have been proposed to overcome the

+forgetting problem by correcting the bias; however, they still need to be

+improved in the online setting. In this paper, we try to address the bias issue by

+a more straightforward and more efficient method. By decomposing the

+dot-product logits into an angle factor and a norm factor, we empirically find

+that the bias problem mainly occurs in the angle factor, which can be used to

+learn novel knowledge as cosine logits. On the contrary, the norm factor

+abandoned by existing methods helps remember historical knowledge. Based on

+this observation, we intuitively propose to leverage the norm factor to balance

+the new and old knowledge for addressing the bias. To this end, we develop a

+heuristic approach called unbias experience replay (UER). UER learns current

+samples only by the angle factor and further replays previous samples by both

+the norm and angle factors. Extensive experiments on three datasets show that

+UER achieves superior performance over various state-of-the-art methods. The

+code is available at this https URL.
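
The decomposition mentioned in the abstract follows from the identity w·f = ‖w‖‖f‖cos θ. The sketch below only illustrates that identity in plain Python; the function name `decompose_logit` and the toy vectors are hypothetical, and it does not reproduce how UER uses the two factors during training or replay.

```python
import math


def decompose_logit(weight, feature):
    """Split a dot-product logit into a norm factor and an angle (cosine) factor.

    Returns (norm_factor, cosine) such that norm_factor * cosine equals the
    dot product of weight and feature.
    """
    dot = sum(w * f for w, f in zip(weight, feature))
    weight_norm = math.sqrt(sum(w * w for w in weight))
    feature_norm = math.sqrt(sum(f * f for f in feature))
    norm_factor = weight_norm * feature_norm
    # Guard against zero vectors, where the angle is undefined.
    cosine = dot / norm_factor if norm_factor > 0 else 0.0
    return norm_factor, cosine


# Hypothetical class weight vector and sample feature.
weight = [3.0, 4.0]
feature = [1.0, 0.0]
norm_factor, cosine = decompose_logit(weight, feature)
logit = norm_factor * cosine  # same value as the plain dot product
```

According to the abstract, current samples would be learned from the angle factor alone (cosine logits), while replayed samples would use both factors.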

+

+

+

+ 38. Title: INSURE: An Information Theory Inspired Disentanglement and Purification Model for Domain Generalization

+ ID: [158]

+ Link: https://arxiv.org/abs/2309.04063

+ Authors: Xi Yu, Huan-Hsin Tseng, Shinjae Yoo, Haibin Ling, Yuewei Lin

+ Comments: 10 pages, 4 figures

+ Keywords: unseen target domain, observed source domains, domain-specific class-relevant features, multiple observed source, class-relevant

+

+ Abstract

+ Domain Generalization (DG) aims to learn a generalizable model on the unseen

+target domain by only training on the multiple observed source domains.

+Although a variety of DG methods have focused on extracting domain-invariant

+features, the domain-specific class-relevant features have attracted attention

+and been argued to benefit generalization to the unseen target domain. To take

+into account the class-relevant domain-specific information, in this paper we

+propose an Information theory iNspired diSentanglement and pURification modEl

+(INSURE) to explicitly disentangle the latent features to obtain sufficient and

+compact (necessary) class-relevant feature for generalization to the unseen

+domain. Specifically, we first propose an information theory inspired loss

+function to ensure the disentangled class-relevant features contain sufficient

+class label information and the other disentangled auxiliary feature has

+sufficient domain information. We further propose a paired purification loss

+function to let the auxiliary feature discard all the class-relevant

+information and thus the class-relevant feature will contain sufficient and

+compact (necessary) class-relevant information. Moreover, instead of using

+multiple encoders, we propose to use a learnable binary mask as our

+disentangler to make the disentanglement more efficient and make the

+disentangled features complementary to each other. We conduct extensive

+experiments on four widely used DG benchmark datasets including PACS,

+OfficeHome, TerraIncognita, and DomainNet. The proposed INSURE outperforms the

+state-of-the-art methods. We also empirically show that domain-specific

+class-relevant features are beneficial for domain generalization.
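
To make the binary-mask idea concrete, the sketch below splits one feature vector into two complementary parts with a 0/1 mask. It is an assumption-laden illustration: the names `disentangle` and `mask_logits`, and the hard threshold at zero, are hypothetical, and the abstract does not describe how the mask is parameterized or trained.

```python
def disentangle(features, mask_logits):
    """Split features into class-relevant and auxiliary parts with a binary mask.

    Assumption: the mask is obtained by thresholding learnable logits at 0.
    The two outputs are complementary, so they sum back to the input.
    """
    mask = [1.0 if logit > 0 else 0.0 for logit in mask_logits]
    class_relevant = [f * m for f, m in zip(features, mask)]
    auxiliary = [f * (1.0 - m) for f, m in zip(features, mask)]
    return class_relevant, auxiliary


# Hypothetical latent features and mask logits.
features = [0.5, -1.2, 2.0, 0.3]
mask_logits = [1.5, -0.7, 0.2, -2.0]
class_relevant, auxiliary = disentangle(features, mask_logits)
```

Because a single mask routes every dimension to exactly one of the two parts, one encoder suffices, which matches the efficiency argument made in the abstract.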

+

+

+

+ 39. Title: Evaluation and Mitigation of Agnosia in Multimodal Large Language Models

+ ID: [162]

+ Link: https://arxiv.org/abs/2309.04041

+ Authors: Jiaying Lu, Jinmeng Rao, Kezhen Chen, Xiaoyuan Guo, Yawen Zhang, Baochen Sun, Carl Yang, Jie Yang

+ Comments:

+ Keywords: Large Language Models, Multimodal Large Language, Language Models, Large Language, Multimodal Large

+

+ Abstract

+ While Multimodal Large Language Models (MLLMs) are widely used for a variety

+of vision-language tasks, one observation is that they sometimes misinterpret

+visual inputs or fail to follow textual instructions even in straightforward

+cases, leading to irrelevant responses, mistakes, and ungrounded claims. This

+observation is analogous to a phenomenon in neuropsychology known as Agnosia,

+an inability to correctly process sensory modalities and recognize things

+(e.g., objects, colors, relations). In our study, we adapt this concept

+to define "agnosia in MLLMs", and our goal is to comprehensively evaluate and

+mitigate such agnosia in MLLMs. Inspired by the diagnosis and treatment process

+in neuropsychology, we propose a novel framework EMMA (Evaluation and

+Mitigation of Multimodal Agnosia). In EMMA, we develop an evaluation module

+that automatically creates fine-grained and diverse visual question answering

+examples to assess the extent of agnosia in MLLMs comprehensively. We also

+develop a mitigation module to reduce agnosia in MLLMs through multimodal

+instruction tuning on fine-grained conversations. To verify the effectiveness

+of our framework, we evaluate and analyze agnosia in seven state-of-the-art

+MLLMs using 9K test samples. The results reveal that most of them exhibit

+agnosia across various aspects and degrees. We further develop a fine-grained

+instruction set and tune MLLMs to mitigate agnosia, which led to notable

+improvement in accuracy.

+

+

+

+ 40. Title: S-Adapter: Generalizing Vision Transformer for Face Anti-Spoofing with Statistical Tokens

+ ID: [163]

+ Link: https://arxiv.org/abs/2309.04038

+ Authors: Rizhao Cai, Zitong Yu, Chenqi Kong, Haoliang Li, Changsheng Chen, Yongjian Hu, Alex Kot

+ Comments:

+ Keywords: face recognition system, presenting spoofed faces, detect malicious attempts, Face Anti-Spoofing, face recognition

+

+ Abstract

+ Face Anti-Spoofing (FAS) aims to detect malicious attempts to invade a face

+recognition system by presenting spoofed faces. State-of-the-art FAS techniques

+predominantly rely on deep learning models but their cross-domain

+generalization capabilities are often hindered by the domain shift problem,

+which arises due to different distributions between training and testing data.

+In this study, we develop a generalized FAS method under the Efficient

+Parameter Transfer Learning (EPTL) paradigm, where we adapt the pre-trained

+Vision Transformer models for the FAS task. During training, the adapter

+modules are inserted into the pre-trained ViT model, and the adapters are

+updated while other pre-trained parameters remain fixed. We find that

+previous vanilla adapters are limited in that they are based on linear

+layers, which lack a spoofing-aware inductive bias and thus restrict the

+cross-domain generalization. To address this limitation and achieve

+cross-domain generalized FAS, we propose a novel Statistical Adapter

+(S-Adapter) that gathers local discriminative and statistical information from

+localized token histograms. To further improve the generalization of the

+statistical tokens, we propose a novel Token Style Regularization (TSR), which

+aims to reduce domain style variance by regularizing Gram matrices extracted

+from tokens across different domains. Our experimental results demonstrate that

+our proposed S-Adapter and TSR provide significant benefits in both zero-shot

+and few-shot cross-domain testing, outperforming state-of-the-art methods on