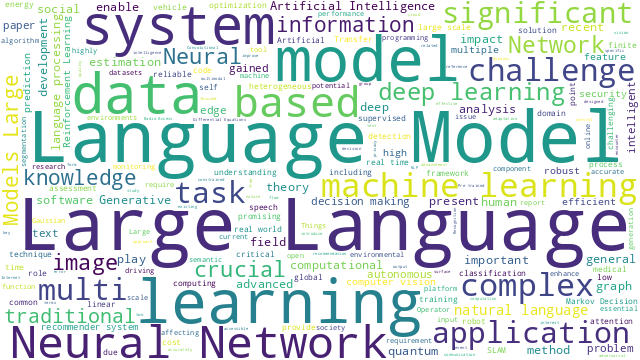

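This blog post presents the latest papers retrieved daily from arXiv, grouped into categories such as natural language processing, information retrieval, and computer vision.

Statistics

571 papers were updated today, including:

- Natural Language Processing: 126

- Information Retrieval: 24

- Computer Vision: 152

Natural Language Processing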

1. [2412.13175] DnDScore: Decontextualization and Decomposition for Factuality Verification in Long-Form Text Generation

Link: https://arxiv.org/abs/2412.13175

Authors: Miriam Wanner, Benjamin Van Durme, Mark Dredze

Categories: Computation and Language (cs.CL)

Keywords: Large Language Model, Language Model, Large Language, generations decomposes claims, generations decomposes

Comments:

Abstract: The decompose-then-verify strategy for verification of Large Language Model (LLM) generations decomposes claims that are then independently verified. Decontextualization augments text (claims) to ensure it can be verified outside of the original context, enabling reliable verification. While decomposition and decontextualization have been explored independently, their interactions in a complete system have not been investigated. Their conflicting purposes can create tensions: decomposition isolates atomic facts while decontextualization inserts relevant information. Furthermore, a decontextualized subclaim presents a challenge to the verification step: what part of the augmented text should be verified as it now contains multiple atomic facts? We conduct an evaluation of different decomposition, decontextualization, and verification strategies and find that the choice of strategy matters in the resulting factuality scores. Additionally, we introduce DnDScore, a decontextualization aware verification method which validates subclaims in the context of contextual information.


2. [2412.13171] Compressed Chain of Thought: Efficient Reasoning Through Dense Representations

Link: https://arxiv.org/abs/2412.13171

Authors: Jeffrey Cheng, Benjamin Van Durme

Categories: Computation and Language (cs.CL)

Keywords: high generation latency, improve reasoning performance, contemplation tokens, cost of high, high generation

Comments:

Abstract: Chain-of-thought (CoT) decoding enables language models to improve reasoning performance at the cost of high generation latency in decoding. Recent proposals have explored variants of contemplation tokens, a term we introduce that refers to special tokens used during inference to allow for extra computation. Prior work has considered fixed-length sequences drawn from a discrete set of embeddings as contemplation tokens. Here we propose Compressed Chain-of-Thought (CCoT), a framework to generate contentful and continuous contemplation tokens of variable sequence length. The generated contemplation tokens are compressed representations of explicit reasoning chains, and our method can be applied to off-the-shelf decoder language models. Through experiments, we illustrate how CCoT enables additional reasoning over dense contentful representations to achieve corresponding improvements in accuracy. Moreover, the reasoning improvements can be adaptively modified on demand by controlling the number of contemplation tokens generated.


3. [2412.13169] Algorithmic Fidelity of Large Language Models in Generating Synthetic German Public Opinions: A Case Study

Link: https://arxiv.org/abs/2412.13169

Authors: Bolei Ma, Berk Yoztyurk, Anna-Carolina Haensch, Xinpeng Wang, Markus Herklotz, Frauke Kreuter, Barbara Plank, Matthias Assenmacher

Categories: Computation and Language (cs.CL)

Keywords: large language models, German Longitudinal Election, Longitudinal Election Studies, investigate public opinions, recent research

Comments:

Abstract: In recent research, large language models (LLMs) have been increasingly used to investigate public opinions. This study investigates the algorithmic fidelity of LLMs, i.e., the ability to replicate the socio-cultural context and nuanced opinions of human participants. Using open-ended survey data from the German Longitudinal Election Studies (GLES), we prompt different LLMs to generate synthetic public opinions reflective of German subpopulations by incorporating demographic features into the persona prompts. Our results show that Llama performs better than other LLMs at representing subpopulations, particularly when there is lower opinion diversity within those groups. Our findings further reveal that the LLM performs better for supporters of left-leaning parties like The Greens and The Left compared to other parties, and aligns least with the right-wing party AfD. Additionally, the inclusion or exclusion of specific variables in the prompts can significantly impact the models' predictions. These findings underscore the importance of aligning LLMs to more effectively model diverse public opinions while minimizing political biases and enhancing robustness in representativeness.


4. [2412.13161] BanglishRev: A Large-Scale Bangla-English and Code-mixed Dataset of Product Reviews in E-Commerce

Link: https://arxiv.org/abs/2412.13161

Authors: Mohammad Nazmush Shamael, Sabila Nawshin, Swakkhar Shatabda, Salekul Islam

Categories: Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV); Machine Learning (cs.LG)

Keywords: Bengali words written, largest e-commerce product, e-commerce reviews written, Bengali words, product review dataset

Comments:

Abstract: This work presents the BanglishRev Dataset, the largest e-commerce product review dataset to date for reviews written in Bengali, English, a mixture of both, and Banglish (Bengali words written with English alphabets). The dataset comprises 1.74 million written reviews from 3.2 million ratings collected from a total of 128k products sold on online e-commerce platforms targeting the Bengali population. It includes an extensive array of related metadata for each review, including the rating given by the reviewer, the date the review was posted, the date of purchase, the number of likes and dislikes, the response from the seller, images associated with the review, etc. With sentiment analysis being the most prominent usage of review datasets, experiments with a binary sentiment analysis model, using the review rating as an indicator of positive or negative sentiment, were conducted to evaluate the effectiveness of the large amount of data presented in BanglishRev for sentiment analysis tasks. A BanglishBERT model is trained on the data from BanglishRev, with reviews labeled positive if the rating is greater than 3 and negative if the rating is less than or equal to 3. The model is evaluated by testing it against a previously published, manually annotated dataset of e-commerce reviews written in a mixture of Bangla, English and Banglish. The experimental model achieved an exceptional accuracy of 94% and an F1 score of 0.94, demonstrating the dataset's efficacy for sentiment analysis. Some intriguing patterns and observations seen within the dataset, and future research directions where the dataset can be utilized, are also discussed and explored. The dataset can be accessed through this https URL.
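The rating-to-label rule above is easy to state in code. The sketch below maps a star rating to a binary label and computes accuracy and F1 on toy data. The ratings and predictions are invented, and F1 is computed for the positive class, which is an assumption; the paper may report a macro or weighted average instead.

```python
def rating_to_label(rating: int) -> int:
    """Map a 1-5 star rating to a binary sentiment label (1 = positive)."""
    # Rule from the abstract: rating > 3 is positive, rating <= 3 is negative.
    return 1 if rating > 3 else 0


def accuracy_and_f1(gold: list[int], pred: list[int]) -> tuple[float, float]:
    """Return accuracy and positive-class F1 for binary labels."""
    pairs = list(zip(gold, pred))
    tp = sum(1 for g, p in pairs if g == 1 and p == 1)
    fp = sum(1 for g, p in pairs if g == 0 and p == 1)
    fn = sum(1 for g, p in pairs if g == 1 and p == 0)
    accuracy = sum(1 for g, p in pairs if g == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


# Toy data for illustration only (not taken from BanglishRev).
ratings = [5, 4, 3, 1, 2, 5]
gold = [rating_to_label(r) for r in ratings]
pred = [1, 1, 0, 0, 1, 1]  # hypothetical model predictions
accuracy, f1 = accuracy_and_f1(gold, pred)
print(f"accuracy={accuracy:.3f} f1={f1:.3f}")
```

Note that a 3-star review counts as negative under this rule, so neutral reviews are folded into the negative class.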


5. [2412.13147] Are Your LLMs Capable of Stable Reasoning?

Link: https://arxiv.org/abs/2412.13147

Authors: Junnan Liu, Hongwei Liu, Linchen Xiao, Ziyi Wang, Kuikun Liu, Songyang Gao, Wenwei Zhang, Songyang Zhang, Kai Chen

Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

Keywords: Large Language Models, Large Language, demonstrated remarkable progress, advancement of Large, complex reasoning tasks

Comments: Preprint

Abstract: The rapid advancement of Large Language Models (LLMs) has demonstrated remarkable progress in complex reasoning tasks. However, a significant discrepancy persists between benchmark performances and real-world applications. We identify this gap as primarily stemming from current evaluation protocols and metrics, which inadequately capture the full spectrum of LLM capabilities, particularly in complex reasoning tasks where both accuracy and consistency are crucial. This work makes two key contributions. First, we introduce G-Pass@k, a novel evaluation metric that provides a continuous assessment of model performance across multiple sampling attempts, quantifying both the model's peak performance potential and its stability. Second, we present LiveMathBench, a dynamic benchmark comprising challenging, contemporary mathematical problems designed to minimize data leakage risks during evaluation. Through extensive experiments using G-Pass@k on state-of-the-art LLMs with LiveMathBench, we provide comprehensive insights into both their maximum capabilities and operational consistency. Our findings reveal substantial room for improvement in LLMs' "realistic" reasoning capabilities, highlighting the need for more robust evaluation methods. The benchmark and detailed results are available at: this https URL.
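The abstract does not state the G-Pass@k formula. One plausible reading, assumed here rather than taken from the paper, treats it as a hypergeometric tail: with n generations per question, c of them correct, and a threshold τ in (0, 1], it is the probability that at least ⌈τ·k⌉ of k samples drawn without replacement are correct, averaged over questions. The function names and the (n, c) input format are illustrative.

```python
import math


def g_pass_at_k(n: int, c: int, k: int, tau: float) -> float:
    """Hypergeometric tail: P(at least ceil(tau * k) of k draws are correct).

    n: generations sampled for one question, c: how many were correct,
    k: draws without replacement (k <= n), tau: threshold in (0, 1].
    """
    # At least one correct draw is always required.
    threshold = max(1, math.ceil(tau * k))
    favourable = sum(
        # math.comb returns 0 when k - j > n - c, so impossible splits drop out.
        math.comb(c, j) * math.comb(n - c, k - j)
        for j in range(threshold, min(c, k) + 1)
    )
    return favourable / math.comb(n, k)


def mean_g_pass_at_k(results: list[tuple[int, int]], k: int, tau: float) -> float:
    """Average the per-question value over (n, c) pairs."""
    return sum(g_pass_at_k(n, c, k, tau) for n, c in results) / len(results)


# Hypothetical benchmark: 16 samples per question, varying correct counts.
results = [(16, 16), (16, 12), (16, 4)]
for tau in (0.25, 0.5, 1.0):
    print(tau, round(mean_g_pass_at_k(results, k=8, tau=tau), 4))
```

When ⌈τ·k⌉ = 1 this reduces to the familiar unbiased pass@k estimator, while τ = 1 requires all k samples to be correct, which is what makes such a metric sensitive to stability rather than only peak performance.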


6. [2412.13146] Syntactic Transfer to Kyrgyz Using the Treebank Translation Method

Link: https://arxiv.org/abs/2412.13146

Authors: Anton Alekseev, Alina Tillabaeva, Gulnara Dzh. Kabaeva, Sergey I. Nikolenko

Categories: Computation and Language (cs.CL)

Keywords: requires significant effort, high-quality syntactic corpora, create high-quality syntactic, Kyrgyz language, low-resource language

Comments: To be published in the Journal of Math. Sciences. Zapiski version (in Russian): [this http URL](http://www.pdmi.ras.ru/znsl/2024/v540/abs252.html)

Abstract: The Kyrgyz language, as a low-resource language, requires significant effort to create high-quality syntactic corpora. This study proposes an approach to simplify the development process of a syntactic corpus for Kyrgyz. We present a tool for transferring syntactic annotations from Turkish to Kyrgyz based on a treebank translation method. The effectiveness of the proposed tool was evaluated using the TueCL treebank. The results demonstrate that this approach achieves higher syntactic annotation accuracy compared to a monolingual model trained on the Kyrgyz KTMU treebank. Additionally, the study introduces a method for assessing the complexity of manual annotation for the resulting syntactic trees, contributing to further optimization of the annotation process.


7. [2412.13110] Improving Explainability of Sentence-level Metrics via Edit-level Attribution for Grammatical Error Correction

Link: https://arxiv.org/abs/2412.13110

Authors: Takumi Goto, Justin Vasselli, Taro Watanabe

Categories: Computation and Language (cs.CL)

Keywords: Grammatical Error Correction, Grammatical Error, proposed for Grammatical, Error Correction, GEC models

Comments:

Abstract: Various evaluation metrics have been proposed for Grammatical Error Correction (GEC), but many, particularly reference-free metrics, lack explainability. This lack of explainability hinders researchers from analyzing the strengths and weaknesses of GEC models and limits the ability to provide detailed feedback for users. To address this issue, we propose attributing sentence-level scores to individual edits, providing insight into how specific corrections contribute to the overall performance. For the attribution method, we use Shapley values, from cooperative game theory, to compute the contribution of each edit. Experiments with existing sentence-level metrics demonstrate high consistency across different edit granularities and show approximately 70% alignment with human evaluations. In addition, we analyze biases in the metrics based on the attribution results, revealing trends such as the tendency to ignore orthographic edits. Our implementation is available at this https URL.
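A Shapley value credits each edit with its average marginal contribution over every subset of the other edits, weighted by |S|!(n − |S| − 1)!/n!. The minimal exact computation below assumes a hypothetical `toy_score` that stands in for a real sentence-level GEC metric evaluated on the source sentence with a given subset of edits applied; it is not the authors' implementation.

```python
from itertools import combinations
from math import factorial
from typing import Callable, FrozenSet, Sequence


def shapley_values(
    edits: Sequence[str], score: Callable[[FrozenSet[str]], float]
) -> dict[str, float]:
    """Exact Shapley value of each edit under a set-valued scoring function."""
    n = len(edits)
    values: dict[str, float] = {}
    for edit in edits:
        others = [e for e in edits if e != edit]
        phi = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                phi += weight * (score(coalition | {edit}) - score(coalition))
        values[edit] = phi
    return values


def toy_score(applied: FrozenSet[str]) -> float:
    """Hypothetical sentence-level metric: base score plus per-edit gains,
    with a bonus when e1 and e2 are applied together (an interaction)."""
    gains = {"e1": 0.2, "e2": 0.1, "e3": -0.05}
    bonus = 0.06 if {"e1", "e2"} <= applied else 0.0
    return 0.5 + sum(gains[e] for e in applied) + bonus


edits = ["e1", "e2", "e3"]
phi = shapley_values(edits, toy_score)
print({e: round(v, 3) for e, v in phi.items()})
```

The interaction bonus is split evenly between e1 and e2, and the values sum to the score difference between applying all edits and applying none (the efficiency property). Exact enumeration is exponential in the number of edits, so sentences with many edits would need a sampling approximation.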


8. [2412.13103] AI PERSONA: Towards Life-long Personalization of LLMs

Link: https://arxiv.org/abs/2412.13103

Authors: Tiannan Wang, Meiling Tao, Ruoyu Fang, Huilin Wang, Shuai Wang, Yuchen Eleanor Jiang, Wangchunshu Zhou

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: large language models, LLM systems, enable LLM systems, language agents, LLM

Comments: Work in progress

Abstract: In this work, we introduce the task of life-long personalization of large language models. While recent mainstream efforts in the LLM community mainly focus on scaling data and compute for improved capabilities of LLMs, we argue that it is also very important to enable LLM systems, or language agents, to continuously adapt to the diverse and ever-changing profiles of every distinct user and provide up-to-date personalized assistance. We provide a clear task formulation and introduce a simple, general, effective, and scalable framework for life-long personalization of LLM systems and language agents. To facilitate future research on LLM personalization, we also introduce methods to synthesize realistic benchmarks and robust evaluation metrics. We will release all code and data for building and benchmarking life-long personalized LLM systems.


9. [2412.13102] AIR-Bench: Automated Heterogeneous Information Retrieval Benchmark

Link: https://arxiv.org/abs/2412.13102

Authors: Jianlyu Chen, Nan Wang, Chaofan Li, Bo Wang, Shitao Xiao, Han Xiao, Hao Liao, Defu Lian, Zheng Liu

Categories: Information Retrieval (cs.IR); Computation and Language (cs.CL)

Keywords: Heterogeneous Information Retrieval, Automated Heterogeneous Information, information retrieval, Information Retrieval Benchmark, AIR-Bench

Comments: 31 pages, 6 figures

Abstract: Evaluation plays a crucial role in the advancement of information retrieval (IR) models. However, current benchmarks, which are based on predefined domains and human-labeled data, face limitations in addressing evaluation needs for emerging domains both cost-effectively and efficiently. To address this challenge, we propose the Automated Heterogeneous Information Retrieval Benchmark (AIR-Bench). AIR-Bench is distinguished by three key features: 1) Automated. The testing data in AIR-Bench is automatically generated by large language models (LLMs) without human intervention. 2) Heterogeneous. The testing data in AIR-Bench is generated with respect to diverse tasks, domains and languages. 3) Dynamic. The domains and languages covered by AIR-Bench are constantly augmented to provide an increasingly comprehensive evaluation benchmark for community developers. We develop a reliable and robust data generation pipeline to automatically create diverse and high-quality evaluation datasets based on real-world corpora. Our findings demonstrate that the generated testing data in AIR-Bench aligns well with human-labeled testing data, making AIR-Bench a dependable benchmark for evaluating IR models. The resources in AIR-Bench are publicly available at this https URL.


10. [2412.13098] Uchaguzi-2022: A Dataset of Citizen Reports on the 2022 Kenyan Election

Link: https://arxiv.org/abs/2412.13098

Authors: Roberto Mondini, Neema Kotonya, Robert L. Logan IV, Elizabeth M Olson, Angela Oduor Lungati, Daniel Duke Odongo, Tim Ombasa, Hemank Lamba, Aoife Cahill, Joel R. Tetreault, Alejandro Jaimes

Categories: Computation and Language (cs.CL); Social and Information Networks (cs.SI)

Keywords: Online reporting platforms, Online reporting, local communities, reporting platforms, platforms have enabled

Comments: COLING 2025

Abstract: Online reporting platforms have enabled citizens around the world to collectively share their opinions and report in real time on events impacting their local communities. Systematically organizing (e.g., categorizing by attributes) and geotagging large amounts of crowdsourced information is crucial to ensuring that accurate and meaningful insights can be drawn from this data and used by policy makers to bring about positive change. These tasks, however, typically require extensive manual annotation efforts. In this paper we present Uchaguzi-2022, a dataset of 14k categorized and geotagged citizen reports related to the 2022 Kenyan General Election containing mentions of election-related issues such as official misconduct, vote count irregularities, and acts of violence. We use this dataset to investigate whether language models can assist in scalably categorizing and geotagging reports, thus highlighting its potential application in the AI for Social Good space.


11. [2412.13091] LMUnit: Fine-grained Evaluation with Natural Language Unit Tests

Link: https://arxiv.org/abs/2412.13091

Authors: Jon Saad-Falcon, Rajan Vivek, William Berrios, Nandita Shankar Naik, Matija Franklin, Bertie Vidgen, Amanpreet Singh, Douwe Kiela, Shikib Mehri

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Keywords: automated metrics provide, assessing their behavior, fundamental challenge, costly and noisy, provide only coarse

Comments:

Abstract: As language models become integral to critical workflows, assessing their behavior remains a fundamental challenge: human evaluation is costly and noisy, while automated metrics provide only coarse, difficult-to-interpret signals. We introduce natural language unit tests, a paradigm that decomposes response quality into explicit, testable criteria, along with a unified scoring model, LMUnit, which combines multi-objective training across preferences, direct ratings, and natural language rationales. Through controlled human studies, we show this paradigm significantly improves inter-annotator agreement and enables more effective LLM development workflows. LMUnit achieves state-of-the-art performance on evaluation benchmarks (FLASK, BigGenBench) and competitive results on RewardBench. These results validate both our proposed paradigm and scoring model, suggesting a promising path forward for language model evaluation and development.


12. [2412.13071] CLASP: Contrastive Language-Speech Pretraining for Multilingual Multimodal Information Retrieval

Link: https://arxiv.org/abs/2412.13071

Authors: Mohammad Mahdi Abootorabi, Ehsaneddin Asgari

Categories: Computation and Language (cs.CL); Information Retrieval (cs.IR); Sound (cs.SD); Audio and Speech Processing (eess.AS)

Keywords: Contrastive Language-Speech Pretraining, Contrastive Language-Speech, Language-Speech Pretraining, study introduces CLASP, multimodal representation tailored

Comments: accepted at ECIR 2025

Abstract: This study introduces CLASP (Contrastive Language-Speech Pretraining), a multilingual, multimodal representation tailored for audio-text information retrieval. CLASP leverages the synergy between spoken content and textual data. During training, we utilize our newly introduced speech-text dataset, which encompasses 15 diverse categories ranging from fiction to religion. CLASP's audio component integrates audio spectrograms with a pre-trained self-supervised speech model, while its language encoding counterpart employs a sentence encoder pre-trained on over 100 languages. This unified lightweight model bridges the gap between various modalities and languages, enhancing its effectiveness in handling and retrieving multilingual and multimodal data. Our evaluations across multiple languages demonstrate that CLASP establishes new benchmarks in HITS@1, MRR, and meanR metrics, outperforming traditional ASR-based retrieval approaches in specific scenarios.
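HITS@1, MRR and meanR can all be derived from the rank at which the correct item appears for each query. The sketch assumes exactly one relevant item per query (for example, the matching audio clip for a text query) and 1-based ranks; the example ranks are invented and not from the CLASP experiments.

```python
def retrieval_metrics(ranks: list[int]) -> dict[str, float]:
    """Compute HITS@1, MRR and meanR from the 1-based rank of the correct
    item for each query (one relevant item per query is assumed)."""
    if any(r < 1 for r in ranks):
        raise ValueError("ranks must be 1-based")
    q = len(ranks)
    return {
        "HITS@1": sum(1 for r in ranks if r == 1) / q,  # share ranked first
        "MRR": sum(1.0 / r for r in ranks) / q,  # mean reciprocal rank
        "meanR": sum(ranks) / q,  # mean rank, lower is better
    }


# Invented ranks of the matching item for four text queries.
print(retrieval_metrics([1, 3, 2, 1]))
```

HITS@1 and MRR are higher-is-better, whereas meanR is lower-is-better and, unlike MRR, is strongly affected by a few queries whose correct item is ranked very low.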


13. [2412.13050] Modality-Inconsistent Continual Learning of Multimodal Large Language Models

Link: https://arxiv.org/abs/2412.13050

Authors: Weiguo Pian, Shijian Deng, Shentong Mo, Yunhui Guo, Yapeng Tian

Categories: Machine Learning (cs.LG); Artificial Intelligence (cs.AI); Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV); Sound (cs.SD); Audio and Speech Processing (eess.AS)

Keywords: Multimodal Large Language, Large Language Models, Multimodal Large, Large Language, scenario for Multimodal

Comments:

Abstract: In this paper, we introduce Modality-Inconsistent Continual Learning (MICL), a new continual learning scenario for Multimodal Large Language Models (MLLMs) that involves tasks with inconsistent modalities (image, audio, or video) and varying task types (captioning or question-answering). Unlike existing vision-only or modality-incremental settings, MICL combines modality and task type shifts, both of which drive catastrophic forgetting. To address these challenges, we propose MoInCL, which employs a Pseudo Targets Generation Module to mitigate forgetting caused by task type shifts in previously seen modalities. It also incorporates Instruction-based Knowledge Distillation to preserve the model's ability to handle previously learned modalities when new ones are introduced. We benchmark MICL using a total of six tasks and conduct experiments to validate the effectiveness of our proposed MoInCL. The experimental results highlight the superiority of MoInCL, showing significant improvements over representative and state-of-the-art continual learning baselines.


14. [2412.13041] Harnessing Event Sensory Data for Error Pattern Prediction in Vehicles: A Language Model Approach

Link: https://arxiv.org/abs/2412.13041

Authors: Hugo Math, Rainer Lienhart, Robin Schön

Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

Keywords: processing natural languages, processing multivariate event, multivariate event streams, processing natural

Comments: 10 pages, 8 figures, accepted to AAAI 2025

Abstract: In this paper, we draw an analogy between processing natural languages and processing multivariate event streams from vehicles in order to predict *when* and *what* error pattern is most likely to occur in the future for a given car. Our approach leverages the temporal dynamics and contextual relationships of our event data from a fleet of cars. Event data is composed of discrete values of error codes as well as continuous values such as time and mileage. By modeling these data with two causal Transformers, we can anticipate vehicle failures and malfunctions before they happen. Thus, we introduce *CarFormer*, a Transformer model trained via a new self-supervised learning strategy, and *EPredictor*, an autoregressive Transformer decoder model capable of predicting *when* and *what* error pattern will most likely occur after the appearance of a given error code. Despite the challenges of high cardinality of event types, their unbalanced frequency of appearance and limited labelled data, our experimental results demonstrate the excellent predictive ability of our novel model. Specifically, with sequences of 160 error codes on average, our model, given only half of the error codes, achieves an 80% F1 score for predicting *what* error pattern will occur and an average absolute error of 58.4 ± 13.2 h *when* forecasting the time of occurrence, thus enabling confident predictive maintenance and enhancing vehicle safety.


15. [2412.13026] NAVCON: A Cognitively Inspired and Linguistically Grounded Corpus for Vision and Language Navigation

Link: https://arxiv.org/abs/2412.13026

Authors: Karan Wanchoo, Xiaoye Zuo, Hannah Gonzalez, Soham Dan, Georgios Georgakis, Dan Roth, Kostas Daniilidis, Eleni Miltsakaki

Categories: Computation and Language (cs.CL); Computer Vision and Pattern Recognition (cs.CV)

Keywords: large-scale annotated Vision-Language, annotated Vision-Language Navigation, corpus built, popular datasets, built on top

Comments:

Abstract: We present NAVCON, a large-scale annotated Vision-Language Navigation (VLN) corpus built on top of two popular datasets (R2R and RxR). The paper introduces four core, cognitively motivated and linguistically grounded, navigation concepts and an algorithm for generating large-scale silver annotations of naturally occurring linguistic realizations of these concepts in navigation instructions. We pair the annotated instructions with video clips of an agent acting on these instructions. NAVCON contains 236,316 concept annotations for approximately 30, 0000 instructions and 2.7 million aligned images (from approximately 19,000 instructions) showing what the agent sees when executing an instruction. To our knowledge, this is the first comprehensive resource of navigation concepts. We evaluated the quality of the silver annotations by conducting human evaluation studies on NAVCON samples. As further validation of the quality and usefulness of the resource, we trained a model for detecting navigation concepts and their linguistic realizations in unseen instructions. Additionally, we show that few-shot learning with GPT-4o performs well on this task using large-scale silver annotations of NAVCON.


16. [2412.13018] OmniEval: An Omnidirectional and Automatic RAG Evaluation Benchmark in Financial Domain

Link: https://arxiv.org/abs/2412.13018

Authors: Shuting Wang, Jiejun Tan, Zhicheng Dou, Ji-Rong Wen

Categories: Computation and Language (cs.CL)

Keywords: Large Language Models, Large Language, lack domain-specific knowledge, application of Large, gained extensive attention

Comments:

Abstract: As a typical and practical application of Large Language Models (LLMs), Retrieval-Augmented Generation (RAG) techniques have gained extensive attention, particularly in vertical domains where LLMs may lack domain-specific knowledge. In this paper, we introduce an omnidirectional and automatic RAG benchmark, OmniEval, in the financial domain. Our benchmark is characterized by its multi-dimensional evaluation framework, including (1) a matrix-based RAG scenario evaluation system that categorizes queries into five task classes and 16 financial topics, leading to a structured assessment of diverse query scenarios; (2) a multi-dimensional evaluation data generation approach, which combines GPT-4-based automatic generation and human annotation, achieving an 87.47% acceptance ratio in human evaluations on generated instances; (3) a multi-stage evaluation system that evaluates both retrieval and generation performance, resulting in a comprehensive evaluation of the RAG pipeline; and (4) robust evaluation metrics derived from rule-based and LLM-based ones, enhancing the reliability of assessments through manual annotations and supervised fine-tuning of an LLM evaluator. Our experiments demonstrate the comprehensiveness of OmniEval, which includes extensive test datasets and highlights the performance variations of RAG systems across diverse topics and tasks, revealing significant opportunities for RAG models to improve their capabilities in vertical domains. We open source the code of our benchmark at this https URL.


17. [2412.13008] RCLMuFN: Relational Context Learning and Multiplex Fusion Network for Multimodal Sarcasm Detection

Link: https://arxiv.org/abs/2412.13008

Authors: Tongguan Wang, Junkai Li, Guixin Su, Yongcheng Zhang, Dongyu Su, Yuxue Hu, Ying Sha

Categories: Computation and Language (cs.CL)

Keywords: speaker true intent, typically conveys emotions, Sarcasm typically conveys, multimodal sarcasm detection, sarcasm detection

Comments:

Abstract: Sarcasm typically conveys emotions of contempt or criticism by expressing a meaning that is contrary to the speaker's true intent. Accurate detection of sarcasm aids in identifying and filtering undesirable information on the Internet, thereby reducing malicious defamation and rumor-mongering. Nonetheless, the task of automatic sarcasm detection remains highly challenging for machines, as it critically depends on intricate factors such as relational context. Most existing multimodal sarcasm detection methods focus on introducing graph structures to establish entity relationships between text and images while neglecting to learn the relational context between text and images, which is crucial evidence for understanding the meaning of sarcasm. In addition, the meaning of sarcasm changes with the evolution of different contexts, but existing methods may not be accurate in modeling such dynamic changes, limiting the generalization ability of the models. To address the above issues, we propose a relational context learning and multiplex fusion network (RCLMuFN) for multimodal sarcasm detection. Firstly, we employ four feature extractors to comprehensively extract features from raw text and images, aiming to excavate potential features that may have been previously overlooked. Secondly, we utilize the relational context learning module to learn the contextual information of text and images and capture the dynamic properties through shallow and deep interactions. Finally, we employ a multiplex feature fusion module to enhance the generalization of the model by deeply integrating multimodal features derived from various interaction contexts. Extensive experiments on two multimodal sarcasm detection datasets show that our proposed method achieves state-of-the-art performance.


18. [2412.12997] Enabling Low-Resource Language Retrieval: Establishing Baselines for Urdu MS MARCO

Link: https://arxiv.org/abs/2412.12997

Authors: Umer Butt, Stalin Veranasi, Günter Neumann

Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR)

Keywords: field increasingly recognizes, Information Retrieval, field increasingly, low-resource languages remains, increasingly recognizes

Comments: 6 pages, ECIR 2025, conference submission version

Abstract: As the Information Retrieval (IR) field increasingly recognizes the importance of inclusivity, addressing the needs of low-resource languages remains a significant challenge. This paper introduces the first large-scale Urdu IR dataset, created by translating the MS MARCO dataset through machine translation. We establish baseline results through zero-shot learning for IR in Urdu and subsequently apply the mMARCO multilingual IR methodology to this newly translated dataset. Our findings demonstrate that the fine-tuned model (Urdu-mT5-mMARCO) achieves a Mean Reciprocal Rank (MRR@10) of 0.247 and a Recall@10 of 0.439, representing significant improvements over zero-shot results and showing the potential for expanding IR access for Urdu speakers. By bridging access gaps for speakers of low-resource languages, this work not only advances multilingual IR research but also emphasizes the ethical and societal importance of inclusive IR technologies. This work provides valuable insights into the challenges and solutions for improving language representation and lays the groundwork for future research, especially in South Asian languages, which can benefit from the adaptable methods used in this study.
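MRR@10 and Recall@10 only look at the top 10 retrieved passages for each query. The minimal sketch below assumes runs given as ranked lists of passage IDs and relevance judgments given as sets; the IDs are hypothetical, not MS MARCO data, and ties in the ranking are ignored.

```python
def mrr_and_recall_at_k(
    runs: dict[str, list[str]], qrels: dict[str, set[str]], k: int = 10
) -> tuple[float, float]:
    """MRR@k and Recall@k averaged over the queries in `runs`.

    runs: query ID -> passage IDs ranked best first.
    qrels: query ID -> set of relevant passage IDs.
    """
    rr_sum = 0.0
    recall_sum = 0.0
    for qid, ranking in runs.items():
        relevant = qrels.get(qid, set())
        top_k = ranking[:k]  # only the first k results count
        # Reciprocal rank of the first relevant passage within the top k, else 0.
        for position, pid in enumerate(top_k, start=1):
            if pid in relevant:
                rr_sum += 1.0 / position
                break
        # Fraction of relevant passages retrieved in the top k.
        if relevant:
            recall_sum += len(relevant.intersection(top_k)) / len(relevant)
    return rr_sum / len(runs), recall_sum / len(runs)


# Hypothetical run: q1 finds its passage at rank 2, q2's passage sits at rank 11.
runs = {
    "q1": ["p3", "p1", "p7"],
    "q2": [f"x{i}" for i in range(10)] + ["p5"],
}
qrels = {"q1": {"p1"}, "q2": {"p5"}}
print(mrr_and_recall_at_k(runs, qrels))
```

Because q2's relevant passage falls just outside the cutoff, it contributes zero to both metrics, which shows how the @10 cutoff penalizes near misses.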


19. [2412.12981] Unlocking LLMs: Addressing Scarce Data and Bias Challenges in Mental Health

Link: https://arxiv.org/abs/2412.12981

Authors: Vivek Kumar, Eirini Ntoutsi, Pushpraj Singh Rajawat, Giacomo Medda, Diego Reforgiato Recupero

Categories: Computation and Language (cs.CL)

Keywords: Large language models, shown promising capabilities, Large language, language models, bias manifestation

Comments: International Conference on Natural Language Processing and Artificial Intelligence for Cyber Security (NLPAICS) 2024

Abstract: Large language models (LLMs) have shown promising capabilities in healthcare analysis but face several challenges like hallucinations, parroting, and bias manifestation. These challenges are exacerbated in complex, sensitive, and low-resource domains. Therefore, in this work we introduce IC-AnnoMI, an expert-annotated motivational interviewing (MI) dataset built upon AnnoMI by generating in-context conversational dialogues leveraging LLMs, particularly ChatGPT. IC-AnnoMI employs targeted prompts accurately engineered through cues and tailored information, taking into account therapy style (empathy, reflection), contextual relevance, and false semantic change. Subsequently, the dialogues are annotated by experts, strictly adhering to the Motivational Interviewing Skills Code (MISC), focusing on both the psychological and linguistic dimensions of MI dialogues. We comprehensively evaluate the IC-AnnoMI dataset and ChatGPT's emotional reasoning ability and understanding of domain intricacies by modeling novel classification tasks employing several classical machine learning and current state-of-the-art transformer approaches. Finally, we discuss the effects of progressive prompting strategies and the impact of augmented data in mitigating the biases manifested in IC-AnnoMI. Our contributions provide the MI community with not only a comprehensive dataset but also valuable insights for using LLMs in empathetic text generation for conversational therapy in supervised settings.

+

+

+

+ 20. 【2412.12961】Adaptations of AI models for querying the LandMatrix database in natural language

+ Link: https://arxiv.org/abs/2412.12961

+ Authors: Fatiha Ait Kbir, Jérémy Bourgoin, Rémy Decoupes, Marie Gradeler, Roberto Interdonato

+ Categories: Computation and Language (cs.CL)

+ Keywords: global observatory aim, Land Matrix initiative, large-scale land acquisitions, provide reliable data, Land Matrix

+ Comments:

+ Abstract:The Land Matrix initiative (this https URL) and its global observatory aim to provide reliable data on large-scale land acquisitions to inform debates and actions in sectors such as agriculture, extraction, or energy in low- and middle-income countries. Although these data are recognized in the academic world, they remain underutilized in public policy, mainly due to the complexity of access and exploitation, which requires technical expertise and a good understanding of the database schema.

+The objective of this work is to simplify access to data from different database systems. The methods proposed in this article are evaluated using data from the Land Matrix. This work presents various comparisons of Large Language Models (LLMs) as well as combinations of LLM adaptations (Prompt Engineering, RAG, Agents) to query different database systems (GraphQL and REST queries). The experiments are reproducible, and a demonstration is available online: this https URL.

+


+

+

+

+

+ 21. 【2412.12956】SnakModel: Lessons Learned from Training an Open Danish Large Language Model

+ Link: https://arxiv.org/abs/2412.12956

+ Authors: Mike Zhang, Max Müller-Eberstein, Elisa Bassignana, Rob van der Goot

+ Categories: Computation and Language (cs.CL)

+ Keywords: Danish large language, large language model, Danish Natural Language, Danish words, Danish instructions

+ Comments: Accepted at NoDaLiDa 2025 (oral)

+ Abstract:We present SnakModel, a Danish large language model (LLM) based on Llama2-7B, which we continuously pre-train on 13.6B Danish words, and further tune on 3.7M Danish instructions. As best practices for creating LLMs for smaller language communities have yet to be established, we examine the effects of early modeling and training decisions on downstream performance throughout the entire training pipeline, including (1) the creation of a strictly curated corpus of Danish text from diverse sources; (2) the language modeling and instruction-tuning training process itself, including the analysis of intermediate training dynamics, and ablations across different hyperparameters; (3) an evaluation on eight language and culturally-specific tasks. Across these experiments SnakModel achieves the highest overall performance, outperforming multiple contemporary Llama2-7B-based models. By making SnakModel, the majority of our pre-training corpus, and the associated code available under open licenses, we hope to foster further research and development in Danish Natural Language Processing, and establish training guidelines for languages with similar resource constraints.

+

+

+

+ 22. 【2412.12955】Learning from Noisy Labels via Self-Taught On-the-Fly Meta Loss Rescaling

+ Link: https://arxiv.org/abs/2412.12955

+ Authors: Michael Heck, Christian Geishauser, Nurul Lubis, Carel van Niekerk, Shutong Feng, Hsien-Chin Lin, Benjamin Matthias Ruppik, Renato Vukovic, Milica Gašić

+ Categories: Computation and Language (cs.CL)

+ Keywords: training effective machine, Correct labels, effective machine learning, labeled data, data

+ Comments: 10 pages, 3 figures, accepted at AAAI'25

+ Abstract:Correct labels are indispensable for training effective machine learning models. However, creating high-quality labels is expensive, and even professionally labeled data contains errors and ambiguities. Filtering and denoising can be applied to curate labeled data prior to training, at the cost of additional processing and loss of information. An alternative is on-the-fly sample reweighting during the training process to decrease the negative impact of incorrect or ambiguous labels, but this typically requires clean seed data. In this work we propose unsupervised on-the-fly meta loss rescaling to reweight training samples. Crucially, we rely only on features provided by the model being trained, to learn a rescaling function in real time without knowledge of the true clean data distribution. We achieve this via a novel meta learning setup that samples validation data for the meta update directly from the noisy training corpus by employing the rescaling function being trained. Our proposed method consistently improves performance across various NLP tasks with minimal computational overhead. Further, we are among the first to attempt on-the-fly training data reweighting on the challenging task of dialogue modeling, where noisy and ambiguous labels are common. Our strategy is robust in the face of noisy and clean data, handles class imbalance, and prevents overfitting to noisy labels. Our self-taught loss rescaling improves as the model trains, showing the ability to keep learning from the model's own signals. As training progresses, the impact of correctly labeled data is scaled up, while the impact of wrongly labeled data is suppressed.
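The basic mechanism behind loss rescaling, multiplying each sample's loss by a weight before averaging so that suspected label errors contribute less, can be sketched as below. This is a minimal illustration that assumes the per-sample weights are already given; the weights are hypothetical stand-ins for the output of a learned rescaling function, and the paper's meta-learning setup for learning that function is not reproduced.

```python
import math
from typing import List, Sequence

def cross_entropy(probs: Sequence[float], label: int) -> float:
    """Negative log-likelihood of the (possibly noisy) label."""
    return -math.log(probs[label])

def rescaled_batch_loss(batch_probs: List[Sequence[float]],
                        labels: List[int],
                        weights: List[float]) -> float:
    """Weighted mean of per-sample losses.

    `weights` is a hypothetical stand-in for a learned rescaling function:
    samples that look mislabeled get small weights, so during training
    they would contribute less to the gradient update.
    """
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    losses = [cross_entropy(p, y) for p, y in zip(batch_probs, labels)]
    return sum(w * l for w, l in zip(weights, losses)) / total_weight

probs = [[0.9, 0.1], [0.2, 0.8], [0.95, 0.05]]
labels = [0, 1, 1]  # the third label disagrees strongly with the model
uniform = rescaled_batch_loss(probs, labels, [1.0, 1.0, 1.0])
downweighted = rescaled_batch_loss(probs, labels, [1.0, 1.0, 0.1])
print(f"uniform={uniform:.3f} downweighted={downweighted:.3f}")
```

With uniform weights the suspicious third sample dominates the batch loss; shrinking its weight lets the two consistent samples drive the update.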

+

+

+

+ 23. 【2412.12954】Recipient Profiling: Predicting Characteristics from Messages

+ Link: https://arxiv.org/abs/2412.12954

+ Authors: Martin Borquez, Mikaela Keller, Michael Perrot, Damien Sileo

+ Categories: Computation and Language (cs.CL)

+ Keywords: inadvertently reveal sensitive, reveal sensitive information, Author Profiling, gender or age, inadvertently reveal

+ Comments:

+ Abstract:It has been shown in the field of Author Profiling that texts may inadvertently reveal sensitive information about their authors, such as gender or age. This raises important privacy concerns that have been extensively addressed in the literature, in particular with the development of methods to hide such information. We argue that, when these texts are in fact messages exchanged between individuals, this is not the end of the story. Indeed, in this case, a second party, the intended recipient, is also involved and should be considered. In this work, we investigate the potential privacy leaks affecting them; that is, we propose and address the problem of Recipient Profiling. We provide empirical evidence that such a task is feasible on several publicly accessible datasets (this https URL). Furthermore, we show that the learned models can be transferred to other datasets, albeit with a loss in accuracy.

+

+

+

+ 24. 【2412.12948】MOPO: Multi-Objective Prompt Optimization for Affective Text Generation

+ Link: https://arxiv.org/abs/2412.12948

+ Authors: Yarik Menchaca Resendiz, Roman Klinger

+ Categories: Computation and Language (cs.CL)

+ Keywords: MOPO, expressed depends, multiple objectives, objectives, Optimization

+ Comments: accepted to COLING 2025

+ Abstract:How emotions are expressed depends on the context and domain. On X (formerly Twitter), for instance, an author might simply use the hashtag #anger, while in a news headline, emotions are typically written in a more polite, indirect manner. To enable conditional text generation models to create emotionally connotated texts that fit a domain, users need to have access to a parameter that allows them to choose the appropriate way to express an emotion. To achieve this, we introduce MOPO, a Multi-Objective Prompt Optimization methodology. MOPO optimizes prompts according to multiple objectives (which correspond here to the output probabilities assigned by emotion classifiers trained for different domains). In contrast to single objective optimization, MOPO outputs a set of prompts, each with a different weighting of the multiple objectives. Users can then choose the most appropriate prompt for their context. We evaluate MOPO using three objectives, determined by various domain-specific emotion classifiers. MOPO improves performance by up to 15 pp across all objectives with a minimal loss (1-2 pp) for any single objective compared to single-objective optimization. These minor performance losses are offset by a broader generalization across multiple objectives - which is not possible with single-objective optimization. Additionally, MOPO reduces computational requirements by simultaneously optimizing for multiple objectives, eliminating separate optimization procedures for each objective.
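Because MOPO returns a set of prompts with different trade-offs rather than a single winner, one way to picture its output is as a Pareto front over the objective scores. The sketch below keeps only non-dominated prompts and then lets a user pick one with their own weights; the prompt names, scores, and weights are invented for illustration, and this is not the paper's optimization procedure.

```python
from typing import Dict, Tuple

Scores = Tuple[float, ...]

def dominates(a: Scores, b: Scores) -> bool:
    """True if `a` is at least as good as `b` on every objective and strictly better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))

def pareto_front(candidates: Dict[str, Scores]) -> Dict[str, Scores]:
    """Keep only the prompts that no other prompt dominates."""
    return {
        name: scores
        for name, scores in candidates.items()
        if not any(dominates(other, scores)
                   for other_name, other in candidates.items()
                   if other_name != name)
    }

# Hypothetical prompts scored by three domain-specific emotion classifiers.
candidates = {
    "prompt_a": (0.80, 0.40, 0.55),
    "prompt_b": (0.60, 0.70, 0.50),
    "prompt_c": (0.55, 0.35, 0.50),  # worse than prompt_a on every objective
    "prompt_d": (0.70, 0.65, 0.60),
}
front = pareto_front(candidates)
print(sorted(front))

# A user who mainly targets the second domain picks by a weighted sum.
weights = (0.1, 0.8, 0.1)
choice = max(front, key=lambda n: sum(w * s for w, s in zip(weights, front[n])))
print(choice)
```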

+

+

+

+ 25. 【2412.12940】Improving Fine-grained Visual Understanding in VLMs through Text-Only Training

+ Link: https://arxiv.org/abs/2412.12940

+ Authors: Dasol Choi, Guijin Son, Soo Yong Kim, Gio Paik, Seunghyeok Hong

+ Categories: Computation and Language (cs.CL)

+ Keywords: Visual-Language Models, powerful tool, tool for bridging, bridging the gap, Models

+ Comments: AAAI25 workshop accepted

+ Abstract:Visual-Language Models (VLMs) have become a powerful tool for bridging the gap between visual and linguistic understanding. However, the conventional learning approaches for VLMs often suffer from limitations, such as the high resource requirements of collecting and training image-text paired data. Recent research has suggested that language understanding plays a crucial role in the performance of VLMs, potentially indicating that text-only training could be a viable approach. In this work, we investigate the feasibility of enhancing fine-grained visual understanding in VLMs through text-only training. Inspired by how humans develop visual concept understanding, where rich textual descriptions can guide visual recognition, we hypothesize that VLMs can also benefit from leveraging text-based representations to improve their visual recognition abilities. We conduct comprehensive experiments on two distinct domains: fine-grained species classification and cultural visual understanding tasks. Our findings demonstrate that text-only training can be comparable to conventional image-text training while significantly reducing computational costs. This suggests a more efficient and cost-effective pathway for advancing VLM capabilities, particularly valuable in resource-constrained environments.

+

+

+

+ 26. 【2412.12928】Truthful Text Sanitization Guided by Inference Attacks

+ Link: https://arxiv.org/abs/2412.12928

+ Authors: Ildikó Pilán, Benet Manzanares-Salor, David Sánchez, Pierre Lison

+ Categories: Computation and Language (cs.CL)

+ Keywords: longer disclose personal, disclose personal information, text sanitization, personal information, original text spans

+ Comments:

+ Abstract:The purpose of text sanitization is to rewrite those text spans in a document that may directly or indirectly identify an individual, to ensure they no longer disclose personal information. Text sanitization must strike a balance between preventing the leakage of personal information (privacy protection) while also retaining as much of the document's original content as possible (utility preservation). We present an automated text sanitization strategy based on generalizations, which are more abstract (but still informative) terms that subsume the semantic content of the original text spans. The approach relies on instruction-tuned large language models (LLMs) and is divided into two stages. The LLM is first applied to obtain truth-preserving replacement candidates and rank them according to their abstraction level. Those candidates are then evaluated for their ability to protect privacy by conducting inference attacks with the LLM. Finally, the system selects the most informative replacement shown to be resistant to those attacks. As a consequence of this two-stage process, the chosen replacements effectively balance utility and privacy. We also present novel metrics to automatically evaluate these two aspects without the need to manually annotate data. Empirical results on the Text Anonymization Benchmark show that the proposed approach leads to enhanced utility, with only a marginal increase in the risk of re-identifying protected individuals compared to fully suppressing the original information. Furthermore, the selected replacements are shown to be more truth-preserving and abstractive than previous methods.
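The final selection step described above (choose the most informative replacement that survives the inference attack, otherwise suppress the span) can be sketched as follows. The candidate list, its ordering from least to most abstract, and the `toy_attack` predicate are hypothetical stand-ins for the LLM-based ranking and attack components in the paper.

```python
from typing import Callable, List, Optional

def select_replacement(candidates: List[str],
                       attack_succeeds: Callable[[str], bool]) -> Optional[str]:
    """Return the least abstract (most informative) candidate that the
    inference attack fails to re-identify, or None if every candidate
    leaks, in which case the span would be fully suppressed.

    `candidates` must already be sorted from least to most abstract.
    """
    for candidate in candidates:
        if not attack_succeeds(candidate):
            return candidate
    return None

# Hypothetical generalizations of the span "Oslo", least to most abstract.
ranked = ["the capital of Norway", "a Scandinavian city", "a European city"]

def toy_attack(text: str) -> bool:
    # Hypothetical attacker: succeeds whenever the replacement still
    # pins down a single city.
    return text == "the capital of Norway"

print(select_replacement(ranked, toy_attack))
```

Scanning from the least abstract end is what keeps utility high: the loop stops at the first candidate that is safe, so more informative options are always preferred.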

+

+

+

+ 27. 【2412.12898】An Agentic Approach to Automatic Creation of PID Diagrams from Natural Language Descriptions

+ Link: https://arxiv.org/abs/2412.12898

+ Authors: Shreeyash Gowaikar, Srinivasan Iyengar, Sameer Segal, Shivkumar Kalyanaraman

+ Categories: Machine Learning (cs.LG); Computational Engineering, Finance, and Science (cs.CE); Computation and Language (cs.CL); Multiagent Systems (cs.MA)

+ Keywords: Piping and Instrumentation, Large Language Models, Instrumentation Diagrams, natural language, natural language descriptions

+ Comments: Accepted at the AAAI'25 Workshop on AI to Accelerate Science and Engineering (AI2ASE)

+ Abstract:The Piping and Instrumentation Diagrams (PIDs) are foundational to the design, construction, and operation of workflows in the engineering and process industries. However, their manual creation is often labor-intensive, error-prone, and lacks robust mechanisms for error detection and correction. While recent advancements in Generative AI, particularly Large Language Models (LLMs) and Vision-Language Models (VLMs), have demonstrated significant potential across various domains, their application in automating generation of engineering workflows remains underexplored. In this work, we introduce a novel copilot for automating the generation of PIDs from natural language descriptions. Leveraging a multi-step agentic workflow, our copilot provides a structured and iterative approach to diagram creation directly from Natural Language prompts. We demonstrate the feasibility of the generation process by evaluating the soundness and completeness of the workflow, and show improved results compared to vanilla zero-shot and few-shot generation approaches.

+

+

+

+ 28. 【2412.12893】Question: How do Large Language Models perform on the Question Answering tasks? Answer:

+ Link: https://arxiv.org/abs/2412.12893

+ Authors: Kevin Fischer, Darren Fürst, Sebastian Steindl, Jakob Lindner, Ulrich Schäfer

+ Categories: Computation and Language (cs.CL)

+ Keywords: Large Language Models, Large Language, showing promising results, zero-shot prompting techniques, Stanford Question Answering

+ Comments: Accepted at SAI Computing Conference 2025

+ Abstract:Large Language Models (LLMs) have been showing promising results for various NLP tasks without the explicit need to be trained for these tasks by using few-shot or zero-shot prompting techniques. A common NLP task is question-answering (QA). In this study, we propose a comprehensive performance comparison between smaller fine-tuned models and out-of-the-box instruction-following LLMs on the Stanford Question Answering Dataset 2.0 (SQuAD2), specifically when using a single-inference prompting technique. Since the dataset contains unanswerable questions, previous work used a double inference method. We propose a prompting style which aims to elicit the same ability without the need for double inference, saving compute time and resources. Furthermore, we investigate their generalization capabilities by comparing their performance on similar but different QA datasets, without fine-tuning either model, emulating real-world uses where the context and questions asked may differ from the original training distribution, for example swapping Wikipedia for news articles.

+Our results show that smaller, fine-tuned models outperform current State-Of-The-Art (SOTA) LLMs on the fine-tuned task, but recent SOTA models are able to close this gap on the out-of-distribution test and even outperform the fine-tuned models on 3 of the 5 tested QA datasets.
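Results on SQuAD 2.0 and similar QA datasets are commonly reported as token-level F1 on normalized answers, where an unanswerable question counts as correct only if the prediction is also empty. The sketch below follows that widely used convention (lowercasing, stripping punctuation and English articles); it is an assumption about the metric, not code from the paper.

```python
import re
import string
from collections import Counter
from typing import List

_PUNCT = set(string.punctuation)

def normalize(text: str) -> List[str]:
    """Lowercase, drop punctuation and the articles a/an/the, split on whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()

def f1(prediction: str, gold: str) -> float:
    pred, ref = normalize(prediction), normalize(gold)
    if not pred or not ref:
        # Unanswerable case: full credit only if both are empty.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

print(f1("the Eiffel Tower", "Eiffel Tower in Paris"))
print(f1("", ""))  # abstained on an unanswerable question
```

The empty-string branch is what lets a single-inference prompt be scored on unanswerable questions: the model is rewarded for abstaining instead of needing a separate answerability pass.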

+


+

+

+

+

+ 29. 【2412.12881】RAG-Star: Enhancing Deliberative Reasoning with Retrieval Augmented Verification and Refinement

+ Link: https://arxiv.org/abs/2412.12881

+ Authors: Jinhao Jiang, Jiayi Chen, Junyi Li, Ruiyang Ren, Shijie Wang, Wayne Xin Zhao, Yang Song, Tao Zhang

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: Existing large language, large language models, show exceptional problem-solving, exceptional problem-solving capabilities, Existing large

+ Comments: LLM;RAG;MCTS

+ Abstract:Existing large language models (LLMs) show exceptional problem-solving capabilities but might struggle with complex reasoning tasks. Despite the successes of chain-of-thought and tree-based search methods, they mainly depend on the internal knowledge of LLMs to search over intermediate reasoning steps, which limits them to simple tasks involving fewer reasoning steps. In this paper, we propose RAG-Star, a novel RAG approach that integrates the retrieved information to guide the tree-based deliberative reasoning process that relies on the inherent knowledge of LLMs. By leveraging Monte Carlo Tree Search, RAG-Star iteratively plans intermediate sub-queries and answers for reasoning based on the LLM itself. To consolidate internal and external knowledge, we propose a retrieval-augmented verification that utilizes query- and answer-aware reward modeling to provide feedback for the inherent reasoning of LLMs. Our experiments involving Llama-3.1-8B-Instruct and GPT-4o demonstrate that RAG-Star significantly outperforms previous RAG and reasoning methods.
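RAG-Star builds on Monte Carlo Tree Search. For readers new to MCTS, the standard UCT rule for deciding which child node (here, a candidate sub-query/answer step) to explore next is sketched below. The node statistics and the exploration constant are made up; in a system like RAG-Star the retrieval-augmented reward model would only affect the rewards accumulated into `value_sum`, which this sketch does not model.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Node:
    name: str
    visits: int
    value_sum: float  # sum of rewards backed up through this node

def uct_score(child: Node, parent_visits: int, c: float = 1.41) -> float:
    """Upper Confidence bound applied to Trees: mean reward plus an exploration bonus."""
    if child.visits == 0:
        return math.inf  # always try unvisited children first
    exploit = child.value_sum / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore

def select_child(children: List[Node], parent_visits: int, c: float = 1.41) -> Node:
    return max(children, key=lambda ch: uct_score(ch, parent_visits, c))

children = [
    Node("sub-query A", visits=10, value_sum=7.0),
    Node("sub-query B", visits=3, value_sum=2.4),
    Node("sub-query C", visits=0, value_sum=0.0),
]
print(select_child(children, parent_visits=13).name)
print(select_child(children[:2], parent_visits=13).name)
```

Once every child has been visited, the bonus term favors rarely tried steps (B above beats the more-visited A), which balances exploiting good reasoning paths against exploring new ones.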

+

+

+

+ 30. 【2412.12865】Preference-Oriented Supervised Fine-Tuning: Favoring Target Model Over Aligned Large Language Models

+ Link: https://arxiv.org/abs/2412.12865

+ Authors: Yuchen Fan, Yuzhong Hong, Qiushi Wang, Junwei Bao, Hongfei Jiang, Yang Song

+ Categories: Computation and Language (cs.CL)

+ Keywords: pre-trained Large language, Large language model, SFT, pre-trained Large, endowing a pre-trained

+ Comments: AAAI2025, 12 pages, 9 figures

+ Abstract:Alignment, endowing a pre-trained large language model (LLM) with the ability to follow instructions, is crucial for its real-world applications. Conventional supervised fine-tuning (SFT) methods formalize it as causal language modeling typically with a cross-entropy objective, requiring a large amount of high-quality instruction-response pairs. However, the quality of widely used SFT datasets cannot be guaranteed due to the high cost and intensive labor of creating and maintaining them in practice. To overcome the limitations associated with the quality of SFT datasets, we introduce a novel preference-oriented supervised fine-tuning approach, namely PoFT. The intuition is to boost SFT by imposing a particular preference: favoring the target model over aligned LLMs on the same SFT data. This preference encourages the target model to predict a higher likelihood than that predicted by the aligned LLMs, incorporating assessment information on data quality (i.e., predicted likelihood by the aligned LLMs) into the training process. Extensive experiments are conducted, and the results validate the effectiveness of the proposed method. PoFT achieves stable and consistent improvements over the SFT baselines across different training datasets and base models. Moreover, we prove that PoFT can be integrated with existing SFT data filtering methods to achieve better performance, and further improved by following preference optimization procedures, such as DPO.
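One way to turn the stated preference ("the target model should assign the SFT response a higher likelihood than the aligned LLMs do") into a loss is a Bradley-Terry-style objective on sequence log-likelihoods. The sketch below assumes that form and averages over several aligned models; treat it as an illustration of the idea, not the exact PoFT objective, and the log-likelihood values are hypothetical.

```python
import math
from typing import List

def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))

def preference_loss(target_logp: float, aligned_logps: List[float]) -> float:
    """Mean of -log sigmoid(log p_target - log p_aligned) over the aligned LLMs.

    The loss shrinks as the target model's likelihood of the response
    rises above the likelihoods the aligned models assign to it.
    """
    losses = [-log_sigmoid(target_logp - ref) for ref in aligned_logps]
    return sum(losses) / len(losses)

aligned = [-12.0, -10.5]  # hypothetical sequence log-likelihoods from aligned LLMs
print(preference_loss(-15.0, aligned))  # target below the aligned models
print(preference_loss(-8.0, aligned))   # target above the aligned models
```

A side effect of this form is that responses the aligned models already find very likely set a high bar, while low-likelihood (possibly low-quality) responses are easier to beat, which is one reading of how the aligned models inject data-quality information.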

+

+

+

+ 31. 【2412.12863】DISC: Plug-and-Play Decoding Intervention with Similarity of Characters for Chinese Spelling Check

+ Link: https://arxiv.org/abs/2412.12863

+ Authors: Ziheng Qiao, Houquan Zhou, Yumeng Liu, Zhenghua Li, Min Zhang, Bo Zhang, Chen Li, Ji Zhang, Fei Huang

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: Chinese spelling check, Chinese spelling, spelling check, key characteristic, Chinese

+ Comments:

+ Abstract:One key characteristic of the Chinese spelling check (CSC) task is that incorrect characters are usually similar to the correct ones in either phonetics or glyph. To accommodate this, previous works usually leverage confusion sets, which suffer from two problems, i.e., difficulty in determining which character pairs to include and lack of probabilities to distinguish items in the set. In this paper, we propose a light-weight plug-and-play DISC (i.e., decoding intervention with similarity of characters) module for CSC this http URL measures phonetic and glyph similarities between characters and incorporates this similarity information only during the inference phase. This method can be easily integrated into various existing CSC models, such as ReaLiSe, SCOPE, and ReLM, without additional training costs. Experiments on three CSC benchmarks demonstrate that our proposed method significantly improves model performance, approaching and even surpassing the current state-of-the-art models.
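The inference-time idea, nudging a CSC model's output toward characters that sound or look like the input character, can be sketched as an additive bonus on decoding scores. The similarity table, the model scores, and the weight `alpha` are hypothetical; the paper's actual phonetic and glyph similarity measures are not reproduced here.

```python
from typing import Dict, Tuple

def intervene(logits: Dict[str, float],
              source_char: str,
              similarity: Dict[Tuple[str, str], float],
              alpha: float = 1.0) -> Dict[str, float]:
    """Add alpha * sim(source_char, candidate) to each candidate's score.

    Only the decoding scores change; the CSC model itself is untouched,
    so no retraining is needed.
    """
    return {
        ch: score + alpha * similarity.get((source_char, ch), 0.0)
        for ch, score in logits.items()
    }

def argmax(scores: Dict[str, float]) -> str:
    return max(scores, key=scores.get)

# Input "他的心请很好": 请 is a typo for 情 (same syllable "qing", shared
# component 青). Both 心境 and 心情 are valid words, so the model hesitates.
logits = {"境": 1.2, "情": 1.0, "请": 0.4}  # hypothetical model scores
similarity = {("请", "情"): 0.9, ("请", "境"): 0.1, ("请", "请"): 1.0}  # hypothetical

print(argmax(logits), argmax(intervene(logits, "请", similarity)))
```

Without the intervention the model picks the fluent but unrelated 境; the similarity bonus tips the decision to 情, the character that plausibly produced the typo.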

+

+

+

+ 32. 【2412.12852】Selective Shot Learning for Code Explanation

+ Link: https://arxiv.org/abs/2412.12852

+ Authors: Paheli Bhattacharya, Rishabh Gupta

+ Categories: Software Engineering (cs.SE); Computation and Language (cs.CL); Information Retrieval (cs.IR)

+ Keywords: software engineering domain, code functionality efficiently, grasping code functionality, Code explanation plays, Code explanation

+ Comments:

+ Abstract:Code explanation plays a crucial role in the software engineering domain, aiding developers in grasping code functionality efficiently. Recent work shows that the performance of LLMs for code explanation improves in a few-shot setting, especially when the few-shot examples are selected intelligently. State-of-the-art approaches for such Selective Shot Learning (SSL) include token-based and embedding-based methods. However, these SSL approaches have been evaluated on proprietary LLMs, without much exploration on open-source Code-LLMs. Additionally, these methods lack consideration for programming language syntax. To bridge these gaps, we present a comparative study and propose a novel SSL method (SSL_ner) that utilizes entity information for few-shot example selection. We present several insights and show the effectiveness of SSL_ner approach over state-of-the-art methods across two datasets. To the best of our knowledge, this is the first systematic benchmarking of open-source Code-LLMs while assessing the performances of the various few-shot examples selection approaches for the code explanation task.
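A token-based selective-shot baseline of the kind the abstract mentions can be sketched as ranking a pool of (code, explanation) pairs by Jaccard overlap of their token sets with the query code and keeping the top k as few-shot examples. The tokenizer regex and the example pool are assumptions for illustration; SSL_ner would instead compare extracted entities, which is not implemented here.

```python
import re
from typing import List, Set, Tuple

Example = Tuple[str, str]  # (code, explanation)

def tokens(code: str) -> Set[str]:
    """Crude lexical tokenizer (an assumption): identifiers and integers only."""
    return set(re.findall(r"[A-Za-z_][A-Za-z0-9_]*|\d+", code))

def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def select_shots(query: str, pool: List[Example], k: int = 2) -> List[Example]:
    """Return the k pool examples whose code is most similar to the query."""
    q = tokens(query)
    ranked = sorted(pool, key=lambda ex: jaccard(q, tokens(ex[0])), reverse=True)
    return ranked[:k]

pool = [
    ("for i in range(n): total += arr[i]", "Sums the elements of arr."),
    ("with open(path) as f: data = f.read()", "Reads a whole file."),
    ("for x in arr: if x > best: best = x", "Finds the maximum of arr."),
]
query = "for i in range(len(arr)): s += arr[i]"
for code, explanation in select_shots(query, pool):
    print(code, "->", explanation)
```

The selected pairs would then be formatted into the prompt ahead of the query code; swapping `tokens` for an entity extractor is the natural extension point for an entity-aware variant.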

+

+

+

+ 33. 【2412.12841】Benchmarking and Understanding Compositional Relational Reasoning of LLMs

+ Link: https://arxiv.org/abs/2412.12841

+ Authors: Ruikang Ni, Da Xiao, Qingye Meng, Xiangyu Li, Shihui Zheng, Hongliang Liang

+ Categories: Computation and Language (cs.CL); Machine Learning (cs.LG)

+ Keywords: Compositional relational reasoning, transformer large language, Generalized Associative Recall, Compositional relational, existing transformer large

+ Comments: Accepted to the 39th Annual AAAI Conference on Artificial Intelligence (AAAI-25)

+ Abstract:Compositional relational reasoning (CRR) is a hallmark of human intelligence, but we lack a clear understanding of whether and how existing transformer large language models (LLMs) can solve CRR tasks. To enable systematic exploration of the CRR capability of LLMs, we first propose a new synthetic benchmark called Generalized Associative Recall (GAR) by integrating and generalizing the essence of several tasks in mechanistic interpretability (MI) study in a unified framework. Evaluation shows that GAR is challenging enough for existing LLMs, revealing their fundamental deficiency in CRR. Meanwhile, it is easy enough for systematic MI study. Then, to understand how LLMs solve GAR tasks, we use attribution patching to discover the core circuits reused by Vicuna-33B across different tasks and a set of vital attention heads. Intervention experiments show that the correct functioning of these heads significantly impacts task performance. In particular, we identify two classes of heads whose activations represent the abstract notion of true and false in GAR tasks respectively. They play a fundamental role in CRR across various models and tasks. The dataset and code are available at this https URL.
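Attribution patching, used above to find circuits, is commonly understood as a first-order (gradient) approximation to activation patching. Under that standard formulation (the specific metric $\mathcal{L}$ and patching direction used in the paper are assumptions here), the estimated change in the metric from replacing an activation $a$ in the corrupted run with its value from the clean run is:

$$
\Delta\mathcal{L} \;\approx\; \left(a_{\text{clean}} - a_{\text{corrupt}}\right)^{\top} \left.\frac{\partial \mathcal{L}}{\partial a}\right|_{a = a_{\text{corrupt}}}
$$

Because the gradient for every component comes from a single backward pass on the corrupted input, all components can be scored at once instead of patching them one forward pass at a time, which is why the method is attractive for large models.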

+

+

+

+ 34. 【2412.12832】DSGram: Dynamic Weighting Sub-Metrics for Grammatical Error Correction in the Era of Large Language Models

+ Link: https://arxiv.org/abs/2412.12832

+ Authors: Jinxiang Xie, Yilin Li, Xunjian Yin, Xiaojun Wan

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: Grammatical Error Correction, Grammatical Error, provided gold references, Error Correction, based GEC systems

+ Comments: Extended version of a paper to appear in AAAI-25

+ Abstract:Evaluating the performance of Grammatical Error Correction (GEC) models has become increasingly challenging, as large language model (LLM)-based GEC systems often produce corrections that diverge from provided gold references. This discrepancy undermines the reliability of traditional reference-based evaluation metrics. In this study, we propose a novel evaluation framework for GEC models, DSGram, integrating Semantic Coherence, Edit Level, and Fluency, and utilizing a dynamic weighting mechanism. Our framework employs the Analytic Hierarchy Process (AHP) in conjunction with large language models to ascertain the relative importance of various evaluation criteria. Additionally, we develop a dataset incorporating human annotations and LLM-simulated sentences to validate our algorithms and fine-tune more cost-effective models. Experimental results indicate that our proposed approach enhances the effectiveness of GEC model evaluations.
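DSGram uses the Analytic Hierarchy Process to weight its sub-metrics. As a reminder of how AHP turns pairwise importance judgments into weights, here is a minimal sketch using the common row geometric-mean approximation of the priority vector. The pairwise values and sub-metric scores are invented; in DSGram the judgments are obtained with LLMs rather than fixed by hand.

```python
import math
from typing import List

def ahp_weights(matrix: List[List[float]]) -> List[float]:
    """Priority vector via the row geometric-mean approximation.

    matrix[i][j] says how much more important criterion i is than j;
    the matrix should be reciprocal: matrix[j][i] == 1 / matrix[i][j].
    """
    n = len(matrix)
    geo = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo)
    return [g / total for g in geo]

criteria = ["semantic_coherence", "edit_level", "fluency"]
pairwise = [  # hypothetical judgments
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]
weights = ahp_weights(pairwise)
sub_scores = {"semantic_coherence": 8.0, "edit_level": 6.0, "fluency": 9.0}  # hypothetical, 0-10
overall = sum(w * sub_scores[c] for w, c in zip(weights, criteria))
print([round(w, 3) for w in weights], round(overall, 3))
```

This example matrix is perfectly consistent, so the weights come out as exactly 4/7, 2/7, and 1/7; with real (noisy) judgments a full AHP implementation would also check the consistency ratio before trusting the weights.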

+

+

+

+ 35. 【2412.12808】Detecting Emotional Incongruity of Sarcasm by Commonsense Reasoning

+ Link: https://arxiv.org/abs/2412.12808

+ Authors: Ziqi Qiu, Jianxing Yu, Yufeng Zhang, Hanjiang Lai, Yanghui Rao, Qinliang Su, Jian Yin

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: negative sentiment opposite, statements convey criticism, convey criticism, literal meaning, paper focuses

+ Comments:

+ Abstract:This paper focuses on sarcasm detection, which aims to identify whether given statements convey criticism, mockery, or other negative sentiment opposite to the literal meaning. To detect sarcasm, humans often require a comprehensive understanding of the semantics in the statement and even resort to external commonsense to infer the fine-grained incongruity. However, existing methods lack commonsense inferential ability when they face complex real-world scenarios, leading to unsatisfactory performance. To address this problem, we propose a novel framework for sarcasm detection, which conducts incongruity reasoning based on commonsense augmentation, called EICR. Concretely, we first employ retrieval-augmented large language models to supplement the missing but indispensable commonsense background knowledge. To capture complex contextual associations, we construct a dependency graph and obtain the optimized topology via graph refinement. We further introduce an adaptive reasoning skeleton that integrates prior rules to extract sentiment-inconsistent subgraphs explicitly. To eliminate the possible spurious relations between words and labels, we employ adversarial contrastive learning to enhance the robustness of the detector. Experiments conducted on five datasets demonstrate the effectiveness of EICR.

+

+

+

+ 36. 【2412.12806】Cross-Dialect Information Retrieval: Information Access in Low-Resource and High-Variance Languages

+ Link: https://arxiv.org/abs/2412.12806

+ Authors: Robert Litschko, Oliver Kraus, Verena Blaschke, Barbara Plank

+ Categories: Computation and Language (cs.CL); Information Retrieval (cs.IR)

+ Keywords: culture-specific knowledge, large amount, amount of local, local and culture-specific, German dialects

+ Comments: Accepted at COLING 2025

+ Abstract:A large amount of local and culture-specific knowledge (e.g., people, traditions, food) can only be found in documents written in dialects. While there has been extensive research conducted on cross-lingual information retrieval (CLIR), the field of cross-dialect retrieval (CDIR) has received limited attention. Dialect retrieval poses unique challenges due to the limited availability of resources to train retrieval models and the high variability in non-standardized languages. We study these challenges on the example of German dialects and introduce the first German dialect retrieval dataset, dubbed WikiDIR, which consists of seven German dialects extracted from Wikipedia. Using WikiDIR, we demonstrate the weakness of lexical methods in dealing with high lexical variation in dialects. We further show that commonly used zero-shot cross-lingual transfer approaches with multilingual encoders do not transfer well to extremely low-resource setups, motivating the need for resource-lean and dialect-specific retrieval models. We finally demonstrate that (document) translation is an effective way to reduce the dialect gap in CDIR.

+

+

+

+ 37. 【2412.12797】Is it the end of (generative) linguistics as we know it?

+ Link: https://arxiv.org/abs/2412.12797

+ Authors: Cristiano Chesi

+ Categories: Computation and Language (cs.CL)

+ Keywords: written by Steven, Steven Piantadosi, LingBuzz platform, significant debate, debate has emerged

+ Comments:

+ Abstract:A significant debate has emerged in response to a paper written by Steven Piantadosi (Piantadosi, 2023) and uploaded to the LingBuzz platform, the open archive for generative linguistics. Piantadosi's dismissal of Chomsky's approach is ruthless, but generative linguists deserve it. In this paper, I will adopt three idealized perspectives -- computational, theoretical, and experimental -- to focus on two fundamental issues that lend partial support to Piantadosi's critique: (a) the evidence challenging the Poverty of Stimulus (PoS) hypothesis and (b) the notion of simplicity as conceived within mainstream Minimalism. In conclusion, I argue that, to reclaim a central role in language studies, generative linguistics -- representing a prototypical theoretical perspective on language -- needs a serious update leading to (i) more precise, consistent, and complete formalizations of foundational intuitions and (ii) the establishment and utilization of a standardized dataset of crucial empirical evidence to evaluate the theory's adequacy. On the other hand, ignoring the formal perspective leads to major drawbacks in both computational and experimental approaches. Neither descriptive nor explanatory adequacy can be easily achieved without the precise formulation of general principles that can be challenged empirically.

+

+

+

+ 38. 【2412.12767】A Survey of Calibration Process for Black-Box LLMs

+ Link: https://arxiv.org/abs/2412.12767

+ Authors: Liangru Xie, Hui Liu, Jingying Zeng, Xianfeng Tang, Yan Han, Chen Luo, Jing Huang, Zhen Li, Suhang Wang, Qi He

+ Categories: Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

+ Keywords: Large Language Models, Large Language, Language Models, demonstrate remarkable performance, output reliability remains

+ Comments:

+ Abstract:Large Language Models (LLMs) demonstrate remarkable performance in semantic understanding and generation, yet accurately assessing their output reliability remains a significant challenge. While numerous studies have explored calibration techniques, they primarily focus on White-Box LLMs with accessible parameters. Black-Box LLMs, despite their superior performance, pose heightened requirements for calibration techniques due to their API-only interaction constraints. Although recent research has achieved breakthroughs in black-box LLM calibration, a systematic survey of these methodologies is still lacking. To bridge this gap, we present the first comprehensive survey on calibration techniques for black-box LLMs. We first define the Calibration Process of LLMs as comprising two interrelated key steps: Confidence Estimation and Calibration. Second, we conduct a systematic review of applicable methods within black-box settings, and provide insights on the unique challenges and connections in implementing these key steps. Furthermore, we explore typical applications of Calibration Process in black-box LLMs and outline promising future research directions, providing new perspectives for enhancing reliability and human-machine alignment. This is our GitHub link: this https URL
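How well confidence estimates are calibrated is most often summarized with Expected Calibration Error (ECE). A minimal equal-width-bin version is sketched below; the confidences could come from any black-box estimator (for example verbalized confidence or agreement across sampled answers), and the numbers here are invented.

```python
from typing import List

def expected_calibration_error(confidences: List[float],
                               correct: List[bool],
                               n_bins: int = 10) -> float:
    """ECE = sum over bins of (|bin| / N) * |accuracy(bin) - mean_confidence(bin)|."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equally sized, non-empty inputs")
    n = len(confidences)
    bins: List[list] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin instead of overflowing.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        acc = sum(ok for _, ok in b) / len(b)
        avg_conf = sum(c for c, _ in b) / len(b)
        ece += len(b) / n * abs(acc - avg_conf)
    return ece

conf = [0.95, 0.9, 0.85, 0.65, 0.55]
hit = [True, False, True, True, False]
print(round(expected_calibration_error(conf, hit, n_bins=5), 4))
```

An ECE of 0 means that, within every bin, the average stated confidence matches the observed accuracy; the calibration step in the survey's framing aims to push this number down after confidence has been estimated.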

+

+

+

+ 39. 【2412.12761】Revealing the impact of synthetic native samples and multi-tasking strategies in Hindi-English code-mixed humour and sarcasm detection

+ Link: https://arxiv.org/abs/2412.12761

+ Authors: Debajyoti Mazumder, Aakash Kumar, Jasabanta Patro

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

+ Keywords: reported our experiments, native sample mixing, sample mixing, MTL, code-mixed

+ Comments: 26 pages; under review

+

+ Abstract:In this paper, we report our experiments with various strategies to improve code-mixed humour and sarcasm detection. We conducted all experiments in the Hindi-English code-mixed scenario, as we have linguistic expertise in it. We experimented with three approaches, namely (i) native sample mixing, (ii) multi-task learning (MTL), and (iii) prompting very large multilingual language models (VMLMs). In native sample mixing, we added monolingual task samples to code-mixed training sets. In MTL, we relied on native and code-mixed samples of a semantically related task (hate detection in our case). Finally, in our third approach, we evaluated the efficacy of VMLMs via few-shot context prompting. Our key findings are that (i) adding native samples improved humour (raising the F1-score by up to 6.76%) and sarcasm (raising the F1-score by up to 8.64%) detection, (ii) training MLMs in an MTL framework boosted performance for both humour (raising the F1-score by up to 10.67%) and sarcasm (raising the F1-score by up to 12.35%) detection, and (iii) prompting VMLMs could not outperform the other approaches. Finally, our ablation studies and error analysis identified the cases where our model has yet to improve. We provide our code for reproducibility.
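
Native sample mixing, as described, adds monolingual task samples to the code-mixed training set. A minimal sketch, assuming the amount of mixing is controlled by a ratio relative to the code-mixed set size; the `ratio` parameter, the helper name and the placeholder data are hypothetical, since the abstract does not say how many native samples were added.

```python
import random


def mix_native_samples(code_mixed, native, ratio, seed=0):
    """Hypothetical helper: add up to ratio * len(code_mixed) monolingual samples
    to a code-mixed training set, then shuffle the result."""
    rng = random.Random(seed)
    # Never request more native samples than are available.
    k = min(len(native), round(ratio * len(code_mixed)))
    mixed = list(code_mixed) + rng.sample(list(native), k)
    rng.shuffle(mixed)
    return mixed


# Usage with placeholder (text, label) pairs; label 1 = humorous.
code_mixed = [(f"cm_{i}", i % 2) for i in range(4)]
native = [(f"en_{i}", i % 2) for i in range(10)]
train = mix_native_samples(code_mixed, native, ratio=0.5)
print(len(train))  # 6
```

Using a seeded `random.Random` instance keeps the mix reproducible across runs without touching the global random state.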

+

+

+

+ 40. 【2412.12744】Your Next State-of-the-Art Could Come from Another Domain: A Cross-Domain Analysis of Hierarchical Text Classification

+ Link: https://arxiv.org/abs/2412.12744

+ Authors: Nan Li, Bo Kang, Tijl De Bie

+ Categories: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG)

+ Keywords: natural language processing, language processing, European legal texts, classification with hierarchical, hierarchical labels

+ Comments:

+

+ Abstract:Text classification with hierarchical labels is a prevalent and challenging task in natural language processing. Examples include assigning ICD codes to patient records, tagging patents into IPC classes, assigning EUROVOC descriptors to European legal texts, and more. Despite its widespread applications, a comprehensive understanding of state-of-the-art methods across different domains has been lacking. In this paper, we provide the first comprehensive cross-domain overview with empirical analysis of state-of-the-art methods. We propose a unified framework that positions each method within a common structure to facilitate research. Our empirical analysis yields key insights and guidelines, confirming the necessity of learning across different research areas to design effective methods. Notably, under our unified evaluation pipeline, we achieved new state-of-the-art results by applying techniques beyond their original domains.
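
One recurring detail in hierarchical text classification is keeping predictions consistent with the label tree; for example, an ICD code implies its parent categories. The sketch below assumes a simple child-to-parent map and propagates each predicted label to all of its ancestors. It is a generic post-processing step shown for illustration, not one of the methods compared in the paper, and the toy hierarchy is hypothetical.

```python
def expand_with_ancestors(labels, parent):
    """Close a set of predicted labels under the hierarchy.

    parent maps each label to its parent label (None for a root)."""
    closed = set()
    for label in labels:
        node = label
        # Walk up to a root, or stop at a node already added (its ancestors are in too).
        while node is not None and node not in closed:
            closed.add(node)
            node = parent.get(node)
    return closed


# Toy ICD-style hierarchy (illustrative only).
parent = {"I21.0": "I21", "I21": "I20-I25", "I20-I25": None}
print(sorted(expand_with_ancestors({"I21.0"}, parent)))  # ['I20-I25', 'I21', 'I21.0']
```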

+

+

+

+ 41. 【2412.12735】GIRAFFE: Design Choices for Extending the Context Length of Visual Language Models

+ Link: https://arxiv.org/abs/2412.12735

+ Authors: Mukai Li, Lei Li, Shansan Gong, Qi Liu

+ Categories: Computer Vision and Pattern Recognition (cs.CV); Artificial Intelligence (cs.AI); Computation and Language (cs.CL)

+ Keywords: Visual Language Models, Visual Language, demonstrate impressive capabilities, processing multimodal inputs, require handling multiple

+ Comments: Work in progress

+

+ Abstract:Visual Language Models (VLMs) demonstrate impressive capabilities in processing multimodal inputs, yet applications such as visual agents, which require handling multiple images and high-resolution videos, demand enhanced long-range modeling. Moreover, existing open-source VLMs lack systematic exploration into extending their context length, and commercial models often provide limited details. To tackle this, we aim to establish an effective solution that enhances the long-context performance of VLMs while preserving their capabilities in short-context scenarios. Towards this goal, we determine the best design choices through extensive experiments spanning data curation through context window extension and utilization: (1) we analyze data sources and length distributions to construct ETVLM, a data recipe that balances performance across scenarios; (2) we examine existing position extending methods, identify their limitations and propose M-RoPE++ as an enhanced approach; we also choose to instruction-tune only the backbone with mixed-source data; (3) we discuss how to better utilize extended context windows and propose hybrid-resolution training. Built on the Qwen-VL series of models, we propose Giraffe, which is effectively extended to a 128K context length. Evaluated on extensive long-context VLM benchmarks such as VideoMME and Visual Haystacks, Giraffe achieves state-of-the-art performance among similarly sized open-source long VLMs and is competitive with the commercial model GPT-4V. We will open-source the code, data, and models.
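
For context on "position extending methods": many of them modify rotary position embeddings (RoPE). The sketch below shows plain 1-D RoPE with linear position interpolation, where positions are multiplied by the ratio of the training length to the target length so that extended positions reuse angles already seen in training. It is one example of an existing extension technique, not M-RoPE or M-RoPE++; whether the paper evaluates this exact variant is not stated in the abstract, and the 2048/8192 lengths are illustrative assumptions.

```python
import math


def rope_angles(position, head_dim, base=10000.0, scale=1.0):
    """Rotary angles for one position; scale < 1 applies linear position interpolation."""
    return [position * scale * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]


def apply_rope(vec, position, base=10000.0, scale=1.0):
    """Rotate consecutive pairs (x0, x1), (x2, x3), ... of an even-length vector."""
    out = []
    for i, theta in enumerate(rope_angles(position, len(vec), base, scale)):
        x, y = vec[2 * i], vec[2 * i + 1]
        out += [x * math.cos(theta) - y * math.sin(theta),
                x * math.sin(theta) + y * math.cos(theta)]
    return out


# Stretching a 2048-token training window to 8192 tokens: scale = 2048 / 8192.
scale = 2048 / 8192
print(rope_angles(8192, 8, scale=scale) == rope_angles(2048, 8))  # True
```

Because each pair is rotated, vector norms are preserved; interpolation only changes which angles a given position maps to.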

+

+

+

+ 42. 【2412.12733】EventFull: Complete and Consistent Event Relation Annotation

+ Link: https://arxiv.org/abs/2412.12733

+ Authors: Alon Eirew, Eviatar Nachshoni, Aviv Slobodkin, Ido Dagan

+ Categories: Computation and Language (cs.CL)

+ Keywords: fundamental NLP task, NLP task, fundamental NLP, modeling requires datasets, requires datasets annotated

+ Comments:

+

+ Abstract:Event relation detection is a fundamental NLP task, leveraged in many downstream applications, whose modeling requires datasets annotated with event relations of various types. However, systematic and complete annotation of these relations is costly and challenging, due to the quadratic number of event pairs that need to be considered. Consequently, many current event relation datasets lack systematicity and completeness. In response, we introduce EventFull, the first tool that supports consistent, complete and efficient annotation of temporal, causal and coreference relations via a unified and synergetic process. A pilot study demonstrates that EventFull accelerates and simplifies the annotation process while yielding high inter-annotator agreement.
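
The cost argument rests on simple counting: a document with n events has n(n-1)/2 unordered event pairs, so doubling the number of events roughly quadruples the pairs to check. A quick sketch of that count (the event counts are arbitrary examples):

```python
from itertools import combinations


def candidate_pairs(events):
    """Every unordered event pair a fully exhaustive annotation pass must consider."""
    return list(combinations(events, 2))


for n in (10, 50, 100):
    print(n, len(candidate_pairs(range(n))))  # n * (n - 1) / 2 pairs
# 10 45
# 50 1225
# 100 4950
```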

+

+

+

+ 43. 【2412.12731】SentiQNF: A Novel Approach to Sentiment Analysis Using Quantum Algorithms and Neuro-Fuzzy Systems

+ Link: https://arxiv.org/abs/2412.12731

+ Authors: Kshitij Dave, Nouhaila Innan, Bikash K. Behera, Zahid Mumtaz, Saif Al-Kuwari, Ahmed Farouk

+ Categories: Computation and Language (cs.CL); Quantum Physics (quant-ph)

+ Keywords: Sentiment analysis, essential component, component of natural, emotional tones, Sentiment

+ Comments:

+

+ Abstract:Sentiment analysis is an essential component of natural language processing, used to analyze sentiments, attitudes, and emotional tones in various contexts. It provides valuable insights into public opinion, customer feedback, and user experiences. Researchers have developed various classical machine learning and neuro-fuzzy approaches to address the exponential growth of data and the complexity of language structures in sentiment analysis. However, these approaches often fail to determine the optimal number of clusters, interpret results accurately, handle noise or outliers efficiently, and scale effectively to high-dimensional data. Additionally, they are frequently insensitive to input variations. In this paper, we propose a novel hybrid approach for sentiment analysis called the Quantum Fuzzy Neural Network (QFNN), which leverages quantum properties and incorporates a fuzzy layer to overcome the limitations of classical sentiment analysis algorithms. In this study, we test the proposed approach on two Twitter datasets: the Coronavirus Tweets Dataset (CVTD) and the General Sentimental Tweets Dataset (GSTD), and compare it with classical and hybrid algorithms. The results demonstrate that QFNN outperforms all classical, quantum, and hybrid algorithms, achieving 100% and 90% accuracy in the case of CVTD and GSTD, respectively. Furthermore, QFNN demonstrates its robustness against six different noise models, providing the potential to tackle the computational complexity associated with sentiment analysis on a large scale in a noisy environment. The proposed approach expedites sentiment data processing and precisely analyses different forms of textual data, thereby enhancing sentiment classification and insights associated with sentiment analysis.
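
The "fuzzy layer" in neuro-fuzzy models typically maps each input feature to membership degrees in a few fuzzy sets. A minimal classical sketch, assuming Gaussian membership functions (a common choice, but the abstract does not say which functions QFNN uses) and three hypothetical sets over a polarity score in [-1, 1]; the quantum part of QFNN is not modeled here.

```python
import math


def gaussian_membership(x, centers, sigmas):
    """Membership degree of scalar x in each fuzzy set (1.0 at a set's center)."""
    return [math.exp(-((x - c) ** 2) / (2 * s ** 2)) for c, s in zip(centers, sigmas)]


def fuzzy_layer(features, centers, sigmas):
    """Map every input feature to its list of membership degrees."""
    return [gaussian_membership(x, centers, sigmas) for x in features]


# Hypothetical sets: negative, neutral, positive over a polarity score in [-1, 1].
centers, sigmas = [-1.0, 0.0, 1.0], [0.5, 0.5, 0.5]
for row in fuzzy_layer([-0.8, 0.0, 0.6], centers, sigmas):
    print([round(m, 3) for m in row])
```

Soft memberships let a borderline score count partly as neutral and partly as positive, instead of forcing a hard cluster assignment.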

+

+

+

+ 44. 【2412.12710】Enhancing Naturalness in LLM-Generated Utterances through Disfluency Insertion

+ Link: https://arxiv.org/abs/2412.12710

+ Authors: Syed Zohaib Hassan, Pierre Lison, Pål Halvorsen

+ Categories: Computation and Language (cs.CL)

+ Keywords: Large Language Models, Large Language, outputs of Large, Language Models, spontaneous human speech

+ Comments: 4-page short paper; references and appendix are additional

+

+ Abstract:Disfluencies are a natural feature of spontaneous human speech but are typically absent from the outputs of Large Language Models (LLMs). This absence can diminish the perceived naturalness of synthesized speech, which is an important criterion when building conversational agents that aim to mimic human behaviours. We show how the insertion of disfluencies can alleviate this shortcoming. The proposed approach involves (1) fine-tuning an LLM with Low-Rank Adaptation (LoRA) to incorporate various types of disfluencies into LLM-generated utterances and (2) synthesizing those utterances using a text-to-speech model that supports the generation of speech phenomena such as disfluencies. We evaluated the quality of the generated speech on two metrics: intelligibility and perceived spontaneity. We demonstrate through a user study that the insertion of disfluencies significantly increases the perceived spontaneity of the generated speech. This increase, however, came with a slight reduction in intelligibility.
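
The paper inserts disfluencies with a LoRA-fine-tuned LLM. For contrast, a naive rule-based baseline places filled pauses and repetitions at random positions; the sketch below does exactly that. The filler list, the `rate` parameter and the function are hypothetical and are not the authors' method, which learns where disfluencies fit the context.

```python
import random

# Hypothetical filler inventory; a learned model would choose these per context.
FILLERS = ["uh", "um", "you know", "I mean"]


def insert_disfluencies(utterance, rate=0.2, seed=0):
    """Before each word, with probability rate, insert either a filler or a repetition of that word."""
    rng = random.Random(seed)
    out = []
    for word in utterance.split():
        r = rng.random()
        if r < rate / 2:
            out.append(rng.choice(FILLERS) + ",")  # filled pause, e.g. "um, sure"
        elif r < rate:
            out.append(word)  # repetition, e.g. "I I think"
        out.append(word)
    return " ".join(out)


print(insert_disfluencies("I think we should leave at noon", rate=0.3))
```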

+

+

+

+ 45. 【2412.12706】More Tokens, Lower Precision: Towards the Optimal Token-Precision Trade-off in KV Cache Compression

+ Link: https://arxiv.org/abs/2412.12706

+ Authors: Jiebin Zhang, Dawei Zhu, Yifan Song, Wenhao Wu, Chuqiao Kuang, Xiaoguang Li, Lifeng Shang, Qun Liu, Sujian Li

+ Categories: Computation and Language (cs.CL)

+ Keywords: process increasing context, increasing context windows, large language models, process increasing, context windows

+ Comments: 13 pages, 7 figures

+

+ Abstract:As large language models (LLMs) process increasingly long context windows, the memory usage of the KV cache has become a critical bottleneck during inference. The mainstream KV compression methods, including KV pruning and KV quantization, primarily focus on either the token or the precision dimension and seldom explore the efficiency of combining them. In this paper, we comprehensively investigate the token-precision trade-off in KV cache compression. Experiments demonstrate that storing more tokens in the KV cache with lower precision, i.e., quantized pruning, can significantly enhance the long-context performance of LLMs. Furthermore, an in-depth analysis of the token-precision trade-off from a series of key aspects shows that quantized pruning achieves substantial improvements in retrieval-related tasks and consistently performs well across varying input lengths. Moreover, quantized pruning demonstrates notable stability across different KV pruning methods, quantization strategies, and model scales. These findings provide valuable insights into the token-precision trade-off in KV cache compression. We plan to release our code in the near future.
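
Why "more tokens, lower precision" is possible under a fixed memory budget follows from the KV cache size formula: keys and values are stored for every layer, KV head, head dimension and token, so halving the bits per element doubles the number of tokens that fit. A back-of-the-envelope sketch, assuming a hypothetical model with 32 layers, 8 KV heads and head dimension 128, and ignoring quantization overhead such as scales and zero points:

```python
def kv_cache_bytes(tokens, layers, kv_heads, head_dim, bits):
    """Bytes for keys and values (factor 2) across all layers and KV heads."""
    return 2 * layers * kv_heads * head_dim * tokens * bits // 8


def tokens_within_budget(budget_bytes, layers, kv_heads, head_dim, bits):
    """Largest number of tokens whose KV cache fits in budget_bytes."""
    bits_per_token = 2 * layers * kv_heads * head_dim * bits
    return budget_bytes * 8 // bits_per_token


budget = 2 ** 30  # 1 GiB reserved for the KV cache
for bits in (16, 8, 4):
    print(bits, tokens_within_budget(budget, 32, 8, 128, bits))
# 16 8192
# 8 16384
# 4 32768
```

The arithmetic only shows that the trade-off exists; which point on it performs best is the empirical question the paper studies.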

+

+

+

+ 46. 【2412.12701】Trigger$^3$: Refining Query Correction via Adaptive Model Selector

+ Link: https://arxiv.org/abs/2412.12701

+ Authors: Kepu Zhang, Zhongxiang Sun, Xiao Zhang, Xiaoxue Zang, Kai Zheng, Yang Song, Jun Xu

+ Categories: Computation and Language (cs.CL)