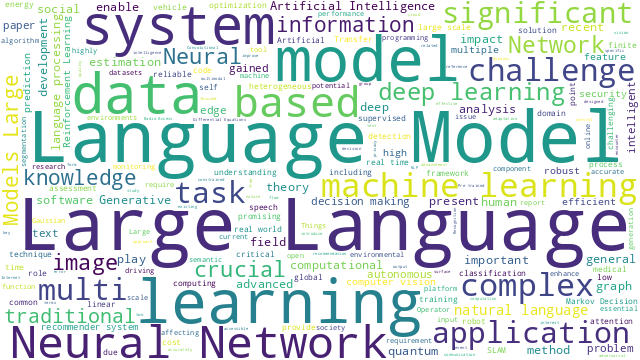

本篇博文主要展示每日从Arxiv论文网站获取的最新论文列表,以计算机视觉、自然语言处理、机器学习、人工智能等大方向进行划分。

+统计

+今日共更新362篇论文,其中:

+

+计算机视觉

+

+ 1. 标题:MosaicFusion: Diffusion Models as Data Augmenters for Large Vocabulary Instance Segmentation

+ 编号:[2]

+ 链接:https://arxiv.org/abs/2309.13042

+ 作者:Jiahao Xie, Wei Li, Xiangtai Li, Ziwei Liu, Yew Soon Ong, Chen Change Loy

+ 备注:GitHub: this https URL

+ 关键词:large vocabulary instance, effective diffusion-based data, diffusion-based data augmentation, data augmentation approach, effective diffusion-based

+

+ 点击查看摘要

+ We present MosaicFusion, a simple yet effective diffusion-based data augmentation approach for large vocabulary instance segmentation. Our method is training-free and does not rely on any label supervision. Two key designs enable us to employ an off-the-shelf text-to-image diffusion model as a useful dataset generator for object instances and mask annotations. First, we divide an image canvas into several regions and perform a single round of diffusion process to generate multiple instances simultaneously, conditioning on different text prompts. Second, we obtain corresponding instance masks by aggregating cross-attention maps associated with object prompts across layers and diffusion time steps, followed by simple thresholding and edge-aware refinement processing. Without bells and whistles, our MosaicFusion can produce a significant amount of synthetic labeled data for both rare and novel categories. Experimental results on the challenging LVIS long-tailed and open-vocabulary benchmarks demonstrate that MosaicFusion can significantly improve the performance of existing instance segmentation models, especially for rare and novel categories. Code will be released at this https URL.

+

+

+

+ 2. 标题:Robotic Offline RL from Internet Videos via Value-Function Pre-Training

+ 编号:[3]

+ 链接:https://arxiv.org/abs/2309.13041

+ 作者:Chethan Bhateja, Derek Guo, Dibya Ghosh, Anikait Singh, Manan Tomar, Quan Vuong, Yevgen Chebotar, Sergey Levine, Aviral Kumar

+ 备注:First three authors contributed equally

+ 关键词:Pre-training on Internet, Internet data, key ingredient, ingredient for broad, broad generalization

+

+ 点击查看摘要

+ Pre-training on Internet data has proven to be a key ingredient for broad generalization in many modern ML systems. What would it take to enable such capabilities in robotic reinforcement learning (RL)? Offline RL methods, which learn from datasets of robot experience, offer one way to leverage prior data into the robotic learning pipeline. However, these methods have a "type mismatch" with video data (such as Ego4D), the largest prior datasets available for robotics, since video offers observation-only experience without the action or reward annotations needed for RL methods. In this paper, we develop a system for leveraging large-scale human video datasets in robotic offline RL, based entirely on learning value functions via temporal-difference learning. We show that value learning on video datasets learns representations that are more conducive to downstream robotic offline RL than other approaches for learning from video data. Our system, called V-PTR, combines the benefits of pre-training on video data with robotic offline RL approaches that train on diverse robot data, resulting in value functions and policies for manipulation tasks that perform better, act robustly, and generalize broadly. On several manipulation tasks on a real WidowX robot, our framework produces policies that greatly improve over prior methods. Our video and additional details can be found at this https URL

+

+

+

+ 3. 标题:NeRRF: 3D Reconstruction and View Synthesis for Transparent and Specular Objects with Neural Refractive-Reflective Fields

+ 编号:[4]

+ 链接:https://arxiv.org/abs/2309.13039

+ 作者:Xiaoxue Chen, Junchen Liu, Hao Zhao, Guyue Zhou, Ya-Qin Zhang

+ 备注:

+ 关键词:image-based view synthesis, Neural radiance fields, Neural radiance, view synthesis, image-based view

+

+ 点击查看摘要

+ Neural radiance fields (NeRF) have revolutionized the field of image-based view synthesis. However, NeRF uses straight rays and fails to deal with complicated light path changes caused by refraction and reflection. This prevents NeRF from successfully synthesizing transparent or specular objects, which are ubiquitous in real-world robotics and A/VR applications. In this paper, we introduce the refractive-reflective field. Taking the object silhouette as input, we first utilize marching tetrahedra with a progressive encoding to reconstruct the geometry of non-Lambertian objects and then model refraction and reflection effects of the object in a unified framework using Fresnel terms. Meanwhile, to achieve efficient and effective anti-aliasing, we propose a virtual cone supersampling technique. We benchmark our method on different shapes, backgrounds and Fresnel terms on both real-world and synthetic datasets. We also qualitatively and quantitatively benchmark the rendering results of various editing applications, including material editing, object replacement/insertion, and environment illumination estimation. Codes and data are publicly available at this https URL.

+

+

+

+ 4. 标题:Privacy Assessment on Reconstructed Images: Are Existing Evaluation Metrics Faithful to Human Perception?

+ 编号:[5]

+ 链接:https://arxiv.org/abs/2309.13038

+ 作者:Xiaoxiao Sun, Nidham Gazagnadou, Vivek Sharma, Lingjuan Lyu, Hongdong Li, Liang Zheng

+ 备注:15 pages, 9 figures and 3 tables

+ 关键词:PSNR and SSIM, privacy leakage, metrics, privacy, SSIM

+

+ 点击查看摘要

+ Hand-crafted image quality metrics, such as PSNR and SSIM, are commonly used to evaluate model privacy risk under reconstruction attacks. Under these metrics, reconstructed images that are determined to resemble the original one generally indicate more privacy leakage. Images determined as overall dissimilar, on the other hand, indicate higher robustness against attack. However, there is no guarantee that these metrics well reflect human opinions, which, as a judgement for model privacy leakage, are more trustworthy. In this paper, we comprehensively study the faithfulness of these hand-crafted metrics to human perception of privacy information from the reconstructed images. On 5 datasets ranging from natural images, faces, to fine-grained classes, we use 4 existing attack methods to reconstruct images from many different classification models and, for each reconstructed image, we ask multiple human annotators to assess whether this image is recognizable. Our studies reveal that the hand-crafted metrics only have a weak correlation with the human evaluation of privacy leakage and that even these metrics themselves often contradict each other. These observations suggest risks of current metrics in the community. To address this potential risk, we propose a learning-based measure called SemSim to evaluate the Semantic Similarity between the original and reconstructed images. SemSim is trained with a standard triplet loss, using an original image as an anchor, one of its recognizable reconstructed images as a positive sample, and an unrecognizable one as a negative. By training on human annotations, SemSim exhibits a greater reflection of privacy leakage on the semantic level. We show that SemSim has a significantly higher correlation with human judgment compared with existing metrics. Moreover, this strong correlation generalizes to unseen datasets, models and attack methods.

+

+

+

+ 5. 标题:Deep3DSketch+: Rapid 3D Modeling from Single Free-hand Sketches

+ 编号:[19]

+ 链接:https://arxiv.org/abs/2309.13006

+ 作者:Tianrun Chen, Chenglong Fu, Ying Zang, Lanyun Zhu, Jia Zhang, Papa Mao, Lingyun Sun

+ 备注:

+ 关键词:brings tremendous demands, rapid development, brings tremendous, tremendous demands, widely-used Computer-Aided Design

+

+ 点击查看摘要

+ The rapid development of AR/VR brings tremendous demands for 3D content. While the widely-used Computer-Aided Design (CAD) method requires a time-consuming and labor-intensive modeling process, sketch-based 3D modeling offers a potential solution as a natural form of computer-human interaction. However, the sparsity and ambiguity of sketches make it challenging to generate high-fidelity content reflecting creators' ideas. Precise drawing from multiple views or strategic step-by-step drawings is often required to tackle the challenge but is not friendly to novice users. In this work, we introduce a novel end-to-end approach, Deep3DSketch+, which performs 3D modeling using only a single free-hand sketch without inputting multiple sketches or view information. Specifically, we introduce a lightweight generation network for efficient inference in real-time and a structural-aware adversarial training approach with a Stroke Enhancement Module (SEM) to capture the structural information to facilitate learning of the realistic and fine-detailed shape structures for high-fidelity performance. Extensive experiments demonstrated the effectiveness of our approach with the state-of-the-art (SOTA) performance on both synthetic and real datasets.

+

+

+

+ 6. 标题:Point Cloud Network: An Order of Magnitude Improvement in Linear Layer Parameter Count

+ 编号:[22]

+ 链接:https://arxiv.org/abs/2309.12996

+ 作者:Charles Hetterich

+ 备注:

+ 关键词:Point Cloud Network, deep learning networks, Multilayer Perceptron, introduces the Point, learning networks

+

+ 点击查看摘要

+ This paper introduces the Point Cloud Network (PCN) architecture, a novel implementation of linear layers in deep learning networks, and provides empirical evidence to advocate for its preference over the Multilayer Perceptron (MLP) in linear layers. We train several models, including the original AlexNet, using both MLP and PCN architectures for direct comparison of linear layers (Krizhevsky et al., 2012). The key results collected are model parameter count and top-1 test accuracy over the CIFAR-10 and CIFAR-100 datasets (Krizhevsky, 2009). AlexNet-PCN16, our PCN equivalent to AlexNet, achieves comparable efficacy (test accuracy) to the original architecture with a 99.5% reduction of parameters in its linear layers. All training is done on cloud RTX 4090 GPUs, leveraging pytorch for model construction and training. Code is provided for anyone to reproduce the trials from this paper.

+

+

+

+ 7. 标题:License Plate Recognition Based On Multi-Angle View Model

+ 编号:[26]

+ 链接:https://arxiv.org/abs/2309.12972

+ 作者:Dat Tran-Anh, Khanh Linh Tran, Hoai-Nam Vu

+ 备注:

+ 关键词:highly challenging problem, realm of research, problem for researchers, captured by cameras, cameras constitutes

+

+ 点击查看摘要

+ In the realm of research, the detection/recognition of text within images/videos captured by cameras constitutes a highly challenging problem for researchers. Despite certain advancements achieving high accuracy, current methods still require substantial improvements to be applicable in practical scenarios. Diverging from text detection in images/videos, this paper addresses the issue of text detection within license plates by amalgamating multiple frames of distinct perspectives. For each viewpoint, the proposed method extracts descriptive features characterizing the text components of the license plate, specifically corner points and area. Concretely, we present three viewpoints: view-1, view-2, and view-3, to identify the nearest neighboring components facilitating the restoration of text components from the same license plate line based on estimations of similarity levels and distance metrics. Subsequently, we employ the CnOCR method for text recognition within license plates. Experimental results on the self-collected dataset (PTITPlates), comprising pairs of images in various scenarios, and the publicly available Stanford Cars Dataset, demonstrate the superiority of the proposed method over existing approaches.

+

+

+

+ 8. 标题:Detect Every Thing with Few Examples

+ 编号:[28]

+ 链接:https://arxiv.org/abs/2309.12969

+ 作者:Xinyu Zhang, Yuting Wang, Abdeslam Boularias

+ 备注:

+ 关键词:detecting arbitrary categories, aims at detecting, detecting arbitrary, object detection aims, Open-set object

+

+ 点击查看摘要

+ Open-set object detection aims at detecting arbitrary categories beyond those seen during training. Most recent advancements have adopted the open-vocabulary paradigm, utilizing vision-language backbones to represent categories with language. In this paper, we introduce DE-ViT, an open-set object detector that employs vision-only DINOv2 backbones and learns new categories through example images instead of language. To improve general detection ability, we transform multi-classification tasks into binary classification tasks while bypassing per-class inference, and propose a novel region propagation technique for localization. We evaluate DE-ViT on open-vocabulary, few-shot, and one-shot object detection benchmark with COCO and LVIS. For COCO, DE-ViT outperforms the open-vocabulary SoTA by 6.9 AP50 and achieves 50 AP50 in novel classes. DE-ViT surpasses the few-shot SoTA by 15 mAP on 10-shot and 7.2 mAP on 30-shot and one-shot SoTA by 2.8 AP50. For LVIS, DE-ViT outperforms the open-vocabulary SoTA by 2.2 mask AP and reaches 34.3 mask APr. Code is available at this https URL.

+

+

+

+ 9. 标题:On Data Fabrication in Collaborative Vehicular Perception: Attacks and Countermeasures

+ 编号:[32]

+ 链接:https://arxiv.org/abs/2309.12955

+ 作者:Qingzhao Zhang, Shuowei Jin, Jiachen Sun, Xumiao Zhang, Ruiyang Zhu, Qi Alfred Chen, Z. Morley Mao

+ 备注:20 pages, 24 figures, accepted by Usenix Security 2024

+ 关键词:external resources, potential security risks, greatly enhances, enhances the sensing, sensing capability

+

+ 点击查看摘要

+ Collaborative perception, which greatly enhances the sensing capability of connected and autonomous vehicles (CAVs) by incorporating data from external resources, also brings forth potential security risks. CAVs' driving decisions rely on remote untrusted data, making them susceptible to attacks carried out by malicious participants in the collaborative perception system. However, security analysis and countermeasures for such threats are absent. To understand the impact of the vulnerability, we break the ground by proposing various real-time data fabrication attacks in which the attacker delivers crafted malicious data to victims in order to perturb their perception results, leading to hard brakes or increased collision risks. Our attacks demonstrate a high success rate of over 86\% on high-fidelity simulated scenarios and are realizable in real-world experiments. To mitigate the vulnerability, we present a systematic anomaly detection approach that enables benign vehicles to jointly reveal malicious fabrication. It detects 91.5% of attacks with a false positive rate of 3% in simulated scenarios and significantly mitigates attack impacts in real-world scenarios.

+

+

+

+ 10. 标题:Background Activation Suppression for Weakly Supervised Object Localization and Semantic Segmentation

+ 编号:[36]

+ 链接:https://arxiv.org/abs/2309.12943

+ 作者:Wei Zhai, Pingyu Wu, Kai Zhu, Yang Cao, Feng Wu, Zheng-Jun Zha

+ 备注:Accepted by IJCV. arXiv admin note: text overlap with arXiv:2112.00580

+ 关键词:foreground prediction map, foreground mask expands, foreground mask, image-level labels, aim to localize

+

+ 点击查看摘要

+ Weakly supervised object localization and semantic segmentation aim to localize objects using only image-level labels. Recently, a new paradigm has emerged by generating a foreground prediction map (FPM) to achieve pixel-level localization. While existing FPM-based methods use cross-entropy to evaluate the foreground prediction map and to guide the learning of the generator, this paper presents two astonishing experimental observations on the object localization learning process: For a trained network, as the foreground mask expands, 1) the cross-entropy converges to zero when the foreground mask covers only part of the object region. 2) The activation value continuously increases until the foreground mask expands to the object boundary. Therefore, to achieve a more effective localization performance, we argue for the usage of activation value to learn more object regions. In this paper, we propose a Background Activation Suppression (BAS) method. Specifically, an Activation Map Constraint (AMC) module is designed to facilitate the learning of generator by suppressing the background activation value. Meanwhile, by using foreground region guidance and area constraint, BAS can learn the whole region of the object. In the inference phase, we consider the prediction maps of different categories together to obtain the final localization results. Extensive experiments show that BAS achieves significant and consistent improvement over the baseline methods on the CUB-200-2011 and ILSVRC datasets. In addition, our method also achieves state-of-the-art weakly supervised semantic segmentation performance on the PASCAL VOC 2012 and MS COCO 2014 datasets. Code and models are available at this https URL.

+

+

+

+ 11. 标题:Gravity Network for end-to-end small lesion detection

+ 编号:[59]

+ 链接:https://arxiv.org/abs/2309.12876

+ 作者:Ciro Russo, Alessandro Bria, Claudio Marrocco

+ 备注:

+ 关键词:detector specifically designed, detector specifically, specifically designed, designed to detect, detect small lesions

+

+ 点击查看摘要

+ This paper introduces a novel one-stage end-to-end detector specifically designed to detect small lesions in medical images. Precise localization of small lesions presents challenges due to their appearance and the diverse contextual backgrounds in which they are found. To address this, our approach introduces a new type of pixel-based anchor that dynamically moves towards the targeted lesion for detection. We refer to this new architecture as GravityNet, and the novel anchors as gravity points since they appear to be "attracted" by the lesions. We conducted experiments on two well-established medical problems involving small lesions to evaluate the performance of the proposed approach: microcalcifications detection in digital mammograms and microaneurysms detection in digital fundus images. Our method demonstrates promising results in effectively detecting small lesions in these medical imaging tasks.

+

+

+

+ 12. 标题:Accurate and Fast Compressed Video Captioning

+ 编号:[63]

+ 链接:https://arxiv.org/abs/2309.12867

+ 作者:Yaojie Shen, Xin Gu, Kai Xu, Heng Fan, Longyin Wen, Libo Zhang

+ 备注:

+ 关键词:approaches typically require, sample video frames, video, video captioning, subsequent process

+

+ 点击查看摘要

+ Existing video captioning approaches typically require to first sample video frames from a decoded video and then conduct a subsequent process (e.g., feature extraction and/or captioning model learning). In this pipeline, manual frame sampling may ignore key information in videos and thus degrade performance. Additionally, redundant information in the sampled frames may result in low efficiency in the inference of video captioning. Addressing this, we study video captioning from a different perspective in compressed domain, which brings multi-fold advantages over the existing pipeline: 1) Compared to raw images from the decoded video, the compressed video, consisting of I-frames, motion vectors and residuals, is highly distinguishable, which allows us to leverage the entire video for learning without manual sampling through a specialized model design; 2) The captioning model is more efficient in inference as smaller and less redundant information is processed. We propose a simple yet effective end-to-end transformer in the compressed domain for video captioning that enables learning from the compressed video for captioning. We show that even with a simple design, our method can achieve state-of-the-art performance on different benchmarks while running almost 2x faster than existing approaches. Code is available at this https URL.

+

+

+

+ 13. 标题:Bridging Sensor Gaps via Single-Direction Tuning for Hyperspectral Image Classification

+ 编号:[64]

+ 链接:https://arxiv.org/abs/2309.12865

+ 作者:Xizhe Xue, Haokui Zhang, Ying Li, Liuwei Wan, Zongwen Bai, Mike Zheng Shou

+ 备注:

+ 关键词:achieved remarkable results, researchers started exploring, tackling HSI classification, proposed SDT, remarkable results

+

+ 点击查看摘要

+ Recently, some researchers started exploring the use of ViTs in tackling HSI classification and achieved remarkable results. However, the training of ViT models requires a considerable number of training samples, while hyperspectral data, due to its high annotation costs, typically has a relatively small number of training samples. This contradiction has not been effectively addressed. In this paper, aiming to solve this problem, we propose the single-direction tuning (SDT) strategy, which serves as a bridge, allowing us to leverage existing labeled HSI datasets even RGB datasets to enhance the performance on new HSI datasets with limited samples. The proposed SDT inherits the idea of prompt tuning, aiming to reuse pre-trained models with minimal modifications for adaptation to new tasks. But unlike prompt tuning, SDT is custom-designed to accommodate the characteristics of HSIs. The proposed SDT utilizes a parallel architecture, an asynchronous cold-hot gradient update strategy, and unidirectional interaction. It aims to fully harness the potent representation learning capabilities derived from training on heterologous, even cross-modal datasets. In addition, we also introduce a novel Triplet-structured transformer (Tri-Former), where spectral attention and spatial attention modules are merged in parallel to construct the token mixing component for reducing computation cost and a 3D convolution-based channel mixer module is integrated to enhance stability and keep structure information. Comparison experiments conducted on three representative HSI datasets captured by different sensors demonstrate the proposed Tri-Former achieves better performance compared to several state-of-the-art methods. Homologous, heterologous and cross-modal tuning experiments verified the effectiveness of the proposed SDT.

+

+

+

+ 14. 标题:Associative Transformer Is A Sparse Representation Learner

+ 编号:[67]

+ 链接:https://arxiv.org/abs/2309.12862

+ 作者:Yuwei Sun, Hideya Ochiai, Zhirong Wu, Stephen Lin, Ryota Kanai

+ 备注:

+ 关键词:conventional Transformer models, monolithic pairwise attention, pairwise attention mechanism, leveraging sparse interactions, Set Transformer

+

+ 点击查看摘要

+ Emerging from the monolithic pairwise attention mechanism in conventional Transformer models, there is a growing interest in leveraging sparse interactions that align more closely with biological principles. Approaches including the Set Transformer and the Perceiver employ cross-attention consolidated with a latent space that forms an attention bottleneck with limited capacity. Building upon recent neuroscience studies of Global Workspace Theory and associative memory, we propose the Associative Transformer (AiT). AiT induces low-rank explicit memory that serves as both priors to guide bottleneck attention in the shared workspace and attractors within associative memory of a Hopfield network. Through joint end-to-end training, these priors naturally develop module specialization, each contributing a distinct inductive bias to form attention bottlenecks. A bottleneck can foster competition among inputs for writing information into the memory. We show that AiT is a sparse representation learner, learning distinct priors through the bottlenecks that are complexity-invariant to input quantities and dimensions. AiT demonstrates its superiority over methods such as the Set Transformer, Vision Transformer, and Coordination in various vision tasks.

+

+

+

+ 15. 标题:SRFNet: Monocular Depth Estimation with Fine-grained Structure via Spatial Reliability-oriented Fusion of Frames and Events

+ 编号:[75]

+ 链接:https://arxiv.org/abs/2309.12842

+ 作者:Tianbo Pan, Zidong Cao, Lin Wang

+ 备注:

+ 关键词:measure distance relative, important for applications, navigation and self-driving, Monocular depth estimation, crucial task

+

+ 点击查看摘要

+ Monocular depth estimation is a crucial task to measure distance relative to a camera, which is important for applications, such as robot navigation and self-driving. Traditional frame-based methods suffer from performance drops due to the limited dynamic range and motion blur. Therefore, recent works leverage novel event cameras to complement or guide the frame modality via frame-event feature fusion. However, event streams exhibit spatial sparsity, leaving some areas unperceived, especially in regions with marginal light changes. Therefore, direct fusion methods, e.g., RAMNet, often ignore the contribution of the most confident regions of each modality. This leads to structural ambiguity in the modality fusion process, thus degrading the depth estimation performance. In this paper, we propose a novel Spatial Reliability-oriented Fusion Network (SRFNet), that can estimate depth with fine-grained structure at both daytime and nighttime. Our method consists of two key technical components. Firstly, we propose an attention-based interactive fusion (AIF) module that applies spatial priors of events and frames as the initial masks and learns the consensus regions to guide the inter-modal feature fusion. The fused feature are then fed back to enhance the frame and event feature learning. Meanwhile, it utilizes an output head to generate a fused mask, which is iteratively updated for learning consensual spatial priors. Secondly, we propose the Reliability-oriented Depth Refinement (RDR) module to estimate dense depth with the fine-grained structure based on the fused features and masks. We evaluate the effectiveness of our method on the synthetic and real-world datasets, which shows that, even without pretraining, our method outperforms the prior methods, e.g., RAMNet, especially in night scenes. Our project homepage: this https URL.

+

+

+

+ 16. 标题:Synthetic Boost: Leveraging Synthetic Data for Enhanced Vision-Language Segmentation in Echocardiography

+ 编号:[78]

+ 链接:https://arxiv.org/abs/2309.12829

+ 作者:Rabin Adhikari, Manish Dhakal, Safal Thapaliya, Kanchan Poudel, Prasiddha Bhandari, Bishesh Khanal

+ 备注:Accepted at the 4th International Workshop of Advances in Simplifying Medical UltraSound (ASMUS)

+ 关键词:cardiovascular diseases, essential for echocardiography-based, echocardiography-based assessment, assessment of cardiovascular, Semantic Diffusion Models

+

+ 点击查看摘要

+ Accurate segmentation is essential for echocardiography-based assessment of cardiovascular diseases (CVDs). However, the variability among sonographers and the inherent challenges of ultrasound images hinder precise segmentation. By leveraging the joint representation of image and text modalities, Vision-Language Segmentation Models (VLSMs) can incorporate rich contextual information, potentially aiding in accurate and explainable segmentation. However, the lack of readily available data in echocardiography hampers the training of VLSMs. In this study, we explore using synthetic datasets from Semantic Diffusion Models (SDMs) to enhance VLSMs for echocardiography segmentation. We evaluate results for two popular VLSMs (CLIPSeg and CRIS) using seven different kinds of language prompts derived from several attributes, automatically extracted from echocardiography images, segmentation masks, and their metadata. Our results show improved metrics and faster convergence when pretraining VLSMs on SDM-generated synthetic images before finetuning on real images. The code, configs, and prompts are available at this https URL.

+

+

+

+ 17. 标题:Domain Adaptive Few-Shot Open-Set Learning

+ 编号:[81]

+ 链接:https://arxiv.org/abs/2309.12814

+ 作者:Debabrata Pal, Deeptej More, Sai Bhargav, Dipesh Tamboli, Vaneet Aggarwal, Biplab Banerjee

+ 备注:

+ 关键词:made impressive strides, recognizing unknown samples, managing visual shifts, Open Set Recognition, target query sets

+

+ 点击查看摘要

+ Few-shot learning has made impressive strides in addressing the crucial challenges of recognizing unknown samples from novel classes in target query sets and managing visual shifts between domains. However, existing techniques fall short when it comes to identifying target outliers under domain shifts by learning to reject pseudo-outliers from the source domain, resulting in an incomplete solution to both problems. To address these challenges comprehensively, we propose a novel approach called Domain Adaptive Few-Shot Open Set Recognition (DA-FSOS) and introduce a meta-learning-based architecture named DAFOSNET. During training, our model learns a shared and discriminative embedding space while creating a pseudo open-space decision boundary, given a fully-supervised source domain and a label-disjoint few-shot target domain. To enhance data density, we use a pair of conditional adversarial networks with tunable noise variances to augment both domains closed and pseudo-open spaces. Furthermore, we propose a domain-specific batch-normalized class prototypes alignment strategy to align both domains globally while ensuring class-discriminativeness through novel metric objectives. Our training approach ensures that DAFOS-NET can generalize well to new scenarios in the target domain. We present three benchmarks for DA-FSOS based on the Office-Home, mini-ImageNet/CUB, and DomainNet datasets and demonstrate the efficacy of DAFOS-NET through extensive experimentation

+

+

+

+ 18. 标题:Scalable Semantic 3D Mapping of Coral Reefs with Deep Learning

+ 编号:[86]

+ 链接:https://arxiv.org/abs/2309.12804

+ 作者:Jonathan Sauder, Guilhem Banc-Prandi, Anders Meibom, Devis Tuia

+ 备注:

+ 关键词:millions of people, diverse ecosystems, hundreds of millions, Coral reefs, Coral

+

+ 点击查看摘要

+ Coral reefs are among the most diverse ecosystems on our planet, and are depended on by hundreds of millions of people. Unfortunately, most coral reefs are existentially threatened by global climate change and local anthropogenic pressures. To better understand the dynamics underlying deterioration of reefs, monitoring at high spatial and temporal resolution is key. However, conventional monitoring methods for quantifying coral cover and species abundance are limited in scale due to the extensive manual labor required. Although computer vision tools have been employed to aid in this process, in particular SfM photogrammetry for 3D mapping and deep neural networks for image segmentation, analysis of the data products creates a bottleneck, effectively limiting their scalability. This paper presents a new paradigm for mapping underwater environments from ego-motion video, unifying 3D mapping systems that use machine learning to adapt to challenging conditions under water, combined with a modern approach for semantic segmentation of images. The method is exemplified on coral reefs in the northern Gulf of Aqaba, Red Sea, demonstrating high-precision 3D semantic mapping at unprecedented scale with significantly reduced required labor costs: a 100 m video transect acquired within 5 minutes of diving with a cheap consumer-grade camera can be fully automatically analyzed within 5 minutes. Our approach significantly scales up coral reef monitoring by taking a leap towards fully automatic analysis of video transects. The method democratizes coral reef transects by reducing the labor, equipment, logistics, and computing cost. This can help to inform conservation policies more efficiently. The underlying computational method of learning-based Structure-from-Motion has broad implications for fast low-cost mapping of underwater environments other than coral reefs.

+

+

+

+ 19. 标题:NOC: High-Quality Neural Object Cloning with 3D Lifting of Segment Anything

+ 编号:[89]

+ 链接:https://arxiv.org/abs/2309.12790

+ 作者:Xiaobao Wei, Renrui Zhang, Jiarui Wu, Jiaming Liu, Ming Lu, Yandong Guo, Shanghang Zhang

+ 备注:

+ 关键词:recently attracted increasing, attracted increasing attention, Neural Object Cloning, target object, neural field

+

+ 点击查看摘要

+ With the development of the neural field, reconstructing the 3D model of a target object from multi-view inputs has recently attracted increasing attention from the community. Existing methods normally learn a neural field for the whole scene, while it is still under-explored how to reconstruct a certain object indicated by users on-the-fly. Considering the Segment Anything Model (SAM) has shown effectiveness in segmenting any 2D images, in this paper, we propose Neural Object Cloning (NOC), a novel high-quality 3D object reconstruction method, which leverages the benefits of both neural field and SAM from two aspects. Firstly, to separate the target object from the scene, we propose a novel strategy to lift the multi-view 2D segmentation masks of SAM into a unified 3D variation field. The 3D variation field is then projected into 2D space and generates the new prompts for SAM. This process is iterative until convergence to separate the target object from the scene. Then, apart from 2D masks, we further lift the 2D features of the SAM encoder into a 3D SAM field in order to improve the reconstruction quality of the target object. NOC lifts the 2D masks and features of SAM into the 3D neural field for high-quality target object reconstruction. We conduct detailed experiments on several benchmark datasets to demonstrate the advantages of our method. The code will be released.

+

+

+

+ 20. 标题:EMS: 3D Eyebrow Modeling from Single-view Images

+ 编号:[90]

+ 链接:https://arxiv.org/abs/2309.12787

+ 作者:Chenghong Li, Leyang Jin, Yujian Zheng, Yizhou Yu, Xiaoguang Han

+ 备注:To appear in SIGGRAPH Asia 2023 (Journal Track). 19 pages, 19 figures, 6 tables

+ 关键词:expression and appearance, play a critical, critical role, role in facial, facial expression

+

+ 点击查看摘要

+ Eyebrows play a critical role in facial expression and appearance. Although the 3D digitization of faces is well explored, less attention has been drawn to 3D eyebrow modeling. In this work, we propose EMS, the first learning-based framework for single-view 3D eyebrow reconstruction. Following the methods of scalp hair reconstruction, we also represent the eyebrow as a set of fiber curves and convert the reconstruction to fibers growing problem. Three modules are then carefully designed: RootFinder firstly localizes the fiber root positions which indicates where to grow; OriPredictor predicts an orientation field in the 3D space to guide the growing of fibers; FiberEnder is designed to determine when to stop the growth of each fiber. Our OriPredictor is directly borrowing the method used in hair reconstruction. Considering the differences between hair and eyebrows, both RootFinder and FiberEnder are newly proposed. Specifically, to cope with the challenge that the root location is severely occluded, we formulate root localization as a density map estimation task. Given the predicted density map, a density-based clustering method is further used for finding the roots. For each fiber, the growth starts from the root point and moves step by step until the ending, where each step is defined as an oriented line with a constant length according to the predicted orientation field. To determine when to end, a pixel-aligned RNN architecture is designed to form a binary classifier, which outputs stop or not for each growing step. To support the training of all proposed networks, we build the first 3D synthetic eyebrow dataset that contains 400 high-quality eyebrow models manually created by artists. Extensive experiments have demonstrated the effectiveness of the proposed EMS pipeline on a variety of different eyebrow styles and lengths, ranging from short and sparse to long bushy eyebrows.

+

+

+

+ 21. 标题:LMC: Large Model Collaboration with Cross-assessment for Training-Free Open-Set Object Recognition

+ 编号:[95]

+ 链接:https://arxiv.org/abs/2309.12780

+ 作者:Haoxuan Qu, Xiaofei Hui, Yujun Cai, Jun Liu

+ 备注:NeurIPS 2023

+ 关键词:Open-set object recognition, object recognition aims, Open-set object, object recognition, object recognition accurately

+

+ 点击查看摘要

+ Open-set object recognition aims to identify if an object is from a class that has been encountered during training or not. To perform open-set object recognition accurately, a key challenge is how to reduce the reliance on spurious-discriminative features. In this paper, motivated by that different large models pre-trained through different paradigms can possess very rich while distinct implicit knowledge, we propose a novel framework named Large Model Collaboration (LMC) to tackle the above challenge via collaborating different off-the-shelf large models in a training-free manner. Moreover, we also incorporate the proposed framework with several novel designs to effectively extract implicit knowledge from large models. Extensive experiments demonstrate the efficacy of our proposed framework. Code is available \href{this https URL}{here}.

+

+

+

+ 22. 标题:WiCV@CVPR2023: The Eleventh Women In Computer Vision Workshop at the Annual CVPR Conference

+ 编号:[99]

+ 链接:https://arxiv.org/abs/2309.12768

+ 作者:Doris Antensteiner, Marah Halawa, Asra Aslam, Ivaxi Sheth, Sachini Herath, Ziqi Huang, Sunnie S. Y. Kim, Aparna Akula, Xin Wang

+ 备注:

+ 关键词:Computer Vision Workshop, computer vision community, Computer Vision, organized alongside, alongside the hybrid

+

+ 点击查看摘要

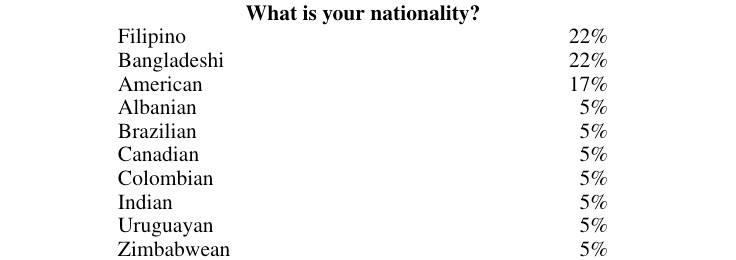

+ In this paper, we present the details of Women in Computer Vision Workshop - WiCV 2023, organized alongside the hybrid CVPR 2023 in Vancouver, Canada. WiCV aims to amplify the voices of underrepresented women in the computer vision community, fostering increased visibility in both academia and industry. We believe that such events play a vital role in addressing gender imbalances within the field. The annual WiCV@CVPR workshop offers a) opportunity for collaboration between researchers from minority groups, b) mentorship for female junior researchers, c) financial support to presenters to alleviate finanacial burdens and d) a diverse array of role models who can inspire younger researchers at the outset of their careers. In this paper, we present a comprehensive report on the workshop program, historical trends from the past WiCV@CVPR events, and a summary of statistics related to presenters, attendees, and sponsorship for the WiCV 2023 workshop.

+

+

+

+ 23. 标题:S3TC: Spiking Separated Spatial and Temporal Convolutions with Unsupervised STDP-based Learning for Action Recognition

+ 编号:[103]

+ 链接:https://arxiv.org/abs/2309.12761

+ 作者:Mireille El-Assal, Pierre Tirilly, Ioan Marius Bilasco

+ 备注:

+ 关键词:major computer vision, computer vision task, Deep Neural Networks, Spiking Neural Networks, Neural Networks

+

+ 点击查看摘要

+ Video analysis is a major computer vision task that has received a lot of attention in recent years. The current state-of-the-art performance for video analysis is achieved with Deep Neural Networks (DNNs) that have high computational costs and need large amounts of labeled data for training. Spiking Neural Networks (SNNs) have significantly lower computational costs (thousands of times) than regular non-spiking networks when implemented on neuromorphic hardware. They have been used for video analysis with methods like 3D Convolutional Spiking Neural Networks (3D CSNNs). However, these networks have a significantly larger number of parameters compared with spiking 2D CSNN. This, not only increases the computational costs, but also makes these networks more difficult to implement with neuromorphic hardware. In this work, we use CSNNs trained in an unsupervised manner with the Spike Timing-Dependent Plasticity (STDP) rule, and we introduce, for the first time, Spiking Separated Spatial and Temporal Convolutions (S3TCs) for the sake of reducing the number of parameters required for video analysis. This unsupervised learning has the advantage of not needing large amounts of labeled data for training. Factorizing a single spatio-temporal spiking convolution into a spatial and a temporal spiking convolution decreases the number of parameters of the network. We test our network with the KTH, Weizmann, and IXMAS datasets, and we show that S3TCs successfully extract spatio-temporal information from videos, while increasing the output spiking activity, and outperforming spiking 3D convolutions.

+

+

+

+ 24. 标题:Masking Improves Contrastive Self-Supervised Learning for ConvNets, and Saliency Tells You Where

+ 编号:[104]

+ 链接:https://arxiv.org/abs/2309.12757

+ 作者:Zhi-Yi Chin, Chieh-Ming Jiang, Ching-Chun Huang, Pin-Yu Chen, Wei-Chen Chiu

+ 备注:

+ 关键词:convolutional neural networks, vision transformer backbone, image data starts, self-supervised learning scheme, learning process significantly

+

+ 点击查看摘要

+ While image data starts to enjoy the simple-but-effective self-supervised learning scheme built upon masking and self-reconstruction objective thanks to the introduction of tokenization procedure and vision transformer backbone, convolutional neural networks as another important and widely-adopted architecture for image data, though having contrastive-learning techniques to drive the self-supervised learning, still face the difficulty of leveraging such straightforward and general masking operation to benefit their learning process significantly. In this work, we aim to alleviate the burden of including masking operation into the contrastive-learning framework for convolutional neural networks as an extra augmentation method. In addition to the additive but unwanted edges (between masked and unmasked regions) as well as other adverse effects caused by the masking operations for ConvNets, which have been discussed by prior works, we particularly identify the potential problem where for one view in a contrastive sample-pair the randomly-sampled masking regions could be overly concentrated on important/salient objects thus resulting in misleading contrastiveness to the other view. To this end, we propose to explicitly take the saliency constraint into consideration in which the masked regions are more evenly distributed among the foreground and background for realizing the masking-based augmentation. Moreover, we introduce hard negative samples by masking larger regions of salient patches in an input image. Extensive experiments conducted on various datasets, contrastive learning mechanisms, and downstream tasks well verify the efficacy as well as the superior performance of our proposed method with respect to several state-of-the-art baselines.

+

+

+

+ 25. 标题:Transformer-based Image Compression with Variable Image Quality Objectives

+ 编号:[117]

+ 链接:https://arxiv.org/abs/2309.12717

+ 作者:Chia-Hao Kao, Yi-Hsin Chen, Cheng Chien, Wei-Chen Chiu, Wen-Hsiao Peng

+ 备注:

+ 关键词:image compression system, Transformer-based image compression, paper presents, compression system, image quality objective

+

+ 点击查看摘要

+ This paper presents a Transformer-based image compression system that allows for a variable image quality objective according to the user's preference. Optimizing a learned codec for different quality objectives leads to reconstructed images with varying visual characteristics. Our method provides the user with the flexibility to choose a trade-off between two image quality objectives using a single, shared model. Motivated by the success of prompt-tuning techniques, we introduce prompt tokens to condition our Transformer-based autoencoder. These prompt tokens are generated adaptively based on the user's preference and input image through learning a prompt generation network. Extensive experiments on commonly used quality metrics demonstrate the effectiveness of our method in adapting the encoding and/or decoding processes to a variable quality objective. While offering the additional flexibility, our proposed method performs comparably to the single-objective methods in terms of rate-distortion performance.

+

+

+

+ 26. 标题:PointSSC: A Cooperative Vehicle-Infrastructure Point Cloud Benchmark for Semantic Scene Completion

+ 编号:[123]

+ 链接:https://arxiv.org/abs/2309.12708

+ 作者:Yuxiang Yan, Boda Liu, Jianfei Ai, Qinbu Li, Ru Wan, Jian Pu

+ 备注:8 pages, 5 figures, submitted to ICRA2024

+ 关键词:generate space occupancies, jointly generate space, aims to jointly, jointly generate, SSC

+

+ 点击查看摘要

+ Semantic Scene Completion (SSC) aims to jointly generate space occupancies and semantic labels for complex 3D scenes. Most existing SSC models focus on volumetric representations, which are memory-inefficient for large outdoor spaces. Point clouds provide a lightweight alternative but existing benchmarks lack outdoor point cloud scenes with semantic labels. To address this, we introduce PointSSC, the first cooperative vehicle-infrastructure point cloud benchmark for semantic scene completion. These scenes exhibit long-range perception and minimal occlusion. We develop an automated annotation pipeline leveraging Segment Anything to efficiently assign semantics. To benchmark progress, we propose a LiDAR-based model with a Spatial-Aware Transformer for global and local feature extraction and a Completion and Segmentation Cooperative Module for joint completion and segmentation. PointSSC provides a challenging testbed to drive advances in semantic point cloud completion for real-world navigation.

+

+

+

+ 27. 标题:Multi-Label Noise Transition Matrix Estimation with Label Correlations: Theory and Algorithm

+ 编号:[124]

+ 链接:https://arxiv.org/abs/2309.12706

+ 作者:Shikun Li, Xiaobo Xia, Hansong Zhang, Shiming Ge, Tongliang Liu

+ 备注:

+ 关键词:Noisy multi-label learning, garnered increasing attention, increasing attention due, multi-label learning, Noisy

+

+ 点击查看摘要

+ Noisy multi-label learning has garnered increasing attention due to the challenges posed by collecting large-scale accurate labels, making noisy labels a more practical alternative. Motivated by noisy multi-class learning, the introduction of transition matrices can help model multi-label noise and enable the development of statistically consistent algorithms for noisy multi-label learning. However, estimating multi-label noise transition matrices remains a challenging task, as most existing estimators in noisy multi-class learning rely on anchor points and accurate fitting of noisy class posteriors, which is hard to satisfy in noisy multi-label learning. In this paper, we address this problem by first investigating the identifiability of class-dependent transition matrices in noisy multi-label learning. Building upon the identifiability results, we propose a novel estimator that leverages label correlations without the need for anchor points or precise fitting of noisy class posteriors. Specifically, we first estimate the occurrence probability of two noisy labels to capture noisy label correlations. Subsequently, we employ sample selection techniques to extract information implying clean label correlations, which are then used to estimate the occurrence probability of one noisy label when a certain clean label appears. By exploiting the mismatches in label correlations implied by these occurrence probabilities, we demonstrate that the transition matrix becomes identifiable and can be acquired by solving a bilinear decomposition problem. Theoretically, we establish an estimation error bound for our multi-label transition matrix estimator and derive a generalization error bound for our statistically consistent algorithm. Empirically, we validate the effectiveness of our estimator in estimating multi-label noise transition matrices, leading to excellent classification performance.

+

+

+

+ 28. 标题:mixed attention auto encoder for multi-class industrial anomaly detection

+ 编号:[126]

+ 链接:https://arxiv.org/abs/2309.12700

+ 作者:Jiangqi Liu, Feng Wang

+ 备注:5 pages, 4 figures

+ 关键词:unsupervised industrial anomaly, industrial anomaly detection, anomaly detection train, unsupervised industrial, train a separate

+

+ 点击查看摘要

+ Most existing methods for unsupervised industrial anomaly detection train a separate model for each object category. This kind of approach can easily capture the category-specific feature distributions, but results in high storage cost and low training efficiency. In this paper, we propose a unified mixed-attention auto encoder (MAAE) to implement multi-class anomaly detection with a single model. To alleviate the performance degradation due to the diverse distribution patterns of different categories, we employ spatial attentions and channel attentions to effectively capture the global category information and model the feature distributions of multiple classes. Furthermore, to simulate the realistic noises on features and preserve the surface semantics of objects from different categories which are essential for detecting the subtle anomalies, we propose an adaptive noise generator and a multi-scale fusion module for the pre-trained features. MAAE delivers remarkable performances on the benchmark dataset compared with the state-of-the-art methods.

+

+

+

+ 29. 标题:eWand: A calibration framework for wide baseline frame-based and event-based camera systems

+ 编号:[134]

+ 链接:https://arxiv.org/abs/2309.12685

+ 作者:Thomas Gossard, Andreas Ziegler, Levin Kolmar, Jonas Tebbe, Andreas Zell

+ 备注:

+ 关键词:objects precisely, triangulate the position, position of objects, cameras, pattern

+

+ 点击查看摘要

+ Accurate calibration is crucial for using multiple cameras to triangulate the position of objects precisely. However, it is also a time-consuming process that needs to be repeated for every displacement of the cameras. The standard approach is to use a printed pattern with known geometry to estimate the intrinsic and extrinsic parameters of the cameras. The same idea can be applied to event-based cameras, though it requires extra work. By using frame reconstruction from events, a printed pattern can be detected. A blinking pattern can also be displayed on a screen. Then, the pattern can be directly detected from the events. Such calibration methods can provide accurate intrinsic calibration for both frame- and event-based cameras. However, using 2D patterns has several limitations for multi-camera extrinsic calibration, with cameras possessing highly different points of view and a wide baseline. The 2D pattern can only be detected from one direction and needs to be of significant size to compensate for its distance to the camera. This makes the extrinsic calibration time-consuming and cumbersome. To overcome these limitations, we propose eWand, a new method that uses blinking LEDs inside opaque spheres instead of a printed or displayed pattern. Our method provides a faster, easier-to-use extrinsic calibration approach that maintains high accuracy for both event- and frame-based cameras.

+

+

+

+ 30. 标题:Vision Transformers for Computer Go

+ 编号:[139]

+ 链接:https://arxiv.org/abs/2309.12675

+ 作者:Amani Sagri, Tristan Cazenave, Jérôme Arjonilla, Abdallah Saffidine

+ 备注:

+ 关键词:language understanding, understanding and image, investigation explores, explores their application, image analysis

+

+ 点击查看摘要

+ Motivated by the success of transformers in various fields, such as language understanding and image analysis, this investigation explores their application in the context of the game of Go. In particular, our study focuses on the analysis of the Transformer in Vision. Through a detailed analysis of numerous points such as prediction accuracy, win rates, memory, speed, size, or even learning rate, we have been able to highlight the substantial role that transformers can play in the game of Go. This study was carried out by comparing them to the usual Residual Networks.

+

+

+

+ 31. 标题:On Sparse Modern Hopfield Model

+ 编号:[140]

+ 链接:https://arxiv.org/abs/2309.12673

+ 作者:Jerry Yao-Chieh Hu, Donglin Yang, Dennis Wu, Chenwei Xu, Bo-Yu Chen, Han Liu

+ 备注:37 pages, accepted to NeurIPS 2023

+ 关键词:sparse modern Hopfield, modern Hopfield model, modern Hopfield, Hopfield model, sparse Hopfield model

+

+ 点击查看摘要

+ We introduce the sparse modern Hopfield model as a sparse extension of the modern Hopfield model. Like its dense counterpart, the sparse modern Hopfield model equips a memory-retrieval dynamics whose one-step approximation corresponds to the sparse attention mechanism. Theoretically, our key contribution is a principled derivation of a closed-form sparse Hopfield energy using the convex conjugate of the sparse entropic regularizer. Building upon this, we derive the sparse memory retrieval dynamics from the sparse energy function and show its one-step approximation is equivalent to the sparse-structured attention. Importantly, we provide a sparsity-dependent memory retrieval error bound which is provably tighter than its dense analog. The conditions for the benefits of sparsity to arise are therefore identified and discussed. In addition, we show that the sparse modern Hopfield model maintains the robust theoretical properties of its dense counterpart, including rapid fixed point convergence and exponential memory capacity. Empirically, we use both synthetic and real-world datasets to demonstrate that the sparse Hopfield model outperforms its dense counterpart in many situations.

+

+

+

+ 32. 标题:Exploiting Modality-Specific Features For Multi-Modal Manipulation Detection And Grounding

+ 编号:[150]

+ 链接:https://arxiv.org/abs/2309.12657

+ 作者:Jiazhen Wang, Bin Liu, Changtao Miao, Zhiwei Zhao, Wanyi Zhuang, Qi Chu, Nenghai Yu

+ 备注:This work has been submitted to the IEEE for possible publication. Copyright may be transferred without notice, after which this version may no longer be accessible

+ 关键词:gained significant attention, numerous negative impacts, multi-modal manipulation detection, AI-synthesized text, significant attention

+

+ 点击查看摘要

+ AI-synthesized text and images have gained significant attention, particularly due to the widespread dissemination of multi-modal manipulations on the internet, which has resulted in numerous negative impacts on society. Existing methods for multi-modal manipulation detection and grounding primarily focus on fusing vision-language features to make predictions, while overlooking the importance of modality-specific features, leading to sub-optimal results. In this paper, we construct a simple and novel transformer-based framework for multi-modal manipulation detection and grounding tasks. Our framework simultaneously explores modality-specific features while preserving the capability for multi-modal alignment. To achieve this, we introduce visual/language pre-trained encoders and dual-branch cross-attention (DCA) to extract and fuse modality-unique features. Furthermore, we design decoupled fine-grained classifiers (DFC) to enhance modality-specific feature mining and mitigate modality competition. Moreover, we propose an implicit manipulation query (IMQ) that adaptively aggregates global contextual cues within each modality using learnable queries, thereby improving the discovery of forged details. Extensive experiments on the $\rm DGM^4$ dataset demonstrate the superior performance of our proposed model compared to state-of-the-art approaches.

+

+

+

+ 33. 标题:FP-PET: Large Model, Multiple Loss And Focused Practice

+ 编号:[152]

+ 链接:https://arxiv.org/abs/2309.12650

+ 作者:Yixin Chen, Ourui Fu, Wenrui Shao, Zhaoheng Xie

+ 备注:

+ 关键词:study presents FP-PET, presents FP-PET, PET images, comprehensive approach, medical image segmentation

+

+ 点击查看摘要

+ This study presents FP-PET, a comprehensive approach to medical image segmentation with a focus on CT and PET images. Utilizing a dataset from the AutoPet2023 Challenge, the research employs a variety of machine learning models, including STUNet-large, SwinUNETR, and VNet, to achieve state-of-the-art segmentation performance. The paper introduces an aggregated score that combines multiple evaluation metrics such as Dice score, false positive volume (FPV), and false negative volume (FNV) to provide a holistic measure of model effectiveness. The study also discusses the computational challenges and solutions related to model training, which was conducted on high-performance GPUs. Preprocessing and postprocessing techniques, including gaussian weighting schemes and morphological operations, are explored to further refine the segmentation output. The research offers valuable insights into the challenges and solutions for advanced medical image segmentation.

+

+

+

+ 34. 标题:RHINO: Regularizing the Hash-based Implicit Neural Representation

+ 编号:[156]

+ 链接:https://arxiv.org/abs/2309.12642

+ 作者:Hao Zhu, Fengyi Liu, Qi Zhang, Xun Cao, Zhan Ma

+ 备注:17 pages, 11 figures

+ 关键词:characterizing intricate signals, demonstrated impressive effectiveness, Implicit Neural Representation, Implicit Neural, intricate signals

+

+ 点击查看摘要

+ The use of Implicit Neural Representation (INR) through a hash-table has demonstrated impressive effectiveness and efficiency in characterizing intricate signals. However, current state-of-the-art methods exhibit insufficient regularization, often yielding unreliable and noisy results during interpolations. We find that this issue stems from broken gradient flow between input coordinates and indexed hash-keys, where the chain rule attempts to model discrete hash-keys, rather than the continuous coordinates. To tackle this concern, we introduce RHINO, in which a continuous analytical function is incorporated to facilitate regularization by connecting the input coordinate and the network additionally without modifying the architecture of current hash-based INRs. This connection ensures a seamless backpropagation of gradients from the network's output back to the input coordinates, thereby enhancing regularization. Our experimental results not only showcase the broadened regularization capability across different hash-based INRs like DINER and Instant NGP, but also across a variety of tasks such as image fitting, representation of signed distance functions, and optimization of 5D static / 6D dynamic neural radiance fields. Notably, RHINO outperforms current state-of-the-art techniques in both quality and speed, affirming its superiority.

+

+

+

+ 35. 标题:Global Context Aggregation Network for Lightweight Saliency Detection of Surface Defects

+ 编号:[157]

+ 链接:https://arxiv.org/abs/2309.12641

+ 作者:Feng Yan, Xiaoheng Jiang, Yang Lu, Lisha Cui, Shupan Li, Jiale Cao, Mingliang Xu, Dacheng Tao

+ 备注:

+ 关键词:show weak appearances, Surface defect inspection, challenging task, weak appearances, appearances or exist

+

+ 点击查看摘要

+ Surface defect inspection is a very challenging task in which surface defects usually show weak appearances or exist under complex backgrounds. Most high-accuracy defect detection methods require expensive computation and storage overhead, making them less practical in some resource-constrained defect detection applications. Although some lightweight methods have achieved real-time inference speed with fewer parameters, they show poor detection accuracy in complex defect scenarios. To this end, we develop a Global Context Aggregation Network (GCANet) for lightweight saliency detection of surface defects on the encoder-decoder structure. First, we introduce a novel transformer encoder on the top layer of the lightweight backbone, which captures global context information through a novel Depth-wise Self-Attention (DSA) module. The proposed DSA performs element-wise similarity in channel dimension while maintaining linear complexity. In addition, we introduce a novel Channel Reference Attention (CRA) module before each decoder block to strengthen the representation of multi-level features in the bottom-up path. The proposed CRA exploits the channel correlation between features at different layers to adaptively enhance feature representation. The experimental results on three public defect datasets demonstrate that the proposed network achieves a better trade-off between accuracy and running efficiency compared with other 17 state-of-the-art methods. Specifically, GCANet achieves competitive accuracy (91.79% $F_{\beta}^{w}$, 93.55% $S_\alpha$, and 97.35% $E_\phi$) on SD-saliency-900 while running 272fps on a single gpu.

+

+

+

+ 36. 标题:CINFormer: Transformer network with multi-stage CNN feature injection for surface defect segmentation

+ 编号:[159]

+ 链接:https://arxiv.org/abs/2309.12639

+ 作者:Xiaoheng Jiang, Kaiyi Guo, Yang Lu, Feng Yan, Hao Liu, Jiale Cao, Mingliang Xu, Dacheng Tao

+ 备注:

+ 关键词:Convolutional Neural Network, manufacture and production, Surface defect inspection, great importance, importance for industrial

+

+ 点击查看摘要

+ Surface defect inspection is of great importance for industrial manufacture and production. Though defect inspection methods based on deep learning have made significant progress, there are still some challenges for these methods, such as indistinguishable weak defects and defect-like interference in the background. To address these issues, we propose a transformer network with multi-stage CNN (Convolutional Neural Network) feature injection for surface defect segmentation, which is a UNet-like structure named CINFormer. CINFormer presents a simple yet effective feature integration mechanism that injects the multi-level CNN features of the input image into different stages of the transformer network in the encoder. This can maintain the merit of CNN capturing detailed features and that of transformer depressing noises in the background, which facilitates accurate defect detection. In addition, CINFormer presents a Top-K self-attention module to focus on tokens with more important information about the defects, so as to further reduce the impact of the redundant background. Extensive experiments conducted on the surface defect datasets DAGM 2007, Magnetic tile, and NEU show that the proposed CINFormer achieves state-of-the-art performance in defect detection.

+

+

+

+ 37. 标题:Learning Actions and Control of Focus of Attention with a Log-Polar-like Sensor

+ 编号:[161]

+ 链接:https://arxiv.org/abs/2309.12634

+ 作者:Robin Göransson, Volker Krueger

+ 备注:

+ 关键词:autonomous mobile robot, image processing time, gaze control, Atari games, long-term goal

+

+ 点击查看摘要

+ With the long-term goal of reducing the image processing time on an autonomous mobile robot in mind we explore in this paper the use of log-polar like image data with gaze control. The gaze control is not done on the Cartesian image but on the log-polar like image data. For this we start out from the classic deep reinforcement learning approach for Atari games. We extend an A3C deep RL approach with an LSTM network, and we learn the policy for playing three Atari games and a policy for gaze control. While the Atari games already use low-resolution images of 80 by 80 pixels, we are able to further reduce the amount of image pixels by a factor of 5 without losing any gaming performance.

+

+

+

+ 38. 标题:Decision Fusion Network with Perception Fine-tuning for Defect Classification

+ 编号:[164]

+ 链接:https://arxiv.org/abs/2309.12630

+ 作者:Xiaoheng Jiang, Shilong Tian, Zhiwen Zhu, Yang Lu, Hao Liu, Li Chen, Shupan Li, Mingliang Xu

+ 备注:

+ 关键词:Surface defect inspection, industrial inspection, important task, task in industrial, decision

+

+ 点击查看摘要

+ Surface defect inspection is an important task in industrial inspection. Deep learning-based methods have demonstrated promising performance in this domain. Nevertheless, these methods still suffer from misjudgment when encountering challenges such as low-contrast defects and complex backgrounds. To overcome these issues, we present a decision fusion network (DFNet) that incorporates the semantic decision with the feature decision to strengthen the decision ability of the network. In particular, we introduce a decision fusion module (DFM) that extracts a semantic vector from the semantic decision branch and a feature vector for the feature decision branch and fuses them to make the final classification decision. In addition, we propose a perception fine-tuning module (PFM) that fine-tunes the foreground and background during the segmentation stage. PFM generates the semantic and feature outputs that are sent to the classification decision stage. Furthermore, we present an inner-outer separation weight matrix to address the impact of label edge uncertainty during segmentation supervision. Our experimental results on the publicly available datasets including KolektorSDD2 (96.1% AP) and Magnetic-tile-defect-datasets (94.6% mAP) demonstrate the effectiveness of the proposed method.

+

+

+

+ 39. 标题:DeFormer: Integrating Transformers with Deformable Models for 3D Shape Abstraction from a Single Image

+ 编号:[184]

+ 链接:https://arxiv.org/abs/2309.12594

+ 作者:Di Liu, Xiang Yu, Meng Ye, Qilong Zhangli, Zhuowei Li, Zhixing Zhang, Dimitris N. Metaxas

+ 备注:Accepted by ICCV 2023

+ 关键词:vision and graphics, long-standing problem, problem in computer, computer vision, shape abstraction

+

+ 点击查看摘要

+ Accurate 3D shape abstraction from a single 2D image is a long-standing problem in computer vision and graphics. By leveraging a set of primitives to represent the target shape, recent methods have achieved promising results. However, these methods either use a relatively large number of primitives or lack geometric flexibility due to the limited expressibility of the primitives. In this paper, we propose a novel bi-channel Transformer architecture, integrated with parameterized deformable models, termed DeFormer, to simultaneously estimate the global and local deformations of primitives. In this way, DeFormer can abstract complex object shapes while using a small number of primitives which offer a broader geometry coverage and finer details. Then, we introduce a force-driven dynamic fitting and a cycle-consistent re-projection loss to optimize the primitive parameters. Extensive experiments on ShapeNet across various settings show that DeFormer achieves better reconstruction accuracy over the state-of-the-art, and visualizes with consistent semantic correspondences for improved interpretability.

+

+

+

+ 40. 标题:Improving Machine Learning Robustness via Adversarial Training

+ 编号:[185]

+ 链接:https://arxiv.org/abs/2309.12593

+ 作者:Long Dang, Thushari Hapuarachchi, Kaiqi Xiong, Jing Lin

+ 备注:

+ 关键词:potential worst-case noises, highly unusual situations, Machine Learning, IID data case, real-world applications

+

+ 点击查看摘要

+ As Machine Learning (ML) is increasingly used in solving various tasks in real-world applications, it is crucial to ensure that ML algorithms are robust to any potential worst-case noises, adversarial attacks, and highly unusual situations when they are designed. Studying ML robustness will significantly help in the design of ML algorithms. In this paper, we investigate ML robustness using adversarial training in centralized and decentralized environments, where ML training and testing are conducted in one or multiple computers. In the centralized environment, we achieve a test accuracy of 65.41% and 83.0% when classifying adversarial examples generated by Fast Gradient Sign Method and DeepFool, respectively. Comparing to existing studies, these results demonstrate an improvement of 18.41% for FGSM and 47% for DeepFool. In the decentralized environment, we study Federated learning (FL) robustness by using adversarial training with independent and identically distributed (IID) and non-IID data, respectively, where CIFAR-10 is used in this research. In the IID data case, our experimental results demonstrate that we can achieve such a robust accuracy that it is comparable to the one obtained in the centralized environment. Moreover, in the non-IID data case, the natural accuracy drops from 66.23% to 57.82%, and the robust accuracy decreases by 25% and 23.4% in C&W and Projected Gradient Descent (PGD) attacks, compared to the IID data case, respectively. We further propose an IID data-sharing approach, which allows for increasing the natural accuracy to 85.04% and the robust accuracy from 57% to 72% in C&W attacks and from 59% to 67% in PGD attacks.

+

+

+

+ 41. 标题:BGF-YOLO: Enhanced YOLOv8 with Multiscale Attentional Feature Fusion for Brain Tumor Detection

+ 编号:[189]

+ 链接:https://arxiv.org/abs/2309.12585

+ 作者:Ming Kang, Chee-Ming Ting, Fung Fung Ting, Raphaël C.-W. Phan

+ 备注:

+ 关键词:based object detectors, Bi-level Routing Attention, shown remarkable accuracy, incorporating Bi-level Routing, based object

+

+ 点击查看摘要

+ You Only Look Once (YOLO)-based object detectors have shown remarkable accuracy for automated brain tumor detection. In this paper, we develop a novel BGFG-YOLO architecture by incorporating Bi-level Routing Attention (BRA), Generalized feature pyramid networks (GFPN), Forth detecting head, and Generalized-IoU (GIoU) bounding box regression loss into YOLOv8. BGFG-YOLO contains an attention mechanism to focus more on important features, and feature pyramid networks to enrich feature representation by merging high-level semantic features with spatial details. Furthermore, we investigate the effect of different attention mechanisms and feature fusions, detection head architectures on brain tumor detection accuracy. Experimental results show that BGFG-YOLO gives a 3.4% absolute increase of mAP50 compared to YOLOv8x, and achieves state-of-the-art on the brain tumor detection dataset Br35H. The code is available at this https URL.

+

+

+

+ 42. 标题:Classification of Alzheimers Disease with Deep Learning on Eye-tracking Data

+ 编号:[194]

+ 链接:https://arxiv.org/abs/2309.12574

+ 作者:Harshinee Sriram, Cristina Conati, Thalia Field

+ 备注:ICMI 2023 long paper

+ 关键词:classifying Alzheimers Disease, Alzheimers Disease, task-specific engineered features, classifying Alzheimers, engineered features

+

+ 点击查看摘要

+ Existing research has shown the potential of classifying Alzheimers Disease (AD) from eye-tracking (ET) data with classifiers that rely on task-specific engineered features. In this paper, we investigate whether we can improve on existing results by using a Deep-Learning classifier trained end-to-end on raw ET data. This classifier (VTNet) uses a GRU and a CNN in parallel to leverage both visual (V) and temporal (T) representations of ET data and was previously used to detect user confusion while processing visual displays. A main challenge in applying VTNet to our target AD classification task is that the available ET data sequences are much longer than those used in the previous confusion detection task, pushing the limits of what is manageable by LSTM-based models. We discuss how we address this challenge and show that VTNet outperforms the state-of-the-art approaches in AD classification, providing encouraging evidence on the generality of this model to make predictions from ET data.

+

+

+

+ 43. 标题:Invariant Learning via Probability of Sufficient and Necessary Causes

+ 编号:[202]

+ 链接:https://arxiv.org/abs/2309.12559

+ 作者:Mengyue Yang, Zhen Fang, Yonggang Zhang, Yali Du, Furui Liu, Jean-Francois Ton, Jun Wang

+ 备注:

+ 关键词:testing distribution typically, distribution typically unknown, achieving OOD generalization, OOD generalization, indispensable for learning

+

+ 点击查看摘要

+ Out-of-distribution (OOD) generalization is indispensable for learning models in the wild, where testing distribution typically unknown and different from the training. Recent methods derived from causality have shown great potential in achieving OOD generalization. However, existing methods mainly focus on the invariance property of causes, while largely overlooking the property of \textit{sufficiency} and \textit{necessity} conditions. Namely, a necessary but insufficient cause (feature) is invariant to distribution shift, yet it may not have required accuracy. By contrast, a sufficient yet unnecessary cause (feature) tends to fit specific data well but may have a risk of adapting to a new domain. To capture the information of sufficient and necessary causes, we employ a classical concept, the probability of sufficiency and necessary causes (PNS), which indicates the probability of whether one is the necessary and sufficient cause. To associate PNS with OOD generalization, we propose PNS risk and formulate an algorithm to learn representation with a high PNS value. We theoretically analyze and prove the generalizability of the PNS risk. Experiments on both synthetic and real-world benchmarks demonstrate the effectiveness of the proposed method. The details of the implementation can be found at the GitHub repository: this https URL.

+

+

+

+ 44. 标题:Triple-View Knowledge Distillation for Semi-Supervised Semantic Segmentation

+ 编号:[203]

+ 链接:https://arxiv.org/abs/2309.12557

+ 作者:Ping Li, Junjie Chen, Li Yuan, Xianghua Xu, Mingli Song

+ 备注:

+ 关键词:expensive human labeling, pixel-level label map, labeled images, unlabeled images, semantic segmentation employs

+

+ 点击查看摘要

+ To alleviate the expensive human labeling, semi-supervised semantic segmentation employs a few labeled images and an abundant of unlabeled images to predict the pixel-level label map with the same size. Previous methods often adopt co-training using two convolutional networks with the same architecture but different initialization, which fails to capture the sufficiently diverse features. This motivates us to use tri-training and develop the triple-view encoder to utilize the encoders with different architectures to derive diverse features, and exploit the knowledge distillation skill to learn the complementary semantics among these encoders. Moreover, existing methods simply concatenate the features from both encoder and decoder, resulting in redundant features that require large memory cost. This inspires us to devise a dual-frequency decoder that selects those important features by projecting the features from the spatial domain to the frequency domain, where the dual-frequency channel attention mechanism is introduced to model the feature importance. Therefore, we propose a Triple-view Knowledge Distillation framework, termed TriKD, for semi-supervised semantic segmentation, including the triple-view encoder and the dual-frequency decoder. Extensive experiments were conducted on two benchmarks, \ie, Pascal VOC 2012 and Cityscapes, whose results verify the superiority of the proposed method with a good tradeoff between precision and inference speed.

+

+

+

+ 45. 标题:A Sentence Speaks a Thousand Images: Domain Generalization through Distilling CLIP with Language Guidance

+ 编号:[214]

+ 链接:https://arxiv.org/abs/2309.12530

+ 作者:Zeyi Huang, Andy Zhou, Zijian Lin, Mu Cai, Haohan Wang, Yong Jae Lee

+ 备注:to appear at ICCV2023

+ 关键词:Domain generalization studies, Domain generalization, studies the problem, CLIP teacher model, Domain

+

+ 点击查看摘要

+ Domain generalization studies the problem of training a model with samples from several domains (or distributions) and then testing the model with samples from a new, unseen domain. In this paper, we propose a novel approach for domain generalization that leverages recent advances in large vision-language models, specifically a CLIP teacher model, to train a smaller model that generalizes to unseen domains. The key technical contribution is a new type of regularization that requires the student's learned image representations to be close to the teacher's learned text representations obtained from encoding the corresponding text descriptions of images. We introduce two designs of the loss function, absolute and relative distance, which provide specific guidance on how the training process of the student model should be regularized. We evaluate our proposed method, dubbed RISE (Regularized Invariance with Semantic Embeddings), on various benchmark datasets and show that it outperforms several state-of-the-art domain generalization methods. To our knowledge, our work is the first to leverage knowledge distillation using a large vision-language model for domain generalization. By incorporating text-based information, RISE improves the generalization capability of machine learning models.

+

+

+

+ 46. 标题:License Plate Super-Resolution Using Diffusion Models

+ 编号:[222]

+ 链接:https://arxiv.org/abs/2309.12506

+ 作者:Sawsan AlHalawani, Bilel Benjdira, Adel Ammar, Anis Koubaa, Anas M. Ali

+ 备注:

+ 关键词:compromising recognition precision, Convolutional Neural Networks, Generative Adversarial Networks, accurately recognizing license, recognizing license plates

+

+ 点击查看摘要