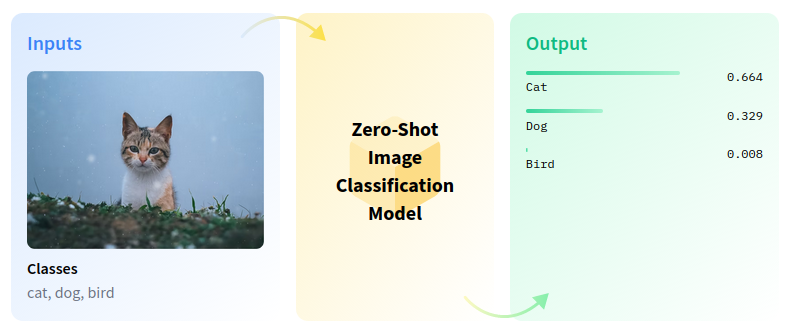

Zero-shot image classification is a computer vision task in which a model assigns an image to one of several classes without having been trained on, or otherwise given prior knowledge of, those classes.

In this tutorial, you will use the SigLIP model to perform zero-shot image classification.
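To make the idea concrete, here is a minimal sketch of how a CLIP-style model can classify without class-specific training: the image and each candidate label text are mapped to embeddings, and the label whose embedding is most similar to the image embedding wins. The 3-dimensional vectors and the helper names `cosine` and `zero_shot_classify` are invented for illustration; a real model such as SigLIP produces the embeddings itself and may score them differently.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_classify(image_embedding, label_embeddings):
    """Return the best-matching label and the similarity score of every label."""
    scores = {
        label: cosine(image_embedding, embedding)
        for label, embedding in label_embeddings.items()
    }
    return max(scores, key=scores.get), scores


# Toy embeddings, made up for this example only (not produced by any model).
label_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

best_label, scores = zero_shot_classify(image_embedding, label_embeddings)
print(best_label)
```

Because the candidate labels are plain text supplied at inference time, the label set can be changed without retraining anything.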

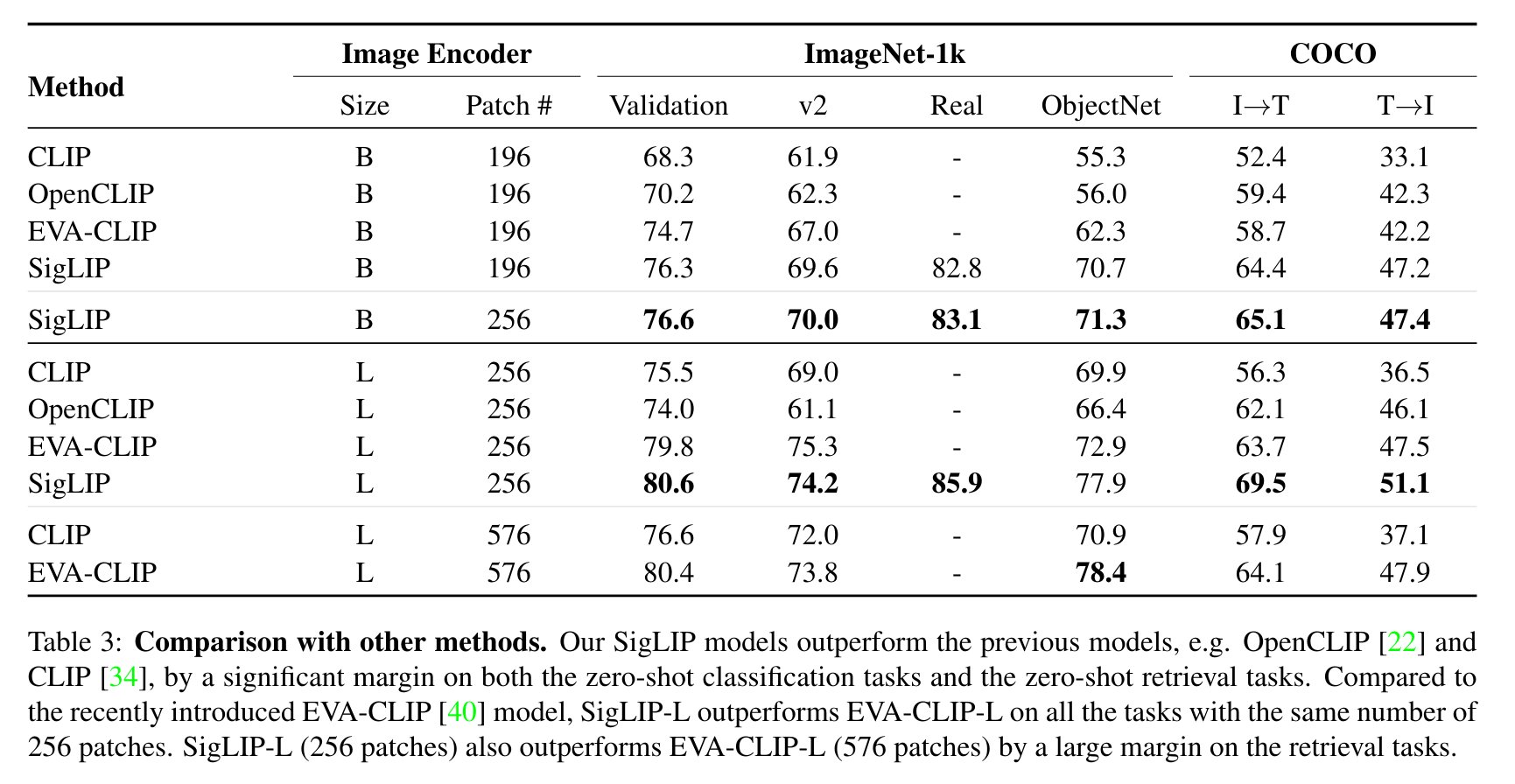

SigLIP is an open-source model proposed in the paper "Sigmoid Loss for Language Image Pre-Training". It replaces the softmax-based contrastive loss used in CLIP (Contrastive Language–Image Pre-training) with a simple pairwise sigmoid loss, which improves zero-shot classification accuracy on ImageNet.
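The pairwise sigmoid loss can be sketched in a few lines. Each image-text pair in a batch is treated as an independent binary decision: matching pairs (the diagonal) are positives, and every other pair is a negative. This is a plain-Python illustration rather than the authors' implementation. It assumes the embeddings are already L2-normalized, and the default `temperature` and `bias` values are assumptions based on the initialization described in the paper; in training both are learnable.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def pairwise_sigmoid_loss(image_embs, text_embs, temperature=10.0, bias=-10.0):
    """Average over images of the summed binary log-losses for all image-text pairs.

    image_embs[i] and text_embs[i] are assumed to form a matching pair.
    """
    total = 0.0
    for i, image in enumerate(image_embs):
        for j, text in enumerate(text_embs):
            similarity = sum(a * b for a, b in zip(image, text))
            logit = temperature * similarity + bias
            sign = 1.0 if i == j else -1.0  # +1 for matching pairs, -1 otherwise
            total += log_sigmoid(sign * logit)
    return -total / len(image_embs)


# Toy unit-length embeddings, made up for this example only.
images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]

aligned_loss = pairwise_sigmoid_loss(images, aligned_texts)
swapped_loss = pairwise_sigmoid_loss(images, swapped_texts)
print(aligned_loss < swapped_loss)
```

Unlike a softmax-based loss, no normalization across the batch is needed, since every pair contributes its own term.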

You can find more information about this model in the research paper, the GitHub repository, and the Hugging Face model page.

The notebook contains the following steps:

- Instantiate model.

- Run PyTorch model inference.

- Convert the model to OpenVINO Intermediate Representation (IR) format.

- Run OpenVINO model.

- Apply post-training quantization using NNCF:

  - Prepare dataset.

  - Quantize model.

  - Run quantized OpenVINO model.

  - Compare file size.

  - Compare inference time of the FP16 IR and quantized models.
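The conversion, quantization, and comparison steps above could be sketched roughly as follows. This is an outline under stated assumptions, not the notebook's actual code: the helper names (`convert_to_ir`, `quantize_to_int8`, `ir_size_mb`, `mean_latency`) are hypothetical, the calibration items are assumed to be already in the form the model expects as input, and `openvino` and `nncf` are imported lazily so that the size and timing helpers work without them.

```python
import time
from pathlib import Path


def convert_to_ir(pt_model, example_inputs, xml_path):
    """Convert a PyTorch model to OpenVINO IR and save it to disk."""
    import openvino as ov  # lazy import: only needed for this step

    ov_model = ov.convert_model(pt_model, example_input=example_inputs)
    ov.save_model(ov_model, str(xml_path))  # weights are compressed to FP16 by default
    return ov_model


def quantize_to_int8(ov_model, calibration_items, xml_path):
    """Apply NNCF post-training quantization and save the quantized IR."""
    import nncf
    import openvino as ov

    quantized = nncf.quantize(
        ov_model,
        nncf.Dataset(calibration_items),
        model_type=nncf.ModelType.TRANSFORMER,
    )
    ov.save_model(quantized, str(xml_path))
    return quantized


def ir_size_mb(xml_path):
    """Size in MiB of an IR pair: the .xml topology plus the .bin weights beside it."""
    xml = Path(xml_path)
    total_bytes = xml.stat().st_size + xml.with_suffix(".bin").stat().st_size
    return total_bytes / 1024**2


def mean_latency(infer, inputs, warmup=1):
    """Average seconds per call of infer(item), after a few untimed warm-up calls."""
    for item in inputs[:warmup]:
        infer(item)
    start = time.perf_counter()
    for item in inputs:
        infer(item)
    return (time.perf_counter() - start) / len(inputs)
```

A compiled OpenVINO model is callable, so the object returned by `ov.Core().compile_model(...)` can be passed directly as `infer`. Running `ir_size_mb` and `mean_latency` on both the FP16 and the quantized model then gives the two comparisons listed above.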

The image below shows the SigLIP model's zero-shot image classification results from this notebook.

If you have not installed all required dependencies, follow the Installation Guide.