diff --git a/cookbook/example_external_evaluation_pipelines.ipynb b/cookbook/example_external_evaluation_pipelines.ipynb

index acf288b12..c6aabb5d7 100644

--- a/cookbook/example_external_evaluation_pipelines.ipynb

+++ b/cookbook/example_external_evaluation_pipelines.ipynb

@@ -41,7 +41,7 @@

" frameborder=\"0\"\n",

" allow=\"accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share\"\n",

" referrerpolicy=\"strict-origin-when-cross-origin\"\n",

- " allowfullscreen\n",

+ " allowFullScreen\n",

">"

]

},

diff --git a/cookbook/example_llm_security_monitoring.ipynb b/cookbook/example_llm_security_monitoring.ipynb

index 169cd2e15..b5de57c57 100644

--- a/cookbook/example_llm_security_monitoring.ipynb

+++ b/cookbook/example_llm_security_monitoring.ipynb

@@ -892,18 +892,18 @@

"provenance": []

},

"kernelspec": {

- "display_name": "Deno",

- "language": "typescript",

- "name": "deno"

+ "display_name": ".venv",

+ "language": "python",

+ "name": "python3"

},

"language_info": {

"codemirror_mode": "typescript",

"file_extension": ".ts",

"mimetype": "text/x.typescript",

- "name": "typescript",

+ "name": "python",

"nbconvert_exporter": "script",

"pygments_lexer": "typescript",

- "version": "5.4.5"

+ "version": "3.9.18"

},

"widgets": {

"application/vnd.jupyter.widget-state+json": {

diff --git a/cookbook/integration_langchain.ipynb b/cookbook/integration_langchain.ipynb

index cf091ea2f..9a51d53cc 100644

--- a/cookbook/integration_langchain.ipynb

+++ b/cookbook/integration_langchain.ipynb

@@ -1,722 +1,1011 @@

{

- "cells": [

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "KlceIPalN3QR"

- },

- "source": [

- "---\n",

- "description: Cookbook with examples of the Langfuse Integration for Langchain (Python).\n",

- "category: Integrations\n",

- "---"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "mqBspBzuRk9C"

- },

- "source": [

- "# Cookbook: Langchain Integration"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "x1oaA7XYGOfX"

- },

- "source": [

- "This is a cookbook with examples of the Langfuse Integration for Langchain (Python).\n",

- "\n",

- "Follow the [integration guide](https://langfuse.com/docs/integrations/langchain) to add this integration to your Langchain project. The integration also supports Langchain JS."

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "AbSpd5EiZouE"

- },

- "source": [

- "## Setup"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "YNyU6IzCZouE"

- },

- "outputs": [],

- "source": [

- "%pip install langfuse langchain langchain_openai --upgrade"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "OpE57ujJZouE"

- },

- "source": [

- "Initialize the Langfuse client with your API keys from the project settings in the Langfuse UI and add them to your environment."

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "dEdF-668ZouF"

- },

- "outputs": [],

- "source": [

- "import os\n",

- "\n",

- "# get keys for your project from https://cloud.langfuse.com\n",

- "os.environ[\"LANGFUSE_PUBLIC_KEY\"] = \"pk-lf-***\"\n",

- "os.environ[\"LANGFUSE_SECRET_KEY\"] = \"sk-lf-***\"\n",

- "os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # for EU data region\n",

- "# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # for US data region\n",

- "\n",

- "# your openai key\n",

- "os.environ[\"OPENAI_API_KEY\"] = \"***\""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "divRadPqZouF"

- },

- "outputs": [],

- "source": [

- "from langfuse.callback import CallbackHandler\n",

- "\n",

- "langfuse_handler = CallbackHandler()"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "8FVbg1RWoT8W"

- },

- "outputs": [],

- "source": [

- "# Tests the SDK connection with the server\n",

- "langfuse_handler.auth_check()"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {},

- "source": [

- "## Examples"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "QvRWPsZ-NoAr"

- },

- "source": [

- "### Sequential Chain in Langchain Expression Language (LCEL)\n",

- "\n",

- ""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "3-HEia6gNoAr"

- },

- "outputs": [],

- "source": [

- "from operator import itemgetter\n",

- "from langchain_openai import ChatOpenAI\n",

- "from langchain.prompts import ChatPromptTemplate\n",

- "from langchain.schema import StrOutputParser\n",

- "\n",

- "langfuse_handler = CallbackHandler()\n",

- "\n",

- "prompt1 = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

- "prompt2 = ChatPromptTemplate.from_template(\n",

- " \"what country is the city {city} in? respond in {language}\"\n",

- ")\n",

- "model = ChatOpenAI()\n",

- "chain1 = prompt1 | model | StrOutputParser()\n",

- "chain2 = (\n",

- " {\"city\": chain1, \"language\": itemgetter(\"language\")}\n",

- " | prompt2\n",

- " | model\n",

- " | StrOutputParser()\n",

- ")\n",

- "\n",

- "chain2.invoke({\"person\": \"obama\", \"language\": \"spanish\"}, config={\"callbacks\":[langfuse_handler]})"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {},

- "source": [

- "#### Runnable methods\n",

- "\n",

- "Runnables are units of work that can be invoked, batched, streamed, transformed and composed.\n",

- "\n",

- "The examples below show how to use the following methods with Langfuse:\n",

- "\n",

- "- invoke/ainvoke: Transforms a single input into an output.\n",

- "\n",

- "- batch/abatch: Efficiently transforms multiple inputs into outputs.\n",

- "\n",

- "- stream/astream: Streams output from a single input as it’s produced."

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {},

- "outputs": [],

- "source": [

- "# Async Invoke\n",

- "await chain2.ainvoke({\"person\": \"biden\", \"language\": \"german\"}, config={\"callbacks\":[langfuse_handler]})\n",

- "\n",

- "# Batch\n",

- "chain2.batch([{\"person\": \"elon musk\", \"language\": \"english\"}, {\"person\": \"mark zuckerberg\", \"language\": \"english\"}], config={\"callbacks\":[langfuse_handler]})\n",

- "\n",

- "# Async Batch\n",

- "await chain2.abatch([{\"person\": \"jeff bezos\", \"language\": \"english\"}, {\"person\": \"tim cook\", \"language\": \"english\"}], config={\"callbacks\":[langfuse_handler]})\n",

- "\n",

- "# Stream\n",

- "for chunk in chain2.stream({\"person\": \"steve jobs\", \"language\": \"english\"}, config={\"callbacks\":[langfuse_handler]}):\n",

- " print(\"Streaming chunk:\", chunk)\n",

- "\n",

- "# Async Stream\n",

- "async for chunk in chain2.astream({\"person\": \"bill gates\", \"language\": \"english\"}, config={\"callbacks\":[langfuse_handler]}):\n",

- " print(\"Async Streaming chunk:\", chunk)\n"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "5v6TUIrVGkn3"

- },

- "source": [

- "### ConversationChain\n",

- "\n",

- "We'll use a [session](https://langfuse.com/docs/tracing-features/sessions) in Langfuse to track this conversation with each invocation being a single trace.\n",

- "\n",

- "In addition to the traces of each run, you also get a conversation view of the entire session:\n",

- "\n",

- ""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "8HXyXC2LGga6"

- },

- "outputs": [],

- "source": [

- "from langchain.chains import ConversationChain\n",

- "from langchain.memory import ConversationBufferMemory\n",

- "from langchain_openai import OpenAI\n",

- "\n",

- "llm = OpenAI(temperature=0)\n",

- "\n",

- "conversation = ConversationChain(\n",

- " llm=llm, memory=ConversationBufferMemory()\n",

- ")"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "EWRj0qvKHLNE"

- },

- "outputs": [],

- "source": [

- "# Create a callback handler with a session\n",

- "langfuse_handler = CallbackHandler(session_id=\"conversation_chain\")"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "aIHmNekVHItt"

- },

- "outputs": [],

- "source": [

- "conversation.predict(input=\"Hi there!\", callbacks=[langfuse_handler])"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "tsAunGSwHkrt"

- },

- "outputs": [],

- "source": [

- "conversation.predict(input=\"How to build great developer tools?\", callbacks=[langfuse_handler])"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "m8O6hShcHsGe"

- },

- "outputs": [],

- "source": [

- "conversation.predict(input=\"Summarize your last response\", callbacks=[langfuse_handler])"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "FP5avhNb3TBH"

- },

- "source": [

- "### RetrievalQA\n",

- "\n",

- ""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "wjiWEkRUFzCf"

- },

- "outputs": [],

- "source": [

- "import os\n",

- "os.environ[\"SERPAPI_API_KEY\"] = \"\""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "p0CgEPSlEpkC"

- },

- "outputs": [],

- "source": [

- "%pip install unstructured selenium langchain-chroma --upgrade"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "kHDVa-Ssb-KT"

- },

- "outputs": [],

- "source": [

- "from langchain_community.document_loaders import SeleniumURLLoader\n",

- "from langchain_chroma import Chroma\n",

- "from langchain_text_splitters import CharacterTextSplitter\n",

- "from langchain_openai import OpenAIEmbeddings\n",

- "from langchain.chains import RetrievalQA\n",

- "\n",

- "langfuse_handler = CallbackHandler()\n",

- "\n",

- "urls = [\n",

- " \"https://raw.githubusercontent.com/langfuse/langfuse-docs/main/public/state_of_the_union.txt\",\n",

- "]\n",

- "loader = SeleniumURLLoader(urls=urls)\n",

- "llm = OpenAI()\n",

- "documents = loader.load()\n",

- "text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)\n",

- "texts = text_splitter.split_documents(documents)\n",

- "embeddings = OpenAIEmbeddings()\n",

- "docsearch = Chroma.from_documents(texts, embeddings)\n",

- "query = \"What did the president say about Ketanji Brown Jackson\"\n",

- "chain = RetrievalQA.from_chain_type(\n",

- " llm,\n",

- " retriever=docsearch.as_retriever(search_kwargs={\"k\": 1}),\n",

- ")\n",

- "\n",

- "chain.invoke(query, config={\"callbacks\":[langfuse_handler]})"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "JCmI0I20-sbI"

- },

- "source": [

- "### Agent"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "ReaHdQOT-S3n"

- },

- "outputs": [],

- "source": [

- "from langchain.agents import AgentExecutor, load_tools, create_openai_functions_agent\n",

- "from langchain_openai import ChatOpenAI\n",

- "from langchain import hub\n",

- "\n",

- "langfuse_handler = CallbackHandler()\n",

- "\n",

- "llm = ChatOpenAI(model=\"gpt-3.5-turbo\", temperature=0)\n",

- "tools = load_tools([\"serpapi\"])\n",

- "prompt = hub.pull(\"hwchase17/openai-functions-agent\")\n",

- "agent = create_openai_functions_agent(llm, tools, prompt)\n",

- "agent_executor = AgentExecutor(agent=agent, tools=tools)\n",

- "\n",

- "agent_executor.invoke({\"input\": \"What is Langfuse?\"}, config={\"callbacks\":[langfuse_handler]})"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "OIxwkX9p1ZR7"

- },

- "source": [

- "### AzureOpenAI"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "b43rIMig1ZR7"

- },

- "outputs": [],

- "source": [

- "os.environ[\"AZURE_OPENAI_ENDPOINT\"] = \"

\"\n",

- "os.environ[\"AZURE_OPENAI_API_KEY\"] = \"\"\n",

- "os.environ[\"OPENAI_API_TYPE\"] = \"azure\"\n",

- "os.environ[\"OPENAI_API_VERSION\"] = \"2023-09-01-preview\""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "_lLdPwnr1ZR7"

- },

- "outputs": [],

- "source": [

- "from langchain_openai import AzureChatOpenAI\n",

- "from langchain.prompts import ChatPromptTemplate\n",

- "\n",

- "langfuse_handler = CallbackHandler()\n",

- "\n",

- "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

- "model = AzureChatOpenAI(\n",

- " deployment_name=\"gpt-35-turbo\",\n",

- " model_name=\"gpt-3.5-turbo\",\n",

- ")\n",

- "chain = prompt | model\n",

- "\n",

- "chain.invoke({\"person\": \"Satya Nadella\"}, config={\"callbacks\":[langfuse_handler]})"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "ZUenj0aca9qo"

- },

- "source": [

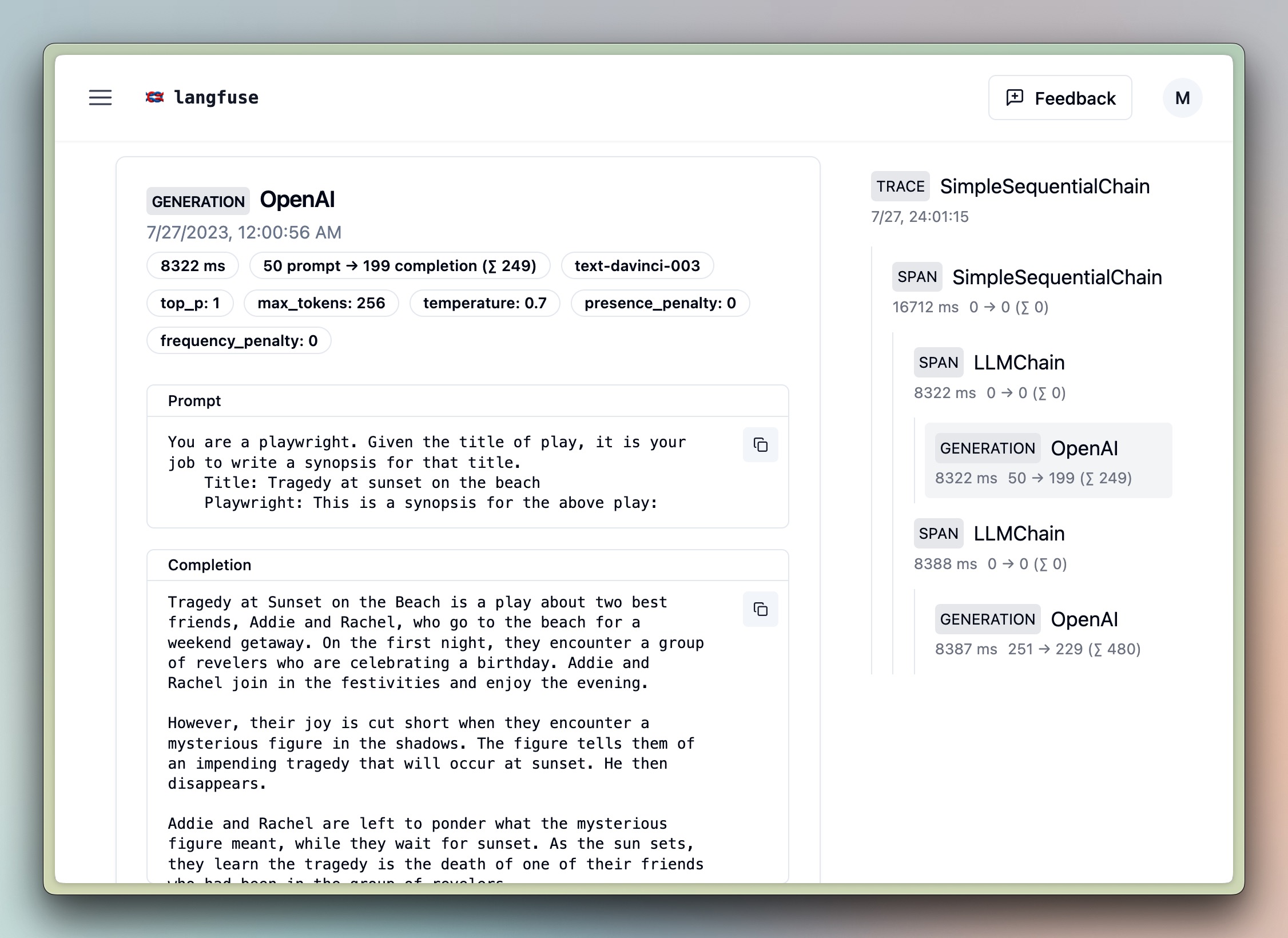

- "### Sequential Chain [Legacy]\n",

- "\n",

- ""

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "dTagwV_cbFVr"

- },

- "outputs": [],

- "source": [

- "# further imports\n",

- "from langchain_openai import OpenAI\n",

- "from langchain.chains import LLMChain, SimpleSequentialChain\n",

- "from langchain.prompts import PromptTemplate\n",

- "\n",

- "llm = OpenAI()\n",

- "template = \"\"\"You are a playwright. Given the title of play, it is your job to write a synopsis for that title.\n",

- " Title: {title}\n",

- " Playwright: This is a synopsis for the above play:\"\"\"\n",

- "prompt_template = PromptTemplate(input_variables=[\"title\"], template=template)\n",

- "synopsis_chain = LLMChain(llm=llm, prompt=prompt_template)\n",

- "template = \"\"\"You are a play critic from the New York Times. Given the synopsis of play, it is your job to write a review for that play.\n",

- " Play Synopsis:\n",

- " {synopsis}\n",

- " Review from a New York Times play critic of the above play:\"\"\"\n",

- "prompt_template = PromptTemplate(input_variables=[\"synopsis\"], template=template)\n",

- "review_chain = LLMChain(llm=llm, prompt=prompt_template)\n",

- "overall_chain = SimpleSequentialChain(\n",

- " chains=[synopsis_chain, review_chain],\n",

- ")\n",

- "\n",

- "# invoke\n",

- "review = overall_chain.invoke(\"Tragedy at sunset on the beach\", {\"callbacks\":[langfuse_handler]}) # add the handler to the run method\n",

- "# run [LEGACY]\n",

- "review = overall_chain.run(\"Tragedy at sunset on the beach\", callbacks=[langfuse_handler])# add the handler to the run method"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "AxQlUOmVPEwz"

- },

- "source": [

- "## Adding scores to traces\n",

- "\n",

- "In addition to the attributes automatically captured by the decorator, you can add others to use the full features of Langfuse.\n",

- "\n",

- "Two utility methods:\n",

- "* `langfuse_context.update_current_observation`: Update the trace/span of the current function scope\n",

- "* `langfuse_context.update_current_trace`: Update the trace itself, can also be called within any deeply nested span within the trace\n",

- "\n",

- "For details on available attributes, have a look at the [reference](https://python.reference.langfuse.com/langfuse/decorators#LangfuseDecorator.update_current_observation).\n",

- "\n",

- "Below is an example demonstrating how to enrich traces and observations with custom parameters:"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "PudCopwEPFgh"

- },

- "outputs": [],

- "source": [

- "from langfuse.decorators import langfuse_context, observe\n",

- " \n",

- "@observe(as_type=\"generation\")\n",

- "def deeply_nested_llm_call():\n",

- " # Enrich the current observation with a custom name, input, and output\n",

- " langfuse_context.update_current_observation(\n",

- " name=\"Deeply nested LLM call\", input=\"Ping?\", output=\"Pong!\"\n",

- " )\n",

- " # Set the parent trace's name from within a nested observation\n",

- " langfuse_context.update_current_trace(\n",

- " name=\"Trace name set from deeply_nested_llm_call\",\n",

- " session_id=\"1234\",\n",

- " user_id=\"5678\",\n",

- " tags=[\"tag1\", \"tag2\"],\n",

- " public=True\n",

- " )\n",

- " \n",

- "@observe()\n",

- "def nested_span():\n",

- " # Update the current span with a custom name and level\n",

- " langfuse_context.update_current_observation(name=\"Nested Span\", level=\"WARNING\")\n",

- " deeply_nested_llm_call()\n",

- " \n",

- "@observe()\n",

- "def main():\n",

- " nested_span()\n",

- " \n",

- "# Execute the main function to generate the enriched trace\n",

- "main()"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {},

- "source": [

- "On the Langfuse platform the trace now shows with the updated name from the `deeply_nested_llm_call`, and the observations will be enriched with the appropriate data points.\n",

- "\n",

- "**Example trace:** https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/f16e0151-cca8-4d90-bccf-1d9ea0958afb"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "GEWWS8PGo4A1"

- },

- "source": [

- "## Interoperability with Langfuse Python SDK\n",

- "\n",

- "You can use this integration in combination with the `observe()` decorator from the Langfuse Python SDK. Thereby, you can trace non-Langchain code, combine multiple Langchain invocations in a single trace, and use the full functionality of the Langfuse Python SDK.\n",

- "\n",

- "The `langfuse_context.get_current_langchain_handler()` method exposes a LangChain callback handler in the context of a trace or span when using `decorators`. Learn more about Langfuse Tracing [here](https://langfuse.com/docs/tracing) and this functionality [here](https://langfuse.com/docs/sdk/python/decorators#langchain).\n"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "q1zlFuIimJfT"

- },

- "source": [

- "### How it works"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "Op7qwM0Y-1bp"

- },

- "outputs": [],

- "source": [

- "from langfuse.decorators import langfuse_context, observe\n",

- "\n",

- "# Create a trace via Langfuse decorators and get a Langchain Callback handler for it\n",

- "@observe() # automtically log function as a trace to Langfuse\n",

- "def main():\n",

- " # update trace attributes (e.g, name, session_id, user_id)\n",

- " langfuse_context.update_current_trace(\n",

- " name=\"custom-trace\",\n",

- " session_id=\"user-1234\",\n",

- " user_id=\"session-1234\",\n",

- " )\n",

- " # get the langchain handler for the current trace\n",

- " langfuse_context.get_current_langchain_handler()\n",

- "\n",

- " # use the handler to trace langchain runs ...\n",

- "\n",

- "main()"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "HRX2zFCOmwXH"

- },

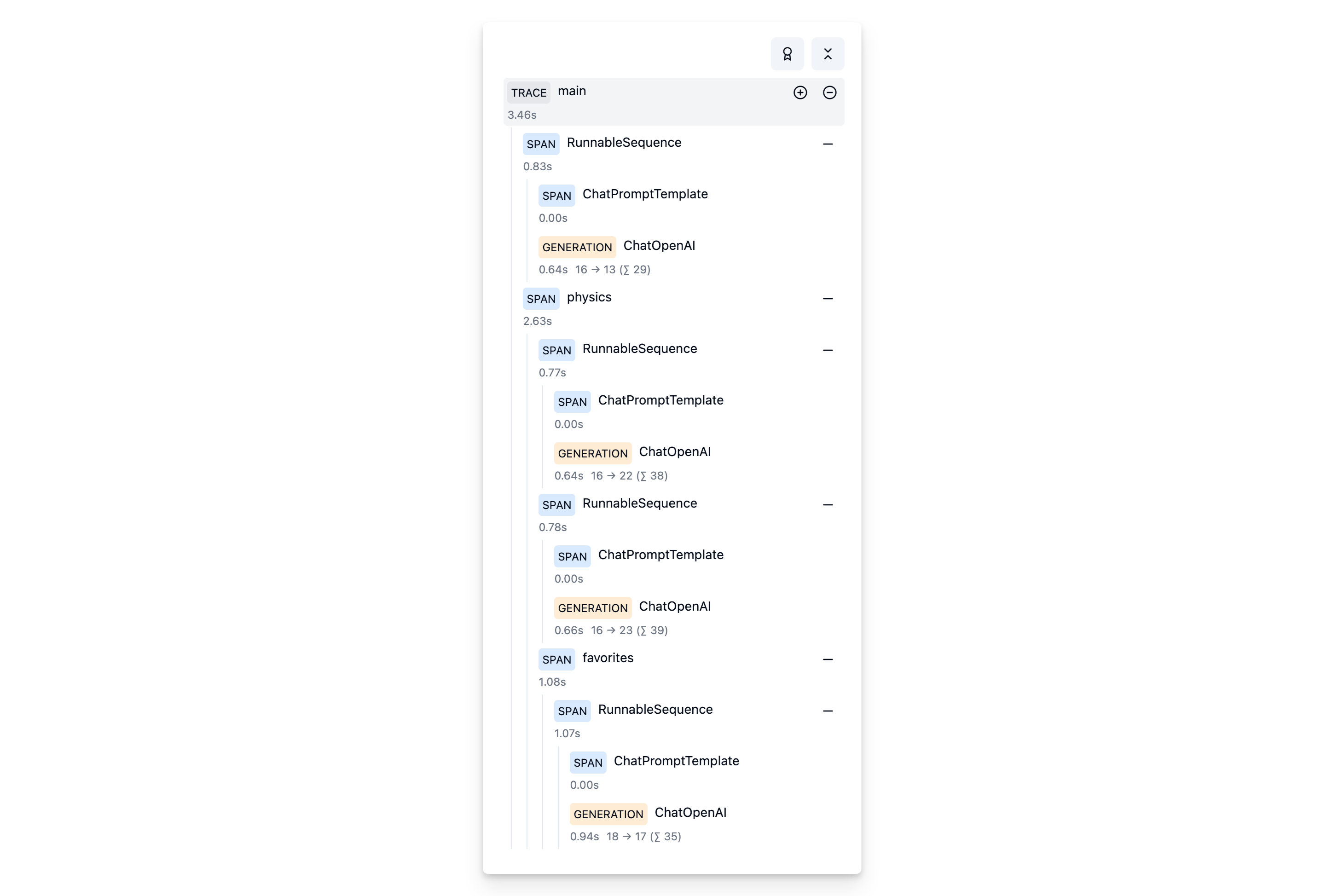

- "source": [

- "### Example\n",

- "\n",

- "We'll run the same chain multiple times at different places within the hierarchy of a trace.\n",

- "\n",

- "```\n",

- "TRACE: person-locator\n",

- "|\n",

- "|-- SPAN: Chain (Alan Turing)\n",

- "|\n",

- "|-- SPAN: Physics\n",

- "| |\n",

- "| |-- SPAN: Chain (Albert Einstein)\n",

- "| |\n",

- "| |-- SPAN: Chain (Isaac Newton)\n",

- "| |\n",

- "| |-- SPAN: Favorites\n",

- "| | |\n",

- "| | |-- SPAN: Chain (Richard Feynman)\n",

- "```\n",

- "\n",

- "Setup chain"

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "ASq5sHErkmLB"

- },

- "outputs": [],

- "source": [

- "from langchain_openai import ChatOpenAI\n",

- "from langchain.prompts import ChatPromptTemplate\n",

- "\n",

- "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

- "model = ChatOpenAI()\n",

- "\n",

- "chain = prompt | model"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "fvJ1pv4MqzTi"

- },

- "source": [

- "Invoke it multiple times as part of a nested trace."

- ]

- },

- {

- "cell_type": "code",

- "execution_count": null,

- "metadata": {

- "id": "CnHq-7QD3uAa"

- },

- "outputs": [],

- "source": [

- "from langfuse.decorators import langfuse_context, observe\n",

- "\n",

- "# On span \"Physics\".\"Favorites\"\n",

- "@observe() # decorator to automatically log function as sub-span to Langfuse\n",

- "def favorites():\n",

- " # get the langchain handler for the current sub-span\n",

- " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

- " # invoke chain with langfuse handler\n",

- " chain.invoke({\"person\": \"Richard Feynman\"},\n",

- " config={\"callbacks\": [langfuse_handler]})\n",

- "\n",

- "# On span \"Physics\"\n",

- "@observe() # decorator to automatically log function as span to Langfuse\n",

- "def physics():\n",

- " # get the langchain handler for the current span\n",

- " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

- " # invoke chains with langfuse handler\n",

- " chain.invoke({\"person\": \"Albert Einstein\"},\n",

- " config={\"callbacks\": [langfuse_handler]})\n",

- " chain.invoke({\"person\": \"Isaac Newton\"},\n",

- " config={\"callbacks\": [langfuse_handler]})\n",

- " favorites()\n",

- "\n",

- "# On trace\n",

- "@observe() # decorator to automatically log function as trace to Langfuse\n",

- "def main():\n",

- " # get the langchain handler for the current trace\n",

- " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

- " # invoke chain with langfuse handler\n",

- " chain.invoke({\"person\": \"Alan Turing\"},\n",

- " config={\"callbacks\": [langfuse_handler]})\n",

- " physics()\n",

- "\n",

- "main()"

- ]

- },

- {

- "cell_type": "markdown",

- "metadata": {

- "id": "MZ3q8iMDGOfd"

- },

- "source": [

- "View it in Langfuse\n",

- "\n",

- ""

- ]

- }

- ],

- "metadata": {

- "colab": {

- "provenance": []

- },

- "kernelspec": {

- "display_name": "Python 3 (ipykernel)",

- "language": "python",

- "name": "python3"

- },

- "language_info": {

- "codemirror_mode": {

- "name": "ipython",

- "version": 3

- },

- "file_extension": ".py",

- "mimetype": "text/x-python",

- "name": "python",

- "nbconvert_exporter": "python",

- "pygments_lexer": "ipython3",

- "version": "3.12.2"

- }

- },

- "nbformat": 4,

- "nbformat_minor": 4

+ "cells": [

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "KlceIPalN3QR"

+ },

+ "source": [

+ "---\n",

+ "description: Cookbook with examples of the Langfuse Integration for Langchain (Python).\n",

+ "category: Integrations\n",

+ "---"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "mqBspBzuRk9C"

+ },

+ "source": [

+ "# Cookbook: Langchain Integration"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "x1oaA7XYGOfX"

+ },

+ "source": [

+ "This is a cookbook with examples of the Langfuse Integration for Langchain (Python).\n",

+ "\n",

+ "Follow the [integration guide](https://langfuse.com/docs/integrations/langchain) to add this integration to your Langchain project. The integration also supports Langchain JS."

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "AbSpd5EiZouE"

+ },

+ "source": [

+ "## Setup"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "YNyU6IzCZouE",

+ "outputId": "234c71fb-f822-4b48-f4c0-94efe5f79305"

+ },

+ "outputs": [],

+ "source": [

+ "%pip install langfuse langchain langchain_openai langchain_community --upgrade"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "OpE57ujJZouE"

+ },

+ "source": [

+ "Initialize the Langfuse client with your API keys from the project settings in the Langfuse UI and add them to your environment."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "dEdF-668ZouF"

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "\n",

+ "# Get keys for your project from the project settings page\n",

+ "# https://cloud.langfuse.com\n",

+ "os.environ[\"LANGFUSE_PUBLIC_KEY\"] = \"\"\n",

+ "os.environ[\"LANGFUSE_SECRET_KEY\"] = \"\"\n",

+ "os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # 🇪🇺 EU region\n",

+ "# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # 🇺🇸 US region\n",

+ "\n",

+ "# Your openai key\n",

+ "os.environ[\"OPENAI_API_KEY\"] = \"\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "divRadPqZouF"

+ },

+ "outputs": [],

+ "source": [

+ "from langfuse.callback import CallbackHandler\n",

+ "\n",

+ "langfuse_handler = CallbackHandler()"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "8FVbg1RWoT8W",

+ "outputId": "00f24ad8-f8ec-4ee2-fd4b-0c117ee8c557"

+ },

+ "outputs": [],

+ "source": [

+ "# Tests the SDK connection with the server\n",

+ "langfuse_handler.auth_check()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "1ZXRf2FZXEXV"

+ },

+ "source": [

+ "## Examples"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "QvRWPsZ-NoAr"

+ },

+ "source": [

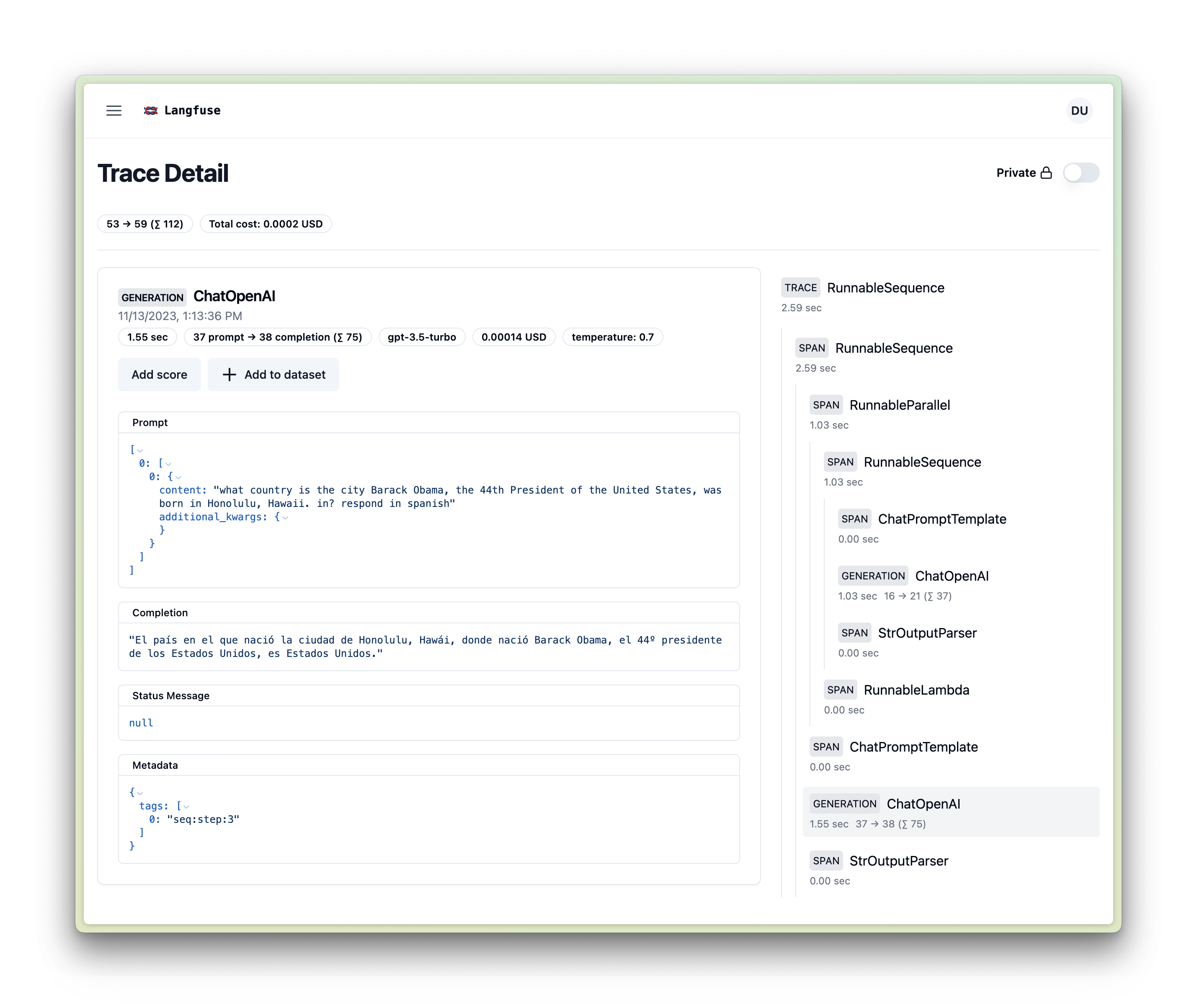

+ "### Sequential Chain in Langchain Expression Language (LCEL)\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 35

+ },

+ "id": "3-HEia6gNoAr",

+ "outputId": "ab223b99-4719-420c-ffe7-5444e4b67806"

+ },

+ "outputs": [],

+ "source": [

+ "from operator import itemgetter\n",

+ "from langchain_openai import ChatOpenAI\n",

+ "from langchain.prompts import ChatPromptTemplate\n",

+ "from langchain.schema import StrOutputParser\n",

+ "\n",

+ "langfuse_handler = CallbackHandler()\n",

+ "\n",

+ "prompt1 = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "prompt2 = ChatPromptTemplate.from_template(\n",

+ " \"what country is the city {city} in? respond in {language}\"\n",

+ ")\n",

+ "model = ChatOpenAI()\n",

+ "chain1 = prompt1 | model | StrOutputParser()\n",

+ "chain2 = (\n",

+ " {\"city\": chain1, \"language\": itemgetter(\"language\")}\n",

+ " | prompt2\n",

+ " | model\n",

+ " | StrOutputParser()\n",

+ ")\n",

+ "\n",

+ "chain2.invoke({\"person\": \"obama\", \"language\": \"spanish\"}, config={\"callbacks\":[langfuse_handler]})"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "NlBSpILFXEXV"

+ },

+ "source": [

+ "#### Runnable methods\n",

+ "\n",

+ "Runnables are units of work that can be invoked, batched, streamed, transformed and composed.\n",

+ "\n",

+ "The examples below show how to use the following methods with Langfuse:\n",

+ "\n",

+ "- invoke/ainvoke: Transforms a single input into an output.\n",

+ "\n",

+ "- batch/abatch: Efficiently transforms multiple inputs into outputs.\n",

+ "\n",

+ "- stream/astream: Streams output from a single input as it’s produced."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "Y8N8pybGXEXV",

+ "outputId": "df6d23fc-ed65-4fd2-d0d6-3b9f97e3a497"

+ },

+ "outputs": [],

+ "source": [

+ "# Async Invoke\n",

+ "await chain2.ainvoke({\"person\": \"biden\", \"language\": \"german\"}, config={\"callbacks\":[langfuse_handler]})\n",

+ "\n",

+ "# Batch\n",

+ "chain2.batch([{\"person\": \"elon musk\", \"language\": \"english\"}, {\"person\": \"mark zuckerberg\", \"language\": \"english\"}], config={\"callbacks\":[langfuse_handler]})\n",

+ "\n",

+ "# Async Batch\n",

+ "await chain2.abatch([{\"person\": \"jeff bezos\", \"language\": \"english\"}, {\"person\": \"tim cook\", \"language\": \"english\"}], config={\"callbacks\":[langfuse_handler]})\n",

+ "\n",

+ "# Stream\n",

+ "for chunk in chain2.stream({\"person\": \"steve jobs\", \"language\": \"english\"}, config={\"callbacks\":[langfuse_handler]}):\n",

+ " print(\"Streaming chunk:\", chunk)\n",

+ "\n",

+ "# Async Stream\n",

+ "async for chunk in chain2.astream({\"person\": \"bill gates\", \"language\": \"english\"}, config={\"callbacks\":[langfuse_handler]}):\n",

+ " print(\"Async Streaming chunk:\", chunk)\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "5v6TUIrVGkn3"

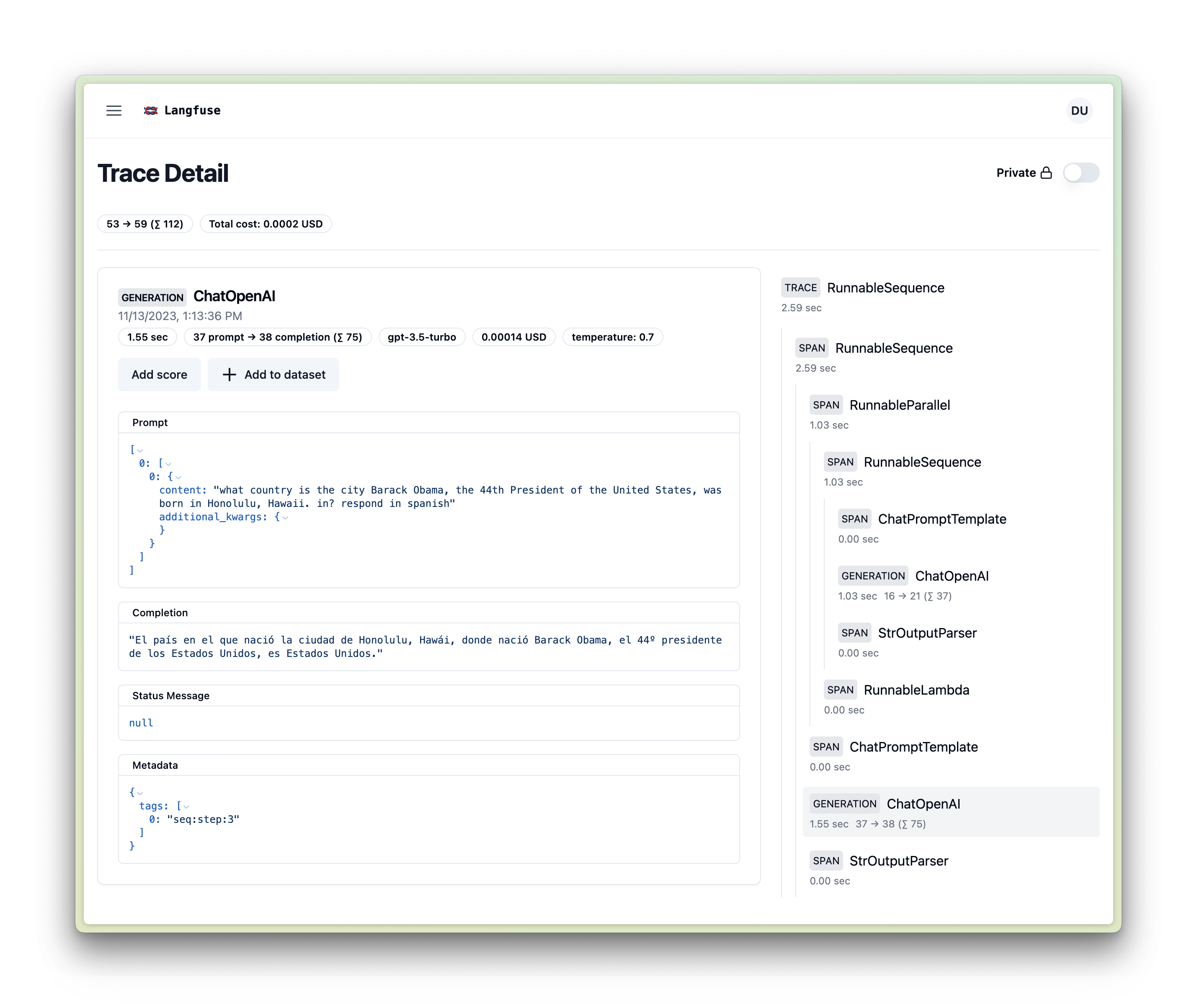

+ },

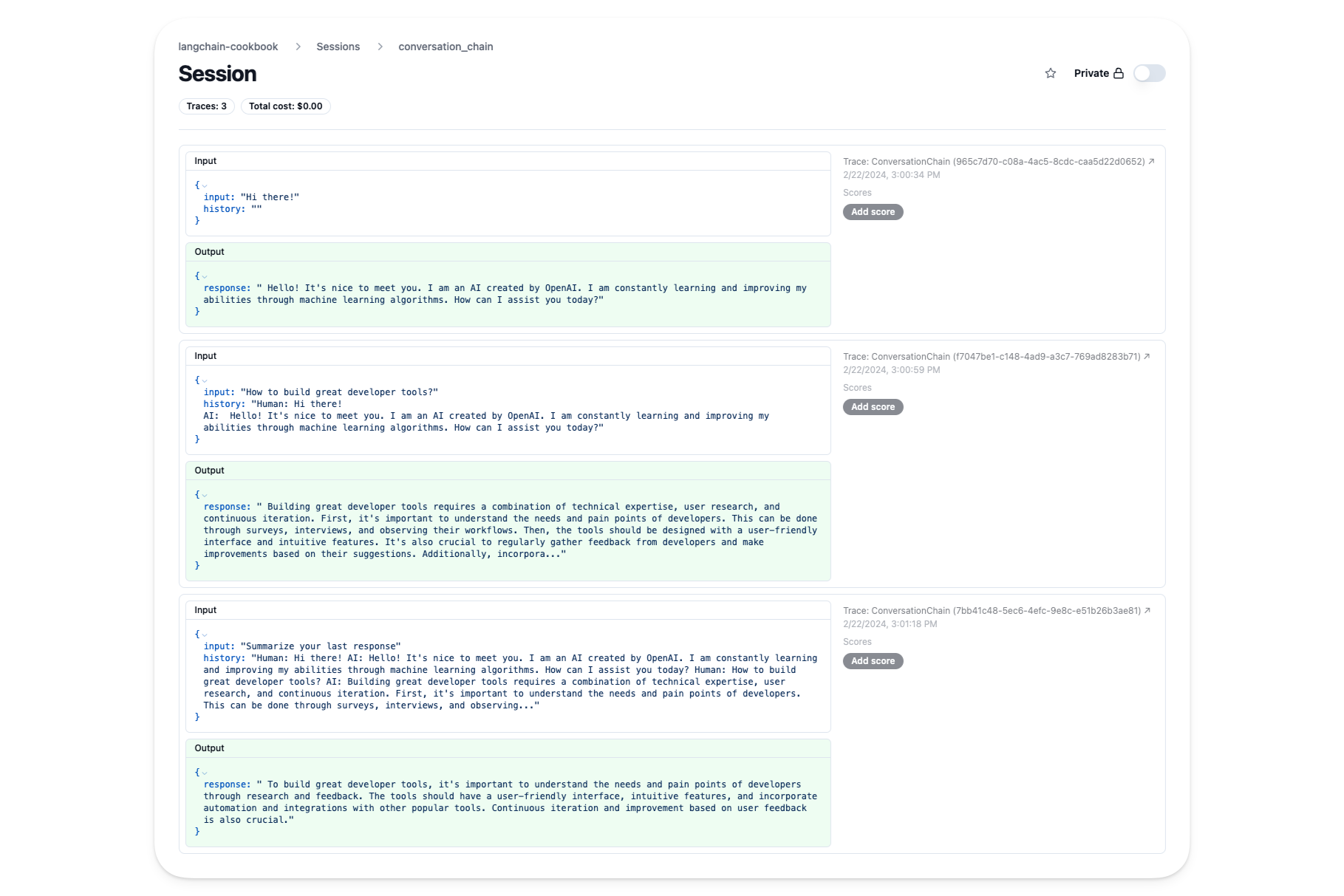

+ "source": [

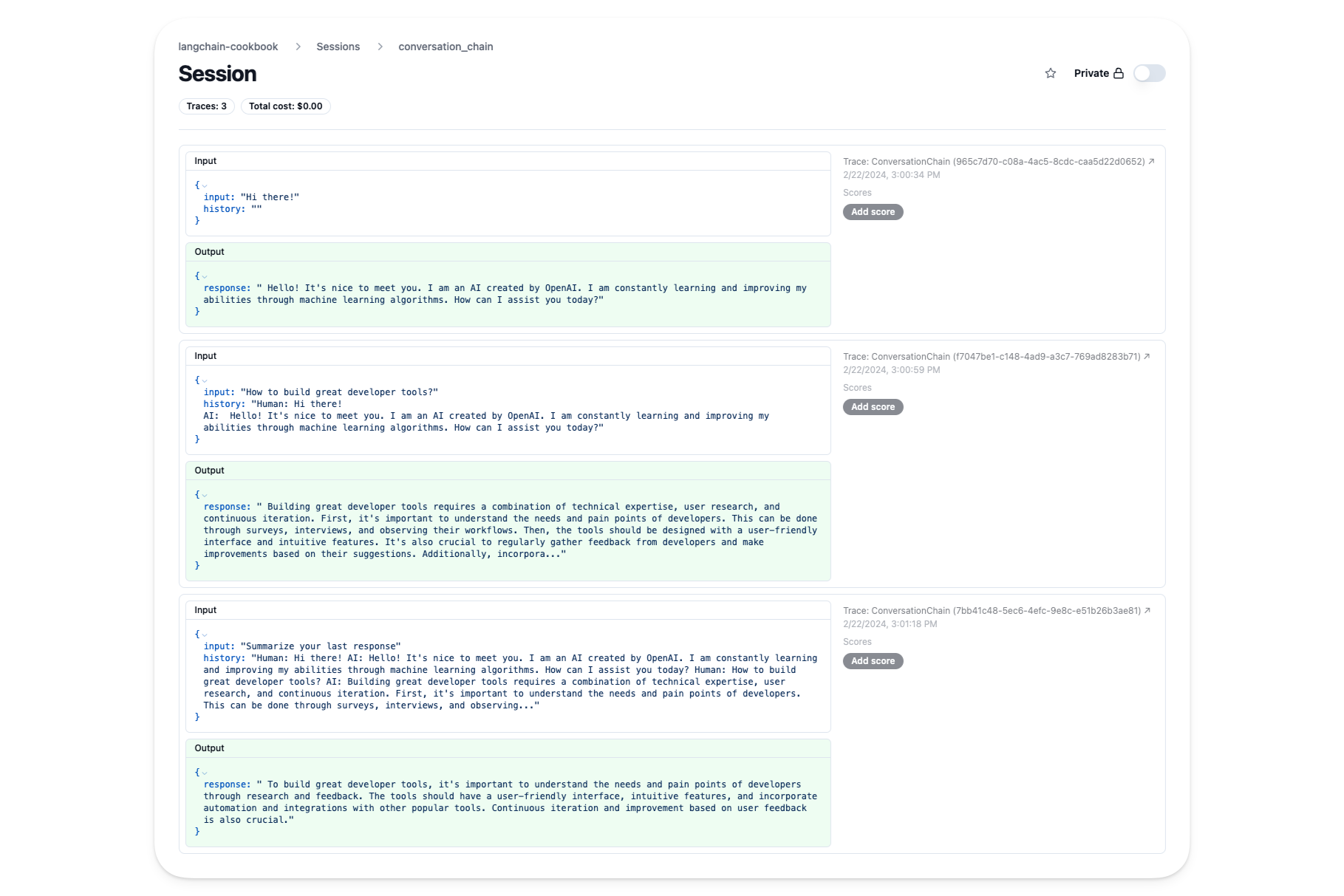

+ "### ConversationChain\n",

+ "\n",

+ "We'll use a [session](https://langfuse.com/docs/tracing-features/sessions) in Langfuse to track this conversation with each invocation being a single trace.\n",

+ "\n",

+ "In addition to the traces of each run, you also get a conversation view of the entire session:\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "8HXyXC2LGga6",

+ "outputId": "5a0a0674-4982-4758-d077-4012bb1fa0ec"

+ },

+ "outputs": [],

+ "source": [

+ "from langchain.chains import ConversationChain\n",

+ "from langchain.memory import ConversationBufferMemory\n",

+ "from langchain_openai import OpenAI\n",

+ "\n",

+ "llm = OpenAI(temperature=0)\n",

+ "\n",

+ "conversation = ConversationChain(\n",

+ " llm=llm, memory=ConversationBufferMemory()\n",

+ ")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "EWRj0qvKHLNE"

+ },

+ "outputs": [],

+ "source": [

+ "# Create a callback handler with a session\n",

+ "langfuse_handler = CallbackHandler(session_id=\"conversation_chain\")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 71

+ },

+ "id": "aIHmNekVHItt",

+ "outputId": "4a7193f0-94f8-4f86-fac9-5ef94ef4b5f8"

+ },

+ "outputs": [],

+ "source": [

+ "conversation.predict(input=\"Hi there!\", callbacks=[langfuse_handler])"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 160

+ },

+ "id": "tsAunGSwHkrt",

+ "outputId": "6bfa7080-aa89-4173-9440-1bdd06a0aa4f"

+ },

+ "outputs": [],

+ "source": [

+ "conversation.predict(input=\"How to build great developer tools?\", callbacks=[langfuse_handler])"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 107

+ },

+ "id": "m8O6hShcHsGe",

+ "outputId": "b0b216ce-e6b8-4c44-8083-41a56ba0408b"

+ },

+ "outputs": [],

+ "source": [

+ "conversation.predict(input=\"Summarize your last response\", callbacks=[langfuse_handler])"

+ ]

+ },

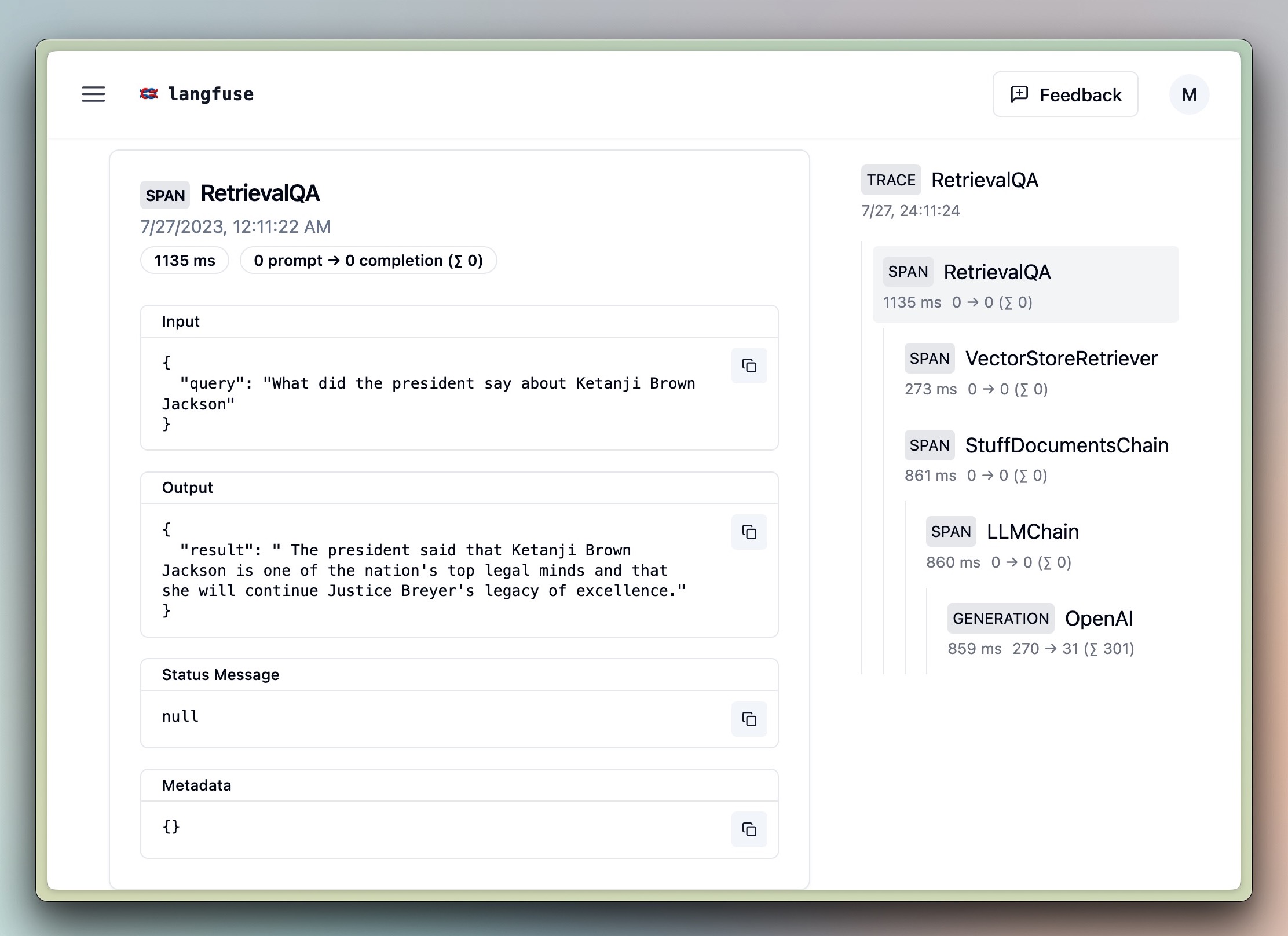

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "FP5avhNb3TBH"

+ },

+ "source": [

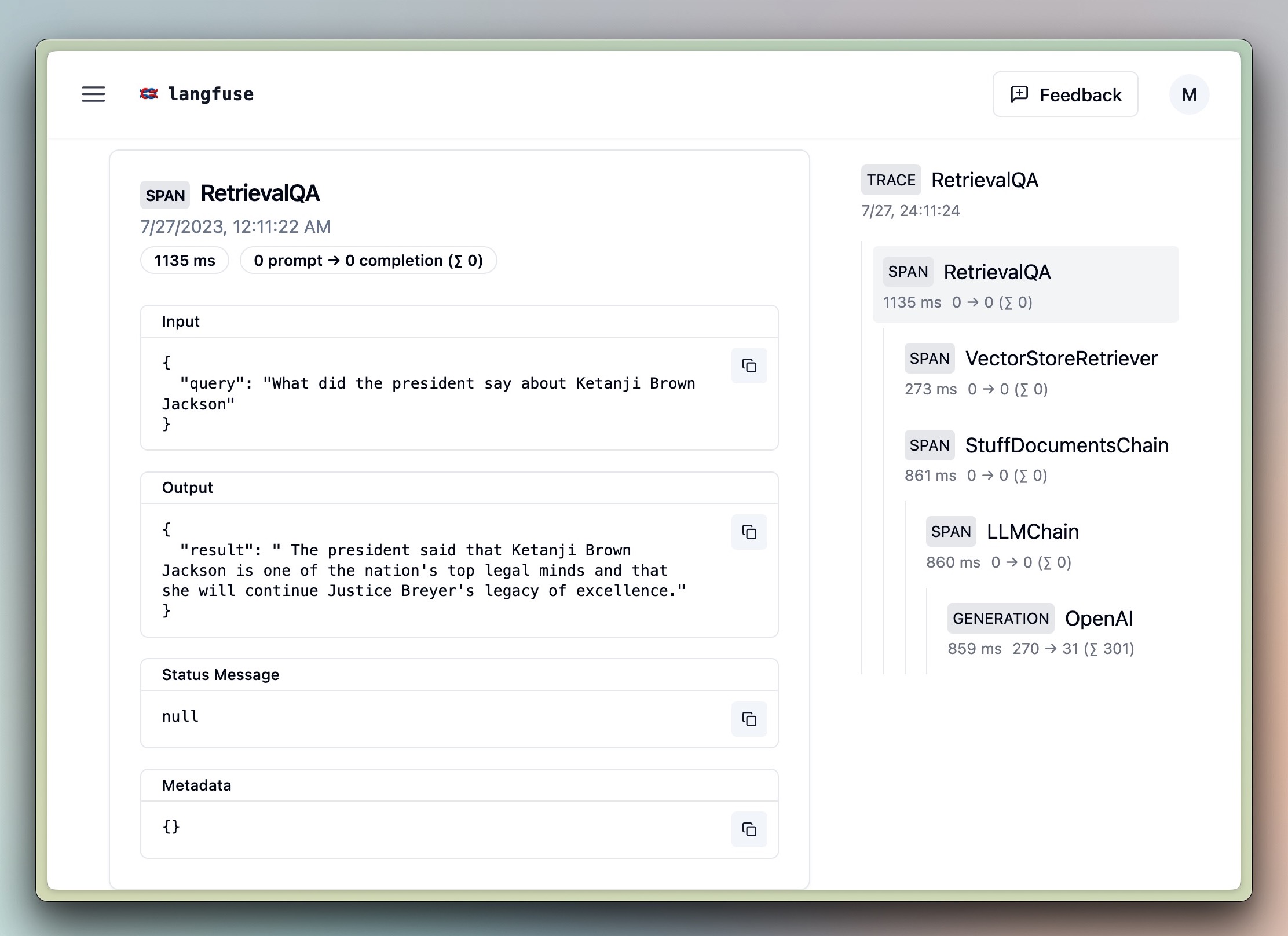

+ "### RetrievalQA\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "wjiWEkRUFzCf"

+ },

+ "outputs": [],

+ "source": [

+ "import os\n",

+ "os.environ[\"SERPAPI_API_KEY\"] = \"\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "p0CgEPSlEpkC",

+ "outputId": "36c800af-025d-407e-eca1-c215bba62cd2"

+ },

+ "outputs": [],

+ "source": [

+ "%pip install unstructured selenium langchain-chroma --upgrade"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 438

+ },

+ "id": "kHDVa-Ssb-KT",

+ "outputId": "efab8170-76b9-412e-c086-365a16f065a9"

+ },

+ "outputs": [],

+ "source": [

+ "from langchain_community.document_loaders import SeleniumURLLoader\n",

+ "from langchain_chroma import Chroma\n",

+ "from langchain_text_splitters import CharacterTextSplitter\n",

+ "from langchain_openai import OpenAIEmbeddings\n",

+ "from langchain.chains import RetrievalQA\n",

+ "\n",

+ "langfuse_handler = CallbackHandler()\n",

+ "\n",

+ "urls = [\n",

+ " \"https://raw.githubusercontent.com/langfuse/langfuse-docs/main/public/state_of_the_union.txt\",\n",

+ "]\n",

+ "loader = SeleniumURLLoader(urls=urls)\n",

+ "llm = OpenAI()\n",

+ "documents = loader.load()\n",

+ "text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)\n",

+ "texts = text_splitter.split_documents(documents)\n",

+ "embeddings = OpenAIEmbeddings()\n",

+ "docsearch = Chroma.from_documents(texts, embeddings)\n",

+ "query = \"What did the president say about Ketanji Brown Jackson\"\n",

+ "chain = RetrievalQA.from_chain_type(\n",

+ " llm,\n",

+ " retriever=docsearch.as_retriever(search_kwargs={\"k\": 1}),\n",

+ ")\n",

+ "\n",

+ "chain.invoke(query, config={\"callbacks\":[langfuse_handler]})"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "JCmI0I20-sbI"

+ },

+ "source": [

+ "### Agent"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "5zNewss5YsbF",

+ "outputId": "76f875d3-db43-4466-997b-9c7a2ecd77dc"

+ },

+ "outputs": [],

+ "source": [

+ "%pip install google-search-results"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/"

+ },

+ "id": "ReaHdQOT-S3n",

+ "outputId": "177a9a49-539e-4d36-a54c-94d8eb2de067"

+ },

+ "outputs": [],

+ "source": [

+ "from langchain.agents import AgentExecutor, load_tools, create_openai_functions_agent\n",

+ "from langchain_openai import ChatOpenAI\n",

+ "from langchain import hub\n",

+ "\n",

+ "langfuse_handler = CallbackHandler()\n",

+ "\n",

+ "llm = ChatOpenAI(model=\"gpt-3.5-turbo\", temperature=0)\n",

+ "tools = load_tools([\"serpapi\"])\n",

+ "prompt = hub.pull(\"hwchase17/openai-functions-agent\")\n",

+ "agent = create_openai_functions_agent(llm, tools, prompt)\n",

+ "agent_executor = AgentExecutor(agent=agent, tools=tools)\n",

+ "\n",

+ "agent_executor.invoke({\"input\": \"What is Langfuse?\"}, config={\"callbacks\":[langfuse_handler]})"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "OIxwkX9p1ZR7"

+ },

+ "source": [

+ "### AzureOpenAI"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "b43rIMig1ZR7"

+ },

+ "outputs": [],

+ "source": [

+ "os.environ[\"AZURE_OPENAI_ENDPOINT\"] = \"\"\n",

+ "os.environ[\"AZURE_OPENAI_API_KEY\"] = \"\"\n",

+ "os.environ[\"OPENAI_API_TYPE\"] = \"azure\"\n",

+ "os.environ[\"OPENAI_API_VERSION\"] = \"2023-09-01-preview\""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "_lLdPwnr1ZR7"

+ },

+ "outputs": [],

+ "source": [

+ "from langchain_openai import AzureChatOpenAI\n",

+ "from langchain.prompts import ChatPromptTemplate\n",

+ "\n",

+ "langfuse_handler = CallbackHandler()\n",

+ "\n",

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "model = AzureChatOpenAI(\n",

+ " deployment_name=\"gpt-35-turbo\",\n",

+ " model_name=\"gpt-3.5-turbo\",\n",

+ ")\n",

+ "chain = prompt | model\n",

+ "\n",

+ "chain.invoke({\"person\": \"Satya Nadella\"}, config={\"callbacks\":[langfuse_handler]})"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "ZUenj0aca9qo"

+ },

+ "source": [

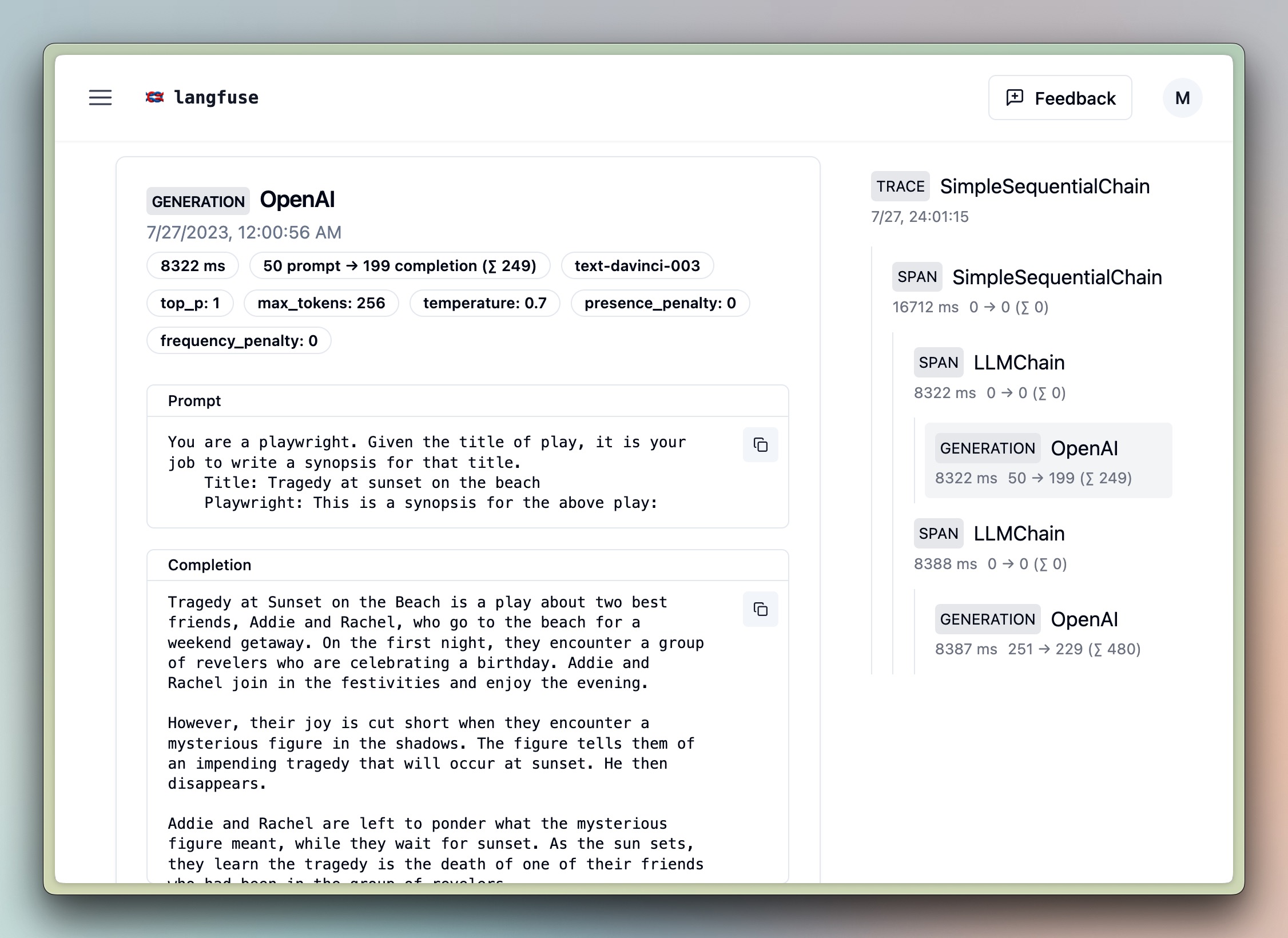

+ "### Sequential Chain [Legacy]\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "dTagwV_cbFVr"

+ },

+ "outputs": [],

+ "source": [

+ "# further imports\n",

+ "from langchain_openai import OpenAI\n",

+ "from langchain.chains import LLMChain, SimpleSequentialChain\n",

+ "from langchain.prompts import PromptTemplate\n",

+ "\n",

+ "llm = OpenAI()\n",

+ "template = \"\"\"You are a playwright. Given the title of play, it is your job to write a synopsis for that title.\n",

+ " Title: {title}\n",

+ " Playwright: This is a synopsis for the above play:\"\"\"\n",

+ "prompt_template = PromptTemplate(input_variables=[\"title\"], template=template)\n",

+ "synopsis_chain = LLMChain(llm=llm, prompt=prompt_template)\n",

+ "template = \"\"\"You are a play critic from the New York Times. Given the synopsis of play, it is your job to write a review for that play.\n",

+ " Play Synopsis:\n",

+ " {synopsis}\n",

+ " Review from a New York Times play critic of the above play:\"\"\"\n",

+ "prompt_template = PromptTemplate(input_variables=[\"synopsis\"], template=template)\n",

+ "review_chain = LLMChain(llm=llm, prompt=prompt_template)\n",

+ "overall_chain = SimpleSequentialChain(\n",

+ " chains=[synopsis_chain, review_chain],\n",

+ ")\n",

+ "\n",

+ "# invoke\n",

+ "review = overall_chain.invoke(\"Tragedy at sunset on the beach\", {\"callbacks\":[langfuse_handler]}) # add the handler to the run method\n",

+ "# run [LEGACY]\n",

+ "review = overall_chain.run(\"Tragedy at sunset on the beach\", callbacks=[langfuse_handler])# add the handler to the run method"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "AmntBjLSc_pZ"

+ },

+ "source": [

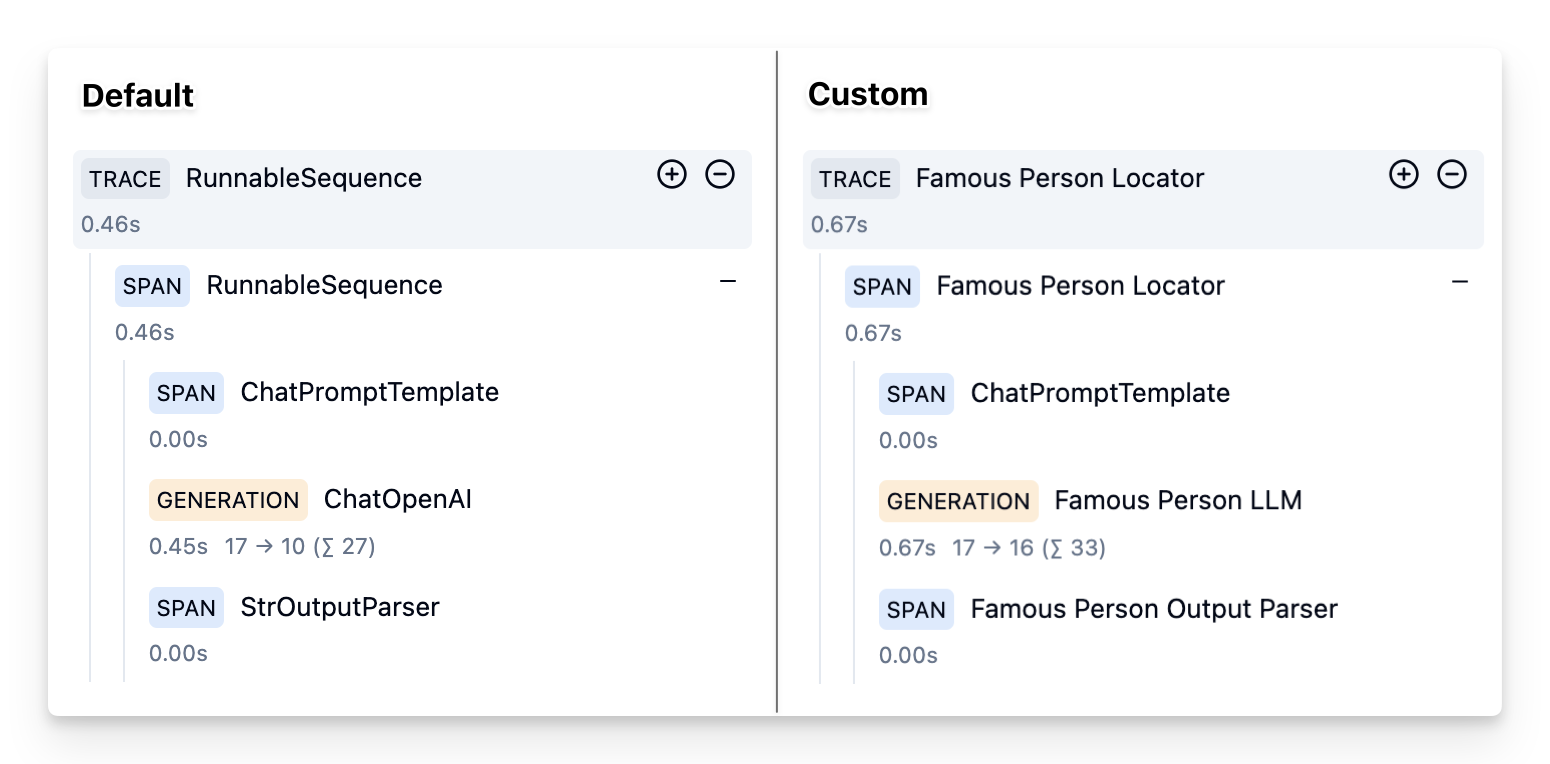

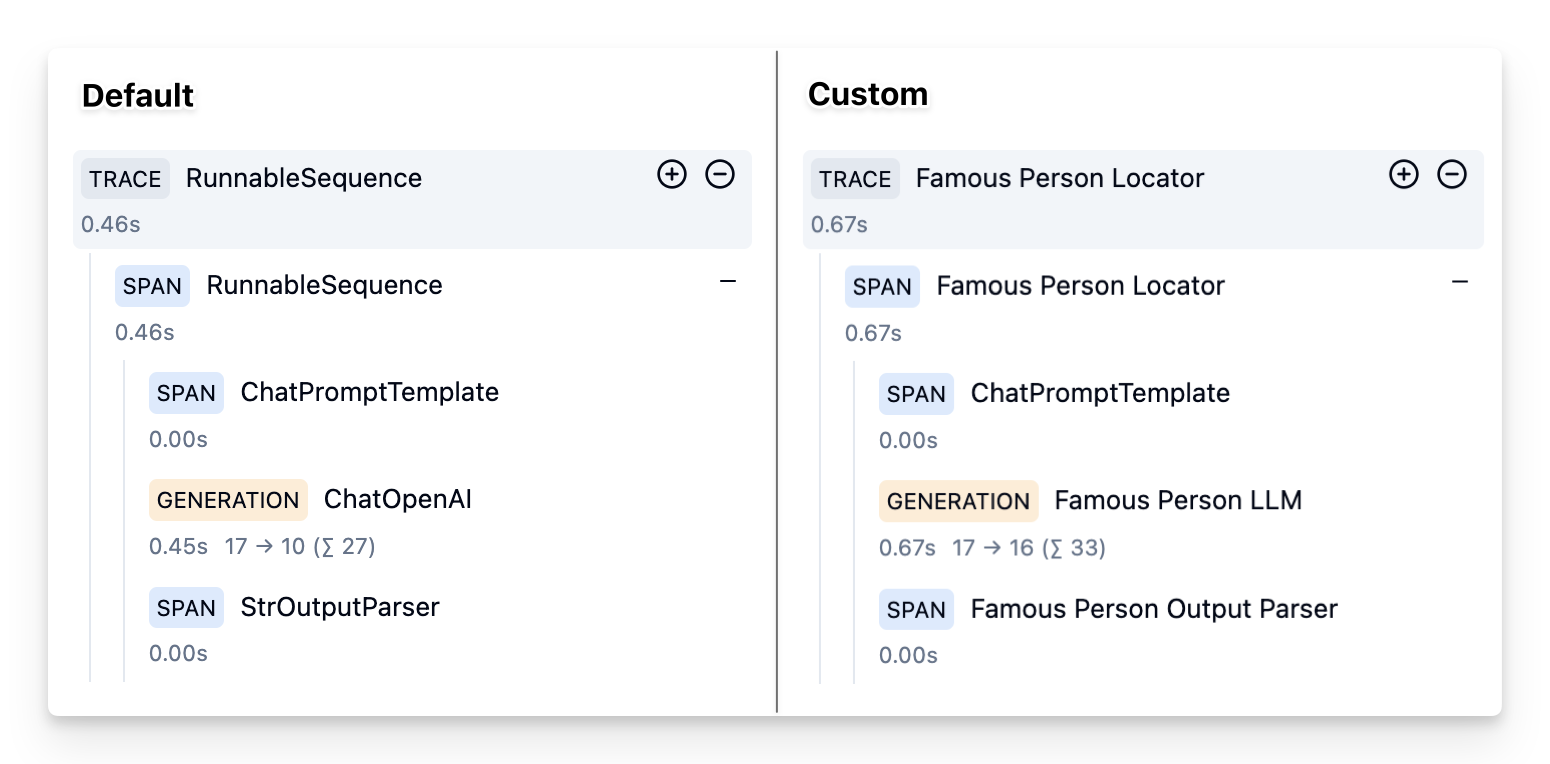

+ "## Customize trace names via run_name\n",

+ "\n",

+ "By default, Langfuse uses the Langchain run_name as trace/observation names. For more complex/custom chains, it can be useful to customize the names via own run_names.\n",

+ "\n",

+ "\n",

+ "\n",

+ "**Example without custom run names**"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 35

+ },

+ "id": "JQWBXsbudMdW",

+ "outputId": "e3249b07-0094-401c-c371-6a5159d6ebf0"

+ },

+ "outputs": [],

+ "source": [

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "model = ChatOpenAI()\n",

+ "chain = prompt1 | model | StrOutputParser()\n",

+ "chain.invoke({\"person\": \"Grace Hopper\"}, config={\n",

+ " \"callbacks\":[langfuse_handler]\n",

+ " })"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "BCwmF2MFdq2P"

+ },

+ "source": [

+ "### Via Runnable Config"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 35

+ },

+ "id": "rWiKr2i7dVrI",

+ "outputId": "3bdc4e3f-bb16-44f7-a479-6855002fdafc"

+ },

+ "outputs": [],

+ "source": [

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\").with_config(run_name=\"Famous Person Prompt\")\n",

+ "model = ChatOpenAI().with_config(run_name=\"Famous Person LLM\")\n",

+ "output_parser = StrOutputParser().with_config(run_name=\"Famous Person Output Parser\")\n",

+ "chain = (prompt1 | model | output_parser).with_config(run_name=\"Famous Person Locator\")\n",

+ "\n",

+ "chain.invoke({\"person\": \"Grace Hopper\"}, config={\n",

+ " \"callbacks\":[langfuse_handler]\n",

+ "})"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "TnUWivDZfElb"

+ },

+ "source": [

+ "Example trace: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/ec9fcc46-ca38-4bdb-9482-eb06a5f90944"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "oRWBK-XIe6C0"

+ },

+ "source": [

+ "### Via Run Config"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 35

+ },

+ "id": "ybc7Bno7eGvh",

+ "outputId": "02700a8e-a83c-4402-f436-ba5e5ef1288c"

+ },

+ "outputs": [],

+ "source": [

+ "\n",

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "model = ChatOpenAI()\n",

+ "chain = prompt1 | model | StrOutputParser()\n",

+ "chain.invoke({\"person\": \"Grace Hopper\"}, config={\n",

+ " \"run_name\": \"Famous Person Locator\",\n",

+ " \"callbacks\":[langfuse_handler]\n",

+ " })"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "uK_GDS0ofGOt"

+ },

+ "source": [

+ "Example trace: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/b48204e2-fd48-487b-8f66-015e3f10613d"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "GEWWS8PGo4A1"

+ },

+ "source": [

+ "## Interoperability with Langfuse Python SDK\n",

+ "\n",

+ "You can use this integration in combination with the `observe()` decorator from the Langfuse Python SDK. Thereby, you can trace non-Langchain code, combine multiple Langchain invocations in a single trace, and use the full functionality of the Langfuse Python SDK.\n",

+ "\n",

+ "The `langfuse_context.get_current_langchain_handler()` method exposes a LangChain callback handler in the context of a trace or span when using `decorators`. Learn more about Langfuse Tracing [here](https://langfuse.com/docs/tracing) and this functionality [here](https://langfuse.com/docs/sdk/python/decorators#langchain).\n"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "q1zlFuIimJfT"

+ },

+ "source": [

+ "### How it works"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "Op7qwM0Y-1bp"

+ },

+ "outputs": [],

+ "source": [

+ "from langfuse.decorators import langfuse_context, observe\n",

+ "\n",

+ "# Create a trace via Langfuse decorators and get a Langchain Callback handler for it\n",

+ "@observe() # automtically log function as a trace to Langfuse\n",

+ "def main():\n",

+ " # update trace attributes (e.g, name, session_id, user_id)\n",

+ " langfuse_context.update_current_trace(\n",

+ " name=\"custom-trace\",\n",

+ " session_id=\"user-1234\",\n",

+ " user_id=\"session-1234\",\n",

+ " )\n",

+ " # get the langchain handler for the current trace\n",

+ " langfuse_context.get_current_langchain_handler()\n",

+ "\n",

+ " # use the handler to trace langchain runs ...\n",

+ "\n",

+ "main()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "HRX2zFCOmwXH"

+ },

+ "source": [

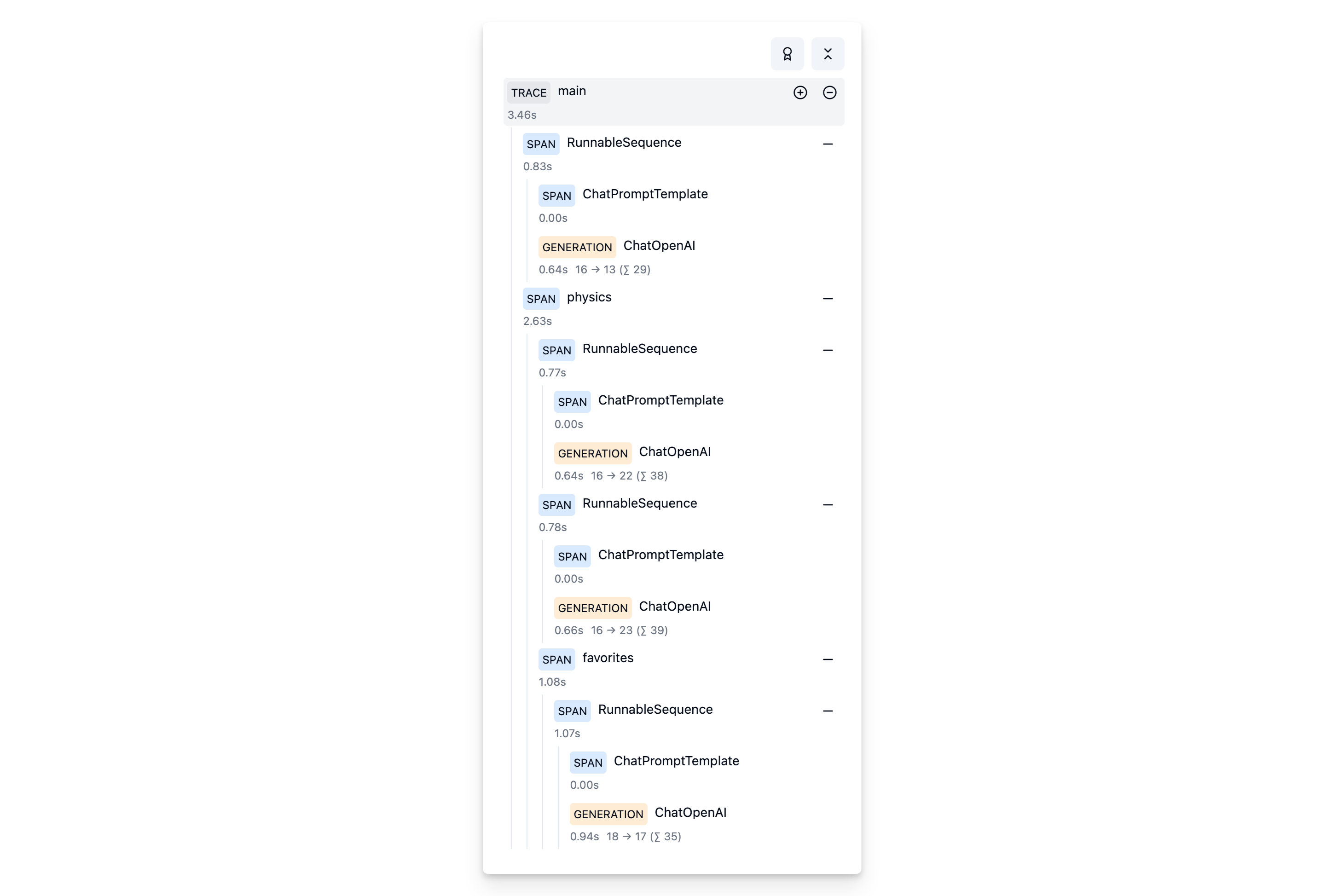

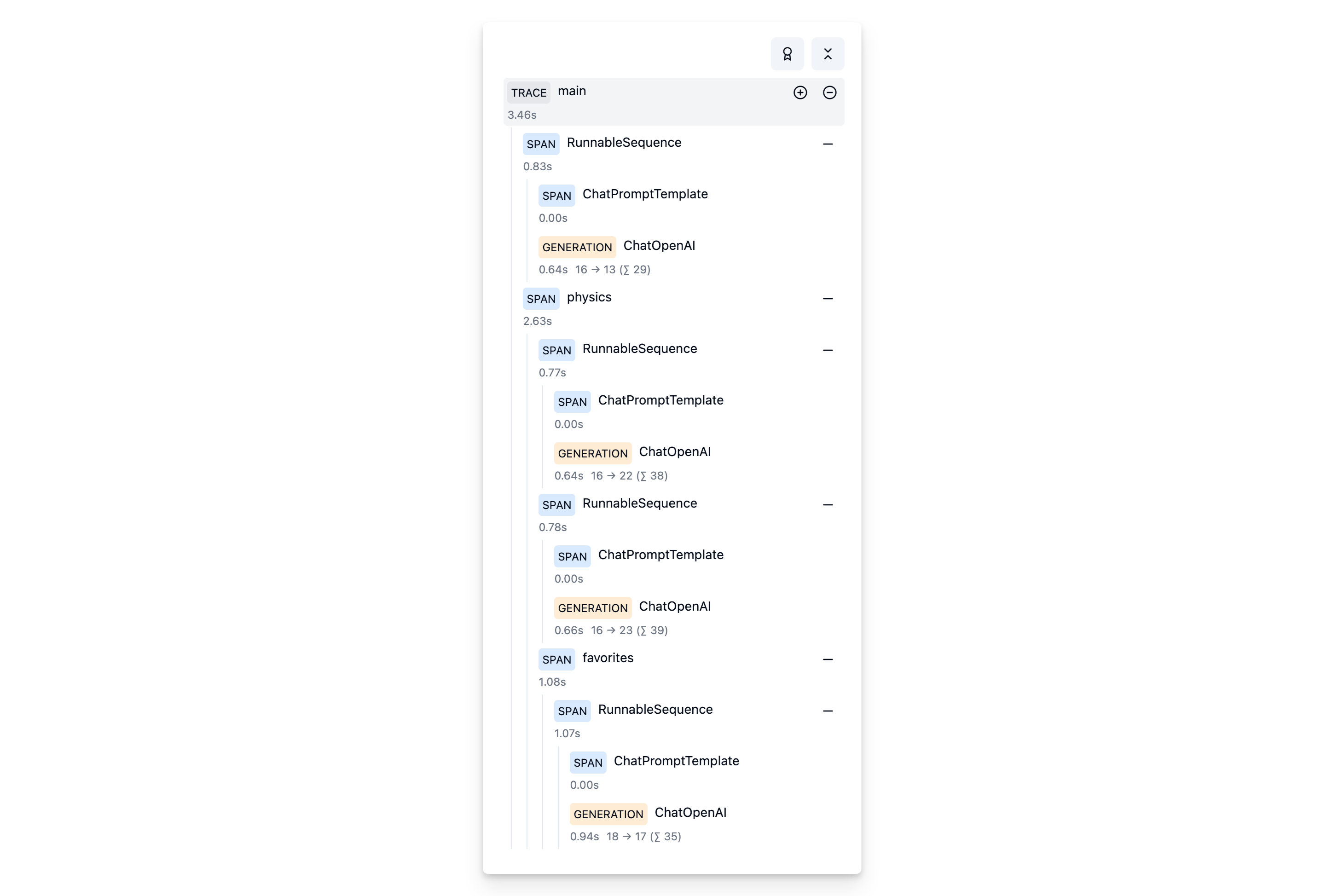

+ "### Example\n",

+ "\n",

+ "We'll run the same chain multiple times at different places within the hierarchy of a trace.\n",

+ "\n",

+ "```\n",

+ "TRACE: person-locator\n",

+ "|\n",

+ "|-- SPAN: Chain (Alan Turing)\n",

+ "|\n",

+ "|-- SPAN: Physics\n",

+ "| |\n",

+ "| |-- SPAN: Chain (Albert Einstein)\n",

+ "| |\n",

+ "| |-- SPAN: Chain (Isaac Newton)\n",

+ "| |\n",

+ "| |-- SPAN: Favorites\n",

+ "| | |\n",

+ "| | |-- SPAN: Chain (Richard Feynman)\n",

+ "```\n",

+ "\n",

+ "Setup chain"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "ASq5sHErkmLB"

+ },

+ "outputs": [],

+ "source": [

+ "from langchain_openai import ChatOpenAI\n",

+ "from langchain.prompts import ChatPromptTemplate\n",

+ "\n",

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "model = ChatOpenAI()\n",

+ "\n",

+ "chain = prompt | model"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "fvJ1pv4MqzTi"

+ },

+ "source": [

+ "Invoke it multiple times as part of a nested trace."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "CnHq-7QD3uAa"

+ },

+ "outputs": [],

+ "source": [

+ "from langfuse.decorators import langfuse_context, observe\n",

+ "\n",

+ "# On span \"Physics\".\"Favorites\"\n",

+ "@observe() # decorator to automatically log function as sub-span to Langfuse\n",

+ "def favorites():\n",

+ " # get the langchain handler for the current sub-span\n",

+ " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

+ " # invoke chain with langfuse handler\n",

+ " chain.invoke({\"person\": \"Richard Feynman\"},\n",

+ " config={\"callbacks\": [langfuse_handler]})\n",

+ "\n",

+ "# On span \"Physics\"\n",

+ "@observe() # decorator to automatically log function as span to Langfuse\n",

+ "def physics():\n",

+ " # get the langchain handler for the current span\n",

+ " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

+ " # invoke chains with langfuse handler\n",

+ " chain.invoke({\"person\": \"Albert Einstein\"},\n",

+ " config={\"callbacks\": [langfuse_handler]})\n",

+ " chain.invoke({\"person\": \"Isaac Newton\"},\n",

+ " config={\"callbacks\": [langfuse_handler]})\n",

+ " favorites()\n",

+ "\n",

+ "# On trace\n",

+ "@observe() # decorator to automatically log function as trace to Langfuse\n",

+ "def main():\n",

+ " # get the langchain handler for the current trace\n",

+ " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

+ " # invoke chain with langfuse handler\n",

+ " chain.invoke({\"person\": \"Alan Turing\"},\n",

+ " config={\"callbacks\": [langfuse_handler]})\n",

+ " physics()\n",

+ "\n",

+ "main()"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "MZ3q8iMDGOfd"

+ },

+ "source": [

+ "View it in Langfuse\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "AxQlUOmVPEwz"

+ },

+ "source": [

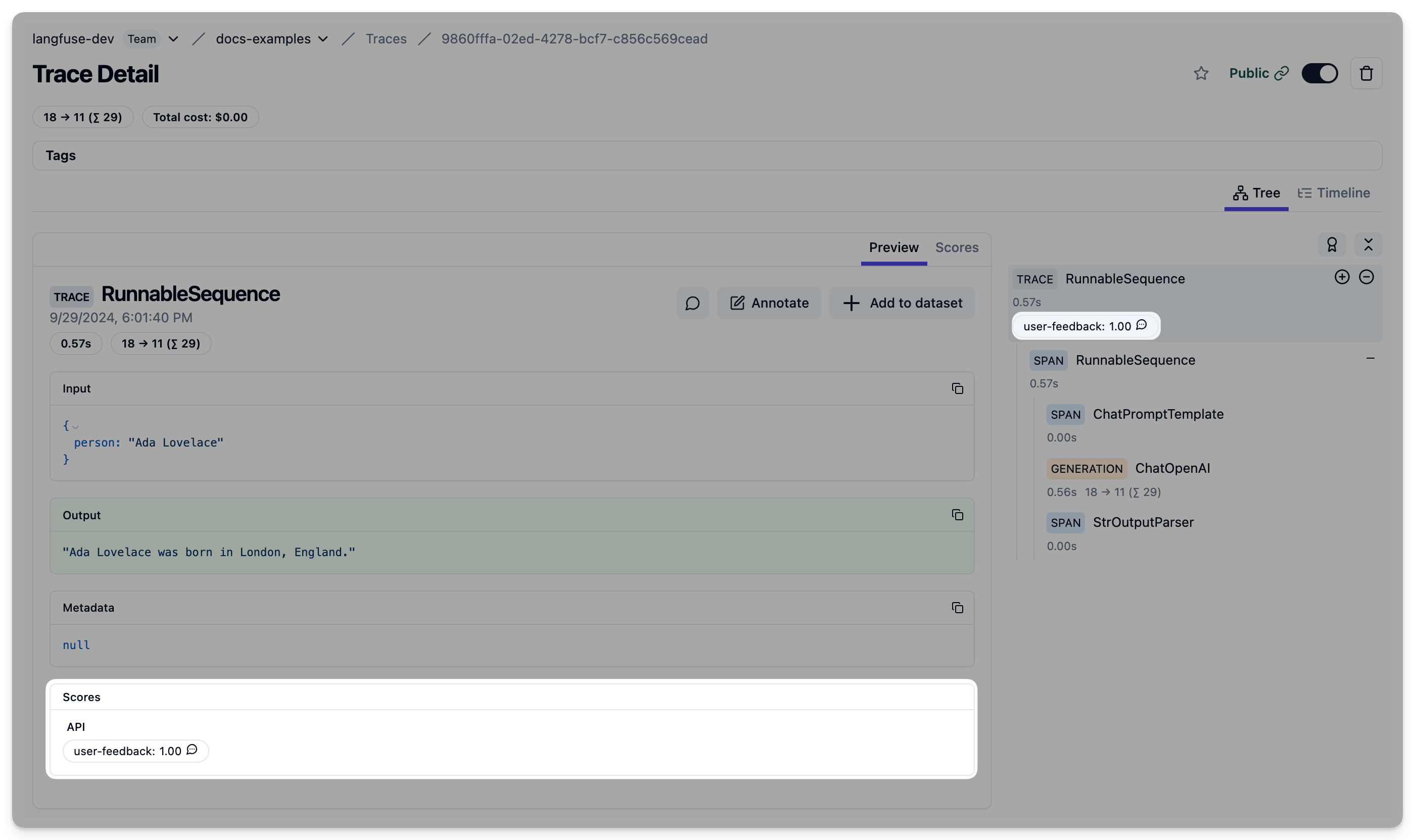

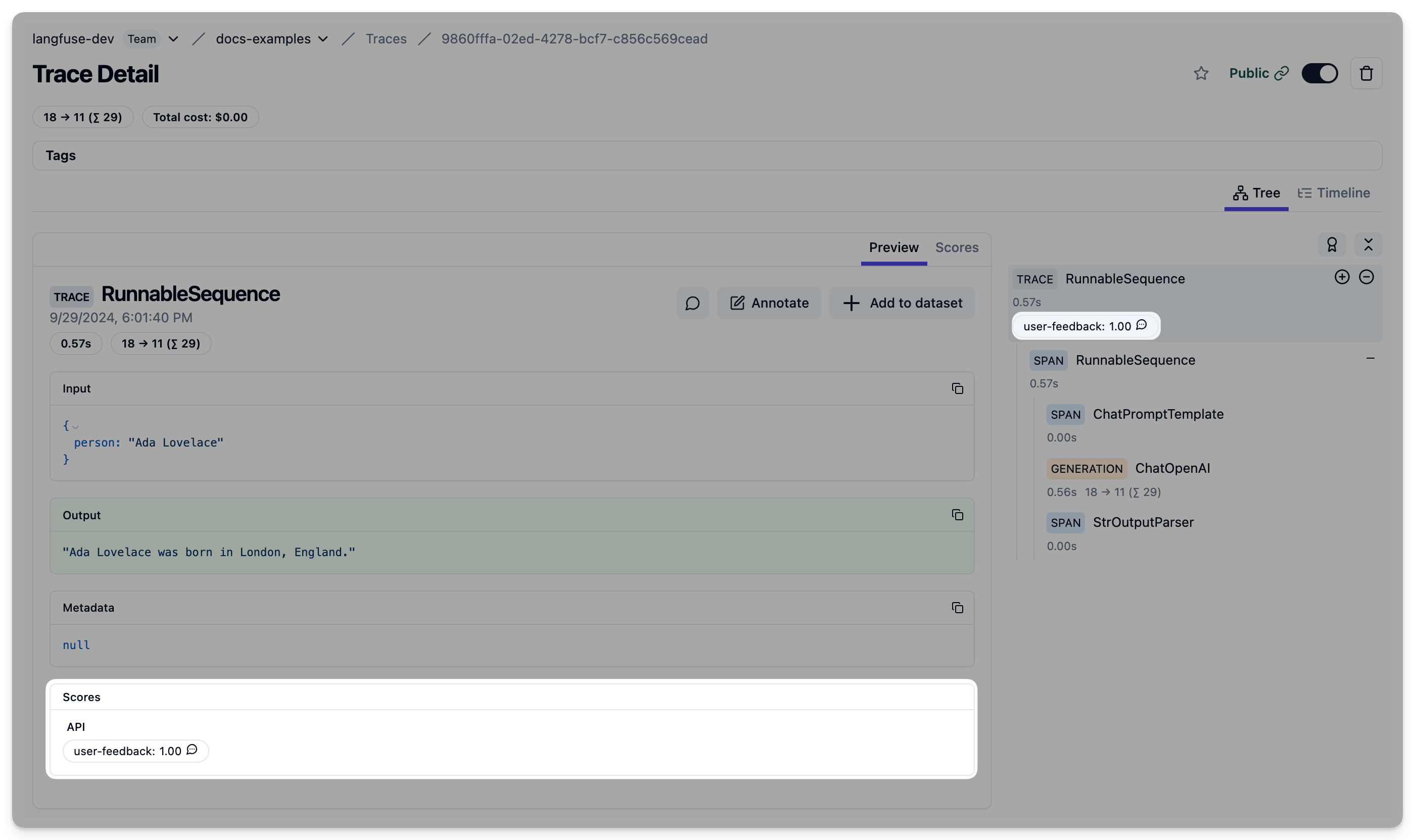

+ "## Adding evaluation/feedback scores to traces\n",

+ "\n",

+ "Evaluation results and user feedback are recorded as [scores](https://langfuse.com/docs/scores) in Langfuse.\n",

+ "\n",

+ "To add a score to a trace, you need to know the trace_id. There are two options to achieve this when using LangChain:\n",

+ "\n",

+ "1. Provide a predefined LangChain run_id\n",

+ "2. Use the Langfuse Decorator to get the trace_id\n",

+ "\n",

+ ""

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "O-g2NmhDZW0C"

+ },

+ "source": [

+ "### Predefined LangChain `run_id`\n",

+ "\n",

+ "Langfuse uses the LangChain run_id as a trace_id. Thus you can provide a custom run_id to the runnable config in order to later add scores to the trace."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "colab": {

+ "base_uri": "https://localhost:8080/",

+ "height": 35

+ },

+ "id": "qy0YKqnuZs4t",

+ "outputId": "19173bf1-422b-4bb2-c2cf-28578b3b06df"

+ },

+ "outputs": [],

+ "source": [

+ "from operator import itemgetter\n",

+ "from langchain_openai import ChatOpenAI\n",

+ "from langchain.prompts import ChatPromptTemplate\n",

+ "from langchain.schema import StrOutputParser\n",

+ "import uuid\n",

+ "\n",

+ "predefined_run_id = str(uuid.uuid4())\n",

+ "\n",

+ "langfuse_handler = CallbackHandler()\n",

+ "\n",

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "model = ChatOpenAI()\n",

+ "chain = prompt1 | model | StrOutputParser()\n",

+ "\n",

+ "chain.invoke({\"person\": \"Ada Lovelace\"}, config={\n",

+ " \"run_id\": predefined_run_id,\n",

+ " \"callbacks\":[langfuse_handler]\n",

+ "})"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "69_1fkiBaX6v"

+ },

+ "outputs": [],

+ "source": [

+ "from langfuse import Langfuse\n",

+ "\n",

+ "langfuse = Langfuse()\n",

+ "\n",

+ "langfuse.score(\n",

+ " trace_id=predefined_run_id,\n",

+ " name=\"user-feedback\",\n",

+ " value=1,\n",

+ " comment=\"This was correct, thank you\"\n",

+ ");"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "TyUVWU_abAyX"

+ },

+ "source": [

+ "Example Trace in Langfuse: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/9860fffa-02ed-4278-bcf7-c856c569cead"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "fcnVMNogZlEr"

+ },

+ "source": [

+ "### Via Langfuse Decorator\n",

+ "\n",

+ "Alternatively, you can use the LangChain integration together with the [Langfuse @observe-decorator](https://langfuse.com/docs/sdk/python/decorators) for Python."

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "PudCopwEPFgh"

+ },

+ "outputs": [],

+ "source": [

+ "from langfuse.decorators import langfuse_context, observe\n",

+ "from operator import itemgetter\n",

+ "from langchain_openai import ChatOpenAI\n",

+ "from langchain.prompts import ChatPromptTemplate\n",

+ "from langchain.schema import StrOutputParser\n",

+ "import uuid\n",

+ "\n",

+ "prompt = ChatPromptTemplate.from_template(\"what is the city {person} is from?\")\n",

+ "model = ChatOpenAI()\n",

+ "chain = prompt1 | model | StrOutputParser()\n",

+ "\n",

+ "@observe()\n",

+ "def main(person):\n",

+ "\n",

+ " langfuse_handler = langfuse_context.get_current_langchain_handler()\n",

+ "\n",

+ " response = chain.invoke({\"person\": person}, config={\n",

+ " \"callbacks\":[langfuse_handler]\n",

+ " })\n",

+ "\n",

+ " trace_id = langfuse_context.get_current_trace_id()\n",

+ "\n",

+ " return trace_id, response\n",

+ "\n",

+ "\n",

+ "trace_id, response = main(\"Ada Lovelace\")"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": null,

+ "metadata": {

+ "id": "JgzuVhwycjTE"

+ },

+ "outputs": [],

+ "source": [

+ "from langfuse import Langfuse\n",

+ "\n",

+ "langfuse = Langfuse()\n",

+ "\n",

+ "langfuse.score(\n",

+ " trace_id=trace_id,\n",

+ " name=\"user-feedback\",\n",

+ " value=1,\n",

+ " comment=\"This was correct, thank you\"\n",

+ ");"

+ ]

+ },

+ {

+ "cell_type": "markdown",

+ "metadata": {

+ "id": "XZfTCj-9c0yP"

+ },

+ "source": [

+ "Example trace: https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/08bb7cf3-87c6-4a78-a3fc-72af8959a106"

+ ]

+ }

+ ],

+ "metadata": {

+ "colab": {

+ "provenance": []

+ },

+ "kernelspec": {

+ "display_name": "Python 3 (ipykernel)",

+ "language": "python",

+ "name": "python3"

+ },

+ "language_info": {

+ "codemirror_mode": {

+ "name": "ipython",

+ "version": 3

+ },

+ "file_extension": ".py",

+ "mimetype": "text/x-python",

+ "name": "python",

+ "nbconvert_exporter": "python",

+ "pygments_lexer": "ipython3",

+ "version": "3.12.2"

+ }

+ },

+ "nbformat": 4,

+ "nbformat_minor": 0

}

diff --git a/next-sitemap.config.js b/next-sitemap.config.js

index 6f84baac6..668c9983e 100644

--- a/next-sitemap.config.js

+++ b/next-sitemap.config.js

@@ -15,5 +15,9 @@ module.exports = {

// Exclude non-canonical pages from sitemap which are also part of the docs

...cookbookRoutes

.filter(({ docsPath }) => !!docsPath)

- .map(({ notebook }) => `/guides/cookbook/${notebook.replace(".ipynb", "")}`)],

+ .map(({ notebook }) => `/guides/cookbook/${notebook.replace(".ipynb", "")}`),

+ // Exclude _meta files

+ '*/_meta'

+ ],

+

}

\ No newline at end of file

diff --git a/package.json b/package.json

index ab8cb04ae..4e09fd931 100644

--- a/package.json

+++ b/package.json

@@ -39,10 +39,10 @@

"gpt3-tokenizer": "^1.1.5",

"langfuse": "^3.25.0",

"lucide-react": "^0.441.0",

- "next": "^14.2.12",

+ "next": "^14.2.13",

"next-sitemap": "^4.2.3",

- "nextra": "^2.13.4",

- "nextra-theme-docs": "^2.13.4",

+ "nextra": "^3.0.1",

+ "nextra-theme-docs": "^3.0.1",

"openai-edge": "^1.2.2",

"postcss": "^8.4.47",

"posthog-js": "^1.161.6",

diff --git a/pages/_app.mdx b/pages/_app.tsx

similarity index 100%

rename from pages/_app.mdx

rename to pages/_app.tsx

diff --git a/pages/_meta.json b/pages/_meta.json

deleted file mode 100644

index 6d27c6612..000000000

--- a/pages/_meta.json

+++ /dev/null

@@ -1,159 +0,0 @@

-{

- "index": {

- "type": "page",

- "title": "Langfuse",

- "display": "hidden",

- "theme": {

- "layout": "raw"

- }

- },

- "experimentation": {

- "title": "Experimentation",

- "type": "page",

- "display": "hidden"

- },

- "imprint": {

- "title": "Imprint",

- "type": "page",

- "display": "hidden"

- },

- "schedule-demo": {

- "type": "page",

- "title": "Schedule demo",

- "display": "hidden",

- "theme": {

- "layout": "raw"

- }

- },

- "docs": {

- "type": "page",

- "title": "Docs"

- },

- "guides": {

- "type": "page",

- "title": "Guides",

- "display": "hidden"

- },

- "faq": {

- "type": "page",

- "title": "FAQ",

- "display": "hidden"

- },

- "cookbook": {

- "type": "page",

- "title": "Cookbook",

- "display": "hidden"

- },

- "pricing": {

- "title": "Pricing",

- "type": "page",

- "theme": {

- "layout": "full"

- }

- },

- "changelog": {

- "type": "page",

- "title": "Changelog",

- "theme": {

- "layout": "full"

- }

- },

- "blog": {

- "title": "Blog",

- "type": "page",

- "theme": {

- "layout": "full"

- }

- },

- "demo": {

- "title": "Demo",

- "type": "menu",

- "items": {

- "try-yourself": {

- "title": "Interactive demo",

- "href": "/docs/demo"

- },

- "video": {

- "title": "Video (3 min)",

- "href": "/video"

- },

- "schedule-demo": {

- "title": "Schedule demo",

- "href": "/schedule-demo"

- }

- }

- },

- "careers": {

- "title": "Careers",

- "type": "page",

- "display": "hidden"

- },

- "support": {

- "title": "Support",

- "type": "page",

- "display": "hidden"

- },

- "why": {

- "title": "Why Langfuse",

- "type": "page",

- "display": "hidden",

- "theme": {

- "typesetting": "article",

- "timestamp": false

- }

- },

- "enterprise": {

- "title": "Enterprise",

- "type": "page",

- "display": "hidden",

- "theme": {

- "typesetting": "article",

- "timestamp": false

- }

- },

- "library": {

- "title": "Library",

- "type": "page",

- "display": "hidden",

- "theme": {

- "typesetting": "article",

- "timestamp": false

- }

- },

- "terms": {

- "title": "Terms and Conditions",

- "type": "page",

- "display": "hidden"

- },

- "privacy": {

- "title": "Privacy Policy",

- "type": "page",

- "display": "hidden"

- },

- "cookie-policy": {

- "title": "Cookie Policy",

- "type": "page",

- "display": "hidden"

- },

- "oss-friends": {

- "title": "OSS Friends",

- "type": "page",

- "display": "hidden"

- },

- "about": {

- "title": "About us",

- "type": "page",

- "display": "hidden",

- "theme": {

- "typesetting": "article",

- "timestamp": false

- }

- },

- "404": {

- "type": "page",

- "theme": {

- "typesetting": "article",

- "timestamp": false

- }

- }

-}

diff --git a/pages/_meta.tsx b/pages/_meta.tsx

new file mode 100644

index 000000000..d90359df0

--- /dev/null

+++ b/pages/_meta.tsx

@@ -0,0 +1,158 @@

+export default {

+ index: {

+ type: "page",

+ title: "Langfuse",

+ display: "hidden",

+ theme: {

+ layout: "raw",

+ },

+ },

+ experimentation: {

+ title: "Experimentation",

+ type: "page",

+ display: "hidden",

+ },

+ imprint: {

+ title: "Imprint",

+ type: "page",

+ display: "hidden",

+ },

+ "schedule-demo": {

+ type: "page",

+ title: "Schedule demo",

+ display: "hidden",

+ theme: {

+ layout: "raw",

+ },

+ },

+ docs: {

+ type: "page",

+ title: "Docs",

+ },

+ guides: {

+ type: "page",

+ title: "Guides",

+ // hidden from main menu via overrides.css, nextra display:hidden otherwise breaks type:page

+ },

+ faq: {

+ type: "page",

+ title: "FAQ",

+ // hidden from main menu via overrides.css, nextra display:hidden otherwise breaks type:page

+ },

+ pricing: {

+ title: "Pricing",

+ type: "page",

+ theme: {

+ layout: "full",

+ },

+ },

+ changelog: {

+ type: "page",

+ title: "Changelog",

+ theme: {

+ layout: "full",

+ },

+ },

+ blog: {

+ title: "Blog",

+ type: "page",

+ theme: {

+ layout: "full",

+ },

+ },

+ demo: {

+ title: "Demo",

+ type: "menu",

+ items: {

+ "try-yourself": {

+ title: "Interactive demo",

+ href: "/docs/demo",

+ },

+ video: {

+ title: "Video (3 min)",

+ href: "/video",

+ },

+ "schedule-demo": {

+ title: "Schedule demo",

+ href: "/schedule-demo",

+ },

+ },

+ },

+ careers: {

+ title: "Careers",

+ type: "page",

+ display: "hidden",

+ },

+ support: {

+ title: "Support",

+ type: "page",

+ display: "hidden",

+ },

+ why: {

+ title: "Why Langfuse",

+ type: "page",

+ display: "hidden",

+ theme: {

+ typesetting: "article",

+ timestamp: false,

+ },

+ },

+ enterprise: {

+ title: "Enterprise",

+ type: "page",

+ display: "hidden",

+ theme: {

+ typesetting: "article",

+ timestamp: false,

+ },

+ },

+ library: {

+ title: "Library",

+ type: "page",

+ display: "hidden",

+ theme: {

+ typesetting: "article",

+ timestamp: false,

+ },

+ },

+ terms: {

+ title: "Terms and Conditions",

+ type: "page",

+ display: "hidden",

+ },

+ privacy: {

+ title: "Privacy Policy",

+ type: "page",

+ display: "hidden",

+ },

+ "cookie-policy": {

+ title: "Cookie Policy",

+ type: "page",

+ display: "hidden",

+ },

+ "oss-friends": {

+ title: "OSS Friends",

+ type: "page",

+ display: "hidden",

+ theme: {

+ typesetting: "article",

+ timestamp: false,

+ },

+ },

+ about: {

+ title: "About us",

+ type: "page",

+ display: "hidden",

+ theme: {

+ typesetting: "article",

+ timestamp: false,

+ },

+ },

+ "404": {

+ type: "page",

+ theme: {

+ typesetting: "article",

+ timestamp: false,

+ },

+ },

+};

diff --git a/pages/blog/_meta.json b/pages/blog/_meta.json

deleted file mode 100644

index b30af8b8c..000000000

--- a/pages/blog/_meta.json

+++ /dev/null

@@ -1,12 +0,0 @@

-{

- "*": {

- "theme": {

- "toc": false,

- "sidebar": false,

- "pagination": true,

- "typesetting": "article",

- "layout": "default",

- "breadcrumb": false

- }

- }

-}

diff --git a/pages/blog/_meta.tsx b/pages/blog/_meta.tsx

new file mode 100644

index 000000000..544e65982

--- /dev/null

+++ b/pages/blog/_meta.tsx

@@ -0,0 +1,12 @@

+export default {

+ "*": {

+ theme: {

+ toc: false,

+ sidebar: false,

+ pagination: true,

+ typesetting: "article",

+ layout: "default",

+ breadcrumb: false,

+ },

+ },

+};

diff --git a/pages/blog/announcing-our-seed-round.mdx b/pages/blog/announcing-our-seed-round.mdx

index 3d3e3789c..f17b48147 100644

--- a/pages/blog/announcing-our-seed-round.mdx

+++ b/pages/blog/announcing-our-seed-round.mdx

@@ -41,7 +41,7 @@ We took these first-hand learnings and began working on solutions. As engineers,

-

+

Challenges of building LLM applications and how Langfuse helps

diff --git a/pages/blog/launch-week-1.mdx b/pages/blog/launch-week-1.mdx

index 2a8071a4d..fbb6c56d3 100644

--- a/pages/blog/launch-week-1.mdx

+++ b/pages/blog/launch-week-1.mdx

@@ -35,7 +35,7 @@ import { Tweet } from "@/components/Tweet";

### Day 0: OpenAI JS SDK Integration

-```typescript /import { observeOpenAI } from "langfuse"/ /observeOpenAI/

+```ts /import { observeOpenAI } from "langfuse"/ /observeOpenAI/

import OpenAI from "openai";

import { observeOpenAI } from "langfuse";

diff --git a/pages/blog/showcase-llm-chatbot.mdx b/pages/blog/showcase-llm-chatbot.mdx

index 109d06382..080df61dc 100644

--- a/pages/blog/showcase-llm-chatbot.mdx

+++ b/pages/blog/showcase-llm-chatbot.mdx

@@ -72,7 +72,7 @@ npm i langfuse

**Initialize client**

-```typescript

+```ts

const langfuse = new Langfuse({

secretKey: process.env.LANGFUSE_SECRET_KEY,

publicKey: process.env.NEXT_PUBLIC_LANGFUSE_PUBLIC_KEY,

@@ -81,7 +81,7 @@ const langfuse = new Langfuse({

**Grouping conversation as trace in Langfuse**

-```typescript

+```ts

const trace = langfuse.trace({

name: "chat",

id: `chat:${chatId}`,

@@ -100,7 +100,7 @@ const trace = langfuse.trace({

Before starting the LLM call, we create a generation object in Langfuse. This sets the start_time used for latency analysis in Langfuse, configures the generation object (e.g. which tokenizer to use to estimate token amounts), and provides us with the `generation_id` which we need to use in the frontend to log user feedback.

-```typescript

+```ts

const lfGeneration = trace.generation({

name: "chat",

input: openAiMessages,

@@ -115,7 +115,7 @@ const lfGeneration = trace.generation({

Thanks to the Vercel AI SDK, we can use the `onStart` and `onCompletion` callbacks to update/end the generation object in Langfuse.

-```typescript

+```ts

// once streaming started

async onStart() {

lfGeneration.update({

@@ -135,7 +135,7 @@ async onCompletion(completion) {

The simplest way to provide the `generation_id` to the frontend when using streaming responses is to add it as a custom header. This id is required to log user feedback in the frontend and relate it to the individual message.

-```typescript

+```ts

return new StreamingTextResponse(stream, {

headers: {

"X-Message-Id": lfGeneration.id,

@@ -147,7 +147,7 @@ return new StreamingTextResponse(stream, {

The ai-chatbot uses Vercel-KV to store the chat history. We can add debug events to the generation object to track the usage of the KV store.

-```typescript

+```ts

lfGeneration.event({

name: "kv-hmset",

level: "DEBUG",

@@ -175,7 +175,7 @@ Feedback modal:

We use the Langfuse Typescript SDK directly in the frontend to log the user feedback to Langfuse.

The SDK requires the `publicKey` which can be safely exposed as it can only be used to log user feedback.

-```typescript

+```ts

const langfuse = new LangfuseWeb({

publicKey: process.env.NEXT_PUBLIC_LANGFUSE_PUBLIC_KEY ?? "",

});

@@ -188,7 +188,7 @@ We created an event handler for the feedback form. The feedback is then logged a

1. `traceId`, which is the unique identifier of the conversation thread. As in the backend, we use the `chatId` which is the same for all messages in a conversation and already available in the frontend.

2. `observationId` which is the unique identifier of the observation within the trace that we want to relate the feedback to. In this case we made the langfuse `generation.id` (from the backend) in the backend available as the `message.id` (in the frontend). _For details on how we captured the custom streaming response header which included the id, see [components/chat.tsx](https://github.com/langfuse/ai-chatbot/blob/main/components/chat.tsx)._

-```typescript

+```ts

await langfuse.score({

traceId: `chat:${chatId}`,

observationId: message.id,

diff --git a/pages/blog/update-2023-08.mdx b/pages/blog/update-2023-08.mdx

index 27d46ae88..70d6aeaea 100644

--- a/pages/blog/update-2023-08.mdx

+++ b/pages/blog/update-2023-08.mdx

@@ -47,7 +47,7 @@ The details 👇

Last month we released the Python Integration for Langchain and now shipped the same for teams building with JS/TS. We released a new package [langfuse-langchain](https://www.npmjs.com/package/langfuse-langchain) which exposes a `CallbackHandler` that automatically traces your complex Langchain chains and agents. Simply pass it as a callback.

-```typescript /{ callbacks: [handler] }/

+```ts /{ callbacks: [handler] }/

// Initialize Langfuse handler

import CallbackHandler from "langfuse-langchain";

@@ -114,7 +114,7 @@ Releases are available for all SDKs. They can be added in three ways (in order o

langfuse = Langfuse(ENV_PUBLIC_KEY, ENV_SECRET_KEY, ENV_HOST, release='ba7816b')

```

- ```typescript

+ ```ts

// TypeScript

langfuse = new Langfuse({

publicKey: ENV_PUBLIC_KEY,

diff --git a/pages/blog/update-2023-09.mdx b/pages/blog/update-2023-09.mdx

index c60b2eb8b..a364ffafd 100644

--- a/pages/blog/update-2023-09.mdx

+++ b/pages/blog/update-2023-09.mdx

@@ -195,9 +195,11 @@ Share traces with anyone via public links. The other person doesn't need a Langf

_Example: https://cloud.langfuse.com/project/clkpwwm0m000gmm094odg11gi/traces/2d6b96f2-0a4d-4366-99a5-1ad558c66e99_

-

-

-

+

## 💾 Export generations (for fine-tuning) [#export-generations]

diff --git a/pages/blog/update-2023-10.mdx b/pages/blog/update-2023-10.mdx

index 9cae75143..2ade417ba 100644

--- a/pages/blog/update-2023-10.mdx

+++ b/pages/blog/update-2023-10.mdx

@@ -140,12 +140,12 @@ handler.get_trace_url()

-```typescript

+```ts

// trace object

trace.getTraceUrl()

```

-```typescript

+```ts

// Langchain callback handler

handler.getTraceUrl();

```

diff --git a/pages/changelog/2024-01-16-trace-tagging.mdx b/pages/changelog/2024-01-16-trace-tagging.mdx

index 3180e1bb0..3c97a0cbd 100644

--- a/pages/changelog/2024-01-16-trace-tagging.mdx

+++ b/pages/changelog/2024-01-16-trace-tagging.mdx

@@ -29,7 +29,7 @@ trace = langfuse.trace(

**Typescript**

-```typescript

+```ts

const trace = langfuse.trace({

name: "docs-retrieval",

tags: ["my-first-tag", "even-better-tag"],

diff --git a/pages/changelog/2024-02-05-sdk-level-prompt-caching.mdx b/pages/changelog/2024-02-05-sdk-level-prompt-caching.mdx

index 1b9ca0900..53b436de9 100644

--- a/pages/changelog/2024-02-05-sdk-level-prompt-caching.mdx

+++ b/pages/changelog/2024-02-05-sdk-level-prompt-caching.mdx

@@ -31,7 +31,7 @@ prompt = langfuse.get_prompt("prompt name", cache_ttl_seconds=0)

-```typescript

+```ts

// Get current production version and cache prompt for 5 minutes

const prompt = await langfuse.getPrompt("prompt name", undefined, {

cacheTtlSeconds: 300,

diff --git a/pages/changelog/2024-04-21-openai-integration-JS-SDK.mdx b/pages/changelog/2024-04-21-openai-integration-JS-SDK.mdx

index f3f833abf..f90f955b4 100644

--- a/pages/changelog/2024-04-21-openai-integration-JS-SDK.mdx

+++ b/pages/changelog/2024-04-21-openai-integration-JS-SDK.mdx

@@ -22,7 +22,7 @@ Thanks to [@noble-varghese](https://github.com/noble-varghese) and [@RichardKrue

### Quickstart

-```typescript /import { observeOpenAI } from "langfuse"/ /observeOpenAI/

+```ts /import { observeOpenAI } from "langfuse"/ /observeOpenAI/

import OpenAI from "openai";

import { observeOpenAI } from "langfuse";

diff --git a/pages/changelog/2024-05-07-prompts-api-and-deployment-labels.mdx b/pages/changelog/2024-05-07-prompts-api-and-deployment-labels.mdx

index 7f4e5109a..8acf24eb2 100644

--- a/pages/changelog/2024-05-07-prompts-api-and-deployment-labels.mdx

+++ b/pages/changelog/2024-05-07-prompts-api-and-deployment-labels.mdx

@@ -13,7 +13,7 @@ import { ChangelogHeader } from "@/components/changelog/ChangelogHeader";

## REST API for prompt management

-We revamped the API for prompt management to allow you to fully manage prompts via the REST API. You can now fetch, create, update, and delete prompts programmatically.

+We revamped the API for prompt management to allow you to fully manage prompts via the REST API. You can now fetch, create, and update prompts programmatically.

New endpoints ([API reference](https://api.reference.langfuse.com)):

diff --git a/pages/changelog/2024-07-04-query-traces-via-sdks.mdx b/pages/changelog/2024-07-04-query-traces-via-sdks.mdx

index c25684af5..7ca11e1aa 100644

--- a/pages/changelog/2024-07-04-query-traces-via-sdks.mdx

+++ b/pages/changelog/2024-07-04-query-traces-via-sdks.mdx

@@ -47,7 +47,7 @@ sessions = langfuse.fetch_sessions()

npm install langfuse

```

-```typescript

+```ts

import { Langfuse } from "langfuse";

const langfuse = new Langfuse();

diff --git a/pages/changelog/2024-07-11-non-numeric-scores-api.mdx b/pages/changelog/2024-07-11-non-numeric-scores-api.mdx

index 09bd66817..d9166d775 100644

--- a/pages/changelog/2024-07-11-non-numeric-scores-api.mdx

+++ b/pages/changelog/2024-07-11-non-numeric-scores-api.mdx