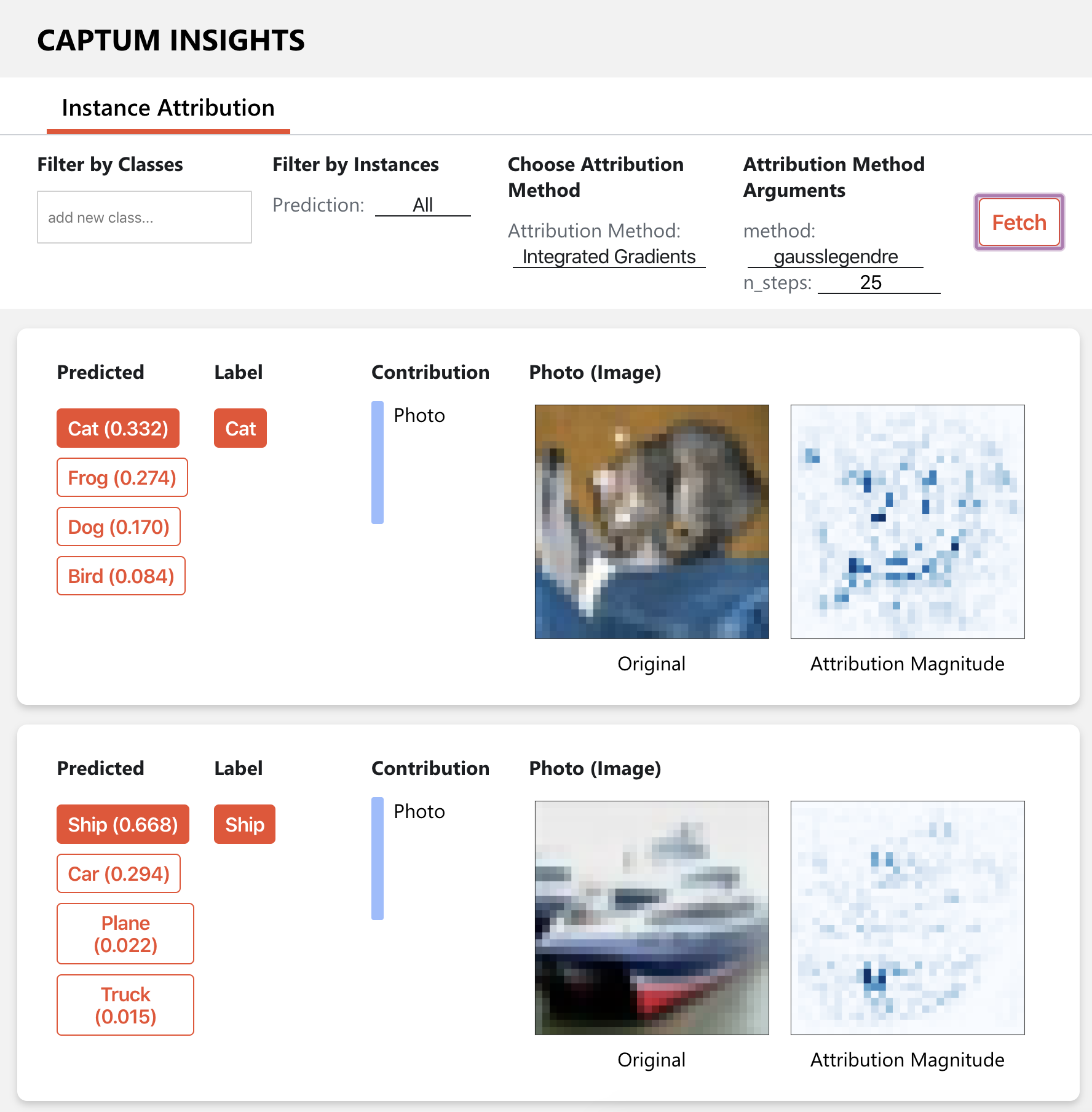

5. Interpreting the results

Interpretability, the ability to understand why an AI model made a decision, matters because developers need to be able to explain why their models make the decisions they do. DashAI uses Captum, a model interpretability library for the PyTorch deep learning framework. Captum implements state-of-the-art attribution algorithms such as Integrated Gradients, DeepLIFT, and Conductance. It lets researchers and developers interpret decisions made in multimodal settings that combine, for example, text, images, and video, and compare results against the other algorithms available in the library. You can use it to understand feature importance or to dive deeper into a neural network and examine neuron and layer attributions.

Once your models are trained, DashAI launches Captum Insights, a tool for visualizing Captum results. The dashboard lets you try different attribution methods to understand the predictions your models make. Captum Insights works with both image and text data to help users understand feature attribution.

For classification, you can inspect the interpretations for each class in your data. Supported attribution methods:

- GuidedBackprop

- InputXGradient

- IntegratedGradients

- Saliency

- FeatureAblation

- Deconvolution

Supported layer attribution methods:

- LayerIntegratedGradients

- LayerFeatureAblation