
MLCommons Taskforce on Education and Reproducibility

Mission

- help you automate and validate your MLPerf inference benchmark submissions to the v3.0 round for any hardware target (deadline: March 3, 2023) - join the related Discord channel;

- enable faster innovation while adapting to the world of rapidly evolving software, hardware and data by encoding everyone’s knowledge in the form of portable, interoperable and customizable automation recipes reusable across the community;

- modularize AI and ML Systems by decomposing them into the above automation recipes using the MLCommons CK2 automation meta-framework (aka CM);

- automate benchmarking, design space exploration and optimization of AI and ML Systems across diverse software and hardware stacks;

- help the community reproduce MLPerf benchmarks, prepare their own submissions and deploy Pareto-optimal ML/AI systems in the real world;

- support student competitions, reproducibility initiatives and artifact evaluation at ML and Systems conferences using the rigorous MLPerf methodology and the MLCommons automation meta-framework.

Co-chairs and tech leads

- Grigori Fursin (CM project coordinator)

- Arjun Suresh

Discord server

- Invite link

Meeting notes and news

- Shared doc

Conf-calls

Following our successful community submission to MLPerf inference v3.0, we will set up new weekly conf-calls shortly - please stay tuned for more details!

Please add your topics for discussion in the meeting notes or via GitHub tickets.

Mailing list

Please join our mailing list here.

GUI for MLPerf inference

On-going projects

See our R&D roadmap for Q4 2022 and Q1 2023

- Modularize MLPerf benchmarks and make it easier to run, optimize, customize and reproduce them across rapidly evolving software, hardware and data.

- Implement and enhance cross-platform CM scripts to make MLOps and DevOps more interoperable, reusable, portable, deterministic and reproducible.

- Lower the barrier of entry for new MLPerf submitters and reduce their associated costs.

- Develop a universal, modular and portable benchmarking workflow that can run on any software/hardware stack from the cloud to embedded devices.

- Automate design space exploration and optimization of the whole ML/SW/HW stack to trade off performance, accuracy, energy, size and costs.

- Automate submission of Pareto-efficient configurations to MLPerf.

- Help end-users of ML Systems visualize all MLPerf results, reproduce them and deploy Pareto-optimal ML/SW/HW stacks in production.
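
To make the design space exploration item above more concrete, here is a minimal Python sketch of how a small ML/SW/HW design space could be enumerated before benchmarking. It is not CM code: the axes, their values and the `enumerate_configs` helper are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical design-space axes; all names and values are placeholders,
# not an official list of MLPerf models, frameworks or settings.
design_space = {
    "model": ["resnet50", "retinanet"],
    "framework": ["onnxruntime", "tvm"],
    "batch_size": [1, 8, 32],
}


def enumerate_configs(space):
    """Return every combination of axis values as a list of dicts."""
    keys = list(space)
    return [
        dict(zip(keys, values))
        for values in product(*(space[key] for key in keys))
    ]


configs = enumerate_configs(design_space)
print(len(configs), "configurations to benchmark")
print(configs[0])
```

The number of configurations grows as the product of the axis sizes, which is one reason why automating this exploration matters as soon as more than a few axes are involved.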

Purpose

MLCommons is a non-profit consortium of 50+ companies that was originally created to develop a common, reproducible and fair benchmarking methodology for new AI and ML hardware.

MLCommons has developed an open-source reusable module called loadgen that efficiently and fairly measures the performance of inference systems. It generates traffic for scenarios that were formulated by a diverse set of experts from MLCommons to emulate the workloads seen in mobile devices, autonomous vehicles, robotics, and cloud-based setups.

MLCommons has also prepared several reference ML tasks, models and datasets for vision, recommendation, language processing and speech recognition to let companies benchmark and compare their new hardware in terms of accuracy, latency, throughput and energy in a reproducible way twice a year.

The first goal of this open automation and reproducibility taskforce is to develop a light-weight and open-source automation meta-framework that can make MLOps and DevOps more interoperable, reusable, portable, deterministic and reproducible.

We then use this automation meta-framework to develop plug&play workflows for the MLPerf benchmarks to make it easier for newcomers to run them across diverse hardware, software and data and automatically plug in their own ML tasks, models, data sets, engines, libraries and tools.

Another goal is to use these portable MLPerf workflows to help students, researchers and engineers participate in crowd-benchmarking and exploration of the design space tradeoffs (accuracy, latency, throughput, energy, size, etc.) of their ML Systems from the cloud to the edge using the mature MLPerf methodology while automating the submission of their Pareto-efficient configurations to the open division of the MLPerf inference benchmark.
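
As an illustration of what "Pareto-efficient" means here, the following Python sketch filters a set of benchmark results down to those that no other result beats on both latency and accuracy. It is a simplified two-metric example and not part of CM or MLPerf: the `Result` type, the configuration names and all numbers are made up for this sketch.

```python
from typing import NamedTuple


class Result(NamedTuple):
    """One benchmarked configuration (names and numbers are illustrative)."""
    name: str
    latency_ms: float  # lower is better
    accuracy: float    # higher is better


def dominates(a: Result, b: Result) -> bool:
    """True if a is no worse than b on both metrics and strictly better on one."""
    no_worse = a.latency_ms <= b.latency_ms and a.accuracy >= b.accuracy
    strictly_better = a.latency_ms < b.latency_ms or a.accuracy > b.accuracy
    return no_worse and strictly_better


def pareto_front(results: list[Result]) -> list[Result]:
    """Keep only the configurations that no other configuration dominates."""
    return [r for r in results if not any(dominates(o, r) for o in results)]


results = [
    Result("config-a", latency_ms=10.0, accuracy=0.70),
    Result("config-b", latency_ms=20.0, accuracy=0.76),
    Result("config-c", latency_ms=25.0, accuracy=0.75),  # dominated by config-b
    Result("config-d", latency_ms=40.0, accuracy=0.80),
]

front = pareto_front(results)
print([r.name for r in front])
```

In this toy data set, `config-c` is dropped because `config-b` is both faster and more accurate; the remaining three configurations each represent a different trade-off. A real exploration would add further metrics such as throughput, energy and size.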

The final goal is to help end-users reproduce MLPerf results and deploy the most suitable ML/SW/HW stacks in production based on their requirements and constraints.

Technology

This MLCommons taskforce is developing an open-source and technology-neutral Collective Mind meta-framework (CM) to modularize ML Systems and automate their benchmarking, optimization and design space exploration across continuously changing software, hardware and data.

CM is the second generation of the MLCommons CK workflow automation framework that was originally developed to make it easier to reproduce research papers and validate them in the real world.

As a proof-of-concept, this technology was successfully used to automate MLPerf benchmarking and submissions from Qualcomm, HPE, Dell, Lenovo, dividiti, Krai, the cTuning foundation and OctoML. For example, it was used and extended by Arjun Suresh with several other engineers to automate the record-breaking MLPerf inference benchmark submission for Qualcomm AI 100 devices.

The goal of this group is to help users automate all the steps to prepare and run MLPerf benchmarks across any ML models, data sets, frameworks, compilers and hardware using the MLCommons CM framework.

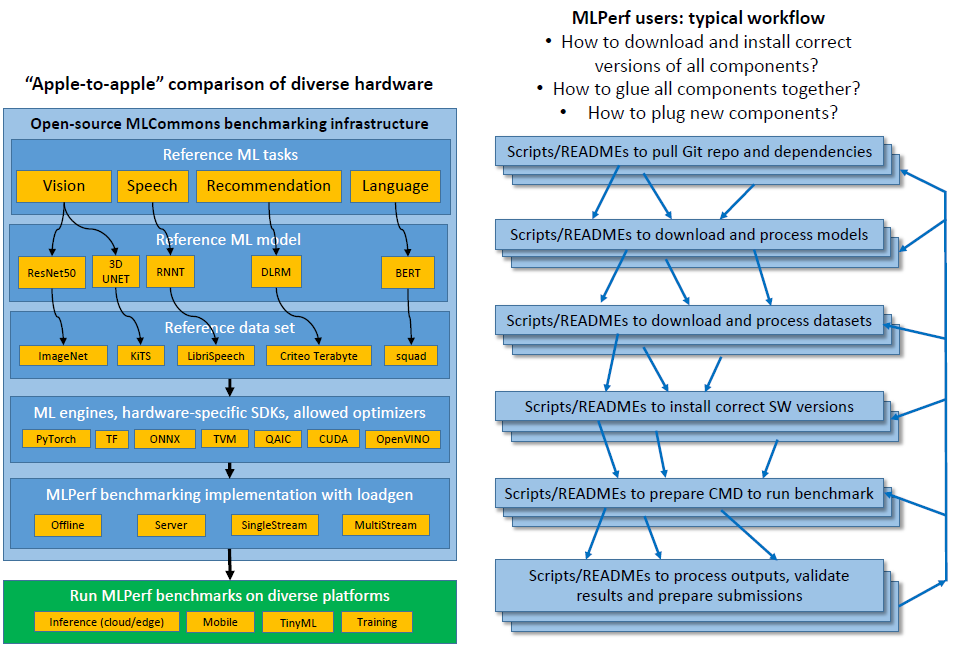

Here is an example of current manual and error-prone MLPerf benchmark preparation steps:

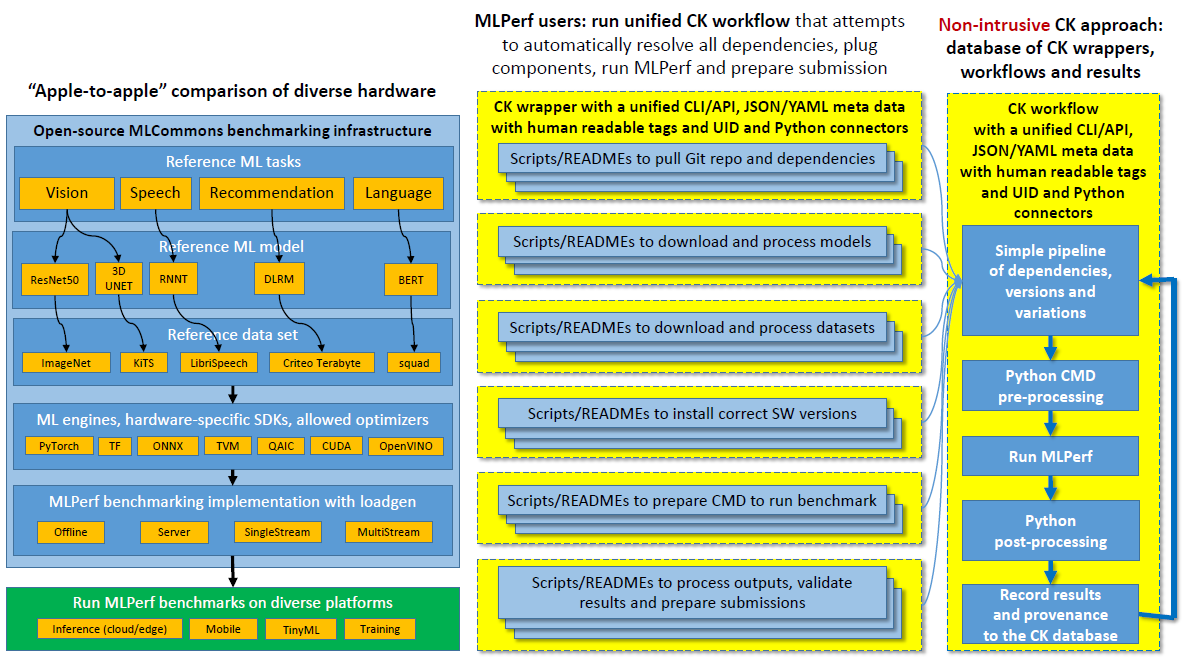

Here is the concept of CM-based automated workflows:

We finished prototyping the new CM framework in summer 2022 based on feedback from CK users and successfully used it to modularize MLPerf and automate the submission of benchmarking results to MLPerf inference v2.1. See this tutorial for more details.

We continue developing CM as an open-source educational toolkit to help the community learn how to modularize, crowd-benchmark, optimize and deploy Pareto-efficient ML Systems based on the mature MLPerf methodology and portable CM scripts - please check the deliverables section to keep track of our community developments and do not hesitate to join this community effort!

Agenda

See our R&D roadmap for Q4 2022 and Q1 2023

2022

- Prototype the new CM toolkit to modularize AI&ML systems based on the original CK concepts:
  - DONE - GitHub.

- Decompose MLPerf inference benchmark into portable, reusable and plug&play CM components:
  - DONE for image classification and object detection - GitHub.

- Demonstrate CM-based automation to submit results to MLPerf inference:
  - DONE - showcased CM automation concept for MLPerf inference v2.1 submission.

- Prepare CM-based MLPerf modularization and automation tutorial:
  - DONE - link

- Add tests to cover critical functionality of portable CM scripts for MLPerf:
  - DONE - link

- Improve CM workflow/script automation to modularize ML Systems:
  - DONE - link

- Prototype CM-based modularization of the MLPerf inference benchmark with C++ back-end and loadgen to automatically plug in different ML models, data sets, frameworks, SDKs, compilers and tools and automatically run it across different hardware and run-times:
  - Ongoing internship of Thomas Zhu from Oxford University

- Prototype CM-based automation for TinyMLPerf:
  - Ongoing

- Add basic TVM back-end to the latest MLPerf inference repo:
  - Ongoing

- Convert outdated CK components for MLPerf and MLOps into the new CM format:
  - Ongoing

- Develop a methodology to create modular containers and MLCommons MLCubes that contain CM components to run the MLPerf inference benchmarks out of the box:
  - Ongoing

- Prototype CM integration with power infrastructure (power WG) and logging infrastructure (infra WG):
  - TBD

- Process feedback from the community about CM-based modularization and crowd-benchmarking of MLPerf:
  - TBD

2023

- Upload all stable CM components for MLPerf to Zenodo or any other permanent archive to ensure the stability of all CM workflows for MLPerf and modular ML Systems.

- Develop CM automation for community crowd-benchmarking of the MLPerf benchmarks across different models, data sets, frameworks, compilers, run-times and platforms.

- Develop a customizable dashboard to visualize and analyze all MLPerf crowd-benchmarking results based on these examples from the legacy CK prototype: 1, 2.

- Share MLPerf benchmarking results in a database compatible with FAIR principles (mandated by the funding agencies in the USA and Europe) - ideally, eventually, the MLCommons general datastore.

- Connect CM-based MLPerf inference submission system with our reproducibility initiatives at ML and Systems conferences. Organize open ML/SW/HW optimization and co-design tournaments using CM and the MLPerf methodology based on our ACM ASPLOS-REQUEST'18 proof-of-concept.

- Enable automatic submission of the Pareto-efficient crowd-benchmarking results (performance/accuracy/energy/size trade-off - see this example from the legacy CK prototype) to MLPerf on behalf of MLCommons.

- Share deployable MLPerf inference containers with Pareto-efficient ML/SW/HW stacks.

Resources

- Motivation:
  - MLPerf Inference Benchmark (ArXiv paper)
  - ACM TechTalk with CK/CM intro moderated by Peter Mattson (MLCommons president)
  - Journal article with CK/CM concepts and our long-term vision
  - HPCA'22 presentation "MLPerf design space exploration and production deployment"

- Tools:
  - MLCommons CM toolkit to modularize ML&AI Systems (Apache 2.0 license)
  - Portable, reusable and customizable CM components to modularize ML and AI Systems (Apache 2.0 license)

- Google Drive (public access)

Acknowledgments

This project is supported by MLCommons, OctoML and many great contributors.


Checklist

Moved to https://github.com/ctuning/artifact-evaluation/blob/master/docs/checklist.md


FAQ

Moved to https://github.com/ctuning/artifact-evaluation/blob/master/docs/faq.md
