
I noticed that the `results` collection in my MongoDB now holds more than 4 million records.

When the paginated query fetches records for the URL list, it returns a very large amount of data and takes a long time.

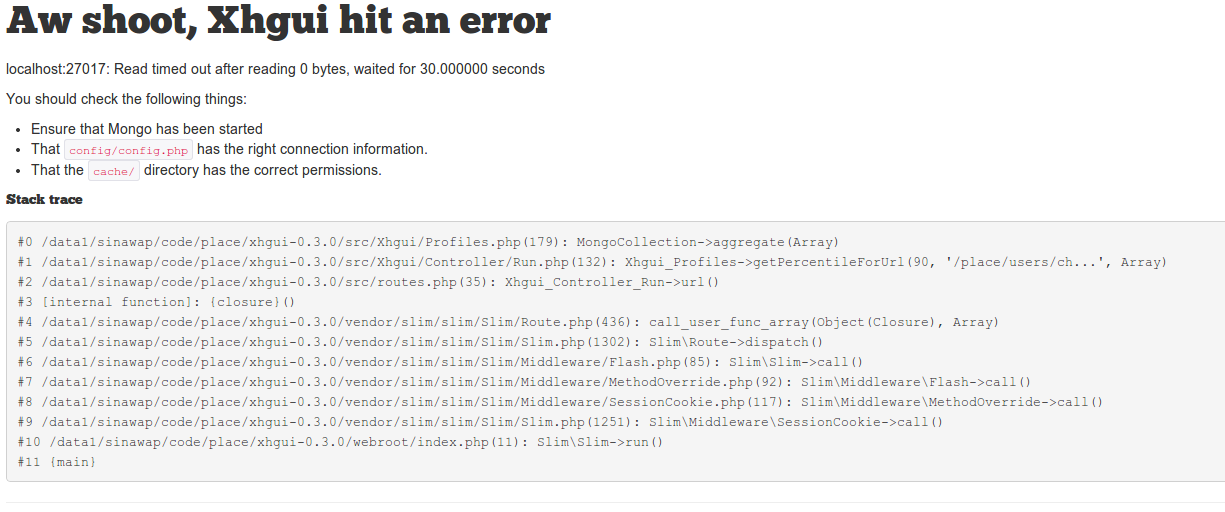

The URL `/url/view?url=*` always gives this error:

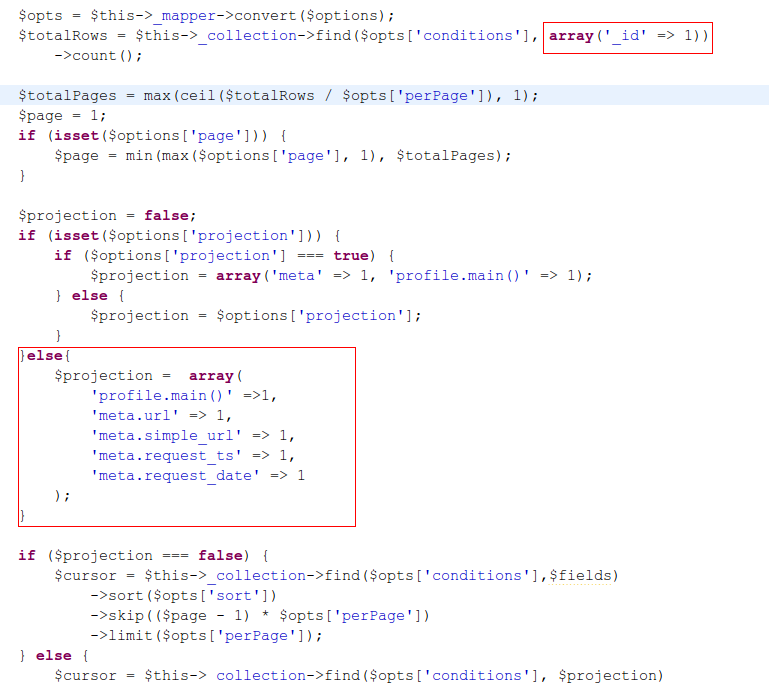

1: Most fields in each document are not needed. I passed a projection parameter to the `find` method so that it returns only the required fields, and the query became noticeably faster.
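A minimal sketch of that projection combined with pagination, written against pymongo's `Collection.find` keyword arguments (`skip`, `limit`, `sort`). The field names (`meta.simple_url`, `meta.request_ts`, `profile.main().*`) are assumptions about how xhgui stores a run, and `fetch_url_page` is a hypothetical helper, not xhgui code. The point is that the large `profile` call graph is never transferred; only the few `main()` totals are.

```python
from typing import Any, Dict, List

# Only the fields a list page needs (assumed names); the big "profile"
# subdocument is left out except for the main() totals.
LIST_PROJECTION: Dict[str, int] = {
    "meta.url": 1,
    "meta.simple_url": 1,
    "meta.request_ts": 1,
    "profile.main().wt": 1,
    "profile.main().cpu": 1,
    "profile.main().mu": 1,
    "profile.main().pmu": 1,
}


def fetch_url_page(collection: Any, url: str, page: int = 1, per_page: int = 25) -> List[dict]:
    """Return one page of runs for `url`, newest first, projected to LIST_PROJECTION.

    `collection` is expected to be a pymongo Collection (or anything whose
    find() accepts the same filter/projection/keyword arguments).
    """
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be positive")
    cursor = collection.find(
        {"meta.simple_url": url},         # hypothetical filter field
        LIST_PROJECTION,                  # projection: return only these fields
        sort=[("meta.request_ts", -1)],   # -1 == pymongo.DESCENDING
        skip=(page - 1) * per_page,
        limit=per_page,
    )
    return list(cursor)
```

Skipping deep into a 4-million-document result is still linear in `skip`, so an index on the filter and sort fields matters as much as the projection.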

2: The other reason:

The method `getPercentileForUrl` uses `aggregate`, which also takes a lot of time.
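A sketch of how such an aggregation can be kept lean, assuming it filters runs by `meta.simple_url` and a date range and that `meta.request_ts` is a BSON date. This is not `getPercentileForUrl` itself; `build_percentile_pipeline` and the field names are illustrative. `$match` comes first so it can use an index, and `$project` keeps only the day and the `main()` totals before `$group`, so the grouping stage never sees the full profile.

```python
from datetime import datetime
from typing import Any, Dict, List


def build_percentile_pipeline(url: str, start: datetime, end: datetime) -> List[Dict[str, Any]]:
    """Aggregation that collects main() timings of one URL, grouped by day."""
    return [
        # 1. Filter first; with an index on (meta.simple_url, meta.request_ts)
        #    this avoids scanning the whole collection.
        {"$match": {
            "meta.simple_url": url,
            "meta.request_ts": {"$gte": start, "$lt": end},
        }},
        # 2. Keep only the day and the two totals we need.
        {"$project": {
            "_id": 0,
            "day": {"$dateToString": {"format": "%Y-%m-%d", "date": "$meta.request_ts"}},
            "wt": "$profile.main().wt",
            "cpu": "$profile.main().cpu",
        }},
        # 3. One document per day with the raw timing arrays;
        #    percentiles are then computed on the client side.
        {"$group": {
            "_id": "$day",
            "row_count": {"$sum": 1},
            "wall_times": {"$push": "$wt"},
            "cpu_times": {"$push": "$cpu"},
        }},
        {"$sort": {"_id": 1}},
    ]
```

With pymongo this would run as `list(collection.aggregate(build_percentile_pipeline(url, start, end), allowDiskUse=True))`, after creating the compound index once with `collection.create_index([("meta.simple_url", 1), ("meta.request_ts", 1)])`.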

But I don't know how to optimize it. One idea is a crontab script that runs every day, calls this function, and saves the result in another collection; the page would then read from the new collection. That would skip the aggregation's computation time and leave only the query time.
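A sketch of that daily job, assuming the aggregation returns one group per day with `_id` (the day), `row_count`, `wall_times` and `cpu_times`, and assuming a new, hypothetical summary collection. Percentiles here use the nearest-rank method, which may not match exactly what xhgui computes. Upserting on `(url, date)` makes re-running the job for the same day harmless.

```python
import math
from typing import Any, Dict, Iterable, List, Sequence


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile; pct must be in (0, 100]."""
    if not values:
        raise ValueError("no values")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def summarize(url: str, groups: Iterable[Dict[str, Any]], pct: float = 90) -> List[Dict[str, Any]]:
    """Turn per-day aggregation groups into small summary documents."""
    return [
        {
            "url": url,
            "date": g["_id"],
            "row_count": g["row_count"],
            "wall_time": percentile(g["wall_times"], pct),
            "cpu_time": percentile(g["cpu_times"], pct),
        }
        for g in groups
    ]


def save_summaries(summary_collection: Any, summaries: Iterable[Dict[str, Any]]) -> int:
    """Upsert one document per (url, date) using pymongo's update_one."""
    count = 0
    for doc in summaries:
        summary_collection.update_one(
            {"url": doc["url"], "date": doc["date"]},
            {"$set": doc},
            upsert=True,
        )
        count += 1
    return count
```

The URL view would then only need a cheap indexed read such as `summary_collection.find({"url": url}).sort("date", 1)`.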

Or load the graph with an AJAX call to `getPercentileForUrl`.

Any other ideas?


I don't think an AJAX request will make Mongo perform better. I think changing the projection parameters is a great idea.

Adding a cron job is an option, but it complicates the installation and usage of xhgui. At this point your best option might be to reduce the size of your data set. Mongo's aggregation tools don't perform well on large datasets, and sometimes don't work at all.
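One way to cap the data set size, sketched with pymongo and assuming `meta.request_ts` is stored as a BSON date (a TTL index silently skips documents whose indexed field is not a date): let MongoDB expire old runs through a TTL index, or prune them once with `delete_many`. The five-day window and the helper names are illustrative choices, not xhgui defaults.

```python
from datetime import datetime, timedelta
from typing import Any, Dict

RETENTION = timedelta(days=5)  # illustrative retention window


def ensure_ttl_index(collection: Any, retention: timedelta = RETENTION) -> str:
    """Create a TTL index so MongoDB deletes runs older than `retention` in the background."""
    return collection.create_index(
        [("meta.request_ts", 1)],
        expireAfterSeconds=int(retention.total_seconds()),
    )


def prune_filter(now: datetime, retention: timedelta = RETENTION) -> Dict[str, Any]:
    """Filter that matches runs older than the retention window, for delete_many."""
    return {"meta.request_ts": {"$lt": now - retention}}
```

A one-off cleanup would be `collection.delete_many(prune_filter(datetime.now(timezone.utc)))` (with `timezone` imported from `datetime`). If a plain index on `meta.request_ts` already exists, MongoDB will reject creating the TTL variant with different options, so that index would need to be dropped or converted first.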
