| slug | id | title | date | comments | tags | description | references | |

|---|---|---|---|---|---|---|---|---|

174-designing-memcached |

174-designing-memcached |

Designing Memcached or an in-memory KV store |

2019-10-03 22:04 |

true |

|

Memcached = rich client + distributed servers + hash table + LRU. It features a simple server and pushes complexity to the client) and hence reliable and easy to deploy. |

- High-performance, distributed key-value store

- Why distributed?

- Answer: to hold a larger size of data <img style={{ width: 200 }} src="https://res.cloudinary.com/dohtidfqh/image/upload/v1569196539/web-guiguio/memcached2.png" />

- For in-memory storage of small data objects

- Simple server (pushing complexity to the client) and hence reliable and easy to deploy

Big Picture: Client-server

<img style={{ width: 256 }} src="https://res.cloudinary.com/dohtidfqh/image/upload/v1569196539/web-guiguio/memcached1.png" />

- client

- given a list of Memcached servers

- chooses a server based on the key

- server

- store KVs into the internal hash table

- LRU eviction

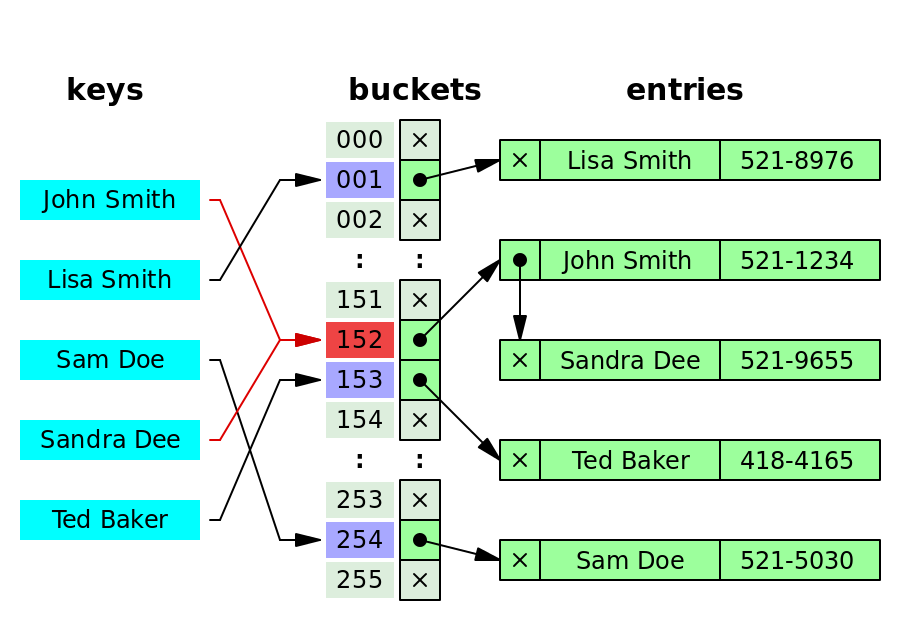

The Key-value server consists of a fixed-size hash table + single-threaded handler + coarse locking

How to handle collisions? Mostly three ways to resolve:

- Separate chaining: the collided bucket chains a list of entries with the same index, and you can always append the newly collided key-value pair to the list.

- open addressing: if there is a collision, go to the next index until finding an available bucket.

- dynamic resizing: resize the hash table and allocate more spaces; hence, collisions will happen less frequently.

See Data Partition and Routing

See Key value cache