+# Installation

@@ -143,8 +139,8 @@

- Install dependencies

```bash

sudo apt-get install python3-rosdep -y

- sudo rosdep init # "sudo rosdep init --include-eol-distros" for Eloquent and earlier

- rosdep update # "sudo rosdep update --include-eol-distros" for Eloquent and earlier

+ sudo rosdep init # "sudo rosdep init --include-eol-distros" for Foxy and earlier

+ rosdep update # "sudo rosdep update --include-eol-distros" for Foxy and earlier

rosdep install -i --from-path src --rosdistro $ROS_DISTRO --skip-keys=librealsense2 -y

```

@@ -165,13 +161,9 @@

-

- Usage

-

+# Usage

-

- Start the camera node

-

+## Start the camera node

#### with ros2 run:

ros2 run realsense2_camera realsense2_camera_node

@@ -184,11 +176,8 @@

-

- Camera Name And Camera Namespace

-

+## Camera Name And Camera Namespace

-### Usage

Users can set the camera name and camera namespace to distinguish between cameras and platforms, which helps identify the right nodes and topics to work with.

### Example

@@ -203,7 +192,7 @@ User can set the camera name and camera namespace, to distinguish between camera

```ros2 launch realsense2_camera rs_launch.py camera_namespace:=robot1 camera_name:=D455_1```

- - With ros2 run (using remapping mechanisim [Reference](https://docs.ros.org/en/foxy/How-To-Guides/Node-arguments.html)):

+ - With ros2 run (using remapping mechanism [Reference](https://docs.ros.org/en/humble/How-To-Guides/Node-arguments.html)):

```ros2 run realsense2_camera realsense2_camera_node --ros-args -r __node:=D455_1 -r __ns:=robot1```
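The topic names quoted elsewhere in this README (for example `/camera/camera/device_info`) suggest that topics are prefixed with the camera namespace followed by the camera name. The sketch below only illustrates that naming pattern; `topic_name` is a hypothetical helper, not part of the wrapper, and the exact rules the node applies may differ.

```python
def topic_name(camera_namespace: str, camera_name: str, suffix: str) -> str:
    """Build '/<namespace>/<name>/<suffix>', skipping empty components."""
    parts = [camera_namespace, camera_name, suffix]
    # Strip stray slashes so components join with exactly one '/'.
    cleaned = [p.strip("/") for p in parts if p.strip("/")]
    return "/" + "/".join(cleaned)


if __name__ == "__main__":
    # Namespace and name taken from the launch example above.
    print(topic_name("robot1", "D455_1", "color/image_raw"))
```

With the values from the example above, the helper yields a name under `/robot1/D455_1/`, which is where `ros2 topic list` would be expected to show the camera's topics.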

@@ -248,13 +237,9 @@ User can set the camera name and camera namespace, to distinguish between camera

/camera/camera/device_info

```

-

-

-

- Parameters

-

+## Parameters

### Available Parameters:

- For the entire list of parameters type `ros2 param list`.

@@ -269,13 +254,13 @@ User can set the camera name and camera namespace, to distinguish between camera

- They have, at least, the **profile** parameter.

- The profile parameter is a string of the following format: \<width>X\<height>X\<fps> (The dividing character can be X, x or ",". Spaces are ignored.)

- For example: ```depth_module.profile:=640x480x30 rgb_camera.profile:=1280x720x30```

- - Since infra, infra1, infra2, fisheye, fisheye1, fisheye2 and depth are all streams of the depth_module, their width, height and fps are defined by the same param **depth_module.profile**

+ - Since infra, infra1, infra2 and depth are all streams of the depth_module, their width, height and fps are defined by the same param **depth_module.profile**

- If the specified combination of parameters is not available by the device, the default or previously set configuration will be used.

- Run ```ros2 param describe <your_node_name> <param_name>``` to get the list of supported profiles.

- Note: Should re-enable the stream for the change to take effect.

- **\<stream_name>_format**

- This parameter is a string used to select the stream format.

- - can be any of *infra, infra1, infra2, color, depth, fisheye, fisheye1, fisheye2*.

+ - \<stream_name> can be any of *infra, infra1, infra2, color, depth*.

- For example: ```depth_module.depth_format:=Z16 depth_module.infra1_format:=y8 rgb_camera.color_format:=RGB8```

- This parameter supports both lower case and upper case letters.

- If the specified parameter is not available by the stream, the default or previously set configuration will be used.
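As a rough illustration of the profile string format described in this list (width, height and fps separated by `X`, `x` or `,`, with spaces ignored), the following Python sketch parses such a string. The function name `parse_profile` is hypothetical and not part of the wrapper, and the wrapper's own parsing may handle errors differently.

```python
import re
from typing import Tuple


def parse_profile(profile: str) -> Tuple[int, int, int]:
    """Parse a profile string such as '640x480x30' into (width, height, fps)."""
    # Spaces are ignored; the separator may be 'X', 'x' or ','.
    compact = profile.replace(" ", "")
    parts = re.split(r"[Xx,]", compact)
    if len(parts) != 3 or not all(p.isdigit() for p in parts):
        raise ValueError(f"invalid profile string: {profile!r}")
    width, height, fps = (int(p) for p in parts)
    return width, height, fps


if __name__ == "__main__":
    print(parse_profile("640x480x30"))
    print(parse_profile("1280 X 720 , 30"))
```

A check like this can be useful in a launch script to catch typos before the node falls back to its default or previously set configuration.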

@@ -286,14 +271,14 @@ User can set the camera name and camera namespace, to distinguish between camera

- Run ```rs-enumerate-devices``` command to know the list of profiles supported by the connected sensors.

- **enable_\<stream_name>**:

- Choose whether to enable a specified stream or not. Default is true for images and false for orientation streams.

- - can be any of *infra, infra1, infra2, color, depth, fisheye, fisheye1, fisheye2, gyro, accel, pose*.

+ - \<stream_name> can be any of *infra, infra1, infra2, color, depth, gyro, accel*.

- For example: ```enable_infra1:=true enable_color:=false```

- **enable_sync**:

- Gathers the closest frames of the different sensors (infrared, color and depth) so that they are sent with the same time tag.

- This happens automatically when such filters as pointcloud are enabled.

- **\<stream_name>_qos**:

- Sets the QoS by which the topic is published.

- - can be any of *infra, infra1, infra2, color, depth, fisheye, fisheye1, fisheye2, gyro, accel, pose*.

+ - \<stream_name> can be any of *infra, infra1, infra2, color, depth, gyro, accel*.

- Available values are the following strings: `SYSTEM_DEFAULT`, `DEFAULT`, `PARAMETER_EVENTS`, `SERVICES_DEFAULT`, `PARAMETERS`, `SENSOR_DATA`.

- For example: ```depth_qos:=SENSOR_DATA```

- Reference: [ROS2 QoS profiles formal documentation](https://docs.ros.org/en/rolling/Concepts/About-Quality-of-Service-Settings.html#qos-profiles)

@@ -354,7 +339,6 @@ User can set the camera name and camera namespace, to distinguish between camera

- If set to true, the device will reset prior to usage.

- For example: `initial_reset:=true`

- **base_frame_id**: defines the frame_id that all static transformations refer to.

-- **odom_frame_id**: defines the origin coordinate system in ROS convention (X-Forward, Y-Left, Z-Up). pose topic defines the pose relative to that system.

- **clip_distance**:

- Remove from the depth image all values above a given value (meters). Disable by giving negative value (default)

- For example: `clip_distance:=1.5`

@@ -371,13 +355,9 @@ User can set the camera name and camera namespace, to distinguish between camera

- 0 or negative values mean no diagnostics topic is published. Defaults to 0.

The `/diagnostics` topic includes information regarding the device temperatures and actual frequency of the enabled streams.

-- **publish_odom_tf**: If True (default) publish TF from odom_frame to pose_frame.

-

-

- ROS2(Robot) vs Optical(Camera) Coordination Systems:

-

+## ROS2(Robot) vs Optical(Camera) Coordination Systems:

- Point Of View:

- Imagine we are standing behind the camera, looking forward.

@@ -393,9 +373,7 @@ The `/diagnostics` topic includes information regarding the device temperatures

-

- TF from coordinate A to coordinate B:

-

+## TF from coordinate A to coordinate B:

- TF msg expresses a transform from coordinate frame "header.frame_id" (source) to the coordinate frame child_frame_id (destination) [Reference](http://docs.ros.org/en/noetic/api/geometry_msgs/html/msg/Transform.html)

- In RealSense cameras, the origin point (0,0,0) is taken from the left IR (infra1) position and named as "camera_link" frame

@@ -407,10 +385,7 @@ The `/diagnostics` topic includes information regarding the device temperatures

-

- Extrinsics from sensor A to sensor B:

-

-

+## Extrinsics from sensor A to sensor B:

- Extrinsic from sensor A to sensor B means the position and orientation of sensor A relative to sensor B.

- Imagine that B is the origin (0,0,0); the Extrinsics(A->B) then describe where sensor A is relative to sensor B.

@@ -445,9 +420,7 @@ translation:

-

- Published Topics

-

+## Published Topics

The published topics differ according to the device and parameters.

After running the above command with D435i attached, the following list of topics will be available (This is a partial list. For full one type `ros2 topic list`):

@@ -489,9 +462,7 @@ Enabling stream adds matching topics. For instance, enabling the gyro and accel

-

- RGBD Topic

-

+## RGBD Topic

The new RGBD topic publishes [RGB + Depth] in the same message (see RGBD.msg for reference). For now, it works only with depth aligned to color images, as color and depth images are synchronized by frame time tag.

@@ -511,9 +482,7 @@ ros2 launch realsense2_camera rs_launch.py enable_rgbd:=true enable_sync:=true a

-

- Metadata topic

-

+## Metadata topic

The metadata messages store the camera's available metadata in a *json* format. To learn more, a dedicated script for echoing a metadata topic in runtime is attached. For instance, use the following command to echo the camera/depth/metadata topic:

```

@@ -522,10 +491,8 @@ python3 src/realsense-ros/realsense2_camera/scripts/echo_metadada.py /camera/cam

-

- Post-Processing Filters

-

-

+## Post-Processing Filters

+

The following post processing filters are available:

- ```align_depth```: If enabled, will publish the depth image aligned to the color image on the topic `/camera/camera/aligned_depth_to_color/image_raw`.

- The pointcloud, if created, will be based on the aligned depth image.

@@ -555,17 +522,13 @@ Each of the above filters have it's own parameters, following the naming convent

-

- Available services

-

+## Available services

- device_info : retrieve information about the device - serial_number, firmware_version etc. Type `ros2 interface show realsense2_camera_msgs/srv/DeviceInfo` for the full list. Call example: `ros2 service call /camera/camera/device_info realsense2_camera_msgs/srv/DeviceInfo`

-

- Efficient intra-process communication:

-

+## Efficient intra-process communication:

Our ROS2 Wrapper node supports zero-copy communications if loaded in the same process as a subscriber node. This can reduce copy times on image/pointcloud topics, especially with big frame resolutions and high FPS.

diff --git a/realsense2_camera/CMakeLists.txt b/realsense2_camera/CMakeLists.txt

index 0c550a8994..eead7eaf39 100644

--- a/realsense2_camera/CMakeLists.txt

+++ b/realsense2_camera/CMakeLists.txt

@@ -144,34 +144,18 @@ set(SOURCES

if(NOT DEFINED ENV{ROS_DISTRO})

message(FATAL_ERROR "ROS_DISTRO is not defined." )

endif()

-if("$ENV{ROS_DISTRO}" STREQUAL "dashing")

- message(STATUS "Build for ROS2 Dashing")

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DDASHING")

- set(SOURCES "${SOURCES}" src/ros_param_backend_dashing.cpp)

-elseif("$ENV{ROS_DISTRO}" STREQUAL "eloquent")

- message(STATUS "Build for ROS2 eloquent")

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DELOQUENT")

- set(SOURCES "${SOURCES}" src/ros_param_backend_foxy.cpp)

-elseif("$ENV{ROS_DISTRO}" STREQUAL "foxy")

- message(STATUS "Build for ROS2 Foxy")

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DFOXY")

- set(SOURCES "${SOURCES}" src/ros_param_backend_foxy.cpp)

-elseif("$ENV{ROS_DISTRO}" STREQUAL "galactic")

- message(STATUS "Build for ROS2 Galactic")

- set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DGALACTIC")

- set(SOURCES "${SOURCES}" src/ros_param_backend_foxy.cpp)

-elseif("$ENV{ROS_DISTRO}" STREQUAL "humble")

+if("$ENV{ROS_DISTRO}" STREQUAL "humble")

message(STATUS "Build for ROS2 Humble")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DHUMBLE")

- set(SOURCES "${SOURCES}" src/ros_param_backend_foxy.cpp)

+ set(SOURCES "${SOURCES}" src/ros_param_backend.cpp)

elseif("$ENV{ROS_DISTRO}" STREQUAL "iron")

message(STATUS "Build for ROS2 Iron")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DIRON")

- set(SOURCES "${SOURCES}" src/ros_param_backend_foxy.cpp)

+ set(SOURCES "${SOURCES}" src/ros_param_backend.cpp)

elseif("$ENV{ROS_DISTRO}" STREQUAL "rolling")

message(STATUS "Build for ROS2 Rolling")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -DROLLING")

- set(SOURCES "${SOURCES}" src/ros_param_backend_rolling.cpp)

+ set(SOURCES "${SOURCES}" src/ros_param_backend.cpp)

else()

message(FATAL_ERROR "Unsupported ROS Distribution: " "$ENV{ROS_DISTRO}")

endif()

@@ -320,6 +304,36 @@ if(BUILD_TESTING)

)

endforeach()

endforeach()

+

+ unset(_pytest_folders)

+

+ set(rs_query_cmd "rs-enumerate-devices -s")

+ execute_process(COMMAND bash -c ${rs_query_cmd}

+ WORKING_DIRECTORY ${PROJECT_SOURCE_DIR}

+ RESULT_VARIABLE rs_result

+ OUTPUT_VARIABLE RS_DEVICE_INFO)

+ message(STATUS "rs_device_info:")

+ message(STATUS "${RS_DEVICE_INFO}")

+ if((RS_DEVICE_INFO MATCHES "D455") OR (RS_DEVICE_INFO MATCHES "D415") OR (RS_DEVICE_INFO MATCHES "D435"))

+ message(STATUS "D455, D415 or D435 device found")

+ set(_pytest_live_folders

+ test/live_camera

+ )

+ endif()

+

+ foreach(test_folder ${_pytest_live_folders})

+ file(GLOB files "${test_folder}/test_*.py")

+ foreach(file ${files})

+

+ get_filename_component(_test_name ${file} NAME_WE)

+ ament_add_pytest_test(${_test_name} ${file}

+ APPEND_ENV PYTHONPATH=${CMAKE_CURRENT_BINARY_DIR}:${CMAKE_SOURCE_DIR}/test/utils:${CMAKE_SOURCE_DIR}/launch:${CMAKE_SOURCE_DIR}/scripts

+ TIMEOUT 500

+ WORKING_DIRECTORY ${CMAKE_SOURCE_DIR}

+ )

+ endforeach()

+ endforeach()

+

endif()

# Ament exports

diff --git a/realsense2_camera/examples/align_depth/README.md b/realsense2_camera/examples/align_depth/README.md

new file mode 100644

index 0000000000..3b8f13b826

--- /dev/null

+++ b/realsense2_camera/examples/align_depth/README.md

@@ -0,0 +1,12 @@

+# Align Depth to Color

+This example shows how to start the camera node and align depth stream to color stream.

+```

+ros2 launch realsense2_camera rs_align_depth_launch.py

+```

+

+The aligned image will be published to the topic "/camera/camera/aligned_depth_to_color/image_raw" (with the default camera name and namespace).

+

+Aligning depth to color can also be enabled with the following command:

+```

+ros2 launch realsense2_camera rs_launch.py align_depth.enable:=true

+```

diff --git a/realsense2_camera/examples/dual_camera/README.md b/realsense2_camera/examples/dual_camera/README.md

new file mode 100644

index 0000000000..03a7cfa08f

--- /dev/null

+++ b/realsense2_camera/examples/dual_camera/README.md

@@ -0,0 +1,123 @@

+# Launching Dual RS ROS2 nodes

+The following example launches two RS ROS2 nodes.

+```

+ros2 launch realsense2_camera rs_dual_camera_launch.py serial_no1:=<serial number of the first camera> serial_no2:=<serial number of the second camera>

+```

+

+## Example:

+Let's say the serial numbers of two RS cameras are 207322251310 and 234422060144.

+```

+ros2 launch realsense2_camera rs_dual_camera_launch.py serial_no1:="'207322251310'" serial_no2:="'234422060144'"

+```

+or

+```

+ros2 launch realsense2_camera rs_dual_camera_launch.py serial_no1:=_207322251310 serial_no2:=_234422060144

+```

+

+## How to know the serial number?

+Method 1: Using the rs-enumerate-devices tool

+```

+rs-enumerate-devices | grep "Serial Number"

+```

+

+Method 2: Connect a single camera and run

+```

+ros2 launch realsense2_camera rs_launch.py

+```

+and look for the serial number in the log printed to screen under "[INFO][...] Device Serial No:".

+

+# Using multiple RS cameras by launching each in a different terminal

+Make sure you set a different name and namespace for each camera.

+

+Terminal 1:

+```

+ros2 launch realsense2_camera rs_launch.py serial_no:="'207322251310'" camera_name:='camera1' camera_namespace:='camera1'

+```

+Terminal 2:

+```

+ros2 launch realsense2_camera rs_launch.py serial_no:="'234422060144'" camera_name:='camera2' camera_namespace:='camera2'

+```

+

+# Multiple cameras showing a semi-unified pointcloud

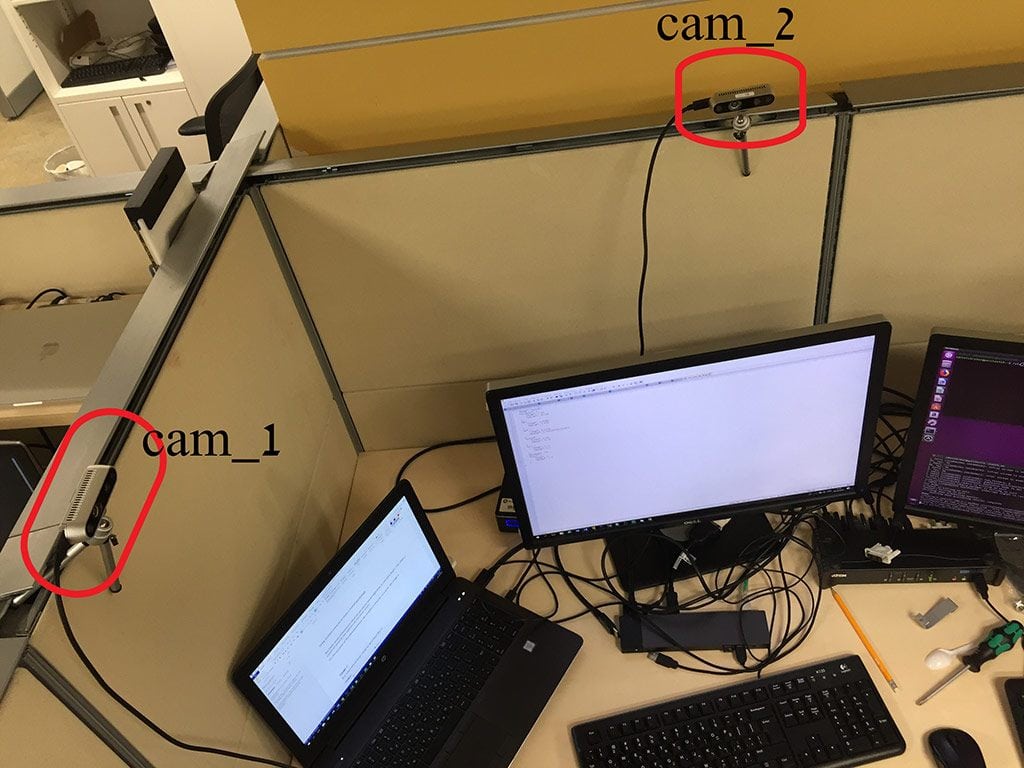

+The D430 series of RealSense cameras use stereo based algorithm to calculate depth. This mean, a couple of cameras can operate on the same scene. For the purpose of this demonstration, let's say 2 cameras can be coupled to look at the same scene from 2 different points of view. See image:

+

+

+

+The schematic settings could be described as:

+X--------------------------------->cam_2

+| (70 cm)

+|

+|

+| (60 cm)

+|

+|

+/

+cam_1

+

+The cameras have no data regarding their relative position. That is up to a third-party program to set. To simplify things, the coordinate system of cam_1 can be considered the reference coordinate system for the whole scene.

+

+The estimated translation of cam_2 from cam_1 is 70 cm on the X-axis and 60 cm on the Y-axis. The estimated yaw angle of cam_2 relative to cam_1 is 90 degrees clockwise. These are the initial parameters for the transformation between the 2 cameras, set as follows:

+

+```

+ros2 launch realsense2_camera rs_dual_camera_launch.py serial_no1:=_207322251310 serial_no2:=_234422060144 tf.translation.x:=0.7 tf.translation.y:=0.6 tf.translation.z:=0.0 tf.rotation.yaw:=-90.0 tf.rotation.pitch:=0.0 tf.rotation.roll:=0.0

+```
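Other tooling often expects a rotation as a quaternion rather than as yaw/pitch/roll in degrees. The Python sketch below shows one way to convert the values used in the command above. It assumes the common ZYX convention (yaw about Z, then pitch about Y, then roll about X), which is an assumption and should be checked against the launch file. `ypr_to_quaternion` is a hypothetical helper, not part of the package.

```python
import math


def ypr_to_quaternion(yaw_deg, pitch_deg, roll_deg):
    """Convert yaw/pitch/roll in degrees (assumed ZYX order) to (x, y, z, w)."""
    # Half angles in radians, as used by the standard Euler-to-quaternion formula.
    y, p, r = (math.radians(a) / 2.0 for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    qx = sr * cp * cy - cr * sp * sy
    qy = cr * sp * cy + sr * cp * sy
    qz = cr * cp * sy - sr * sp * cy
    qw = cr * cp * cy + sr * sp * sy
    return qx, qy, qz, qw


if __name__ == "__main__":
    # 70 cm and 60 cm expressed in meters, yaw of 90 degrees clockwise.
    translation_m = (70 / 100.0, 60 / 100.0, 0.0)
    rotation = ypr_to_quaternion(-90.0, 0.0, 0.0)
    print(translation_m, rotation)
```

A clockwise yaw is passed as a negative angle here, matching the `tf.rotation.yaw:=-90.0` value in the launch command.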

+

+If the unified pointcloud result is not good, follow the steps below to fine-tune the calibration.

+

+## Visualizing the pointclouds and fine-tuning the camera calibration

+Launch 2 cameras in separate terminals:

+

+**Terminal 1:**

+```

+ros2 launch realsense2_camera rs_launch.py serial_no:="'207322251310'" camera_name:='camera1' camera_namespace:='camera1'

+```

+**Terminal 2:**

+```

+ros2 launch realsense2_camera rs_launch.py serial_no:="'234422060144'" camera_name:='camera2' camera_namespace:='camera2'

+```

+**Terminal 3:**

+```

+rviz2

+```

+Open rviz and set 'Fixed Frame' to camera1_link

+Add Pointcloud2 -> By topic -> /camera1/camera1/depth/color/points

+Add Pointcloud2 -> By topic -> /camera2/camera2/depth/color/points

+

+**Terminal 4:**

+Run the 'set_cams_transforms.py' tool. It can be used to fine-tune the calibration.

+```

+python src/realsense-ros/realsense2_camera/scripts/set_cams_transforms.py camera1_link camera2_link 0.7 0.6 0 -90 0 0

+```

+

+**Instructions printed by the tool:**

+```

+Using default file /home/user_name/ros2_ws/src/realsense-ros/realsense2_camera/scripts/_set_cams_info_file.txt

+

+Use given initial values.

+

+Press the following keys to change mode: x, y, z, (a)zimuth, (p)itch, (r)oll

+

+For each mode, press 6 to increase by step and 4 to decrease

+

+Press + to multiply step by 2 or - to divide

+

+Press Q to quit

+```

+

+Note that the tool prints the path of the current configuration file. It saves its last configuration automatically, all the time, to be used on the next run.

+

+After some manual tuning, the unified pointcloud looked better with the following calibration:

+```

+x = 0.75

+y = 0.575

+z = 0

+azimuth = -91.25

+pitch = 0.75

+roll = 0

+```

+

+Now, use the above results in the launch file:

+```

+ros2 launch realsense2_camera rs_dual_camera_launch.py serial_no1:=_207322251310 serial_no2:=_234422060144 tf.translation.x:=0.75 tf.translation.y:=0.575 tf.translation.z:=0.0 tf.rotation.yaw:=-91.25 tf.rotation.pitch:=0.75 tf.rotation.roll:=0.0

+```

+

diff --git a/realsense2_camera/examples/dual_camera/rs_dual_camera_launch.py b/realsense2_camera/examples/dual_camera/rs_dual_camera_launch.py

index 4a4db4a275..81643d2c7e 100644

--- a/realsense2_camera/examples/dual_camera/rs_dual_camera_launch.py

+++ b/realsense2_camera/examples/dual_camera/rs_dual_camera_launch.py

@@ -19,7 +19,7 @@

# For each device, the parameter name was changed to include an index.

# For example: to set camera_name for device1 set parameter camera_name1.

# command line example:

-# ros2 launch realsense2_camera rs_dual_camera_launch.py camera_name1:=D400 device_type2:=l5. device_type1:=d4..

+# ros2 launch realsense2_camera rs_dual_camera_launch.py serial_no1:=<serial number of the first camera> serial_no2:=<serial number of the second camera>

"""Launch realsense2_camera node."""

import copy

@@ -68,7 +68,7 @@ def duplicate_params(general_params, posix):

return local_params

def launch_static_transform_publisher_node(context : LaunchContext):

- # dummy static transformation from camera1 to camera2

+ # Static transformation from camera1 to camera2

node = launch_ros.actions.Node(

name = "my_static_transform_publisher",

package = "tf2_ros",

@@ -104,6 +104,6 @@ def generate_launch_description():

namespace='',

executable='rviz2',

name='rviz2',

- arguments=['-d', [ThisLaunchFileDir(), '/dual_camera_pointcloud.rviz']]

+ arguments=['-d', [ThisLaunchFileDir(), '/rviz/dual_camera_pointcloud.rviz']]

)

])

diff --git a/realsense2_camera/examples/dual_camera/dual_camera_pointcloud.rviz b/realsense2_camera/examples/dual_camera/rviz/dual_camera_pointcloud.rviz

similarity index 98%

rename from realsense2_camera/examples/dual_camera/dual_camera_pointcloud.rviz

rename to realsense2_camera/examples/dual_camera/rviz/dual_camera_pointcloud.rviz

index 3469eda9d8..1a4c0caa11 100644

--- a/realsense2_camera/examples/dual_camera/dual_camera_pointcloud.rviz

+++ b/realsense2_camera/examples/dual_camera/rviz/dual_camera_pointcloud.rviz

@@ -74,7 +74,7 @@ Visualization Manager:

Filter size: 10

History Policy: Keep Last

Reliability Policy: Reliable

- Value: /camera1/depth/color/points

+ Value: /camera1/camera1/depth/color/points

Use Fixed Frame: true

Use rainbow: true

Value: true

@@ -108,7 +108,7 @@ Visualization Manager:

Filter size: 10

History Policy: Keep Last

Reliability Policy: Reliable

- Value: /camera2/depth/color/points

+ Value: /camera2/camera2/depth/color/points

Use Fixed Frame: true

Use rainbow: true

Value: true

diff --git a/realsense2_camera/examples/launch_from_rosbag/README.md b/realsense2_camera/examples/launch_from_rosbag/README.md

new file mode 100644

index 0000000000..5bfbb017f5

--- /dev/null

+++ b/realsense2_camera/examples/launch_from_rosbag/README.md

@@ -0,0 +1,17 @@

+# Launching RS ROS2 node from rosbag File

+The following example allows streaming a rosbag file, saved by Intel RealSense Viewer, instead of streaming live with a camera. It can be used for testing and repetition of the same sequence.

+```

+ros2 launch realsense2_camera rs_launch_from_rosbag.py

+```

+By default, the 'rs_launch_from_rosbag.py' launch file uses the "/rosbag/D435i_Depth_and_IMU_Stands_still.bag" rosbag file.

+

+Users can also provide a different rosbag file on the command line as follows:

+```

+ros2 launch realsense2_camera rs_launch_from_rosbag.py rosbag_filename:="/full/path/to/rosbag/file"

+```

+or

+```

+ros2 launch realsense2_camera rs_launch.py rosbag_filename:="/full/path/to/rosbag/file"

+```

+

+Check out [sample-recordings](https://github.com/IntelRealSense/librealsense/blob/master/doc/sample-data.md) for a few recorded samples.

\ No newline at end of file

diff --git a/realsense2_camera/examples/launch_from_rosbag/rs_launch_from_rosbag.py b/realsense2_camera/examples/launch_from_rosbag/rs_launch_from_rosbag.py

index ad9b76667b..a0d9620cad 100644

--- a/realsense2_camera/examples/launch_from_rosbag/rs_launch_from_rosbag.py

+++ b/realsense2_camera/examples/launch_from_rosbag/rs_launch_from_rosbag.py

@@ -37,7 +37,7 @@

{'name': 'enable_depth', 'default': 'true', 'description': 'enable depth stream'},

{'name': 'enable_gyro', 'default': 'true', 'description': "'enable gyro stream'"},

{'name': 'enable_accel', 'default': 'true', 'description': "'enable accel stream'"},

- {'name': 'rosbag_filename', 'default': [ThisLaunchFileDir(), "/D435i_Depth_and_IMU_Stands_still.bag"], 'description': 'A realsense bagfile to run from as a device'},

+ {'name': 'rosbag_filename', 'default': [ThisLaunchFileDir(), "/rosbag/D435i_Depth_and_IMU_Stands_still.bag"], 'description': 'A realsense bagfile to run from as a device'},

]

def set_configurable_parameters(local_params):

diff --git a/realsense2_camera/examples/launch_params_from_file/README.md b/realsense2_camera/examples/launch_params_from_file/README.md

new file mode 100644

index 0000000000..ce9980aa5b

--- /dev/null

+++ b/realsense2_camera/examples/launch_params_from_file/README.md

@@ -0,0 +1,33 @@

+# Get RS ROS2 node params from YAML file

+The following example gets the RS ROS2 node params from a YAML file.

+```

+ros2 launch realsense2_camera rs_launch_get_params_from_yaml.py

+```

+

+By default, the 'rs_launch_get_params_from_yaml.py' launch file uses the "/config/config.yaml" YAML file.

+

+Users can provide a different YAML file on the command line as follows:

+```

+ros2 launch realsense2_camera rs_launch_get_params_from_yaml.py config_file:="/full/path/to/config/file"

+```

+or

+```

+ros2 launch realsense2_camera rs_launch.py config_file:="/full/path/to/config/file"

+```

+

+## Syntax for defining params in YAML file

+```

+param1: value

+param2: value

+```

+

+Example:

+```

+enable_color: true

+rgb_camera.profile: 1280x720x15

+enable_depth: true

+align_depth.enable: true

+enable_sync: true

+publish_tf: true

+tf_publish_rate: 1.0

+```
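To make the flat `param: value` syntax above concrete, here is a small Python sketch that reads such lines into a dictionary using only the standard library. It is a deliberate simplification for illustration: it handles only flat key/value pairs, `parse_flat_params` is a hypothetical helper, and a real launch file would more likely rely on a proper YAML parser.

```python
def _convert(value: str):
    """Turn 'true'/'false' into bool and numeric text into int or float."""
    lowered = value.lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    for cast in (int, float):
        try:
            return cast(value)
        except ValueError:
            pass
    return value  # anything else (e.g. '1280x720x15') stays a string


def parse_flat_params(text: str) -> dict:
    """Parse flat 'key: value' lines; nested YAML is not supported."""
    params = {}
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and blanks
        if not line:
            continue
        key, sep, value = line.partition(":")
        if not sep:
            raise ValueError(f"expected 'key: value', got: {raw_line!r}")
        params[key.strip()] = _convert(value.strip())
    return params


if __name__ == "__main__":
    example = "enable_color: true\nrgb_camera.profile: 1280x720x15\ntf_publish_rate: 1.0\n"
    print(parse_flat_params(example))
```

Note that the profile value stays a string while `true` and `1.0` become a boolean and a float, which mirrors how the example values above would normally be typed.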

\ No newline at end of file

diff --git a/realsense2_camera/examples/launch_params_from_file/config.yaml b/realsense2_camera/examples/launch_params_from_file/config/config.yaml

similarity index 74%

rename from realsense2_camera/examples/launch_params_from_file/config.yaml

rename to realsense2_camera/examples/launch_params_from_file/config/config.yaml

index 8b8bcc1709..d15a19a0fe 100644

--- a/realsense2_camera/examples/launch_params_from_file/config.yaml

+++ b/realsense2_camera/examples/launch_params_from_file/config/config.yaml

@@ -3,4 +3,5 @@ rgb_camera.profile: 1280x720x15

enable_depth: true

align_depth.enable: true

enable_sync: true

-

+publish_tf: true

+tf_publish_rate: 1.0

diff --git a/realsense2_camera/examples/launch_params_from_file/rs_launch_get_params_from_yaml.py b/realsense2_camera/examples/launch_params_from_file/rs_launch_get_params_from_yaml.py

index a6b5582039..c0ebd7bfbd 100644

--- a/realsense2_camera/examples/launch_params_from_file/rs_launch_get_params_from_yaml.py

+++ b/realsense2_camera/examples/launch_params_from_file/rs_launch_get_params_from_yaml.py

@@ -34,7 +34,7 @@

local_parameters = [{'name': 'camera_name', 'default': 'camera', 'description': 'camera unique name'},

{'name': 'camera_namespace', 'default': 'camera', 'description': 'camera namespace'},

- {'name': 'config_file', 'default': [ThisLaunchFileDir(), "/config.yaml"], 'description': 'yaml config file'},

+ {'name': 'config_file', 'default': [ThisLaunchFileDir(), "/config/config.yaml"], 'description': 'yaml config file'},

]

def set_configurable_parameters(local_params):

diff --git a/realsense2_camera/examples/pointcloud/README.md b/realsense2_camera/examples/pointcloud/README.md

new file mode 100644

index 0000000000..1285e0a843

--- /dev/null

+++ b/realsense2_camera/examples/pointcloud/README.md

@@ -0,0 +1,17 @@

+# PointCloud Visualization

+The following example starts the camera and simultaneously opens RViz GUI to visualize the published pointcloud.

+```

+ros2 launch realsense2_camera rs_pointcloud_launch.py

+```

+

+Alternatively, start the camera in terminal 1:

+```

+ros2 launch realsense2_camera rs_launch.py pointcloud.enable:=true

+```

+and in terminal 2 open rviz to visualize the pointcloud.

+

+# PointCloud with different coordinate systems

+This example opens rviz and shows the camera model with different coordinate systems and the pointcloud, so it presents the pointcloud and the camera together.

+```

+ros2 launch realsense2_camera rs_d455_pointcloud_launch.py

+```

\ No newline at end of file

diff --git a/realsense2_camera/examples/pointcloud/rs_d455_pointcloud_launch.py b/realsense2_camera/examples/pointcloud/rs_d455_pointcloud_launch.py

index 4f013b1c87..85ee0ff48d 100644

--- a/realsense2_camera/examples/pointcloud/rs_d455_pointcloud_launch.py

+++ b/realsense2_camera/examples/pointcloud/rs_d455_pointcloud_launch.py

@@ -77,7 +77,7 @@ def generate_launch_description():

namespace='',

executable='rviz2',

name='rviz2',

- arguments=['-d', [ThisLaunchFileDir(), '/urdf_pointcloud.rviz']],

+ arguments=['-d', [ThisLaunchFileDir(), '/rviz/urdf_pointcloud.rviz']],

output='screen',

parameters=[{'use_sim_time': False}]

),

diff --git a/realsense2_camera/examples/pointcloud/rs_pointcloud_launch.py b/realsense2_camera/examples/pointcloud/rs_pointcloud_launch.py

index f0a5b541e0..f0acfe80b2 100644

--- a/realsense2_camera/examples/pointcloud/rs_pointcloud_launch.py

+++ b/realsense2_camera/examples/pointcloud/rs_pointcloud_launch.py

@@ -57,6 +57,6 @@ def generate_launch_description():

namespace='',

executable='rviz2',

name='rviz2',

- arguments=['-d', [ThisLaunchFileDir(), '/pointcloud.rviz']]

+ arguments=['-d', [ThisLaunchFileDir(), '/rviz/pointcloud.rviz']]

)

])

diff --git a/realsense2_camera/examples/pointcloud/pointcloud.rviz b/realsense2_camera/examples/pointcloud/rviz/pointcloud.rviz

similarity index 69%

rename from realsense2_camera/examples/pointcloud/pointcloud.rviz

rename to realsense2_camera/examples/pointcloud/rviz/pointcloud.rviz

index 055431f228..bb8955146f 100644

--- a/realsense2_camera/examples/pointcloud/pointcloud.rviz

+++ b/realsense2_camera/examples/pointcloud/rviz/pointcloud.rviz

@@ -8,14 +8,12 @@ Panels:

- /Status1

- /Grid1

- /PointCloud21

- - /Image1

Splitter Ratio: 0.5

- Tree Height: 222

+ Tree Height: 382

- Class: rviz_common/Selection

Name: Selection

- Class: rviz_common/Tool Properties

Expanded:

- - /2D Nav Goal1

- /Publish Point1

Name: Tool Properties

Splitter Ratio: 0.5886790156364441

@@ -65,13 +63,17 @@ Visualization Manager:

Min Intensity: 0

Name: PointCloud2

Position Transformer: XYZ

- Queue Size: 10

Selectable: true

Size (Pixels): 3

Size (m): 0.009999999776482582

Style: Flat Squares

- Topic: /camera/depth/color/points

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ Filter size: 10

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/depth/color/points

Use Fixed Frame: true

Use rainbow: true

Value: true

@@ -82,9 +84,12 @@ Visualization Manager:

Min Value: 0

Name: Image

Normalize Range: true

- Queue Size: 10

- Topic: /camera/color/image_raw

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/color/image_raw

Value: true

- Class: rviz_default_plugins/Image

Enabled: true

@@ -93,31 +98,12 @@ Visualization Manager:

Min Value: 0

Name: Image

Normalize Range: true

- Queue Size: 10

- Topic: /camera/depth/image_rect_raw

- Unreliable: false

- Value: true

- - Class: rviz_default_plugins/Image

- Enabled: true

- Max Value: 1

- Median window: 5

- Min Value: 0

- Name: Image

- Normalize Range: true

- Queue Size: 10

- Topic: /camera/infra1/image_rect_raw

- Unreliable: false

- Value: true

- - Class: rviz_default_plugins/Image

- Enabled: true

- Max Value: 1

- Median window: 5

- Min Value: 0

- Name: Image

- Normalize Range: true

- Queue Size: 10

- Topic: /camera/infra2/image_rect_raw

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/depth/image_rect_raw

Value: true

Enabled: true

Global Options:

@@ -132,12 +118,30 @@ Visualization Manager:

- Class: rviz_default_plugins/Measure

Line color: 128; 128; 0

- Class: rviz_default_plugins/SetInitialPose

- Topic: /initialpose

+ Covariance x: 0.25

+ Covariance y: 0.25

+ Covariance yaw: 0.06853891909122467

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /initialpose

- Class: rviz_default_plugins/SetGoal

- Topic: /move_base_simple/goal

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /move_base_simple/goal

- Class: rviz_default_plugins/PublishPoint

Single click: true

- Topic: /clicked_point

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /clicked_point

Transformation:

Current:

Class: rviz_default_plugins/TF

@@ -168,18 +172,18 @@ Visualization Manager:

Window Geometry:

Displays:

collapsed: false

- Height: 1025

+ Height: 1016

Hide Left Dock: false

Hide Right Dock: true

Image:

collapsed: false

- QMainWindow State: 000000ff00000000fd000000040000000000000156000003a7fc0200000010fb0000001200530065006c0065006300740069006f006e00000001e10000009b0000005c00fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afb000000100044006900730070006c006100790073010000003d0000011b000000c900fffffffb0000000a0049006d006100670065010000015e0000009b0000002800fffffffb0000000a0049006d00610067006501000001ff000000b20000002800fffffffb0000000a0049006d00610067006501000002b70000009a0000002800fffffffb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb0000000a0049006d00610067006501000003570000008d0000002800fffffffb0000000a0049006d00610067006501000001940000005d0000000000000000fb0000000a0049006d00610067006501000001f70000007a0000000000000000fb0000000a0049006d00610067006501000002770000009d0000000000000000fb0000000a0049006d006100670065010000031a000000ca0000000000000000000000010000010f000001effc0200000003fb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000000a00560069006500770073000000003d000001ef000000a400fffffffb0000001200530065006c0065006300740069006f006e010000025a000000b20000000000000000000000020000073d000000a9fc0100000002fb0000000a0049006d00610067006503000001c5000000bb000001f8000001b0fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000004420000003efc0100000002fb0000000800540069006d00650100000000000004420000000000000000fb0000000800540069006d00650100000000000004500000000000000000000005e1000003a700000004000000040000000800000008fc0000000100000002000000010000000a0054006f006
f006c00730100000000ffffffff0000000000000000

+ QMainWindow State: 000000ff00000000fd0000000400000000000001560000039efc0200000010fb0000001200530065006c0065006300740069006f006e00000001e10000009b0000005c00fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afb000000100044006900730070006c006100790073010000003d000001bb000000c900fffffffb0000000a0049006d006100670065010000010c0000001c0000000000000000fb0000000a0049006d006100670065010000012e0000001f0000000000000000fb0000000a0049006d00610067006501000001530000001c0000000000000000fb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb0000000a0049006d0061006700650100000175000000190000000000000000fb0000000a0049006d00610067006501000001fe000000cd0000002800fffffffb0000000a0049006d00610067006501000002d10000010a0000002800fffffffb0000000a0049006d0061006700650000000233000000b70000000000000000fb0000000a0049006d00610067006501000002b2000001290000000000000000000000010000010f000001effc0200000003fb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000000a00560069006500770073000000003d000001ef000000a400fffffffb0000001200530065006c0065006300740069006f006e010000025a000000b20000000000000000000000020000073d000000a9fc0100000002fb0000000a0049006d00610067006503000001c5000000bb000001f8000001b0fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000004420000003efc0100000002fb0000000800540069006d00650100000000000004420000000000000000fb0000000800540069006d00650100000000000004500000000000000000000005da0000039e00000004000000040000000800000008fc0000000100000002000000010000000a0054006f006
f006c00730100000000ffffffff0000000000000000

Selection:

collapsed: false

Tool Properties:

collapsed: false

Views:

collapsed: true

- Width: 1853

- X: 67

+ Width: 1846

+ X: 74

Y: 27

diff --git a/realsense2_camera/examples/pointcloud/urdf_pointcloud.rviz b/realsense2_camera/examples/pointcloud/rviz/urdf_pointcloud.rviz

similarity index 92%

rename from realsense2_camera/examples/pointcloud/urdf_pointcloud.rviz

rename to realsense2_camera/examples/pointcloud/rviz/urdf_pointcloud.rviz

index 500e60114e..294917ad48 100644

--- a/realsense2_camera/examples/pointcloud/urdf_pointcloud.rviz

+++ b/realsense2_camera/examples/pointcloud/rviz/urdf_pointcloud.rviz

@@ -8,12 +8,12 @@ Panels:

- /Status1

- /RobotModel1

- /RobotModel1/Description Topic1

- - /TF1

- /TF1/Frames1

+ - /PointCloud21

- /Image1

- /Image2

- Splitter Ratio: 0.6360294222831726

- Tree Height: 362

+ Splitter Ratio: 0.36195287108421326

+ Tree Height: 308

- Class: rviz_common/Selection

Name: Selection

- Class: rviz_common/Tool Properties

@@ -245,7 +245,7 @@ Visualization Manager:

Filter size: 10

History Policy: Keep Last

Reliability Policy: Reliable

- Value: /camera/depth/color/points

+ Value: /camera/camera/depth/color/points

Use Fixed Frame: true

Use rainbow: true

Value: true

@@ -261,7 +261,7 @@ Visualization Manager:

Durability Policy: Volatile

History Policy: Keep Last

Reliability Policy: Reliable

- Value: /camera/color/image_raw

+ Value: /camera/camera/color/image_raw

Value: true

- Class: rviz_default_plugins/Image

Enabled: true

@@ -275,7 +275,7 @@ Visualization Manager:

Durability Policy: Volatile

History Policy: Keep Last

Reliability Policy: Reliable

- Value: /camera/depth/image_rect_raw

+ Value: /camera/camera/depth/image_rect_raw

Value: true

Enabled: true

Global Options:

@@ -323,7 +323,7 @@ Visualization Manager:

Views:

Current:

Class: rviz_default_plugins/Orbit

- Distance: 3.9530911445617676

+ Distance: 4.639365196228027

Enable Stereo Rendering:

Stereo Eye Separation: 0.05999999865889549

Stereo Focal Distance: 1

@@ -351,7 +351,7 @@ Window Geometry:

Hide Right Dock: false

Image:

collapsed: false

- QMainWindow State: 000000ff00000000fd0000000400000000000001560000039efc020000000afb0000001200530065006c0065006300740069006f006e00000001e10000009b0000005c00fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afb000000100044006900730070006c006100790073010000003d000001f5000000c900fffffffb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb0000000a0049006d0061006700650100000238000000b00000002800fffffffb0000000a0049006d00610067006501000002ee000000ed0000002800ffffff000000010000010f0000039efc0200000003fb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000000a00560069006500770073010000003d0000039e000000a400fffffffb0000001200530065006c0065006300740069006f006e010000025a000000b200000000000000000000000200000490000000a9fc0100000001fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000004420000003efc0100000002fb0000000800540069006d00650100000000000004420000000000000000fb0000000800540069006d00650100000000000004500000000000000000000004c50000039e00000004000000040000000800000008fc0000000100000002000000010000000a0054006f006f006c00730100000000ffffffff0000000000000000

+ QMainWindow State: 000000ff00000000fd0000000400000000000002540000039efc020000000cfb0000001200530065006c0065006300740069006f006e00000001e10000009b0000005c00fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afb000000100044006900730070006c006100790073010000003d000001bf000000c900fffffffb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb0000000a0049006d0061006700650100000132000000b00000000000000000fb0000000a0049006d00610067006501000001e8000000ed0000000000000000fb0000000a0049006d0061006700650100000202000000e90000002800fffffffb0000000a0049006d00610067006501000002f1000000ea0000002800ffffff000000010000010f0000039efc0200000003fb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000000a00560069006500770073010000003d0000039e000000a400fffffffb0000001200530065006c0065006300740069006f006e010000025a000000b200000000000000000000000200000490000000a9fc0100000001fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000004420000003efc0100000002fb0000000800540069006d00650100000000000004420000000000000000fb0000000800540069006d00650100000000000004500000000000000000000003c70000039e00000004000000040000000800000008fc0000000100000002000000010000000a0054006f006f006c00730100000000ffffffff0000000000000000

Selection:

collapsed: false

Tool Properties:

diff --git a/realsense2_camera/include/base_realsense_node.h b/realsense2_camera/include/base_realsense_node.h

index a1cfe45037..34c5e8ebae 100755

--- a/realsense2_camera/include/base_realsense_node.h

+++ b/realsense2_camera/include/base_realsense_node.h

@@ -39,8 +39,6 @@

#include

#include

#include

-#include

-#include

#include

#include

@@ -249,7 +247,6 @@ namespace realsense2_camera

void FillImuData_LinearInterpolation(const CimuData imu_data, std::deque& imu_msgs);

void imu_callback(rs2::frame frame);

void imu_callback_sync(rs2::frame frame, imu_sync_method sync_method=imu_sync_method::COPY);

- void pose_callback(rs2::frame frame);

void multiple_message_callback(rs2::frame frame, imu_sync_method sync_method);

void frame_callback(rs2::frame frame);

@@ -294,7 +291,6 @@ namespace realsense2_camera

std::map> _image_publishers;

std::map::SharedPtr> _imu_publishers;

- std::shared_ptr> _odom_publisher;

std::shared_ptr _synced_imu_publisher;

std::map::SharedPtr> _info_publishers;

std::map::SharedPtr> _metadata_publishers;

@@ -317,7 +313,6 @@ namespace realsense2_camera

bool _is_accel_enabled;

bool _is_gyro_enabled;

bool _pointcloud;

- bool _publish_odom_tf;

imu_sync_method _imu_sync_method;

stream_index_pair _pointcloud_texture;

PipelineSyncer _syncer;

diff --git a/realsense2_camera/include/constants.h b/realsense2_camera/include/constants.h

index cd53468e0f..0f99fee585 100644

--- a/realsense2_camera/include/constants.h

+++ b/realsense2_camera/include/constants.h

@@ -31,19 +31,7 @@

#define ROS_WARN(...) RCLCPP_WARN(_logger, __VA_ARGS__)

#define ROS_ERROR(...) RCLCPP_ERROR(_logger, __VA_ARGS__)

-#ifdef DASHING

-// Based on: https://docs.ros2.org/dashing/api/rclcpp/logging_8hpp.html

-#define MSG(msg) (static_cast(std::ostringstream() << msg)).str()

-#define ROS_DEBUG_STREAM(msg) RCLCPP_DEBUG(_logger, MSG(msg))

-#define ROS_INFO_STREAM(msg) RCLCPP_INFO(_logger, MSG(msg))

-#define ROS_WARN_STREAM(msg) RCLCPP_WARN(_logger, MSG(msg))

-#define ROS_ERROR_STREAM(msg) RCLCPP_ERROR(_logger, MSG(msg))

-#define ROS_FATAL_STREAM(msg) RCLCPP_FATAL(_logger, MSG(msg))

-#define ROS_DEBUG_STREAM_ONCE(msg) RCLCPP_DEBUG_ONCE(_logger, MSG(msg))

-#define ROS_INFO_STREAM_ONCE(msg) RCLCPP_INFO_ONCE(_logger, MSG(msg))

-#define ROS_WARN_STREAM_COND(cond, msg) RCLCPP_WARN_EXPRESSION(_logger, cond, MSG(msg))

-#else

-// Based on: https://docs.ros2.org/foxy/api/rclcpp/logging_8hpp.html

+// Based on: https://docs.ros2.org/latest/api/rclcpp/logging_8hpp.html

#define ROS_DEBUG_STREAM(msg) RCLCPP_DEBUG_STREAM(_logger, msg)

#define ROS_INFO_STREAM(msg) RCLCPP_INFO_STREAM(_logger, msg)

#define ROS_WARN_STREAM(msg) RCLCPP_WARN_STREAM(_logger, msg)

@@ -52,15 +40,12 @@

#define ROS_DEBUG_STREAM_ONCE(msg) RCLCPP_DEBUG_STREAM_ONCE(_logger, msg)

#define ROS_INFO_STREAM_ONCE(msg) RCLCPP_INFO_STREAM_ONCE(_logger, msg)

#define ROS_WARN_STREAM_COND(cond, msg) RCLCPP_WARN_STREAM_EXPRESSION(_logger, cond, msg)

-#endif

#define ROS_WARN_ONCE(msg) RCLCPP_WARN_ONCE(_logger, msg)

#define ROS_WARN_COND(cond, ...) RCLCPP_WARN_EXPRESSION(_logger, cond, __VA_ARGS__)

namespace realsense2_camera

{

- const uint16_t SR300_PID = 0x0aa5; // SR300

- const uint16_t SR300v2_PID = 0x0B48; // SR305

const uint16_t RS400_PID = 0x0ad1; // PSR

const uint16_t RS410_PID = 0x0ad2; // ASR

const uint16_t RS415_PID = 0x0ad3; // ASRC

@@ -80,11 +65,7 @@ namespace realsense2_camera

const uint16_t RS430i_PID = 0x0b4b; // D430i

const uint16_t RS405_PID = 0x0B5B; // DS5U

const uint16_t RS455_PID = 0x0B5C; // D455

- const uint16_t RS457_PID = 0xABCD; // D457

- const uint16_t RS_L515_PID_PRE_PRQ = 0x0B3D; //

- const uint16_t RS_L515_PID = 0x0B64; //

- const uint16_t RS_L535_PID = 0x0b68;

-

+ const uint16_t RS457_PID = 0xABCD; // D457

const bool ALLOW_NO_TEXTURE_POINTS = false;

const bool ORDERED_PC = false;

@@ -100,15 +81,10 @@ namespace realsense2_camera

const std::string HID_QOS = "SENSOR_DATA";

const bool HOLD_BACK_IMU_FOR_FRAMES = false;

- const bool PUBLISH_ODOM_TF = true;

const std::string DEFAULT_BASE_FRAME_ID = "link";

- const std::string DEFAULT_ODOM_FRAME_ID = "odom_frame";

const std::string DEFAULT_IMU_OPTICAL_FRAME_ID = "camera_imu_optical_frame";

- const std::string DEFAULT_UNITE_IMU_METHOD = "";

- const std::string DEFAULT_FILTERS = "";

-

const float ROS_DEPTH_SCALE = 0.001;

static const rmw_qos_profile_t rmw_qos_profile_latched =

diff --git a/realsense2_camera/include/image_publisher.h b/realsense2_camera/include/image_publisher.h

index 6bc0bab8e6..3d7d004c74 100644

--- a/realsense2_camera/include/image_publisher.h

+++ b/realsense2_camera/include/image_publisher.h

@@ -17,11 +17,7 @@

#include

#include

-#if defined( DASHING ) || defined( ELOQUENT )

-#include

-#else

#include

-#endif

namespace realsense2_camera {

class image_publisher

diff --git a/realsense2_camera/include/profile_manager.h b/realsense2_camera/include/profile_manager.h

index b753ff67a9..3021e95f6c 100644

--- a/realsense2_camera/include/profile_manager.h

+++ b/realsense2_camera/include/profile_manager.h

@@ -103,12 +103,4 @@ namespace realsense2_camera

protected:

std::map > _fps;

};

-

- class PoseProfilesManager : public MotionProfilesManager

- {

- public:

- using MotionProfilesManager::MotionProfilesManager;

- void registerProfileParameters(std::vector all_profiles, std::function update_sensor_func) override;

- };

-

}

diff --git a/realsense2_camera/include/ros_utils.h b/realsense2_camera/include/ros_utils.h

index 4df1def396..feccd4647d 100644

--- a/realsense2_camera/include/ros_utils.h

+++ b/realsense2_camera/include/ros_utils.h

@@ -31,12 +31,8 @@ namespace realsense2_camera

const stream_index_pair INFRA0{RS2_STREAM_INFRARED, 0};

const stream_index_pair INFRA1{RS2_STREAM_INFRARED, 1};

const stream_index_pair INFRA2{RS2_STREAM_INFRARED, 2};

- const stream_index_pair FISHEYE{RS2_STREAM_FISHEYE, 0};

- const stream_index_pair FISHEYE1{RS2_STREAM_FISHEYE, 1};

- const stream_index_pair FISHEYE2{RS2_STREAM_FISHEYE, 2};

const stream_index_pair GYRO{RS2_STREAM_GYRO, 0};

const stream_index_pair ACCEL{RS2_STREAM_ACCEL, 0};

- const stream_index_pair POSE{RS2_STREAM_POSE, 0};

bool isValidCharInName(char c);

diff --git a/realsense2_camera/launch/default.rviz b/realsense2_camera/launch/default.rviz

index 055431f228..c4374772c6 100644

--- a/realsense2_camera/launch/default.rviz

+++ b/realsense2_camera/launch/default.rviz

@@ -9,13 +9,15 @@ Panels:

- /Grid1

- /PointCloud21

- /Image1

+ - /Image2

+ - /Image3

+ - /Image4

Splitter Ratio: 0.5

- Tree Height: 222

+ Tree Height: 190

- Class: rviz_common/Selection

Name: Selection

- Class: rviz_common/Tool Properties

Expanded:

- - /2D Nav Goal1

- /Publish Point1

Name: Tool Properties

Splitter Ratio: 0.5886790156364441

@@ -65,13 +67,17 @@ Visualization Manager:

Min Intensity: 0

Name: PointCloud2

Position Transformer: XYZ

- Queue Size: 10

Selectable: true

Size (Pixels): 3

Size (m): 0.009999999776482582

Style: Flat Squares

- Topic: /camera/depth/color/points

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ Filter size: 10

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/depth/color/points

Use Fixed Frame: true

Use rainbow: true

Value: true

@@ -82,9 +88,12 @@ Visualization Manager:

Min Value: 0

Name: Image

Normalize Range: true

- Queue Size: 10

- Topic: /camera/color/image_raw

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/color/image_raw

Value: true

- Class: rviz_default_plugins/Image

Enabled: true

@@ -93,9 +102,12 @@ Visualization Manager:

Min Value: 0

Name: Image

Normalize Range: true

- Queue Size: 10

- Topic: /camera/depth/image_rect_raw

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/depth/image_rect_raw

Value: true

- Class: rviz_default_plugins/Image

Enabled: true

@@ -104,9 +116,12 @@ Visualization Manager:

Min Value: 0

Name: Image

Normalize Range: true

- Queue Size: 10

- Topic: /camera/infra1/image_rect_raw

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/infra1/image_rect_raw

Value: true

- Class: rviz_default_plugins/Image

Enabled: true

@@ -115,9 +130,12 @@ Visualization Manager:

Min Value: 0

Name: Image

Normalize Range: true

- Queue Size: 10

- Topic: /camera/infra2/image_rect_raw

- Unreliable: false

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /camera/camera/infra2/image_rect_raw

Value: true

Enabled: true

Global Options:

@@ -132,12 +150,30 @@ Visualization Manager:

- Class: rviz_default_plugins/Measure

Line color: 128; 128; 0

- Class: rviz_default_plugins/SetInitialPose

- Topic: /initialpose

+ Covariance x: 0.25

+ Covariance y: 0.25

+ Covariance yaw: 0.06853891909122467

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /initialpose

- Class: rviz_default_plugins/SetGoal

- Topic: /move_base_simple/goal

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /move_base_simple/goal

- Class: rviz_default_plugins/PublishPoint

Single click: true

- Topic: /clicked_point

+ Topic:

+ Depth: 5

+ Durability Policy: Volatile

+ History Policy: Keep Last

+ Reliability Policy: Reliable

+ Value: /clicked_point

Transformation:

Current:

Class: rviz_default_plugins/TF

@@ -145,7 +181,7 @@ Visualization Manager:

Views:

Current:

Class: rviz_default_plugins/Orbit

- Distance: 1.0510121583938599

+ Distance: 3.677529811859131

Enable Stereo Rendering:

Stereo Eye Separation: 0.05999999865889549

Stereo Focal Distance: 1

@@ -168,18 +204,18 @@ Visualization Manager:

Window Geometry:

Displays:

collapsed: false

- Height: 1025

+ Height: 1016

Hide Left Dock: false

Hide Right Dock: true

Image:

collapsed: false

- QMainWindow State: 000000ff00000000fd000000040000000000000156000003a7fc0200000010fb0000001200530065006c0065006300740069006f006e00000001e10000009b0000005c00fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afb000000100044006900730070006c006100790073010000003d0000011b000000c900fffffffb0000000a0049006d006100670065010000015e0000009b0000002800fffffffb0000000a0049006d00610067006501000001ff000000b20000002800fffffffb0000000a0049006d00610067006501000002b70000009a0000002800fffffffb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb0000000a0049006d00610067006501000003570000008d0000002800fffffffb0000000a0049006d00610067006501000001940000005d0000000000000000fb0000000a0049006d00610067006501000001f70000007a0000000000000000fb0000000a0049006d00610067006501000002770000009d0000000000000000fb0000000a0049006d006100670065010000031a000000ca0000000000000000000000010000010f000001effc0200000003fb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000000a00560069006500770073000000003d000001ef000000a400fffffffb0000001200530065006c0065006300740069006f006e010000025a000000b20000000000000000000000020000073d000000a9fc0100000002fb0000000a0049006d00610067006503000001c5000000bb000001f8000001b0fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000004420000003efc0100000002fb0000000800540069006d00650100000000000004420000000000000000fb0000000800540069006d00650100000000000004500000000000000000000005e1000003a700000004000000040000000800000008fc0000000100000002000000010000000a0054006f006
f006c00730100000000ffffffff0000000000000000

+ QMainWindow State: 000000ff00000000fd0000000400000000000001560000039efc0200000010fb0000001200530065006c0065006300740069006f006e00000001e10000009b0000005c00fffffffb0000001e0054006f006f006c002000500072006f007000650072007400690065007302000001ed000001df00000185000000a3fb000000120056006900650077007300200054006f006f02000001df000002110000018500000122fb000000200054006f006f006c002000500072006f0070006500720074006900650073003203000002880000011d000002210000017afb000000100044006900730070006c006100790073010000003d000000fb000000c900fffffffb0000000a0049006d006100670065010000011e000000780000000000000000fb0000000a0049006d006100670065010000013e000000cd0000002800fffffffb0000000a0049006d0061006700650100000211000000b10000002800fffffffb0000002000730065006c0065006300740069006f006e00200062007500660066006500720200000138000000aa0000023a00000294fb00000014005700690064006500530074006500720065006f02000000e6000000d2000003ee0000030bfb0000000c004b0069006e0065006300740200000186000001060000030c00000261fb0000000a0049006d00610067006501000002c8000000a20000002800fffffffb0000000a0049006d00610067006501000003700000006b0000002800fffffffb0000000a0049006d00610067006501000001f70000007a0000000000000000fb0000000a0049006d00610067006501000002770000009d0000000000000000fb0000000a0049006d006100670065010000031a000000ca0000000000000000000000010000010f000001effc0200000003fb0000001e0054006f006f006c002000500072006f00700065007200740069006500730100000041000000780000000000000000fb0000000a00560069006500770073000000003d000001ef000000a400fffffffb0000001200530065006c0065006300740069006f006e010000025a000000b20000000000000000000000020000073d000000a9fc0100000002fb0000000a0049006d00610067006503000001c5000000bb000001f8000001b0fb0000000a00560069006500770073030000004e00000080000002e10000019700000003000004420000003efc0100000002fb0000000800540069006d00650100000000000004420000000000000000fb0000000800540069006d00650100000000000004500000000000000000000005da0000039e00000004000000040000000800000008fc0000000100000002000000010000000a0054006f006
f006c00730100000000ffffffff0000000000000000

Selection:

collapsed: false

Tool Properties:

collapsed: false

Views:

collapsed: true

- Width: 1853

- X: 67

+ Width: 1846

+ X: 74

Y: 27
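Reviewer note: the topic changes in the RViz configs above (for example `/camera/color/image_raw` becoming `/camera/camera/color/image_raw`) are consistent with topics being prefixed by both the camera namespace and the camera name, each of which appears to default to `camera`. A minimal sketch of that naming pattern follows; `camera_topic` is a hypothetical helper written for illustration and is not part of `realsense2_camera`.

```python
def camera_topic(stream_topic, camera_namespace="camera", camera_name="camera"):
    """Build a fully qualified topic from namespace, node name and stream topic.

    Hypothetical helper: it only mirrors the naming pattern visible in the
    diff and is not an API of the realsense2_camera package.
    """
    parts = [camera_namespace, camera_name, stream_topic]
    # Strip stray slashes and skip empty parts so an empty namespace still works.
    cleaned = [p.strip("/") for p in parts if p and p.strip("/")]
    return "/" + "/".join(cleaned)


# Default namespace and name, as in the updated RViz configs.
print(camera_topic("color/image_raw"))
# Custom namespace and name, as in the README launch example.
print(camera_topic("depth/color/points", "robot1", "D455_1"))
```

Any external config that still subscribes to the old single-prefix names would need the same update.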

diff --git a/realsense2_camera/launch/rs_intra_process_demo_launch.py b/realsense2_camera/launch/rs_intra_process_demo_launch.py

index 103afee220..a4e54391a0 100644

--- a/realsense2_camera/launch/rs_intra_process_demo_launch.py

+++ b/realsense2_camera/launch/rs_intra_process_demo_launch.py

@@ -41,22 +41,32 @@

'\nplease make sure you run "colcon build --cmake-args \'-DBUILD_TOOLS=ON\'" command before running this launch file')

-configurable_parameters = [{'name': 'camera_name', 'default': 'camera', 'description': 'camera unique name'},

- {'name': 'serial_no', 'default': "''", 'description': 'choose device by serial number'},

- {'name': 'usb_port_id', 'default': "''", 'description': 'choose device by usb port id'},

- {'name': 'device_type', 'default': "''", 'description': 'choose device by type'},

- {'name': 'log_level', 'default': 'info', 'description': 'debug log level [DEBUG|INFO|WARN|ERROR|FATAL]'},

- {'name': 'rgb_camera.profile', 'default': '0,0,0', 'description': 'color image width'},

- {'name': 'enable_color', 'default': 'true', 'description': 'enable color stream'},

- {'name': 'enable_depth', 'default': 'false', 'description': 'enable depth stream'},

- {'name': 'enable_infra1', 'default': 'false', 'description': 'enable infra1 stream'},

- {'name': 'enable_infra2', 'default': 'false', 'description': 'enable infra2 stream'},

- {'name': 'enable_gyro', 'default': 'false', 'description': "enable gyro stream"},

- {'name': 'enable_accel', 'default': 'false', 'description': "enable accel stream"},

- {'name': 'intra_process_comms', 'default': 'true', 'description': "enable intra-process communication"},

- {'name': 'publish_tf', 'default': 'true', 'description': '[bool] enable/disable publishing static & dynamic TF'},

- {'name': 'tf_publish_rate', 'default': '0.0', 'description': '[double] rate in HZ for publishing dynamic TF'},

- ]

+realsense_node_params = [{'name': 'serial_no', 'default': "''", 'description': 'choose device by serial number'},

+ {'name': 'usb_port_id', 'default': "''", 'description': 'choose device by usb port id'},

+ {'name': 'device_type', 'default': "''", 'description': 'choose device by type'},

+ {'name': 'log_level', 'default': 'info', 'description': 'debug log level [DEBUG|INFO|WARN|ERROR|FATAL]'},

+ {'name': 'rgb_camera.profile', 'default': '0,0,0', 'description': 'color image width'},

+ {'name': 'enable_color', 'default': 'true', 'description': 'enable color stream'},

+ {'name': 'enable_depth', 'default': 'true', 'description': 'enable depth stream'},

+ {'name': 'enable_infra', 'default': 'false', 'description': 'enable infra stream'},

+ {'name': 'enable_infra1', 'default': 'true', 'description': 'enable infra1 stream'},

+ {'name': 'enable_infra2', 'default': 'true', 'description': 'enable infra2 stream'},

+ {'name': 'enable_gyro', 'default': 'true', 'description': "enable gyro stream"},

+ {'name': 'enable_accel', 'default': 'true', 'description': "enable accel stream"},

+ {'name': 'unite_imu_method', 'default': "1", 'description': '[0-None, 1-copy, 2-linear_interpolation]'},

+ {'name': 'intra_process_comms', 'default': 'true', 'description': "enable intra-process communication"},

+ {'name': 'enable_sync', 'default': 'true', 'description': "'enable sync mode'"},

+ {'name': 'pointcloud.enable', 'default': 'true', 'description': ''},

+ {'name': 'enable_rgbd', 'default': 'true', 'description': "'enable rgbd topic'"},

+ {'name': 'align_depth.enable', 'default': 'true', 'description': "'enable align depth filter'"},

+ {'name': 'publish_tf', 'default': 'true', 'description': '[bool] enable/disable publishing static & dynamic TF'},

+ {'name': 'tf_publish_rate', 'default': '1.0', 'description': '[double] rate in HZ for publishing dynamic TF'},

+ ]

+

+frame_latency_node_params = [{'name': 'topic_name', 'default': '/camera/color/image_raw', 'description': 'topic to which latency calculated'},

+ {'name': 'topic_type', 'default': 'image', 'description': 'topic type [image|points|imu|metadata|camera_info|rgbd|imu_info|tf]'},

+ ]

+

def declare_configurable_parameters(parameters):

return [DeclareLaunchArgument(param['name'], default_value=param['default'], description=param['description']) for param in parameters]

@@ -68,7 +78,8 @@ def set_configurable_parameters(parameters):

def generate_launch_description():

- return LaunchDescription(declare_configurable_parameters(configurable_parameters) + [

+ return LaunchDescription(declare_configurable_parameters(realsense_node_params) +

+ declare_configurable_parameters(frame_latency_node_params) +[

ComposableNodeContainer(

name='my_container',

namespace='',

@@ -80,14 +91,14 @@ def generate_launch_description():

namespace='',

plugin='realsense2_camera::' + rs_node_class,

name="camera",

- parameters=[set_configurable_parameters(configurable_parameters)],

+ parameters=[set_configurable_parameters(realsense_node_params)],

extra_arguments=[{'use_intra_process_comms': LaunchConfiguration("intra_process_comms")}]) ,

ComposableNode(

package='realsense2_camera',

namespace='',

plugin='rs2_ros::tools::frame_latency::' + rs_latency_tool_class,

name='frame_latency',

- parameters=[set_configurable_parameters(configurable_parameters)],

+ parameters=[set_configurable_parameters(frame_latency_node_params)],

extra_arguments=[{'use_intra_process_comms': LaunchConfiguration("intra_process_comms")}]) ,

],

output='screen',
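Reviewer note: the change above splits the single `configurable_parameters` list into `realsense_node_params` and `frame_latency_node_params`, so each composable node receives only its own parameters while the launch description still declares all of them. The sketch below illustrates that idea in plain Python, without the `launch` package. The helpers `defaults_for` and `declared_names` are hypothetical stand-ins; the body of `set_configurable_parameters` is not shown in this diff, and the real launch file presumably works with launch substitutions rather than literal defaults.

```python
# Shortened copies of the two lists from the diff (only a few entries each).
realsense_node_params = [
    {'name': 'enable_color', 'default': 'true', 'description': 'enable color stream'},
    {'name': 'enable_depth', 'default': 'true', 'description': 'enable depth stream'},
]
frame_latency_node_params = [
    {'name': 'topic_name', 'default': '/camera/color/image_raw',
     'description': 'topic to which latency calculated'},
    {'name': 'topic_type', 'default': 'image',
     'description': 'topic type [image|points|imu|metadata|camera_info|rgbd|imu_info|tf]'},
]


def defaults_for(parameters):
    """Hypothetical stand-in for set_configurable_parameters: name -> default."""
    return {param['name']: param['default'] for param in parameters}


def declared_names(*param_lists):
    """Names of every launch argument declared across all given lists."""
    return [param['name'] for params in param_lists for param in params]


camera_params = defaults_for(realsense_node_params)
latency_params = defaults_for(frame_latency_node_params)
all_args = declared_names(realsense_node_params, frame_latency_node_params)

print(camera_params)   # only camera-related keys
print(latency_params)  # only frame_latency keys
print(all_args)        # every argument is still declared once
```

Keeping the lists separate avoids passing camera parameters to the latency tool and the reverse.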

diff --git a/realsense2_camera/launch/rs_launch.py b/realsense2_camera/launch/rs_launch.py

index ef0f167f7e..c6c14db6c4 100644

--- a/realsense2_camera/launch/rs_launch.py

+++ b/realsense2_camera/launch/rs_launch.py

@@ -40,8 +40,6 @@

{'name': 'enable_infra', 'default': 'false', 'description': 'enable infra0 stream'},

{'name': 'enable_infra1', 'default': 'false', 'description': 'enable infra1 stream'},

{'name': 'enable_infra2', 'default': 'false', 'description': 'enable infra2 stream'},

- {'name': 'enable_fisheye1', 'default': 'true', 'description': 'enable fisheye1 stream'},

- {'name': 'enable_fisheye2', 'default': 'true', 'description': 'enable fisheye2 stream'},

{'name': 'depth_module.profile', 'default': '0,0,0', 'description': 'depth module profile'},

{'name': 'depth_module.depth_format', 'default': 'Z16', 'description': 'depth stream format'},

{'name': 'depth_module.infra_format', 'default': 'RGB8', 'description': 'infra0 stream format'},

@@ -55,7 +53,6 @@

{'name': 'depth_module.gain.1', 'default': '16', 'description': 'Depth module first gain value. Used for hdr_merge filter'},

{'name': 'depth_module.exposure.2', 'default': '1', 'description': 'Depth module second exposure value. Used for hdr_merge filter'},

{'name': 'depth_module.gain.2', 'default': '16', 'description': 'Depth module second gain value. Used for hdr_merge filter'},

- {'name': 'enable_confidence', 'default': 'true', 'description': 'enable confidence'},

{'name': 'enable_sync', 'default': 'false', 'description': "'enable sync mode'"},

{'name': 'enable_rgbd', 'default': 'false', 'description': "'enable rgbd topic'"},

{'name': 'enable_gyro', 'default': 'false', 'description': "'enable gyro stream'"},

@@ -63,8 +60,6 @@

{'name': 'gyro_fps', 'default': '0', 'description': "''"},

{'name': 'accel_fps', 'default': '0', 'description': "''"},

{'name': 'unite_imu_method', 'default': "0", 'description': '[0-None, 1-copy, 2-linear_interpolation]'},

- {'name': 'enable_pose', 'default': 'true', 'description': "'enable pose stream'"},

- {'name': 'pose_fps', 'default': '200', 'description': "''"},

{'name': 'clip_distance', 'default': '-2.', 'description': "''"},

{'name': 'angular_velocity_cov', 'default': '0.01', 'description': "''"},

{'name': 'linear_accel_cov', 'default': '0.01', 'description': "''"},

@@ -101,37 +96,18 @@ def yaml_to_dict(path_to_yaml):

def launch_setup(context, params, param_name_suffix=''):

_config_file = LaunchConfiguration('config_file' + param_name_suffix).perform(context)

params_from_file = {} if _config_file == "''" else yaml_to_dict(_config_file)

- # Realsense

- if (os.getenv('ROS_DISTRO') == "dashing") or (os.getenv('ROS_DISTRO') == "eloquent"):

- return [

- launch_ros.actions.Node(

- package='realsense2_camera',

- node_namespace=LaunchConfiguration('camera_namespace' + param_name_suffix),

- node_name=LaunchConfiguration('camera_name' + param_name_suffix),

- node_executable='realsense2_camera_node',

- prefix=['stdbuf -o L'],

- parameters=[params

- , params_from_file

- ],

- output=LaunchConfiguration('output' + param_name_suffix),

- arguments=['--ros-args', '--log-level', LaunchConfiguration('log_level' + param_name_suffix)],

- )

- ]

- else:

- return [

- launch_ros.actions.Node(

- package='realsense2_camera',

- namespace=LaunchConfiguration('camera_namespace' + param_name_suffix),

- name=LaunchConfiguration('camera_name' + param_name_suffix),

- executable='realsense2_camera_node',

- parameters=[params

- , params_from_file

- ],

- output=LaunchConfiguration('output' + param_name_suffix),

- arguments=['--ros-args', '--log-level', LaunchConfiguration('log_level' + param_name_suffix)],

- emulate_tty=True,

- )

- ]

+ return [

+ launch_ros.actions.Node(

+ package='realsense2_camera',

+ namespace=LaunchConfiguration('camera_namespace' + param_name_suffix),

+ name=LaunchConfiguration('camera_name' + param_name_suffix),

+ executable='realsense2_camera_node',

+ parameters=[params, params_from_file],

+ output=LaunchConfiguration('output' + param_name_suffix),

+ arguments=['--ros-args', '--log-level', LaunchConfiguration('log_level' + param_name_suffix)],

+ emulate_tty=True,

+ )

+ ]

def generate_launch_description():

return LaunchDescription(declare_configurable_parameters(configurable_parameters) + [
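Reviewer note: the `unite_imu_method` launch argument in `rs_launch.py` above is documented only as `[0-None, 1-copy, 2-linear_interpolation]` and is passed as a string. A small sketch of how those values could be validated on the Python side is shown below. The `UniteImuMethod` enum and `parse_unite_imu_method` function are hypothetical names for illustration; the node itself handles this value in C++.

```python
from enum import IntEnum


class UniteImuMethod(IntEnum):
    """Hypothetical mirror of the values listed in the launch description."""
    NONE = 0
    COPY = 1
    LINEAR_INTERPOLATION = 2


def parse_unite_imu_method(value):
    """Convert a launch-argument string such as "1" to the enum.

    Raises ValueError for non-numeric strings and for numbers outside 0..2.
    """
    return UniteImuMethod(int(value))


print(parse_unite_imu_method("0").name)
print(parse_unite_imu_method("2").name)
```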

diff --git a/realsense2_camera/package.xml b/realsense2_camera/package.xml

index faedde2dd2..6db46cff68 100644

--- a/realsense2_camera/package.xml

+++ b/realsense2_camera/package.xml

@@ -3,7 +3,7 @@

 realsense2_camera
 4.54.1
- RealSense camera package allowing access to Intel SR300 and D400 3D cameras
+ RealSense camera package allowing access to Intel D400 3D cameras
 LibRealSense ROS Team
 Apache License 2.0

@@ -38,7 +38,8 @@

 sensor_msgs_py
 python3-requests
 tf2_ros_py

-

+ ros2topic

+

 launch_ros
 ros_environment

diff --git a/realsense2_camera/scripts/rs2_listener.py b/realsense2_camera/scripts/rs2_listener.py

index 1fcbc97834..9cc356f7bd 100644

--- a/realsense2_camera/scripts/rs2_listener.py

+++ b/realsense2_camera/scripts/rs2_listener.py

@@ -19,18 +19,12 @@

from rclpy import qos

from sensor_msgs.msg import Image as msg_Image

import numpy as np

-import inspect

import ctypes

import struct

import quaternion

-import os

-if (os.getenv('ROS_DISTRO') != "dashing"):
-    import tf2_ros
-if (os.getenv('ROS_DISTRO') == "humble"):
-    from sensor_msgs.msg import PointCloud2 as msg_PointCloud2
-    from sensor_msgs_py import point_cloud2 as pc2
-# from sensor_msgs.msg import PointCloud2 as msg_PointCloud2
-# import sensor_msgs.point_cloud2 as pc2

+import tf2_ros

+from sensor_msgs.msg import PointCloud2 as msg_PointCloud2

+from sensor_msgs_py import point_cloud2 as pc2

from sensor_msgs.msg import Imu as msg_Imu

try:

@@ -220,9 +214,8 @@ def wait_for_messages(self, themes):

             node.get_logger().info('Subscribing %s on topic: %s' % (theme_name, theme['topic']))
             self.func_data[theme_name]['sub'] = node.create_subscription(theme['msg_type'], theme['topic'], theme['callback'](theme_name), qos.qos_profile_sensor_data)

-        if (os.getenv('ROS_DISTRO') != "dashing"):
-            self.tfBuffer = tf2_ros.Buffer()
-            self.tf_listener = tf2_ros.TransformListener(self.tfBuffer, node)
+        self.tfBuffer = tf2_ros.Buffer()
+        self.tf_listener = tf2_ros.TransformListener(self.tfBuffer, node)

         self.prev_time = time.time()
         break_timeout = False

diff --git a/realsense2_camera/scripts/rs2_test.py b/realsense2_camera/scripts/rs2_test.py

index 697f72ad5b..b1351aba66 100644

--- a/realsense2_camera/scripts/rs2_test.py

+++ b/realsense2_camera/scripts/rs2_test.py

@@ -21,8 +21,7 @@

from rclpy.node import Node

from importRosbag.importRosbag import importRosbag

import numpy as np

-if (os.getenv('ROS_DISTRO') != "dashing"):
-    import tf2_ros

+import tf2_ros

import itertools

import subprocess

import time

@@ -277,20 +276,16 @@ def print_results(results):

 def get_tfs(coupled_frame_ids):
     res = dict()
-    if (os.getenv('ROS_DISTRO') == "dashing"):
-        for couple in coupled_frame_ids:
+    tfBuffer = tf2_ros.Buffer()
+    node = Node('tf_listener')
+    listener = tf2_ros.TransformListener(tfBuffer, node)
+    rclpy.spin_once(node)
+    for couple in coupled_frame_ids:
+        from_id, to_id = couple
+        if (tfBuffer.can_transform(from_id, to_id, rclpy.time.Time(), rclpy.time.Duration(nanoseconds=3e6))):
+            res[couple] = tfBuffer.lookup_transform(from_id, to_id, rclpy.time.Time(), rclpy.time.Duration(nanoseconds=1e6)).transform
+        else:
             res[couple] = None
-    else:
-        tfBuffer = tf2_ros.Buffer()
-        node = Node('tf_listener')
-        listener = tf2_ros.TransformListener(tfBuffer, node)
-        rclpy.spin_once(node)
-        for couple in coupled_frame_ids:
-            from_id, to_id = couple
-            if (tfBuffer.can_transform(from_id, to_id, rclpy.time.Time(), rclpy.time.Duration(nanoseconds=3e6))):
-                res[couple] = tfBuffer.lookup_transform(from_id, to_id, rclpy.time.Time(), rclpy.time.Duration(nanoseconds=1e6)).transform
-            else:
-                res[couple] = None
     return res

def kill_realsense2_camera_node():

@@ -338,7 +333,7 @@ def run_tests(tests):

         listener_res = msg_retriever.wait_for_messages(themes)
         if 'static_tf' in [test['type'] for test in rec_tests]:
             print ('Gathering static transforms')
-            frame_ids = ['camera_link', 'camera_depth_frame', 'camera_infra1_frame', 'camera_infra2_frame', 'camera_color_frame', 'camera_fisheye_frame', 'camera_pose']
+            frame_ids = ['camera_link', 'camera_depth_frame', 'camera_infra1_frame', 'camera_infra2_frame', 'camera_color_frame']
             coupled_frame_ids = [xx for xx in itertools.combinations(frame_ids, 2)]
             listener_res['static_tf'] = get_tfs(coupled_frame_ids)

@@ -372,17 +367,12 @@ def main():

                  #{'name': 'points_cloud_1', 'type': 'pointscloud_avg', 'params': {'rosbag_filename': outdoors_filename, 'pointcloud.enable': 'true'}},
                  {'name': 'depth_w_cloud_1', 'type': 'depth_avg', 'params': {'rosbag_filename': outdoors_filename, 'pointcloud.enable': 'true'}},
                  {'name': 'align_depth_color_1', 'type': 'align_depth_color', 'params': {'rosbag_filename': outdoors_filename, 'align_depth.enable':'true'}},
-                 {'name': 'align_depth_ir1_1', 'type': 'align_depth_ir1', 'params': {'rosbag_filename': outdoors_filename, 'align_depth.enable': 'true',
-                                                                                     'enable_infra1':'true', 'enable_infra2':'true'}},
+                 {'name': 'align_depth_ir1_1', 'type': 'align_depth_ir1', 'params': {'rosbag_filename': outdoors_filename, 'align_depth.enable': 'true', 'enable_infra1':'true', 'enable_infra2':'true'}},
                  {'name': 'depth_avg_decimation_1', 'type': 'depth_avg_decimation', 'params': {'rosbag_filename': outdoors_filename, 'decimation_filter.enable':'true'}},
                  {'name': 'align_depth_ir1_decimation_1', 'type': 'align_depth_ir1_decimation', 'params': {'rosbag_filename': outdoors_filename, 'align_depth.enable':'true', 'decimation_filter.enable':'true'}},
-                 ]
-    if (os.getenv('ROS_DISTRO') != "dashing"):
-        all_tests.extend([
-            {'name': 'static_tf_1', 'type': 'static_tf', 'params': {'rosbag_filename': outdoors_filename,
-                                                                     'enable_infra1':'true', 'enable_infra2':'true'}},
-            {'name': 'accel_up_1', 'type': 'accel_up', 'params': {'rosbag_filename': './records/D435i_Depth_and_IMU_Stands_still.bag', 'enable_accel': 'true', 'accel_fps': '0.0'}},
-            ])
+                 {'name': 'static_tf_1', 'type': 'static_tf', 'params': {'rosbag_filename': outdoors_filename, 'enable_infra1':'true', 'enable_infra2':'true'}},
+                 {'name': 'accel_up_1', 'type': 'accel_up', 'params': {'rosbag_filename': './records/D435i_Depth_and_IMU_Stands_still.bag', 'enable_accel': 'true', 'accel_fps': '0.0'}},
+                 ]

     # Normalize parameters:
     for test in all_tests:

diff --git a/realsense2_camera/scripts/show_center_depth.py b/realsense2_camera/scripts/show_center_depth.py

index fb957664f4..acf8ac0db2 100644

--- a/realsense2_camera/scripts/show_center_depth.py

+++ b/realsense2_camera/scripts/show_center_depth.py

@@ -31,8 +31,6 @@ def __init__(self, depth_image_topic, depth_info_topic):

         self.bridge = CvBridge()
         self.sub = self.create_subscription(msg_Image, depth_image_topic, self.imageDepthCallback, 1)
         self.sub_info = self.create_subscription(CameraInfo, depth_info_topic, self.imageDepthInfoCallback, 1)
-        confidence_topic = depth_image_topic.replace('depth', 'confidence')
-        self.sub_conf = self.create_subscription(msg_Image, confidence_topic, self.confidenceCallback, 1)
         self.intrinsics = None
         self.pix = None
         self.pix_grade = None

@@ -62,17 +60,6 @@ def imageDepthCallback(self, data):

         except ValueError as e:
             return

-    def confidenceCallback(self, data):
-        try:
-            cv_image = self.bridge.imgmsg_to_cv2(data, data.encoding)
-            grades = np.bitwise_and(cv_image >> 4, 0x0f)
-            if (self.pix):
-                self.pix_grade = grades[self.pix[1], self.pix[0]]
-        except CvBridgeError as e:
-            print(e)
-            return
-
-

     def imageDepthInfoCallback(self, cameraInfo):
         try:

@@ -106,7 +93,6 @@ def main():

print ('Application subscribes to %s and %s topics.' % (depth_image_topic, depth_info_topic))