The new Object Detection processor provides out-of-the-box support for the TensorFlow Object Detection API. It allows for real-time localization and identification of multiple objects in a single image or image stream. The Object Detection processor uses one of the pre-trained object detection models and corresponding object labels.

If the pre-trained model is not set explicitly, the following defaults are used:

- tensorflow.modelFetch: detection_scores,detection_classes,detection_boxes,num_detections
- tensorflow.model: http://dl.bintray.com/big-data/generic/faster_rcnn_resnet101_coco_2018_01_28_frozen_inference_graph.pb
- tensorflow.object.detection.labels: http://dl.bintray.com/big-data/generic/mscoco_label_map.pbtxt

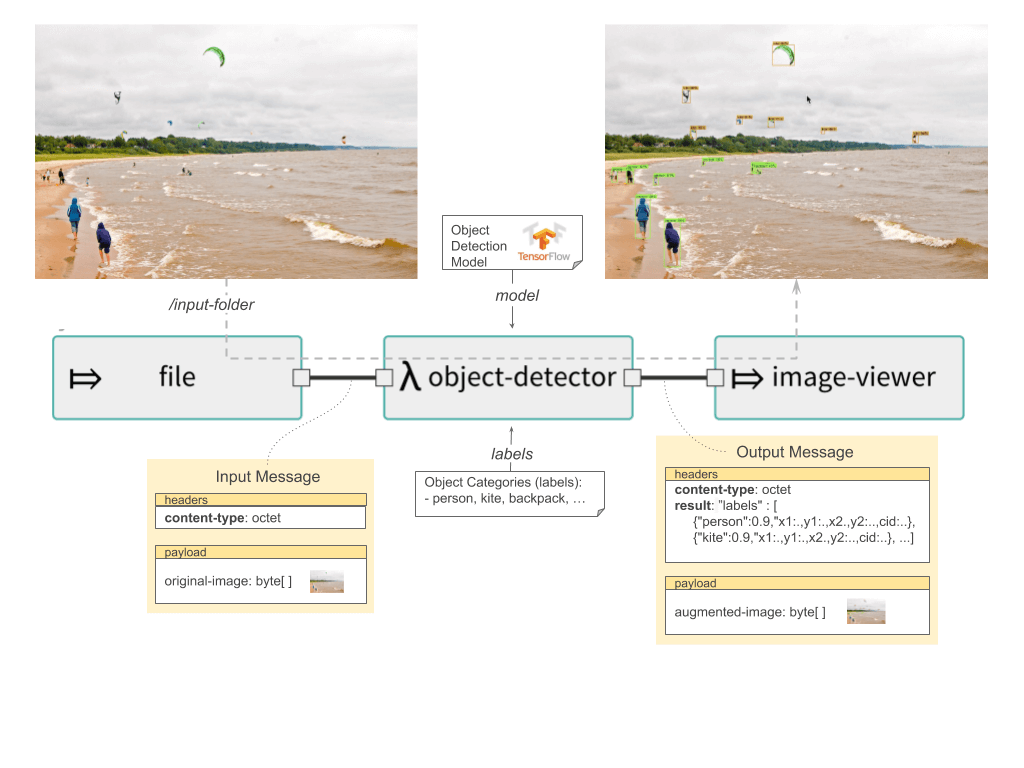

The following diagram illustrates a Spring Cloud Data Flow streaming pipeline that predicts object types from the images in real-time.

The processor's input is an image byte array, and the output is a Tuple (or JSON) message in this format:

    {
      "labels" : [
        {"name":"person", "confidence":0.9996774,"x1":0.0,"y1":0.3940161,"x2":0.9465165,"y2":0.5592592,"cid":1},
        {"name":"person", "confidence":0.9996604,"x1":0.047891676,"y1":0.03169123,"x2":0.941098,"y2":0.2085562,"cid":1},
        {"name":"backpack", "confidence":0.96534747,"x1":0.15588468,"y1":0.85957795,"x2":0.5091308,"y2":0.9908878,"cid":23},
        {"name":"backpack", "confidence":0.963343,"x1":0.1273736,"y1":0.57658505,"x2":0.47765,"y2":0.6986431,"cid":23}
      ]
    }

The output format is:

- name, confidence: the human-readable name of the detected object (that is, its label) and the detection confidence as a float in the range [0, 1].
- x1, y1, x2, y2: the bounding box of the detected object, represented as (x1, y1, x2, y2). The coordinates are relative to the image size.
- cid: the classification identifier, as defined in the provided labels configuration file.
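A downstream consumer usually needs pixel coordinates rather than relative ones. The following is a minimal Python sketch of that conversion, using only the standard library. The function name `to_pixel_boxes` and the image dimensions are hypothetical, and the sketch assumes that the x values scale with the image width and the y values with the image height; check this against your own images before relying on it.

```python
import json

# Sample processor output, trimmed to two entries from the format shown above.
SAMPLE = """
{"labels": [
  {"name":"person", "confidence":0.9996774,"x1":0.0,"y1":0.3940161,"x2":0.9465165,"y2":0.5592592,"cid":1},
  {"name":"backpack", "confidence":0.96534747,"x1":0.15588468,"y1":0.85957795,"x2":0.5091308,"y2":0.9908878,"cid":23}
]}
"""


def to_pixel_boxes(message, width, height):
    """Convert the relative box coordinates of each label to integer pixels.

    Assumption (not confirmed by the processor documentation): x values
    scale with the image width and y values with the image height.
    """
    boxes = []
    for label in json.loads(message)["labels"]:
        boxes.append({
            "name": label["name"],
            "confidence": label["confidence"],
            "cid": label["cid"],
            "box": (
                round(label["x1"] * width),
                round(label["y1"] * height),
                round(label["x2"] * width),
                round(label["y2"] * height),
            ),
        })
    return boxes


if __name__ == "__main__":
    # 640x480 is an arbitrary example size, not a processor default.
    for entry in to_pixel_boxes(SAMPLE, width=640, height=480):
        print(entry["name"], entry["cid"], entry["box"])
```

Keeping `name`, `confidence`, and `cid` next to the converted box lets later stages filter or group detections without re-parsing the original message.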

The object-detection processor has the following options:

- tensorflow.expression: How to obtain the input data from the input message. If empty, it defaults to the input message payload. The payload.myInTupleName expression treats the input payload as a Tuple, where myInTupleName stands for a Tuple key. The headers[myHeaderName] expression gets the input data from the message's header, using myHeaderName as a key. (Expression, default: <none>)
- tensorflow.mode: Defines how to store the output data and whether the input payload is passed through or discarded. Payload (default) stores the output data in the outbound message payload; the input payload is discarded. Header stores the output data in the message header named by outputName; the input payload is passed through. Tuple stores the output data in a Tuple payload, using the outputName key; the input payload is passed through in the same Tuple under the 'original.input.data' key. If the input payload is already a Tuple that contains an 'original.input.data' key, the input Tuple is copied into the new Tuple to be returned. (OutputMode, default: <none>, possible values: payload, tuple, header)
- tensorflow.model: The location of the TensorFlow model file. (Resource, default: <none>)
- tensorflow.model-fetch: The TensorFlow graph model outputs. Comma-separated list of TensorFlow operation names to fetch the output Tensors from. (List<String>, default: <none>)
- tensorflow.object.detection.color-agnostic: If disabled (default), the bounding box colors are selected as a function of the object class id. If enabled, all bounding boxes are visualized with a single color. (Boolean, default: false)
- tensorflow.object.detection.confidence: Probability threshold. Only objects detected with a probability higher than the confidence threshold are accepted. The value is between 0 and 1. (Float, default: 0.4)
- tensorflow.object.detection.draw-bounding-box: When set to true, the output image is annotated with the detected object boxes. (Boolean, default: true)
- tensorflow.object.detection.draw-mask: For models with mask support, enables drawing the mask of the detected objects. (Boolean, default: true)
- tensorflow.object.detection.labels: The text file containing the category names (that is, the labels) of all categories that this model is trained to recognize. Every category is on a separate line. (Resource, default: <none>)
- tensorflow.output-name: The output data key used in the Header or Tuple modes. (String, default: result)
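The three output modes and the confidence threshold described above can be modelled in a few lines of Python. This is an illustrative sketch, not the processor's implementation: messages are represented as a plain payload plus a headers dictionary, a Tuple is approximated by a `dict`, and the function names `apply_output_mode` and `filter_by_confidence` are hypothetical.

```python
ORIGINAL_INPUT_KEY = "original.input.data"


def apply_output_mode(mode, payload, headers, result, output_name="result"):
    """Return the (payload, headers) pair of the outbound message.

    Models the documented modes: 'payload', 'header', and 'tuple'.
    A Tuple is approximated here by a plain dict (an assumption).
    """
    headers = dict(headers)  # never mutate the caller's headers
    if mode == "payload":
        # Output replaces the payload; the input payload is discarded.
        return result, headers
    if mode == "header":
        # Output goes into a header; the input payload passes through.
        headers[output_name] = result
        return payload, headers
    if mode == "tuple":
        if isinstance(payload, dict) and ORIGINAL_INPUT_KEY in payload:
            # Input is already a Tuple carrying the original data: copy it.
            out = dict(payload)
        else:
            out = {ORIGINAL_INPUT_KEY: payload}
        out[output_name] = result
        return out, headers
    raise ValueError(f"unknown mode: {mode}")


def filter_by_confidence(detections, threshold=0.4):
    """Keep detections whose confidence is strictly higher than the threshold."""
    return [d for d in detections if d["confidence"] > threshold]


if __name__ == "__main__":
    image = b"...image bytes..."
    print(apply_output_mode("header", image, {}, {"labels": []}))
    print(filter_by_confidence([{"confidence": 0.3}, {"confidence": 0.9}]))
```

The header mode is the one to pick when a later stage still needs the original image, because the payload is left untouched.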

    $ ./mvnw clean install -PgenerateApps
    $ cd apps

You can find the corresponding binder-based projects here. You can then cd into one of the folders and build it:

    $ ./mvnw clean package

To run the processor:

    java -jar object-detection-processor.jar --tensorflow.object.detection.labels= --tensorflow.model= \
        --tensorflow.modelFetch= --tensorflow.mode=

And here is an example pipeline that processes images from a file source and outputs the annotated images to image-viewer:

    object-detector-stream=file --directory='/tmp/images'
      | object-detector --tensorflow.mode=header
          --tensorflow.model='http://dl.bintray.com/big-data/generic/faster_rcnn_resnet101_coco_2018_01_28_frozen_inference_graph.pb'
          --tensorflow.model-fetch='detection_scores,detection_classes,detection_boxes'
          --tensorflow.object.detection.labels='http://dl.bintray.com/big-data/generic/mscoco_label_map.pbtxt'
      | image-viewer