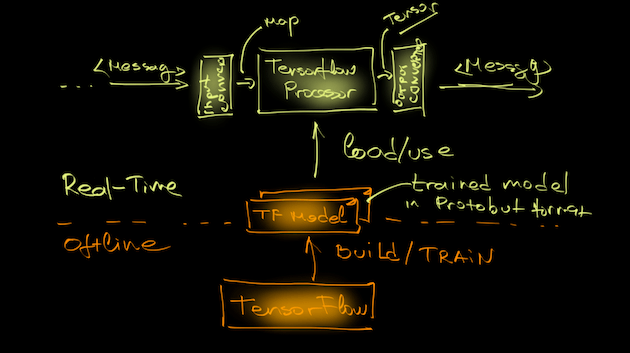

A processor that evaluates a machine learning model stored in TensorFlow Protobuf format.

The following snippet shows how to export a TensorFlow model into the Protocol Buffers binary format required by the Processor.

import os

import tensorflow as tf

from tensorflow.python.framework.graph_util import convert_variables_to_constants

...

SAVE_DIR = os.path.abspath(os.path.curdir)

minimal_graph = convert_variables_to_constants(sess, sess.graph_def, ['<model output>'])

tf.train.write_graph(minimal_graph, SAVE_DIR, 'my_graph.proto', as_text=False)

tf.train.write_graph(minimal_graph, SAVE_DIR, 'my.txt', as_text=True)

The --tensorflow.model property configures the Processor with the location of the serialized TensorFlow model.

The TensorflowInputConverter converts the input data into the format required by the given model.

The TensorflowOutputConverter converts the computed Tensor results into a pipeline Message.

The --tensorflow.modelFetch property defines the list of TensorFlow graph outputs to fetch the output Tensors from.

The --tensorflow.mode property defines whether the computed results are passed in the message payload or in the message header.
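The two output modes can be pictured with a small Python sketch. It only illustrates the behavior described here and is not the Processor's Java implementation: the `build_outbound` function, the dictionary-based message shape, and the choice to copy inbound headers in both modes are assumptions made for the example.

```python
def build_outbound(inbound, result, mode="payload", output_name="result"):
    """Return an outbound message for the given output mode.

    Hypothetical message model: {"headers": {...}, "payload": ...}.
    """
    # Copy the headers so the inbound message is not mutated (assumption:
    # inbound headers are carried over in both modes).
    headers = dict(inbound.get("headers", {}))
    if mode == "payload":
        # Payload mode: the inference result replaces the inbound payload.
        return {"headers": headers, "payload": result}
    if mode == "header":
        # Header mode: the result is stored in a header and the inbound
        # payload is passed through unchanged.
        headers[output_name] = result
        return {"headers": headers, "payload": inbound.get("payload")}
    raise ValueError(f"unsupported mode: {mode!r}")


inbound = {"headers": {"id": "42"}, "payload": "raw input"}
as_payload = build_outbound(inbound, {"score": 0.9}, mode="payload")
as_header = build_outbound(inbound, {"score": 0.9}, mode="header")
```

In this sketch, `as_payload` carries the result as its payload, while `as_header` keeps the original payload and exposes the result under the `result` header.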

The TensorFlow Processor uses a TensorflowInputConverter to convert the input data into a format compliant with the TensorFlow model in use. The input converter converts each input Message into a key/value Map, where the key corresponds to a model input placeholder and the value is an org.tensorflow.DataType compliant value.

The default converter implementation expects either a Map payload or a flat JSON message that can be converted into a Map.

The TensorflowInputConverter can be extended and customized.

See link::https://raw.githubusercontent.com/spring-cloud-stream-app-starters/tensorflow/master/spring-cloud-starter-stream-processor-twitter-sentiment/src/main/java/org/springframework/cloud/stream/app/twitter/sentiment/processor/TwitterSentimentTensorflowInputConverter.java[TwitterSentimentTensorflowInputConverter.java] for an example.
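As a rough illustration of the default input conversion, the following Python sketch turns a Map payload or a flat JSON message into a key/value map keyed by model input placeholder name. The real converter is a Java class; the function name `to_feed_map`, the sample keys, and the reading of "flat" as "no nested objects" are assumptions made for this example.

```python
import json


def to_feed_map(payload):
    """Convert a dict, or a flat JSON string/bytes, into a placeholder -> value map."""
    if isinstance(payload, (bytes, bytearray)):
        payload = payload.decode("utf-8")
    if isinstance(payload, str):
        payload = json.loads(payload)
    if not isinstance(payload, dict):
        raise TypeError("payload must be a map or a JSON object")
    # Only flat messages are handled here: reject nested objects.
    for key, value in payload.items():
        if isinstance(value, dict):
            raise ValueError(f"nested value for key {key!r}; expected a flat message")
    return dict(payload)


# Hypothetical placeholder names "x" and "keep_prob".
feed = to_feed_map('{"x": 3.0, "keep_prob": 1.0}')
```

A model-specific converter would replace this generic mapping with whatever preprocessing the model's placeholders require.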

The Processor uses a TensorflowOutputConverter to convert the computed Tensor result into a serializable message. The default implementation uses JSON.

A custom TensorflowOutputConverter can provide a more convenient data representation.

See link::https://raw.githubusercontent.com/spring-cloud-stream-app-starters/tensorflow/master/spring-cloud-starter-stream-processor-twitter-sentiment/src/main/java/org/springframework/cloud/stream/app/twitter/sentiment/processor/TwitterSentimentTensorflowOutputConverter.java[TwitterSentimentTensorflowOutputConverter.java].

The tensorflow processor has the following options:

- tensorflow.expression

  How to obtain the input data from the input message. If empty, it defaults to the input message payload. Use the headers[myHeaderName] expression to get the input data from the message header, using myHeaderName as the key. (Expression, default: <none>)

- tensorflow.mode

  The outbound message can store the inference result either in the payload or in a header named by the outputName property. The payload mode (default) stores the inference result in the outbound message payload; the inbound payload is discarded. The header mode stores the inference result in the outbound message header defined by the outputName property; the inbound message payload is passed through to the outbound message. (OutputMode, default: <none>, possible values: payload, header)

- tensorflow.model

  The location of the pre-trained TensorFlow model file. The file, http and classpath URI schemes are supported. For archive locations, the first file with a '.pb' extension is used. Use the URI fragment parameter to specify an exact model name (e.g. https://foo/bar/model.tar.gz#frozen_inference_graph.pb). (Resource, default: <none>)

- tensorflow.model-fetch

  The TensorFlow graph model outputs. Comma-separated list of TensorFlow operation names to fetch the output Tensors from. (List<String>, default: <none>)

- tensorflow.output-name

  The output data key used in header mode. (String, default: result)
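The archive rule described for tensorflow.model can be sketched in Python as follows. This only illustrates the selection logic stated in the option description, not the Processor's own code: `select_model_entry`, the matching on a trailing file name, and the sample archive entries are hypothetical.

```python
from urllib.parse import urlsplit


def select_model_entry(location, archive_entries):
    """Pick the model file inside an archive.

    If the location carries a URI fragment, that name is used; otherwise
    the first entry with a '.pb' extension is chosen.
    """
    fragment = urlsplit(location).fragment
    if fragment:
        for entry in archive_entries:
            # Match the exact name, or the file name at the end of a path.
            if entry == fragment or entry.endswith("/" + fragment):
                return entry
        raise FileNotFoundError(f"{fragment} not found in archive")
    for entry in archive_entries:
        if entry.endswith(".pb"):
            return entry
    raise FileNotFoundError("no '.pb' file found in archive")


# Hypothetical archive listing.
entries = [
    "model/saved_model.pb",
    "model/frozen_inference_graph.pb",
    "model/labels.txt",
]
default_entry = select_model_entry("https://foo/bar/model.tar.gz", entries)
exact_entry = select_model_entry(
    "https://foo/bar/model.tar.gz#frozen_inference_graph.pb", entries
)
```

Without a fragment the sketch falls back to the first '.pb' entry, so adding the fragment is the way to pin a specific graph when an archive holds several.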

$ ./mvnw clean install -PgenerateApps

$ cd apps

You can find the corresponding binder-based projects here. You can then cd into one of the folders and build it:

$ ./mvnw clean package