diff --git a/code/planning/src/global_planner/global_planner.py b/code/planning/src/global_planner/global_planner.py

index 291e161b..deeb0f32 100755

--- a/code/planning/src/global_planner/global_planner.py

+++ b/code/planning/src/global_planner/global_planner.py

@@ -1,17 +1,15 @@

#!/usr/bin/env python

-import rospy

-import tf.transformations

-import ros_compatibility as roscomp

-from ros_compatibility.node import CompatibleNode

from xml.etree import ElementTree as eTree

-from geometry_msgs.msg import PoseStamped, Pose, Point, Quaternion

+import ros_compatibility as roscomp

+import rospy

+import tf.transformations

from carla_msgs.msg import CarlaRoute # , CarlaWorldInfo

+from geometry_msgs.msg import Point, Pose, PoseStamped, Quaternion

from nav_msgs.msg import Path

-from std_msgs.msg import String

-from std_msgs.msg import Float32MultiArray

-

from preplanning_trajectory import OpenDriveConverter

+from ros_compatibility.node import CompatibleNode

+from std_msgs.msg import Float32MultiArray, String

RIGHT = 1

LEFT = 2

@@ -21,7 +19,7 @@

class PrePlanner(CompatibleNode):

"""

This node is responsible for collecting all data needed for the

- preplanning and calculate a trajectory based on the OpenDriveConverter

+ preplanning and calculating a trajectory based on the OpenDriveConverter

from preplanning_trajectory.py.

Subscribed/needed topics:

- OpenDrive Map: /carla/{role_name}/OpenDRIVE

@@ -29,7 +27,7 @@ class PrePlanner(CompatibleNode):

- global Plan: /carla/{role_name}/global_plan

- current agent position: /paf/{role_name}/current_pos

Published topics:

- - preplanned trajectory: /paf/{role_name}/trajectory

+ - preplanned trajectory: /paf/{role_name}/trajectory_global

- prevailing speed limits:/paf/{role_name}/speed_limits_OpenDrive

"""

@@ -90,7 +88,7 @@ def global_route_callback(self, data: CarlaRoute) -> None:

"""

when the global route gets updated a new trajectory is calculated with

the help of OpenDriveConverter and published into

- '/paf/ self.role_name /trajectory'

+ '/paf/ self.role_name /trajectory_global'

:param data: global Route

"""

if data is None:

@@ -213,7 +211,7 @@ def global_route_callback(self, data: CarlaRoute) -> None:

def world_info_callback(self, opendrive: String) -> None:

"""

- when the map gets updated a mew OpenDriveConverter instance is created

+ when the map gets updated a new OpenDriveConverter instance is created

(needed for the trajectory preplanning)

:param opendrive: updated CarlaWorldInformation

"""

diff --git a/code/planning/src/global_planner/preplanning_trajectory.py b/code/planning/src/global_planner/preplanning_trajectory.py

index 478addd7..2300627e 100755

--- a/code/planning/src/global_planner/preplanning_trajectory.py

+++ b/code/planning/src/global_planner/preplanning_trajectory.py

@@ -1,9 +1,9 @@

import copy

-from xml.etree import ElementTree as eTree

-import help_functions

+from math import cos, degrees, sin

from typing import Tuple

-from math import sin, cos, degrees

+from xml.etree import ElementTree as eTree

+import help_functions

# Check small distance between two points

SMALL_DIST = 0.001

@@ -220,7 +220,7 @@ def calculate_intervalls_id(

):

"""

The function assumes, that the current chosen road is not the only

- one that is possible. The current raad is calculated based on all

+ one that is possible. The current road is calculated based on all

possible scenarios not assuming that the succ and pred road are

both junctions.

:param agent: current position of the agent with x and y coordinate

@@ -287,7 +287,7 @@ def calculate_intervalls_id(

def get_special_case_id(self, road: int, current: int, agent: Tuple[float, float]):

"""When the function get_min_dist() returns two solutions with the

- same distance, this function calculated the distance based on the

+ same distance, this function calculates the distance based on the

interpolation of the two possible roads.

:param road: id value of the successor or predecessor road

:param current: id value of the current road

diff --git a/code/planning/src/local_planner/ACC.py b/code/planning/src/local_planner/ACC.py

index 74b681b2..2e3fa8c8 100755

--- a/code/planning/src/local_planner/ACC.py

+++ b/code/planning/src/local_planner/ACC.py

@@ -1,19 +1,18 @@

#!/usr/bin/env python

+import numpy as np

import ros_compatibility as roscomp

-from ros_compatibility.node import CompatibleNode

-from rospy import Subscriber, Publisher

-from geometry_msgs.msg import PoseStamped

from carla_msgs.msg import CarlaSpeedometer # , CarlaWorldInfo

+from geometry_msgs.msg import PoseStamped

from nav_msgs.msg import Path

-from std_msgs.msg import Float32MultiArray, Float32, Bool

-import numpy as np

-from utils import interpolate_speed, calculate_rule_of_thumb

+from ros_compatibility.node import CompatibleNode

+from rospy import Publisher, Subscriber

+from std_msgs.msg import Bool, Float32, Float32MultiArray

+from utils import calculate_rule_of_thumb, interpolate_speed

class ACC(CompatibleNode):

- """

- This node recieves a possible collision and

- """

+ """ACC (Adaptive Cruise Control) calculates and publishes the desired speed based on

+ possible collisions, the current speed, the trajectory, and the speed limits."""

def __init__(self):

super(ACC, self).__init__("ACC")

diff --git a/code/planning/src/local_planner/motion_planning.py b/code/planning/src/local_planner/motion_planning.py

index 48abf69b..589e6456 100755

--- a/code/planning/src/local_planner/motion_planning.py

+++ b/code/planning/src/local_planner/motion_planning.py

@@ -1,23 +1,22 @@

#!/usr/bin/env python

# import tf.transformations

-import ros_compatibility as roscomp

-import rospy

-import sys

+import math

import os

+import sys

+from typing import List

+import numpy as np

+import ros_compatibility as roscomp

+import rospy

+from carla_msgs.msg import CarlaSpeedometer

+from geometry_msgs.msg import Point, Pose, PoseStamped, Quaternion

+from nav_msgs.msg import Path

+from perception.msg import LaneChange, Waypoint

from ros_compatibility.node import CompatibleNode

from rospy import Publisher, Subscriber

-from std_msgs.msg import String, Float32, Bool, Float32MultiArray, Int16

-from nav_msgs.msg import Path

-from geometry_msgs.msg import PoseStamped, Pose, Point, Quaternion

-from carla_msgs.msg import CarlaSpeedometer

-import numpy as np

from scipy.spatial.transform import Rotation

-import math

-

-from perception.msg import Waypoint, LaneChange

-

-from utils import convert_to_ms, spawn_car, NUM_WAYPOINTS, TARGET_DISTANCE_TO_STOP

+from std_msgs.msg import Bool, Float32, Float32MultiArray, Int16, String

+from utils import NUM_WAYPOINTS, TARGET_DISTANCE_TO_STOP, convert_to_ms, spawn_car

sys.path.append(os.path.abspath(sys.path[0] + "/../../planning/src/behavior_agent"))

from behaviours import behavior_speed as bs # type: ignore # noqa: E402

@@ -30,10 +29,9 @@

class MotionPlanning(CompatibleNode):

"""

- This node selects speeds according to the behavior in the Decision Tree

- and the ACC.

- Later this Node should compute a local Trajectory and forward

- it to the Acting.

+ This node selects speeds based on the current behaviour and ACC to forward to the

+ acting components. It also handles the generation of trajectories for overtaking

+ maneuvers.

"""

def __init__(self):

@@ -41,12 +39,14 @@ def __init__(self):

self.role_name = self.get_param("role_name", "hero")

self.control_loop_rate = self.get_param("control_loop_rate", 0.05)

+ # TODO: add type hints

self.target_speed = 0.0

self.__curr_behavior = None

self.__acc_speed = 0.0

self.__stopline = None # (Distance, isStopline)

self.__change_point = None # (Distance, isLaneChange, roadOption)

self.__collision_point = None

+ # TODO: clarify what the overtake_status values mean (by using an enum or ...)

self.__overtake_status = -1

self.published = False

self.current_pos = None

@@ -162,9 +162,11 @@ def __init__(self):

Float32, f"/paf/{self.role_name}/target_velocity", qos_profile=1

)

+ # TODO move up to subscribers

self.wp_subs = self.new_subscription(

Float32, f"/paf/{self.role_name}/current_wp", self.__set_wp, qos_profile=1

)

+

self.overtake_success_pub = self.new_publisher(

Float32, f"/paf/{self.role_name}/overtake_success", qos_profile=1

)

@@ -235,12 +237,31 @@ def change_trajectory(self, distance_obj):

pose_list = self.trajectory.poses

# Only use fallback

- self.overtake_fallback(distance_obj, pose_list)

+ self.generate_overtake_trajectory(distance_obj, pose_list)

self.__overtake_status = 1

self.overtake_success_pub.publish(self.__overtake_status)

return

- def overtake_fallback(self, distance, pose_list, unstuck=False):

+ def generate_overtake_trajectory(self, distance, pose_list, unstuck=False):

+ """

+ Generates a trajectory for overtaking maneuvers.

+

+ This method creates a new trajectory for the vehicle to follow when an

+ overtaking maneuver is required. It adjusts the waypoints based on the current

+ waypoint and distance, and applies an offset to the waypoints to create a path

+ that avoids obstacles or gets the vehicle unstuck.

+

+ Args:

+ distance (int): The distance over which the overtaking maneuver should be

+ planned.

+ pose_list (list): A list of PoseStamped objects representing the current

+ planned path.

+ unstuck (bool, optional): A flag indicating whether the vehicle is stuck

+ and requires a larger offset to get unstuck. Defaults to False.

+

+ Returns:

+ None: The method updates the self.trajectory attribute with the new path.

+ """

currentwp = self.current_wp

normal_x_offset = 2

unstuck_x_offset = 3 # could need adjustment with better steering

@@ -306,10 +327,25 @@ def __set_trajectory(self, data: Path):

"""

self.trajectory = data

self.loginfo("Trajectory received")

+

self.__corners = self.__calc_corner_points()

- def __calc_corner_points(self):

+ def __calc_corner_points(self) -> List[List[np.ndarray]]:

+ """

+ Calculate the corner points of the trajectory.

+

+ This method converts the poses in the trajectory to an array of coordinates,

+ calculates the angles between consecutive points, and identifies the points

+ where there is a significant change in the angle, indicating a corner or curve.

+ The corner points are then grouped by proximity and returned.

+

+ Returns:

+ list: A list of lists, where each sublist contains 2D points that form a

+ corner.

+ """

coords = self.convert_pose_to_array(np.array(self.trajectory.poses))

+

+ # TODO: refactor by using numpy functions

x_values = np.array([point[0] for point in coords])

y_values = np.array([point[1] for point in coords])

@@ -320,13 +356,25 @@ def __calc_corner_points(self):

threshold = 1 # in degree

curve_change_indices = np.where(np.abs(np.diff(angles)) > threshold)[0]

- sublist = self.create_sublists(curve_change_indices, proximity=5)

+ sublist = self.group_points_by_proximity(curve_change_indices, proximity=5)

coords_of_curve = [coords[i] for i in sublist]

return coords_of_curve

- def create_sublists(self, points, proximity=5):

+ def group_points_by_proximity(self, points, proximity=5):

+ """

+ Groups a list of points into sublists based on their proximity to each other.

+

+ Args:

+ points (list): A list of points to be grouped.

+ proximity (int, optional): The maximum distance between points in a sublist.

+

+ Returns:

+ list: A list of sublists, where each sublist contains points that are within

+ the specified proximity of each other. Sublists with only one point are

+ filtered out.

+ """

sublists = []

current_sublist = []

@@ -345,6 +393,7 @@ def create_sublists(self, points, proximity=5):

if current_sublist:

sublists.append(current_sublist)

+ # TODO: Check if it is intended to filter out sublists with only one point

filtered_list = [in_list for in_list in sublists if len(in_list) > 1]

return filtered_list

@@ -354,6 +403,7 @@ def get_cornering_speed(self):

pos = self.current_pos[:2]

def euclid_dist(vector1, vector2):

+ # TODO replace with numpy function

point1 = np.array(vector1)

point2 = np.array(vector2)

@@ -369,7 +419,7 @@ def map_corner(dist):

elif dist < 50:

return 7

else:

- 8

+ 8 # TODO add return

distance_corner = 0

for i in range(len(corner) - 1):

@@ -395,14 +445,14 @@ def map_corner(dist):

return self.__get_speed_cruise()

@staticmethod

- def convert_pose_to_array(poses: np.array):

- """convert pose array to numpy array

+ def convert_pose_to_array(poses: np.ndarray) -> np.ndarray:

+ """Convert an array of PoseStamped objects to a numpy array of positions.

Args:

- poses (np.array): pose array

+ poses (np.ndarray): Array of PoseStamped objects.

Returns:

- np.array: numpy array

+ np.ndarray: Numpy array of shape (n, 2) containing the x and y positions.

"""

result_array = np.empty((len(poses), 2))

for pose in range(len(poses)):

@@ -424,6 +474,11 @@ def __check_emergency(self, data: Bool):

self.emergency_pub.publish(data)

def update_target_speed(self, acc_speed, behavior):

+ """

+ Updates the target velocity based on the current behavior, the ACC velocity,

+ and the overtake status, and publishes it. The unit of the velocity is m/s.

+ """

+

be_speed = self.get_speed_by_behavior(behavior)

if behavior == bs.parking.name or self.__overtake_status == 1:

self.target_speed = be_speed

@@ -439,6 +494,10 @@ def __set_acc_speed(self, data: Float32):

self.__acc_speed = data.data

def __set_curr_behavior(self, data: String):

+ """

+ Sets the received current behavior of the vehicle.

+ If the behavior is an overtake behavior, a trajectory change is triggered.

+ """

self.__curr_behavior = data.data

if data.data == bs.ot_enter_init.name:

if np.isinf(self.__collision_point):

@@ -480,6 +539,7 @@ def get_speed_by_behavior(self, behavior: str) -> float:

return speed

def __get_speed_unstuck(self, behavior: str) -> float:

+ # TODO check if this 'global' is necessary

global UNSTUCK_OVERTAKE_FLAG_CLEAR_DISTANCE

speed = 0.0

if behavior == bs.us_unstuck.name:

@@ -507,7 +567,9 @@ def __get_speed_unstuck(self, behavior: str) -> float:

# create overtake trajectory starting 6 meteres before

# the obstacle

# 6 worked well in tests, but can be adjusted

- self.overtake_fallback(self.unstuck_distance, pose_list, unstuck=True)

+ self.generate_overtake_trajectory(

+ self.unstuck_distance, pose_list, unstuck=True

+ )

self.logfatal("Overtake Trajectory while unstuck!")

self.unstuck_overtake_flag = True

self.init_overtake_pos = self.current_pos[:2]

@@ -628,8 +690,8 @@ def __calc_virtual_overtake(self) -> float:

def run(self):

"""

- Control loop

- :return:

+ Control loop that updates the target speed and publishes the target trajectory

+ and speed over ROS topics.

"""

def loop(timer_event=None):

@@ -649,10 +711,6 @@ def loop(timer_event=None):

if __name__ == "__main__":

- """

- main function starts the MotionPlanning node

- :param args:

- """

roscomp.init("MotionPlanning")

try:

node = MotionPlanning()
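Reviewer sketch (not part of the patch): the docstring added to `group_points_by_proximity` describes grouping values into runs whose neighbours lie within `proximity` of each other and dropping runs that contain a single point. The method body is only partly visible in this diff, so the standalone function below is an assumed, minimal illustration of that behaviour. The function name mirrors the method; the type hints and the sample input are hypothetical.

```python
from typing import List, Sequence


def group_points_by_proximity(
    points: Sequence[int], proximity: int = 5
) -> List[List[int]]:
    """Group consecutive values whose gap to the previous value is <= proximity."""
    sublists: List[List[int]] = []
    current: List[int] = []
    for point in points:
        # Close the running group when the gap to its last value is too large.
        if current and abs(point - current[-1]) > proximity:
            sublists.append(current)
            current = []
        current.append(point)
    if current:
        sublists.append(current)
    # Assumption taken from the docstring: single-point groups are filtered out.
    return [group for group in sublists if len(group) > 1]


# Hypothetical curve-change indices: 20 is isolated and therefore dropped.
groups = group_points_by_proximity([1, 2, 3, 20, 40, 42], proximity=5)
```

With this reading, an isolated index never forms a corner on its own, which is the behaviour the TODO in the patch asks to double-check.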

diff --git a/doc/assets/planning/planning_structure.drawio b/doc/assets/planning/planning_structure.drawio

new file mode 100644

index 00000000..cb63bdef

--- /dev/null

+++ b/doc/assets/planning/planning_structure.drawio

@@ -0,0 +1,340 @@

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

+

diff --git a/doc/assets/planning/planning_structure.png b/doc/assets/planning/planning_structure.png

new file mode 100644

index 00000000..568fa982

Binary files /dev/null and b/doc/assets/planning/planning_structure.png differ

diff --git a/doc/assets/research_assets/curr_behavior_example.png b/doc/assets/research_assets/curr_behavior_example.png

new file mode 100644

index 00000000..e36672ff

Binary files /dev/null and b/doc/assets/research_assets/curr_behavior_example.png differ

diff --git a/doc/assets/research_assets/nav_msgs_Path_type.png b/doc/assets/research_assets/nav_msgs_Path_type.png

new file mode 100644

index 00000000..7720d804

Binary files /dev/null and b/doc/assets/research_assets/nav_msgs_Path_type.png differ

diff --git a/doc/assets/research_assets/node_path_ros.png b/doc/assets/research_assets/node_path_ros.png

new file mode 100644

index 00000000..04def851

Binary files /dev/null and b/doc/assets/research_assets/node_path_ros.png differ

diff --git a/doc/assets/research_assets/planning_acting_communication.svg b/doc/assets/research_assets/planning_acting_communication.svg

new file mode 100644

index 00000000..3d46178e

--- /dev/null

+++ b/doc/assets/research_assets/planning_acting_communication.svg

@@ -0,0 +1,4 @@

+

+

+

+

\ No newline at end of file

diff --git a/doc/assets/research_assets/planning_internal.png b/doc/assets/research_assets/planning_internal.png

new file mode 100644

index 00000000..ed9c9d1c

Binary files /dev/null and b/doc/assets/research_assets/planning_internal.png differ

diff --git a/doc/assets/research_assets/rosgraph.svg b/doc/assets/research_assets/rosgraph.svg

new file mode 100644

index 00000000..f6aa5d32

--- /dev/null

+++ b/doc/assets/research_assets/rosgraph.svg

@@ -0,0 +1,10221 @@

+

+

diff --git a/doc/assets/research_assets/rosgraph_leaf_topics.svg b/doc/assets/research_assets/rosgraph_leaf_topics.svg

new file mode 100644

index 00000000..c1831985

--- /dev/null

+++ b/doc/assets/research_assets/rosgraph_leaf_topics.svg

@@ -0,0 +1,4669 @@

+

+

diff --git a/doc/assets/research_assets/trajectory_example.png b/doc/assets/research_assets/trajectory_example.png

new file mode 100644

index 00000000..8e313a14

Binary files /dev/null and b/doc/assets/research_assets/trajectory_example.png differ

diff --git a/doc/general/architecture.md b/doc/general/architecture.md

index 72eacdce..f8eaf839 100644

--- a/doc/general/architecture.md

+++ b/doc/general/architecture.md

@@ -198,12 +198,12 @@ Subscriptions:

- ```collision``` ([std_msgs/Float32MultiArray](https://docs.ros.org/en/api/std_msgs/html/msg/Float32MultiArray.html))

- ```traffic_light_y_distance``` ([std_msgs/Int16](https://docs.ros.org/en/api/std_msgs/html/msg/Int16.html))

- ```unstuck_distance``` ([std_msgs/Float32](https://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

+- ```current_wp``` ([std_msgs/Float32](https://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

Publishes:

- ```trajectory``` ([nav_msgs/Path Message](http://docs.ros.org/en/noetic/api/nav_msgs/html/msg/Path.html))

- ```target_velocity``` ([std_msgs/Float32](https://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

-- ```current_wp``` ([std_msgs/Float32](https://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

- ```overtake_success``` ([std_msgs/Float32](https://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

## Acting

diff --git a/doc/perception/README.md b/doc/perception/README.md

index 9b747565..f28f24b7 100644

--- a/doc/perception/README.md

+++ b/doc/perception/README.md

@@ -17,6 +17,10 @@ This folder contains further documentation of the perception components.

11. [Efficient PS](./efficientps.md)

1. not used scince paf22 and never successfully tested

+## Overview Localization

+

+An overview of the nodes that work together to localize the vehicle is provided in the [localization](./localization.md) file.

+

## Experiments

- The overview of performance evaluations is located in the [experiments](./experiments/README.md) folder.

diff --git a/doc/perception/localization.md b/doc/perception/localization.md

new file mode 100644

index 00000000..9558091b

--- /dev/null

+++ b/doc/perception/localization.md

@@ -0,0 +1,132 @@

+# Localization

+

+There are currently three nodes working together to localize the vehicle:

+

+- [position_heading_publisher_node](./position_heading_publisher_node.md)

+- [kalman_filter](./kalman_filter.md)

+- [coordinate_transformation](./coordinate_transformation.md)

+

+The [position_heading_filter_debug_node](./position_heading_filter_debug_node.md) node is another useful node but it does not actively localize the vehicle.

+Instead it makes it possible to compare and tune different filters and the [viz.py](../../code/perception/src/experiments/Position_Heading_Datasets/viz.py) file is the recommended way to visualize the results.

+

+## position_heading_publisher_node

+

+The [position_heading_publisher_node](./position_heading_publisher_node.md) node is responsible for publishing the position and heading of the car. It publishes raw as well as already filtered data. The goal of filtering the raw data is the elimination / reduction of noise.

+

+### Published / subscribed topics

+

+The following topics are therefore published by this node:

+

+- `unfiltered_pos` (raw data, subscribed to by filter nodes e.g. kalman_filter)

+- `unfiltered_heading` (raw data, subscribed to by filter nodes e.g. kalman_filter)

+- `current_pos` (filtered data, position of the car)

+- `current_heading` (filtered data, orientation of the car around the z-axis)

+

+To gather the necessary information for the topics above the node subscribes to the following topics:

+

+- OpenDrive (map information)

+- IMU (Inertial Measurement Unit)

+- GPS

+- the topic published by the filter that is used (e.g. kalman_pos)

+

+As you can see the node first subscribes to the filtered data (e.g. kalman_pos) and then publishes this data as the current position / heading. It merely passes along the data.

+

+This makes it possible for multiple filter nodes to be running and only the data produced by one filter is published as the current position / heading. Otherwise different filters would publish to the same topic (e.g. current_pos) which is not desirable.

+

+### Available filters

+

+The filter to be used is specified in the [perception.launch](../../code/perception/launch/perception.launch) file.

+

+The currently available filters are as follows:

+

+- position filter:

+ - Kalman (Kalman Filter)

+ - RunningAvg (Running Average Filter)

+ - None (No Filter)

+- heading filter:

+ - Kalman (Kalman Filter)

+ - None (No Filter)

+ - Old (heading is calculated the WRONG way, for demonstration purposes only)

+

+To use a certain / new filter two files need to be updated:

+

+- First make sure that in the [perception.launch](../../code/perception/launch/perception.launch) file:

+ - the node of the filter you want to use is included (e.g. kalman_filter.py)

+ - the `pos_filter` / `heading_filter` arguments are set accordingly (e.g. "Kalman") in the code of the position_heading_publisher_node node

+

+- Then the corresponding subscriber and publisher need to be added in the init function of the position_heading_publisher_node.py file.

+

+For further details on the position_heading_publisher_node node click [here](./position_heading_publisher_node.md).

+

+## kalman_filter

+

+The currently used filter is the (linear) Kalman Filter. It is responsible for filtering the location and heading data so the noise can be eliminated / reduced.

+

+### Published / subscribed topics

+

+Therefore the published topics are:

+

+- `kalman_pos` (filtered position of the vehicle)

+- `kalman_heading` (filtered heading of the vehicle)

+

+The variables to be estimated are put together in the state vector. It consists of the following elements:

+

+- `x` (position on the x-axis)

+- `y` (position on the y-axis)

+- `v_x` (velocity in the x-direction)

+- `v_y` (velocity in the y-direction)

+- `yaw` (orientation, rotation around z-axis)

+- `omega_z` (angular velocity)

+

+The z-position is currently not estimated by the Kalman Filter and is calculated using the rolling average.

+

+The x-/y-position is measured by the GNSS sensor. The measurement is provided by the unfiltered_pos topic.

+

+The velocity in x-/y-direction can be derived from the speed measured by the Carla Speedometer in combination with the current orientation.

+

+To get the orientation and angular velocity of the vehicle the data provided by the IMU (Inertial Measurement Unit) sensor is used.

+

+### Possible improvements

+

+In earlier experiments it was shown that the Kalman Filter performs better than using the rolling average or the unfiltered data. But it seems likely that further improvements can be made.

+

+For further details on the current implementation of the kalman_filter node click [here](./kalman_filter.md).

+

+Currently the model assumes that the vehicle drives at a constant speed. Adding acceleration in the model (as proposed [here](https://www.youtube.com/watch?v=TEKPcyBwEH8)) could ultimately improve the filter.

+

+Using a non-linear filter such as the Extended or Unscented Kalman Filter would also likely improve performance.

+The latter is the most generic of the three options as it does not even assume a normal distribution of the system but it is also the most complex Kalman Filter.

+

+The localization of the vehicle could further be improved by combining the current estimate of the position with data generated by image processing.

+Using the vision node and the lidar distance node it is possible to calculate the distance to detected objects (for details see [distance_to_objects.md](./distance_to_objects.md)).

+Objects such as signals (traffic signs, traffic lights, ...) have a specified position in the OpenDrive map.

+For details see: (Disclaimer: the second source belongs to a previous version of OpenDrive but is probably still applicable)

+

+- [source_1](https://www.asam.net/standards/detail/opendrive/) and

+- [source_2](https://www.asam.net/index.php?eID=dumpFile&t=f&f=4422&token=e590561f3c39aa2260e5442e29e93f6693d1cccd#top-016f925e-bfe2-481d-b603-da4524d7491f) (menu point "12. Signals")

+

+The knowledge of the map could be combined with the calculated distance to a detected object. For a better understanding look at the following example:

+The car is driving on "road1". This road is 100 meters long and there is a traffic light in the middle of it.

+If the program detects a traffic light next to the road at a distance of 20 meters, this suggests that the vehicle is 30 meters down "road1".

+That information could be used to refine the position estimation of the vehicle.

+

+## coordinate_transformation

+

+The [coordinate_transformation](./coordinate_transformation.md) node provides useful helper functions such as quat_to_heading which transforms a given quaternion into the heading of the car.

+

+This node is used by the [position_heading_publisher_node](./position_heading_publisher_node.md) node as well as the [kalman_filter](./kalman_filter.md) node. Both nodes use the node for its quat_to_heading function and its CoordinateTransformer class.

+

+The node is not fully documented yet but for further details on the quat_to_heading function click [here](./coordinate_transformation.md).

+

+## position_heading_filter_debug_node

+

+This node processes the data provided by the IMU and GNSS so that the errors between the actual state (ground truth) and the measured state can be seen.

+To get the actual state, the Carla API is used to get the real position and heading of the car.

+Comparing the real position / heading with the position / heading estimated by a filter (e.g. Kalman Filter) the performance of a filter can be evaluated and the parameters used by the filter can be tuned.

+

+The recommended way to look at the results is using the matplotlib plots provided by the [viz.py](../../code/perception/src/experiments/Position_Heading_Datasets/viz.py) file even though they can also be shown via rqt_plots.

+

+Because the node uses the Carla API and therefore uses the ground truth it should only be used for comparing and tuning filters and not for any other purposes.

+It might be best to remove this node before submitting to the official leaderboard because otherwise the project could get disqualified.

+

+For more details on the node see [position_heading_filter_debug_node](./position_heading_filter_debug_node.md) and [viz.py](../../code/perception/src/experiments/Position_Heading_Datasets/viz.py).
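Illustrative sketch (not part of the patch): the kalman_filter section of localization.md states that the x/y velocity is derived from the measured speed together with the current orientation, and that the model assumes a constant speed. Assuming the usual decomposition v_x = v * cos(yaw), v_y = v * sin(yaw) and a plain constant-velocity prediction of the state mean, this could look as follows. The function names and the tuple layout are hypothetical and are not taken from kalman_filter.py.

```python
import math
from typing import Tuple

# Hypothetical layout following the documented state vector:
# (x, y, v_x, v_y, yaw, omega_z)
State = Tuple[float, float, float, float, float, float]


def velocity_components(speed: float, yaw: float) -> Tuple[float, float]:
    """Split a scalar speed into x/y components using the heading in radians."""
    return speed * math.cos(yaw), speed * math.sin(yaw)


def predict_constant_velocity(state: State, dt: float) -> State:
    """Propagate the state mean by dt seconds with fixed velocity and yaw rate."""
    x, y, v_x, v_y, yaw, omega_z = state
    return (x + v_x * dt, y + v_y * dt, v_x, v_y, yaw + omega_z * dt, omega_z)


# Speed of 10 m/s while heading along the positive y-axis.
v_x, v_y = velocity_components(speed=10.0, yaw=math.pi / 2)
# One prediction step of 0.1 s from the origin.
next_state = predict_constant_velocity((0.0, 0.0, 1.0, 2.0, 0.0, 0.5), dt=0.1)
```

The sketch only propagates the state mean. A complete Kalman Filter additionally propagates the covariance and corrects the prediction with the GNSS and IMU measurements.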

diff --git a/doc/planning/Global_Planner.md b/doc/planning/Global_Planner.md

index 2d3e4ea9..94a66612 100644

--- a/doc/planning/Global_Planner.md

+++ b/doc/planning/Global_Planner.md

@@ -1,11 +1,10 @@

# Global Planner

**Summary:** [global_planner.py](.../code/planning/global_planner/src/global_planner.py):

-The global planner is responsible for collecting and preparing all data from the leaderboard and other intern

+The global planner is responsible for collecting and preparing all data from the leaderboard and other internal

components that is needed for the preplanning component.

After finishing that this node initiates the calculation of a trajectory based on the OpenDriveConverter

-from preplanning_trajectory.py. In the end the computed trajectory and prevailing speed limits are published

-to the other components of this project (acting, decision making,...).

+from preplanning_trajectory.py. In the end the computed trajectory and prevailing speed limits are published.

This component and so most of the documentation was taken from the previous project PAF22 (Authors: Simon Erlbacher, Niklas Vogel)

@@ -25,8 +24,7 @@ No extra installation needed.

## Description

-First the global planner is responsible for collecting and preparing all data from the leaderboard and other intern

-components that is needed for the preplanning component.

+First the global planner is responsible for collecting and preparing all data from the leaderboard and other internal components that is needed for the preplanning component.

To get an instance of the OpenDriveConverter (ODC) the received OpenDrive Map prevailing in String format

has to be converted. In our case we use the

@@ -56,8 +54,8 @@ The received agent spawn position is valid if it´s closer to the first waypoint

parameter expresses. This is necessary to prevent unwanted behaviour in the startup phase where the

current agent position is faulty.

-When the ODC got initialised, the current agent position is received and the global plan is obtained from

-the leaderboard the trajectory can be calculated by iterating through the global route and passing it to the ODC.

+When the ODC is initialised, the current agent position is received and the global plan is obtained from

+the leaderboard. The trajectory can be calculated by iterating through the global route and passing it to the ODC.

After smaller outliners are removed the x and y coordinates as well as the yaw-orientation and the prevailing

speed limits can be acquired from the ODC in the following form:

diff --git a/doc/planning/README.md b/doc/planning/README.md

index 378d94bd..aff116ab 100644

--- a/doc/planning/README.md

+++ b/doc/planning/README.md

@@ -1,20 +1,21 @@

# Planning Wiki

+

+

## Overview

-### [Preplanning](./Preplanning.md)

+### [OpenDrive Converter (preplanning_trajectory.py)](./Preplanning.md)

-Preplanning is very close to the global plan. The challenge of the preplanning focuses on creating a trajectory out of

+This module focuses on creating a trajectory out of

an OpenDrive map (ASAM OpenDrive). As input it receives an xodr file (OpenDrive format) and the target points

from the leaderboard with the belonging actions. For example action number 3 means, drive through the intersection.

-### [Global plan](./Global_Planner.md)

+### [Global Planning (PrePlanner)](./Global_Planner.md)

-The global planner is responsible for collecting and preparing all data from the leaderboard and other intern

+The global planner is responsible for collecting and preparing all data from the leaderboard and other internal

components that is needed for the preplanning component.

After finishing that this node initiates the calculation of a trajectory based on the OpenDriveConverter

-from preplanning_trajectory.py. In the end the computed trajectory and prevailing speed limits are published

-to the other components of this project (acting, decision making,...).

+from preplanning_trajectory.py. In the end the computed trajectory and prevailing speed limits are published.

@@ -28,7 +29,9 @@ decision tree, which is easy to adapt and to expand.

### [Local Planning](./Local_Planning.md)

-The Local Planning component is responsible for evaluating short term decisions in the local environment of the ego vehicle. It containes components responsible for detecting collisions and reacting e. g. lowering speed.

-The local planning also executes behaviors e. g. changes the trajectory for an overtake.

+This module includes the Nodes: ACC, CollisionCheck, MotionPlanner

+

+The Local Planning package is responsible for evaluating short-term decisions in the local environment of the ego vehicle. It contains components responsible for detecting collisions and reacting, e.g. by lowering speed.

+The local planning also executes behaviors e.g. changes the trajectory for an overtake.

diff --git a/doc/planning/motion_planning.md b/doc/planning/motion_planning.md

index dd05ed42..83a6960b 100644

--- a/doc/planning/motion_planning.md

+++ b/doc/planning/motion_planning.md

@@ -1,65 +1,65 @@

-# Motion Planning

-

-**Summary:** [motion_planning.py](.../code/planning/local_planner/src/motion_planning.py):

-The motion planning is responsible for collecting all the speeds from the different components and choosing the optimal one to be fowarded into the acting. It also is capabale to change the trajectory for a overtaking maneuver.

-

-- [Motion Planning](#motion-planning)

- - [Overview](#overview)

- - [Component](#component)

- - [ROS Data Interface](#ros-data-interface)

- - [Subscribed Topics](#subscribed-topics)

- - [Published Topics](#published-topics)

- - [Node Creation + Running Tests](#node-creation--running-tests)

-

-## Overview

-

-This module is responsible for adjusting the current speed and the current trajectory according to the traffic situation. It subscribes to topics that provide information about the current speed of the vehicle, the current heading and many more to navigate safely in the simulation.

-It publishes topics that provide information about the target speed, trajectoy changes, current waypoint and if an overtake was successful.

-

-This file is also responsible for providing a ```target_speed of -3``` for acting, whenever we need to use the Unstuck Behavior. -3 is the only case we can drive backwards right now,

-since we only need it for the unstuck routine. It also creates an overtake trajectory, whenever the unstuck behavior calls for it.

-

-## Component

-

-The Motion Planning only consists of one node that contains all subscriper and publishers. It uses some utility functions from [utils.py](../../code/planning/src/local_planner/utils.py).

-

-## ROS Data Interface

-

-### Subscribed Topics

-

-This node subscribes to the following topics:

-

-- `/paf/hero/Spawn_car`: Can spawn a car on the first straight in the dev environment, if this message is manually published.

-- `/paf/hero/speed_limit`: Subscribes to the speed Limit.

-- `/carla/hero/Speed`: Subscribes to the current speed.

-- `/paf/hero/current_heading`: Subscribes to the filtered heading of the ego vehicle.

-- `/paf/hero/trajectory_global`: Subscribes to the global trajectory, which is calculated at the start of the simulation.

-- `/paf/hero/current_pos`: Subscribes to the filtered position of our car.

-- `/paf/hero/curr_behavior`: Subscribes to Current Behavior pubished by the Decision Making.

-- `/paf/hero/unchecked_emergency`: Subscribes to check if the emergency brake is not triggered.

-- `/paf/hero/acc_velocity`: Subscribes to the speed published by the acc.

-- `/paf/hero/waypoint_distance`: Subscribes to the Carla Waypoint to get the new road option.

-- `/paf/hero/lane_change_distance`: Subscribes to the Carla Waypoint to check if the next Waypoint is a lane change.

-- `/paf/hero/collision`: Subscribes to the collision published by the Collision Check.

-- `/paf/hero//Center/traffic_light_y_distance`: Subscribes to the distance the traffic light has to the upper camera bound in pixels.

-- `/paf/hero/unstuck_distance`: Subscribes to the distance travelled by the unstuck maneuver.

-

-### Published Topics

-

-This node publishes the following topics:

-

-- `/paf/hero/trajectory`: Publishes the new adjusted trajectory.

-- `/paf/hero/target_velocity`: Publishes the new calcualted Speed.

-- `/paf/hero/current_wp`: Publishes according to our position the index of the current point on the trajectory.

-- `/paf/hero/overtake_success`: Publishes if an overtake was successful.

-

-## Node Creation + Running Tests

-

-To run this node insert the following statement in the [planning.launch](../../code/planning/launch/planning.launch) file:

-

-```xml

-

-

-

-

-```

+# Motion Planning

+

+**Summary:** [motion_planning.py](.../code/planning/local_planner/src/motion_planning.py):

+The motion planning is responsible for collecting all the velocity recommendations from the different components and choosing the optimal one to be forwarded to the acting. It is also capable of changing the trajectory for an overtaking maneuver.

+

+- [Overview](#overview)

+- [ROS Data Interface](#ros-data-interface)

+ - [Subscribed Topics](#subscribed-topics)

+ - [Published Topics](#published-topics)

+- [Node Creation + Running Tests](#node-creation--running-tests)

+

+## Overview

+

+This module contains one ROS node and is responsible for adjusting and publishing the target velocity and the target trajectory according to the traffic situation.

+It subscribes to topics that provide information about the current velocity of the vehicle, the current heading and many more to navigate safely in the simulation.

+It also publishes a topic that indicates whether an overtake maneuver was successful or not.

+

+This component is also responsible for providing a target_speed of `-3` for acting, whenever we need to use the Unstuck Behavior. This is currently the only behavior that allows the car to drive backwards.

+

+The trajectory is calculated by the global planner and is adjusted by the motion planning node.

+When the decision making node decides that an overtake maneuver is necessary, the motion planning node will adjust the trajectory accordingly.

+Otherwise the received trajectory is published without any changes.
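
The velocity selection described above can be sketched roughly as follows. This is a minimal illustration, not the node's actual code: the function name `select_target_velocity` and the constant `UNSTUCK_SPEED` are hypothetical, and the sketch assumes that "optimal" means the lowest (most conservative) non-negative recommendation, which this document does not confirm. Only the value `-3` for the Unstuck Behavior is taken from the text.

```python
from typing import Iterable

# Taken from the text: a target speed of -3 is used during the Unstuck Behavior.
UNSTUCK_SPEED = -3.0


def select_target_velocity(recommendations: Iterable[float], unstuck: bool = False) -> float:
    """Pick one target velocity (m/s) from several recommendations.

    Assumption (not confirmed by the source): the most conservative,
    i.e. lowest non-negative, recommendation is treated as the optimal one.
    """
    if unstuck:
        # Driving backwards is only allowed while getting unstuck.
        return UNSTUCK_SPEED
    candidates = [v for v in recommendations if v >= 0.0]
    # Without any valid recommendation the sketch falls back to standing still.
    return min(candidates) if candidates else 0.0


# Example: ACC, speed limit and behavior each suggest a velocity.
print(select_target_velocity([8.3, 5.0, 13.9]))
print(select_target_velocity([8.3, 5.0], unstuck=True))
```

Taking the minimum is only one possible policy; the real node may weight the inputs differently depending on the current behavior.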

+

+## ROS Data Interface

+

+### Subscribed Topics

+

+This node subscribes to the following topics:

+

+- `/carla/hero/Speed`: Subscribes to the current speed.

+- `/paf/hero/acc_velocity`: Subscribes to the speed published by the acc.

+- `/paf/hero/Center/traffic_light_y_distance`: Subscribes to the distance the traffic light has to the upper camera bound in pixels.

+- `/paf/hero/collision`: Subscribes to the collision published by the Collision Check.

+- `/paf/hero/curr_behavior`: Subscribes to Current Behavior published by the Decision Making.

+- `/paf/hero/current_heading`: Subscribes to the filtered heading of the ego vehicle.

+- `/paf/hero/current_pos`: Subscribes to the filtered position of our car.

+- `/paf/hero/current_wp`: Subscribes to the current waypoint.

+- `/paf/hero/lane_change_distance`: Subscribes to the Carla Waypoint to check if the next Waypoint is a lane change.

+- `/paf/hero/speed_limit`: Subscribes to the speed limit.

+- `/paf/hero/trajectory_global`: Subscribes to the global trajectory, which is calculated at the start of the simulation.

+- `/paf/hero/unstuck_distance`: Subscribes to the distance travelled by the unstuck maneuver.

+- `/paf/hero/waypoint_distance`: Subscribes to the Carla Waypoint to get the new road option.

+

+### Published Topics

+

+This node publishes the following topics:

+

+- `/paf/hero/overtake_success`: Publishes if an overtake was successful. ([std_msgs/Float32](http://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

+- `/paf/hero/target_velocity`: Publishes the target velocity in m/s. ([std_msgs/Float32](http://docs.ros.org/en/api/std_msgs/html/msg/Float32.html))

+- `/paf/hero/trajectory`: Publishes the new adjusted trajectory. ([nav_msgs/Path](https://docs.ros.org/en/lunar/api/nav_msgs/html/msg/Path.html))

+

+## Node Creation + Running Tests

+

+To run this node insert the following statement in the [planning.launch](../../code/planning/launch/planning.launch) file:

+

+```xml

+

+

+

+

+```

+

+The motion planning node listens to the following debugging topics:

+

+- `/paf/hero/Spawn_car`: Can spawn a car on the first straight in the dev environment, if this message is manually published.

diff --git a/doc/research/paf24/general/current_state.md b/doc/research/paf24/general/current_state.md

new file mode 100755

index 00000000..bffc2432

--- /dev/null

+++ b/doc/research/paf24/general/current_state.md

@@ -0,0 +1,183 @@

+# Current state of the simulation

+

+**Summary:** The current state of the simulation is assessed by doing three runs of 20 mins (real world time), where all mistakes or anomalies are written down.

+

+- [Goal](#goal)

+- [Methodology](#methodology)

+- [Observed Errors Grouped by Domains](#observed-errors-grouped-by-domains)

+ - [Infrastructure](#infrastructure)

+ - [Testing and Validation](#testing-and-validation)

+ - [Perception](#perception)

+ - [Localization and Mapping](#localization-and-mapping)

+ - [Decision-Making](#decision-making)

+ - [Path Planning](#path-planning)

+ - [Control](#control)

+- [Raw notes](#raw-notes)

+ - [Run 1](#run-1)

+ - [Run 2](#run-2)

+ - [Run 3](#run-3)

+

+## Goal

+

+In order to understand the current state of the agent, it is crucial to assess the status quo and note the challenges it is faced with.

+

+## Methodology

+

+This assessment was done by three leaderboard runs in the CARLA simulator with the handover-state for PAF24. While doing so, all mistakes made by the agent have been noted, as well as possible anomalies occurring during the inspection.

+After the review, the mistakes have been grouped by the roles defined in the project in order to make it easier to address the challenges in the respective domains. **Note:** Some mistakes overlap and communication is key when tackling these issues.

+

+## Observed Errors Grouped by Domains

+

+### Infrastructure

+

+These issues relate to foundational aspects of the simulation environment and underlying software stability:

+

+- **Simulator Performance Degradation:**

+ - Simulation slows down over time (from .33 to .29 rate), potentially impacting reaction times and sensor data processing.

+- **Vehicle Despawning:**

+ - Random despawning of cars and potential timeout for stuck vehicles may interfere with the agent’s perception and response.

+

+---

+

+### Testing and Validation

+

+These errors highlight the gaps in the testing and validation process, particularly areas that may need further testing to ensure proper functioning in the real environment:

+

+- **Consistency in Object Detection:**

+ - Image segmentation flickering (e.g., police car with indicators), suggesting inadequate validation for dynamic objects with flashing lights.

+- **Vision Node Stability:**

+ - Vision node appears to freeze occasionally, indicating possible untested scenarios or bugs in the perception pipeline.

+- **Unrealistic Emergency Braking and Recovery Testing:**

+ - Unstable lane holding and recovery, resulting in inappropriate emergency braking maneuvers, suggests insufficient validation in complex recovery scenarios.

+- **Misclassification of Tree Trunks:**

+ - Trees being detected as cars, indicating the need for validation of object detection in diverse environmental conditions.

+

+---

+

+### Perception

+

+Errors within perception involve how the agent senses and understands its surroundings:

+

+- **Object Misclassification and Collision:**

+ - Tree trunks mistakenly detected as cars.

+ - Crashes into bikers and parked cars, suggesting perception failures in identifying and avoiding static and moving obstacles.

+- **Segmentation and Detection Instability:**

+ - Vision node freezing.

+ - Flickering segmentation for objects like police cars with indicators.

+- **Lane Detection and Holding Errors:**

+ - Difficulty in stable lane holding, leading to unexpected lane deviations and emergency braking.

+ - Misinterpretation of open car doors, causing lane intrusions without sufficient clearance.

+

+---

+

+### Localization and Mapping

+

+Issues with localization and mapping involve understanding and positioning within the environment:

+

+- **Positioning Errors in Turns:**

+ - Turns are too wide, leading the agent onto the walkway, indicating potential localization issues in tight maneuvers.

+- **Lane Holding and Position Drift:**

+ - Unstable lane holding with constant left and right drifting suggests potential mapping or localization inaccuracies.

+

+---

+

+### Decision-Making

+

+Errors in decision-making relate to the agent's ability to make appropriate choices in response to various scenarios:

+

+- **Right of Way Violations:**

+ - Fails to yield to oncoming traffic when turning left and when merging into traffic.

+ - Ignores open car doors when passing parked cars, causing dangerous close passes.

+- **Erroneous Stopping and Acceleration:**

+ - Stops unnecessarily at green lights and struggles to resume smoothly after stopping.

+ - Abrupt stopping and starting at green lights, potentially due to aggressive speed control.

+- **Repeated Mistakes in Overtaking and Lane Changes:**

+ - Treats temporary parked cars as regular vehicles to overtake without checking oncoming traffic, leading to unsafe lane changes.

+

+---

+

+### Path Planning

+

+Path planning issues include errors in determining the correct and safest path:

+

+- **Incorrect Overtaking Paths:**

+ - Attempts to overtake trees and temporary parked cars without considering oncoming traffic, showing flaws in path generation.

+- **Wide Turning Paths:**

+ - Takes overly wide turns that lead to walkway intrusions.

+- **Aggressive Lane Changes:**

+ - Lane change planning is overly aggressive, causing the vehicle to abruptly veer, triggering emergency stops to avoid collisions.

+

+---

+

+### Control

+

+Control-related issues concern the vehicle’s execution of planned actions, like maintaining speed and stability:

+

+- **Abrupt and Aggressive Speed Control:**

+ - Speed controller is too aggressive when accelerating from green lights, leading to abrupt stopping and starting.

+- **Instability in Lane Holding:**

+ - Inconsistent lane holding, particularly after getting unstuck, results in unexpected deviations onto walkways.

+- **Inconsistent Recovery Behavior:**

+ - Repeatedly gets stuck in various situations (e.g., speed limit signs or temporary parked cars) and fails to recover smoothly, indicating control issues in re-engaging the driving path.

+

+---

+

+## Raw notes

+

+Here are the raw notes in case misunderstandings have been made when grouping the mistakes

+

+### Run 1

+

+- Scared to get out of parking spot

+- lane not held causing problems when avoiding open car door

+- stopping for no apparent reason

+- does not keep lane (going left and right)

+- driving into still standing car at red light

+- impatient when waiting for light to turn green (after the crash, going back and forth)

+- abrupt stopping and going when light turns green without reason → speed controller too aggressive?

+- Problems to keep lane is causing emergency(?) brake maneuvers

+- vision node seems to be frozen ?

+- Detects bikers, crashes into them nonetheless

+- lane change very aggressive causing emergency stop in order to not go into oncoming traffic

+- gets stuck as a result

+- simulator despawns cars randomly

+- left turn does not give way to oncoming traffic when seeing them

+- does the turn too wide, gets onto walkway

+- simulation gets slower as time progresses, started at .33 rate, now at .29

+- gets stuck in front of speed limit sign after doing turn too wide

+- gets unstuck, lane holding too aggressive goes onto walkway again (integrator windup while being stuck?)

+- gets stuck again (→ unstuck behavior bad)

+- when getting unstuck, merges onto street without giving way to traffic on the road

+- drives into oncoming traffic, traffic on the same lane overtakes on the right side and does not stop

+- really stuck now

+

+### Run 2

+

+- merges without giving way to traffic

+- does not respect open car door

+- crashes into car in front when going after stop at red light

+- stops at green light

+- crashes into bikers

+- kid runs onto street, agent crashes into oncoming traffic, gets stuck

+- nudges away from the car it crashed into

+- is now free but does not move

+- crashes again

+- police car with indicators on standing on the side is crashed into

+- image segmentation for police car seems to be flickering

+- tree trunk has bounding box (are trees detected as cars?)

+

+### Run 3

+

+- does not give way when exiting a parking spot

+- LIDAR detects floor

+- trajectory for overtaking is wrong / no overtake needed

+- stops without reason

+- tries to "overtake" tree (detects tree as car)

+- playback ratio likely temperature dependent

+- after emergency brake stops too long

+- left turn doesn't give way to oncoming traffic

+- recovery leads to oncoming traffic (left turn situation maybe doesn't recognize street?) 9 min

+- temporary parked car with indicators on counts as normal overtake (does not check oncoming traffic)

+- temporary parked car with indicators is the crux

+- Despawn time of cars ? Cars despawn when stuck → over time limit ?

+- Trajectory correctly generated, just too deep in the mistakes

diff --git a/doc/research/paf24/general/driving_score.md b/doc/research/paf24/general/driving_score.md

new file mode 100644

index 00000000..4eaeea7e

--- /dev/null

+++ b/doc/research/paf24/general/driving_score.md

@@ -0,0 +1,82 @@

+# Driving Score Computation

+

+**Summary:** The Driving score is the main performance metric of the agent and therefore has to be examined.

+

+- [A Starting Point for Metrics and Measurements](#a-starting-point-for-metrics-and-measurements)

+- [Driving score](#driving-score)

+- [Route completion](#route-completion)

+- [Infraction penalty](#infraction-penalty)

+- [Infractions](#infractions)

+- [Off-road driving](#off-road-driving)

+- [Additional Events](#additional-events)

+- [Sources](#sources)

+

+## A Starting Point for Metrics and Measurements

+

+The CARLA Leaderboard sets a public example for evaluation and comparison.

+The driving proficiency of an agent can be characterized by multiple metrics. For this leaderboard, the CARLA team selected a set of metrics that help understand different aspects of driving.

+

+## Driving score

+

+$\frac{1}{N}\sum_{i=1}^{N} R_i P_i$

+

+- The main metric of the leaderboard, serving as an aggregate of the average route completion and the number of traffic infractions. Here $N$ stands for the number of routes, $R_i$ is the percentage of completion of the $i$-th route, and $P_i$ is the infraction penalty of the $i$-th route.

+

+## Route completion

+

+$\frac{1}{N}\sum_{i=1}^{N} R_i$

+

+- Percentage of route distance completed by an agent, averaged across $N$ routes.

+

+## Infraction penalty

+

+$\prod_j^{ped, veh, ... stop} (p_j^i)^{n_{infractions}}$

+

+- Aggregates the number of infractions triggered by an agent as a geometric series. Agents start with an ideal 1.0 base score, which is reduced by a penalty coefficient for every instance of these.

+

+## Infractions

+

+The CARLA leaderboard offers individual metrics for a series of infractions. Each of these has a penalty coefficient that will be applied every time it happens. Ordered by severity, the infractions are the following.

+

+- Collisions with pedestrians $0.50$

+- Collisions with other vehicles $0.60$

+- Collisions with static elements $0.65$

+- Running a red light $0.70$

+- Running a stop sign $0.80$

+

+Some scenarios feature behaviors that can block the ego-vehicle indefinitely. These scenarios will have a timeout of 4 minutes after which the ego-vehicle will be released to continue the route. However, a penalty is applied when the time limit is breached:

+

+- Scenario Timeout $0.70$

+

+The agent is expected to maintain a minimum speed in keeping with nearby traffic. The agent’s speed will be compared with the speed of nearby vehicles. Failure to maintain a suitable speed will result in a penalty.

+The penalty applied is dependent on the magnitude of the speed difference, up to the following value:

+

+- Failure to maintain speed $0.70$

+

+The agent should yield to emergency vehicles coming from behind. Failure to allow the emergency vehicle to pass will incur a penalty:

+

+- Failure to yield to emergency vehicle $0.70$

+

+Besides these, there is one additional infraction which has no coefficient, and instead affects the computation of route completion $(R_i)$.

+

+## Off-road driving

+

+If an agent drives off-road, that percentage of the route will not be considered towards the computation of the route completion score.
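
The score computation described in the sections above can be sketched as a short worked example. The coefficients are the ones listed in this document; the function names, dictionary keys and the two sample routes are made up for illustration. The speed-dependent penalty is left out because its value varies up to $0.70$ and is therefore not a fixed coefficient.

```python
from math import prod

# Fixed penalty coefficients as listed in this document.
# The dictionary keys are illustrative names, not leaderboard identifiers.
PENALTY = {
    "pedestrian_collision": 0.50,
    "vehicle_collision": 0.60,
    "static_collision": 0.65,
    "red_light": 0.70,
    "stop_sign": 0.80,
    "scenario_timeout": 0.70,
    "yield_emergency": 0.70,
}


def infraction_penalty(counts):
    """P_i: product over infraction types of coefficient ** number of infractions."""
    return prod(PENALTY[name] ** n for name, n in counts.items())


def driving_score(routes):
    """Mean of R_i * P_i, where routes is a list of (completion in percent, counts)."""
    return sum(r * infraction_penalty(c) for r, c in routes) / len(routes)


# Two hypothetical routes: one flawless, one with a collision and two red lights.
routes = [
    (100.0, {}),
    (80.0, {"vehicle_collision": 1, "red_light": 2}),
]

print(round(infraction_penalty(routes[1][1]), 3))  # 0.6 * 0.7**2 = 0.294
print(round(driving_score(routes), 2))             # (100 + 80 * 0.294) / 2 = 61.76
```

Because the penalties multiply, a few infractions on an otherwise completed route reduce the score far more than a slightly shorter completed distance would.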

+

+## Additional Events

+

+Some events will interrupt the simulation, preventing the agent from continuing.

+

+- Route deviation: If an agent deviates more than $30$ meters from the assigned route.

+- Agent blocked: If an agent is blocked in traffic without taking any actions for $180$ simulation seconds.

+- Simulation timeout: If no client-server communication can be established in $60$ seconds.

+- Route timeout: This timeout is triggered if the simulation of a route takes more than the allowed time. This allowed time is computed by multiplying the route distance in meters by a factor of $0.8$.

+

+## Sources

+

+- [Alpha Drive Carla Leaderboard Case Study](https://alphadrive.ai/industries/automotive/carla-leaderboard-case-study/)

+- [Carla Leaderboard](https://leaderboard.carla.org/)

diff --git a/doc/research/paf24/general/leaderboard_summary.md b/doc/research/paf24/general/leaderboard_summary.md

new file mode 100644

index 00000000..193095ca

--- /dev/null

+++ b/doc/research/paf24/general/leaderboard_summary.md

@@ -0,0 +1,131 @@

+# Leaderboard summary

+

+**Summary:** This document summarizes general information about the leaderboard as a quick, condensed overview.

+

+- [General Information](#general-information)

+- [Traffic Scenarios](#traffic-scenarios)

+ - [Generic](#generic)

+ - [Control Loss](#control-loss)

+ - [Traffic negotiation](#traffic-negotiation)

+ - [Highway](#highway)

+ - [Obstacle avoidance](#obstacle-avoidance)

+ - [Braking and lane changing](#braking-and-lane-changing)

+ - [Parking](#parking)

+- [Available Sensors](#available-sensors)

+

+## General Information

+

+The CARLA Leaderboard includes a variety of scenarios to test autonomous driving models in realistic urban environments. This document provides a quick overview of all possible scenarios and available sensors.

+The leaderboard offers a driving score metric based on infractions happening during scenarios.

+

+[#366](https://github.com/una-auxme/paf/issues/366) provides a more in-depth look at how this score is calculated.

+

+Each time an infraction takes place several details are recorded and appended to a list specific to the infraction type in question. Besides scenario and score handling, the leaderboard also provides a plethora of sensors to get data from.

+

+The leaderboard provides predefined routes with a starting and destination point. The route description can contain GPS style coordinates, map coordinates or route instructions.

+

+Two participation modalities are offered, "Sensors" and "Map". "Sensors" only offers the sensors listed down below while "Map" offers HD map data in addition to all the sensors.

+

+## Traffic Scenarios

+

+- ### **Generic:**

+

+ - Traffic lights

+ - Signs (stop, speed limit, yield)

+

+- ### **Control Loss:**

+

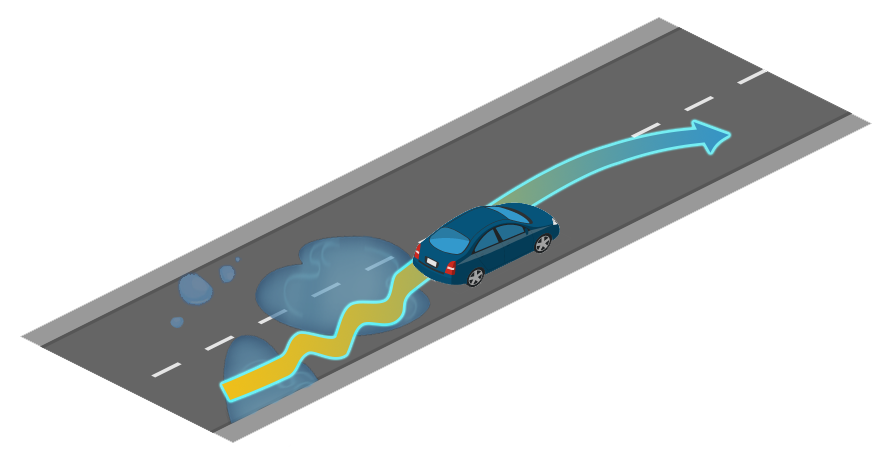

+ - The ego-vehicle loses control due to bad conditions on the road and it must recover, coming back to its original lane.

+

+

+- ### **Traffic negotiation:**

+

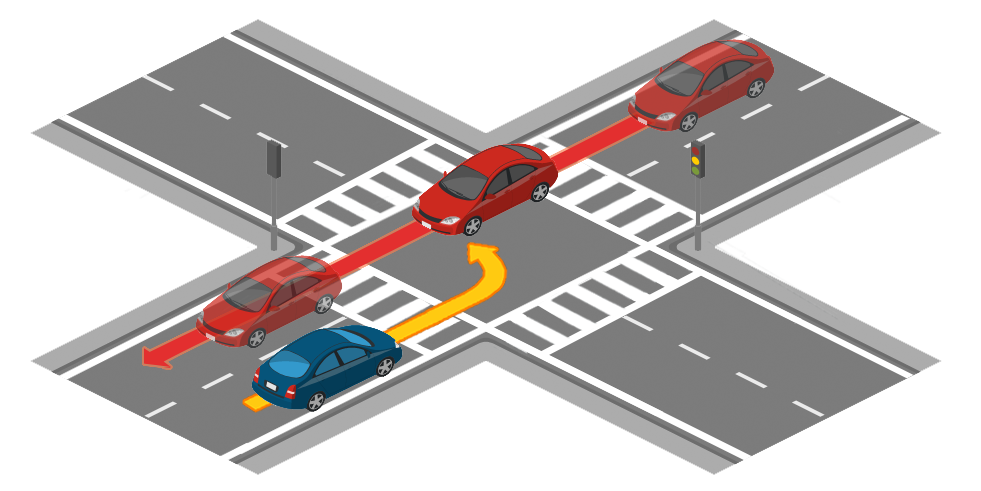

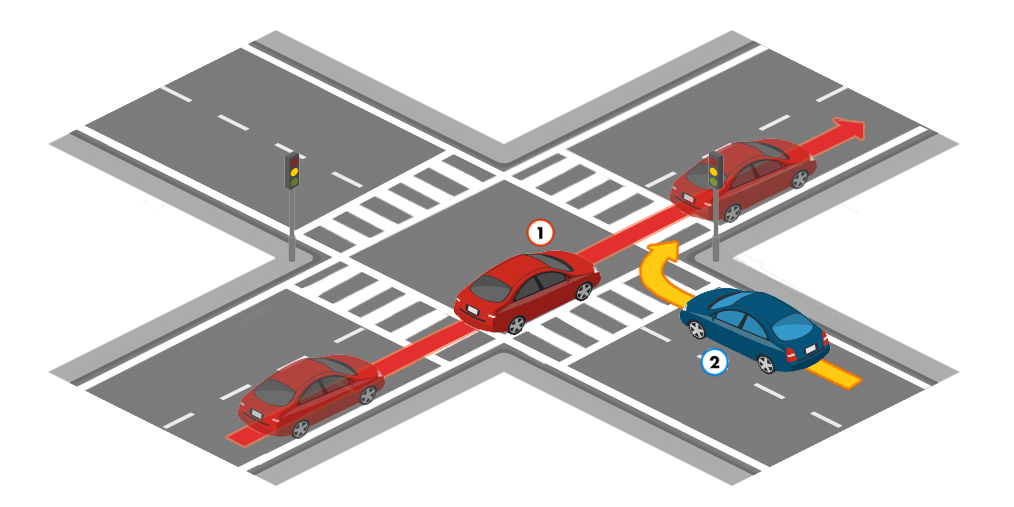

+ - The ego-vehicle is performing an unprotected left turn at an intersection, yielding to oncoming traffic.

+

+ - The ego-vehicle is performing a right turn at an intersection, yielding to crossing traffic.

+

+ - The ego-vehicle needs to negotiate with other vehicles to cross an unsignalized intersection.

+

+ - The ego-vehicle is going straight at an intersection but a crossing vehicle runs a red light, forcing the ego-vehicle to avoid the collision.

+

+ - The ego-vehicle needs to perform a turn at an intersection yielding to bicycles crossing from either the left or right.

+

+

+- ### **Highway:**

+

+ - The ego-vehicle merges into moving highway traffic from a highway on-ramp.

+

+ - The ego-vehicle encounters a vehicle merging into its lane from a highway on-ramp.

+

+ - The ego-vehicle encounters a vehicle cutting into its lane from a lane of static traffic.

+

+ - The ego-vehicle must cross a lane of moving traffic to exit the highway at an off-ramp.

+

+ - The ego-vehicle is approached by an emergency vehicle coming from behind.

+

+

+- ### **Obstacle avoidance:**

+

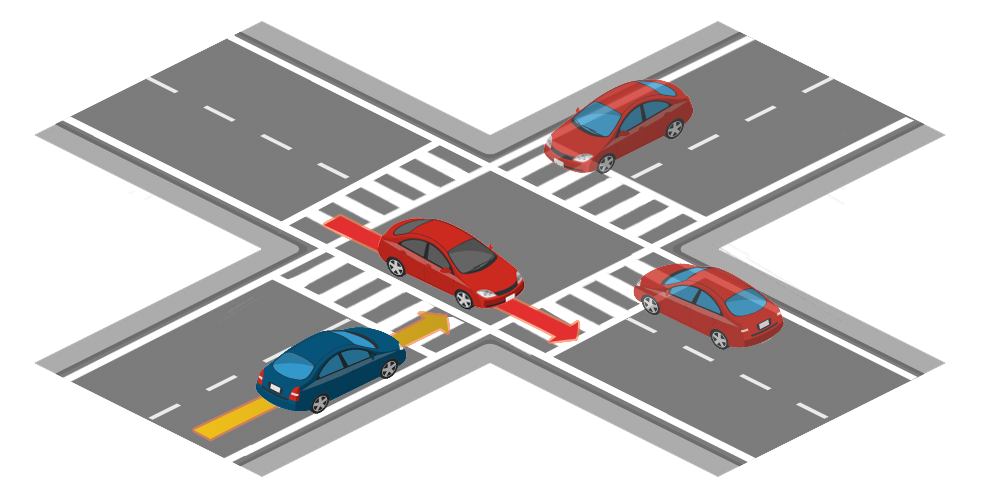

+ - The ego-vehicle encounters an obstacle blocking the lane and must perform a lane change into traffic moving in the same or opposite direction to avoid it. The obstacle may be a construction site, an accident or a parked vehicle.

+

+ - The ego-vehicle encounters a parked vehicle opening a door into its lane and must maneuver to avoid it.

+

+ - The ego-vehicle encounters a slow moving hazard (e.g. bicycles) blocking part of the lane. The ego-vehicle must brake or maneuver next to a lane of traffic moving in the same or opposite direction to avoid it.

+

+ - The ego-vehicle encounters an oncoming vehicle invading its lane on a bend due to an obstacle.

+

+

+- ### **Braking and lane changing:**

+

+ - The leading vehicle decelerates suddenly due to an obstacle and the ego-vehicle must perform an emergency brake or an avoidance maneuver.

+

+ - The ego-vehicle encounters an obstacle / unexpected entity on the road and must perform an emergency brake or an avoidance maneuver.

+

+ - The ego-vehicle encounters a pedestrian emerging from behind a parked vehicle and advancing into the lane.

+

+ - While performing a maneuver, the ego-vehicle encounters an obstacle in the road, either a pedestrian or a bicycle, and must perform an emergency brake or an avoidance maneuver.

+

+ - While performing a maneuver, the ego-vehicle encounters a stopped vehicle in the road and must perform an emergency brake or an avoidance maneuver.

+

+ - The ego-vehicle must slow down or brake to allow a parked vehicle exiting a parallel parking bay to cut in front.

+

+

+- ### **Parking:**

+

+ - The ego-vehicle must exit a parallel parking bay into a flow of traffic.

+

+

+## Available Sensors

+

+- **[GNSS](https://carla.readthedocs.io/en/latest/ref_sensors/#gnss-sensor)**

+ - GPS sensor returning geo location data.

+- **[IMU](https://carla.readthedocs.io/en/latest/ref_sensors/#imu-sensor)**

+ - 6-axis inertial measurement unit.

+- **[LIDAR](https://carla.readthedocs.io/en/latest/ref_sensors/#lidar-sensor)**

+ - Laser to detect obstacles.

+- **[RADAR](https://carla.readthedocs.io/en/latest/ref_sensors/#radar-sensor)**

+ - Long-range RADAR (up to 100 meters).

+- **[RGB CAMERA](https://carla.readthedocs.io/en/latest/ref_sensors/#rgb-camera)**

+ - Regular camera for image capture.

+- **[COLLISION DETECTOR](https://carla.readthedocs.io/en/latest/ref_sensors/#collision-detector)**

+ - Used to detect collisions with other actors.

+- **[DEPTH CAMERA](https://carla.readthedocs.io/en/latest/ref_sensors/#depth-camera)**

+ - Provides a distance-coded picture to create a depth map of the scene.

+- **[LANE INVASION DETECTOR](https://carla.readthedocs.io/en/latest/ref_sensors/#lane-invasion-detector)**

+ - Uses road data to detect when the vehicle crosses a lane marking.

+- **[OBSTACLE DETECTOR](https://carla.readthedocs.io/en/latest/ref_sensors/#obstacle-detector)**

+ - Detects obstacles in front of the vehicle within a capsular shape. It is geometry-based, so obstacles must exist as geometry in the scene (seems like a pure simulation sensor).

+- **[RSS SENSOR](https://carla.readthedocs.io/en/latest/ref_sensors/#rss-sensor)**

+ - Integrates the Responsibility-Sensitive Safety (RSS) model in CARLA. Basically a framework of mathematical guidelines on how to react in various scenarios. Disabled by default.

+- **[SEMANTIC LIDAR](https://carla.readthedocs.io/en/latest/ref_sensors/#semantic-lidar-sensor)**

+ - Similar to LIDAR with a different data structure/focus.

+- **[SEMANTIC SEGMENTATION CAMERA](https://carla.readthedocs.io/en/latest/ref_sensors/#semantic-segmentation-camera)**

+ - Classifies objects in sight by tag (seems like a pure simulation sensor).

+- **[INSTANCE SEGMENTATION CAMERA](https://carla.readthedocs.io/en/latest/ref_sensors/#instance-segmentation-camera)**

+ - Classifies every object by class and instance ID (seems like a pure simulation sensor).

+- **[DVS CAMERA](https://carla.readthedocs.io/en/latest/ref_sensors/#dvs-camera)**

+ - Different kind of camera that works in high-speed scenarios. Pixels asynchronously respond to local changes in brightness instead of globally by shutter.

+- **[OPTICAL FLOW CAMERA](https://carla.readthedocs.io/en/latest/ref_sensors/#optical-flow-camera)**

+ - Captures motion (velocity per pixel) perceived from the point of view of the camera.

+- **[V2X SENSOR](https://carla.readthedocs.io/en/latest/ref_sensors/#v2x-sensor)**

+ - Vehicle-to-everything sensor that allows the vehicle to communicate with other vehicles and elements in the environment. More like a concept for the future, not widely implemented yet.

diff --git a/doc/research/paf24/general/old_research_overview.md b/doc/research/paf24/general/old_research_overview.md

new file mode 100644

index 00000000..db2627b9

--- /dev/null

+++ b/doc/research/paf24/general/old_research_overview.md

@@ -0,0 +1,57 @@

+# Research Summary

+

+**Summary:** The research of the previous groups is condensed into this file to make it an entry point for this year's project.

+

+- [Research and Resources](#research-and-resources)

+- [Acting and Control Modules](#acting-and-control-modules)

+- [Planning and Trajectory Generation](#planning-and-trajectory-generation)

+- [State Machine for Decision-Making](#state-machine-for-decision-making)

+- [OpenDrive Integration and Navigation Data](#opendrive-integration-and-navigation-data)

+

+## Research and Resources

+

+- This section provides an extensive foundation for the autonomous vehicle project by consolidating previous research from **PAF22** and **PAF23**.

+- **PAF22**: Established core methods for autonomous vehicle control and perception, including traffic light detection and emergency braking features. It also set up the base components of the CARLA simulator integration, essential sensor configurations, and data processing pipelines.

+- **PAF23**: Enhanced lane-change algorithms and expanded intersection-handling strategies. This project introduced a more robust approach to decision-making at intersections, factoring in pedestrian presence, oncoming traffic, and improved signal detection. Additionally, **PAF23** refined vehicle

+behavior for more fluid lane-change maneuvers, optimizing control responses to avoid obstacles and maintain lane positioning during highway merging and overtaking scenarios.

+- The resources also include CARLA-specific tools such as the CARLA Leaderboard and ROS Bridge integration, which link CARLA’s simulation environment to the Robot Operating System (ROS). Detailed references to CARLA’s sensor suite are provided, covering RGB cameras, LIDAR, radar, GNSS, and IMU

+sensors essential for perception and control.

+

+## Acting and Control Modules

+

+- The **acting module** focuses on the vehicle’s control actions, including throttle, steering, and braking.

+- **Core Controllers**: Contains controllers like the **PID controller** for longitudinal (speed) control and **Pure Pursuit** and **Stanley controllers** for lateral (steering) control. These controllers work in unison to achieve precise vehicle handling, especially on turns and at varying speeds.

+- **PAF22 Contributions**: Implemented the PID-based longitudinal controller and integrated the Pure Pursuit controller for basic trajectory following, setting a solid foundation for steering and throttle control. PAF22 also laid out the preliminary **emergency braking** logic, designed to override

+other controls in hazardous situations.

+- **PAF23 Contributions**: Introduced refinements in lateral control, including the adaptive Stanley controller, which adjusts steering sensitivity based on vehicle speed to maintain a smooth trajectory. **PAF23** also optimized the emergency braking logic to respond more quickly to obstacles, with

+improvements in lane-changing safety.

+- **Sensor Integration**: The acting module subscribes to **navigation and sensor data** topics to remain updated on vehicle position and velocity, integrating sensor feedback for real-time control adjustments.
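
The controller split described above can be illustrated with a minimal sketch. It uses the textbook forms of a PID controller, the Pure Pursuit steering law, and the Stanley steering law; it is not the PAF22/PAF23 implementation. All names, gains, and the `eps` softening term are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook PID controller for longitudinal (speed) control.

    Gains are illustrative assumptions, not the project's tuning.
    """

    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, target: float, measured: float, dt: float) -> float:
        """Return the control output for one time step (dt must be > 0)."""
        error = target - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def pure_pursuit_steering(alpha: float, lookahead: float, wheelbase: float) -> float:
    """Pure Pursuit: steer along an arc through a look-ahead point.

    alpha is the angle (rad) between the vehicle heading and the line
    to the look-ahead point; lookahead is the distance to that point (m).
    """
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


def stanley_steering(
    heading_error: float,
    cross_track_error: float,
    speed: float,
    k: float = 1.0,
    eps: float = 1e-3,
) -> float:
    """Stanley: heading error plus a speed-scaled cross-track correction.

    Dividing by speed makes the correction gentler at high speed;
    eps (assumed value) avoids division by zero at standstill.
    """
    return heading_error + math.atan2(k * cross_track_error, speed + eps)
```

In the Stanley law, the same cross-track error produces a smaller steering correction at higher speed, which is one common way to obtain the speed-dependent steering sensitivity mentioned above.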

+

+## Planning and Trajectory Generation

+

+- The **planning module** is critical for determining safe, efficient routes by combining **global and local path planning** techniques.

+- **Global Planning**: Uses the **CommonRoad route planner** from TUM, creating a high-level path based on predefined waypoints. **PAF22** initially set up this planner, while **PAF23** added finer adjustments for lane selection and obstacle navigation.

+- **Local Planning**: Tailored for dynamic obstacles, this component focuses on immediate adjustments to the vehicle’s path, particularly useful in urban environments with unpredictable elements.

+Local planning includes **trajectory tracking** using Pure Pursuit and Stanley controllers to maintain a steady path.

+- **PAF23 Enhancements**: Improved **collision avoidance** algorithms and added real-time updates to the trajectory based on sensor data. The local planner now adapts quickly to lane-change requests or route deviations due to traffic, creating a seamless flow between global and local path planning.

+Furthermore, the integration of **behavior trees** has been researched: they offer some advantages in computing power and explainability, but have drawbacks in uncertain situations and complex environments.

+

+## State Machine for Decision-Making

+

+- This modular state machine handles various driving behaviors, including **lane changes**, **intersections**, and **traffic light responses**.

+- **Core State Machines**: The **driving state machine** manages normal vehicle navigation, controlling target speed and ensuring lane compliance. The **lane-change state machine** makes safe decisions based on lane availability and traffic,

+while the **intersection state machine** manages vehicle approach, stop, and turn behaviors at intersections.

+

+- **PAF22 Contributions**: Developed the base decision-making states, enabling lane following, simple lane-change maneuvers, and basic intersection handling.

+- **PAF23 Contributions**: Significantly enhanced the state machine by adding specialized states for complex maneuvers, like responding to oncoming traffic at intersections, merging onto highways, and making priority-based decisions at roundabouts.

+The **intersection state machine** now incorporates detailed behaviors for handling left turns, straight passes, and right turns, considering pedestrian zones and cross-traffic. **PAF23** also introduced an adaptive lane-change state,

+which calculates safety based on vehicle speed, distance to adjacent vehicles, and road type.
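
As a rough illustration of how the driving, lane-change, and intersection behaviors could hand over to one another, the following sketch models them as a single small state machine. The state names, the input flags, and the transition rules are hypothetical simplifications, not the states used by PAF22 or PAF23.

```python
from enum import Enum, auto


class State(Enum):
    """Hypothetical states, loosely following the behaviors listed above."""

    DRIVING = auto()
    LANE_CHANGE = auto()
    INTERSECTION_APPROACH = auto()
    INTERSECTION_STOP = auto()
    INTERSECTION_TURN = auto()


def next_state(
    state: State,
    *,
    intersection_ahead: bool = False,
    must_stop: bool = False,
    lane_change_requested: bool = False,
    adjacent_lane_free: bool = False,
    maneuver_done: bool = False,
) -> State:
    """Return the follow-up state for one decision step."""
    if state is State.DRIVING:
        if intersection_ahead:
            return State.INTERSECTION_APPROACH
        # Only change lanes when it was requested and the target lane is free.
        if lane_change_requested and adjacent_lane_free:
            return State.LANE_CHANGE
        return State.DRIVING
    if state is State.LANE_CHANGE:
        return State.DRIVING if maneuver_done else State.LANE_CHANGE
    if state in (State.INTERSECTION_APPROACH, State.INTERSECTION_STOP):
        # Wait while a red light or cross-traffic requires stopping.
        return State.INTERSECTION_STOP if must_stop else State.INTERSECTION_TURN
    # Remaining case: INTERSECTION_TURN returns to normal driving when done.
    return State.DRIVING if maneuver_done else State.INTERSECTION_TURN
```

Keeping the transition logic in one pure function makes each decision easy to test in isolation; a real implementation would derive the flags from perception and planning data.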

+

+## OpenDrive Integration and Navigation Data

+

+- **OpenDrive** files provide a structured road network description, detailing lanes, road segments, intersections, and traffic signals. The navigation data is published in CARLA as ROS topics containing GPS/world coordinates and route instructions.

+- **PAF22 Setup**: Established OpenDrive as the core format for map data, integrating it with the CARLA simulator. Initial work involved parsing road and lane data to create accurate trajectories.

+- **PAF23 Enhancements**: Improved the parsing of OpenDrive files, focusing on high-level map data relevant to the vehicle's route, such as signal placements and lane restrictions. This project also optimized the navigation data integration with ROS,

+ensuring the vehicle receives consistent updates on its location relative to the route, intersections, and nearby obstacles.

+- **Navigation Data Structure**: The system uses navigation data points, including **GPS coordinates, world coordinates, and high-level route instructions** (e.g., turn left, change lanes) to guide the vehicle. Each point is matched with a **road option command**,

+instructing the vehicle on how to proceed at specific waypoints.
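
The navigation data structure described above could be represented as follows. This is a sketch under assumptions: the `RoutePoint` type, its field names, and the `upcoming_maneuvers` helper are hypothetical, and the enum values are arbitrary placeholders rather than the numeric codes CARLA uses.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Tuple


class RoadOption(Enum):
    """Illustrative road option commands (values are NOT CARLA's codes)."""

    LANE_FOLLOW = auto()
    LEFT = auto()
    RIGHT = auto()
    STRAIGHT = auto()
    CHANGE_LANE_LEFT = auto()
    CHANGE_LANE_RIGHT = auto()


@dataclass(frozen=True)
class RoutePoint:
    """One navigation data point: position plus the command to execute there."""

    world_xyz: Tuple[float, float, float]  # world coordinates in metres
    gps: Tuple[float, float, float]  # latitude, longitude, altitude
    command: RoadOption


def upcoming_maneuvers(route: List[RoutePoint]) -> List[Tuple[int, RoadOption]]:
    """Return (index, command) for every point that is not plain lane following."""
    return [
        (index, point.command)
        for index, point in enumerate(route)
        if point.command is not RoadOption.LANE_FOLLOW
    ]


# Usage example with made-up coordinates.
example_route = [
    RoutePoint((0.0, 0.0, 0.0), (48.0, 11.0, 500.0), RoadOption.LANE_FOLLOW),
    RoutePoint((50.0, 0.0, 0.0), (48.0005, 11.0, 500.0), RoadOption.LEFT),
    RoutePoint((50.0, 40.0, 0.0), (48.0005, 11.0004, 500.0), RoadOption.CHANGE_LANE_RIGHT),
]
maneuvers = upcoming_maneuvers(example_route)
```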

diff --git a/doc/research/paf24/general/rviz.md b/doc/research/paf24/general/rviz.md

new file mode 100644

index 00000000..f6cbd0a7

--- /dev/null

+++ b/doc/research/paf24/general/rviz.md

@@ -0,0 +1,78 @@

+# Research about RViz

+

+**Summary:** This page contains information on how to use RViz and how it is integrated into the project.

+

+- [General overview](#general-overview)

+- [Displays panel](#displays-panel)

+ - [Display types](#display-types)

+ - [Camera](#camera)

+ - [Image](#image)

+ - [PointCloud(2)](#pointcloud2)

+ - [Path](#path)

+- [RViz configuration](#rviz-configuration)

+- [Sources](#sources)

+

+## General overview

+

+Description from the git repository: **"rviz is a 3D visualizer for the Robot Operating System (ROS) framework."**

+

+It can be used to visualize the state of the car in real-time.

+

+- The visualizer always has a 3D View panel in the middle. This is where all 3D data, for example from lidar and radar sensors, is shown.

+- The most important panel is *Displays*. It is used to configure what data is displayed.

+- All other panels are available under *Panels* in the menu bar.