diff --git a/doc/content/squeezed/_index.md b/doc/content/squeezed/_index.md

new file mode 100644

index 00000000000..45985d6499f

--- /dev/null

+++ b/doc/content/squeezed/_index.md

@@ -0,0 +1,17 @@

++++

+title = "Squeezed"

+weight = 50

++++

+

+Squeezed is the XAPI Toolstack's host memory manager (aka balloon driver).

+Squeezed uses ballooning to move memory between running VMs, to avoid wasting

+host memory.

+

+Principles

+----------

+

+1. Avoid wasting host memory: unused memory should be put to use by returning

+ it to VMs.

+2. Memory should be shared in proportion to the configured policy.

+3. Operate entirely at the level of domains (not VMs), and be independent of

+ Xen toolstack.

diff --git a/doc/content/squeezed/architecture/index.md b/doc/content/squeezed/architecture/index.md

new file mode 100644

index 00000000000..6d85730ae30

--- /dev/null

+++ b/doc/content/squeezed/architecture/index.md

@@ -0,0 +1,42 @@

++++

+title = "Architecture"

++++

+

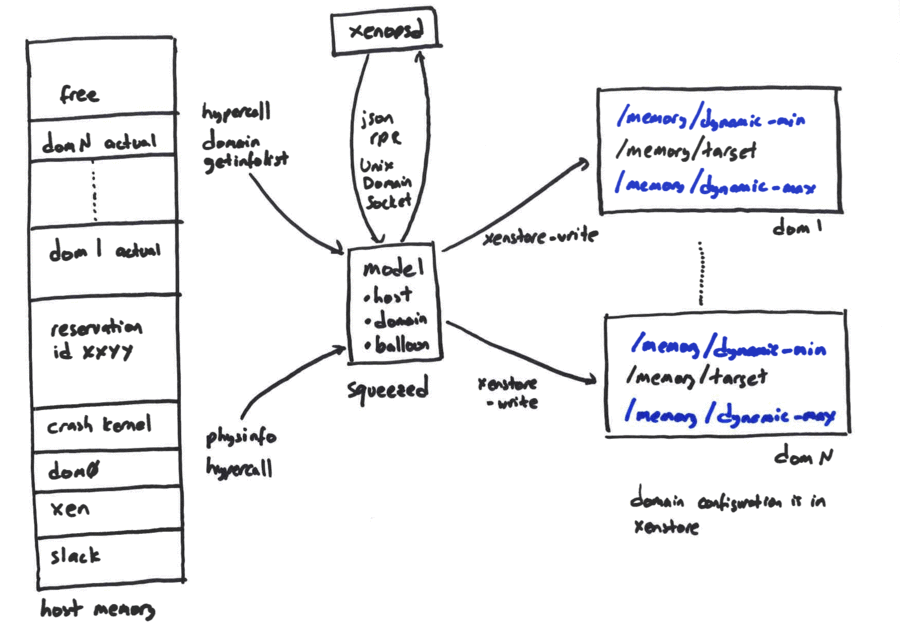

+Squeezed is responsible for managing the memory on a single host. Squeezed

+"balances" memory between VMs according to a policy written to Xenstore.

+

+The following diagram shows the internals of Squeezed:

+

+

+

+At the center of squeezed is an abstract model of a Xen host. The model

+includes:

+

+- The amount of already-used host memory (used by fixed overheads such as Xen

+ and the crash kernel).

+- Per-domain memory policy specifically `dynamic-min` and `dynamic-max` which

+ together describe a range, within which the domain's actual used memory

+ should remain.

+- Per-domain calibration data which allows us to compute the necessary balloon

+ target value to achive a particular memory usage value.

+

+Squeezed is a single-threaded program which receives commands from xenopsd over

+a Unix domain socket. When Xenopsd wishes to start a new VM, squeezed will be

+asked to create a "reservation". Note this is different to the Xen notion of a

+reservation. A squeezed reservation consists of an amount of memory squeezed

+will guarantee to keep free labelled with an id. When Xenopsd later creates the

+domain to notionally use the reservation, the reservation is "transferred" to

+the domain before the domain is built.

+

+Squeezed will also wake up every 30s and attempt to rebalance the memory on a

+host. This is useful to correct imbalances caused by balloon drivers

+temporarily failing to reach their targets. Note that ballooning is

+fundamentally a co-operative process, so squeezed must handle cases where the

+domains refuse to obey commands.

+

+The "output" of squeezed is a list of "actions" which include:

+

+- Set domain x's `memory/target` to a new value.

+- Set the `maxmem` of a domain to a new value (as a hard limit beyond which the

+ domain cannot allocate).

+

diff --git a/doc/content/squeezed/architecture/squeezed.png b/doc/content/squeezed/architecture/squeezed.png

new file mode 100644

index 00000000000..eb26f3eba46

Binary files /dev/null and b/doc/content/squeezed/architecture/squeezed.png differ

diff --git a/doc/content/squeezed/design/calculation.svg b/doc/content/squeezed/design/calculation.svg

new file mode 100644

index 00000000000..0b24ce7beeb

--- /dev/null

+++ b/doc/content/squeezed/design/calculation.svg

@@ -0,0 +1,892 @@

+

+

+

+

diff --git a/ocaml/squeezed/doc/design/figs/fraction.latex b/doc/content/squeezed/design/figs/fraction.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/fraction.latex

rename to doc/content/squeezed/design/figs/fraction.latex

diff --git a/ocaml/squeezed/doc/design/figs/g.latex b/doc/content/squeezed/design/figs/g.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/g.latex

rename to doc/content/squeezed/design/figs/g.latex

diff --git a/ocaml/squeezed/doc/design/figs/hostfreemem.latex b/doc/content/squeezed/design/figs/hostfreemem.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/hostfreemem.latex

rename to doc/content/squeezed/design/figs/hostfreemem.latex

diff --git a/ocaml/squeezed/doc/design/figs/reservation.latex b/doc/content/squeezed/design/figs/reservation.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/reservation.latex

rename to doc/content/squeezed/design/figs/reservation.latex

diff --git a/ocaml/squeezed/doc/design/figs/unused.latex b/doc/content/squeezed/design/figs/unused.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/unused.latex

rename to doc/content/squeezed/design/figs/unused.latex

diff --git a/ocaml/squeezed/doc/design/figs/x.latex b/doc/content/squeezed/design/figs/x.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/x.latex

rename to doc/content/squeezed/design/figs/x.latex

diff --git a/ocaml/squeezed/doc/design/figs/xtotpages.latex b/doc/content/squeezed/design/figs/xtotpages.latex

similarity index 100%

rename from ocaml/squeezed/doc/design/figs/xtotpages.latex

rename to doc/content/squeezed/design/figs/xtotpages.latex

diff --git a/doc/content/squeezed/design/fraction.svg b/doc/content/squeezed/design/fraction.svg

new file mode 100644

index 00000000000..92fbce499f4

--- /dev/null

+++ b/doc/content/squeezed/design/fraction.svg

@@ -0,0 +1,265 @@

+

+

diff --git a/doc/content/squeezed/design/g.svg b/doc/content/squeezed/design/g.svg

new file mode 100644

index 00000000000..5136128f6bd

--- /dev/null

+++ b/doc/content/squeezed/design/g.svg

@@ -0,0 +1,92 @@

+

+

diff --git a/doc/content/squeezed/design/hostfreemem.svg b/doc/content/squeezed/design/hostfreemem.svg

new file mode 100644

index 00000000000..c97fd91ae90

--- /dev/null

+++ b/doc/content/squeezed/design/hostfreemem.svg

@@ -0,0 +1,162 @@

+

+

diff --git a/ocaml/squeezed/doc/design/README.md b/doc/content/squeezed/design/index.md

similarity index 90%

rename from ocaml/squeezed/doc/design/README.md

rename to doc/content/squeezed/design/index.md

index 8b46a0c0009..b1ef3a4cb30 100644

--- a/ocaml/squeezed/doc/design/README.md

+++ b/doc/content/squeezed/design/index.md

@@ -1,40 +1,34 @@

-Squeezed: a host memory ballooning daemon for Xen

-=================================================

++++

+title = "Design"

++++

Squeezed is a single host memory ballooning daemon. It helps by:

-1. allowing VM memory to be adjusted dynamically without having to reboot;

+1. Allowing VM memory to be adjusted dynamically without having to reboot;

and

-

-2. avoiding wasting memory by keeping everything fully utilised, while retaining

+2. Avoiding wasting memory by keeping everything fully utilised, while retaining

the ability to take memory back to start new VMs.

-Squeezed currently includes a simple

-[Ballooning policy](#ballooning-policy)

-which serves as a useful default.

-The policy is written with respect to an abstract

-[Xen memory model](#the-memory-model), which is based

-on a number of

-[assumptions about the environment](#environmental-assumptions),

-for example that most domains have co-operative balloon drivers.

-In theory the policy could be replaced later with something more sophisticated

-(for example see

-[xenballoond](https://github.com/avsm/xen-unstable/blob/master/tools/xenballoon/xenballoond.README)).

-

-The [Toolstack interface](#toolstack-interface) is used by

-[Xenopsd](https://github.com/xapi-project/xenopsd) to free memory

-for starting new VMs.

-Although the only known client is Xenopsd,

-the interface can in theory be used by other clients. Multiple clients

-can safely use the interface at the same time.

-

-The [internal structure](#the-structure-of-the-daemon) consists of

-a single-thread event loop. To see how it works end-to-end, consult

-the [example](#example-operation).

-

-No software is ever perfect; to understand the flaws in Squeezed,

-please consult the

-[list of issues](#issues).

+Squeezed currently includes a simple [Ballooning policy](#ballooning-policy)

+which serves as a useful default. The policy is written with respect to an

+abstract [Xen memory model](#the-memory-model), which is based on a number of

+[assumptions about the environment](#environmental-assumptions), for example

+that most domains have co-operative balloon drivers. In theory the policy could

+be replaced later with something more sophisticated (for example see

+[xenballoond](https://github.com/avsm/xen-unstable/blob/master/tools/xenballoon/

+xenballoond.README)).

+

+The [Toolstack interface](#toolstack-interface) is used by Xenopsd to free

+memory for starting new VMs. Although the only known client is Xenopsd, the

+interface can in theory be used by other clients. Multiple clients can safely

+use the interface at the same time.

+

+The [internal structure](#the-structure-of-the-daemon) consists of a

+single-thread event loop. To see how it works end-to-end, consult the

+[example](#example-operation).

+

+No software is ever perfect; to understand the flaws in Squeezed, please

+consult the [list of issues](#issues).

Environmental assumptions

=========================

@@ -45,11 +39,10 @@ Environmental assumptions

is granted full access to xenstore, enabling it to modify every

domain’s `memory/target`.

-2. The Squeezed daemon calls

- `setmaxmem` in order to cap the amount of memory a domain can use.

- This relies on a patch to

- [xen which allows `maxmem` to be set lower than `totpages`](http://xenbits.xen.org/xapi/xen-3.4.pq.hg?file/c01d38e7092a/max-pages-below-tot-pages).

- See Section [maxmem](#use-of-maxmem) for more information.

+2. The Squeezed daemon calls `setmaxmem` in order to cap the amount of memory

+ a domain can use. This relies on a patch to xen which allows `maxmem` to

+ be set lower than `totpages` See Section [maxmem](#use-of-maxmem) for more

+ information.

3. The Squeezed daemon

assumes that only domains which write `control/feature-balloon` into

@@ -101,7 +94,7 @@ Environmental assumptions

guests from allocating *all* host memory (even

transiently) we guarantee that memory from within these special

ranges is always available. Squeezed operates in

- [two phases](#twophase-section): first causing memory to be freed; and

+ [two phases](#two-phase-target-setting): first causing memory to be freed; and

second causing memory to be allocated.

8. The Squeezed daemon

@@ -126,10 +119,10 @@ internal Squeezed concept and Xen is

completely unaware of it. When the daemon is moving memory between

domains, it always aims to keep

-

+

where *s* is the size of the “slush fund” (currently 9MiB) and

-

+

is the amount corresponding to the *i*th

reservation.

@@ -226,7 +219,7 @@ meanings:

If all balloon drivers are responsive then Squeezed daemon allocates

memory proportionally, so that each domain has the same value of:

-

+

So:

@@ -311,7 +304,7 @@ Note that non-ballooning aware domains will always have

since the domain will not be

instructed to balloon. Since a domain which is being built will have

0 <= `totpages` <= `reservation`, Squeezed computes

-

+

and subtracts this from its model of the host’s free memory, ensuring

that it doesn’t accidentally reallocate this memory for some other

purpose.

@@ -361,7 +354,7 @@ Each iteration of the main loop generates the following actions:

1. Domains which were active but have failed to make progress towards

their target in 5s are declared *inactive*. These

domains then have:

- `maxmem` set to the minimum of `target` and `totpages.

+ `maxmem` set to the minimum of `target` and `totpages`.

2. Domains which were inactive but have started to make progress

towards their target are declared *active*. These

@@ -429,7 +422,7 @@ domain 2) and a host. For a domain, the square box shows its

memory. Note the highlighted state where the host’s free memory is

temporarily exhausted

-

+

In the

initial state (at the top of the diagram), there are two domains, one

@@ -470,7 +463,7 @@ domain `maxmem` value is used to limit memory allocations by the domain.

The rules are:

1. if the domain has never been run and is paused then

- `maxmem` is set to `reservation (reservations were described

+ `maxmem` is set to `reservation` (reservations were described

in the [Toolstack interface](#toolstack-interface) section above);

- these domains are probably still being built and we must let

@@ -513,7 +506,7 @@ computing ideal target values and the third diagram shows the result

after targets have been set and the balloon drivers have

responded.

-

+

The scenario above includes 3 domains (domain 1,

domain 2, domain 3) on a host. Each of the domains has a non-ideal

@@ -532,12 +525,12 @@ which would be freed if we set each of the 3 domain’s

situation we would now have

`x` + `s` + `d1` + `d2` + `d3` free on the host where

`s` is the host slush fund and `x` is completely unallocated. Since we

-always want to keep the host free memory above $s$, we are free to

+always want to keep the host free memory above `s`, we are free to

return `x` + `d1` + `d2` + `d3` to guests. If we

use the default built-in proportional policy then, since all domains

have the same `dynamic-min` and `dynamic-max`, each gets the same

fraction of this free memory which we call `g`:

-

+

For each domain, the ideal balloon target is now

`target` = `dynamic-min` + `g`.

Squeezed does not set all the targets at once: this would allow the

@@ -601,7 +594,7 @@ Issues

removed.

- It seems unnecessarily evil to modify an *inactive*

- domain’s `maxmem` leaving `maxmem` less than `target}``, causing

+ domain’s `maxmem` leaving `maxmem` less than `target`, causing

the guest to attempt allocations forwever. It’s probably neater to

move the `target` at the same time.

diff --git a/doc/content/squeezed/design/reservation.svg b/doc/content/squeezed/design/reservation.svg

new file mode 100644

index 00000000000..d7ac27e4639

--- /dev/null

+++ b/doc/content/squeezed/design/reservation.svg

@@ -0,0 +1,75 @@

+

+

diff --git a/doc/content/squeezed/design/twophase.svg b/doc/content/squeezed/design/twophase.svg

new file mode 100644

index 00000000000..b009fa686c0

--- /dev/null

+++ b/doc/content/squeezed/design/twophase.svg

@@ -0,0 +1,540 @@

+

+

+

+

diff --git a/doc/content/squeezed/design/unused.svg b/doc/content/squeezed/design/unused.svg

new file mode 100644

index 00000000000..e85445d7e0b

--- /dev/null

+++ b/doc/content/squeezed/design/unused.svg

@@ -0,0 +1,199 @@

+

+

diff --git a/doc/content/squeezed/squeezer.md b/doc/content/squeezed/squeezer.md

new file mode 100644

index 00000000000..059d295a532

--- /dev/null

+++ b/doc/content/squeezed/squeezer.md

@@ -0,0 +1,263 @@

+---

+title: Overview of the memory squeezer

+hidden: true

+---

+

+{{% notice warning %}}

+This was converted to markdown from squeezer.tex. It is not clear how much

+of this document is still relevant and/or already present in the other docs.

+{{% /notice %}}

+

+summary

+-------

+

+- ballooning is a per-domain operation; not a per-VM operation. A VM

+ may be represented by multiple domains (currently localhost migrate,

+ in the future stubdomains)

+

+- most free host memory is divided up between running domains

+ proportionally, so they all end up with the same value of

+ ratio

+

+

+

+ where ratio(domain) =

+ if domain.dynamic_max - domain.dynamic_min = 0

+ then 0

+ else (domain.target - domain.dynamic_min)

+ / (domain.dynamic_max - domain.dynamic_min)

+

+Assumptions

+-----------

+

+- all memory values are stored and processed in units of KiB

+

+- the squeezing algorithm doesn’t know about host or VM overheads but

+ this doesn’t matter because

+

+- the squeezer assumes that any free host memory can be allocated to

+ running domains and this will be directly reflected in their

+ memory\_actual i.e. if x KiB is free on the host we can tell a guest

+ to use x KiB and see the host memory goes to 0 and the guest’s

+ memory\_actual increase by x KiB. We assume that no-extra ’overhead’

+ is required in this operation (all overheads are functions of

+ static\_max only)

+

+Definitions

+-----------

+

+- domain: an object representing a xen domain

+

+- domain.domid: unique identifier of the domain on the host

+

+- domaininfo(domain): a function which returns live per-domain

+ information from xen (in real-life a hypercall)

+

+- a domain is said to “have never run” if never\_been\_run(domain)

+

+ where never_been_run(domain) = domaininfo(domain).paused

+ and not domaininfo(domain).shutdown

+ and domaininfo(domain).cpu_time = 0

+

+- xenstore-read(path): a function which returns the value associated

+ with ’path’ in xenstore

+

+- domain.initial\_reservation: used to associate freshly freed memory

+ with a new domain which is being built or restored

+

+ domain.initial_reservation =

+ xenstore-read(/local/domain//memory/initial-reservation)

+

+- domain.target: represents what we think the balloon target currently

+ is

+

+ domain.target =

+ if never_been_run(domain)

+ then xenstore-read(/local/domain//memory/target)

+ else domain.initial_reservation

+

+- domain.dynamic\_min: represents what we think the dynamic\_min

+ currently is

+

+ domain.dynamic_min =

+ if never_been_run(domain)

+ then xenstore-read(/local/domain//memory/dynamic_min)

+ else domain.initial_reservation

+

+- domain.dynamic\_max: represents what we think the dynamic\_max

+ currently is

+

+ domain.dynamic_max =

+ if never_been_run(domain)

+ then xenstore-read(/local/domain//memory/dynamic_max)

+ else domain.initial_reservation

+

+- domain.memory\_actual: represents the memory we think the guest is

+ using (doesn’t take overheads like shadow into account)

+

+ domain.memory_actual =

+ if never_been_run(domain)

+ max domaininfo(domain).total_memory_pages domain.initial_reservation

+ else domaininfo(domain).total_memory_pages

+

+- domain.memory\_actual\_last\_update\_time: time when we saw the last

+ change in memory\_actual

+

+- domain.unaccounted\_for: a fresh domain has memory reserved for it

+ but xen doesn’t know about it. We subtract this from the host memory

+ xen thinks is free.

+

+ domain.unaccounted_for =

+ if never_been_run(domain)

+ then max 0 (domain.initial_reservation - domaininfo(domain).total_memory_pages)

+

+- domain.max\_mem: an upper-limit on the amount of memory a domain

+ can allocate. Initially static\_max.

+

+ domain.max_mem = domaininfo(domain).max_mem

+

+- assume\_balloon\_driver\_stuck\_after: a constant number of seconds

+ after which we conclude that the balloon driver has stopped working

+

+ assume_balloon_driver_stuck_after = 2

+

+- domain.active: a boolean value which is true when we think the

+ balloon driver is functioning

+

+ domain.active = has_hit_target(domain)

+ or (now - domain.memory_actual_last_update_time)

+ > assume_balloon_driver_stuck_after

+

+- a domain is said to “have hit its target”

+ if has\_hit\_target(domain)

+

+ where has_hit_target(domain) = floor(memory_actual / 4) = floor(target / 4)

+

+ NB this definition might have to be loosened if it turns out that

+ some drivers are less accurate than this.

+

+- a domain is said to “be capable of ballooning” if

+ can\_balloon(domain) where can\_balloon(domain) = not

+ domaininfo(domain).paused

+

+- host: an object representing a XenServer host

+

+- host.domains: a list of domains present on the host

+

+- physinfo(host): a function which returns live per-host information

+ from xen (in real-life a hypercall)

+

+- host.free\_mem: amount of memory we consider to be free on the host

+

+ host.free_mem = physinfo(host).free_pages + physinfo(host).scrub_pages

+ - \sigma d\in host.domains. d.unaccounted_for

+

+Squeezer APIs

+-------------

+

+The squeezer has 2 APIs:

+

+1. allocate-memory-for-domain(host, domain, amount): frees “amount” and

+ “reserves” (as best it can) it for a particular domain

+

+2. rebalance-memory: called after e.g. domain destruction to rebalance

+ memory between the running domains

+

+allocate-memory-for-domain keeps contains the main loop which performs

+the actual target and max\_mem adjustments:

+

+ function allocate-memory-for-domain(host, domain, amount):

+ \forall d\in host.domains. d.max_mem <- d.target

+ while true do

+ -- call change-host-free-memory with a "success condition" set to

+ -- "when the host memory is >= amount"

+ declared_active, declared_inactive, result =

+ change-host-free-memory(host, amount, \lambda m >= amount)

+ if result == Success:

+ domain.initial_reservation <- amount

+ return Success

+ elif result == DynamicMinsTooHigh:

+ return DynamicMinsTooHigh

+ elif result == DomainsRefusedToCooperate:

+ return DomainsRefusedToCooperate

+ elif result == AdjustTargets(adjustments):

+ \forall (domain, target)\in adjustments:

+ domain.max_mem <- target

+ domain.target <- target

+

+ \forall d\in declared_inactive:

+ domain.max_mem <- min domain.target domain.memory_actual

+ \forall d\in declared_active:

+ domain.max_mem <- domain.target

+ done

+

+The helper function change-host-free-memory(host, amount) does the

+“thinking”:

+

+1. it keeps track of whether domains are active or inactive (only for

+ the duration of the squeezer API call – when the next call comes in

+ we assume that all domains are active and capable of ballooning... a

+ kind of “innocent until proven guilty” approaxh)

+

+2. it computes what the balloon targets should be

+

+

+

+ function change-host-free-memory(host, amount, success_condition):

+ \forall d\in host.domains. recalculate domain.active

+ active_domains <- d\in host.domains where d.active = true

+ inactive_domains <- d\in host.domains where d.active = false

+ -- since the last time we were called compute the lists of domains

+ -- which have become active and inactive

+ declared_active, declared_inactive <- ...

+ -- compute how much memory we could free or allocate given only the

+ -- active domains

+ maximum_freeable_memory =

+ sum(d\in active_domains)(d.memory_actual - d.dynamic_min)

+ maximum_allocatable_memory =

+ sum(d\in active_domains)(d.dynamic_max - d.memory_actual)

+ -- hypothetically consider freeing the maximum memory possible.

+ -- How much would we have to give back after we've taken as much as we want?

+ give_back = max 0 (maximum_freeable_memory - amount)

+ -- compute a list of target changes to 'give this memory back' to active_domains

+ -- NB this code is careful to allocate *all* memory, not just most

+ -- of it because of a rounding error.

+ adjustments = ...

+ -- decide whether every VM has reached its target (a good thing)

+ all_targets_reached = true if \forall d\in active_domains.has_hit_target(d)

+

+ -- If we're happy with the amount of free memory we've got and the active

+ -- guests have finished ballooning

+ if success_condition host.free_mem = true

+ and all_targets_reached and adjustments = []

+ then return declared_active, declared_inactive, Success

+

+ -- If we're happy with the amount of free memory and the running domains

+ -- can't absorb any more of the surplus

+ if host.free_mem >= amount and host.free_mem - maximum_allocatable_memory = 0

+ then return declared_active, declared_inactive, Success

+

+ -- If the target is too aggressive because of some non-active domains

+ if maximum_freeable_memory < amount and inactive_domains <> []

+ then return declared_active, declared_inactive,

+ DomainsRefusedToCooperate inactive_domains

+

+ -- If the target is too aggressive not because of the domains themselves

+ -- but because of the dynamic_mins

+ return declared_active, declared_inactive, DynamicMinsTooHigh

+

+The API rebalance-memory aims to use up as much host memory as possible

+EXCEPT it is necessary to keep some around for xen to use to create

+empty domains with.

+

+ Currently we have:

+ -- 10 MiB

+ target_host_free_mem = 10204

+ -- it's not always possible to allocate everything so a bit of slop has

+ -- been added here:

+ free_mem_tolerance = 1024

+

+ function rebalance-memory(host):

+ change-host-free-memory(host, target_host_free_mem,

+ \lambda m. m - target_host_free_mem < free_mem_tolerance)

+ -- and then wait for the xen page scrubber

diff --git a/doc/content/toolstack/features/events/index.md b/doc/content/toolstack/features/events/index.md

new file mode 100644

index 00000000000..3da4b6a35f7

--- /dev/null

+++ b/doc/content/toolstack/features/events/index.md

@@ -0,0 +1,256 @@

++++

+title = "Event handling in the Control Plane - Xapi, Xenopsd and Xenstore"

+menuTitle = "Event handling"

++++

+

+Introduction

+------------

+

+Xapi, xenopsd and xenstore use a number of different events to obtain

+indications that some state changed in dom0 or in the guests. The events

+are used as an efficient alternative to polling all these states

+periodically.

+

+- **xenstore** provides a very configurable approach in which each and

+ any key can be watched individually by a xenstore client. Once the

+ value of a watched key changes, xenstore will indicate to the client

+ that the value for that key has changed. An ocaml xenstore client

+ library provides a way for ocaml programs such as xenopsd,

+ message-cli and rrdd to provide high-level ocaml callback functions

+ to watch specific key. It's very common, for instance, for xenopsd

+ to watch specific keys in the xenstore keyspace of a guest and then

+ after receiving events for some or all of them, read other keys or

+ subkeys in xenstored to update its internal state mirroring the

+ state of guests and its devices (for instance, if the guest has pv

+ drivers and specific frontend devices have established connections

+ with the backend devices in dom0).

+- **xapi** also provides a very configurable event mechanism in which

+ the xenapi can be used to provide events whenever a xapi object (for

+ instance, a VM, a VBD etc) changes state. This event mechanism is

+ very reliable and is extensively used by XenCenter to provide

+ real-time update on the XenCenter GUI.

+- **xenopsd** provides a somewhat less configurable event mechanism,

+ where it always provides signals for all objects (VBDs, VMs

+ etc) whose state changed (so it's not possible to select a subset of

+ objects to watch for as in xenstore or in xapi). It's up to the

+ xenopsd client (eg. xapi) to receive these events and then filter

+ out or act on each received signal by calling back xenopsd and

+ asking it information for the specific signalled object. The main

+ use in xapi for the xenopsd signals is to update xapi's database of

+ the current state of each object controlled by xenopsd (VBDs,

+ VMs etc).

+

+Given a choice between polling states and receiving events when the

+state change, we should in general opt for receiving events in the code

+in order to avoid adding bottlenecks in dom0 that will prevent the

+scalability of XenServer to many VMs and virtual devices.

+

+

+

+Xapi

+----

+

+### Sending events from the xenapi

+

+A xenapi user client, such as XenCenter, the xe-cli or a python script,

+can register to receive events from XAPI for specific objects in the

+XAPI DB. XAPI will generate events for those registered clients whenever

+the corresponding XAPI DB object changes.

+

+

+

+This small python scripts shows how to register a simple event watch

+loop for XAPI:

+

+```python

+import XenAPI

+session = XenAPI.Session("http://xshost")

+session.login_with_password("username","password")

+session.xenapi.event.register(["VM","pool"]) # register for events in the pool and VM objects

+while True:

+ try:

+ events = session.xenapi.event.next() # block until a xapi event on a xapi DB object is available

+ for event in events:

+ print "received event op=%s class=%s ref=%s" % (event['operation'], event['class'], event['ref'])

+ if event['class'] == 'vm' and event['operatoin'] == 'mod':

+ vm = event['snapshot']

+ print "xapi-event on vm: vm_uuid=%s, power_state=%s, current_operation=%s" % (vm['uuid'],vm['name_label'],vm['power_state'],vm['current_operations'].values())

+ except XenAPI.Failure, e:

+ if len(e.details) > 0 and e.details[0] == 'EVENTS_LOST':

+ session.xenapi.event.unregister(["VM","pool"])

+ session.xenapi.event.register(["VM","pool"])

+```

+

+

+

+### Receiving events from xenopsd

+

+Xapi receives all events from xenopsd via the function

+xapi\_xenops.events\_watch() in its own independent thread. This is a

+single-threaded function that is responsible for handling all of the

+signals sent by xenopsd. In some situations with lots of VMs and virtual

+devices such as VBDs, this loop may saturate a single dom0 vcpu, which

+will slow down handling all of the xenopsd events and may cause the

+xenopsd signals to accumulate unboundedly in the worst case in the

+updates queue in xenopsd (see Figure 1).

+

+The function xapi\_xenops.events\_watch() calls

+xenops\_client.UPDATES.get() to obtain a list of (barrier,

+barrier\_events), and then it process each one of the barrier\_event,

+which can be one of the following events:

+

+- **Vm id:** something changed in this VM,

+ run xapi\_xenops.update\_vm() to query xenopsd about its state. The

+ function update\_vm() will update power\_state, allowed\_operations,

+ console and guest\_agent state in the xapi DB.

+- **Vbd id:** something changed in this VM,

+ run xapi\_xenops.update\_vbd() to query xenopsd about its state. The

+ function update\_vbd() will update currently\_attached and connected

+ in the xapi DB.

+- **Vif id:** something changed in this VM,

+ run xapi\_xenops.update\_vif() to query xenopsd about its state. The

+ function update\_vif() will update activate and plugged state of in

+ the xapi DB.

+- **Pci id:** something changed in this VM,

+ run xapi\_xenops.update\_pci() to query xenopsd about its state.

+- **Vgpu id:** something changed in this VM,

+ run xapi\_xenops.update\_vgpu() to query xenopsd about its state.

+- **Task id:** something changed in this VM,

+ run xapi\_xenops.update\_task() to query xenopsd about its state.

+ The function update\_task() will update the progress of the task in

+ the xapi DB using the information of the task in xenopsd.

+

+

+

+All the xapi\_xenops.update\_X() functions above will call

+Xenopsd\_client.X.stat() functions to obtain the current state of X from

+xenopsd:

+

+

+

+There are a couple of optimisations while processing the events in

+xapi\_xenops.events\_watch():

+

+- if an event X=(vm\_id,dev\_id) (eg. Vbd dev\_id) has already been

+ processed in a barrier\_events, it's not processed again. A typical

+ value for X is eg. "<vm\_uuid>.xvda" for a VBD.

+- if Events\_from\_xenopsd.are\_supressed X, then this event

+ is ignored. Events are supressed if VM X.vm\_id is migrating away

+ from the host

+

+#### Barriers

+

+When xapi needs to execute (and to wait for events indicating completion

+of) a xapi operation (such as VM.start and VM.shutdown) containing many

+xenopsd sub-operations (such as VM.start – to force xenopsd to change

+the VM power\_state, and VM.stat, VBD.stat, VIF.stat etc – to force the

+xapi DB to catch up with the xenopsd new state for these objects), xapi

+sends to the xenopsd input queue a barrier, indicating that xapi will

+then block and only continue execution of the barred operation when

+xenopsd returns the barrier. The barrier should only be returned when

+xenopsd has finished the execution of all the operations requested by

+xapi (such as VBD.stat and VM.stat in order to update the state of the

+VM in the xapi database after a VM.start has been issued to xenopsd).

+

+A recent problem has been detected in the xapi\_xenops.events\_watch()

+function: when it needs to process many VM\_check\_state events, this

+may push for later the processing of barriers associated with a

+VM.start, delaying xapi in reporting (via a xapi event) that the VM

+state in the xapi DB has reached the running power\_state. This needs

+further debugging, and is probably one of the reasons in CA-87377 why in

+some conditions a xapi event reporting that the VM power\_state is

+running (causing it to go from yellow to green state in XenCenter) is

+taking so long to be returned, way after the VM is already running.

+

+Xenopsd

+-------

+

+Xenopsd has a few queues that are used by xapi to store commands to be

+executed (eg. VBD.stat) and update events to be picked up by xapi. The

+main ones, easily seen at runtime by running the following command in

+dom0, are:

+

+```bash

+# xenops-cli diagnostics --queue=org.xen.xapi.xenops.classic

+{

+ queues: [ # XENOPSD INPUT QUEUE

+ ... stuff that still needs to be processed by xenopsd

+ VM.stat

+ VBD.stat

+ VM.start

+ VM.shutdown

+ VIF.plug

+ etc

+ ]

+ workers: [ # XENOPSD WORKER THREADS

+ ... which stuff each worker thread is processing

+ ]

+ updates: {

+ updates: [ # XENOPSD OUTPUT QUEUE

+ ... signals from xenopsd that need to be picked up by xapi

+ VM_check_state

+ VBD_check_state

+ etc

+ ]

+ } tasks: [ # XENOPSD TASKS

+ ... state of each known task, before they are manually deleted after completion of the task

+ ]

+}

+```

+

+### Sending events to xapi

+

+Whenever xenopsd changes the state of a XenServer object such as a VBD

+or VM, or when it receives an event from xenstore indicating that the

+states of these objects have changed (perhaps because either a guest or

+the dom0 backend changed the state of a virtual device), it creates a

+signal for the corresponding object (VM\_check\_state, VBD\_check\_state

+etc) and send it up to xapi. Xapi will then process this event in its

+xapi\_xenops.events\_watch() function.

+

+

+

+These signals may need to wait a long time to be processed if the

+single-threaded xapi\_xenops.events\_watch() function is having

+difficulties (ie taking a long time) to process previous signals in the

+UPDATES queue from xenopsd.

+

+### Receiving events from xenstore

+

+Xenopsd watches a number of keys in xenstore, both in dom0 and in each

+guest. Xenstore is responsible to send watch events to xenopsd whenever

+the watched keys change state. Xenopsd uses a xenstore client library to

+make it easier to create a callback function that is called whenever

+xenstore sends these events.

+

+

+

+Xenopsd also needs to complement sometimes these watch events with

+polling of some values. An example is the @introduceDomain event in

+xenstore (handled in xenopsd/xc/xenstore\_watch.ml), which indicates

+that a new VM has been created. This event unfortunately does not

+indicate the domid of the VM, and xenopsd needs to query Xen (via libxc)

+which domains are now available in the host and compare with the

+previous list of known domains, in order to figure out the domid of the

+newly introduced domain.

+

+ It is not good practice to poll xenstore for changes of values. This

+will add a large overhead to both xenstore and xenopsd, and decrease the

+scalability of XenServer in terms of number of VMs/host and virtual

+devices per VM. A much better approach is to rely on the watch events of

+xenstore to indicate when a specific value has changed in xenstore.

+

+Xenstore

+--------

+

+### Sending events to xenstore clients

+

+If a xenstore client has created watch events for a key, then xenstore

+will send events to this client whenever this key changes state.

+

+### Receiving events from xenstore clients

+

+Xenstore clients indicate to xenstore that something state changed by

+writing to some xenstore key. This may or may not cause xenstore to

+create watch events for the corresponding key, depending on if other

+xenstore clients have watches on this key.

diff --git a/doc/content/toolstack/features/events/obtaining-current-state.png b/doc/content/toolstack/features/events/obtaining-current-state.png

new file mode 100644

index 00000000000..3bf2c15bc2a

Binary files /dev/null and b/doc/content/toolstack/features/events/obtaining-current-state.png differ

diff --git a/doc/content/toolstack/features/events/receiving-events-from-xenopsd.png b/doc/content/toolstack/features/events/receiving-events-from-xenopsd.png

new file mode 100644

index 00000000000..52afdcca8c3

Binary files /dev/null and b/doc/content/toolstack/features/events/receiving-events-from-xenopsd.png differ

diff --git a/doc/content/toolstack/features/events/receiving-events-from-xenstore.png b/doc/content/toolstack/features/events/receiving-events-from-xenstore.png

new file mode 100644

index 00000000000..022ba4c1097

Binary files /dev/null and b/doc/content/toolstack/features/events/receiving-events-from-xenstore.png differ

diff --git a/doc/content/toolstack/features/events/sending-events-from-xapi.png b/doc/content/toolstack/features/events/sending-events-from-xapi.png

new file mode 100644

index 00000000000..cfbb4e8b572

Binary files /dev/null and b/doc/content/toolstack/features/events/sending-events-from-xapi.png differ

diff --git a/doc/content/toolstack/features/events/sending-events-to-xapi.png b/doc/content/toolstack/features/events/sending-events-to-xapi.png

new file mode 100644

index 00000000000..e7a9da8caff

Binary files /dev/null and b/doc/content/toolstack/features/events/sending-events-to-xapi.png differ

diff --git a/doc/content/toolstack/features/events/xapi-xenopsd-events.png b/doc/content/toolstack/features/events/xapi-xenopsd-events.png

new file mode 100644

index 00000000000..1adf6d50c43

Binary files /dev/null and b/doc/content/toolstack/features/events/xapi-xenopsd-events.png differ

diff --git a/doc/content/xapi/cli/_index.md b/doc/content/xapi/cli/_index.md

new file mode 100644

index 00000000000..a4ae338390a

--- /dev/null

+++ b/doc/content/xapi/cli/_index.md

@@ -0,0 +1,183 @@

++++

+title = "XE CLI architecture"

+menuTitle = "CLI"

++++

+

+{{% notice info %}}

+The links in this page point to the source files of xapi

+[v1.132.0](https://github.com/xapi-project/xen-api/tree/v1.132.0), not to the

+latest source code. Meanwhile, the CLI server code in xapi has been moved to a

+library separate from the main xapi binary, and has its own subdirectory

+`ocaml/xapi-cli-server`.

+{{% /notice %}}

+

+## Architecture

+

+- **The actual CLI** is a very lightweight binary in

+ [ocaml/xe-cli](https://github.com/xapi-project/xen-api/tree/v1.132.0/ocaml/xe-cli)

+ - It is just a dumb client, that does everything that xapi tells

+ it to do

+ - This is a security issue

+ - We must trust the xenserver that we connect to, because it

+ can tell xe to read local files, download files, ...

+ - When it is first called, it takes the few command-line arguments

+ it needs, and then passes the rest to xapi in a HTTP PUT request

+ - Each argument is in a separate line

+ - Then it loops doing what xapi tells it to do, in a loop, until

+ xapi tells it to exit or an exception happens

+

+- **The protocol** description is in

+ [ocaml/xapi-cli-protocol/cli_protocol.ml](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi-cli-protocol/cli_protocol.ml)

+ - The CLI has such a protocol that one binary can talk to multiple

+ versions of xapi as long as their CLI protocol versions are

+ compatible

+ - and the CLI can be changed without updating the xe binary

+ - and also for performance reasons, it is more efficient this way

+ than by having a CLI that makes XenAPI calls

+

+- **Xapi**

+ - The HTTP POST request is sent to the `/cli` URL

+ - In `Xapi.server_init`, xapi [registers the appropriate function

+ to handle these

+ requests](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/xapi.ml#L804),

+ defined in [common_http_handlers in the same

+ file](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/xapi.ml#L589):

+ `Xapi_cli.handler`

+ - The relevant code is in `ocaml/xapi/records.ml`,

+ `ocaml/xapi/cli_*.ml`

+ - CLI object definitions are in `records.ml`, command

+ definitions in `cli_frontend.ml` (in

+ [cmdtable_data](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/cli_frontend.ml#L72)),

+ implementations of commands in `cli_operations.ml`

+ - When a command is received, it is parsed into a command name and

+ a parameter list of key-value pairs

+ - and the command table

+ [is](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/xapi_cli.ml#L157)

+ [populated

+ lazily](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/cli_frontend.ml#L3005)

+ from the commands defined in `cmdtable_data` in

+ `cli_frontend.ml`, and [automatically

+ generated](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/cli_operations.ml#L740)

+ low-level parameter commands (the ones defined in [section

+ A.3.2 of the XenServer Administrator's

+ Guide](http://docs.citrix.com/content/dam/docs/en-us/xenserver/xenserver-7-0/downloads/xenserver-7-0-administrators-guide.pdf))

+ are also added for a list of standard classes

+ - the command table maps command names to records that contain

+ the implementation of the command, among other things

+ - Then the command name [is looked

+ up](https://github.com/xapi-project/xen-api/blob/v1.132.0/ocaml/xapi/xapi_cli.ml#L86)

+ in the command table, and the corresponding operation is

+ executed with the parsed key-value parameter list passed to it

+

+## Walk-through: CLI handler in xapi (external calls)

+

+### Definitions for the HTTP handler

+

+ Constants.cli_uri = "/cli"

+

+ Datamodel.http_actions = [...;

+ ("post_cli", (Post, Constants.cli_uri, false, [], _R_READ_ONLY, []));

+ ...]

+

+ (* these public http actions will NOT be checked by RBAC *)

+ (* they are meant to be used in exceptional cases where RBAC is already *)

+ (* checked inside them, such as in the XMLRPC (API) calls *)

+ Datamodel.public_http_actions_with_no_rbac_check` = [...

+ "post_cli"; (* CLI commands -> calls XMLRPC *)

+ ...]

+

+ Xapi.common_http_handlers = [...;

+ ("post_cli", (Http_svr.BufIO Xapi_cli.handler));

+ ...]

+

+ Xapi.server_init () =

+ ...

+ "Registering http handlers", [], (fun () -> List.iter Xapi_http.add_handler common_http_handlers);

+ ...

+

+Due to there definitions, `Xapi_http.add_handler` does not perform RBAC checks for `post_cli`. This means that the CLI handler does not use `Xapi_http.assert_credentials_ok` when a request comes in, as most other handlers do. The reason is that RBAC checking is delegated to the actual XenAPI calls that are being done by the commands in `Cli_operations`.

+

+This means that the `Xapi_http.add_handler call` so resolves to simply:

+

+ Http_svr.Server.add_handler server Http.Post "/cli" (Http_svr.BufIO Xapi_cli.handler))

+

+...which means that the function `Xapi_cli.handler` is called directly when an HTTP POST request with path `/cli` comes in.

+

+### High-level request processing

+

+`Xapi_cli.handler`:

+

+- Reads the body of the HTTP request, limitted to `Xapi_globs.http_limit_max_cli_size = 200 * 1024` characters.

+- Sends a protocol version string to the client: `"XenSource thin CLI protocol"` plus binary encoded major (0) and (2) minor numbers.

+- Reads the protocol version from the client and exits with an error if it does not match the above.

+- Calls `Xapi_cli.parse_session_and_args` with the request's body to extract the session ref, if there.

+- Calls `Cli_frontend.parse_commandline` to parse the rest of the command line from the body.

+- Calls `Xapi_cli.exec_command` to execute the command.

+- On error, calls `exception_handler`.

+

+`Xapi_cli.parse_session_and_args`:

+

+- Is passed the request body and reads it line by line. Each line is considered an argument.

+- Removes any CR chars from the end of each argument.

+- If the first arg starts with `session_id=`, the the bit after this prefix is considered to be a session reference.

+- Returns the session ref (if there) and (remaining) list of args.

+

+`Cli_frontend.parse_commandline`:

+

+- Returns the command name and assoc list of param names and values. It handles `--name` and `-flag` arguments by turning them into key/value string pairs.

+

+`Xapi_cli.exec_command`:

+

+- Finds username/password params.

+- Get the rpc function: this is the so-called "`fake_rpc` callback", which does not use the network or HTTP at all, but goes straight to `Api_server.callback1` (the XenAPI RPC entry point). This function is used by the CLI handler to do loopback XenAPI calls.

+- Logs the parsed xe command, omitting sensitive data.

+- Continues as `Xapi_cli.do_rpcs`

+- Looks up the command name in the command table from `Cli_frontend` (raises an error if not found).

+- Checks if all required params have been supplied (raises an error if not).

+- Checks that the host is a pool master (raises an error if not).

+- Depending on the command, a `session.login_with_password` or `session.slave_local_login_with_password` XenAPI call is made with the supplied username and password. If the authentication passes, then a session reference is returned for the RBAC role that belongs to the user. This session is used to do further XenAPI calls.

+- Next, the implementation of the command in `Cli_operations` is executed.

+

+### Command implementations

+

+The various commands are implemented in `cli_operations.ml`. These functions are only called after user authentication has passed (see above). However, RBAC restrictions are only enforced inside any XenAPI calls that are made, and _not_ on any of the other code in `cli_operations.ml`.

+

+The type of each command implementation function is as follows (see `cli_cmdtable.ml`):

+

+ type op =

+ Cli_printer.print_fn ->

+ (Rpc.call -> Rpc.response) ->

+ API.ref_session -> ((string*string) list) -> unit

+

+So each function receives a printer for sending text output to the xe client, and rpc function and session reference for doing XenAPI calls, and a key/value pair param list. Here is a typical example:

+

+ let bond_create printer rpc session_id params =

+ let network = List.assoc "network-uuid" params in

+ let mac = List.assoc_default "mac" params "" in

+ let network = Client.Network.get_by_uuid rpc session_id network in

+ let pifs = List.assoc "pif-uuids" params in

+ let uuids = String.split ',' pifs in

+ let pifs = List.map (fun uuid -> Client.PIF.get_by_uuid rpc session_id uuid) uuids in

+ let mode = Record_util.bond_mode_of_string (List.assoc_default "mode" params "") in

+ let properties = read_map_params "properties" params in

+ let bond = Client.Bond.create rpc session_id network pifs mac mode properties in

+ let uuid = Client.Bond.get_uuid rpc session_id bond in

+ printer (Cli_printer.PList [ uuid])

+

+- The necessary parameters are looked up in `params` using `List.assoc` or similar.

+- UUIDs are translated into reference by `get_by_uuid` XenAPI calls (note that the `Client` module is the XenAPI client, and functions in there require the rpc function and session reference).

+- Then the main API call is made (`Client.Bond.create` in this case).

+- Further API calls may be made to output data for the client, and passed to the `printer`.

+

+This is the common case for CLI operations: they do API calls based on the parameters that were passed in.

+

+However, other commands are more complicated, for example `vm_import/export` and `vm_migrate`. These contain a lot more logic in the CLI commands, and also send commands to the client to instruct it to read or write files and/or do HTTP calls.

+

+Yet other commands do not actually do any XenAPI calls, but instead get "helpful" information from other places. Example: `diagnostic_gc_stats`, which displays statistics from xapi's OCaml GC.

+

+## Tutorials

+

+The following tutorials show how to extend the CLI (and XenAPI):

+

+- [Adding a field]({{< relref "../guides/howtos/add-field.md" >}})

+- [Adding an operation]({{< relref "../guides/howtos/add-function.md" >}})

diff --git a/doc/content/xapi/database/_index.md b/doc/content/xapi/database/_index.md

new file mode 100644

index 00000000000..1928f8afac2

--- /dev/null

+++ b/doc/content/xapi/database/_index.md

@@ -0,0 +1,4 @@

++++

+title = "Database"

++++

+

diff --git a/doc/content/xapi/database/redo-log/index.md b/doc/content/xapi/database/redo-log/index.md

new file mode 100644

index 00000000000..8f8ac202f6c

--- /dev/null

+++ b/doc/content/xapi/database/redo-log/index.md

@@ -0,0 +1,395 @@

++++

+title = "Metadata-on-LUN"

++++

+

+In the present version of XenServer, metadata changes resulting in

+writes to the database are not persisted in non-volatile storage. Hence,

+in case of failure, up to five minutes’ worth of metadata changes could

+be lost. The Metadata-on-LUN feature addresses the issue by

+ensuring that all database writes are retained. This will be used to

+improve recovery from failure by storing incremental *deltas* which can

+be re-applied to an old version of the database to bring it more

+up-to-date. An implication of this is that clients will no longer be

+required to perform a ‘pool-sync-database’ to protect critical writes,

+because all writes will be implicitly protected.

+

+This is implemented by saving descriptions of all persistent database

+writes to a LUN when HA is active. Upon xapi restart after failure, such

+as on master fail-over, these descriptions are read and parsed to

+restore the latest version of the database.

+

+Layout on block device

+======================

+

+It is useful to store the database on the block device as well as the

+deltas, so that it is unambiguous on recovery which version of the

+database the deltas apply to.

+

+The content of the block device will be structured as shown in

+the table below. It consists of a header; the rest of the

+device is split into two halves.

+

+| | Length (bytes) | Description

+|-----------------------|------------------:|----------------------------------------------

+| Header | 16 | Magic identifier

+| | 1 | ASCII NUL

+| | 1 | Validity byte

+| First half database | 36 | UUID as ASCII string

+| | 16 | Length of database as decimal ASCII

+| | *(as specified)* | Database (binary data)

+| | 16 | Generation count as decimal ASCII

+| | 36 | UUID as ASCII string

+| First half deltas | 16 | Length of database delta as decimal ASCII

+| | *(as specified)* | Database delta (binary data)

+| | 16 | Generation count as decimal ASCII

+| | 36 | UUID as ASCII string

+| Second half database | 36 | UUID as ASCII string

+| | 16 | Length of database as decimal ASCII

+| | *(as specified)* | Database (binary data)

+| | 16 | Generation count as decimal ASCII

+| | 36 | UUID as ASCII string

+| Second half deltas | 16 | Length of database delta as decimal ASCII

+| | *(as specified)* | Database delta (binary data)

+| | 16 | Generation count as decimal ASCII

+| | 36 | UUID as ASCII string

+

+After the header, one or both halves may be devoid of content. In a half

+which contains a database, there may be zero or more deltas (repetitions

+of the last three entries in each half).

+

+The structure of the device is split into two halves to provide

+double-buffering. In case of failure during write to one half, the other

+half remains intact.

+

+The magic identifier at the start of the file protect against attempting

+to treat a different device as a redo log.

+

+The validity byte is a single `ascii character indicating the

+state of the two halves. It can take the following values:

+

+| Byte | Description

+|-------|------------------------

+| `0` | Neither half is valid

+| `1` | First half is valid

+| `2` | Second half is valid

+

+The use of lengths preceding data sections permit convenient reading.

+The constant repetitions of the UUIDs act as nonces to protect

+against reading in invalid data in the case of an incomplete or corrupt

+write.

+

+Architecture

+============

+

+The I/O to and from the block device may involve long delays. For

+example, if there is a network problem, or the iSCSI device disappears,

+the I/O calls may block indefinitely. It is important to isolate this

+from xapi. Hence, I/O with the block device will occur in a separate

+process.

+

+Xapi will communicate with the I/O process via a UNIX domain socket using a

+simple text-based protocol described below. The I/O process will use to

+ensure that it can always accept xapi’s requests with a guaranteed upper

+limit on the delay. Xapi can therefore communicate with the process

+using blocking I/O.

+

+Xapi will interact with the I/O process in a best-effort fashion. If it

+cannot communicate with the process, or the process indicates that it

+has not carried out the requested command, xapi will continue execution

+regardless. Redo-log entries are idempotent (modulo the raising of

+exceptions in some cases) so it is of little consequence if a particular

+entry cannot be written but others can. If xapi notices that the process

+has died, it will attempt to restart it.

+

+The I/O process keeps track of a pointer for each half indicating the

+position at which the next delta will be written in that half.

+

+Protocol

+--------

+

+Upon connection to the control socket, the I/O process will attempt to

+connect to the block device. Depending on whether this is successful or

+unsuccessful, one of two responses will be sent to the client.

+

+- `connect|ack_` if it is successful; or

+

+- `connect|nack||` if it is unsuccessful, perhaps

+ because the block device does not exist or cannot be read from. The

+ `` is a description of the error; the `` of the message

+ is expressed using 16 digits of decimal ascii.

+

+The former message indicates that the I/O process is ready to receive

+commands. The latter message indicates that commands can not be sent to

+the I/O process.

+

+There are three commands which xapi can send to the I/O

+process. These are described below, with a high level description of the

+operational semantics of the I/O process’ actions, and the corresponding

+responses. For ease of parsing, each command is ten bytes in length.

+

+### Write database

+

+Xapi requests that a new database is written to the block device, and

+sends its content using the data socket.

+

+##### Command:

+

+: `writedb___|||`

+: The UUID is expressed as 36 ASCII

+ characters. The *length* of the data and the *generation-count* are

+ expressed using 16 digits of decimal ASCII.

+

+##### Semantics:

+

+1. Read the validity byte.

+2. If one half is valid, we will use the other half. If no halves

+ are valid, we will use the first half.

+3. Read the data from the data socket and write it into the

+ chosen half.

+4. Set the pointer for the chosen half to point to the position

+ after the data.

+5. Set the validity byte to indicate the chosen half is valid.

+

+##### Response:

+

+: `writedb|ack_` in case of successful write; or

+: `writedb|nack||` otherwise.

+: For error messages, the *length* of the message is expressed using

+ 16 digits of decimal ascii. In particular, the

+ error message for timeouts is the string `Timeout`.

+

+### Write database delta

+

+Xapi sends a description of a database delta to append to the block

+device.

+

+##### Command:

+

+: `writedelta||||`

+: The UUID is expressed as 36 ASCII

+ characters. The *length* of the data and the *generation-count* are

+ expressed using 16 digits of decimal ASCII.

+

+##### Semantics:

+

+1. Read the validity byte to establish which half is valid. If

+ neither half is valid, return with a `nack`.

+2. If the half’s pointer is set, seek to that position. Otherwise,

+ scan through the half and stop at the position after the

+ last write.

+3. Write the entry.

+4. Update the half’s pointer to point to the position after

+ the entry.

+

+##### Response:

+

+: `writedelta|ack_` in case of successful append; or

+: `writedelta|nack||` otherwise.

+: For error messages, the *length* of the message is expressed using

+ 16 digits of decimal ASCII. In particular, the

+ error message for timeouts is the string `Timeout`.

+

+### Read log

+

+Xapi requests the contents of the log.

+

+##### Command:

+

+: `read______`

+

+##### Semantics:

+

+1. Read the validity byte to establish which half is valid. If

+ neither half is valid, return with an `end`.

+2. Attempt to read the database from the current half.

+3. If this is successful, continue in that half reading entries up

+ to the position of the half’s pointer. If the pointer is not

+ set, read until a record of length zero is found or the end of

+ the half is reached. Otherwise—if the attempt to the read the

+ database was not successful—switch to using the other half and

+ try again from step 2.

+4. Finally output an `end`.

+

+##### Response:

+

+: `read|nack_||` in case of error; or

+: `read|db___|||` for a database record, then a

+ sequence of zero or more

+: `read|delta|||` for each delta record, then

+: `read|end__`

+: For each record, and for error messages, the *length* of the data or

+ message is expressed using 16 digits of decimal ascii. In particular, the

+ error message for timeouts is the string `Timeout`.

+

+### Re-initialise log

+

+Xapi requests that the block device is re-initialised with a fresh

+redo-log.

+

+##### Command:

+

+: `empty_____`\

+

+##### Semantics:

+

+: 1. Set the validity byte to indicate that neither half is valid.

+

+##### Response:

+

+: `empty|ack_` in case of successful re-initialisation; or

+ `empty|nack||` otherwise.

+: For error messages, the *length* of the message is expressed using

+ 16 digits of decimal ASCII. In particular, the

+ error message for timeouts is the string `Timeout`.

+

+Impact on xapi performance

+==========================

+

+The implementation of the feature causes a slow-down in xapi of around

+6% in the general case. However, if the LUN becomes inaccessible this

+can cause a slow-down of up to 25% in the worst case.

+

+The figure below shows the result of testing four configurations,

+counting the number of database writes effected through a command-line

+‘xe pool-param-set’ call.

+

+- The first and second configurations are xapi *without* the

+ Metadata-on-LUN feature, with HA disabled and

+ enabled respectively.

+

+- The third configuration shows xapi *with* the

+ Metadata-on-LUN feature using a healthy LUN to which

+ all database writes can be successfully flushed.

+

+- The fourth configuration shows xapi *with* the

+ Metadata-on-LUN feature using an inaccessible LUN for

+ which all database writes fail.

+

+

+

+Testing strategy

+================

+

+The section above shows how xapi performance is affected by this feature. The

+sections below describe the dev-testing which has already been undertaken, and

+propose how this feature will impact on regression testing.

+

+Dev-testing performed

+---------------------

+

+A variety of informal tests have been performed as part of the

+development process:

+

+Enable HA.

+

+: Confirm LUN starts being used to persist database writes.

+

+Enable HA, disable HA.

+

+: Confirm LUN stops being used.

+

+Enable HA, kill xapi on master, restart xapi on master.

+

+: Confirm that last database write before kill is successfully

+ restored on restart.

+

+Repeatedly enable and disable HA.

+

+: Confirm that no file descriptors are leaked (verified by counting

+ the number of descriptors in /proc/*pid*/fd/).

+

+Enable HA, reboot the master.

+

+: Due to HA, a slave becomes the master (or this can be forced using

+ ‘xe pool-emergency-transition-to-master’). Confirm that the new

+ master starts is able to restore the database from the LUN from the

+ point the old master left off, and begins to write new changes to

+ the LUN.

+

+Enable HA, disable the iSCSI volume.

+

+: Confirm that xapi continues to make progress, although database

+ writes are not persisted.

+

+Enable HA, disable and enable the iSCSI volume.

+

+: Confirm that xapi begins to use the LUN when the iSCSI volume is

+ re-enabled and subsequent writes are persisted.

+

+These tests have been undertaken using an iSCSI target VM and a real

+iSCSI volume on lannik. In these scenarios, disabling the iSCSI volume

+consists of stopping the VM and unmapping the LUN, respectively.

+

+Proposed new regression test

+----------------------------

+

+A new regression test is proposed to confirm that all database writes

+are persisted across failure.

+

+There are three types of database modification to test: row creation,

+field-write and row deletion. Although these three kinds of write could

+be tested in separate tests, the means of setting up the pre-conditions

+for a field-write and a row deletion require a row creation, so it is

+convenient to test them all in a single test.

+

+1. Start a pool containing three hosts.

+

+2. Issue a CLI command on the master to create a row in the

+ database, e.g.

+

+ `xe network-create name-label=a`.

+

+3. Forcefully power-cycle the master.

+

+4. On fail-over, issue a CLI command on the new master to check that

+ the row creation persisted:

+

+ `xe network-list name-label=a`,

+

+ confirming that the returned string is non-empty.

+

+5. Issue a CLI command on the master to modify a field in the new row

+ in the database:

+

+ `xe network-param-set uuid= name-description=abcd`,

+

+ where `` is the UUID returned from step 2.

+

+6. Forcefully power-cycle the master.

+

+7. On fail-over, issue a CLI command on the new master to check that

+ the field-write persisted:

+

+ `xe network-param-get uuid= param-name=name-description`,

+

+ where `` is the UUID returned from step 2. The returned string

+ should contain

+

+ `abcd`.

+

+8. Issue a CLI command on the master to delete the row from the

+ database:

+

+ `xe network-destroy uuid=`,

+

+ where `` is the UUID returned from step 2.

+

+9. Forcefully power-cycle the master.

+

+10. On fail-over, issue a CLI command on the new master to check that

+ the row does not exist:

+

+ `xe network-list name-label=a`,

+

+ confirming that the returned string is empty.

+

+Impact on existing regression tests

+-----------------------------------

+

+The Metadata-on-LUN feature should mean that there is no

+need to perform an ‘xe pool-sync-database’ operation in existing HA

+regression tests to ensure that database state persists on xapi failure.

diff --git a/doc/content/xapi/database/redo-log/performance.svg b/doc/content/xapi/database/redo-log/performance.svg

new file mode 100644

index 00000000000..fae19ce0f77

--- /dev/null

+++ b/doc/content/xapi/database/redo-log/performance.svg

@@ -0,0 +1,306 @@

+

+

\ No newline at end of file

diff --git a/doc/content/xapi/storage/_index.md b/doc/content/xapi/storage/_index.md

new file mode 100644

index 00000000000..c265353869a

--- /dev/null

+++ b/doc/content/xapi/storage/_index.md

@@ -0,0 +1,415 @@

++++

+title = "XAPI's Storage Layers"

+menuTitle = "Storage"

++++

+

+{{% notice info %}}

+The links in this page point to the source files of xapi

+[v1.127.0](https://github.com/xapi-project/xen-api/tree/v1.127.0), and xcp-idl

+[v1.62.0](https://github.com/xapi-project/xcp-idl/tree/v1.62.0), not to the

+latest source code.

+

+In the beginning of 2023, significant changes have been made in the layering.

+In particular, the wrapper code from `storage_impl.ml` has been pushed down the

+stack, below the mux, such that it only covers the SMAPIv1 backend and not

+SMAPIv3. Also, all of the code (from xcp-idl etc) is now present in this repo

+(xen-api).

+{{% /notice %}}

+

+Xapi directly communicates only with the SMAPIv2 layer. There are no

+plugins directly implementing the SMAPIv2 interface, but the plugins in

+other layers are accessed through it:

+

+{{}}

+graph TD

+A[xapi] --> B[SMAPIv2 interface]

+B --> C[SMAPIv2 <-> SMAPIv1 translation: storage_access.ml]

+B --> D[SMAPIv2 <-> SMAPIv3 translation: xapi-storage-script]

+C --> E[SMAPIv1 plugins]

+D --> F[SMAPIv3 plugins]

+{{< /mermaid >}}

+

+## SMAPIv1

+

+These are the files related to SMAPIv1 in `xen-api/ocaml/xapi/`:

+

+- [sm.ml](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/sm.ml):

+ OCaml "bindings" for the SMAPIv1 Python "drivers" (SM)

+- [sm_exec.ml](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/sm_exec.ml):

+ support for implementing the above "bindings". The

+ parameters are converted to XML-RPC, passed to the relevant python

+ script ("driver"), and then the standard output of the program is

+ parsed as an XML-RPC response (we use

+ `xen-api-libs-transitional/http-svr/xMLRPC.ml` for parsing XML-RPC).

+ When adding new functionality, we can modify `type call` to add parameters,

+ but when we don't add any common ones, we should just pass the new

+ parameters in the args record.

+- `smint.ml`: Contains types, exceptions, ... for the SMAPIv1 OCaml

+ interface

+

+## SMAPIv2

+

+These are the files related to SMAPIv2, which need to be modified to

+implement new calls:

+

+- [xcp-idl/storage/storage\_interface.ml](https://github.com/xapi-project/xcp-idl/blob/v1.62.0/storage/storage_interface.ml):

+ Contains the SMAPIv2 interface

+- [xcp-idl/storage/storage\_skeleton.ml](https://github.com/xapi-project/xcp-idl/blob/v1.62.0/storage/storage_skeleton.ml):

+ A stub SMAPIv2 storage server implementation that matches the

+ SMAPIv2 storage server interface (this is verified by

+ [storage\_skeleton\_test.ml](https://github.com/xapi-project/xcp-idl/blob/v1.62.0/storage/storage_skeleton_test.ml)),

+ each of its function just raise a `Storage_interface.Unimplemented`

+ error. This skeleton is used to automatically fill the unimplemented

+ methods of the below storage servers to satisfy the interface.

+- [xen-api/ocaml/xapi/storage\_access.ml](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_access.ml):

+ [module SMAPIv1](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_access.ml#L104):

+ a SMAPIv2 server that does SMAPIv2 -> SMAPIv1 translation.

+ It passes the XML-RPC requests as the first command-line argument to the

+ corresponding Python script, which returns an XML-RPC response on standard

+ output.

+- [xen-api/ocaml/xapi/storage\_impl.ml](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_impl.ml):

+ The

+ [Wrapper](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_impl.ml#L302)

+ module wraps a SMAPIv2 server (Server\_impl) and takes care of

+ locking and datapaths (in case of multiple connections (=datapaths)

+ from VMs to the same VDI, it will use the superstate computed by the

+ [Vdi_automaton](https://github.com/xapi-project/xcp-idl/blob/v1.62.0/storage/vdi_automaton.ml)

+ in xcp-idl). It also implements some functionality, like the `DP`

+ module, that is not implemented in lower layers.

+- [xen-api/ocaml/xapi/storage\_mux.ml](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_mux.ml):

+ A SMAPIv2 server, which multiplexes between other servers. A

+ different SMAPIv2 server can be registered for each SR. Then it

+ forwards the calls for each SR to the "storage plugin" registered

+ for that SR.

+

+### How SMAPIv2 works:

+

+We use [message-switch] under the hood for RPC communication between

+[xcp-idl](https://github.com/xapi-project/xcp-idl) components. The

+main `Storage_mux.Server` (basically `Storage_impl.Wrapper(Mux)`) is

+[registered to

+listen](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_access.ml#L1279)

+on the "`org.xen.xapi.storage`" queue [during xapi's

+startup](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/xapi.ml#L801),

+and this is the main entry point for incoming SMAPIv2 function calls.

+`Storage_mux` does not really multiplex between different plugins right

+now: [earlier during xapi's

+startup](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/xapi.ml#L799),

+the same SMAPIv1 storage server module [is

+registered](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_access.ml#L934)

+on the various "`org.xen.xapi.storage.`" queues for each

+supported SR type. (This will change with SMAPIv3, which is accessed via

+a SMAPIv2 plugin outside of xapi that translates between SMAPIv2 and

+SMAPIv3.) Then, in

+[Storage\_access.create\_sr](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_access.ml#L1531),

+which is called

+[during SR.create](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/xapi_sr.ml#L326),

+and also

+[during PBD.plug](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/xapi_pbd.ml#L121),

+the relevant "`org.xen.xapi.storage.`" queue needed for that

+PBD is [registered with Storage_mux in

+Storage\_access.bind](https://github.com/xapi-project/xen-api/blob/v1.127.0/ocaml/xapi/storage_access.ml#L1107)

+for the SR of that PBD.\

+So basically what happens is that xapi registers itself as a SMAPIv2

+server, and forwards incoming function calls to itself through

+`message-switch`, using its `Storage_mux` module. These calls are

+forwarded to xapi's `SMAPIv1` module doing SMAPIv2 -> SMAPIv1

+translation.

+

+#### Registration of the various storage servers

+

+{{}}

+sequenceDiagram

+participant q as message-switch

+participant v1 as Storage_access.SMAPIv1

+participant svr as Storage_mux.Server

+

+Note over q, svr: xapi startup, "Starting SMAPIv1 proxies"

+q ->> v1:org.xen.xapi.storage.sr_type_1

+q ->> v1:org.xen.xapi.storage.sr_type_2

+q ->> v1:org.xen.xapi.storage.sr_type_3

+

+Note over q, svr: xapi startup, "Starting SM service"

+q ->> svr:org.xen.xapi.storage

+

+Note over q, svr: SR.create, PBD.plug

+svr ->> q:org.xapi.storage.sr_type_2

+{{< /mermaid >}}

+

+#### What happens when a SMAPIv2 "function" is called

+

+{{}}

+graph TD

+

+call[SMAPIv2 call] --VDI.attach2--> org.xen.xapi.storage

+

+subgraph message-switch

+org.xen.xapi.storage

+org.xen.xapi.storage.SR_type_x

+end

+

+org.xen.xapi.storage --VDI.attach2--> Storage_impl.Wrapper

+

+subgraph xapi

+subgraph Storage_mux.server

+Storage_impl.Wrapper --> Storage_mux.mux

+end

+Storage_access.SMAPIv1

+end

+

+Storage_mux.mux --VDI.attach2--> org.xen.xapi.storage.SR_type_x

+org.xen.xapi.storage.SR_type_x --VDI.attach2--> Storage_access.SMAPIv1

+

+subgraph SMAPIv1

+driver_x[SMAPIv1 driver for SR_type_x]

+end

+

+Storage_access.SMAPIv1 --vdi_attach--> driver_x

+{{< /mermaid >}}

+

+### Interface Changes, Backward Compatibility, & SXM

+

+During SXM, xapi calls SMAPIv2 functions on a remote xapi. Therefore it

+is important to keep all those SMAPIv2 functions backward-compatible

+that we call remotely (e.g. Remote.VDI.attach), otherwise SXM from an