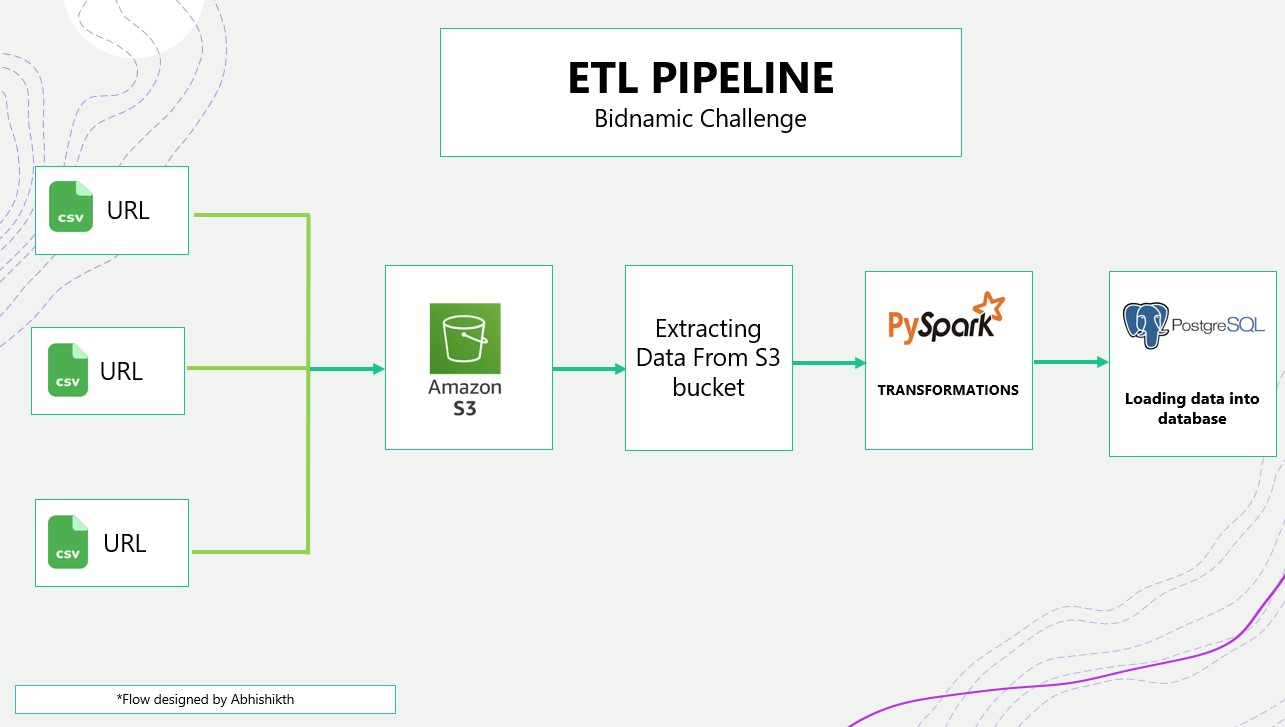

Bidnamic's Data Engineering Coding Challenge

- Ingested the raw URL files (campaigns, adgroups, search_terms) into AWS S3, which serves as the data lake, with the following Python code.

- Extracted the ingested data objects from the AWS S3 bucket using boto3 with the following Python script in a Jupyter Notebook, so that we can perform the transformations and data processing according to the business requirements.

- As a good practice in the data preprocessing step, before storing the extracted data in PostgreSQL we created data frames with PySpark in a Jupyter Notebook and added the columns "created_timestamp", "created_by" and "modified_timestamp". These columns let us track the data flow when extracting data from the source.
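The ingestion and extraction steps above could be sketched roughly as follows. This is a minimal illustration, not the code used in the challenge: the helper names `s3_key_for`, `ingest` and `extract`, the `raw/` key prefix and the bucket name are all hypothetical, and the S3 client is passed in so the functions only rely on the `put_object` and `get_object` calls that a boto3 S3 client provides.

```python
from urllib.parse import urlparse
from urllib.request import urlopen


def s3_key_for(url, prefix="raw"):
    """Derive an object key such as 'raw/campaigns.csv' from a source URL.

    The 'raw' prefix is an assumption made for this sketch.
    """
    filename = urlparse(url).path.rsplit("/", 1)[-1]
    if not filename:
        raise ValueError(f"URL has no file name: {url}")
    return f"{prefix}/{filename}"


def ingest(url, bucket, s3_client, fetch=urlopen):
    """Download one raw file and store it unchanged in the data lake bucket."""
    with fetch(url) as response:
        body = response.read()
    key = s3_key_for(url)
    # put_object is the boto3 S3 client call for uploading bytes.
    s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return key


def extract(bucket, key, s3_client):
    """Read an ingested object back from S3 as bytes for further processing."""
    # get_object returns a dict whose "Body" entry is a readable stream.
    return s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
```

With boto3 installed and credentials configured, the client would typically be created with `boto3.client("s3")` and passed as `s3_client`. Passing the client in keeps the helpers easy to exercise without network access.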

While preprocessing the data, I chose to store it in the following three schema levels:

1. Stagedata schema
   - Raw data is loaded into this schema and data types are assigned according to the data.
2. Storedata schema
   - At this level we load clean data by filtering out duplicates and creating data frames of distinct values, with transformations applied as per the business requirements.
3. Reportdata schema
   - This schema contains the finished data tables required by the business.

Segregating the schemas in the PostgreSQL database lets us track how the data is used against the business requirements. The schemas were created with the psycopg2 library, which connects to the PostgreSQL database (administered through pgAdmin) using the configuration and executes the SQL statements.
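As an illustration of the schema setup described above, here is a minimal sketch. The lowercase schema names follow the three levels listed earlier; the function names are hypothetical, and the connection is assumed to be an open connection such as the one returned by `psycopg2.connect(...)`.

```python
# Schema names taken from the three levels described above (lowercased).
SCHEMAS = ("stagedata", "storedata", "reportdata")


def schema_statements(schemas=SCHEMAS):
    """Build one idempotent CREATE SCHEMA statement per schema name."""
    statements = []
    for name in schemas:
        # Names are interpolated into SQL, so accept plain identifiers only.
        if not name.isidentifier():
            raise ValueError(f"invalid schema name: {name!r}")
        statements.append(f"CREATE SCHEMA IF NOT EXISTS {name};")
    return statements


def create_schemas(conn, schemas=SCHEMAS):
    """Execute the statements on an open connection and commit them."""
    with conn.cursor() as cur:
        for statement in schema_statements(schemas):
            cur.execute(statement)
    conn.commit()
```

`IF NOT EXISTS` makes the setup safe to re-run from a notebook cell without failing on schemas that already exist.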

- Data loading is done by configuring the PostgreSQL JDBC driver.

- The entire configuration (AWS access keys, PostgreSQL connection details and other input paths) is loaded from an aws_config.json file, which is considered good practice.

- hadoop-aws-3.3.1

- aws-java-sdk-bundle-1.11.901

- Python

- PostgreSQL
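The configuration and JDBC loading steps mentioned above could look roughly like the sketch below. The key names inside `aws_config.json` (`pg_host`, `pg_port` and so on) and the function names are assumptions made for illustration, since the actual file layout is not shown here. The JDBC URL format and the `org.postgresql.Driver` class name are the standard ones for the PostgreSQL JDBC driver.

```python
import json

# Hypothetical key names; the real aws_config.json may use different ones.
REQUIRED_KEYS = (
    "aws_access_key_id",
    "aws_secret_access_key",
    "pg_host",
    "pg_port",
    "pg_database",
    "pg_user",
    "pg_password",
)


def load_config(path="aws_config.json"):
    """Read the JSON config file and fail early if a key is missing."""
    with open(path, encoding="utf-8") as fh:
        config = json.load(fh)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise KeyError(f"missing config keys: {', '.join(missing)}")
    return config


def jdbc_settings(config):
    """Return the JDBC URL and connection properties for PostgreSQL."""
    url = (
        f"jdbc:postgresql://{config['pg_host']}:"
        f"{config['pg_port']}/{config['pg_database']}"
    )
    properties = {
        "user": config["pg_user"],
        "password": config["pg_password"],
        "driver": "org.postgresql.Driver",
    }
    return url, properties
```

In PySpark, the returned values can be passed to `df.write.jdbc(url=url, table="stagedata.campaigns", mode="append", properties=properties)`, where the table name is only an example. Keeping credentials in a config file rather than in the notebook avoids committing secrets alongside the code, provided the file itself is excluded from version control.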