MPEG-H UI manager

| ASI | Audio Scene Information; a technical term (AudioSceneInfo) related to MAE/Metadata Audio Elements. The term is described in chapter 15 of the MPEG-H standard. An ASI will for example describe the audio objects available, including their properties. |

| MAE | Metadata Audio Elements; an MPEG-H term in the context of audio objects. The set of metadata (for audio elements) consists of:

See also ASI and chapter 15 of the MPEG-H standard on 3D Audio. |

| MHAS | MPEG-H 3D Audio Stream; a self-contained stream format to transport packetized MPEG-H 3D Audio data. Both configuration data and coded audio payload data are embedded in separate packets. Synchronization and length information is included to make the syntax self-synchronizing. |

| MPEG-H | ISO/IEC 23008 - High Efficiency Coding and Media Delivery in Heterogeneous Environments; formal title of a set of standards under development by the ISO/IEC Moving Picture Experts Group (MPEG), including but not limited to the following parts:

In the current document the term "MPEG-H" refers to MPEG-H Part 3: 3D audio. |

| UI | User Interface |

| DRC | Dynamic Range Control |

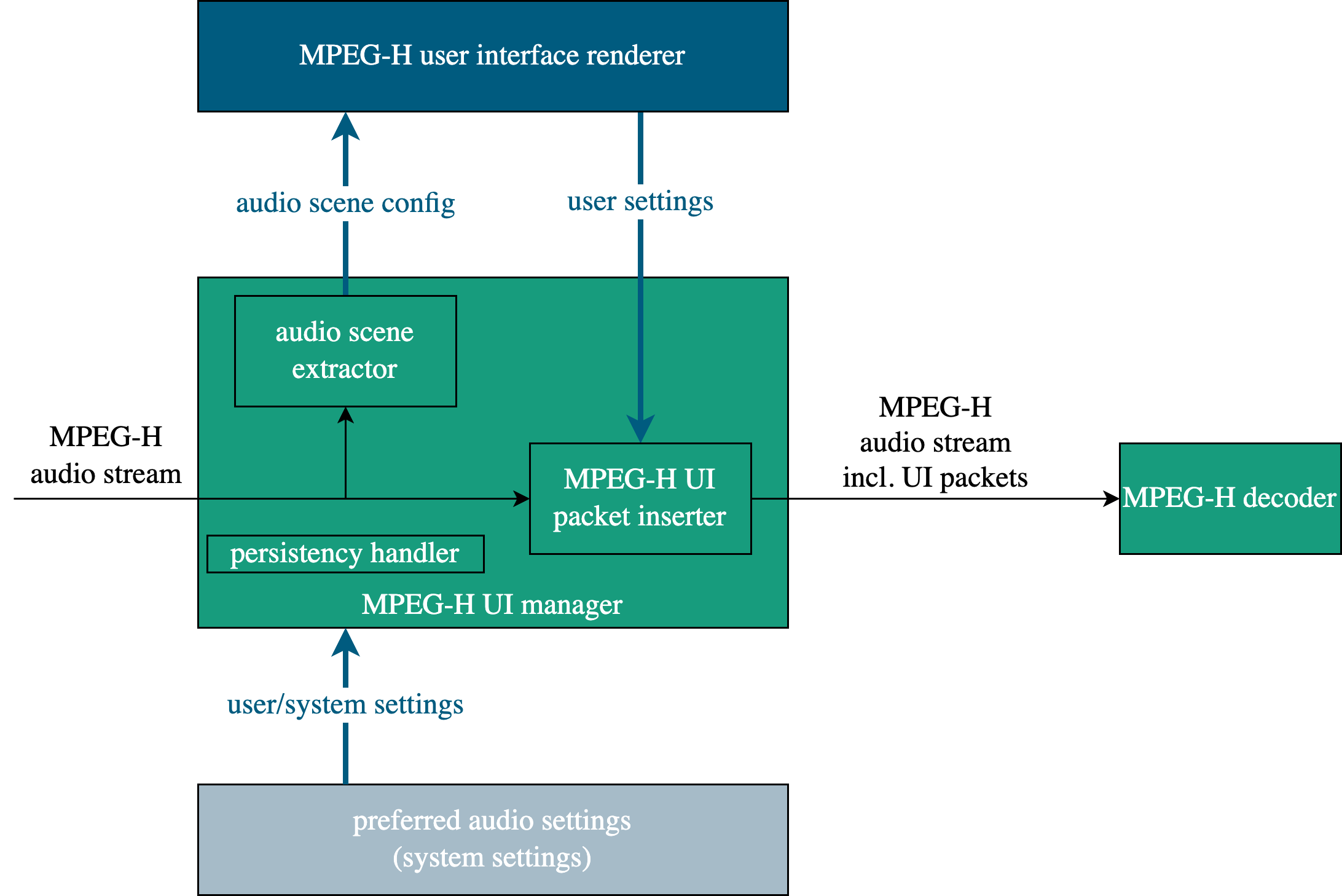

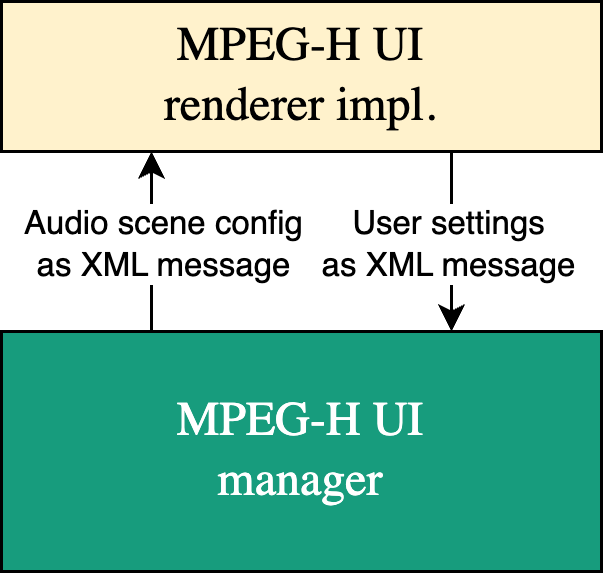

As explained in the "MPEG-H interactivity background" document, the MPEG-H receiver ecosystem defines the MPEG-H UI manager component, which is responsible for handling all MPEG-H UI related tasks:

- extract the audio scene information from the MPEG-H bitstream and provide it in a compact form to the component responsible for presenting this information to the user and for interacting with the user (the MPEG-H UI renderer)

- provide an interface to receive the user interaction from the MPEG-H UI renderer

- provide an interface to receive the user / system preferred settings

- insert the user settings into the bitstream

- perform persistency handling

Fig.: MPEG-H manager overview.

The scope of the document is:

- to provide technical background for MPEG-H Audio Scene and User Interactivity information

- to show system integration examples

- to define the MPEG-H UI manager component interfaces

If the metadata in an MPEG-H Audio bitstream enables user interactivity, the user may change certain aspects of the decoded audio scene during playback, e.g., the level or position of an audio element. This metadata is called "Audio Scene Information" (ASI) in MPEG-H Audio and is transported in MPEG-H packets with MHASPacketType PACTYP_AUDIOSCENEINFO as defined in "ISO/IEC 23008-3:2022". It specifies what the user is allowed to change and by how much, e.g., which audio elements are enabled for interactivity and the maximum allowed changes to those audio elements (e.g. in terms of gain or position).

Audio elements can also be combined into an "Audio Element Switch" group ("switch group"), in which all audio elements share the same interactivity properties (e.g. gain or position) and only one audio element can be active at any time.

The ASI generally contains descriptions of "Presets", i.e., combinations of audio elements that are subsets of the complete audio scene with specific gains and positions. Typical examples of such presets are:

- "Dialogue Enhancement" with increased dialogue signal level and attenuated background signal level, and

- "Live Mix," e.g., for a sports event, with enhanced ambience, an additional ambience object signal, and a muted dialogue object that contains commentary.

The ASI may also contain textual labels with descriptions of audio elements or presets that can be presented to the consumer.
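The relationship between interactivity limits, switch groups, and presets can be illustrated with a small data model. This is only a sketch: the class and field names (`AudioElement`, `SwitchGroup`, `Preset`, `min_gain_db`, and so on) are hypothetical, do not mirror the ASI bitstream syntax, and ignore position interactivity and labels.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AudioElement:
    """One audio element; all field names are illustrative assumptions."""
    name: str
    gain_db: float = 0.0
    min_gain_db: float = 0.0  # lowest gain the ASI lets the user choose
    max_gain_db: float = 0.0  # highest gain the ASI lets the user choose
    active: bool = True

    def set_gain(self, requested_db: float) -> float:
        """Clamp a user request to the range allowed by the ASI."""
        self.gain_db = max(self.min_gain_db, min(self.max_gain_db, requested_db))
        return self.gain_db


@dataclass
class SwitchGroup:
    """Elements of which only one may be active at any time."""
    members: list[AudioElement] = field(default_factory=list)

    def select(self, name: str) -> None:
        if all(m.name != name for m in self.members):
            raise ValueError(f"{name!r} is not a member of this switch group")
        for m in self.members:
            m.active = (m.name == name)


@dataclass
class Preset:
    """A subset of the scene with an author-defined gain per element."""
    label: str
    gains_db: dict[str, float]

    def apply(self, elements: list[AudioElement]) -> None:
        # Elements not listed in the preset are switched off (a simplification).
        for e in elements:
            e.active = e.name in self.gains_db
            if e.active:
                e.gain_db = self.gains_db[e.name]
```

In this sketch a request of +20 dB on an element limited to +9 dB is stored as +9 dB, and selecting one member of a switch group deactivates all others.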

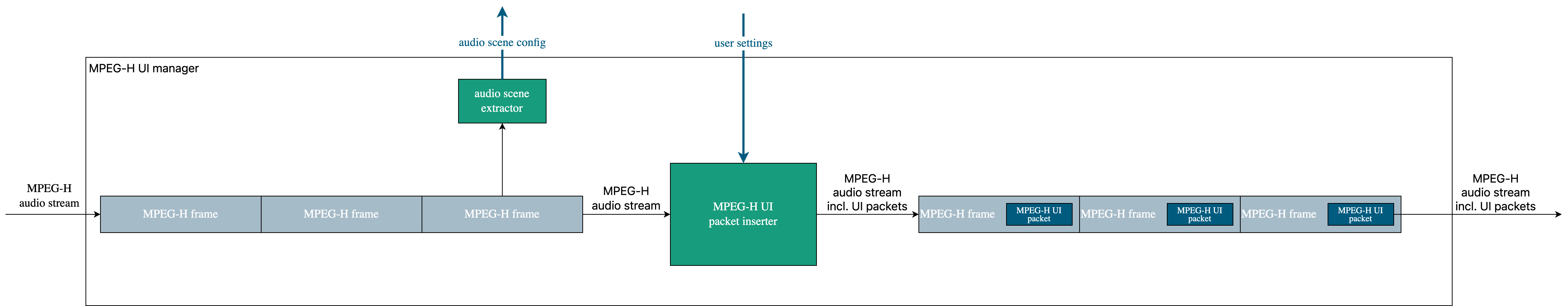

The user's changes are inserted into the processed MPEG-H Audio bitstream as MPEG-H User Interactivity packets, i.e. MPEG-H packets with MHASPacketType PACTYP_USERINTERACTION and PACTYP_LOUDNESS_DRC as defined in "ISO/IEC 23008-3:2022, Chapter 14.4.9" and "ISO/IEC 23008-3:2022, Chapter 14.4.10". The subsequent MPEG-H Audio decoder applies the inserted MPEG-H UI packets to render the audio scene according to the user's selected preferences.

Fig.: Audio scene extraction and UI packet insertion in MPEG-H UI manager.
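The pass-through-and-insert behaviour can be sketched as a generator over already parsed packets. Everything here is an assumption made for illustration: the `Packet` tuple, the `next_ui_payload` callback, the use of symbolic type names instead of numeric codes, and in particular the choice to place the UI packet directly ahead of the next audio frame packet. The standard defines the actual packet syntax and placement rules.

```python
from typing import Callable, Iterable, Iterator, NamedTuple, Optional


class Packet(NamedTuple):
    """Hypothetical parsed MHAS packet: symbolic type name plus raw payload."""
    packet_type: str
    payload: bytes


def insert_ui_packets(
    stream: Iterable[Packet],
    next_ui_payload: Callable[[], Optional[bytes]],
) -> Iterator[Packet]:
    """Forward every packet unchanged and add pending user interaction data.

    next_ui_payload returns the payload of a pending user change, or None
    when the user has changed nothing since the last call.
    """
    for packet in stream:
        if packet.packet_type == "PACTYP_MPEGH3DAFRAME":
            payload = next_ui_payload()
            if payload is not None:
                # Assumed placement: immediately before the audio frame.
                yield Packet("PACTYP_USERINTERACTION", payload)
        yield packet
```

Modelling the manager as a stream filter reflects the point made above: the decoder downstream needs no separate control channel, because the user's choices travel inside the bitstream.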

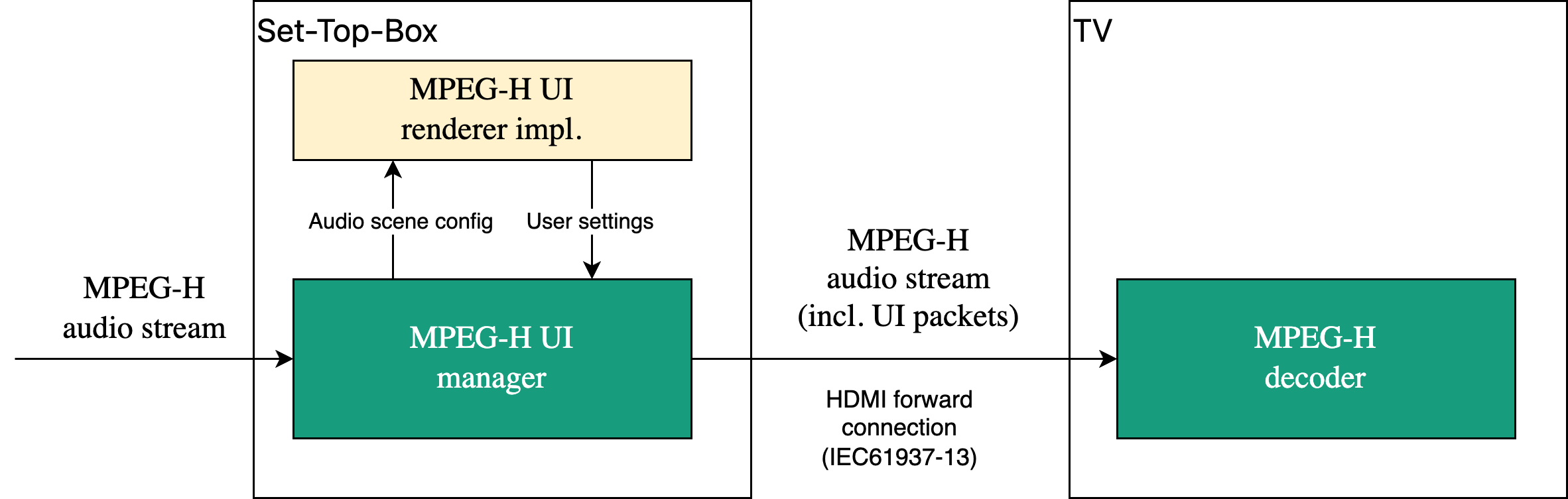

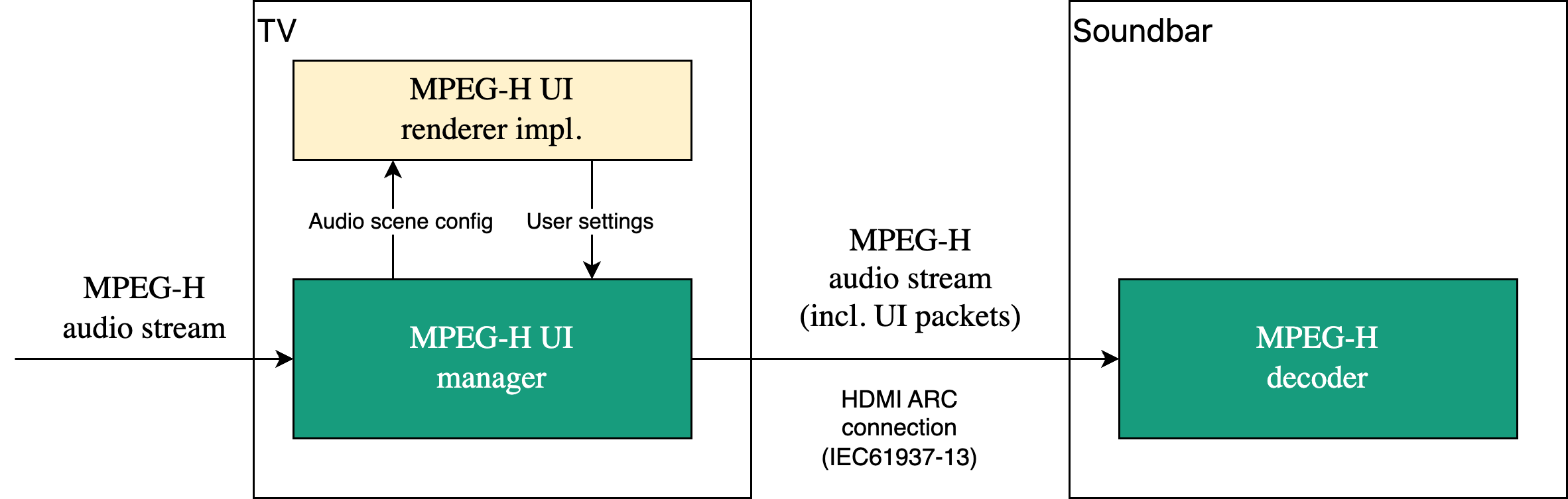

The MPEG-H receiver ecosystem splits the UI related tasks, covered by the MPEG-H UI manager component, from the MPEG-H bitstream decoding, covered by the MPEG-H decoder component. This split allows for flexible implementation designs on consumer devices. As a separate component, the MPEG-H UI manager can be placed at any position between the component handling the transport layer and the MPEG-H decoder, e.g. close to the MPEG-H UI renderer.

One of these implementation designs makes it possible to implement the MPEG-H device connectivity feature, where:

- one device (e.g. Set-Top-Box or TV) receives the MPEG-H bitstream from the internet or from a broadcast channel and provides the MPEG-H User Interface handling

- and a second device (e.g. soundbar or AVR), connected via HDMI or S/PDIF, finally decodes the MPEG-H bitstream

Fig.: implementing MPEG-H UI handling in a Set-Top-Box and MPEG-H decoding on a TV.

NOTE: HDMI transmission of an MPEG-H audio stream between devices is defined in the IEC 61937-13 standard.

Fig.: implementing MPEG-H UI handling in a TV and MPEG-H decoding on a connected soundbar.

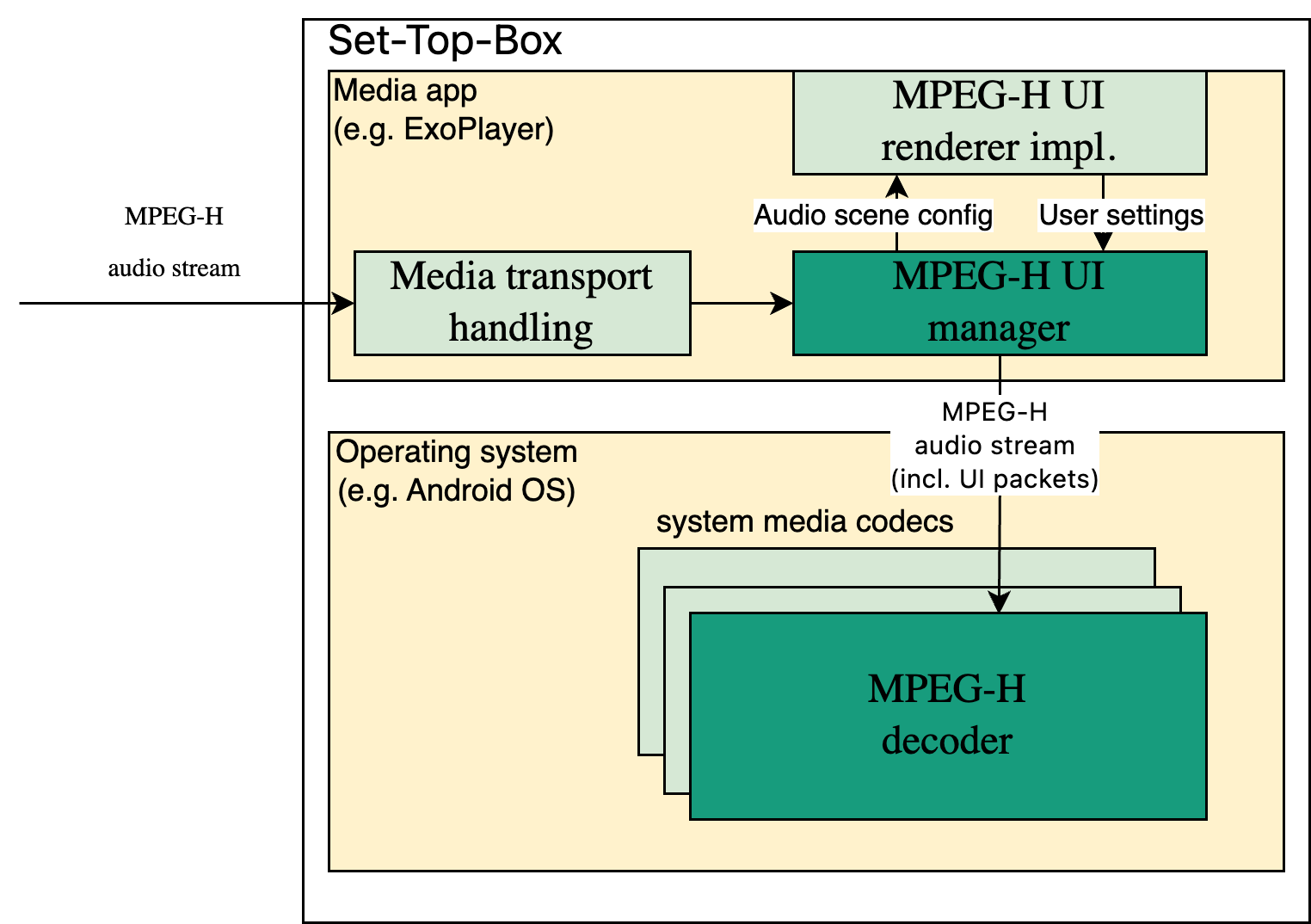

For devices implementing the complete MPEG-H integration (MPEG-H UI handling and MPEG-H decoding on the same device), the MPEG-H UI manager also allows for flexible integration according to the requirements of the system architecture. Consider a device that supports compressed audio decoding and playback at the operating system layer (e.g. Android OS) and handles media transport at the application layer (e.g. ExoPlayer framework). Here the MPEG-H UI manager concept reduces the integration effort: the MPEG-H decoder is integrated at the operating system layer together with the other media codecs, while the MPEG-H UI manager sits at the application layer, alongside the media content transport and the user interface.

Fig.: implementing MPEG-H UI handling on the application layer.

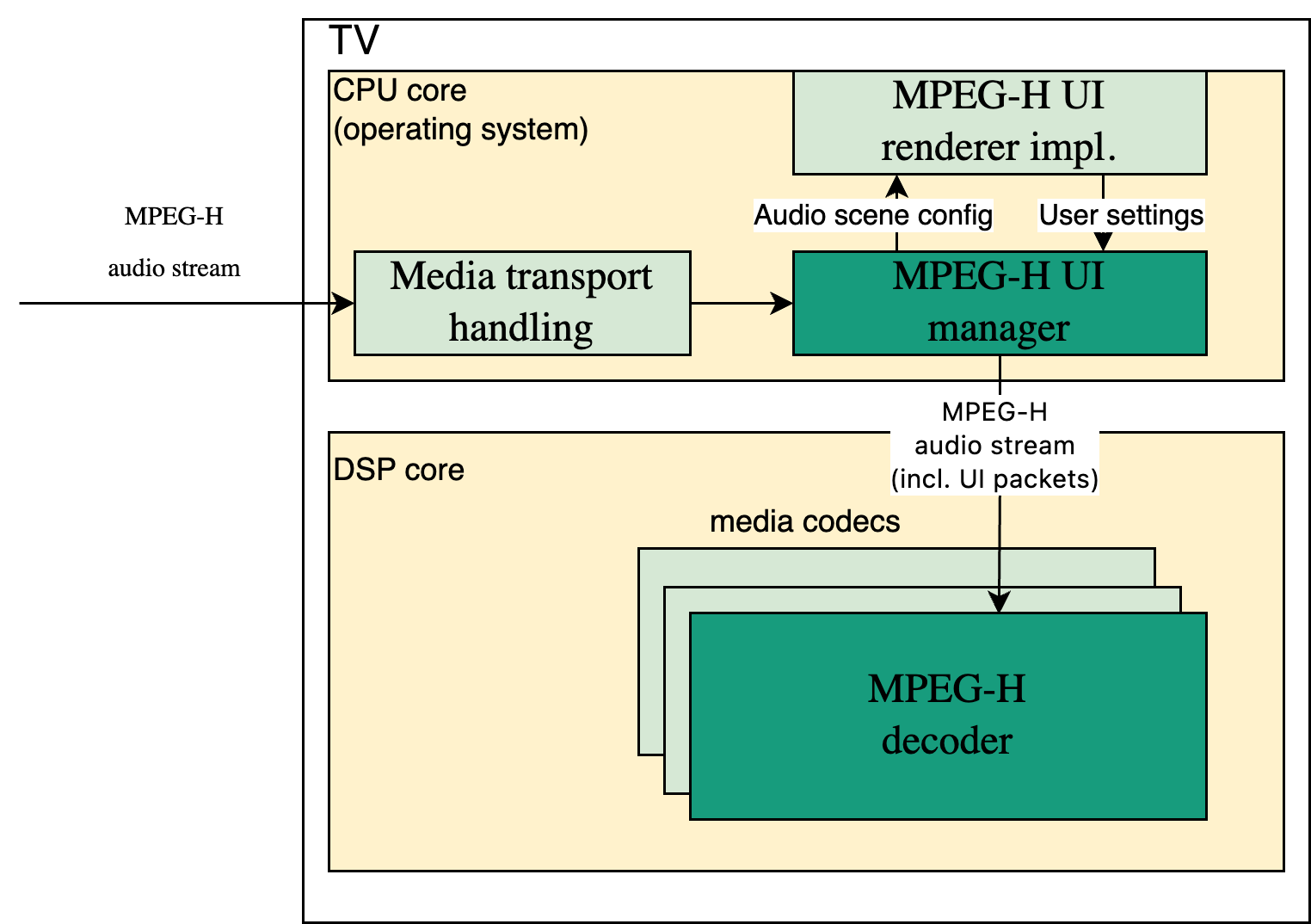

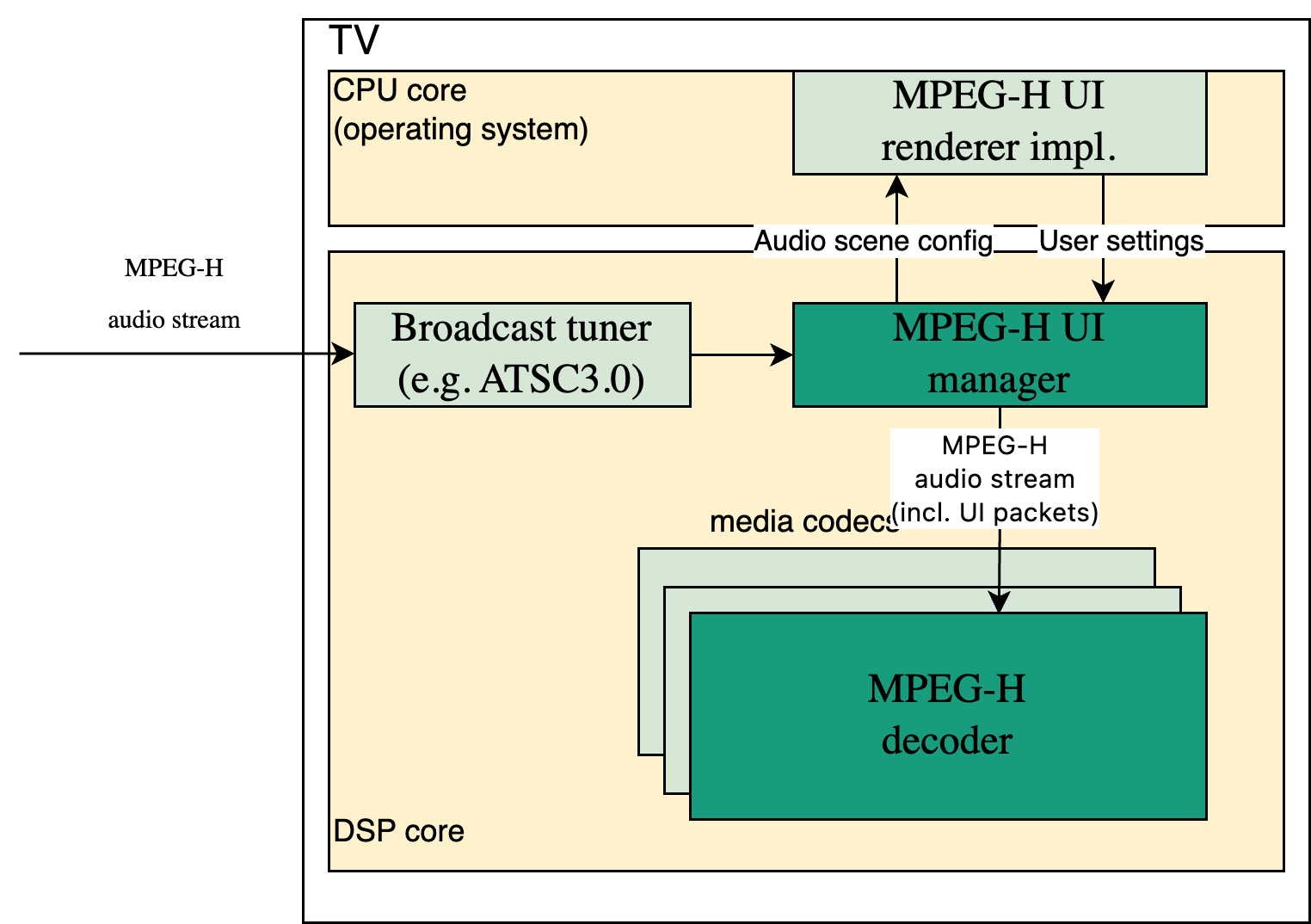

If a CE device platform features a dedicated processor for signal processing tasks (DSP), the MPEG-H UI manager component can be integrated, depending on the system requirements, either on the main CPU core, which runs the operating system, or on a DSP core, which runs the media codecs.

Fig.: implementing MPEG-H UI handling on the CPU core and MPEG-H decoding on the DSP core.

Fig.: implementing MPEG-H UI handling and MPEG-H decoding on the DSP core.

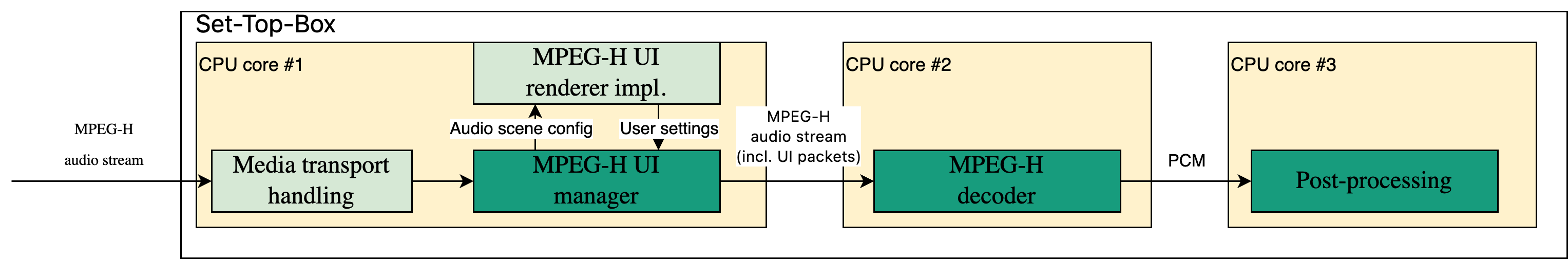

The MPEG-H UI manager concept also eases integration in a multicore environment, where one core is responsible for the UI handling and the other core(s) for MPEG-H decoding and post-processing.

Fig.: implementing MPEG-H UI handling in a multicore environment.

NOTE: the above diagram does not reflect the workload requirements of the MPEG-H components. It only shows one possible split of the MPEG-H components in a multicore environment.

The MPEG-H UI manager has the following interfaces:

- [INPUT] MPEG-H Audio bitstream

- [OUTPUT] processed MPEG-H Audio bitstream incl. inserted MPEG-H UI packets

- [OUTPUT] Audio scene configuration

- [INPUT] User/System settings

For easy interfacing between the MPEG-H UI manager and the MPEG-H UI, a two-way MPEG-H UI XML protocol is defined.

Fig.: MPEG-H UI manager XML interface.

The "audio scene configuration" XML message describes the MPEG-H audio scene in a simple XML format. This XML message contains all required information to implement the MPEG-H UI renderer.

With the "user setting" XML message, the MPEG-H UI renderer implementation forwards the user selection(s) to the MPEG-H UI manager in a simple XML format. This message allows user control of:

- Preset selection

- Selection of an audio object

- Prominence level control of an audio object

- Position control of an audio object

The "user setting" XML format is also used to set system settings, such as:

- Preferred audio language

- Preferred label language

- Selection of device category

- Selection of accessibility mode

- Selection of DRC effect/gains

- Selection of Album mode

The XML format is described in the "MPEG-H UI manager XML format" document.
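The four interfaces listed earlier (bitstream in, processed bitstream out, audio scene configuration out, user/system settings in) can be summarised as an abstract class. This is a hypothetical sketch: the class and method names are invented for illustration and are not the API of any actual MPEG-H UI manager library, and the XML messages are treated as opaque strings because their format is defined in the separate document.

```python
from abc import ABC, abstractmethod


class UiManagerInterface(ABC):
    """Hypothetical shape of the MPEG-H UI manager interfaces."""

    @abstractmethod
    def process(self, bitstream: bytes) -> bytes:
        """[INPUT] bitstream, [OUTPUT] processed bitstream incl. UI packets."""

    @abstractmethod
    def audio_scene_configuration(self) -> str:
        """[OUTPUT] "audio scene configuration" XML message for the renderer."""

    @abstractmethod
    def apply_settings(self, settings_xml: str) -> None:
        """[INPUT] "user setting" XML message with user or system settings."""


class PassThroughUiManager(UiManagerInterface):
    """Stub that forwards the bitstream untouched and only records settings."""

    def __init__(self) -> None:
        self.received_settings: list = []

    def process(self, bitstream: bytes) -> bytes:
        return bitstream  # a real manager would parse and insert UI packets

    def audio_scene_configuration(self) -> str:
        return ""  # a real manager would serialise the extracted ASI

    def apply_settings(self, settings_xml: str) -> None:
        self.received_settings.append(settings_xml)
```

A stub like `PassThroughUiManager` can be useful when wiring up a player pipeline before the real component is available, since the data path through the manager stays the same.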