This report was written June 17th 2019 when the internal version of this tool was being stress- and load-tested at the KB - National Library of the Netherlands.

As of this week, September 23rd 2019, the openjpeg-decoder-service hosted in this GitHub repository is running in production and can be seen in action on, for instance, the Delpher website.

This report documents performance tests run against a REST-service for image processing, employing openjpeg 2.3.1 for decoding .jp2 files.

The KBNLResearch GitHub account hosts an experimental, reusable fork of the openjpeg-decoder-service we tested internally.

The National Library of the Netherlands boasts a large collection of digitized newspapers, books and periodicals. Access images for these main collections are stored in jp2 format in order to manage the scale of the collection. To give an impression, some current production numbers (2019-06-11):

- Newspaper issues: 1,445,947 - resulting in 12,176,671 images, averaging about 7MB in (compressed) size

- Periodical issues: 200,595 - resulting in 5,257,651 images, averaging about 1MB in (compressed) size

- Books: 83,575 - resulting in 12,038,036 images, averaging also about 1MB in (compressed) size
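To put these figures in perspective, a back-of-the-envelope total can be computed from the image counts and the approximate average sizes above. The sketch takes "MB" as decimal megabytes and 1 TB as 1,000,000 MB, so it gives an order of magnitude rather than a measured storage total.

```python
# Back-of-the-envelope totals from the production figures of 2019-06-11.
# Average sizes are the rounded values quoted above, so the result is an
# order-of-magnitude estimate, not a measured storage total.
COLLECTIONS = {
    # name: (number of images, approximate average compressed size in MB)
    "newspapers": (12_176_671, 7),
    "periodicals": (5_257_651, 1),
    "books": (12_038_036, 1),
}


def estimate(collections):
    """Return (total number of images, estimated total size in MB)."""
    total_images = sum(images for images, _ in collections.values())
    total_mb = sum(images * avg_mb for images, avg_mb in collections.values())
    return total_images, total_mb


images, mb = estimate(COLLECTIONS)
print(f"{images:,} images, roughly {mb / 1_000_000:.1f} TB")  # 29,472,358 images, roughly 102.5 TB
```

The newspapers dominate: about 85 TB of the estimate comes from their larger average image size.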

These images and more are exposed by our flagship digitized content website Delpher.

Access images for the web are decoded on the fly in response to client requests.

We are currently migrating our entire software landscape from JBoss Application Server 7 to standalone deployables using Ansible, combined with a migration from Windows server hosting to Red Hat 7 hosting.

This offered the opportunity to test a new solution for the jp2 decoder exposed by our imaging service: openjpeg 2.3.

The National Library of the Netherlands was an early adopter of jp2 technology, then new, at a time when standards for imaging APIs such as IIIF were not yet available. Over a period of approximately 12 years, multiple websites were built as clients of the aforementioned imaging service. This makes adopting a (more) standardized solution such as IIPImage or Cantaloupe less cost-effective than building a backward-compatible API shim for the new .jp2 decoder. To be more future-proof, the new solution also aims to support a recent IIIF Image API version (currently 2.1) at compliance level 1.
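As a rough illustration of the request side of IIIF support, the sketch below splits an Image API 2.x URL path (the part after the service prefix) into its parameters, following the `{identifier}/{region}/{size}/{rotation}/{quality}.{format}` template. It only parses; checking which region, size and rotation forms a level 1 server must accept is left to the specification's compliance rules. The class and function names are hypothetical and not taken from the openjpeg-decoder-service code.

```python
from dataclasses import dataclass
from urllib.parse import unquote


@dataclass
class IIIFImageRequest:
    identifier: str
    region: str    # e.g. "full" or "x,y,w,h"
    size: str      # e.g. "full", "200," or ",300"
    rotation: str  # e.g. "0"
    quality: str   # e.g. "default"
    format: str    # e.g. "jpg"


def parse_iiif_path(path: str) -> IIIFImageRequest:
    """Split {identifier}/{region}/{size}/{rotation}/{quality}.{format}."""
    segments = path.strip("/").split("/")
    if len(segments) != 5:
        raise ValueError(f"expected 5 path segments, got {len(segments)}")
    identifier, region, size, rotation, last = segments
    quality, dot, fmt = last.rpartition(".")
    if not dot or not quality or not fmt:
        raise ValueError("last segment must be {quality}.{format}")
    # A '/' inside an identifier must be sent as %2F, so decode only after splitting.
    return IIIFImageRequest(unquote(identifier), region, size, rotation, quality, fmt)
```

For example, `parse_iiif_path("example-id/full/200,/0/default.jpg")` yields a 200-pixel-wide, unrotated, full-region request for the hypothetical identifier `example-id`.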

The move to open-source was also motivated by the potential of scaling out, without extra licence cost.

Static resources, including the .jp2 files, are still hosted on Windows network shares on SANs in the same physical location as the web services. The software runs on virtual machines, and files are exposed for decoding via network mounts. As our current solution runs in a Windows environment, standard Windows networking can be used. For the new solution we employed cifs+samba to mount the static resources. Network latency is minimized because all networking is virtual. The long-term stability test ('soak'-test) described below was primarily aimed at proving that the cifs+samba mounts remain stable under regular load for longer periods of time.

In the new solution, the decoder is wrapped by a java REST-service which handles cropping, scaling and rotating logic in the same way as the original software.
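Before decoding, the crop, scale and rotate logic comes down to computing the output geometry. A minimal sketch, assuming the region is given in source pixels, the target size as a width only, and rotation in multiples of 90 degrees; the function name and parameters are hypothetical, and this is not the service's actual code.

```python
def output_size(img_w, img_h, region, target_w=None, rotation=0):
    """Compute output dimensions for crop -> scale -> rotate.

    img_w, img_h: dimensions of the full source image in pixels.
    region: (x, y, w, h) in source pixels; clipped at the image edge.
    target_w: requested output width; the height keeps the region's aspect ratio.
    rotation: degrees clockwise, multiples of 90 only in this sketch.
    """
    x, y, w, h = region
    if x < 0 or y < 0 or x >= img_w or y >= img_h or w <= 0 or h <= 0:
        raise ValueError("region does not overlap the image")
    if rotation % 90:
        raise ValueError("only multiples of 90 degrees are supported here")
    # Crop: clip the region to the image bounds.
    w, h = min(w, img_w - x), min(h, img_h - y)
    # Scale: derive the height from the requested width (old w, h on the right).
    if target_w is not None:
        w, h = target_w, max(1, round(h * target_w / w))
    # Rotate: a quarter turn swaps width and height.
    if rotation % 180:
        w, h = h, w
    return w, h
```

Doing the clipping first matters: a region that extends past the image edge would otherwise distort the aspect ratio used for scaling.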

As in the original setup, the decoder service is in turn wrapped by another java REST-service, which has multiple responsibilities:

- resolving file locations based on ID (via a remote resolver REST-service)

- scaling, cropping and rotating of images which are not .jp2

- applying word highlighting based on ALTO XML files (segmentation and OCR data) combined with a query parameter.
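Word highlighting can be sketched as looking up the bounding boxes of matching words in the ALTO file and scaling them to the requested image. This assumes the ALTO coordinates are pixels of the full-resolution image (ALTO also allows other measurement units) and uses simple case-insensitive whole-word matching; the function name is hypothetical and the real service's matching rules are not described in this report.

```python
import xml.etree.ElementTree as ET


def highlight_boxes(alto_xml, query, scale=1.0):
    """Return (x, y, w, h) boxes for ALTO <String> elements matching a query word.

    Assumes ALTO coordinates are pixels of the full-resolution image and that
    `scale` maps them onto the requested output image (e.g. 0.5 for a 50% view).
    """
    terms = {term.lower() for term in query.split()}
    boxes = []
    for element in ET.fromstring(alto_xml).iter():
        # ALTO versions use different namespaces, so compare the local name only.
        if element.tag.rsplit("}", 1)[-1] != "String":
            continue
        if element.get("CONTENT", "").lower() in terms:
            coords = (float(element.get(a)) for a in ("HPOS", "VPOS", "WIDTH", "HEIGHT"))
            boxes.append(tuple(round(c * scale) for c in coords))
    return boxes
```

The boxes can then be drawn onto the decoded image; for cropped requests they would also need to be shifted by the region's origin.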

Like the current production setup, the proof-of-concept openjpeg environment runs 2 JVMs, each hosting its own service (the decoder and the wrapping imaging service).

Both JVMs were initially assigned 1.5GB of RAM, from a total of 4GB available on the virtual machine.

After memory swapping and out-of-memory exceptions were observed in the first load test, the JVMs were increased to 4GB each and the OS was assigned 12GB of memory.

The virtual machine was outfitted with 4 CPUs.

This entire setup has been tested, and in some cases compared, in an integrated manner: apart from functional unit tests, the separate components were not tested in isolation. The rationale is that the software as a whole should perform comparably to the current solution, and any shortfall in performance should be compensated by scaling out further.

As test data, an access log was taken from a single node of the production environment. It covers the busiest month of 2019 (February). Indicative request rates (requests per second) during peak hours were isolated. Requested images varied greatly in source type and scale: from 100-pixel-wide thumbnails up to full resolution, browser-sized crop areas and full images downscaled to 40%.
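Isolating peak-hour request rates from an access log can be sketched as counting requests per second and ranking clock hours by volume. The report does not state the log format; this sketch assumes Apache/nginx common or combined log timestamps such as `[10/Feb/2019:13:55:36 +0100]` and an English/C locale for the month abbreviation. Function names are hypothetical.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed timestamp layout of the common/combined log format.
TS = re.compile(r"\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2}) [+-]\d{4}\]")


def requests_per_second(lines):
    """Count requests per wall-clock second (time zone offset ignored)."""
    per_second = Counter()
    for line in lines:
        match = TS.search(line)
        if match:
            per_second[datetime.strptime(match.group(1), "%d/%b/%Y:%H:%M:%S")] += 1
    return per_second


def busiest_hours(per_second, n=3):
    """Return the n busiest clock hours with their average requests per second."""
    per_hour = Counter()
    for ts, count in per_second.items():
        per_hour[ts.replace(minute=0, second=0)] += count
    return [(hour, total / 3600) for hour, total in per_hour.most_common(n)]
```

The per-second counts also show short bursts that an hourly average hides, which is useful when choosing the rates to replay in a load test.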

Requests with error response statuses were not filtered out, in order to mimic reality as closely as possible.

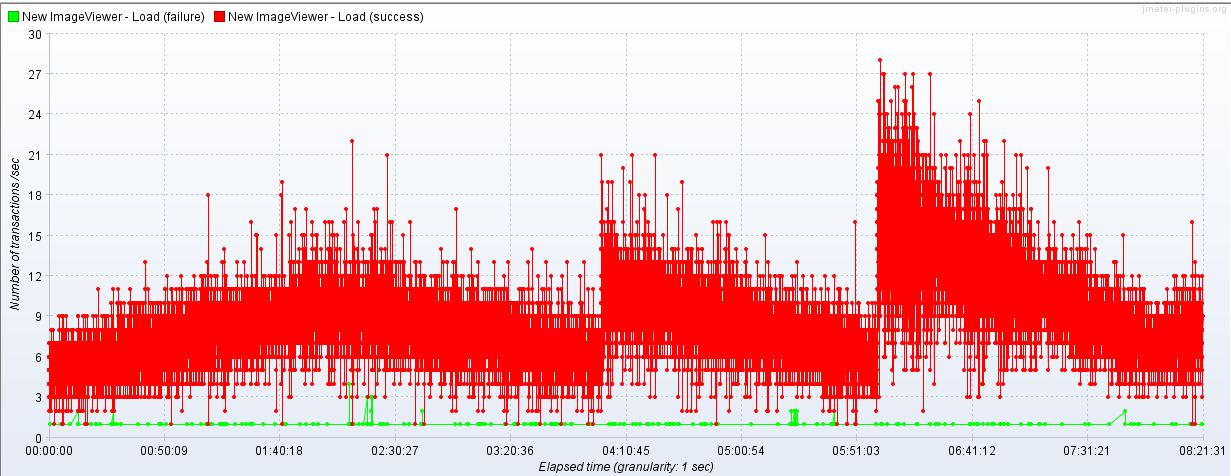

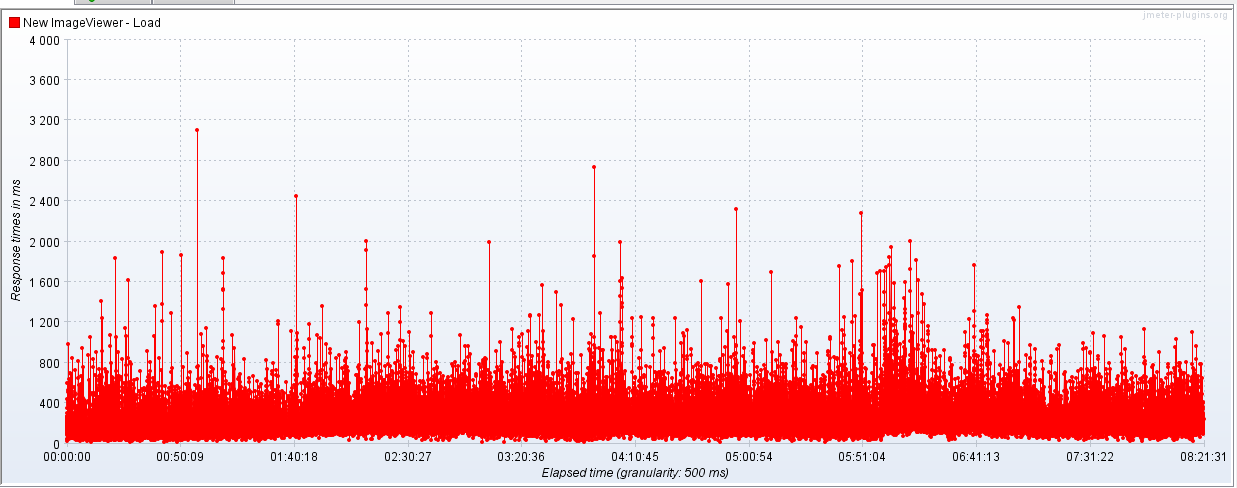

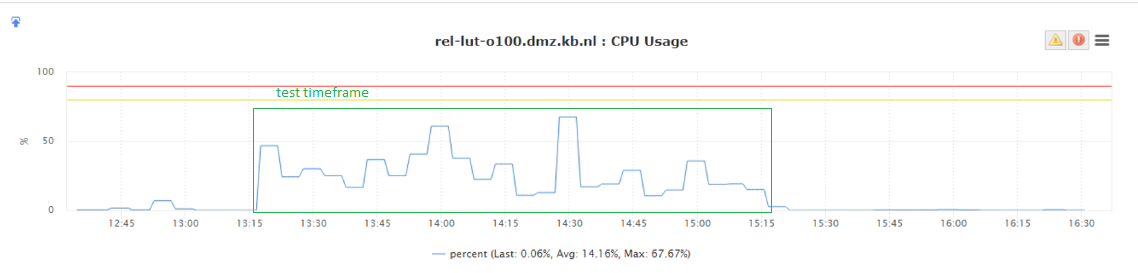

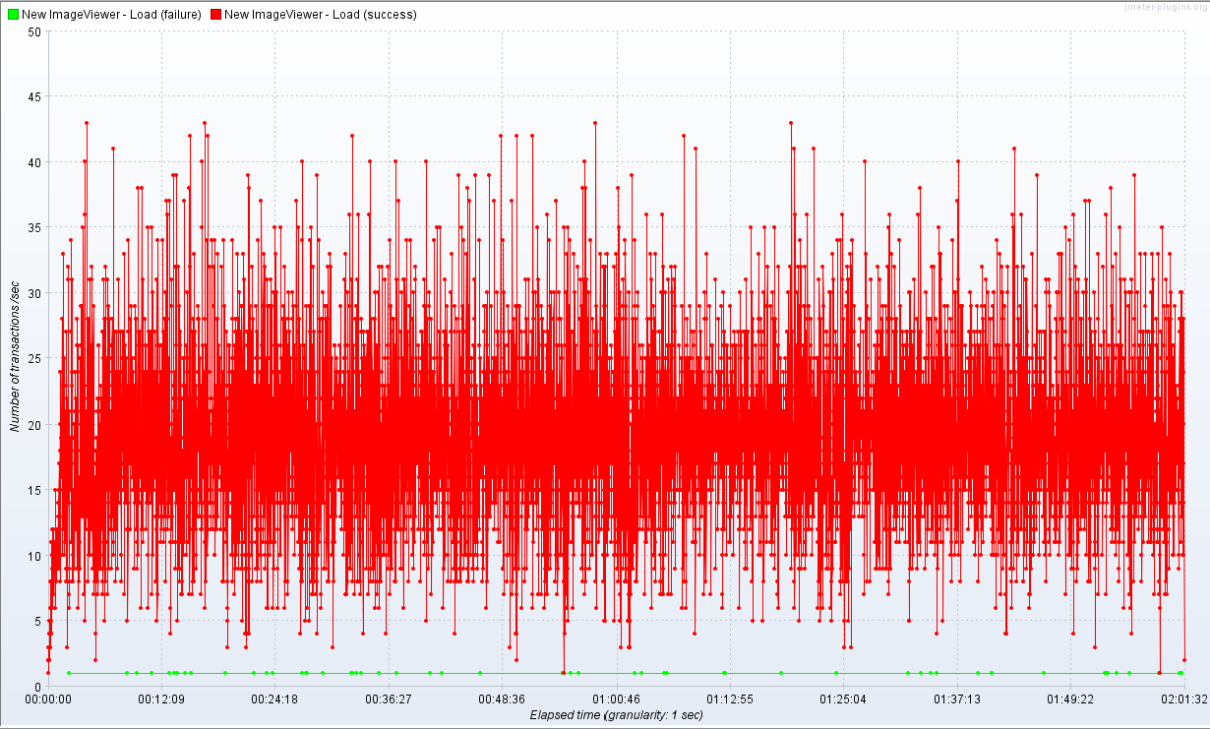

An eight-hour 'soak'-test was run with jmeter against the new openjpeg service, primarily to verify that the cifs+samba mounts remain stable over time.

The first image shows the requests per second (rps) spawned by jmeter:

The second image shows response-times.

Apart from a slight rise in response times once the request rate was ramped up, no worrying measurements were observed.

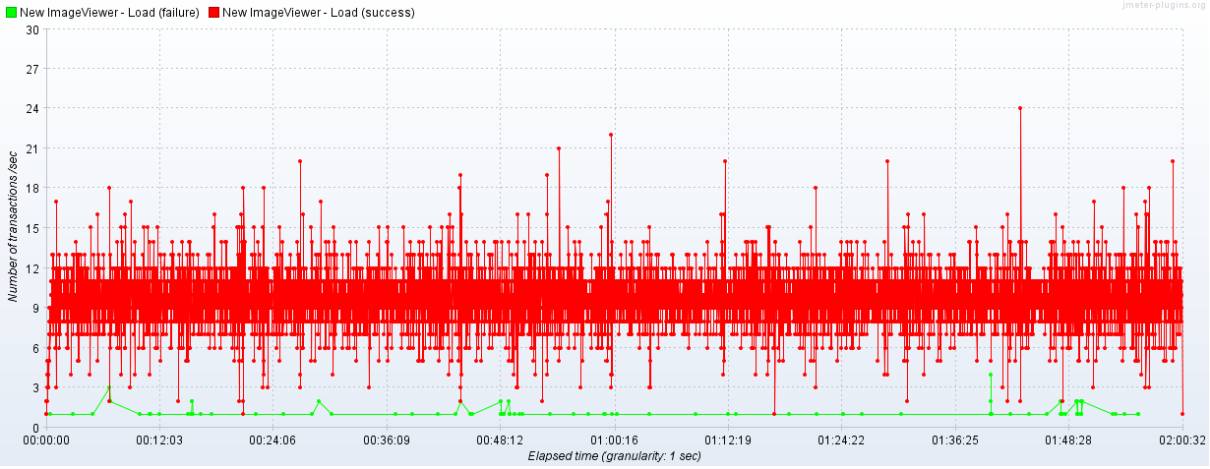

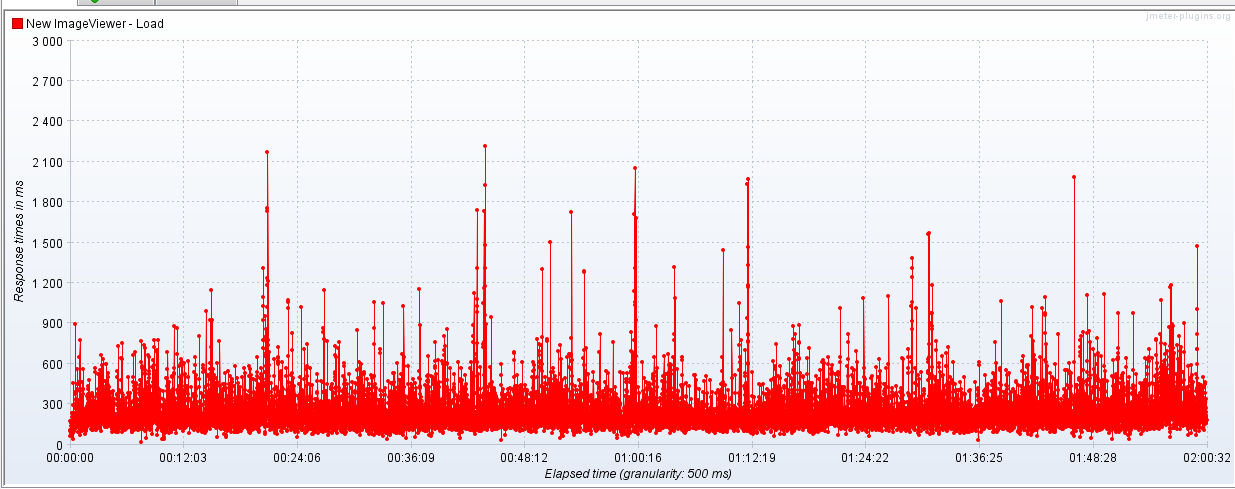

Two 2-hour load tests were performed on the new openjpeg service: one aiming at an average of 10 rps, the other at 20 rps. The first is similar to the load we regularly encounter in our production environment. The second indicated that more memory would be required for stable operation (as mentioned above). However, 20 rps is well above realistic production load.
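Holding a target request rate with a closed-loop load generator such as jmeter depends on response times. By Little's law, the number of requests in flight equals the arrival rate times the time each request spends in the system, so the number of threads a test needs can be estimated as below. The response and think times used here are hypothetical; the report does not state thread counts or mean response times.

```python
import math


def threads_needed(target_rps: float, mean_response_s: float, think_time_s: float = 0.0) -> int:
    """Little's law: concurrency = arrival rate x time per request.

    Each closed-loop thread spends (response time + think time) per request,
    so this many threads are needed to sustain target_rps on average.
    """
    return math.ceil(target_rps * (mean_response_s + think_time_s))
```

With a hypothetical mean response time of 0.5 s, 10 rps needs about 5 threads and 20 rps about 10; if response times degrade to 2 s under load, holding 20 rps needs 40 threads. Conversely, with a fixed thread count the achieved rate drops as the service slows down.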

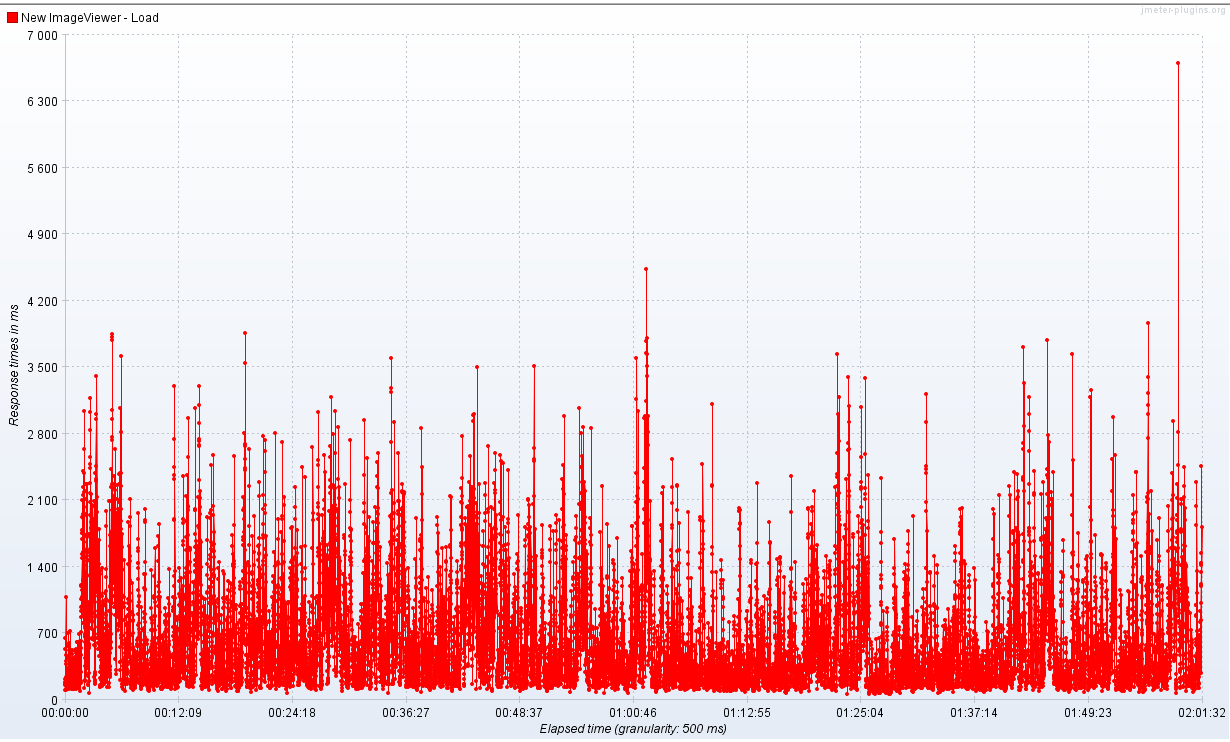

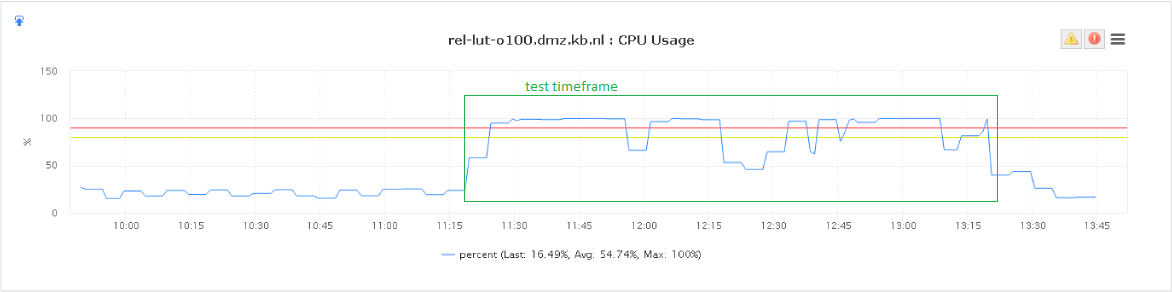

The graphs below show the same measures as the 'soak'-test above: the requests per second spawned by jmeter and the corresponding response times. The third graph in each series shows the CPU usage measured by Nagios. An average of 10 rps kept CPU usage within acceptable bounds. As expected, an average of 20 rps pushed CPU usage beyond our acceptance criteria.

Requests per second (rps) spawned by jmeter averaging 10 rps:

Response times in the corresponding timeframe at 10rps:

CPU load in the corresponding timeframe at 10rps:

Requests per second (rps) spawned by jmeter averaging 20 rps:

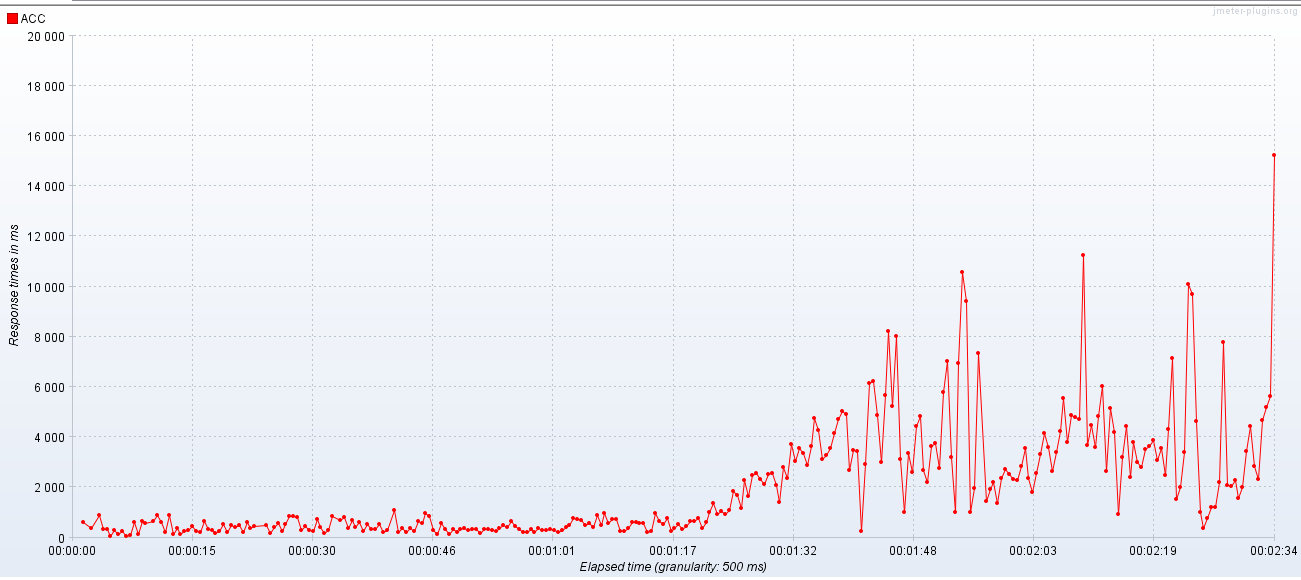

Response times in the corresponding timeframe at 20rps:

CPU load in the corresponding timeframe at 20rps:

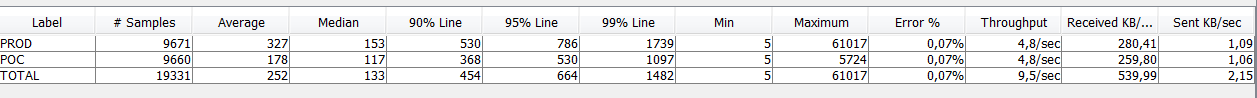

The same 10 rps load test was also run against all 6 production nodes behind the load-balancer, to get an early indication of how their response times compare with those of the new openjpeg solution. The results, which are quite promising, are reported below.

Comparative load-test firing at production first, proof-of-concept openjpeg version second:

Comparative load-test firing at proof-of-concept openjpeg version first, production second:

These numbers suggest that the garbage collection strategy of the JVMs in the production environment may be suboptimal under considerable load, compared to that of the JVMs in the proof-of-concept environment.
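Comparing two environments on response times is more informative with percentiles than with averages alone, since pauses such as garbage collection tend to show up in the tail. A minimal sketch, assuming the response times of each run have been exported as lists of milliseconds (for instance from jmeter's results file); the report does not say which statistics were compared, and the function names are hypothetical.

```python
import statistics


def summarize(samples_ms):
    """Median, 90th and 99th percentile of response times (milliseconds)."""
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": statistics.median(samples_ms), "p90": cuts[89], "p99": cuts[98]}


def compare(baseline_ms, candidate_ms):
    """Ratio candidate/baseline per statistic; below 1.0 means the candidate is faster."""
    base, cand = summarize(baseline_ms), summarize(candidate_ms)
    return {key: cand[key] / base[key] for key in base}
```

Running `compare(production_ms, openjpeg_ms)` on the two runs of the same test would then show whether the proof of concept is faster at the median only or also in the tail.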

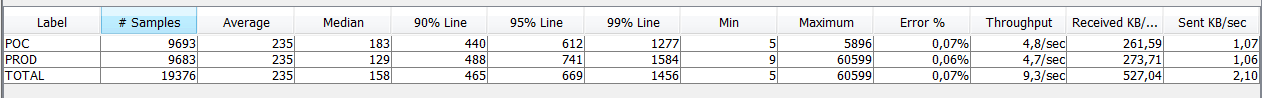

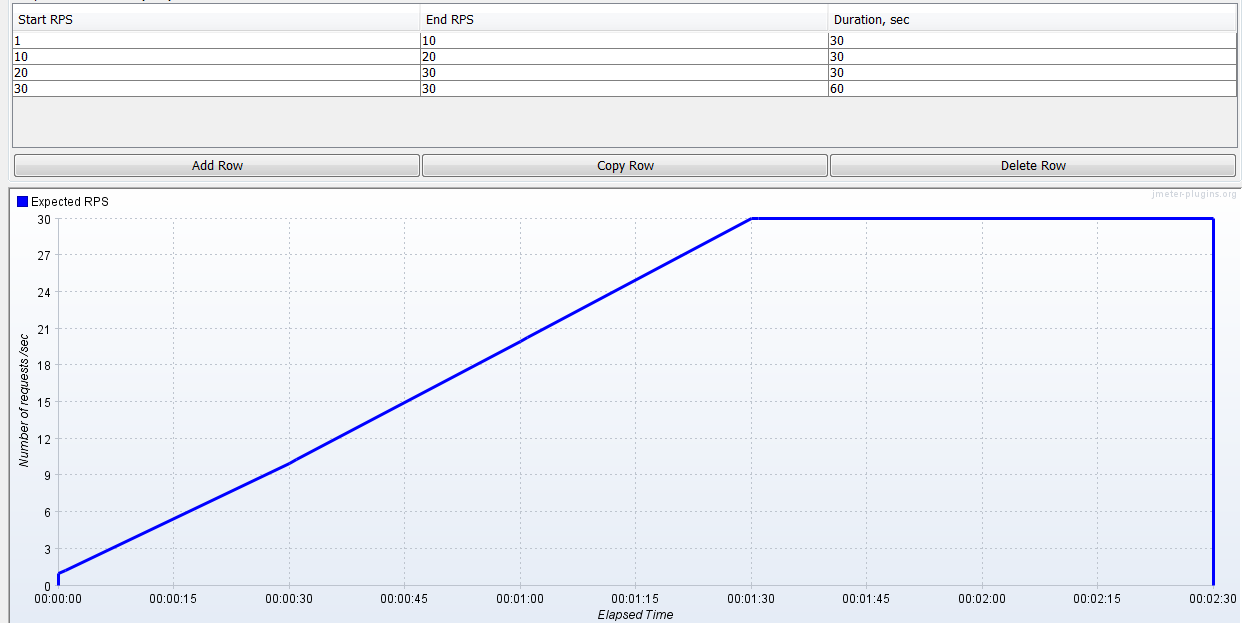

A stress test was run against both the new openjpeg environment and a single node of the original solution with the same memory settings (12GB OS memory / 2x 4GB JVM) and 4 CPUs. The only difference was a load-balancer proxying traffic to the single node of the old solution, due to security restrictions on the server running the old software.

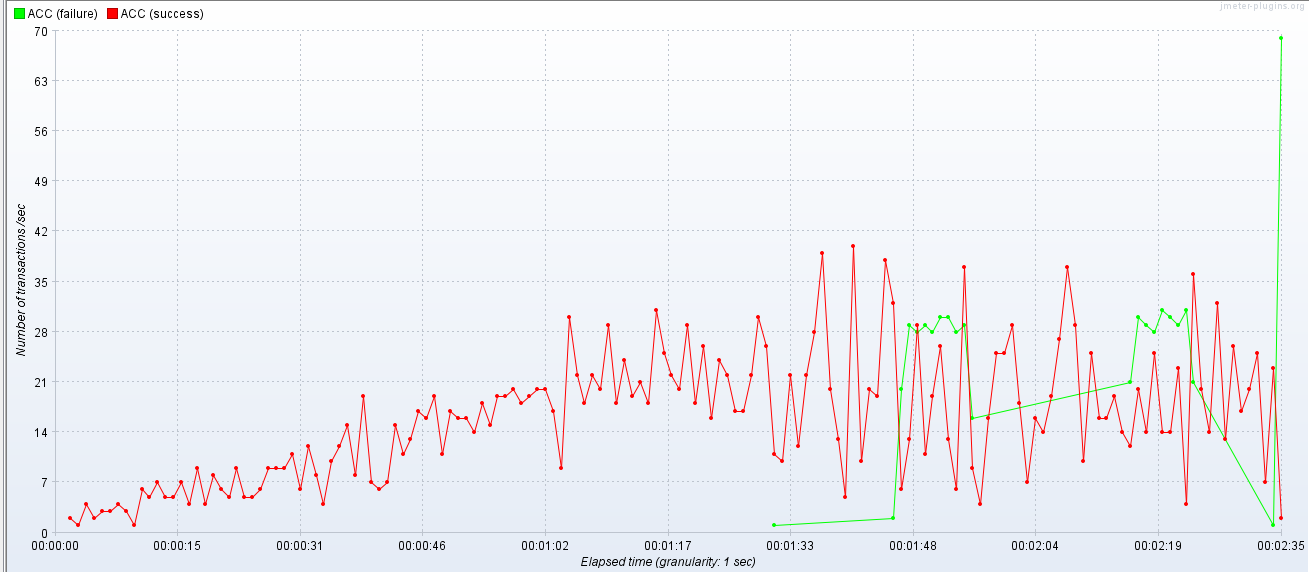

Requests per second (rps) used in the 2:30-minute time window:

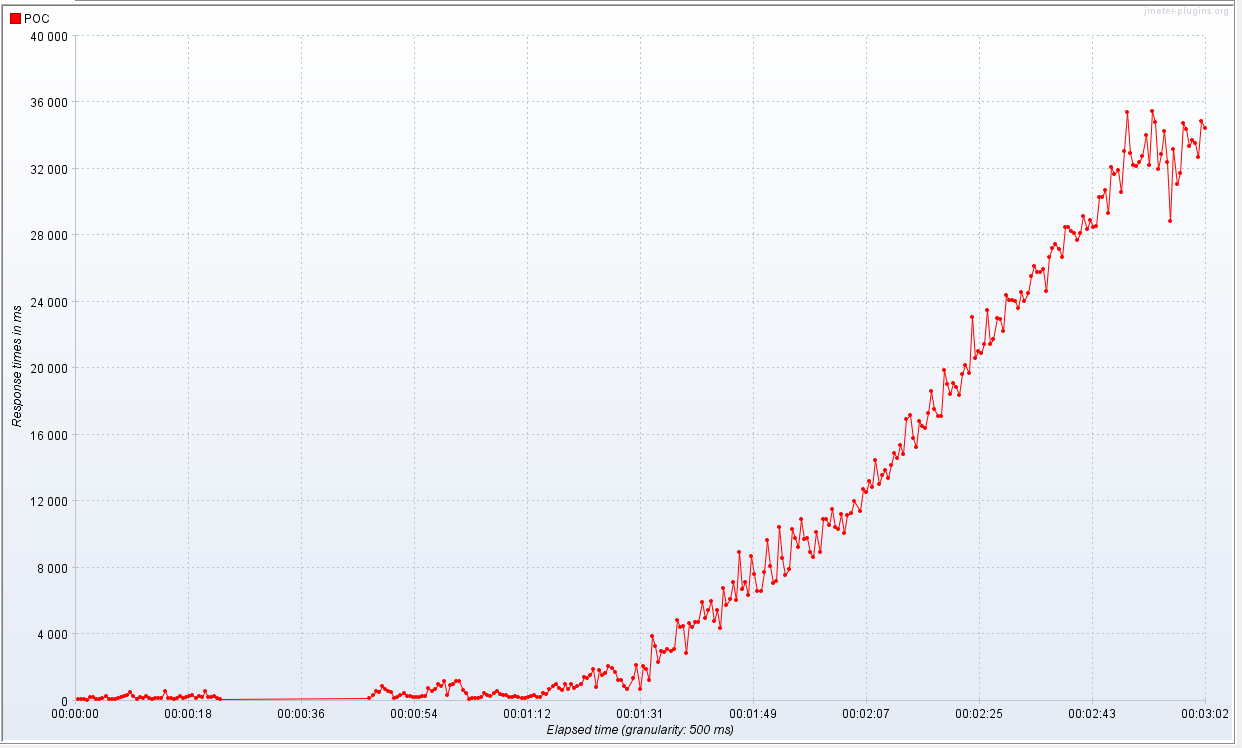

Response times of the new openjpeg solution. Response times rise dramatically from the 1:30 minute mark (at the start of the 30 rps ramp):

Response times of the original solution. Here, response times show a very erratic pattern from the same 1:30 minute mark, due to connection failures reported by the load-balancer, as seen in the following image:

Protective effect of the load-balancer refusing connections under heavy load:

Qualitative comparisons were run using ImageMagick pixel-to-pixel comparison. Next, the image pairs with the highest divergence were compared visually. Even for the few pairs where viewers reported minor visible differences in quality, it was not possible to agree on which decoder had produced the better image. Some pairs could not be compared with ImageMagick because the images differed by one pixel in height or width. Most likely, this deviation was not caused by errors in the image decoders but by minor rounding or truncation differences in the calling code, in cases where a scaling factor had to be translated into an absolute width and height.
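The one-pixel mismatches are easy to reproduce: two callers that translate the same scale factor into absolute dimensions, one rounding and one truncating, can disagree by a pixel. The sketch below shows this for a hypothetical 2479 x 3508 pixel page scaled to 40%, and adds a simplified pixel-difference count in the spirit of ImageMagick's AE (absolute error) metric, which, like ImageMagick's compare, refuses images of different sizes. Images are plain lists of RGB rows to keep the example dependency-free, and the `fuzz` handling here is a per-channel threshold, not ImageMagick's exact fuzz semantics.

```python
import math


def scaled_dims(width, height, factor, rounding):
    """Translate a scale factor into absolute output dimensions."""
    return rounding(width * factor), rounding(height * factor)


def differing_pixels(a, b, fuzz=0):
    """Count pixels where any channel differs by more than fuzz.

    a, b: images as lists of rows of (r, g, b) tuples.
    """
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("image dimensions differ; pixel-to-pixel comparison impossible")
    return sum(
        1
        for ra, rb in zip(a, b)
        for pa, pb in zip(ra, rb)
        if max(abs(ca - cb) for ca, cb in zip(pa, pb)) > fuzz
    )


# Two callers translating the same 40% downscale differently:
print(scaled_dims(2479, 3508, 0.4, round))       # (992, 1403)
print(scaled_dims(2479, 3508, 0.4, math.floor))  # (991, 1403)
```

The 0.6-pixel fractional width is enough to produce a 992-pixel and a 991-pixel image, which a strict pixel-to-pixel comparison then cannot process.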

All tests indicate that the openjpeg decoder alternative stays within our acceptance criteria, as performance is comparable to our production environment.

Based on these results, we are ready to continue with chain integration testing and with testing behind a load-balancer in the test and staging environments.