Mantis is an autonomous differential drive robot with two wheels. Its main processing unit is a Jetson Nano running Ubuntu MATE 20.04 and the ROS 1 (ROS Noetic) middleware. This repository contains ROS driver packages, a ROS Control hardware interface for the real robot, and configurations for simulating Mantis.

*Figure: Gazebo Simulation*

It provides mounts for different camera modules, such as the RealSense D435, and you can even design your own if you like. Different single-board computers (e.g., the NVIDIA Jetson Nano) are supported through two changeable decks.

- `mantis_base`: ROS Control hardware interface, including the `controller_manager` control loop, for the real robot. The `scripts` folder of this package contains the low-level `base_controller` that runs on the Teensy microcontroller.
- `mantis_bringup`: Launch files to bring up the hardware drivers (camera, lidar, IMU, ultrasonic, ...) for the real Mantis robot.
- `mantis_control`: Configurations for the `diff_drive_controller` of ROS Control, used in the Gazebo simulation and on the real robot.
- `mantis_description`: URDF description of Mantis, including its sensors.
- `mantis_gazebo`: Simulation-specific launch and configuration files for Mantis.
- `mantis_msgs`: Message definitions specific to Mantis, for example the message for encoder data.
- `mantis_navigation`: Navigation based on the `move_base` package; launch and configuration files.
- `mantis_slam`: Simultaneous localization and mapping using different implementations (e.g., gmapping) to create a map of the environment.

The required Ubuntu packages are listed in the software package sections of the documentation. Other ROS catkin packages, such as `rplidar_ros`, need to be cloned into the catkin workspace.

For an automated and simplified dependency installation process, install `vcstool`, which is used in the next steps:

```bash
sudo apt install python3-vcstool
```

To build the packages in this repository, including the Remo robot, follow these steps:

- `cd` into an existing ROS Noetic catkin workspace or create a new one:

  ```bash
  mkdir -p catkin_ws/src
  ```

- Clone this repository into the `src` folder of your ROS Noetic catkin workspace:

  ```bash
  cd catkin_ws/src
  git clone https://github.com/Aakash872/mantis
  ```

- Execute the `vcs import` command from the root of the catkin workspace and pipe in the `mantis_dev.repos` or `remo_robot.repos` YAML file to clone the listed dependencies, depending on where you execute the command: on the development PC or on the SBC of Remo. Run the following command only on your development machine:

  ```bash
  cd ..  # back to the root of the catkin workspace
  vcs import < src/mantis/mantis_dev.repos
  ```

- Install the required binary dependencies of all packages in the catkin workspace using the following `rosdep` command:

  ```bash
  rosdep install --from-paths src --ignore-src -r -y
  ```

- After installing the required dependencies, build the catkin workspace, either with `catkin_make`:

  ```bash
  catkin_ws$ catkin_make
  ```

  or using catkin-tools:

  ```bash
  catkin_ws$ catkin build
  ```

- Finally, source the newly built packages with the `devel/setup.*` script, depending on the shell you use. For bash use:

  ```bash
  catkin_ws$ source devel/setup.bash
  ```

  For zsh use:

  ```bash
  catkin_ws$ source devel/setup.zsh
  ```
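To avoid sourcing the workspace in every new terminal, the source line can be appended to `~/.bashrc` once. This is a minimal sketch that assumes bash and a workspace located at `~/catkin_ws`; adjust the path if your workspace lives elsewhere.

```bash
# Assumption: the workspace is at ~/catkin_ws. The tilde is kept literal here
# and expanded by bash each time ~/.bashrc is read.
line='source ~/catkin_ws/devel/setup.bash'

# Append the line only if it is not already present, so re-running is harmless.
grep -qxF "$line" ~/.bashrc 2>/dev/null || echo "$line" >> ~/.bashrc
```

For zsh, the same approach works with `devel/setup.zsh` and `~/.zshrc`.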

The following sections describe how to run the robot simulation and how to make use of the real hardware using the available package launch files.

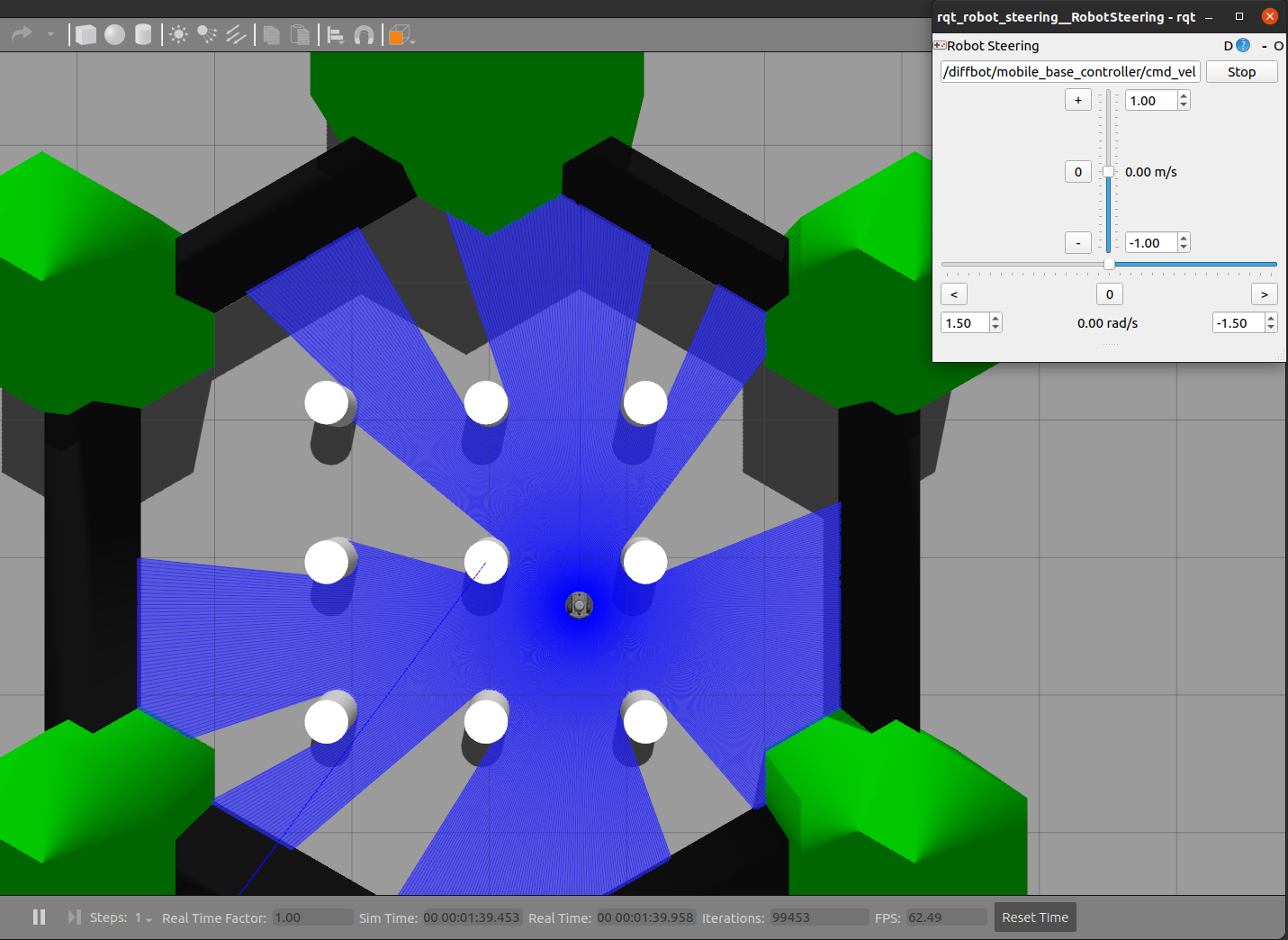

Control the robot inside Gazebo and view what it sees in RViz using the following launch file:

```bash
roslaunch mantis_control mantis.launch
```

This will launch the default Mantis world, `turtlebot3_world.world`.

*Figure: turtlebot3_world.world*

To navigate the robot in the Gazebo simulator in `turtlebot3_world.world`, run the command:

```bash
roslaunch mantis_navigation mantis.launch
```

This uses a previously recorded map of `turtlebot3_world.world` (found in `mantis_navigation/maps`) that is served by the `map_server`. With this, you can use the "2D Nav Goal" tool in RViz directly to let the robot drive autonomously in `turtlebot3_world.world`.

To map a new simulated environment using slam gmapping, first run

```bash
roslaunch mantis_gazebo mantis.launch world_name:='$(find mantis_gazebo)/worlds/turtlebot3_world.world'
```

and in a second terminal execute

```bash
roslaunch mantis_slam mantis_slam.launch slam_method:=gmapping
```

Then explore the world with `teleop_twist_keyboard` or with the already launched `rqt_robot_steering` GUI plugin.
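The single quotes around the `world_name` value in the Gazebo launch command above matter: they stop the shell from expanding `$(find mantis_gazebo)` itself (it would run the unrelated Unix `find` command), so `roslaunch` receives the substitution arg as literal text and resolves the package path on its own. The snippet below only illustrates the quoting; it does not launch anything.

```bash
# Single quotes keep the roslaunch substitution arg as literal text.
world='$(find mantis_gazebo)/worlds/turtlebot3_world.world'

# This is exactly the argument roslaunch receives.
printf 'world_name:=%s\n' "$world"
```

A shell-side alternative is `world_name:="$(rospack find mantis_gazebo)/worlds/turtlebot3_world.world"`, where the shell resolves the package path with `rospack` before `roslaunch` starts.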

When you have finished exploring the new world, use the `map_saver` node from the `map_server` package to store the mapped environment:

```bash
rosrun map_server map_saver -f ~/map
```

This writes the map image `~/map.pgm` and its metadata file `~/map.yaml`.

To run the real robot, start by setting up the ROS network: make the development PC the ROS master and set the `ROS_MASTER_URI` environment variable accordingly on each machine.
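A minimal sketch of that setup, assuming both machines are on the same network. The IP addresses are placeholders, and `ros_net` is a hypothetical helper, not part of this repository. Besides `ROS_MASTER_URI`, it also sets `ROS_HOSTNAME` so that each machine advertises an address the other one can reach (an IP address works there when hostnames do not resolve).

```bash
# Hypothetical helper: point this machine at the ROS master (the development PC)
# and advertise this machine's own address to other ROS nodes.
# Usage: ros_net <this_machine_ip> <master_ip>
ros_net() {
  export ROS_MASTER_URI="http://$2:11311"  # 11311 is the default roscore port
  export ROS_HOSTNAME="$1"
}

# On the development PC (runs roscore), placeholder address 192.168.0.100:
ros_net 192.168.0.100 192.168.0.100

# On the Jetson Nano (placeholder address 192.168.0.101) you would instead run:
#   ros_net 192.168.0.101 192.168.0.100
```

These exports only last for the current terminal; adding the equivalent `export` lines to `~/.bashrc` on each machine makes them persistent.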

Then follow the steps listed below to run the real Mantis or Remo robot hardware:

- First, bring up the robot hardware, including its laser, with the following launch file from the `mantis_bringup` package. Make sure to run this on the real robot (e.g., connect to it via `ssh`):

  ```bash
  roslaunch mantis_bringup bringup_with_laser.launch
  ```

- Then, in a new terminal on your remote/work development machine (not the single-board computer), run slam gmapping with the same command as in the simulation:

  ```bash
  roslaunch mantis_slam mantis_slam.launch slam_method:=gmapping
  ```

  As you can see in the video, this should open up RViz and the `rqt_robot_steering` plugin.

- Next, steer the robot around manually, either with the `keyboard_teleop` node or with the `rqt_robot_steering` node, and when you are done exploring, save the map with the following command:

  ```bash
  rosrun map_server map_saver -f real-world
  ```

After the mapping process, you can use the created map for navigation by running the following launch files:

- On the single-board computer (e.g., the Jetson Nano), make sure that the following is launched:

  ```bash
  roslaunch mantis_bringup bringup_with_laser.launch
  ```

- Then, on the work/remote development machine, run `mantis_hw.launch` from the `mantis_navigation` package:

  ```bash
  roslaunch mantis_navigation mantis_hw.launch
  ```

  Among other essential navigation and map server nodes, this will also launch an instance of RViz on your work PC, where you can use its tools to:

  - Localize the robot with the "2D Pose Estimate" tool (green arrow) in RViz
  - Use the "2D Nav Goal" tool (red arrow) in RViz to send goals to the robot
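Goals can also be sent without RViz. The sketch below publishes one `geometry_msgs/PoseStamped` message on `/move_base_simple/goal`, the topic that RViz's "2D Nav Goal" tool publishes to by default. It assumes `move_base` runs in the root namespace and that the map frame is called `map`; `send_goal` is a hypothetical helper name, not part of this repository.

```bash
# Hypothetical helper: publish a single navigation goal, like RViz's "2D Nav Goal".
# Assumptions: move_base listens on /move_base_simple/goal and the map frame is "map".
# Usage: send_goal <x> <y>   (meters in the map frame; orientation faces +x)
send_goal() {
  local x="$1" y="$2"
  # --once publishes a single message and then exits.
  rostopic pub --once /move_base_simple/goal geometry_msgs/PoseStamped \
    "{header: {frame_id: 'map'}, pose: {position: {x: $x, y: $y, z: 0.0}, orientation: {w: 1.0}}}"
}
```

Defining the function does not contact ROS; only calling it does. After sourcing the workspace and setting `ROS_MASTER_URI`, `send_goal 1.0 0.5` asks the robot to drive to x = 1.0 m, y = 0.5 m in the map frame.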