Architecture

This page outlines the general architecture and design principles of CDOGS. It is mainly intended for a technical audience and for anyone who wants a better understanding of how the system works.

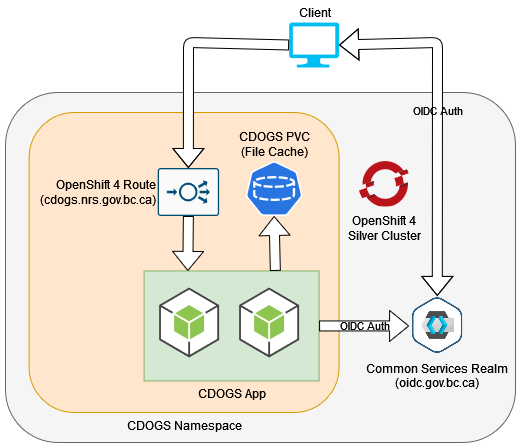

The Common Document Generation Service is designed from the ground up to be a cloud-native containerized microservice. It is intended to be operated within a Kubernetes/OpenShift container ecosystem, where it can dynamically scale based on incoming load and demand. The following diagram provides a general overview of how the three main components relate to one another, and how network traffic generally flows between the components.

Figure 1 - The general infrastructure and network topology of CDOGS

The CDOGS API is designed to be highly available within the OpenShift environment by being a scalable and atomic microservice. On the OCP4 platform, multiple replicas of CDOGS run at once. This allows the service to reliably handle widely varying request volumes and scale resources accordingly.

In general, all network traffic follows the standard OpenShift Route to Service pattern. When a client connects to the CDOGS API, the connection goes through OpenShift's router and load balancer, which then forwards it to one of the CDOGS API pod replicas. Figure 1 shows the general direction of network traffic with thick outlined arrows; the direction of each arrow indicates which component initiates the TCP/IP connection. The CDOGS pods leverage a shared RWX (ReadWriteMany) PVC for file caching purposes. The OpenShift 4 Route and load balancer follow the general default scheduling behavior as defined by Kubernetes.

While CDOGS itself is a relatively small and compact microservice with a very focused approach to handling template requests, not all design choices are self-evident just from inspecting the codebase. The following section will cover some of the main reasons why the code was designed the way it is.

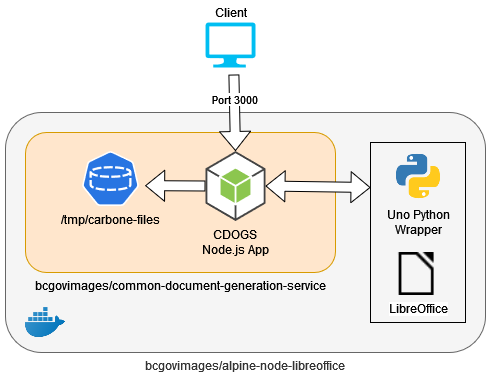

The Common Document Generation Service image consists of a simple node application with a dependency to a certain specified directory to serve as a file cache. The API listens for network traffic on port 3000. The CDOGS image is built on top of the Alpine Node LibreOffice image, which contains an LTS version of Node.js, Uno Python wrapper, and LibreOffice. Figure 2 shows how all these components reside in the Docker image hierarchy.

Figure 2 - The general Docker image hierarchy of CDOGS

To support PDF generation, CDOGS needs to leverage LibreOffice's conversion capabilities. The Carbone library in CDOGS interfaces with LibreOffice through the Uno Python wrapper, which facilitates LibreOffice conversion jobs.

To make sure our application can scale horizontally (run many copies of itself), we had to ensure that all processes in the application are self-contained and atomic. Since we have no guarantee of which pod instance will be handling which task at any given moment, the only thing we can do is ensure that every unit of work is clearly defined and atomic, so that we can prevent deadlocks and duplicate executions.

While implementing horizontal autoscaling is relatively simple using a Horizontal Pod Autoscaler (HPA) construct in OpenShift, we can only take advantage of it if the application is able to handle the different types of lifecycles. Based on usage metrics such as CPU and memory load, the HPA can increase or decrease the number of replicas on the platform in order to meet demand.
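To make the scaling decision concrete, the following is a minimal sketch of the replica calculation described in the Kubernetes HPA documentation, where the desired count is the current count scaled by the ratio of the observed metric to the target metric. The function name, the replica bounds, and the example CPU figures are illustrative assumptions and are not CDOGS configuration.

```javascript
// Sketch of the documented HPA rule:
//   desired = ceil(currentReplicas * currentMetric / targetMetric)
// The bounds and the 10% tolerance used here are illustrative values only.
function desiredReplicas({
  currentReplicas,
  currentMetric,
  targetMetric,
  minReplicas,
  maxReplicas,
  tolerance = 0.1,
}) {
  const ratio = currentMetric / targetMetric;

  // Close enough to the target: keep the replica count unchanged.
  if (Math.abs(ratio - 1) <= tolerance) {
    return currentReplicas;
  }

  // Scale proportionally, then clamp to the allowed range.
  const desired = Math.ceil(currentReplicas * ratio);
  return Math.min(maxReplicas, Math.max(minReplicas, desired));
}

// Hypothetical example: 2 replicas averaging 90% CPU against a 60% target.
const scaledUp = desiredReplicas({
  currentReplicas: 2,
  currentMetric: 90,
  targetMetric: 60,
  minReplicas: 2,
  maxReplicas: 6,
});
console.log(scaledUp); // 3
```

Under these assumed numbers the autoscaler would add one replica. When load falls, the same rule shrinks the count, but never below the configured minimum.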

At this time, CDOGS does not have an HPA policy implemented, as there is not yet enough variable load to justify it. Instead, we currently run a constant 2 replicas, which appears to be sufficient for current demand. While we can expect to increase the CDOGS pod count and immediately see throughput improvements, we still need to evaluate whether some form of round-robin task scheduler is needed to ensure load is distributed evenly across the replicas, above and beyond what our OCP4 load balancer can do for us.

Another factor we will need to consider is whether our current file cache lock implementation will scale with more pods accessing the same PVC. We currently place a semaphore lock on the cache directory whenever a CDOGS instance is about to manipulate files in it. This allows us to achieve read-write integrity in the PVC, but at the cost of potentially stalling other CDOGS pods while they wait for the lock to be released.
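The lock implementation itself is not shown on this page. As a rough illustration of the trade-off, the sketch below uses a lock directory created with `fs.mkdirSync`, which fails with `EEXIST` if another holder already created it. This is a simplified binary lock under assumed names (`tryAcquireLock`, `releaseLock`, `withCacheLock`, and the `.lock` path are all hypothetical), not the CDOGS code, and whether directory creation is reliably exclusive on a shared volume depends on the storage backend.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical helper: take an exclusive lock on the cache directory by
// creating a ".lock" subdirectory. Returns the lock path, or null if busy.
function tryAcquireLock(cacheDir) {
  const lockPath = path.join(cacheDir, '.lock');
  try {
    fs.mkdirSync(lockPath);
    return lockPath;
  } catch (err) {
    if (err.code === 'EEXIST') return null; // another holder has the lock
    throw err;
  }
}

function releaseLock(lockPath) {
  fs.rmdirSync(lockPath);
}

// Run a file manipulation while holding the lock.
// Returns false without running the work if the lock was busy.
function withCacheLock(cacheDir, work) {
  const lockPath = tryAcquireLock(cacheDir);
  if (lockPath === null) return false;
  try {
    work();
    return true;
  } finally {
    releaseLock(lockPath);
  }
}

// Demo: a temporary directory stands in for the shared PVC mount.
const cacheDir = fs.mkdtempSync(path.join(os.tmpdir(), 'cache-demo-'));
const first = tryAcquireLock(cacheDir);  // "pod A" takes the lock
const second = tryAcquireLock(cacheDir); // "pod B" is turned away
releaseLock(first);
const wrote = withCacheLock(cacheDir, () => {
  fs.writeFileSync(path.join(cacheDir, 'template.txt'), 'hello');
});
console.log(first !== null, second === null, wrote);
```

In this sketch the second caller is simply refused. A caller that instead retries until the lock is free is exactly the case where other pods can stall, which is the scaling concern described above.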

Our current limiting factor for scaling CDOGS to higher replica counts is the uncertainty of our file cache semaphore implementation, as well as questions regarding cluster-level network scheduling. With a short burst of requests, it is possible for later requests to become starved and time out because they were unlucky and routed to a CDOGS pod that was busy with a different template at the time. Further exploration will be needed before we can scale more freely.
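The starvation scenario can be illustrated with a small simulation. It assumes, purely for illustration, that each pod works through its requests one at a time and that the load balancer alternates between two pods regardless of how busy they are. All durations, arrival times, and the timeout are made-up numbers, and `simulate` is a hypothetical helper.

```javascript
// Each request: { id, arrival, duration, pod }, with times in seconds.
// Requests must be listed in arrival order.
function simulate(requests, podCount, timeout) {
  const busyUntil = new Array(podCount).fill(0); // when each pod becomes free
  return requests.map((req) => {
    // A request starts once it has arrived and its pod is free.
    const start = Math.max(req.arrival, busyUntil[req.pod]);
    const finish = start + req.duration;
    busyUntil[req.pod] = finish;
    return {
      id: req.id,
      pod: req.pod,
      waited: start - req.arrival,
      timedOut: finish - req.arrival > timeout,
    };
  });
}

// A short burst, alternating between pod 0 and pod 1.
const burst = [
  { id: 'A', arrival: 0, duration: 28, pod: 0 }, // large template lands on pod 0
  { id: 'B', arrival: 1, duration: 2, pod: 1 },
  { id: 'C', arrival: 2, duration: 5, pod: 0 },  // small job queued behind A
  { id: 'D', arrival: 3, duration: 2, pod: 1 },
];

const results = simulate(burst, 2, 30);
for (const r of results) {
  console.log(`${r.id} pod=${r.pod} waited=${r.waited}s timedOut=${r.timedOut}`);
}
```

With these numbers, request C waits 26 seconds behind A on pod 0 and exceeds the 30 second timeout, even though pod 1 has been idle since finishing D. A scheduler that took pod load into account would have sent C to pod 1 instead.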
