| title | tags | status |
|---|---|---|
| Expose Open Policy Agent/Gatekeeper Constraint Violations with Prometheus and Grafana | | draft |

Expose Open Policy Agent/Gatekeeper Constraint Violations for Kubernetes Applications with Prometheus and Grafana

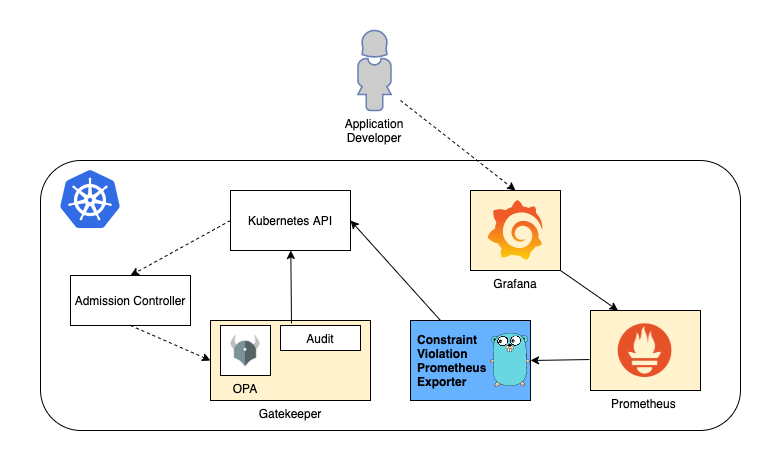

TL;DR: This blog post describes a solution that uses Prometheus and Grafana to give platform users a succinct view of which Gatekeeper constraints are being violated.

Andy Knapp and Murat Celep worked together on this blog post.

Application teams that are just starting to use Kubernetes might find it difficult to get into, as Kubernetes is quite a complex and large ecosystem (see CNCF ecosystem landscape). Moreover, although Kubernetes is starting to mature, it is still being developed very actively and keeps gaining new features at a faster pace than much other enterprise software. On top of that, the integration of a Kubernetes platform into the rest of a company's ecosystem (authentication, authorization, security, network, storage) is tailored to each company's requirements. So even a seasoned Kubernetes expert usually has many things to consider when deploying an application so that it fulfills security, resiliency, and performance requirements. How can you ensure that applications running on Kubernetes keep fulfilling those requirements?

Open Policy Agent (OPA) and its Kubernetes-targeting component Gatekeeper give you the means to enforce policies on Kubernetes clusters. By policies, we mean a formal definition of the rules, best practices, and behavior that you want to see in your company's Kubernetes clusters. With OPA, you write policies in a domain-specific language called Rego. This leaves no room for the misinterpretations that would occur if you tried to explain a policy in free text on your company's internal wiki.

Moreover, when using Gatekeeper, different policies can have different enforcement actions. There might be certain policies that are treated as MUST, whereas other policies are treated as NICE-TO-HAVE. A MUST policy stops a Kubernetes resource from being admitted to a cluster, whereas a NICE-TO-HAVE policy only causes warning messages, which platform users should take note of.

In this blog post, we talk about how you can:

- Apply example OPA policies, so-called Gatekeeper constraints, to K8S clusters

- Expose Prometheus metrics from Gatekeeper constraint violations

- Create a Grafana dashboard to display key information about violations

If you want to read more about enforcing policies in Kubernetes, check out this article.

The goal of the system we put together is to give developers and platform users insights into the OPA constraints that their applications might be violating in a given namespace. We use Grafana to create an example dashboard, and Grafana fetches the data it needs from Prometheus. We've written a small Go program, depicted as 'Constraint Violation Prometheus Exporter' in the diagram above, that queries the Kubernetes API for constraint violations and exposes the data in Prometheus format. In our setup, Gatekeeper/OPA is used in audit mode only; we don't leverage Gatekeeper's capability to deny K8S resources that don't fulfill policy expectations.

Every company has its own set of requirements for applications running on Kubernetes. You might have heard about the production readiness checklist concept: in a nutshell, a checklist of items for your platform users to apply before they deploy an application in production. You will want your own production readiness checklist, expressed in Rego, and the links below might give you a good starting point for creating it:

- Application Readiness Checklist on Tanzu Developer Center

- Production best practices on learnk8s.io

- Kubernetes in Production: Readiness Checklist and Best Practices on replex.io

Bear in mind that you will need to create OPA policies based on your production readiness checklist, and you might not be able to cover every concern in your checklist using OPA/Rego. The goal is to focus on things that are easy to extract from Kubernetes resource definitions, e.g. the number of replicas for a K8S Deployment.

For this blog post, we use the open source project gatekeeper-library, which contains a good set of example constraints. The project structure is also a helpful example of how you can manage OPA constraints for your company. Rego, the language used for writing OPA policies, should be unit tested thoroughly, and the src folder contains the pure Rego files together with their unit tests. The library folder contains the Gatekeeper constraint templates that are created from the Rego files in the src folder. Additionally, each template comes with an example constraint and some target data that produce both positive and negative results for the constraint. Rego-based policies can get quite complex, so in our view Rego unit tests that cover both happy and unhappy paths are a must. We recommend forking this project and removing or adding policies to reflect your company's requirements while following the overall project structure. With this approach, you achieve compliance as code that can easily be applied to various environments.

As mentioned earlier, there might be certain constraints that you don't want to enforce directly (MUST vs NICE-TO-HAVE): for example, on a dev cluster you might not want to enforce >1 replicas, or before enforcing a specific constraint you might want to give platform users enough time to take the necessary precautions (as opposed to blocking their changes immediately). You control this behavior using enforcementAction. By default, enforcementAction is set to deny, which is what we would describe as a MUST condition.

In our example, we install all constraints with the NICE-TO-HAVE condition by using the enforcementAction: dryrun property. This makes sure that we don't directly impact any workload running on K8S clusters (we could also use enforcementAction: warn for this scenario).
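To make the enforcementAction setting concrete, here is a minimal Go sketch that builds a K8sAllowedRepos constraint in dryrun mode and prints it as JSON (which kubectl accepts as well as YAML). The struct types and the helpers `newAllowedReposConstraint` and `effectiveAction` are hypothetical names introduced for illustration, and only the handful of fields discussed here are modelled. The constraint name and allowed repo are taken from the example metric shown later in this post.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Constraint models only the fields of a Gatekeeper constraint used in this sketch.
type Constraint struct {
	APIVersion string   `json:"apiVersion"`
	Kind       string   `json:"kind"`
	Metadata   Metadata `json:"metadata"`
	Spec       Spec     `json:"spec"`
}

type Metadata struct {
	Name string `json:"name"`
}

type Spec struct {
	// When omitted, Gatekeeper falls back to "deny" (the MUST behavior).
	EnforcementAction string              `json:"enforcementAction,omitempty"`
	Match             Match               `json:"match"`
	Parameters        map[string][]string `json:"parameters,omitempty"`
}

type Match struct {
	Kinds []KindSelector `json:"kinds"`
}

type KindSelector struct {
	APIGroups []string `json:"apiGroups"`
	Kinds     []string `json:"kinds"`
}

// newAllowedReposConstraint is a hypothetical helper that builds a
// K8sAllowedRepos constraint for Pods with the given enforcement action.
func newAllowedReposConstraint(action string) Constraint {
	return Constraint{
		APIVersion: "constraints.gatekeeper.sh/v1beta1",
		Kind:       "K8sAllowedRepos",
		Metadata:   Metadata{Name: "repo-is-openpolicyagent"},
		Spec: Spec{
			EnforcementAction: action,
			Match: Match{Kinds: []KindSelector{
				{APIGroups: []string{""}, Kinds: []string{"Pod"}},
			}},
			Parameters: map[string][]string{"repos": {"openpolicyagent"}},
		},
	}
}

// effectiveAction returns the action Gatekeeper applies, including the default.
func effectiveAction(c Constraint) string {
	if c.Spec.EnforcementAction == "" {
		return "deny"
	}
	return c.Spec.EnforcementAction
}

func main() {
	c := newAllowedReposConstraint("dryrun")
	out, err := json.MarshalIndent(c, "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
	fmt.Println("effective action:", effectiveAction(c))
}
```

Passing "warn" or an empty string instead of "dryrun" yields the other two behaviors discussed above; the empty case serializes without the field, so Gatekeeper's deny default applies.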

We decided to use Prometheus and Grafana for gathering and displaying constraint violation metrics, as both are popular, widely adopted open source tools.

For exporting Prometheus metrics, we've written a small program in Go that uses the Prometheus Go client library. This program uses the Kubernetes API to discover all constraints applied to the cluster and exports certain metrics.
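Gatekeeper's audit records the violations it finds in the status.violations field of each constraint object, so one way to read them is to decode that field. The sketch below does this with the standard library only, on a hard-coded sample; a real exporter would fetch the objects through a Kubernetes client instead. The type and function names (`Violation`, `constraintObject`, `parseViolations`) and the sample document are assumptions made for illustration, not the exporter's actual code.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Violation mirrors one entry of a constraint's status.violations list.
type Violation struct {
	EnforcementAction string `json:"enforcementAction"`
	Kind              string `json:"kind"`
	Name              string `json:"name"`
	Namespace         string `json:"namespace"`
	Message           string `json:"message"`
}

// constraintObject decodes only the parts of a constraint needed here.
type constraintObject struct {
	Kind     string `json:"kind"`
	Metadata struct {
		Name string `json:"name"`
	} `json:"metadata"`
	Status struct {
		TotalViolations int         `json:"totalViolations"`
		Violations      []Violation `json:"violations"`
	} `json:"status"`
}

// sampleConstraint is an illustrative constraint as the API might return it.
const sampleConstraint = `{
  "apiVersion": "constraints.gatekeeper.sh/v1beta1",
  "kind": "K8sAllowedRepos",
  "metadata": {"name": "repo-is-openpolicyagent"},
  "status": {
    "totalViolations": 1,
    "violations": [{
      "enforcementAction": "dryrun",
      "kind": "Pod",
      "name": "utils",
      "namespace": "default",
      "message": "container <utils> has an invalid image repo <mcelep/swiss-army-knife>, allowed repos are [\"openpolicyagent\"]"
    }]
  }
}`

// parseViolations extracts the constraint kind, name, and recorded violations.
func parseViolations(raw []byte) (kind, name string, violations []Violation, err error) {
	var obj constraintObject
	if err = json.Unmarshal(raw, &obj); err != nil {
		return "", "", nil, fmt.Errorf("decode constraint: %w", err)
	}
	return obj.Kind, obj.Metadata.Name, obj.Status.Violations, nil
}

func main() {
	kind, name, violations, err := parseViolations([]byte(sampleConstraint))
	if err != nil {
		panic(err)
	}
	for _, v := range violations {
		fmt.Printf("%s/%s: %s %s/%s (%s): %s\n",
			kind, name, v.Kind, v.Namespace, v.Name, v.EnforcementAction, v.Message)
	}
}
```

Each decoded field maps naturally onto one label of the exported metric, which is why the exporter needs nothing beyond the constraint objects themselves.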

Here's an example metric:

opa_scorecard_constraint_violations{kind="K8sAllowedRepos",name="repo-is-openpolicyagent",violating_kind="Pod",violating_name="utils",violating_namespace="default",violation_enforcement="dryrun",violation_msg="container <utils> has an invalid image repo <mcelep/swiss-army-knife>, allowed repos are [\"openpolicyagent\"]"} 1

Labels are used to represent each constraint violation and we will be using these labels later in the Grafana dashboard.
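As a rough illustration of how such a line is formed, the following sketch renders one violation in the Prometheus text exposition format using only the standard library. It is not how the exporter itself does it (the exporter relies on the Prometheus client library, which handles this for you). The names `label`, `metricLine`, and `exampleViolationLine` are hypothetical. The point to notice is the escaping of label values: backslashes, double quotes, and newlines must be escaped, which is why the quotes in the violation message appear as \" above.

```go
package main

import (
	"fmt"
	"strings"
)

// label is one name/value pair attached to a sample.
type label struct {
	name, value string
}

// labelEscaper applies the escaping the text format requires for label values.
var labelEscaper = strings.NewReplacer(`\`, `\\`, "\n", `\n`, `"`, `\"`)

// metricLine renders a single sample line: name{labels} value.
func metricLine(metric string, labels []label, value float64) string {
	parts := make([]string, 0, len(labels))
	for _, l := range labels {
		parts = append(parts, l.name+`="`+labelEscaper.Replace(l.value)+`"`)
	}
	return fmt.Sprintf("%s{%s} %g", metric, strings.Join(parts, ","), value)
}

// exampleViolationLine rebuilds the example metric from this post.
func exampleViolationLine() string {
	return metricLine("opa_scorecard_constraint_violations", []label{
		{"kind", "K8sAllowedRepos"},
		{"name", "repo-is-openpolicyagent"},
		{"violating_kind", "Pod"},
		{"violating_name", "utils"},
		{"violating_namespace", "default"},
		{"violation_enforcement", "dryrun"},
		{"violation_msg", `container <utils> has an invalid image repo <mcelep/swiss-army-knife>, allowed repos are ["openpolicyagent"]`},
	}, 1)
}

func main() {
	fmt.Println(exampleViolationLine())
}
```

Because every violation becomes its own label combination with the value 1, a Grafana panel can count or group violations by any of these labels, for example by violating_namespace.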

The Prometheus exporter program listens on TCP port 9141 by default and exposes metrics on the path /metrics. It can run locally on your development box as long as you have a valid Kubernetes configuration in your home folder (i.e. if you can run kubectl and have the right permissions). When running on the cluster, an incluster parameter is passed in so that it knows where to look up the cluster credentials. The exporter program queries the Kubernetes API every 10 seconds to refresh its data.
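The serving and refresh behavior described above can be sketched with the standard library alone. This is an illustrative skeleton, not the actual exporter: `collect` is a hypothetical stand-in that returns a fixed payload instead of querying the Kubernetes API, and `snapshot`, `refreshLoop`, `newMux`, and `scrape` are names invented here. So that the sketch terminates, `main` exercises the handler in-process; a long-running exporter would pass the same mux to http.ListenAndServe.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync"
	"time"
)

const (
	listenAddr      = ":9141"          // default port named in this post
	metricsPath     = "/metrics"       // path scraped by Prometheus
	refreshInterval = 10 * time.Second // how often violations are re-read
)

// snapshot holds the most recently rendered metrics, safe for concurrent use.
type snapshot struct {
	mu   sync.RWMutex
	body string
}

func (s *snapshot) set(body string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.body = body
}

func (s *snapshot) get() string {
	s.mu.RLock()
	defer s.mu.RUnlock()
	return s.body
}

// collect is a hypothetical stand-in for querying the Kubernetes API.
func collect() string {
	return "opa_scorecard_constraint_violations{kind=\"K8sAllowedRepos\",name=\"repo-is-openpolicyagent\"} 1\n"
}

// refreshLoop re-collects on every tick until stop is closed.
func refreshLoop(s *snapshot, collect func() string, every time.Duration, stop <-chan struct{}) {
	ticker := time.NewTicker(every)
	defer ticker.Stop()
	for {
		select {
		case <-stop:
			return
		case <-ticker.C:
			s.set(collect())
		}
	}
}

// newMux serves the current snapshot on metricsPath.
func newMux(s *snapshot) *http.ServeMux {
	mux := http.NewServeMux()
	mux.HandleFunc(metricsPath, func(w http.ResponseWriter, _ *http.Request) {
		w.Header().Set("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
		fmt.Fprint(w, s.get())
	})
	return mux
}

// scrape performs one in-process GET, as a Prometheus scrape would over HTTP.
func scrape(h http.Handler, path string) (int, string) {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, path, nil))
	return rec.Code, rec.Body.String()
}

func main() {
	s := &snapshot{}
	s.set(collect()) // initial collection so the first scrape is not empty
	stop := make(chan struct{})
	defer close(stop)
	go refreshLoop(s, collect, refreshInterval, stop)

	// A long-running exporter would instead call:
	//   log.Fatal(http.ListenAndServe(listenAddr, newMux(s)))
	code, body := scrape(newMux(s), metricsPath)
	fmt.Println(code)
	fmt.Print(body)
}
```

Serving a cached snapshot keeps scrapes cheap and decouples the Prometheus scrape interval from the load placed on the Kubernetes API; whether the actual exporter caches this way is an assumption of the sketch.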

We've used this blog post as the base for the code.