Linting and Testing

For the latest code-coverage information, see codecov.io. For the latest benchmark results, see the benchmark results wiki page.

Currently, flowR contains two testing suites: one for functionality and one for performance. We explain each of them in the following.

In addition to running those tests, you can use the more generalized npm run checkup. This will include the construction of the docker image, the generation of the wiki pages, and the linter.

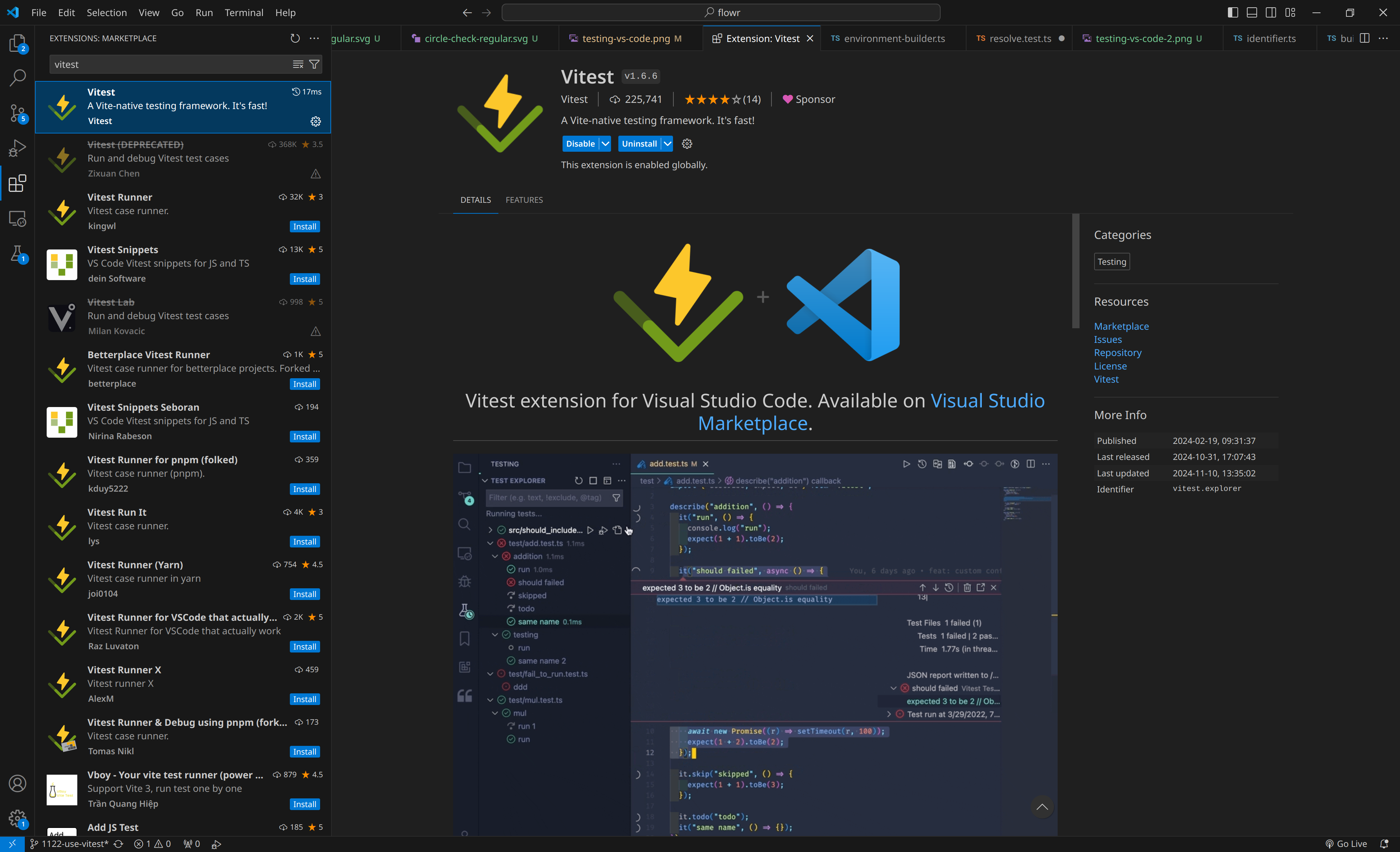

The functionality tests represent conventional unit (and depending on your terminology component/api) tests. We use vitest as our testing framework. You can run the tests by issuing:

    npm run test

Within the command line, this should automatically drop you into a watch mode which will automatically re-run the tests if you change the code.

If at any point there are too many errors, you can use --bail=<value> to stop the tests after a certain number of errors.

For example:

    npm run test -- --bail=1

If you want to run the tests without the watch mode, you can use:

    npm run test -- --no-watch

To run all tests, including a coverage report and label summary, run:

    npm run test-full

However, depending on your local R version, your network connection and potentially other factors, some tests may be skipped automatically as they don't apply to your current system setup (or can't be tested with the current prerequisites).

Each test can specify such requirements as part of the TestConfiguration, which is then used in the test.skipIf function of vitest.

It is up to the CI to run the tests on different systems so that those tests are actually executed somewhere.
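As a rough illustration of how such requirements could be turned into a skip condition, consider the sketch below. The `Requirements` and `SystemInfo` shapes and both function names are hypothetical and only serve the example; the actual fields of TestConfiguration may differ.

```typescript
// Hypothetical requirement fields; the real TestConfiguration may look different.
interface Requirements {
    /** minimum R version the test needs, e.g. '4.1.0' */
    minRVersion?: string;
    /** whether the test needs network access */
    needsNetwork?: boolean;
}

// Hypothetical description of the machine the tests run on.
interface SystemInfo {
    rVersion: string;
    hasNetwork: boolean;
}

/** Compares dotted version strings numerically; the result is negative if a < b. */
function compareVersions(a: string, b: string): number {
    const pa = a.split('.').map(Number);
    const pb = b.split('.').map(Number);
    for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
        const diff = (pa[i] ?? 0) - (pb[i] ?? 0);
        if (diff !== 0) {
            return diff;
        }
    }
    return 0;
}

/** Returns true if the test cannot run on the given system and should be skipped. */
function shouldSkip(req: Requirements, system: SystemInfo): boolean {
    if (req.minRVersion !== undefined && compareVersions(system.rVersion, req.minRVersion) < 0) {
        return true;
    }
    if (req.needsNetwork === true && !system.hasNetwork) {
        return true;
    }
    return false;
}
```

With vitest, the resulting boolean could then be passed along the lines of `test.skipIf(shouldSkip(requirements, system))('my test', () => { /* ... */ })`.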

All functionality tests are to be located under test/functionality.

This folder contains three special and important elements:

- test-setup, which is the entry point if all tests are run. It should automatically disable logging statements and configure global variables (e.g., if installation tests should run).
- _helper, which contains helper functions to be used by other tests.
- test-summary, which may produce a summary of the covered capabilities.

We name all tests using the .test.ts suffix and try to run them in parallel.

Whenever this is not possible (e.g., when using withShell), please use describe.sequential to disable parallel execution for the respective test.

Currently, how to write a test depends heavily on what you want to test (normalization, dataflow, quad-export, ...), and it is probably best to have a look at existing tests in that area to get an idea of what convenience functionality is available.

Generally, tests should be labeled according to the flowR capabilities they test. The set of currently supported capabilities and their IDs can be found in ./src/r-bridge/data/data.ts. The resulting labels are used in the test report that is generated as part of the test output. They group tests by the capabilities they test and allow the report to display how many tests ensure that any given capability is properly supported.
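To make the grouping idea concrete, here is a minimal sketch of how a report could count tests per capability. The `LabeledTest` shape and the function name are hypothetical; the capability ids are the ones that appear in the example tests in this document, and the real report generation in flowR may work differently.

```typescript
// Hypothetical shape of a labeled test, used only for this illustration.
interface LabeledTest {
    name: string;
    capabilities: readonly string[];
}

/** Counts, for every capability id, how many tests are labeled with it. */
function countTestsPerCapability(tests: readonly LabeledTest[]): Map<string, number> {
    const counts = new Map<string, number>();
    for (const test of tests) {
        // the Set guards against counting a test twice if it repeats a capability id
        new Set(test.capabilities).forEach(capability => {
            counts.set(capability, (counts.get(capability) ?? 0) + 1);
        });
    }
    return counts;
}

const summary = countTestsPerCapability([
    { name: 'simple variable', capabilities: ['name-normal'] },
    { name: 'without distractors', capabilities: ['numbers', 'name-normal', 'newlines', 'name-escaped'] }
]);
summary.forEach((count, capability) => {
    console.log(`${capability}: ${count} test(s)`);
});
```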

Various helper functions are available to make it easier to write tests with common behaviors, like testing for dataflow, slicing or query results. These can be found in the _helper subdirectory.

For example, an existing test that tests the dataflow graph of a simple variable looks like this:

    assertDataflow(label('simple variable', ['name-normal']), shell,
        'x', emptyGraph().use('0', 'x')
    );

When writing dataflow tests, additional settings can be used to reduce the amount of graph data that needs to be pre-written. Notably:

- expectIsSubgraph indicates that the expected graph is a subgraph, rather than the full graph that the test should generate. The test will then only check if the supplied graph is contained in the result graph, rather than an exact match.
- resolveIdsAsCriterion indicates that the ids given in the expected (sub)graph should be resolved as slicing criteria rather than actual ids. For example, passing 12@a as an id in the expected (sub)graph will cause it to be resolved as the corresponding id.
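Conceptually, a containment check like the one behind expectIsSubgraph can be pictured as follows. This is only a sketch on a heavily simplified, hypothetical graph model (`SimpleGraph`, `Edge` and the ids are made up for illustration); flowR's dataflow graph and its comparison logic carry considerably more information.

```typescript
// Simplified, hypothetical graph model used only for this illustration.
interface Edge {
    from: string;
    to: string;
    type: string;
}

interface SimpleGraph {
    vertices: ReadonlySet<string>;
    edges: readonly Edge[];
}

/** Returns true if every vertex and every edge of `expected` also occurs in `actual`. */
function isSubgraph(expected: SimpleGraph, actual: SimpleGraph): boolean {
    const allVerticesPresent = Array.from(expected.vertices).every(v => actual.vertices.has(v));
    if (!allVerticesPresent) {
        return false;
    }
    return expected.edges.every(e =>
        actual.edges.some(a => a.from === e.from && a.to === e.to && a.type === e.type)
    );
}

// The full result graph contains more than the part we care about ...
const resultGraph: SimpleGraph = {
    vertices: new Set(['0', '1', '3']),
    edges: [
        { from: '0', to: '1', type: 'defined-by' },
        { from: '3', to: '0', type: 'reads' }
    ]
};
// ... so the expectation only lists the use and the edge it reads from.
const expectedGraph: SimpleGraph = {
    vertices: new Set(['0', '3']),
    edges: [{ from: '3', to: '0', type: 'reads' }]
};
console.log(isSubgraph(expectedGraph, resultGraph));
```

An exact-match comparison would additionally require the reverse direction to hold, which is why the subgraph mode needs less pre-written graph data.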

The following example shows both in use.

    assertDataflow(label('without distractors', [...OperatorDatabase['<-'].capabilities, 'numbers', 'name-normal', 'newlines', 'name-escaped']),
        shell, '`a` <- 2\na',
        emptyGraph()
            .use('2@a')
            .reads('2@a', '1@`a`'),
        {
            expectIsSubgraph: true,
            resolveIdsAsCriterion: true
        }
    );

To run only some tests, vitest allows you to filter tests.

Besides, you can use the watch mode (with npm run test) to only run tests that are affected by your changes.

The performance test suite of flowR uses several suites to check for variations in the required times for certain steps. Although we measure wall time in the CI (which is subject to rather large variations), it should give a rough idea of the performance of flowR. Furthermore, the respective scripts can be used locally as well. To run them, issue:

    npm run performance-test

See test/performance for more information on the suites, how to run them, and their results. If you are interested in the results of the benchmarks, see the benchmark results wiki page.
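Because wall time varies from run to run, one common way to get a more stable number is to repeat a step and report a robust statistic such as the median. The sketch below illustrates that general idea only; the function names and the workload are hypothetical, and it is not a description of how the flowR benchmark scripts measure time.

```typescript
/** Returns the median of a non-empty list of numbers. */
function median(values: readonly number[]): number {
    if (values.length === 0) {
        throw new Error('cannot compute the median of an empty list');
    }
    const sorted = [...values].sort((a, b) => a - b);
    const mid = Math.floor(sorted.length / 2);
    return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

/** Runs `step` the given number of times and returns the wall time of each run in milliseconds. */
function measureWallTimes(step: () => unknown, runs: number): number[] {
    const times: number[] = [];
    for (let i = 0; i < runs; i++) {
        const start = Date.now();
        step();
        times.push(Date.now() - start);
    }
    return times;
}

// Hypothetical workload standing in for a real analysis step.
function busyWork(): number {
    let sum = 0;
    for (let i = 0; i < 1_000_000; i++) {
        sum += i % 7;
    }
    return sum;
}

const wallTimes = measureWallTimes(busyWork, 5);
console.log(`median wall time over ${wallTimes.length} runs: ${median(wallTimes)} ms`);
```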

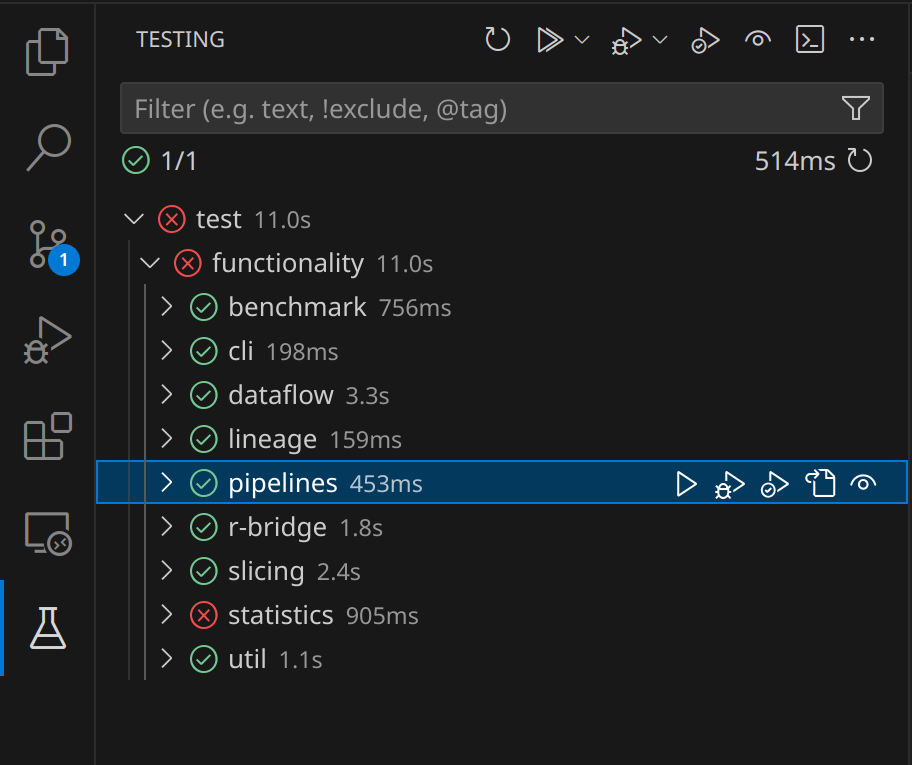

Using the vitest Extension for Visual Studio Code, you can start tests directly from the definition and explore your suite in the Testing tab. To get started, install the vitest Extension.

| Testing Tab | In Code |
|---|---|
| | |

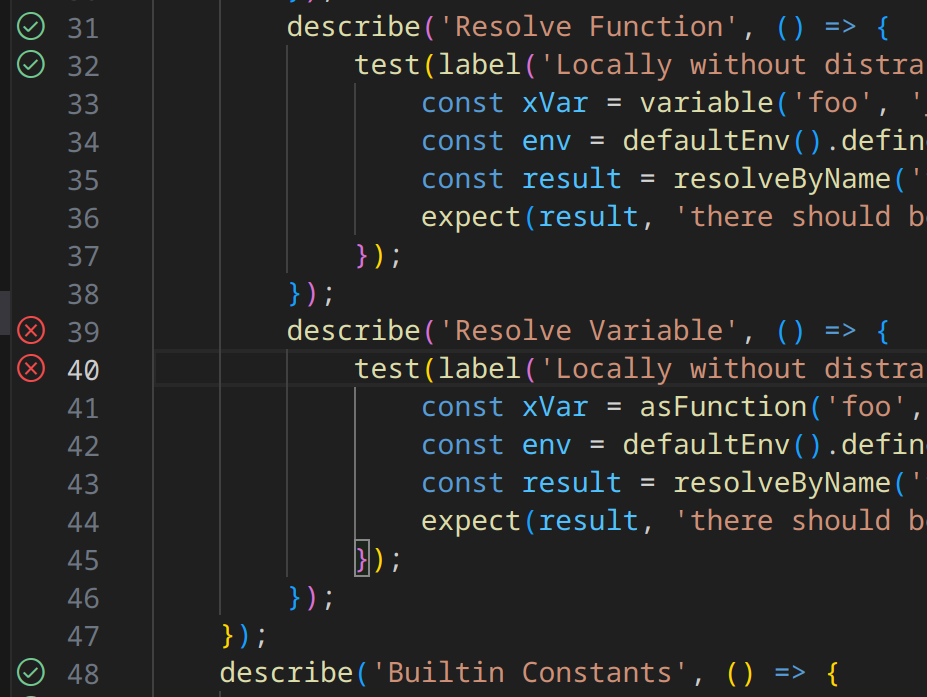

- Left-clicking the icon next to the code will rerun the test. Right-clicking will open a context menu, allowing you to debug the test.

- In the Testing tab, you can run (and debug) all tests, individual suites or individual tests.

Please follow the official guide here.

We have several workflows defined in .github/workflows. We explain the most important workflows in the following:

- qa.yaml is the main workflow that will run different steps depending on several factors. It is responsible for:
    - running the functionality and performance tests
    - uploading the results to the benchmark page for releases
    - running the functionality tests on different operating systems (Windows, macOS, Linux) and with different versions of R
    - reporting code coverage
    - running the linter and reporting its results
    - deploying the documentation to GitHub Pages
- release.yaml is responsible for creating a new release, only to be run by repository owners. Furthermore, it adds the new docker image to docker hub.
- broken-links-and-wiki.yaml repeatedly tests that all links are not dead!

There are two linting scripts. The main one:

    npm run lint

And a weaker version of the first (allowing for todo comments), which is run automatically in the pre-push git hook as explained in CONTRIBUTING.md:

    npm run lint-local

Besides checking coding style (as defined in the package.json), the full linter runs the license checker.

In case you are unaware, eslint can automatically fix several linting problems. So you may be fine by just running:

    npm run lint-local -- --fix

By now, the rules should be rather stable, so if the linter fails, it is usually best to read the respective description (if necessary) and fix the respective problem. Rules in this project cover general JavaScript issues using regular ESLint, TypeScript-specific issues using typescript-eslint, and code formatting with ESLint Stylistic.

However, in case you think that the linter is wrong, please do not hesitate to open a new issue.

flowR is licensed under the GPLv3 License, which requires us to rely only on dependencies with compatible licenses. For now, this list is hardcoded as part of the npm license-compat script, so it can very well be that a new dependency you add causes the checker to fail even though its license is compatible. In that case, please either open a new issue or directly add the license to the list (including a reference to why it is compatible).
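The effect of a hardcoded list can be pictured with the following sketch of an allowlist check. The list contents, the `Dependency` shape and the package names are illustrative assumptions and do not reflect the list actually used by the license-compat script.

```typescript
// Illustrative allowlist only; the list used by the real script may differ.
const reviewedLicenses: ReadonlySet<string> = new Set(['MIT', 'ISC', 'BSD-3-Clause', 'Apache-2.0']);

// Hypothetical description of an installed dependency.
interface Dependency {
    name: string;
    license: string;
}

/**
 * Returns all dependencies whose license is not on the allowlist.
 * Being reported here only means the license has not been reviewed yet,
 * not that it is necessarily incompatible.
 */
function findUnlistedLicenses(deps: readonly Dependency[], allowed: ReadonlySet<string>): Dependency[] {
    return deps.filter(dep => !allowed.has(dep.license));
}

const unlisted = findUnlistedLicenses([
    { name: 'some-lib', license: 'MIT' },          // hypothetical package
    { name: 'another-lib', license: 'BSD-2-Clause' } // hypothetical package
], reviewedLicenses);

for (const dep of unlisted) {
    console.log(`${dep.name} uses ${dep.license}, which is not on the list yet`);
}
```

In this sketch, the second package is reported simply because its license is missing from the list, which mirrors the situation described above where the checker fails for a license that may in fact be compatible.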