FastAPI-based API for transcribing audio files using faster-whisper and NVIDIA NeMo.

More details about this project are available in this blog post.

- 🤗 Open-source: Our project is open-source and based on open-source libraries, allowing you to customize and extend it as needed.

- ⚡ Fast: The faster-whisper library and CTranslate2 make audio processing incredibly fast compared to other implementations.

- 🐳 Easy to deploy: You can deploy the project on your workstation or in the cloud using Docker.

- 🔥 Batch requests: You can transcribe multiple audio files at once because batch requests are implemented in the API.

- 💸 Cost-effective: Because the project is open-source, you won't have to pay for costly ASR platforms.

- 🫶 Easy-to-use API: With just a few lines of code, you can use the API to transcribe audio files or even YouTube videos.

- Linux (tested on Ubuntu Server 22.04)

- Python 3.9

- Docker

- NVIDIA GPU + NVIDIA Container Toolkit

To learn more about the prerequisites to run the API, check out the Prerequisites section of the blog post.
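As a quick sanity check before building the image, the requirements above can be probed from Python. This is a minimal sketch and not part of the project: the function name `check_prerequisites` is hypothetical, and it only checks that the `docker` and `nvidia-smi` executables are on the PATH. It does not verify that the NVIDIA Container Toolkit is installed or that the GPU is usable from a container.

```python
import platform
import shutil
import sys


def check_prerequisites() -> dict:
    """Return a mapping of requirement name to whether it looks satisfied.

    Hypothetical helper: the checks are deliberately shallow.
    """
    return {
        # The requirements list Linux (tested on Ubuntu Server 22.04).
        "linux": platform.system() == "Linux",
        # The requirements list Python 3.9; other versions are reported as False.
        "python_3_9": sys.version_info[:2] == (3, 9),
        # Only checks that the executables are on PATH, not that they work.
        "docker": shutil.which("docker") is not None,
        "nvidia_smi": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A `False` entry is a hint to revisit the matching requirement, not proof that the API cannot run.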

Build the image.

docker build -t wordcab-transcribe:latest .

Run the container.

docker run -d --name wordcab-transcribe \
  --gpus all \
  --shm-size 1g \
  --restart unless-stopped \
  -p 5001:5001 \
  -v ~/.cache:/root/.cache \
  wordcab-transcribe:latest

You can mount a volume to the container to load local whisper models.

If you mount a volume, you need to update the WHISPER_MODEL environment variable in the .env file.
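Updating the variable can be scripted. The sketch below rewrites a `KEY=value` line in a dotenv-style file, or appends it if the key is absent. The helper name `set_env_var` is hypothetical, and the value shown in the usage comment is only a placeholder: this README does not state what format `WHISPER_MODEL` expects, so the assumption that it takes the path inside the container should be checked against the project's `.env` file.

```python
from pathlib import Path


def set_env_var(env_path: str, key: str, value: str) -> None:
    """Set KEY=value in a dotenv-style file, replacing an existing line if present."""
    path = Path(env_path)
    lines = path.read_text(encoding="utf-8").splitlines() if path.exists() else []
    new_line = f"{key}={value}"
    replaced = False
    for i, line in enumerate(lines):
        # Match "KEY=..." ignoring leading spaces; commented-out lines are left alone.
        if line.strip().startswith(f"{key}="):
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")


# Example usage (placeholder value, assumed to be the in-container path):
# set_env_var(".env", "WHISPER_MODEL", "/app/whisper/models/<your-model-dir>")
```

Because the image is built from the project directory, a change to `.env` may require rebuilding the image before it takes effect; that depends on how the Dockerfile handles the file.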

docker run -d --name wordcab-transcribe \
  --gpus all \
  --shm-size 1g \
  --restart unless-stopped \
  -p 5001:5001 \
  -v ~/.cache:/root/.cache \
  -v /path/to/whisper/models:/app/whisper/models \
  wordcab-transcribe:latest

You can enter the container using the following command:

docker exec -it wordcab-transcribe /bin/bash

This is useful for checking that everything is working as expected.

⏱️ Profile the API

You can profile the process executions using py-spy as a profiler.

# Launch the container with the cap-add=SYS_PTRACE option
docker run -d --name wordcab-transcribe \
  --gpus all \
  --shm-size 1g \
  --restart unless-stopped \
  --cap-add=SYS_PTRACE \
  -p 5001:5001 \
  -v ~/.cache:/root/.cache \
  wordcab-transcribe:latest

# Enter the container
docker exec -it wordcab-transcribe /bin/bash

# Install py-spy
pip install py-spy

# Find the PID of the process to profile
top # 28 for example

# Run the profiler
py-spy record --pid 28 --format speedscope -o profile.speedscope.json

# Launch any task on the API to generate some profiling data

# Exit the container and copy the generated file to your local machine
exit

docker cp wordcab-transcribe:/app/profile.speedscope.json profile.speedscope.json

# Go to https://www.speedscope.app/ and upload the file to visualize the profile

Once the container is running, you can test the API.

The API documentation is available at http://localhost:5001/docs.
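Before sending transcription requests, it can help to confirm that the container is answering. The following is a minimal sketch using only the standard library; the helper name `api_is_up` is hypothetical, and it assumes that a successful GET on the `/docs` page mentioned above is a good enough signal that the server has started.

```python
import http.client
import urllib.error
import urllib.request


def api_is_up(base_url: str = "http://localhost:5001", timeout: float = 5.0) -> bool:
    """Return True if GET {base_url}/docs answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/docs", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

A `True` result only shows that the web server is reachable; it does not confirm that the models are loaded or that the GPU is available.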

- Audio file:

import json

import requests

filepath = "/path/to/audio/file.wav"  # or any other format that ffmpeg can convert

data = {
    "alignment": True,  # Longer processing time but better timestamps
    "diarization": True,  # Longer processing time but speaker segment attribution
    "dual_channel": False,  # Only for stereo audio files with one speaker per channel
    "source_lang": "en",  # optional, default is "en"
    "timestamps": "s",  # optional, default is "s". Can be "s", "ms" or "hms".
    "word_timestamps": False,  # optional, default is False
}

# Send the audio file as a multipart upload along with the options
with open(filepath, "rb") as f:
    files = {"file": f}
    response = requests.post(
        "http://localhost:5001/api/v1/audio",
        files=files,
        data=data,
    )

r_json = response.json()

# Save the response next to the audio file, e.g. /path/to/audio/file.json
filename = filepath.split(".")[0]
with open(f"{filename}.json", "w", encoding="utf-8") as f:
    json.dump(r_json, f, indent=4, ensure_ascii=False)

- YouTube video:

import json

import requests

headers = {"accept": "application/json", "Content-Type": "application/json"}

params = {"url": "https://youtu.be/JZ696sbfPHs"}

data = {
    "alignment": True,  # Longer processing time but better timestamps
    "diarization": True,  # Longer processing time but speaker segment attribution
    "source_lang": "en",  # optional, default is "en"
    "timestamps": "s",  # optional, default is "s". Can be "s", "ms" or "hms".
    "word_timestamps": False,  # optional, default is False
}

response = requests.post(
    "http://localhost:5001/api/v1/youtube",
    headers=headers,
    params=params,
    data=json.dumps(data),
)

r_json = response.json()

with open("youtube_video_output.json", "w", encoding="utf-8") as f:
    json.dump(r_json, f, indent=4, ensure_ascii=False)

Before launching the API, be sure to install torch and torchaudio on your machine.

pip install --upgrade torch==1.13.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117

Then, you can launch the API using the following command.

poetry run uvicorn wordcab_transcribe.main:app --reload

- Ensure you have the following tools:

- poetry

- nox and nox-poetry

- Clone the repo

git clone

cd wordcab-transcribe

- Install dependencies and start coding

poetry shell

poetry install --no-cache

# install pre-commit hooks

nox --session=pre-commit -- install

# open your IDE

code .

- Run tests

# run all tests

nox

# run a specific session

nox --session=tests # run tests

nox --session=pre-commit # run pre-commit hooks

# run a specific test

nox --session=tests -- -k test_something

- Create an issue for the feature or bug you want to work on.

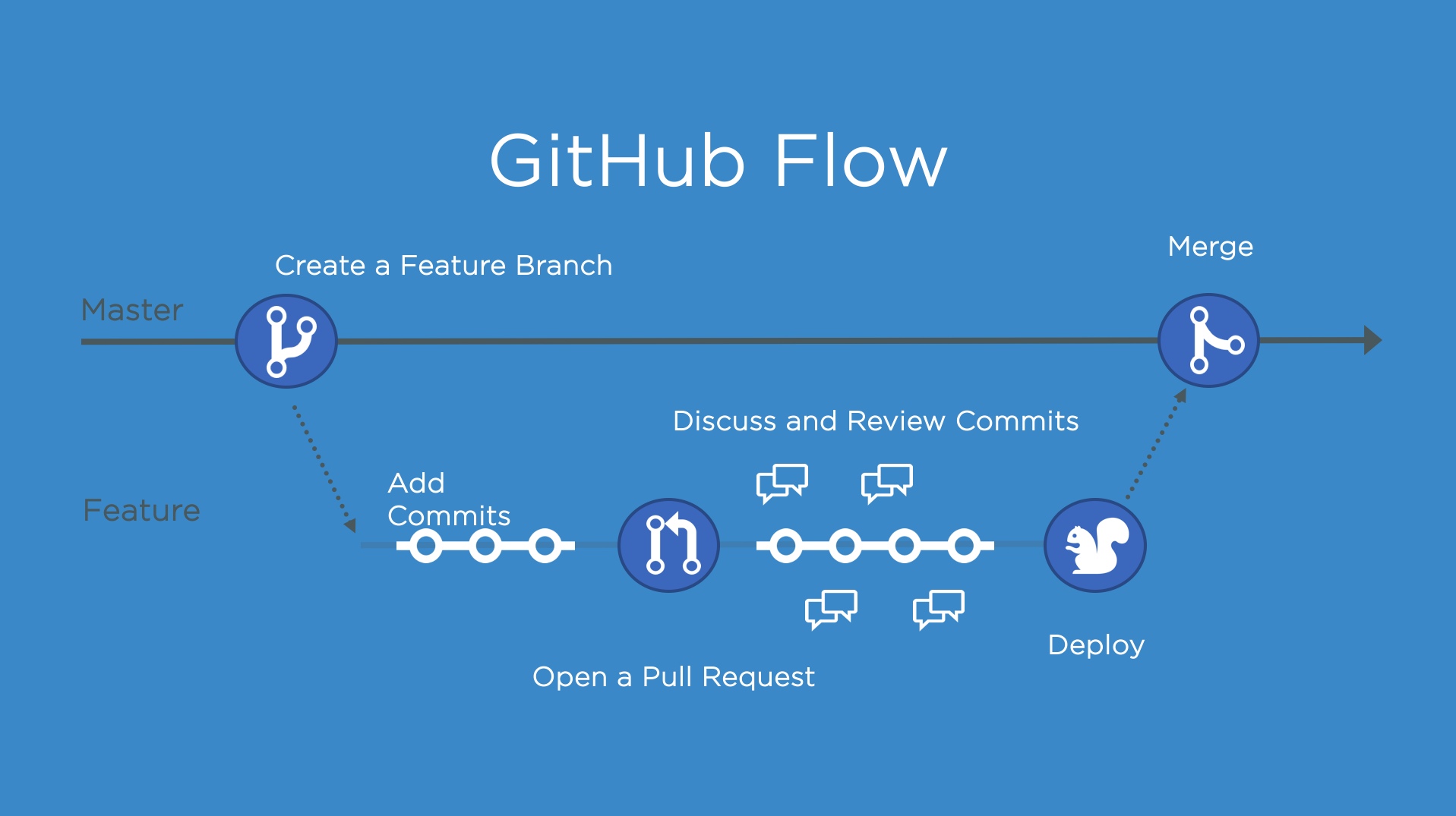

- Create a branch using the left panel on GitHub.

- Run git fetch and git checkout to switch to the branch.

- Make changes and commit.

- Push the branch to GitHub.

- Create a pull request and ask for review.

- Merge the pull request when it's approved and CI passes.

- Delete the branch.

- Update your local repo with git fetch and git pull.