1.1 Knowing Framework

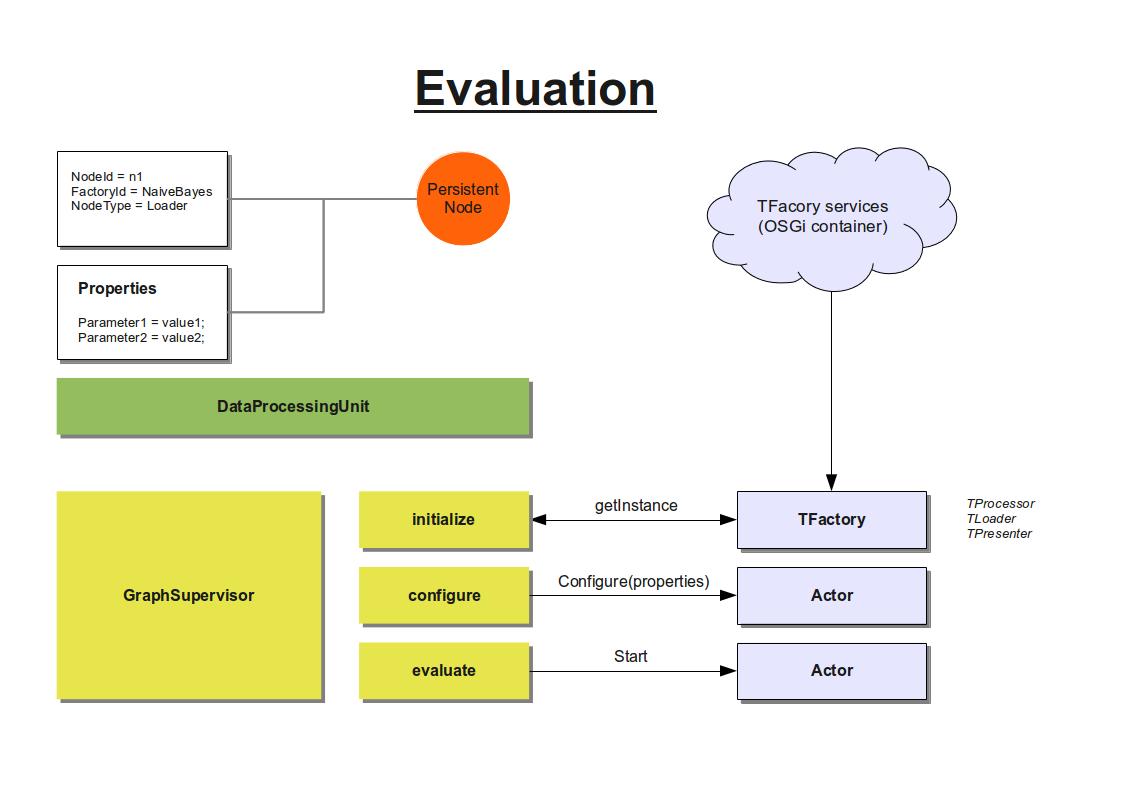

The "Knowing" framework represents data mining algorithms as services in an OSGi environment. These algorithms can be connected into a so-called DataProcessingUnit (DPU), which can be persisted as an XML file. The framework evaluates DPUs using a message-passing system that runs every node of the DPU in parallel.

An example DPU could look like this:

```xml
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<DataProcessingUnit name="arff-cross-validation">
  <description>Cross Validation with NaiveBayes output to TablePresenter</description>
  <tags>Cross, Validation, Cross Validation, ARFF, NaiveBayes</tags>
  <nodes>
    <node nodeType="processor" factoryId="de.lmu.ifi.dbs.knowing.core.validation.CrossValidator" id="validator">
      <properties>
        <property key="classifier" value="weka.classifiers.bayes.NaiveBayes" />
        <property key="fold" value="5" />
        <property key="folds" value="10" />
        <property key="kernel-estimator" value="false" />
        <property key="supervised-discretization" value="false" />
      </properties>
    </node>
    <node id="ARFF" type="loader" factoryId="weka.core.converters.ArffLoader" >
      <properties>
        <property key="file" value="iris.arff" />
      </properties>
    </node>
    <node id="TablePresenter" type="presenter" factoryId="de.lmu.ifi.dbs.knowing.core.swt.TablePresenter" >
      <properties>
        <property key="rows" value="100" />
      </properties>
    </node>
  </nodes>
  <edges>
    <edge id="arff_validator" source="ARFF" target="validator" />
    <edge id="validator_presenter" source="validator" target="TablePresenter" />
  </edges>
</DataProcessingUnit>
```

You can think of a DPU as a graph. In the example above, the ARFF loader sends its data to the validator, which sends its results to the TablePresenter.

Every node has a type, a factoryId and optional properties. The factoryId is used to look up the corresponding factory service (OSGi). The factory creates an actor within the Akka framework. A GraphSupervisor manages all actors and starts and stops the evaluation process.
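To make the graph view concrete, here is a minimal sketch that models a DPU with plain Scala case classes. The names `Node`, `Edge`, `Dpu`, `successors` and `roots` are assumptions made for this illustration and are not part of Knowing's API; the sketch only mirrors the structure of the XML format.

```scala
// Hypothetical, simplified model of a DPU; these case classes are not Knowing's real API.
final case class Node(id: String, nodeType: String, factoryId: String,
                      properties: Map[String, String] = Map.empty)
final case class Edge(id: String, source: String, target: String)

final case class Dpu(name: String, nodes: List[Node], edges: List[Edge]) {
  // Ids of all nodes that receive events directly from the given node.
  def successors(nodeId: String): List[String] =
    edges.filter(_.source == nodeId).map(_.target)

  // Nodes without incoming edges: the entry points of the graph (typically loaders).
  def roots: List[Node] =
    nodes.filterNot(n => edges.exists(_.target == n.id))
}

object DpuSketch {
  // A cross-validation DPU with a loader, a validator and a presenter.
  val exampleDpu: Dpu = Dpu(
    name = "arff-cross-validation",
    nodes = List(
      Node("validator", "processor", "de.lmu.ifi.dbs.knowing.core.validation.CrossValidator",
        Map("classifier" -> "weka.classifiers.bayes.NaiveBayes", "folds" -> "10")),
      Node("ARFF", "loader", "weka.core.converters.ArffLoader", Map("file" -> "iris.arff")),
      Node("TablePresenter", "presenter", "de.lmu.ifi.dbs.knowing.core.swt.TablePresenter",
        Map("rows" -> "100"))
    ),
    edges = List(
      Edge("arff_validator", "ARFF", "validator"),
      Edge("validator_presenter", "validator", "TablePresenter")
    )
  )
}
```

In this model, `DpuSketch.exampleDpu.roots` contains only the ARFF loader, and `successors("ARFF")` yields the validator, which matches the direction in which events flow through the graph.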

A GraphSupervisor is instantiated in Scala like this:

```scala
val supervisor = actorOf(new GraphSupervisor(dpu, uifactory, dpuPath)).start
supervisor ! Start
```

The GraphSupervisor needs the DPU it should evaluate and the path to this DPU, which is used to resolve relative file paths that some loaders may use. The uifactory is responsible for creating valid UI components that the TPresenter nodes can use to show their results. Currently there is only an SWT plugin, which provides a UIFactory that creates org.eclipse.swt.widgets.Composite containers.

Knowing is built on a few simple ideas inspired by WEKA and Akka.

The data mining process is structured as a graph in which each node represents one step of the evaluation process. These steps can depend on each other or be completely independent. Every dependency is implemented as an EventListener. All nodes are instantiated as actors and send their events as asynchronous messages. Actors are lightweight threads (yeah, some years ago threads were lightweight processes). In Knowing, every actor that takes part in the data mining process is called a processor and is represented by a trait called TProcessor.

Processors encapsulate all functionality inside the data mining process. If you want to create new features, you have to implement TProcessor. Otherwise you can use one of the standard implementations described below.

A processor handles incoming messages by calling corresponding methods. Important messages (events) are:

- Results(instances: Instances, port: Option[String])

- Query(query: Instance)

- Queries(queries: Instances)

- QueryResults(results: Instances, query: Instance)

Each of these events is handled by a corresponding method:

```scala
def build(instances: Instances)
```

Results messages are handled in the build method by default. After the build method has run, the isBuild flag is set to true. Until then, incoming Queries are cached and only answered once the build method has been called.

```scala
def query(query: Instance): Instances
```

Called once when a Query event arrives, or once for each query inside a Queries event. The returned value is sent to the actor that sent the query.

```scala
def result(result: Instances, query: Instance)
```

After a Query or Queries event has been sent, this method is called when the QueryResults event arrives. The query is always included so that results can be matched to their queries.

```scala
def configure(properties: Properties)
```

Before the data mining process is started, every processor is configured. This method is inherited from the TConfigurable trait and is called when a Configure event arrives.

```scala
def start = debug(this, "Running " + self.getActorClassName)
```

Every processor is started via a Start event, which doesn't contain any data. The default implementation just prints a debug message saying that the processor has started correctly.
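The interplay between the build method, the isBuild flag and cached queries can be sketched without Akka. The following is a simplified, single-threaded model and not Knowing's TProcessor: `SketchProcessor`, `MeanProcessor`, `onResults`, `onQuery` and the tiny `Instance`/`Instances` stand-ins for the WEKA types are all assumptions made for this illustration.

```scala
import scala.collection.mutable

// Hypothetical stand-ins for WEKA's Instance/Instances, used only in this sketch.
final case class Instance(values: Vector[Double])
final case class Instances(rows: Vector[Instance])

// Simplified, single-threaded model of the lifecycle; not Knowing's TProcessor.
abstract class SketchProcessor {
  private var isBuild = false
  private val pending = mutable.Queue.empty[Instance]
  // Collected (query, result) pairs; a real processor would send these back as messages.
  val answers = mutable.ListBuffer.empty[(Instance, Instances)]

  def build(instances: Instances): Unit
  def query(query: Instance): Instances

  // A Results event triggers build, sets the flag and then answers all cached queries.
  def onResults(instances: Instances): Unit = {
    build(instances)
    isBuild = true
    while (pending.nonEmpty) answer(pending.dequeue())
  }

  // A Query event is answered immediately once built, otherwise it is cached.
  def onQuery(q: Instance): Unit =
    if (isBuild) answer(q) else pending.enqueue(q)

  private def answer(q: Instance): Unit = answers += ((q, query(q)))
}

// Example processor: answers every query with the mean of the first attribute seen in build.
class MeanProcessor extends SketchProcessor {
  private var mean = 0.0
  def build(instances: Instances): Unit =
    mean = instances.rows.map(_.values.head).sum / instances.rows.size
  def query(query: Instance): Instances = Instances(Vector(Instance(Vector(mean))))
}
```

A query sent to a `MeanProcessor` before any data has arrived stays in the cache; it is answered as soon as `onResults` has run the build step.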

Maybe you want to react differently to some events. For this purpose you can override

```scala
protected def customReceive: Receive
```

which is a partial function that tries to pattern match on a single input parameter. If the match fails, it falls back to defaultReceive.
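The fallback from customReceive to defaultReceive can be expressed with `orElse` on Scala partial functions. The sketch below is an assumption about how such a chain might be wired, not Knowing's actual code: `SketchReceiver`, `LoudProcessor` and the simplified `Start` and `Query` events are hypothetical, and `Receive` returns a `String` here (instead of `Unit` as in Akka) only so the effect is easy to observe.

```scala
// Hypothetical event types for this sketch; Knowing's real events carry WEKA data.
sealed trait Event
case object Start extends Event
final case class Query(text: String) extends Event

trait SketchReceiver {
  // Returns a String instead of Unit purely to make the chosen handler visible.
  type Receive = PartialFunction[Any, String]

  // Default handling for the standard events.
  protected def defaultReceive: Receive = {
    case Start    => "default: started"
    case Query(q) => "default: query " + q
  }

  // Override this to intercept selected events; by default it matches nothing.
  protected def customReceive: Receive = PartialFunction.empty

  // customReceive is tried first; unmatched messages fall through to defaultReceive.
  final def receive: Receive = customReceive orElse defaultReceive
}

// Overrides the handling of Start only; Query still uses the default handler.
object LoudProcessor extends SketchReceiver {
  override protected def customReceive: Receive = {
    case Start => "custom: started"
  }
}
```

With this wiring, `LoudProcessor.receive(Start)` is handled by the custom case, while `LoudProcessor.receive(Query("q1"))` falls back to the default handler.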

Currently there are two classes which extend TProcessor to provide data mining functionality.

TFilter provides a simple trait to implement filters.

- Results events are filtered and sent to all connected nodes.

- A filter is built by default (isBuild = true).

- Only the query method must be implemented to provide functionality.

- The filter method can be overridden if the instances have to be processed as a whole.

TClassifier is used to implement classifiers. It has only one additional method getClassLabels():Array[String], which is currently not relevant.

The following special processors provide functionality for I/O operations.

TLoader. At the beginning of every data mining process there is data. A loader gets this data and sends it to its dependent processors via a Results event.

TSaver saves intermediary results or created data.

At the end of every data mining process there should be a result, and we want to see it. For this purpose, presenters show the results in tables, charts and reports. This is handled by the TPresenter trait. Every presenter is bound to a specific UI system (e.g. SWT, Swing, Vaadin) and can only work with UI factories that use the same UI system.

- Example---simple-classifier-training

- Create-Classifer-Processor-(Scala)

- Create-Classifier-(Java)

- Create-Processor-(Java)

Sometimes processors create different outputs which should be sent to different targets. For this purpose you can define ports in your edges, which connect the specific nodes.

```xml
<edge id="edgeId" source="mySource" sourcePort="output1" target="myTarget" targetPort="input1" />
```

The sourcePort and targetPort properties store the port; the default is "default". Your processor can then send events to a specific output or determine which input a message belongs to. For example:

```scala
sendEvent(Results(one), OUTPUT1)
sendEvent(Results(two), OUTPUT2)
```

All examples can be explored in knowing.test.
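How an event sent on a given port might be matched against the edges can be sketched as follows. This is an illustrative model under stated assumptions, not the implementation behind sendEvent: `PortEdge`, `PortRouting` and `route` are hypothetical names, and the second example edge is invented for the demonstration.

```scala
// Hypothetical model of port-based routing; not Knowing's implementation of sendEvent.
final case class PortEdge(source: String, sourcePort: String, target: String, targetPort: String)

object PortRouting {
  // Port name used when an edge or a send call does not name a port.
  val Default = "default"

  // Returns the (target, targetPort) pairs that should receive an event
  // sent by `source` on the output port `port`.
  def route(edges: List[PortEdge], source: String, port: String = Default): List[(String, String)] =
    edges.collect {
      case PortEdge(`source`, `port`, target, targetPort) => (target, targetPort)
    }

  // The first edge mirrors the XML example; the second one is made up for this sketch.
  val exampleEdges: List[PortEdge] = List(
    PortEdge("mySource", "output1", "myTarget", "input1"),
    PortEdge("mySource", "output2", "otherTarget", Default)
  )
}
```

Here `PortRouting.route(PortRouting.exampleEdges, "mySource", "output1")` selects only `myTarget` on its `input1` port, while sending on the default port reaches no one because neither edge leaves `mySource` on that port.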