
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.


1 parent 6cd6199

commit 4f3a43c

Showing 10 changed files with 496 additions and 2 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,223 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "id": "oWgwstPBqiJP" | ||

| }, | ||

| "source": [ | ||

| "---\n", | ||

| "description: Cookbook with examples of the Langfuse Integration for Mirascope (Python).\n", | ||

| "category: Integrations\n", | ||

| "---\n", | ||

| "\n", | ||

| "# Cookbook: Mirascope x Langfuse integration\n", | ||

| "\n", | ||

| "[Mirascope](https://www.mirascope.io/) is a Python toolkit for building with LLMs. It lets developers write Pythonic code while benefiting from its abstractions over common LLM use cases and models.\n", | ||

| "\n", | ||

| "[Langfuse](https://langfuse.com/docs) is an open source LLM engineering platform. It provides traces, evals, prompt management, and metrics to debug and improve your LLM application.\n", | ||

| "\n", | ||

| "With the [Langfuse <-> Mirascope integration](https://langfuse.com/docs/integrations/mirascope), you can log your application to Langfuse by adding the `@with_langfuse` decorator.\n", | ||

| "\n", | ||

| "Let's dive right in with some examples:" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": { | ||

| "colab": { | ||

| "base_uri": "https://localhost:8080/" | ||

| }, | ||

| "id": "S3PsmedXPfRb", | ||

| "outputId": "864c38c2-2234-4f91-dbc3-92ce8dde423e" | ||

| }, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# Install Mirascope and Langfuse\n", | ||

| "%pip install mirascope[all] langfuse" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": { | ||

| "id": "k_HOC3bzgG5u" | ||

| }, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import os\n", | ||

| "\n", | ||

| "# Get keys for your project from the project settings page\n", | ||

| "# https://cloud.langfuse.com\n", | ||

| "os.environ[\"LANGFUSE_PUBLIC_KEY\"] = \"\"\n", | ||

| "os.environ[\"LANGFUSE_SECRET_KEY\"] = \"\"\n", | ||

| "os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # 🇪🇺 EU region\n", | ||

| "# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # 🇺🇸 US region\n", | ||

| "\n", | ||

| "# Your OpenAI key\n", | ||

| "os.environ[\"OPENAI_API_KEY\"] = \"\"" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "id": "vbNfTv2ZpNnl" | ||

| }, | ||

| "source": [ | ||

| "## Log a first simple call" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": { | ||

| "colab": { | ||

| "base_uri": "https://localhost:8080/" | ||

| }, | ||

| "id": "vkhWieQqQ0V0", | ||

| "outputId": "f3f67311-c314-40ec-debf-b14f792bade9" | ||

| }, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from mirascope.langfuse import with_langfuse\n", | ||

| "from mirascope.openai import OpenAICall, OpenAICallParams\n", | ||

| "\n", | ||

| "@with_langfuse\n", | ||

| "class GeographyGenius(OpenAICall):\n", | ||

| "    prompt_template = \"What's the capital of {country}?\"\n", | ||

| "    country: str\n", | ||

| "    call_params = OpenAICallParams(model=\"gpt-4o\", temperature=1)\n", | ||

| "\n", | ||

| "genius = GeographyGenius(country=\"Japan\")\n", | ||

| "response = genius.call() # logs to langfuse\n", | ||

| "print(response.content)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "id": "fb6VXNG-KDIb" | ||

| }, | ||

| "source": [ | ||

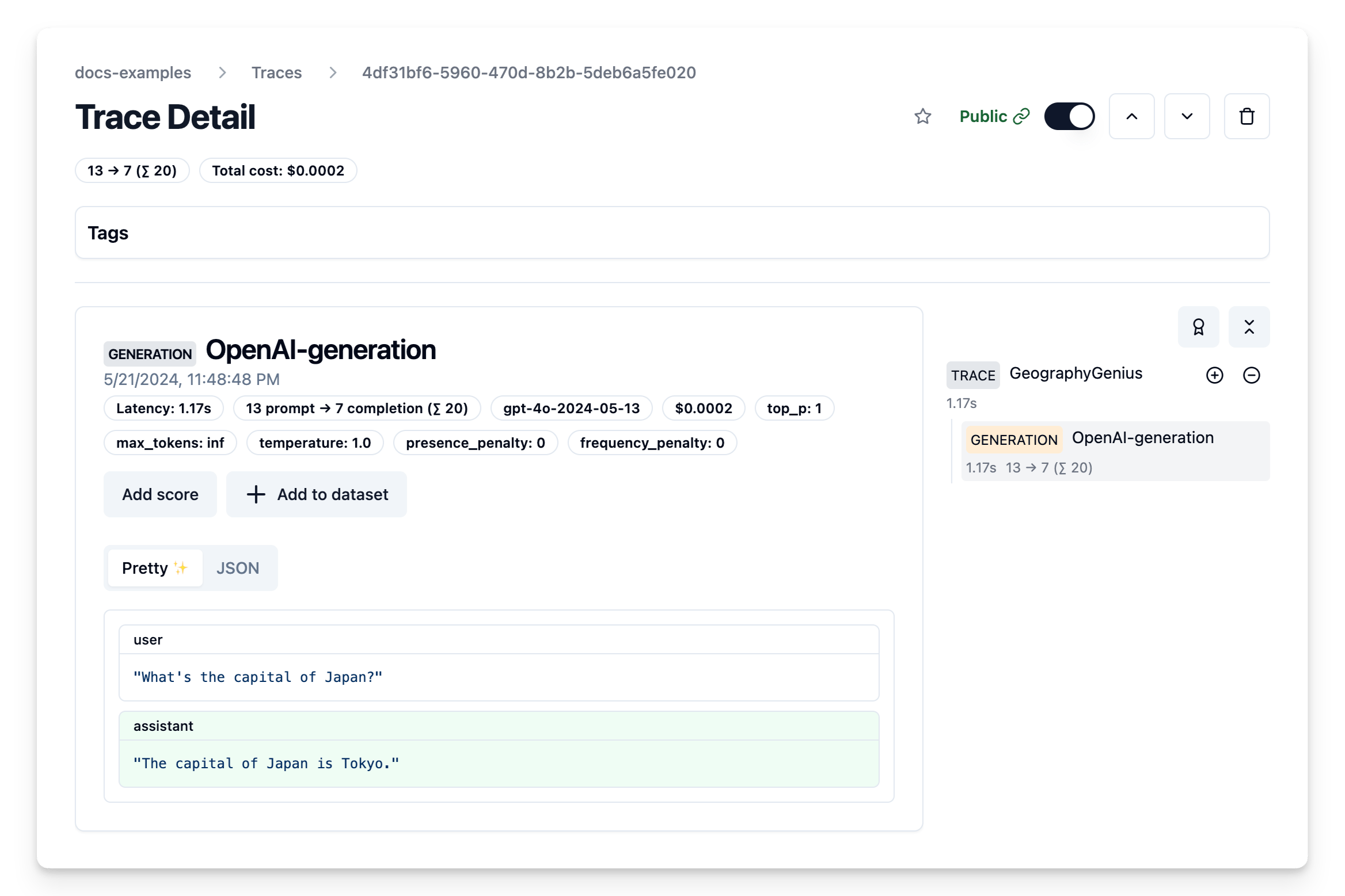

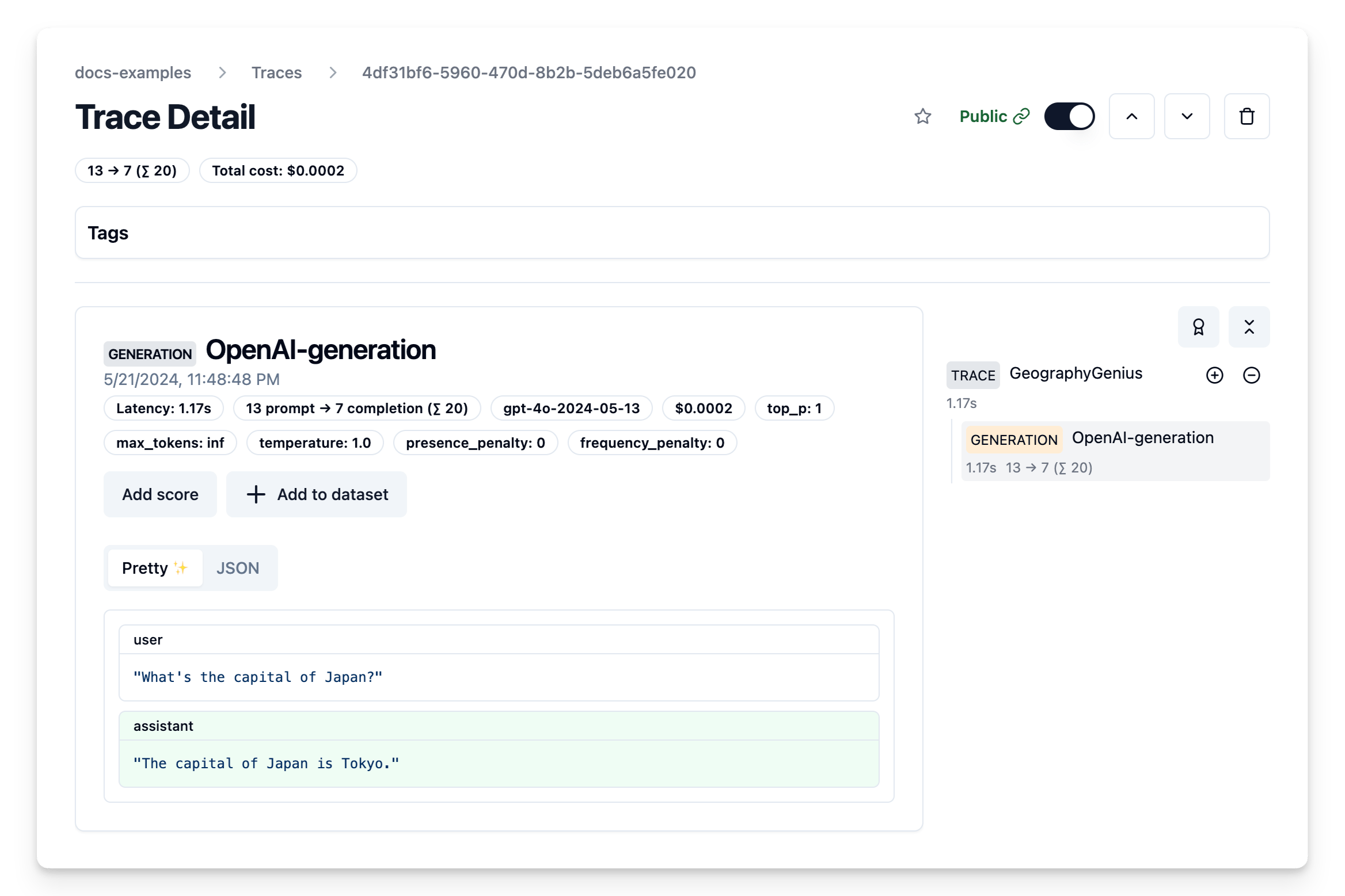

| "[**Example trace**](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/4df31bf6-5960-470d-8b2b-5deb6a5fe020?observation=90de9754-c5df-4c3d-8e38-87d507392495)\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "id": "-gTt8qBAqdUZ" | ||

| }, | ||

| "source": [ | ||

| "## Let's make this more complex\n", | ||

| "\n", | ||

| "We'll use\n", | ||

| "- Mirascope's `@with_langfuse` decorator to log the call to Langfuse within the Mirascope classes\n", | ||

| "- and Langfuse's default [`@observe` decorator](https://langfuse.com/docs/sdk/python/decorators), which works with any Python function\n", | ||

| "\n", | ||

| "to create and trace a fun rap battle and group everything into a single trace." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": { | ||

| "colab": { | ||

| "base_uri": "https://localhost:8080/" | ||

| }, | ||

| "id": "xzjuB5VsiPf3", | ||

| "outputId": "efd35a18-e2cd-46d4-c659-839c9bee7497" | ||

| }, | ||

| "outputs": [], | ||

| "source": [ | ||

| "from openai.types.chat import ChatCompletionMessageParam\n", | ||

| "from mirascope.openai import OpenAICall\n", | ||

| "from langfuse.decorators import observe\n", | ||

| "\n", | ||

| "@with_langfuse\n", | ||

| "class Rapper(OpenAICall):\n", | ||

| "    prompt_template = \"\"\"\n", | ||

| "    SYSTEM: This is a rap battle. You are {person}. Make sure to defend your {position}. Only drop two lines at a time, make them rhyme.\n", | ||

| "    MESSAGES: {history}\n", | ||

| "    \"\"\"\n", | ||

| "    history: list[ChatCompletionMessageParam] = []\n", | ||

| "    person: str\n", | ||

| "    position: str\n", | ||

| "\n", | ||

| "zuck = Rapper(person=\"Mark Zuckerberg\", position=\"Open source will win in VR/AR/Visual Computing\", history=[])\n", | ||

| "timapple = Rapper(person=\"Tim Cook\", position=\"Apple builds the best headsets as we are integrated in software and hardware\", history=[])\n", | ||

| "\n", | ||

| "# utility function to update the history of both rappers\n", | ||

| "def add_to_history(new_line: str, rapper: str):\n", | ||

| "    zuck.history += [\n", | ||

| "        {\"role\": \"assistant\" if rapper == \"zuck\" else \"user\", \"content\": new_line},\n", | ||

| "    ]\n", | ||

| "    timapple.history += [\n", | ||

| "        {\"role\": \"assistant\" if rapper == \"timapple\" else \"user\", \"content\": new_line},\n", | ||

| "    ]\n", | ||

| "\n", | ||

| "# Use the Langfuse @observe decorator to log any Python function and group all logs within it into a single trace\n", | ||

| "@observe()\n", | ||

| "def rap_battle(lines: int):\n", | ||

| "\n", | ||

| "    # Make sure that the battle starts off juicy\n", | ||

| "    add_to_history(\"Yo wassup Zuck, I hate OSS\", \"timapple\")\n", | ||

| "\n", | ||

| "    for _ in range(lines):\n", | ||

| "        zuck_line = zuck.call()\n", | ||

| "        print(f\"(Zuck): {zuck_line.content}\")\n", | ||

| "        add_to_history(zuck_line.content, \"zuck\")\n", | ||

| "\n", | ||

| "        timapple_line = timapple.call()\n", | ||

| "        print(f\"(Tim Apple): {timapple_line.content}\")\n", | ||

| "        add_to_history(timapple_line.content, \"timapple\")\n", | ||

| "    return [item[\"content\"] for item in timapple.history]\n", | ||

| "\n", | ||

| "rap_battle(4);" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "id": "lL8LNzRFr4eW" | ||

| }, | ||

| "source": [ | ||

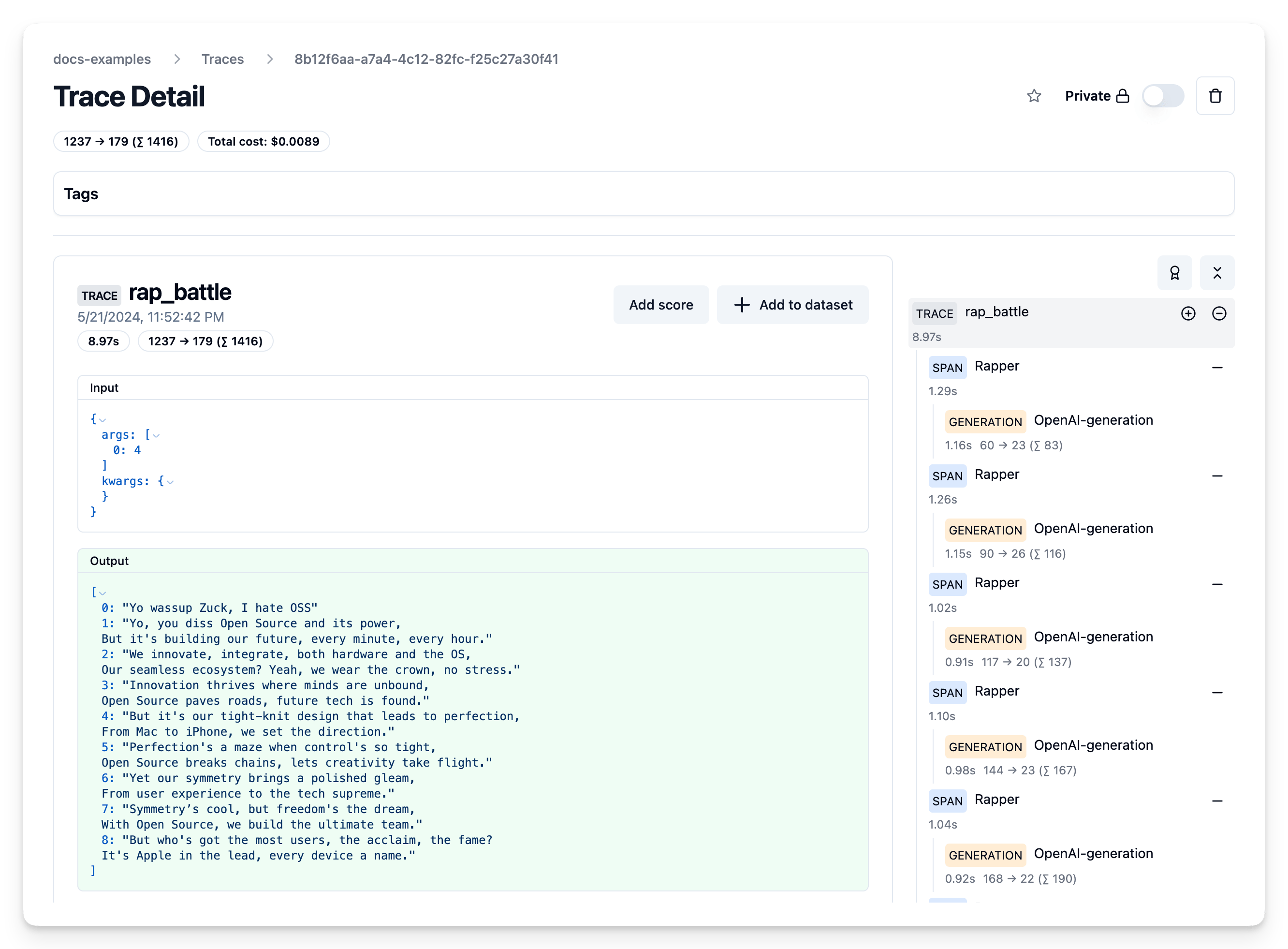

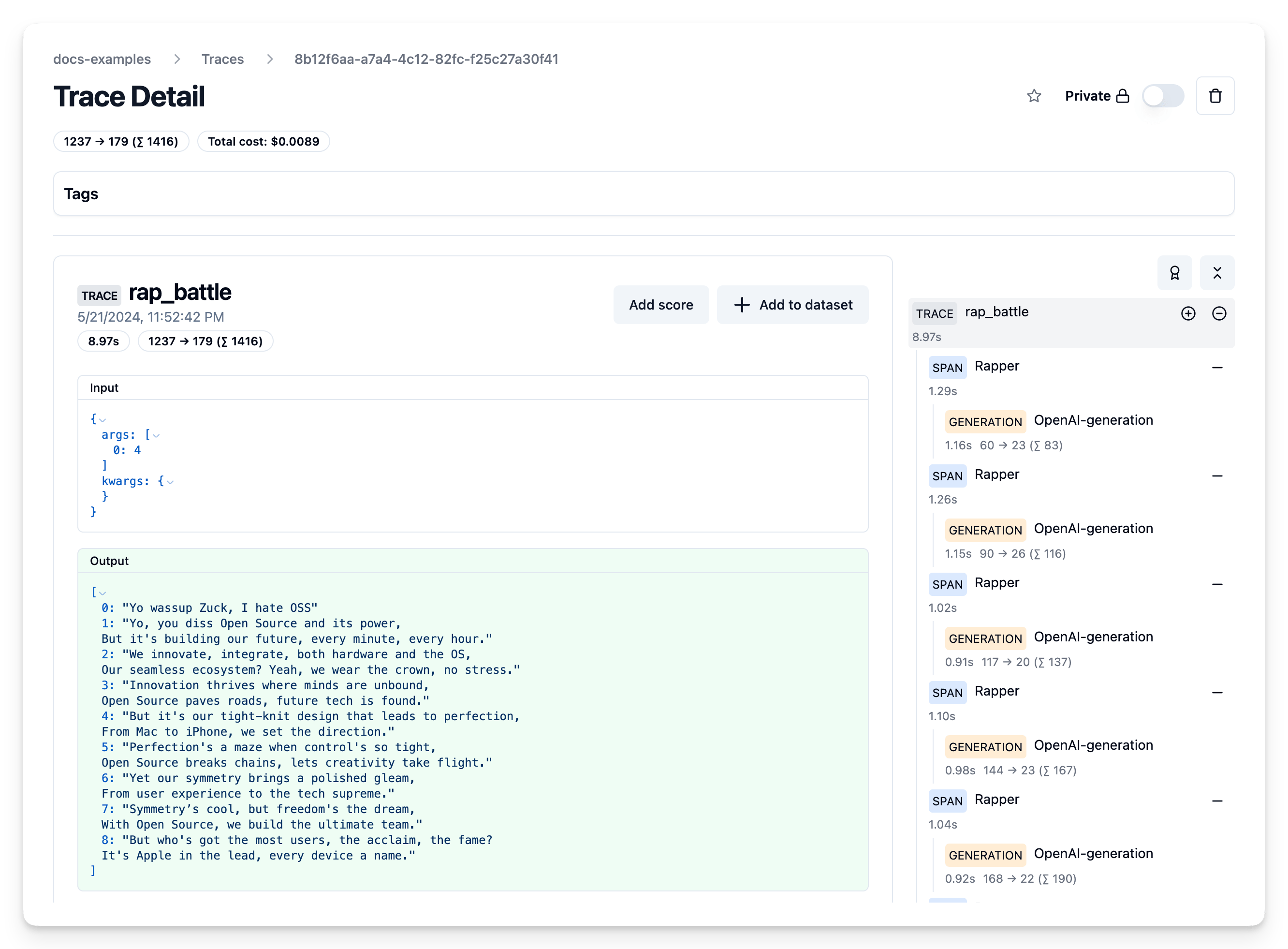

| "Head over to the Langfuse Traces table [in Langfuse Cloud](https://cloud.langfuse.com) to see the entire chat history, token counts, cost, model, latencies, and more.\n", | ||

| "\n", | ||

| "[**Example trace**](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/8b12f6aa-a7a4-4c12-82fc-f25c27a30f41)\n", | ||

| "\n", | ||

| "" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": { | ||

| "id": "sgNkqrAZsOnj" | ||

| }, | ||

| "source": [ | ||

| "## That's a wrap.\n", | ||

| "\n", | ||

| "There's a lot more you can do:\n", | ||

| "\n", | ||

| "- **Mirascope**: Head over to [their docs](https://docs.mirascope.io/latest/) to learn more about what you can do with the framework.\n", | ||

| "- **Langfuse**: Have a look at Evals, Datasets, and Prompt Management to start exploring [all that Langfuse can do](https://langfuse.com/docs)." | ||

| ] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "colab": { | ||

| "provenance": [] | ||

| }, | ||

| "kernelspec": { | ||

| "display_name": "Python 3", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "name": "python" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 0 | ||

| } |
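The notebook's setup cell assigns four environment variables and leaves three of them as empty strings for the reader to fill in. A minimal sketch of a pre-flight check is shown here, assuming you want to fail early when a key is still blank. The helper name `missing_env_vars` and the sample values are hypothetical and not part of this commit, Mirascope, or Langfuse.

```python
import os
from collections.abc import Mapping
from typing import Optional

# The four variables assigned in the notebook's setup cell.
REQUIRED_VARS = (
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_HOST",
    "OPENAI_API_KEY",
)


def missing_env_vars(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the required variables that are unset or empty (hypothetical helper)."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with an explicit mapping instead of the real environment.
# The values are made up for illustration.
example_env = {
    "LANGFUSE_PUBLIC_KEY": "pk-lf-example",
    "LANGFUSE_SECRET_KEY": "",
    "LANGFUSE_HOST": "https://cloud.langfuse.com",
}
print(missing_env_vars(example_env))
```

Calling `missing_env_vars()` with no argument checks the real process environment, so it could run right after the setup cell and before the first traced call.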


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,4 @@ | ||

| { | ||

| "tracing": "Tracing", | ||

| "example-python": "Example Notebook" | ||

| } |


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,126 @@ | ||

| --- | ||

| description: Cookbook with examples of the Langfuse Integration for Mirascope (Python). | ||

| category: Integrations | ||

| --- | ||

| | ||

| # Cookbook: Mirascope x Langfuse integration | ||

| | ||

| [Mirascope](https://www.mirascope.io/) is a Python toolkit for building with LLMs. It lets developers write Pythonic code while benefiting from its abstractions over common LLM use cases and models. | ||

| | ||

| [Langfuse](https://langfuse.com/docs) is an open source LLM engineering platform. It provides traces, evals, prompt management, and metrics to debug and improve your LLM application. | ||

| | ||

| With the [Langfuse <-> Mirascope integration](https://langfuse.com/docs/integrations/mirascope), you can log your application to Langfuse by adding the `@with_langfuse` decorator. | ||

| | ||

| Let's dive right in with some examples: | ||

| | ||

| | ||

| ```python | ||

| # Install Mirascope and Langfuse | ||

| %pip install mirascope[all] langfuse | ||

| ``` | ||

| | ||

| | ||

| ```python | ||

| import os | ||

| | ||

| # Get keys for your project from the project settings page | ||

| # https://cloud.langfuse.com | ||

| os.environ["LANGFUSE_PUBLIC_KEY"] = "" | ||

| os.environ["LANGFUSE_SECRET_KEY"] = "" | ||

| os.environ["LANGFUSE_HOST"] = "https://cloud.langfuse.com" # 🇪🇺 EU region | ||

| # os.environ["LANGFUSE_HOST"] = "https://us.cloud.langfuse.com" # 🇺🇸 US region | ||

| | ||

| # Your OpenAI key | ||

| os.environ["OPENAI_API_KEY"] = "" | ||

| ``` | ||

| | ||

| ## Log a first simple call | ||

| | ||

| | ||

| ```python | ||

| from mirascope.langfuse import with_langfuse | ||

| from mirascope.openai import OpenAICall, OpenAICallParams | ||

| | ||

| @with_langfuse | ||

| class GeographyGenius(OpenAICall): | ||

|     prompt_template = "What's the capital of {country}?" | ||

|     country: str | ||

|     call_params = OpenAICallParams(model="gpt-4o", temperature=1) | ||

| | ||

| genius = GeographyGenius(country="Japan") | ||

| response = genius.call() # logs to langfuse | ||

| print(response.content) | ||

| ``` | ||

| | ||

| [**Example trace**](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/4df31bf6-5960-470d-8b2b-5deb6a5fe020?observation=90de9754-c5df-4c3d-8e38-87d507392495) | ||

| | ||

|  | ||

| | ||

| ## Let's make this more complex | ||

| | ||

| We'll use | ||

| - Mirascope's `@with_langfuse` decorator to log the call to Langfuse within the Mirascope classes | ||

| - and Langfuse's default [`@observe` decorator](https://langfuse.com/docs/sdk/python/decorators), which works with any Python function | ||

| | ||

| to create and trace a fun rap battle and group everything into a single trace. | ||

| | ||

| | ||

| ```python | ||

| from openai.types.chat import ChatCompletionMessageParam | ||

| from mirascope.openai import OpenAICall | ||

| from langfuse.decorators import observe | ||

| | ||

| @with_langfuse | ||

| class Rapper(OpenAICall): | ||

|     prompt_template = """ | ||

|     SYSTEM: This is a rap battle. You are {person}. Make sure to defend your {position}. Only drop two lines at a time, make them rhyme. | ||

|     MESSAGES: {history} | ||

|     """ | ||

|     history: list[ChatCompletionMessageParam] = [] | ||

|     person: str | ||

|     position: str | ||

| | ||

| zuck = Rapper(person="Mark Zuckerberg", position="Open source will win in VR/AR/Visual Computing", history=[]) | ||

| timapple = Rapper(person="Tim Cook", position="Apple builds the best headsets as we are integrated in software and hardware", history=[]) | ||

| | ||

| # utility function to update the history of both rappers | ||

| def add_to_history(new_line: str, rapper: str): | ||

|     zuck.history += [ | ||

|         {"role": "assistant" if rapper == "zuck" else "user", "content": new_line}, | ||

|     ] | ||

|     timapple.history += [ | ||

|         {"role": "assistant" if rapper == "timapple" else "user", "content": new_line}, | ||

|     ] | ||

| | ||

| # Use the Langfuse @observe decorator to log any Python function and group all logs within it into a single trace | ||

| @observe() | ||

| def rap_battle(lines: int): | ||

| | ||

|     # Make sure that the battle starts off juicy | ||

|     add_to_history("Yo wassup Zuck, I hate OSS", "timapple") | ||

| | ||

|     for _ in range(lines): | ||

|         zuck_line = zuck.call() | ||

|         print(f"(Zuck): {zuck_line.content}") | ||

|         add_to_history(zuck_line.content, "zuck") | ||

| | ||

|         timapple_line = timapple.call() | ||

|         print(f"(Tim Apple): {timapple_line.content}") | ||

|         add_to_history(timapple_line.content, "timapple") | ||

|     return [item["content"] for item in timapple.history] | ||

| | ||

| rap_battle(4); | ||

| ``` | ||

| | ||

| Head over to the Langfuse Traces table [in Langfuse Cloud](https://cloud.langfuse.com) to see the entire chat history, token counts, cost, model, latencies, and more. | ||

| | ||

| [**Example trace**](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/8b12f6aa-a7a4-4c12-82fc-f25c27a30f41) | ||

| | ||

|  | ||

| | ||

| ## That's a wrap. | ||

| | ||

| There's a lot more you can do: | ||

| | ||

| - **Mirascope**: Head over to [their docs](https://docs.mirascope.io/latest/) to learn more about what you can do with the framework. | ||

| - **Langfuse**: Have a look at Evals, Datasets, and Prompt Management to start exploring [all that Langfuse can do](https://langfuse.com/docs). |
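The rap battle relies on each rapper seeing its own lines as `assistant` messages and the opponent's lines as `user` messages. The sketch below isolates that role-flipping logic with plain dictionaries and no LLM calls, so it can be run and inspected on its own. The function name `add_to_histories`, the `histories` mapping, and the second sample line are assumptions made for illustration; the cookbook itself mutates `zuck.history` and `timapple.history` directly.

```python
# A chat message in the simplified {"role": ..., "content": ...} shape.
Message = dict[str, str]


def add_to_histories(
    histories: dict[str, list[Message]], new_line: str, speaker: str
) -> None:
    """Append new_line to every history, tagged from each participant's point of view."""
    for name, history in histories.items():
        # The speaker sees its own line as "assistant"; everyone else sees "user".
        role = "assistant" if name == speaker else "user"
        history.append({"role": role, "content": new_line})


histories: dict[str, list[Message]] = {"zuck": [], "timapple": []}
add_to_histories(histories, "Yo wassup Zuck, I hate OSS", "timapple")
add_to_histories(histories, "Open source wins, that's no loss", "zuck")  # made-up line

for name, history in histories.items():
    print(name, [message["role"] for message in history])
```

Keying the histories by name keeps the helper independent of the two module-level `Rapper` instances, which may be convenient if more participants are added later.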
