Supervision 0.25.0 is here! Featuring a more robust LineZone crossing counter, support for tracking KeyPoints, Python 3.13 compatibility, and 3 new metrics: Precision, Recall and Mean Average Recall. The update also includes smart label positioning, improved Oriented Bounding Box support, and refined error handling. Thank you to all contributors - especially those who answered the call of Hacktoberfest!

Changelog

🚀 Added

- Essential update to the `LineZone`: when computing line crossings, detections that jitter might be counted twice (or more!). This can now be solved with the `minimum_crossing_threshold` argument. If you set it to `2` or more, extra frames will be used to confirm the crossing, improving the accuracy significantly. (#1540)

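To make the jitter problem concrete, here is a minimal, self-contained sketch of debounced crossing counting. It is not supervision's `LineZone` implementation: the function name `count_crossings` and its input format (one dict per frame mapping a tracker ID to `True` when the object is on the "in" side of the line) are hypothetical. A crossing is only counted once the object has stayed on the new side for `minimum_crossing_threshold` consecutive frames.

```python
from typing import Dict, List, Tuple


def count_crossings(
    sides_per_frame: List[Dict[int, bool]],
    minimum_crossing_threshold: int = 1,
) -> Tuple[int, int]:
    """Count (in, out) crossings, confirming each one over several frames.

    Each dict maps tracker_id -> True if the object is on the "in" side of the
    line in that frame, False if it is on the "out" side (hypothetical format).
    """
    confirmed_side: Dict[int, bool] = {}  # side at the last confirmed crossing
    streak: Dict[int, int] = {}  # consecutive frames spent on the opposite side
    in_count = out_count = 0
    for frame in sides_per_frame:
        for tracker_id, side in frame.items():
            if tracker_id not in confirmed_side:
                confirmed_side[tracker_id] = side  # first sighting, nothing crossed yet
                continue
            if side == confirmed_side[tracker_id]:
                streak.pop(tracker_id, None)  # jittered back: discard the candidate
                continue
            streak[tracker_id] = streak.get(tracker_id, 0) + 1
            if streak[tracker_id] >= minimum_crossing_threshold:
                confirmed_side[tracker_id] = side
                streak.pop(tracker_id)
                if side:
                    in_count += 1
                else:
                    out_count += 1
    return in_count, out_count


# One object jitters across the line before settling on the "in" side.
jittery = [{1: side} for side in (False, True, False, True, True, True)]
print(count_crossings(jittery, minimum_crossing_threshold=1))  # (2, 1)
print(count_crossings(jittery, minimum_crossing_threshold=2))  # (1, 0)
```

With a threshold of 1, every flip is counted; with 2, the brief back-and-forth is ignored and only the sustained crossing remains.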

- It is now possible to track objects detected as `KeyPoints`. See the complete step-by-step guide in the Object Tracking Guide. (#1658)

```python
import numpy as np
import supervision as sv
from ultralytics import YOLO

model = YOLO("yolov8m-pose.pt")
tracker = sv.ByteTrack()
trace_annotator = sv.TraceAnnotator()


def callback(frame: np.ndarray, _: int) -> np.ndarray:
    results = model(frame)[0]
    key_points = sv.KeyPoints.from_ultralytics(results)
    # Convert keypoints to detections so ByteTrack can assign tracker IDs
    detections = key_points.as_detections()
    detections = tracker.update_with_detections(detections)
    # Draw each tracked object's trajectory on a copy of the frame
    annotated_image = trace_annotator.annotate(frame.copy(), detections)
    return annotated_image


sv.process_video(
    source_path="input_video.mp4",
    target_path="output_video.mp4",
    callback=callback,
)
```

See the guide for the full code used to make the video.
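Tracking keypoints works by turning each skeleton into a detection that `ByteTrack` can match across frames. The sketch below shows one plausible way to derive such a box: the tightest axis-aligned box around an object's visible keypoints. It is an illustration under stated assumptions, not the code behind `KeyPoints.as_detections`; in particular, treating a keypoint at `(0, 0)` as "not detected" is an assumption of this sketch, and `keypoints_to_boxes` is a hypothetical name.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def keypoints_to_boxes(skeletons: List[List[Point]]) -> List[Box]:
    """Return the tightest axis-aligned box around each skeleton's visible keypoints."""
    boxes: List[Box] = []
    for skeleton in skeletons:
        # Assumption of this sketch: a keypoint at (0, 0) means "not detected".
        visible = [(x, y) for x, y in skeleton if (x, y) != (0, 0)]
        if not visible:
            # Zero-size placeholder keeps the output aligned with the input.
            boxes.append((0.0, 0.0, 0.0, 0.0))
            continue
        xs = [x for x, _ in visible]
        ys = [y for _, y in visible]
        boxes.append((min(xs), min(ys), max(xs), max(ys)))
    return boxes


skeletons = [
    [(10, 20), (30, 5), (0, 0)],  # last keypoint missing
    [(0, 0), (0, 0)],  # nothing visible
]
print(keypoints_to_boxes(skeletons))  # [(10, 5, 30, 20), (0.0, 0.0, 0.0, 0.0)]
```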

- Added `is_empty` method to `KeyPoints` to check if there are any keypoints in the object. (#1658)

- Added `as_detections` method to `KeyPoints` that converts `KeyPoints` to `Detections`. (#1658)

- Added a new video to `supervision[assets]`. (#1657)

```python
from supervision.assets import download_assets, VideoAssets

path_to_video = download_assets(VideoAssets.SKIING)
```

- Supervision can now be used with Python 3.13. The most notable update is the ability to run Python without the Global Interpreter Lock (GIL). We expect support for this among our dependencies to be inconsistent, but if you do try it, let us know the results! (#1595)
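Whether the GIL is actually off depends on the interpreter build. The snippet below, which uses only the standard library, reports what the current interpreter is doing. `sysconfig.get_config_var("Py_GIL_DISABLED")` identifies a free-threaded build, and `sys._is_gil_enabled()`, available from Python 3.13, reports whether the GIL is active at runtime; a free-threaded build can re-enable the GIL, for example when it imports an extension module that has not declared free-threading support. `describe_gil` is a hypothetical helper name.

```python
import sys
import sysconfig


def describe_gil() -> str:
    """Report whether this interpreter is free-threaded and whether the GIL is active."""
    # Py_GIL_DISABLED is 1 on free-threaded builds (e.g. python3.13t), else 0 or None.
    free_threaded_build = bool(sysconfig.get_config_var("Py_GIL_DISABLED"))
    if not free_threaded_build:
        return "standard build, GIL enabled"
    # Free-threaded builds exist from Python 3.13, where sys._is_gil_enabled() is available.
    gil_enabled = sys._is_gil_enabled()
    return "free-threaded build, GIL " + ("enabled" if gil_enabled else "disabled")


print(f"Python {sys.version_info.major}.{sys.version_info.minor}: {describe_gil()}")
```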

- Added the Mean Average Recall (mAR) metric, which returns a recall score averaged over IoU thresholds, detected object classes, and limits on the maximum number of considered detections. (#1661)
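The averaging over IoU thresholds can be illustrated in a few lines. The sketch assumes predictions have already been matched to ground-truth objects, takes the matched IoU for each ground-truth object, and uses the COCO-style thresholds 0.50, 0.55, ..., 0.95 (an assumption here). It deliberately leaves out the per-class averaging and maximum-detection limits described above, so it is a simplification rather than supervision's implementation; `mean_recall_over_iou` is a hypothetical name.

```python
from typing import List


def mean_recall_over_iou(matched_ious: List[float]) -> float:
    """Average recall over IoU thresholds 0.50:0.05:0.95 for a single class.

    matched_ious[i] is the IoU between ground-truth object i and the prediction
    matched to it, or 0.0 if nothing was matched. Assumes at least one object.
    """
    # round() keeps each threshold at its exact two-decimal value
    thresholds = [round(0.5 + 0.05 * k, 2) for k in range(10)]
    recalls = [
        sum(iou >= t for iou in matched_ious) / len(matched_ious)
        for t in thresholds
    ]
    return sum(recalls) / len(recalls)


# Four ground-truth objects; one was missed entirely.
print(mean_recall_over_iou([0.92, 0.62, 0.0, 0.77]))  # 0.45
```

A detection matched at high IoU contributes to recall at every threshold, while a loosely matched one only counts at the lenient thresholds, which is why mAR rewards precise localization.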

import supervision as sv

from supervision.metrics import MeanAverageRecall

predictions = sv.Detections(...)

targets = sv.Detections(...)

map_metric = MeanAverageRecall()

map_result = map_metric.update(predictions, targets).compute()

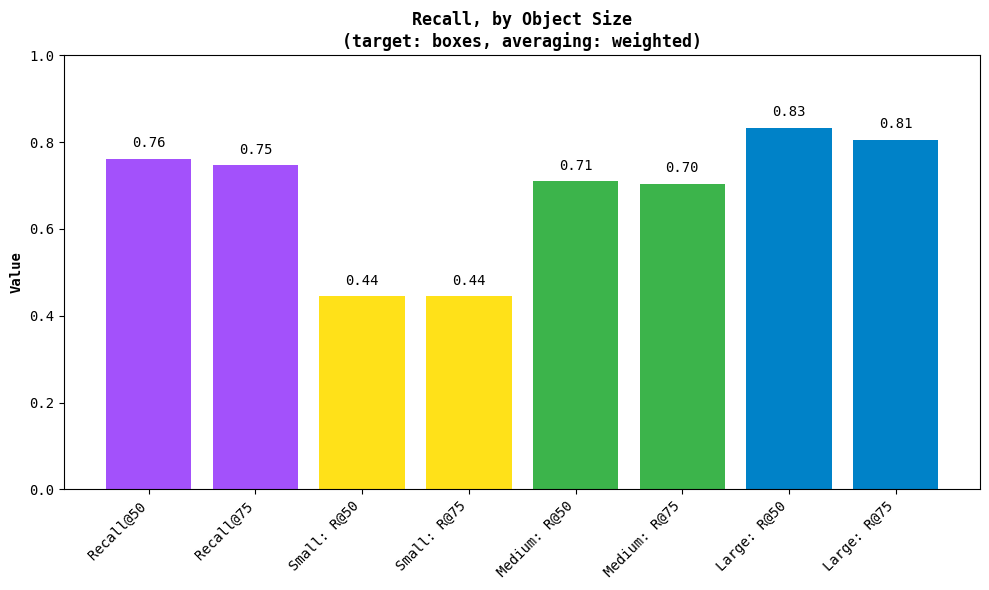

map_result.plot()- Added

PrecisionandRecallmetrics, providing a baseline for comparing model outputs to ground truth or another model (#1609)
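Precision and recall both start from matching predictions to targets. As a rough sketch under stated assumptions (axis-aligned `(x_min, y_min, x_max, y_max)` boxes, a single class, greedy one-to-one matching at IoU ≥ 0.5, and no confidence ordering), precision is the share of predictions that match a target and recall is the share of targets that were found. The helper names are hypothetical, and this is not supervision's matching logic.

```python
from typing import List, Optional, Set, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    predictions: List[Box], targets: List[Box], iou_threshold: float = 0.5
) -> Tuple[float, float]:
    matched: Set[int] = set()
    true_positives = 0
    for pred in predictions:
        # Greedily pair each prediction with the best still-unmatched target.
        best_iou = 0.0
        best_idx: Optional[int] = None
        for idx, target in enumerate(targets):
            if idx in matched:
                continue
            score = box_iou(pred, target)
            if score > best_iou:
                best_iou, best_idx = score, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(targets) if targets else 0.0
    return precision, recall


targets = [(0, 0, 10, 10), (20, 20, 30, 30)]
predictions = [(0, 0, 10, 10), (1, 1, 11, 11), (50, 50, 60, 60)]
print(precision_recall(predictions, targets))  # (0.3333333333333333, 0.5)
```

The second prediction overlaps the first target well, but it still counts as a false positive because that target was already matched.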

```python
import supervision as sv
from supervision.metrics import Recall

predictions = sv.Detections(...)
targets = sv.Detections(...)

recall_metric = Recall()
recall_result = recall_metric.update(predictions, targets).compute()

recall_result.plot()
```

- All Metrics now support Oriented Bounding Boxes (OBB). (#1593)
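Supporting OBBs mainly changes how overlap is measured: IoU between rotated rectangles needs a polygon intersection rather than the axis-aligned min/max formula. The sketch below uses Sutherland-Hodgman polygon clipping, which is valid here because rectangles are convex, and the shoelace formula for areas. It is a standard technique shown for illustration, not necessarily how supervision computes OBB IoU; all function names are hypothetical, and each box is assumed to be given as its four corner points.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def signed_area(poly: List[Point]) -> float:
    """Shoelace formula: positive for counter-clockwise vertex order (y-up axes)."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0


def ensure_ccw(poly: List[Point]) -> List[Point]:
    """Reverse the vertex order if needed so the clipping test below is consistent."""
    return poly if signed_area(poly) >= 0 else poly[::-1]


def clip_polygon(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: keep the part of `subject` inside the convex CCW `clipper`."""

    def inside(p: Point, a: Point, b: Point) -> bool:
        # True if p lies on the left of, or on, the directed edge a -> b
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p: Point, q: Point, a: Point, b: Point) -> Point:
        # Point where segment p -> q meets the infinite line through a and b
        dx1, dy1 = q[0] - p[0], q[1] - p[1]
        dx2, dy2 = b[0] - a[0], b[1] - a[1]
        t = ((a[0] - p[0]) * dy2 - (a[1] - p[1]) * dx2) / (dx1 * dy2 - dy1 * dx2)
        return (p[0] + t * dx1, p[1] + t * dy1)

    output = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        points, output = output, []
        if not points:
            break  # nothing left to clip: the polygons do not overlap
        prev = points[-1]
        for curr in points:
            if inside(curr, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, curr, a, b))
                output.append(curr)
            elif inside(prev, a, b):
                output.append(intersect(prev, curr, a, b))
            prev = curr
    return output


def obb_iou(box_a: List[Point], box_b: List[Point]) -> float:
    """IoU of two oriented boxes, each given as 4 corner points in any winding order."""
    box_a, box_b = ensure_ccw(box_a), ensure_ccw(box_b)
    inter = abs(signed_area(clip_polygon(box_a, box_b)))
    union = abs(signed_area(box_a)) + abs(signed_area(box_b)) - inter
    return inter / union if union > 0 else 0.0


square = [(0, 0), (2, 0), (2, 2), (0, 2)]
diamond = [(1, 0), (2, 1), (1, 2), (0, 1)]  # the square's inscribed box, rotated 45 degrees
print(obb_iou(square, diamond))  # 0.5
```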

```python
import supervision as sv
from supervision.metrics import F1Score, MetricTarget

predictions = sv.Detections(...)
targets = sv.Detections(...)

# Compute F1 on oriented bounding boxes instead of axis-aligned boxes
f1_metric = F1Score(metric_target=MetricTarget.ORIENTED_BOUNDING_BOXES)
f1_result = f1_metric.update(predictions, targets).compute()
```

- Introducing Smart Labels! When `smart_position` is set for `LabelAnnotator`, `RichLabelAnnotator` or `VertexLabelAnnotator`, the labels will move around to avoid overlapping others. (#1625)

```python
import cv2
import supervision as sv
from ultralytics import YOLO

image = cv2.imread("image.jpg")
label_annotator = sv.LabelAnnotator(smart_position=True)

model = YOLO("yolo11m.pt")
results = model(image)[0]
detections = sv.Detections.from_ultralytics(results)

# Labels that would overlap are repositioned automatically
annotated_frame = label_annotator.annotate(image.copy(), detections)
sv.plot_image(annotated_frame)
```
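These notes don't describe how labels are repositioned, so the following is only a hypothetical illustration of the general idea, not supervision's algorithm: each label is nudged upward in small steps until it no longer overlaps any label placed before it. Moving only upward, the `step` size and the `max_steps` cap are choices made for this sketch; a real layout might also move labels sideways and keep them inside the image.

```python
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), y grows downward


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two rectangles share a region of positive area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def place_labels(labels: List[Rect], step: float = 1.0, max_steps: int = 100) -> List[Rect]:
    """Greedily nudge each label upward until it clears all labels placed before it.

    If no free spot is found within max_steps, the last position tried is kept.
    """
    placed: List[Rect] = []
    for x0, y0, x1, y1 in labels:
        candidate = (x0, y0, x1, y1)
        for k in range(max_steps):
            shift = k * step
            candidate = (x0, y0 - shift, x1, y1 - shift)
            if not any(overlaps(candidate, other) for other in placed):
                break
        placed.append(candidate)
    return placed


labels = [(0, 10, 20, 20), (5, 12, 25, 22)]
print(place_labels(labels))  # [(0, 10.0, 20, 20.0), (5, 0.0, 25, 10.0)]
```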

- Added the `metadata` variable to `Detections`. It allows you to store custom data per image, rather than per detected object as was possible with the `data` variable. For example, `metadata` could be used to store the source video path, camera model or camera parameters. (#1589)
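The difference between the two fields comes down to a length invariant. A toy container makes it visible: `data` holds one value per detection and is filtered along with the detections, while `metadata` describes the whole image and is carried over unchanged. `ToyDetections`, `set_data` and `filter` are hypothetical and only mirror the idea; they are not the `sv.Detections` API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

Box = Tuple[float, float, float, float]


@dataclass
class ToyDetections:
    """Hypothetical, simplified stand-in for a detections container."""

    boxes: List[Box]
    data: Dict[str, List[Any]] = field(default_factory=dict)  # one value per detection
    metadata: Dict[str, Any] = field(default_factory=dict)  # free-form, per image

    def set_data(self, key: str, values: List[Any]) -> None:
        # Per-detection values must line up one-to-one with the boxes.
        if len(values) != len(self.boxes):
            raise ValueError(f"data[{key!r}] has {len(values)} items, expected {len(self.boxes)}")
        self.data[key] = list(values)

    def filter(self, keep: List[bool]) -> "ToyDetections":
        # Filtering slices `data` along with the boxes but copies `metadata` as-is.
        boxes = [box for box, k in zip(self.boxes, keep) if k]
        data = {key: [v for v, k in zip(values, keep) if k] for key, values in self.data.items()}
        return ToyDetections(boxes, data, dict(self.metadata))


detections = ToyDetections(boxes=[(0, 0, 10, 10), (5, 5, 20, 20)])
detections.set_data("object_number", [0, 1])
detections.metadata["camera_model"] = "Luxonis OAK-D"
subset = detections.filter([False, True])
print(subset.data, subset.metadata)  # {'object_number': [1]} {'camera_model': 'Luxonis OAK-D'}
```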

```python
import supervision as sv
from ultralytics import YOLO

model = YOLO("yolov8m.pt")
result = model("image.png")[0]
detections = sv.Detections.from_ultralytics(result)

# Items in `data` must match the number of detections
object_ids = list(range(len(detections)))
detections.data["object_number"] = object_ids

# Items in `metadata` can be of any length
detections.metadata["camera_model"] = "Luxonis OAK-D"
```

- Added a `py.typed` type hints metafile. It should provide a stronger signal to type checkers and IDEs that type support is available. (#1586)

🌱 Changed

- `ByteTrack` no longer requires `detections` to have a `class_id`. (#1637)

- `draw_line`, `draw_rectangle`, `draw_filled_rectangle`, `draw_polygon`, `draw_filled_polygon` and `PolygonZoneAnnotator` now come with a default color. (#1591)

- Dataset classes are treated as case-sensitive when merging multiple datasets. (#1643)

- Expanded metrics documentation with example plots and printed results (#1660)

- Added a usage example for polygon zones. (#1608)

- Small improvements to error handling in polygons. (#1602)
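In practice, case-sensitive merging means that `Dog` and `dog` end up as two separate classes. The sketch below illustrates that outcome with hypothetical helpers (`merge_class_names`, `remap_class_ids`); sorting the merged names is a choice made for this example, and the library's own merging code may differ. If two spellings should be one class, normalize the names before merging.

```python
from typing import Dict, List


def merge_class_names(class_lists: List[List[str]]) -> List[str]:
    """Union of class names across datasets, compared case-sensitively."""
    merged = set()
    for classes in class_lists:
        merged.update(classes)  # "Dog" and "dog" are different strings, so both survive
    return sorted(merged)


def remap_class_ids(classes: List[str], merged: List[str]) -> Dict[int, int]:
    """Map each dataset-local class index to its index in the merged list."""
    return {i: merged.index(name) for i, name in enumerate(classes)}


dataset_a = ["dog", "Cat"]
dataset_b = ["Dog", "cat"]
merged = merge_class_names([dataset_a, dataset_b])
print(merged)  # ['Cat', 'Dog', 'cat', 'dog']
print(remap_class_ids(dataset_a, merged))  # {0: 3, 1: 0}
```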

🔧 Fixed

- Updated `ByteTrack`, removing shared variables. Previously, multiple instances of `ByteTrack` would share some data, requiring liberal use of `tracker.reset()`. (#1603), (#1528)

- Fixed a bug where the `class_agnostic` setting in `MeanAveragePrecision` would not work. (#1577)

- Removed the welcome workflow from our CI system. (#1596)
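The `ByteTrack` fix targets a general Python pitfall: state stored on the class (or at module level) is shared by every instance, so two trackers interfere unless one of them is reset. The classes below are hypothetical and only illustrate the pattern and its fix; they are not `ByteTrack`'s code.

```python
class SharedStateTracker:
    # Pitfall: a mutable class attribute is created once and shared by all instances.
    id_counter = [0]

    def next_id(self) -> int:
        self.id_counter[0] += 1
        return self.id_counter[0]


class PerInstanceTracker:
    def __init__(self) -> None:
        # Fix: each instance creates and owns its own counter.
        self.id_counter = 0

    def next_id(self) -> int:
        self.id_counter += 1
        return self.id_counter


first, second = SharedStateTracker(), SharedStateTracker()
for _ in range(2):
    first.next_id()
print(second.next_id())  # 3: the second tracker continues the first one's IDs

first, second = PerInstanceTracker(), PerInstanceTracker()
for _ in range(2):
    first.next_id()
print(second.next_id())  # 1: independent state
```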

✅ No removals or deprecations this time!

⚙️ Internal Changes

- Large refactor of `ByteTrack` (#1603)
  - `STrack` moved to a separate class
  - Removed superfluous `BaseTrack` class
  - Removed unused variables

- Large refactor of `RichLabelAnnotator`, matching its contents with `LabelAnnotator`. (#1625)

🏆 Contributors

@onuralpszr (Onuralp SEZER), @kshitijaucharmal (KshitijAucharmal), @grzegorz-roboflow (Grzegorz Klimaszewski), @Kadermiyanyedi (Kader Miyanyedi), @PrakharJain1509 (Prakhar Jain), @DivyaVijay1234 (Divya Vijay), @souhhmm (Soham Kalburgi), @joaomarcoscrs (João Marcos Cardoso Ramos da Silva), @AHuzail (Ahmad Huzail Khan), @DemyCode (DemyCode), @ablazejuk (Andrey Blazejuk), @LinasKo (Linas Kondrackis)

A special thanks goes out to everyone who joined us for Hacktoberfest! We hope it was a rewarding experience and look forward to seeing you continue contributing and growing with our community. Keep building, keep innovating: your efforts make a difference! 🚀