One-shot learning using a Siamese network architecture on the Omniglot dataset


- Dataset

- File Structure

- Technologies Used

- Structure

- Executive Summary

- [1. Why use One-Shot Learning](#why-use-one-shot-learning)

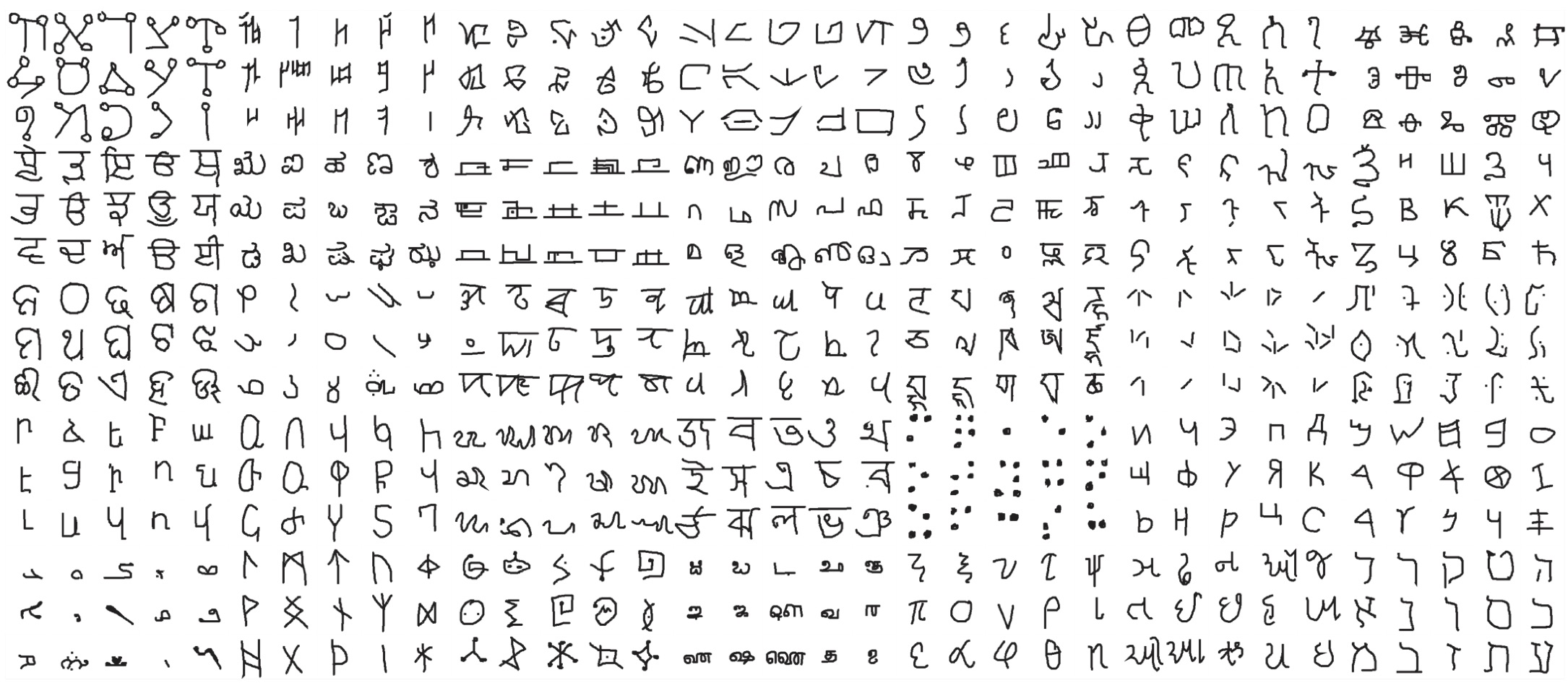

- Dataset: The Omniglot dataset is a collection of 1,623 hand-drawn characters from 50 alphabets. Each character has only 20 examples, each drawn by a different person, at a resolution of 105x105. Each image is paired with stroke data, a sequence of [x, y, t] coordinates with time (t) in milliseconds. The data is split so that 30 alphabets are used for training and the other 20 for validating the model.

- Python

- Pandas

- NumPy

- Matplotlib

- OpenCV

- Scikit-Learn

- Keras

- TensorFlow
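As a quick sanity check on the dataset figures quoted in the Dataset entry above, the sketch below computes the total image count and splits 50 alphabets into 30 for training and 20 for validation. The alphabet names are placeholders, and the seeded shuffle is an assumption made for illustration: the text does not say how alphabets are assigned to each side, and `split_alphabets` is a hypothetical helper rather than part of this repository.

```python
import random

# Figures taken from the dataset description.
NUM_CHARACTERS = 1623
EXAMPLES_PER_CHARACTER = 20
NUM_ALPHABETS = 50
NUM_TRAIN_ALPHABETS = 30
IMAGE_SIDE = 105


def split_alphabets(alphabets, num_train=NUM_TRAIN_ALPHABETS, seed=0):
    """Split alphabet names into disjoint training and validation lists.

    Assumption: a seeded shuffle decides the assignment. The split is done
    per alphabet, so no character appears on both sides.
    """
    shuffled = sorted(alphabets)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:num_train], shuffled[num_train:]


# Placeholder names standing in for the real alphabet names.
alphabets = [f"alphabet_{i:02d}" for i in range(NUM_ALPHABETS)]
train_alphabets, val_alphabets = split_alphabets(alphabets)

total_images = NUM_CHARACTERS * EXAMPLES_PER_CHARACTER
pixels_per_image = IMAGE_SIDE * IMAGE_SIDE

print(total_images)                              # 32460
print(pixels_per_image)                          # 11025
print(len(train_alphabets), len(val_alphabets))  # 30 20
```

Splitting by alphabet rather than by image means the validation characters are never seen during training, which is what makes the evaluation a one-shot test.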

In conventional image processing, an image is passed through a CNN (Convolutional Neural Network) to extract features, from which objects or edges are detected or classified. This is computationally intensive and requires a very large training set to reduce bias. Another issue is that the model has to be retrained whenever new training data is added. One-shot learning instead extracts features from a small set of training data and makes a faster prediction based on similarity.

Example: Face unlock on phones takes only a few images of your face, extracts features from them, and stores those features on the device. When a new face is added, the entire model does not have to be retrained; the phone extracts the features from each image and compares them for similarity, which reduces the time it takes to unlock while remaining reliable.
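The enroll-then-compare idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the model used in this project: `embed` is a hypothetical stand-in for a trained feature extractor (here it only L2-normalises the pixel values), cosine similarity is an assumed choice of similarity measure, and `OneShotMatcher` is a name invented for the example.

```python
import math


def embed(image):
    """Hypothetical stand-in for a trained feature extractor.

    A real Siamese branch would be a learned CNN; this version only
    scales the flat pixel list to unit length.
    """
    norm = math.sqrt(sum(p * p for p in image)) or 1.0
    return [p / norm for p in image]


def cosine_similarity(a, b):
    # Both inputs are unit length, so the dot product is the cosine.
    return sum(x * y for x, y in zip(a, b))


class OneShotMatcher:
    def __init__(self):
        self.gallery = {}  # label -> stored embedding

    def enroll(self, label, image):
        # Adding a class stores one embedding; nothing is retrained.
        self.gallery[label] = embed(image)

    def predict(self, image):
        # Return the enrolled label whose embedding is most similar.
        query = embed(image)
        return max(
            self.gallery,
            key=lambda label: cosine_similarity(self.gallery[label], query),
        )


# Toy 4-pixel "images", one example per class.
matcher = OneShotMatcher()
matcher.enroll("vertical", [1.0, 0.0, 1.0, 0.0])
matcher.enroll("horizontal", [1.0, 1.0, 0.0, 0.0])
first = matcher.predict([0.9, 0.1, 1.0, 0.0])

# A new class is added later with a single example and no retraining.
matcher.enroll("diagonal", [1.0, 0.0, 0.0, 1.0])
second = matcher.predict([1.0, 0.0, 0.1, 0.9])

print(first, second)  # vertical diagonal
```

Enrolling a new class costs one forward pass and one stored vector, which is why adding a face or a character does not require retraining the feature extractor.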