
Design Document

v1.0 - 2023.10.08 Initial version

v1.1 - 2023.10.22 Upload Frontend Class Diagram, Update API Documentation & External Libraries

v1.2 - 2023.11.05 Update Backend Class Diagram & API Documentation

v1.3 - 2023.11.19 Update Testing Plan

v1.4 - 2023.11.28 Update on

- Frontend Class diagram

- Backend Class diagram

- ML Deploy

- UI Demo

- Design Patterns

v1.5 - 2023.11.29 Update on

- Unit Testing

- Design Patterns

- API Documentation

v1.6 - 2023.12.10 Update on

- Backend Class Diagram

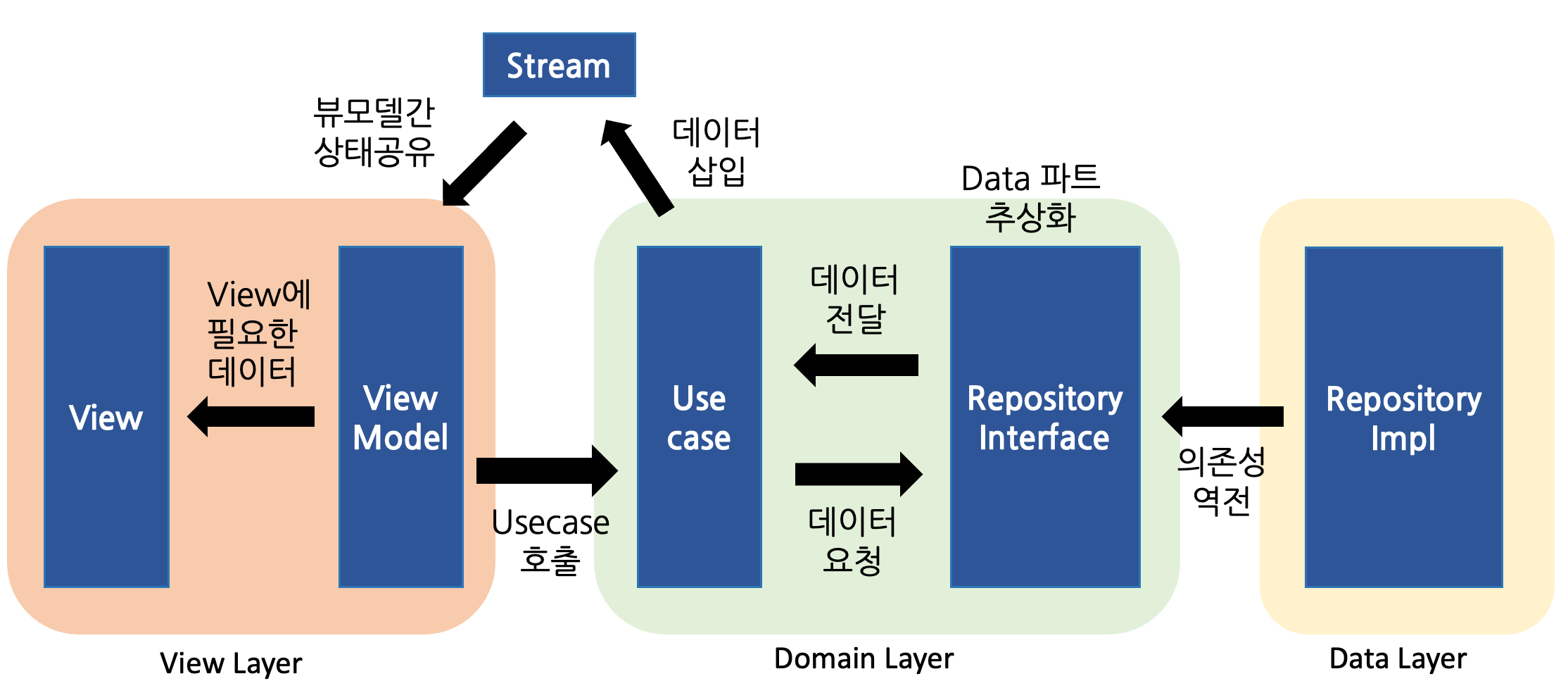

- The View consists of the implementations of the screens that users see.

- It displays data received from the server to users and accepts user input.

- If a user input involves business logic, the methods that handle it are all called from the ViewModel.

- If a user input does not involve business logic, the methods that handle it are declared and used within the View itself.

- The ViewModel acts as a mediator between the View and the UseCase (business logic).

- It holds the data to be displayed in the View, and any method that handles a business-logic-related user input from the View should be declared within the ViewModel.

- The Need to Share State Between ViewModels

- The main issue with ViewModels is the need to share state between them. A ViewModel shouldn't be too large, so some degree of separation is necessary. For example, the user’s personal information and the list of posts written by the user could both be managed within a single ViewModel, since both pertain to the user. However, managing both sets of information in one ViewModel is cumbersome and creates problems due to high coupling. It is therefore more appropriate to manage them in separate ViewModels.

- Once the ViewModel is divided, however, there are cases where multiple ViewModels must be updated at the same time. For example, if the user changes their nickname, both the ViewModel holding the user's personal information and the ViewModel holding their posts may need to reflect the change. In Flutter, accessing a ViewModel through Provider requires a BuildContext, which makes it difficult for ViewModels to share state with each other. Using a navigatorKey to obtain a context from anywhere also feels like a workaround that does not make full use of Flutter itself.

- State Sharing Between ViewModels Using Stream

- Therefore, when ViewModels need to share state, we use a Stream. If specific data is needed by multiple ViewModels, the data is placed in a Stream, and each ViewModel subscribes to that Stream to update its own state.

- In other words, Stream acts as a mediator that allows multiple ViewModels to share states.

- Separation of Data Calls and Screen Rendering Using Stream

- Additionally, using Stream allows for the separation of data call logic and screen rendering logic. The implementation method is as follows:

1. The View receives user input, and the ViewModel makes a data call through the UseCase.

2. Once the UseCase receives data from the server, it places the data into a Stream.

Example:

```dart
class FetchMembersUseCase {
  final MemberRepository memberRepository;

  const FetchMembersUseCase({
    required this.memberRepository,
  });

  Future<void> call() async {
    // Request the member list from the server through the repository.
    final memberDTOListStatus = await memberRepository.fetchMembers();
    if (memberDTOListStatus.isFail) {
      return; // On failure nothing is published; subscribers keep their current state.
    }
    // Convert transport objects (DTOs) into domain models.
    final List<MemberDTO> memberDTOList = memberDTOListStatus.data;
    final List<Member> memberList =
        memberDTOList.map((e) => Member.fromMemberDTO(e)).toList();
    // Publish the result; every ViewModel subscribed to MemberListStream receives it.
    MemberListStream.add(memberList);
  }
}
```

3. The ViewModel subscribes to the Stream and renders the screen when data is received.

- In this way, the ViewModel requests data in step 1 and renders the screen in step 3 once the data arrives, which keeps the two pieces of logic separate.
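The app itself uses Dart Streams (in Flutter this is typically a `StreamController.broadcast()`), but the same publish/subscribe flow can be sketched in Python for a runnable, language-neutral illustration. Everything here is hypothetical: `BroadcastStream` stands in for `MemberListStream`, and the two ViewModel classes and the hard-coded member list are made up. It shows one UseCase publishing data once and two separate ViewModels updating their own state from it.

```python
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class BroadcastStream(Generic[T]):
    """Minimal stand-in for a Dart broadcast stream: every listener receives every event."""

    def __init__(self) -> None:
        self._listeners: List[Callable[[T], None]] = []

    def listen(self, on_data: Callable[[T], None]) -> None:
        self._listeners.append(on_data)

    def add(self, event: T) -> None:
        # Deliver the event to every current subscriber, in subscription order.
        for on_data in list(self._listeners):
            on_data(event)


class MemberListViewModel:
    def __init__(self, stream: "BroadcastStream[List[str]]") -> None:
        self.members: List[str] = []
        stream.listen(self._on_members)

    def _on_members(self, members: List[str]) -> None:
        # Step 3: update the state the screen renders from.
        self.members = members


class MemberCountViewModel:
    def __init__(self, stream: "BroadcastStream[List[str]]") -> None:
        self.count = 0
        stream.listen(self._on_members)

    def _on_members(self, members: List[str]) -> None:
        self.count = len(members)


def fetch_members_use_case(stream: "BroadcastStream[List[str]]") -> None:
    # Step 2: a real use case would call the repository; the data is hard-coded here.
    stream.add(["alice", "bob"])


# Hypothetical shared stream, playing the role of MemberListStream.
member_list_stream: "BroadcastStream[List[str]]" = BroadcastStream()
list_vm = MemberListViewModel(member_list_stream)
count_vm = MemberCountViewModel(member_list_stream)
fetch_members_use_case(member_list_stream)  # step 1: triggered by user input
print(list_vm.members, count_vm.count)  # ['alice', 'bob'] 2
```

Neither ViewModel knows about the other; the stream is the only shared dependency, which is what allows them to stay small and separate.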


- A UseCase can be thought of as a specification document that defines the functions needed in this app. All planning intentions should be well encapsulated in the UseCase.

- If a planner who knows a bit of coding wants to check the code, they should be able to confirm the plan by looking at the UseCase.

- UseCases should be as clear and concise as possible.

- **UseCases should only be called from the ViewModel.** They should not be called from the View. Internally, UseCases call the service_interface and repository_interface.

- A Repository is the layer used to access the server's database.

- It is called a "repository interface" because it is declared as an abstract class to achieve dependency inversion.

- For example, the current repository interfaces include admin_repository, demand_repository, member_repository, and work_history_repository.

- The repository interfaces are abstract classes; concrete classes implement them to provide the actual functionality. As a result, the Domain Layer can use business logic without knowing how the Repository is implemented internally.
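The dependency inversion described above can be sketched in Python (the project declares its interfaces as Dart abstract classes). In this sketch, which is an illustration rather than the project's code, the use case depends only on an abstract `MemberRepository`; `InMemoryMemberRepository`, the `Member` fields, and `fetch_members` are hypothetical names, and a real implementation would call the server instead of returning a stored list.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Member:
    member_id: int
    nickname: str


class MemberRepository(ABC):
    """Repository interface: the domain layer only ever sees this abstract class."""

    @abstractmethod
    def fetch_members(self) -> List[Member]:
        ...


class InMemoryMemberRepository(MemberRepository):
    """Hypothetical concrete implementation; a real one would request data from the server."""

    def __init__(self, members: List[Member]) -> None:
        self._members = list(members)

    def fetch_members(self) -> List[Member]:
        return list(self._members)


class FetchMembersUseCase:
    def __init__(self, member_repository: MemberRepository) -> None:
        # The use case receives the interface, never a concrete class.
        self._repository = member_repository

    def __call__(self) -> List[Member]:
        return self._repository.fetch_members()


use_case = FetchMembersUseCase(InMemoryMemberRepository([Member(1, "alice")]))
print(use_case())  # [Member(member_id=1, nickname='alice')]
```

Swapping the server-backed repository for a test double requires no change to `FetchMembersUseCase`, which is the practical payoff of declaring the repository as an interface.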

- Controller (Server-Side Logic):

- Processes requests from the client, executes business logic, interacts with the model, and sends back the response.

- Implement using the Django backend framework.

- Model (Data Handling):

- Responsible for data retrieval, storage, and processing.

- Interacts with databases, external APIs, or other data sources.

- Implement using ORM tools, database drivers, or data handling libraries.

- Database

- Stores users’ emotional state records, investment decisions, and other relevant data.

- Consider using a SQL database such as MySQL for structured data, or a NoSQL database such as MongoDB for a flexible data schema.

1. User Authentication

- Register

- Endpoint: api/user/signup/

- Request: POST

{ "google_id": "string", "nickname": "string" }

- Response

- Status: 201 Created

{ "access token": "string", "refresh token": "string" }

- Status: 400 Bad Request

{ "<error type>": [ "<error message>" ] }

- Sign in

- Endpoint: api/user/signin/

- Request: POST

{ "google_id": "string" }

- Response

- Status: 200 OK

{ "access token": "string", "refresh token": "string" }

- Status: 401 Unauthorized

{ "message": "User with Google id ‘<google_id>’ doesn’t exist." }

- Sign out

- Endpoint: api/user/signout/

- Request: POST

{}

- Response

- Status: 200 OK

{ "message": "Signout Success" }

- Status: 401 Unauthorized

{ "<error type>": [ "<error message>" ] }

- Verify

- Endpoint: api/user/verify/

- Request: POST

{}

- Response

- Status: 200 OK

{ "user_id": "int", "google_id": "string", "nickname": "string" }

- Status: 401 Unauthorized

{ "<error type>": [ "<error message>" ] }
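A minimal client-side sketch of calling the authentication endpoints with Python's standard library. The base URL is a placeholder, and sending the access token as an `Authorization: Bearer <token>` header for sign-out and verify is an assumption: the request bodies above are empty, and this document does not state how the token is transmitted. The helper names are hypothetical. The functions only build `urllib.request.Request` objects; passing one to `urllib.request.urlopen` would actually send it.

```python
import json
import urllib.request
from typing import Optional

BASE_URL = "https://example.com/"  # placeholder, not the real server address


def build_request(path: str, payload: dict,
                  access_token: Optional[str] = None) -> urllib.request.Request:
    """Build a JSON POST request for one of the api/user/ endpoints."""
    headers = {"Content-Type": "application/json"}
    if access_token is not None:
        # Assumption: the server reads the access token from a Bearer header.
        headers["Authorization"] = f"Bearer {access_token}"
    return urllib.request.Request(
        BASE_URL + path,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def signup_request(google_id: str, nickname: str) -> urllib.request.Request:
    return build_request("api/user/signup/", {"google_id": google_id, "nickname": nickname})


def signin_request(google_id: str) -> urllib.request.Request:
    return build_request("api/user/signin/", {"google_id": google_id})


def signout_request(access_token: str) -> urllib.request.Request:
    return build_request("api/user/signout/", {}, access_token)


def verify_request(access_token: str) -> urllib.request.Request:
    return build_request("api/user/verify/", {}, access_token)


req = signin_request("1234567890")
print(req.get_method(), req.full_url)  # POST https://example.com/api/user/signin/
```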

2. Emotion

- Get emotion data by month (for calendar)

- Endpoint: api/emotions/<int: year>/<int: month>/

- Description: Retrieves the user's emotion records for the given month.

- Request: GET

{}

- Response

- Status: 200 OK

{ "emotions": [ { "date": "int", "emotion": "int", "text": "string" }, ... ] }

- Get emotion data by range (for report)

- Endpoint: api/emotions/

- Description: Retrieves the user's emotion records within the specified date range.

- Request: GET

Query: sdate=<string, e.g. 2023-12-03>&edate=<string>

- Response

- Status: 200 OK

[ { "id": "int", "date": "string", "value": "int", "text": "string", "user": "list" }, ... ]

- Record

- Endpoint: api/emotions/<int: year>/<int: month>/<int: day>/

- Description: The user registers, modifies, or deletes an emotion through the calendar on the main screen.

- Request

- PUT

{ "emotion": "int", "text": "string" }

- DELETE

{}

- Response (PUT)

- Status: 201 Created / 200 OK

{ "message": "Record Success" }

- Status: 405 Method Not Allowed

{ "message": "Not allowed to modify emotions from before this week" }

- Response (DELETE)

- Status: 200 OK

{ "message": "Delete Success" }

- Status: 404 Not Found

{ "message": "No emotion record for that date" }

- Status: 405 Method Not Allowed

{ "message": "Not allowed to modify emotions from before this week" }
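The 405 responses above reject changes to emotions recorded before the current week. Below is a minimal sketch of that check, assuming weeks start on Monday (the ISO convention used by Python's `date.weekday()`); the function names are hypothetical, the sketch only enforces the lower bound, and the server's actual rule may differ.

```python
from datetime import date, timedelta


def start_of_week(day: date) -> date:
    # date.weekday() is 0 for Monday, so this returns the Monday of `day`'s week.
    return day - timedelta(days=day.weekday())


def can_modify_emotion(record_date: date, today: date) -> bool:
    """True if `record_date` is in the current week or later (lower bound only)."""
    return record_date >= start_of_week(today)


today = date(2023, 12, 6)  # a Wednesday
print(can_modify_emotion(date(2023, 12, 4), today))  # True  (Monday of this week)
print(can_modify_emotion(date(2023, 12, 3), today))  # False (Sunday of last week)
```

A PUT or DELETE handler would return the 405 response shown above whenever this check returns `False`.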

3. Stock

- Get stock information by name

- Endpoint: stock/

- Description: Retrieves information on all stocks whose names contain the requested string.

- Request: GET

Query: name=<string>

- Response

- Status: 200 OK

[ { "ticker": "int", "name": "string", "market_type": "char", "current_price": "int", "closing_price": "int", "fluctuation_rate": "float" }, ... ]

- Status: 404 Not Found

- Get stock information by ticker

- Endpoint: stock/<int: ticker>/

- Description: Retrieves detailed information on a specific stock.

- Request: GET

{}

- Response

- Status: 200 OK

{ "ticker": "int", "name": "string", "market_type": "char", "current_price": "int", "closing_price": "int", "fluctuation_rate": "float" }

- Status: 404 Not Found

{ "message": "Stock with ticker ‘<ticker>’ doesn’t exist." }

- Get my_stock_history information

- Endpoint: mystock/

- Description: Retrieves all stock trading records.

- Request: GET

Query: name=<string>

- Response

- Status: 200 OK

[ { "id": "int", "ticker": "int", "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }, ... ]

- Status: 404 Not Found

- Get my_stock_history by ticker

- Endpoint: mystock/<int: ticker>/

- Description: Retrieves the trading history of a specific stock.

- Request: GET

{}

- Response

- Status: 200 OK

{ "id": "int", "ticker": "int", "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }

- Status: 404 Not Found

{ "message": "Stock with ticker ‘<ticker>’ doesn’t exist." }

- Create my_stock_history

- Endpoint: mystock/

- Description: Creates a stock trading record.

- Request: POST

{ "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }

- Response

- Status: 200 OK

{ "id": "int", "ticker": "int", "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }

- Status: 404 Not Found

- Update my_stock_history

- Endpoint: mystock/{id:int}

- Description: Updates a specific stock trading record.

- Request: PUT

{ "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }

- Response

- Status: 200 OK

{ "id": "int", "ticker": "int", "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }

- Status: 404 Not Found

- Delete my_stock_history

- Endpoint: mystock/{id:int}

- Description: Deletes a specific stock trading record.

- Request: DELETE

{}

- Response

- Status: 200 OK

- Status: 404 Not Found

- Get my_stock_history information by date

- Endpoint: mystock/?year=<int>&month=<int>&day=<int>

- Description: Retrieves the stock trading history for a given date.

- Request: GET

{}

- Response

- Status: 200 OK

{ "id": "int", "ticker": "int", "stock": "char", "user": "int", "price": "int", "transaction_type": "char", "quantity": "float" }

- Status: 404 Not Found

- User balance

- Endpoint: balance/

- Description: Retrieves the user's stock balance.

- Request: GET

{}

- Response

- Status: 200 OK

[ { "ticker": "int", "balance": "int", "quantity": "int", "return": "int" }, ... ]

- Status: 404 Not Found

{ "message": "User with Google id ‘<google_id>’ doesn’t exist." }

- Get return rate by emotion

- Endpoint: balance/return_rate/?sdate=%Y-%m-%d&edate=%Y-%m-%d

- Description: Calculates the rate of return for each emotion.

- Request: GET

{}

- Response

- Status: 200 OK

[ { "emotion": "int", "net_price": "int", "total_price": "int", "return_rate": "int" }, ... ]

- Status: 404 Not Found

{ "message": "User with Google id ‘<google_id>’ doesn’t exist." }
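The name search endpoint (stock/ with the name query) can be sketched as a case-sensitive substring match over stock records. This framework-free sketch rests on stated assumptions: the `STOCKS` rows and their values are made up, `search_stocks` is a hypothetical name, and returning 404 with an empty list when nothing matches is inferred from the response list above. In Django, the filter itself would typically be written as `Stock.objects.filter(name__contains=name)`.

```python
from typing import Dict, List, Tuple

# Made-up sample rows in the response shape documented above.
STOCKS: List[Dict] = [
    {"ticker": 1, "name": "Alpha Electronics", "market_type": "K",
     "current_price": 70000, "closing_price": 69000, "fluctuation_rate": 1.45},
    {"ticker": 2, "name": "Alpha Bio", "market_type": "Q",
     "current_price": 15000, "closing_price": 15500, "fluctuation_rate": -3.23},
    {"ticker": 3, "name": "Beta Motors", "market_type": "K",
     "current_price": 50000, "closing_price": 50000, "fluctuation_rate": 0.0},
]


def search_stocks(name: str) -> Tuple[int, List[Dict]]:
    """Return (status code, body) for a name search; substring match, case-sensitive."""
    matches = [stock for stock in STOCKS if name in stock["name"]]
    if not matches:
        return 404, []
    return 200, matches


status, body = search_stocks("Alpha")
print(status, [s["ticker"] for s in body])  # 200 [1, 2]
```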

4. Report

- Get weekly report

- Endpoint: report/

- Description: Retrieves the latest reports on the stocks the user holds. (If no reports exist for the user's holdings, it retrieves the latest reports on the top 5 stocks by market capitalization.)

- Request: GET

{}

- Response

- Status: 200 OK

[ { "title": "string", "body": "string" }, ... ]

- Status: 404 Not Found

{ "message": "User with Google id ‘<google_id>’ doesn’t exist." }

- Status: 404 Not Found

{ "message": "No report for that week" }
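The fallback rule in the report description can be sketched as follows. The inputs (`held_tickers`, `reports_by_ticker`, `market_caps`) and the `Report` fields are hypothetical data structures chosen for illustration, and "latest" is interpreted as the single most recent report per stock. An empty result would correspond to the "No report for that week" 404 response.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass(frozen=True)
class Report:
    ticker: int
    published: date
    title: str
    body: str


def latest_report(reports: List[Report]) -> Report:
    return max(reports, key=lambda r: r.published)


def select_reports(held_tickers: List[int],
                   reports_by_ticker: Dict[int, List[Report]],
                   market_caps: Dict[int, int],
                   top_n: int = 5) -> List[Report]:
    """Latest report per held stock; fall back to the top-N stocks by market cap."""
    held = [latest_report(reports_by_ticker[t])
            for t in held_tickers if reports_by_ticker.get(t)]
    if held:
        return held
    # No reports for the user's holdings: use the largest companies instead.
    top = sorted(market_caps, key=market_caps.get, reverse=True)[:top_n]
    return [latest_report(reports_by_ticker[t]) for t in top if reports_by_ticker.get(t)]


reports = {
    1: [Report(1, date(2023, 12, 1), "old", "..."), Report(1, date(2023, 12, 8), "new", "...")],
    2: [Report(2, date(2023, 12, 8), "big cap", "...")],
}
caps = {1: 100, 2: 500}
print([r.title for r in select_reports([1], reports, caps)])  # ['new']
print([r.title for r in select_reports([3], reports, caps)])  # ['big cap', 'new']
```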

Generating the stock report text begins with extracting essential data (labels) from unlabeled text. To build good summaries, the top five keywords of each article are extracted with a keyword extraction algorithm. Sentence-level extractive summarization was also considered, but keyword-level extraction is more suitable for this project because the important content of an article is often scattered throughout it.

Choosing a keyword extraction algorithm is a complex decision that requires justification. It calls for a dataset on which every candidate algorithm can be trained uniformly, quantitative metrics for comparing their performance, and code optimization to get the best performance out of each algorithm.

Our team experimented with keyword extraction and chose KeyBERT. KeyBERT is a keyword extraction algorithm that uses BERT for text embedding; it extracts keywords by considering the overall content of a document and, through its understanding of context, distinguishes more relevant keywords from less relevant ones. Its high keyword extraction accuracy is its key advantage. However, the BERT model it relies on requires a large amount of training data and a long training time. KeyBERT extracts keywords as follows:

- Represent at the document level through document embeddings extracted by BERT.

- Represent candidate N-gram words/phrases at the phrase level using BERT word embeddings.

- Find the most similar words/phrases to the document using cosine similarity.

- Here, one can use MMR or Max Sum Similarity methods.

- Extract words/phrases that best describe the entire document.

- MMR adjusts the diversity and relevance of search results in search engines, minimizing redundancy and maximizing diversity in text summarization. It involves repeatedly selecting new candidates that are similar to the document but not to already selected keywords.
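To make the MMR selection concrete, here is a small pure-Python sketch that works on precomputed embedding vectors. In KeyBERT these vectors come from the BERT model; the three-dimensional toy vectors below are made up. The `diversity` parameter weighs relevance to the document against similarity to keywords already chosen (0.0 reduces to plain top-N by cosine similarity). In the KeyBERT library itself, MMR is enabled through the `use_mmr` and `diversity` arguments of `extract_keywords`.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(doc_vec: Sequence[float], candidates: Dict[str, Sequence[float]],
        top_n: int = 5, diversity: float = 0.5) -> List[str]:
    """Maximal Marginal Relevance: trade relevance to the document against redundancy."""
    doc_sim = {word: cosine(vec, doc_vec) for word, vec in candidates.items()}
    # Start with the candidate most similar to the whole document.
    selected = [max(doc_sim, key=doc_sim.get)]
    remaining = [word for word in candidates if word != selected[0]]

    def score(word: str) -> float:
        # Highest similarity to any keyword that has already been chosen.
        redundancy = max(cosine(candidates[word], candidates[s]) for s in selected)
        return (1 - diversity) * doc_sim[word] - diversity * redundancy

    while remaining and len(selected) < top_n:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


doc = [1.0, 1.0, 0.0]
cands = {
    "semiconductor": [1.0, 0.8, 0.0],
    "chip": [1.0, 0.7, 0.0],        # near-duplicate of "semiconductor"
    "earnings": [0.2, 1.0, 0.3],
}
print(mmr(doc, cands, top_n=2, diversity=0.0))  # ['semiconductor', 'chip']
print(mmr(doc, cands, top_n=2, diversity=0.5))  # ['semiconductor', 'earnings']
```

With `diversity=0.0` the near-duplicate "chip" is picked second; raising it to 0.5 penalizes that redundancy and selects "earnings" instead.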

The reason for experimenting with MMR: MMR ranks candidates by the cosine similarity between vectors, and it was designed to balance the relevance and diversity of search-engine results. We chose it because simple distance-based ranking tends to return several keywords with similar meanings; MMR minimizes this redundancy and maximizes diversity in text summarization.

Experiment method:

- Select the most similar keyword to the document.

- Repeatedly choose new candidates that are similar to the document and not similar to the already selected keywords.

- Repeat until five keywords with high diversity have been selected.

Experiment result: With MMR, the summaries did not always include all five extracted keywords, resulting in a score of only 0.622. MMR tended to select words that occur frequently in the article body without understanding their context.

The core content of articles obtained through keyword extraction in the previous step is a collection of words. This is not user-friendly and, although it captures important content, it does not cover all the essential information in the article. To present the content to users, a summary generation model is therefore used to create natural-language sentences that include the important content identified by the keywords.

Task Challenges: Generating summaries is difficult because it is hard to define what constitutes an appropriate summary, and there is no definitive answer since no reference summaries exist for training. This makes the task hard to solve by training a supervised model, and even after a generative model is trained, evaluating its performance is difficult. In this project the core content of each article was extracted as keywords for training and evaluation, but designing and training a model whose summaries must include this essential information is still a complex problem.

EmoStock solution: Existing approaches pre-train encoder-decoder transformer models to acquire summarization ability. Without supervised learning on datasets that provide reference summaries, however, these methods ultimately show reduced summarization performance. In this project we therefore use KoBART, a BART model trained on Korean, and fine-tune it through supervised learning.

Supervised fine-tuning requires a dataset of original text and summary pairs. Since the articles in the app database have no reference summaries, we used OpenAI's GPT-3.5 API to generate approximate summaries of these articles and used them as labels for supervised learning. We treat the GPT-generated summaries as approximate rather than correct because GPT-3.5's Korean generation is not perfect and there is no way to confirm that its summaries are appropriate. It would therefore be inappropriate to treat them as ground truth and rely on them uncritically for model training.

Experiment Reason: We conducted an experiment to see whether associations between economic content could be derived from Sentence Embedding vectors. The experiment also aimed to determine whether specific company names significantly influence the composition of these vectors.

Experiment Method:

- Data was structured as a list of [{company name, original article text}, ...].

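- Preprocessing turned each entry into [{company name, original text embedding vector list, replacement list, deletion list}], where the replacement and deletion lists hold embeddings of the text with the company name replaced or deleted.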

- We checked if there was a divergence in similarity values between original/replacement/deletion vectors for the same company name.

- A matrix was created by calculating the similarity of one article's vector with the vectors of other articles.
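The pairwise similarity matrix in the last step can be computed as below. This is a sketch with made-up three-dimensional vectors standing in for sentence embeddings; `similarity_matrix` is a hypothetical helper. Comparing an article with its company-name-deleted variant (high similarity expected if the name has little influence) against an unrelated article shows how a divergence would appear in the matrix.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similarity_matrix(vectors: List[Sequence[float]]) -> List[List[float]]:
    """Entry [i][j] is the cosine similarity between article i and article j."""
    return [[cosine(a, b) for b in vectors] for a in vectors]


# Made-up embeddings: an article, the same article with the company name deleted,
# and an unrelated article.
original = [0.9, 0.1, 0.4]
name_deleted = [0.8, 0.2, 0.4]
unrelated = [0.1, 0.9, 0.0]

matrix = similarity_matrix([original, name_deleted, unrelated])
for row in matrix:
    print([round(x, 3) for x in row])
```

The matrix is symmetric with ones on the diagonal, so only the upper triangle needs to be inspected when comparing articles.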

The following steps describe how a Google Cloud instance is used to run the ML model and generate EmoStock reports, and how those reports are transferred to the EmoStock database:

1. Set Up Google Cloud Instance:

- Initialize a new virtual machine (VM) instance on Google Cloud Platform (GCP).

- Configure the instance with the necessary computing resources.

- Install all required dependencies: Python, ML libraries, and any specific packages our ML model needs.

2. Deploy ML Model:

- Transfer the ML model files to the Google Cloud instance by uploading them directly through the GCP console.

- Set up the environment within the instance to run the ML model. This may involve creating a virtual environment, installing additional dependencies, and configuring system variables.

3. Crawl Reports:

- Implement a web crawling script on the instance that fetches new stock reports from various sources.

- Schedule the crawling script to run at regular intervals so that the most recent data is always available.

4. Run the ML Model:

- Process the crawled stock reports with our ML model. The model analyzes the reports and may assess the impact of various factors on stock performance, including emotional sentiment.

- The output of this model run is the EmoStock report, which contains insights and analytics derived from the crawled data.

5. Save EmoStock Report:

- Store the generated EmoStock reports in the file system of the Google Cloud instance.

- Keep the reports secure and organized, for example in a directory designated for these reports.

6. Transfer Reports to EmoStock DB:

- When the EmoStock database needs new reports, run an SCP command from our server to the Google Cloud instance.

- Authenticate securely with the Google Cloud instance using SSH keys or other secure methods.

- Pull the required reports from the Google Cloud instance to our database server. Ensure the transfer is secure and encrypted to prevent unauthorized access to the data; SCP runs over SSH, so data is encrypted in transit.

7. Database Update:

- Once the reports are transferred, our database system ingests and processes them.

- Update the database with the new report data, performing any necessary data transformation or integration.

- Our application can then access the latest EmoStock reports for presentation or analytics.

8. Automation and Monitoring:

- Automate the generation and transfer of reports as much as possible to reduce manual intervention and ensure timely updates.

9. Security and Compliance:

- Regularly review the security settings of both the Google Cloud instance and the EmoStock server, making sure that only authorized processes and users have access to the EmoStock reports.

- Ensure that our data handling practices comply with relevant data protection regulations.
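For the step that transfers reports to the EmoStock database, the pull could be issued from the EmoStock server with an scp command like the one built below. The user name, host (a documentation-range IP), key path, and directories are placeholders, not the project's actual values, and `build_scp_pull_command` is a hypothetical helper. `-i` selects the SSH private key and `-r` copies the report directory recursively. The list form is meant to be passed to `subprocess.run(cmd, check=True)`, which avoids shell quoting issues.

```python
import shlex
from typing import List


def build_scp_pull_command(user: str, host: str, remote_dir: str,
                           local_dir: str, key_path: str) -> List[str]:
    """Build an scp command that pulls reports from the GCP instance to this server."""
    return [
        "scp",
        "-i", key_path,                 # authenticate with an SSH private key
        "-r",                           # copy the whole report directory
        f"{user}@{host}:{remote_dir}",  # source on the Google Cloud instance
        local_dir,                      # destination on the EmoStock server
    ]


cmd = build_scp_pull_command(
    user="emostock",                    # placeholder values throughout
    host="203.0.113.10",
    remote_dir="/home/emostock/reports/",
    local_dir="/srv/emostock/incoming/",
    key_path="/home/deploy/.ssh/gcp_instance",
)
print(shlex.join(cmd))
# scp -i /home/deploy/.ssh/gcp_instance -r emostock@203.0.113.10:/home/emostock/reports/ /srv/emostock/incoming/
```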

- Abstract Factory Pattern:

Consistent Object Creation: It provides a way to encapsulate a group of individual factories that share a common goal. This ensures consistent object creation across different parts of the application, enhancing code reliability.

Promotes Scalability: By abstracting the creation process, it becomes easier to introduce new families of related objects without modifying existing code, making the system more scalable.

- Observer Pattern:

Dynamic Interaction: It provides a dynamic and flexible way to handle communication between objects. In a frontend context, this is particularly useful for updating the UI in response to state changes.

Real-Time Updates: When the state of one object changes, all of its dependents are notified automatically, which is ideal for responsive user interfaces.

Loose Coupling: Subjects and observers are loosely coupled. The subject doesn't need to know anything about its observers, which makes the components easy to reuse and extend.

What patterns does your team implement in your project code?

The frontend implements two core design patterns: the Abstract Factory Pattern and the Observer Pattern.

Where are the patterns implemented in the code?

The Abstract Factory Pattern is implemented in the core module of our application, where objects are created. It is used whenever the application needs to instantiate families of related objects, ensuring they are compatible with each other.

The Observer Pattern is implemented in the UI module of our application. It handles the dynamic interactions between the application state and the user interface, so that any change in the underlying data model is reflected in the UI in real time.

Why does your team implement the patterns?

We implement the Abstract Factory Pattern to encapsulate the object creation logic, making our codebase more organized and consistent. Instead of scattering conditional statements for object creation throughout the application, the pattern centralizes the creation logic in one place.

The Observer Pattern establishes a well-defined communication protocol between the application's state and its UI. This enables a push-based notification mechanism in which changes in the application state are automatically propagated to the UI components that need to reflect them.

For what purpose (benefit) does your team implement the patterns?

The Abstract Factory Pattern provides consistency and reliability in object creation. It keeps our application flexible to change, such as introducing new object families without altering existing code, which promotes scalability.

The Observer Pattern is used primarily to create dynamic and responsive user interfaces. It lets us update the UI in real time as the application state changes, so the user always sees the most up-to-date information. Moreover, by decoupling subjects and observers, we enhance the reusability and extensibility of our UI components.

Together, both patterns contribute to a codebase that is robust, maintainable, and adaptable to future requirements or changes in technology.

Class diagram explaining the patterns with your code (simply)

I chose the Abstract Factory and Observer design patterns because applying them did not require any changes to my class diagram. They fit the design well; in fact, I had already applied them before consciously deciding to use these design patterns.
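The Abstract Factory idea can be sketched in Python as a runnable illustration (the frontend itself is written in Dart). The product types, the mock family, and every class name below are hypothetical examples, not the project's classes. Client code receives one factory and obtains a compatible set of repositories from it without naming any concrete class.

```python
from abc import ABC, abstractmethod
from typing import List


class MemberRepository(ABC):
    @abstractmethod
    def fetch_nicknames(self) -> List[str]: ...


class StockRepository(ABC):
    @abstractmethod
    def search(self, name: str) -> List[str]: ...


class RepositoryFactory(ABC):
    """Abstract factory: creates a family of repositories that belong together."""

    @abstractmethod
    def member_repository(self) -> MemberRepository: ...

    @abstractmethod
    def stock_repository(self) -> StockRepository: ...


# Hypothetical mock family, e.g. for UI development without a server.
class MockMemberRepository(MemberRepository):
    def fetch_nicknames(self) -> List[str]:
        return ["tester"]


class MockStockRepository(StockRepository):
    def search(self, name: str) -> List[str]:
        return [s for s in ["Alpha", "Beta"] if name in s]


class MockRepositoryFactory(RepositoryFactory):
    def member_repository(self) -> MemberRepository:
        return MockMemberRepository()

    def stock_repository(self) -> StockRepository:
        return MockStockRepository()


def build_home_screen_data(factory: RepositoryFactory) -> dict:
    # Client code depends only on the abstract factory and abstract products.
    members = factory.member_repository().fetch_nicknames()
    stocks = factory.stock_repository().search("Al")
    return {"members": members, "stocks": stocks}


print(build_home_screen_data(MockRepositoryFactory()))
# {'members': ['tester'], 'stocks': ['Alpha']}
```

A server-backed family would add one more `RepositoryFactory` subclass with its own concrete repositories; `build_home_screen_data` would not change.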

- The 'Composite' pattern belongs to the 'Structural Patterns' category and is designed to organize objects into a tree structure. This allows individual objects and composite objects (grouped objects) to be treated uniformly. In the given model, the Stock model is structured based on base classes like BaseModel or TimestampModel. BaseModel and TimestampModel are used to form the larger structure of the Stock model, resembling the idea of the Composite pattern, which aims to combine and configure objects. The purpose of the Composite pattern is to manipulate objects in a consistent manner and to treat single and composite objects indistinguishably. Therefore, the structure of the Stock model based on BaseModel and TimestampModel in the given model can have a structure similar to the Composite design pattern.

- The 'Factory' pattern: Our app has only two types of users, regular users and administrators (superusers), but this may change as the app is developed and operated. For example, a special user who manages only the stock database may be needed. We therefore applied the Factory Method pattern to the code that creates users. Django's authentication system provides BaseUserManager, a base class for managers whose factory methods create User objects. We created a class called UserManager that inherits from BaseUserManager, through which we can create user or superuser objects.
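The sketch below mirrors the shape of that design without Django so that it runs standalone. `create_user` and `create_superuser` follow the method names Django expects on a custom user manager (the `createsuperuser` management command calls `create_superuser`), while the `User` fields and the `create_stock_manager` method for the hypothetical stock-database manager mentioned above are illustrative assumptions. In the project, `UserManager` extends `BaseUserManager` and saves users through the ORM.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    google_id: str
    nickname: str
    is_staff: bool = False
    is_superuser: bool = False
    role: Optional[str] = None  # hypothetical field for future user types


class UserManager:
    """Django-free stand-in for a BaseUserManager subclass: all user creation goes through here."""

    def _create(self, google_id: str, nickname: str, **extra) -> User:
        if not google_id:
            raise ValueError("google_id is required")
        return User(google_id=google_id, nickname=nickname, **extra)

    def create_user(self, google_id: str, nickname: str) -> User:
        return self._create(google_id, nickname)

    def create_superuser(self, google_id: str, nickname: str) -> User:
        return self._create(google_id, nickname, is_staff=True, is_superuser=True)

    # Hypothetical new user type: adding it touches only the manager.
    def create_stock_manager(self, google_id: str, nickname: str) -> User:
        return self._create(google_id, nickname, is_staff=True, role="stock_manager")


manager = UserManager()
admin = manager.create_superuser("g-1", "admin")
print(admin.is_superuser, manager.create_user("g-2", "alice").is_superuser)  # True False
```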

What patterns does your team implement in your project code?

In the backend of our project, we implement the Composite Pattern for structuring our models and the Factory Method Pattern for user object creation.

Where are the patterns implemented in the code?

The Composite Pattern is implemented within our model architecture. Specifically, it's used in the Stock model, which inherits behaviours and attributes from base classes like BaseModel and TimestampModel. These base classes provide a common interface and shared functionality, allowing us to treat all related objects uniformly.

The Factory Pattern is applied in the user management part of our code, where we handle the creation of different types of user accounts. We have a custom UserManager class that extends Django's BaseUserManager, providing us with the flexibility to create various user objects as needed.

Why does your team implement the patterns?

The Composite Pattern is utilized to maintain a consistent and hierarchical structure among our models. This pattern simplifies the process of managing and using various model instances by enabling us to treat them uniformly, whether they are individual objects or complex composites.

We implement the Factory Pattern to abstract the creation logic of user objects. This is particularly important for our application as it allows us to encapsulate the instantiation logic and makes the user creation process adaptable to future changes, such as introducing new types of users. For example, a special user who manages only the stock database may be needed during the process of developing and servicing our app.

For what purpose (benefit) does your team implement the patterns?

The Composite Pattern

- Structured Code and Consistent Interface: By using the Composite Pattern to structure models, related objects can be treated uniformly. For instance, the Stock model inherits from the BaseModel and TimestampModel base classes, ensuring that all Stock-related objects share common attributes and behaviors.

- Code Reusability and Ease of Maintenance: Concentrating repeated functionality and properties in BaseModel simplifies the code and makes it easier to maintain. Because functionality common to all models is defined in one place, it can be modified or extended by changing only BaseModel.

The Factory Pattern

- Enhanced Extensibility and Flexibility: Using the Factory Pattern for user object creation encapsulates the object creation logic, making the creation process easier to modify or extend. If new types of user objects need to be added or modified, changes are confined to the factory, minimizing the impact on other parts of the codebase.

- Flexibility in Object Creation: We can introduce new user types without changing existing code. This aligns with the Open/Closed Principle, one of the SOLID principles, which states that software entities should be open for extension but closed for modification.

Both patterns also save time and cost: reusable code and structured design patterns shorten development time and lower maintenance costs, because new features or system extensions can build on established structures.

In summary, the implementation of these design patterns in the backend of our project aligns with our goals for a robust, maintainable, and scalable system that can gracefully evolve as the needs of our application grow and change.

- Database: PostgreSQL

1. Unit Testing & Integration Testing:

Framework:

- Backend: Django test framework

- Coverage Goals: Aim for at least 80% code coverage for both frontend and backend components.

- Scope: all APIs, including the Emotion and Stock files.

- Procedure (integration testing): create tests that cover the interactions between multiple units (e.g., function calls, data flow).

2. Acceptance Testing: based on user stories