
Databend

Databend is a next-generation cloud-native data warehouse written in Rust. Built on object storage, it gives enterprises a unified lakehouse architecture and a big data analytics platform that separates compute from storage.

This article shows how to ingest data from AutoMQ into Databend using bend-ingest-kafka.

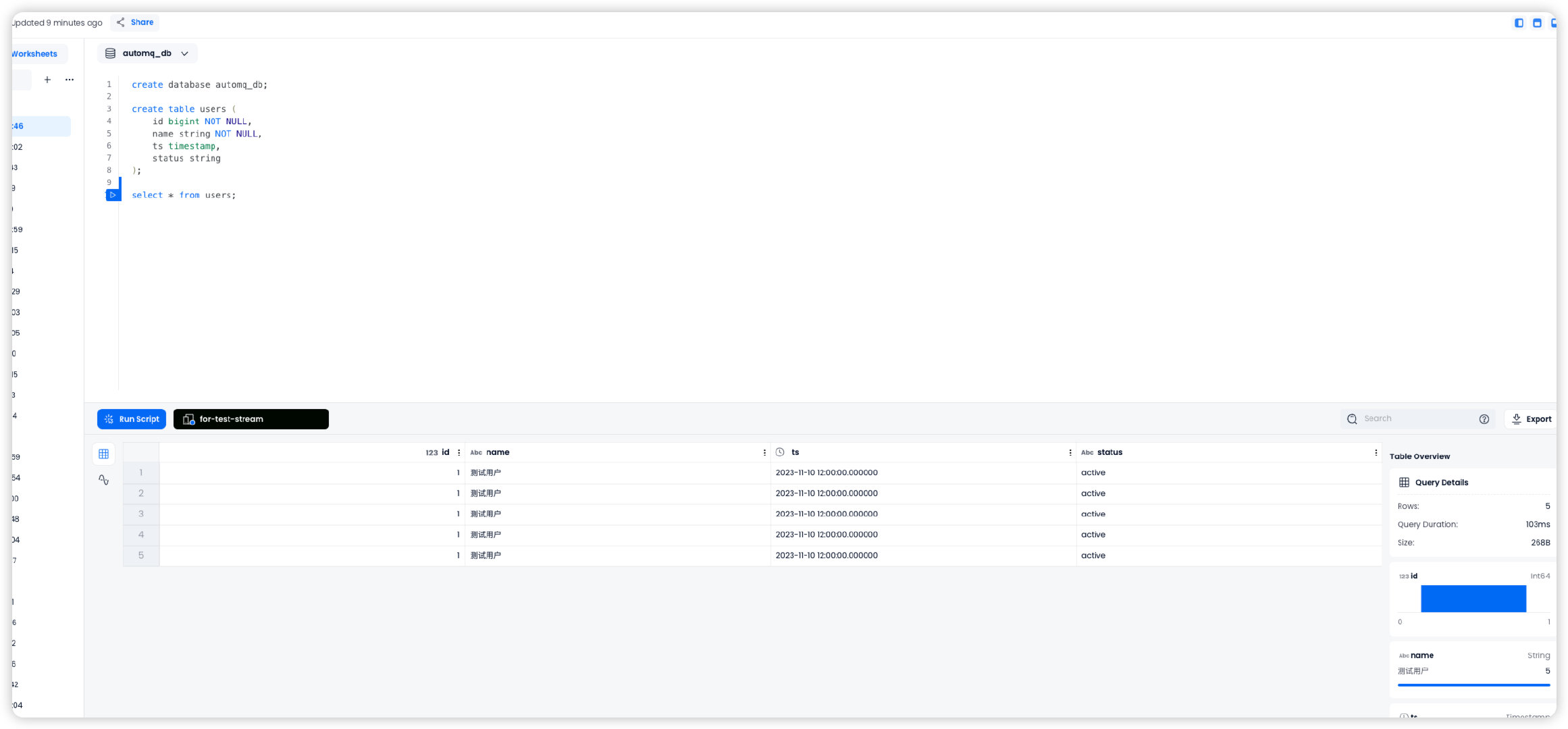

First, activate a warehouse in Databend Cloud, then create a database and a test table in a worksheet:

```sql
create database automq_db;

create table automq_db.users (
    id bigint NOT NULL,
    name string NOT NULL,
    ts timestamp,
    status string
);
```

Refer to Deploy Locally to deploy AutoMQ, and make sure that AutoMQ and Databend can reach each other over the network.

Next, create a topic named example_topic in AutoMQ and write a test JSON record into it, as described in the following steps.

To create a topic using the Apache Kafka® command-line tool, ensure that you have access to a Kafka environment and that the Kafka service is running. Here is an example command to create a topic:

```sh
./kafka-topics.sh --create --topic example_topic --bootstrap-server 10.0.96.4:9092 --partitions 1 --replication-factor 1
```

When running the command, replace the topic name and the --bootstrap-server address with the values for your own AutoMQ cluster.

After creating the topic, verify that it was created successfully with the following command:

```sh
./kafka-topics.sh --describe --topic example_topic --bootstrap-server 10.0.96.4:9092
```
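If you repeat these steps often, the create and describe commands can be wrapped in a small script. The sketch below is a minimal, hypothetical example: the variable names KAFKA_BIN, BOOTSTRAP_SERVER and TOPIC and the function create_and_describe_topic are illustrative and not part of AutoMQ or Kafka. It relies on the --if-not-exists option of kafka-topics.sh so that re-running it does not fail when the topic already exists.

```sh
#!/bin/sh
# Hypothetical helper: create the topic if it is missing, then describe it.
# KAFKA_BIN, BOOTSTRAP_SERVER and TOPIC are illustrative variable names;
# override them with the values for your own AutoMQ cluster.
KAFKA_BIN="${KAFKA_BIN:-.}"
BOOTSTRAP_SERVER="${BOOTSTRAP_SERVER:-10.0.96.4:9092}"
TOPIC="${TOPIC:-example_topic}"

create_and_describe_topic() {
  # --if-not-exists makes the create step safe to re-run.
  "$KAFKA_BIN/kafka-topics.sh" --create --if-not-exists \
    --topic "$TOPIC" --bootstrap-server "$BOOTSTRAP_SERVER" \
    --partitions 1 --replication-factor 1 || return 1
  # Print partition and replica details to confirm the topic exists.
  "$KAFKA_BIN/kafka-topics.sh" --describe \
    --topic "$TOPIC" --bootstrap-server "$BOOTSTRAP_SERVER"
}
```

To use it, save it to a file (for example create_topic.sh, a hypothetical name), set the variables to your own values, source it with . ./create_topic.sh, and call create_and_describe_topic.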

Prepare JSON test data whose keys match the columns of the users table created earlier (the timestamp key is ts, the same as the column name):

```json
{
  "id": 1,
  "name": "Test User",
  "ts": "2023-11-10T12:00:00",
  "status": "active"
}
```

Write the test data to the example_topic topic, either with the Kafka command-line tools or programmatically. The following example uses the command-line tools:

```sh
echo '{"id": 1, "name": "Test User", "ts": "2023-11-10T12:00:00", "status": "active"}' | sh kafka-console-producer.sh --bootstrap-server 10.0.96.4:9092 --topic example_topic
```

When running the command, replace the topic name and the --bootstrap-server address with the values for your own AutoMQ cluster.
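A single record is enough to test the pipeline, but the import job configured later in this article uses --batch-size=5. The following sketch, assuming a POSIX shell, prints several JSON records whose keys match the columns of automq_db.users (id, name, ts, status), so you can fill a whole batch at once. The function name gen_users is hypothetical; pipe its output into the same kafka-console-producer.sh command used above.

```sh
#!/bin/sh
# gen_users is a hypothetical helper, not part of Kafka or bend-ingest-kafka.
# It prints N newline-delimited JSON records whose keys match the columns
# of automq_db.users: id, name, ts, status.
gen_users() {
  n="$1"
  i=1
  while [ "$i" -le "$n" ]; do
    printf '{"id": %d, "name": "Test User %d", "ts": "2023-11-10T12:00:00", "status": "active"}\n' "$i" "$i"
    i=$((i + 1))
  done
}

# Example: send 5 records, enough to fill one batch of --batch-size=5.
# gen_users 5 | sh kafka-console-producer.sh --bootstrap-server 10.0.96.4:9092 --topic example_topic
```

Each printed line is one Kafka message, because kafka-console-producer.sh treats every input line as a separate record.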

Use the following command to view the data just written to the topic:

```sh
sh kafka-console-consumer.sh --bootstrap-server 10.0.96.4:9092 --topic example_topic --from-beginning
```

bend-ingest-kafka consumes data from Kafka and writes it to a Databend table in batches. After deploying bend-ingest-kafka, start the data import job:

```sh
bend-ingest-kafka \
  --kafka-bootstrap-servers="localhost:9094" \
  --kafka-topic="example_topic" \
  --kafka-consumer-group="Consumer Group" \
  --databend-dsn="https://cloudapp:password@host:443" \
  --databend-table="automq_db.users" \
  --data-format="json" \
  --batch-size=5 \
  --batch-max-interval=30s
```

When running the command, replace the --kafka-bootstrap-servers value with the actual address of your AutoMQ server.

Databend Cloud provides a DSN for connecting to the warehouse; pass it as the --databend-dsn value. Refer to this documentation for details.

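bend-ingest-kafka buffers incoming records and writes them to Databend once --batch-size records have accumulated or --batch-max-interval has elapsed, whichever comes first. With the settings above, a single test record may therefore take up to 30 seconds to appear in the table.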
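The flush rule can be modeled in a few lines of bash. This is an illustrative model, not bend-ingest-kafka's actual implementation, and it assumes that a batch is written when --batch-size records are buffered or when --batch-max-interval seconds have passed since the first buffered record, whichever comes first. The function batch_lines is hypothetical and only prints flush N where the real tool would write a batch to Databend.

```sh
#!/usr/bin/env bash
# Illustrative model only (requires bash); NOT bend-ingest-kafka's source.
# Usage: batch_lines SIZE INTERVAL_SECONDS < records
# Prints "flush N" each time a batch of N records would be written.
batch_lines() {
  local size="$1" interval="$2"
  local count=0 start=$SECONDS line status
  while true; do
    # Wait at most 1 second for a record so the interval can still be checked.
    if IFS= read -r -t 1 line; then
      # The interval clock starts with the first record of a new batch.
      if [ "$count" -eq 0 ]; then
        start=$SECONDS
      fi
      count=$((count + 1))
    else
      status=$?
      # read returns >128 on timeout; any other failure means end of input.
      if [ "$status" -le 128 ]; then
        break
      fi
    fi
    # Flush when the batch is full or the interval has elapsed.
    if [ "$count" -gt 0 ] && { [ "$count" -ge "$size" ] || [ $((SECONDS - start)) -ge "$interval" ]; }; then
      echo "flush $count"
      count=0
    fi
  done
  # The model flushes the remaining partial batch when its input ends.
  if [ "$count" -gt 0 ]; then
    echo "flush $count"
  fi
}
```

For example, feeding six records through batch_lines 5 30 prints flush 5 as soon as the fifth record arrives, then flush 1 when the input ends; a single record followed by a pause longer than the interval is flushed on its own once the interval expires.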

Go back to the Databend Cloud worksheet and query the automq_db.users table. The test data written to AutoMQ should now appear in the Databend table.
