
Don't let Apache Kafka on Kubernetes get you fired

Apache Kafka, a cornerstone in data streaming, wasn't originally designed with Kubernetes in mind. While Kubernetes offers a robust platform for resource management and DevOps, it also introduces complexities for applications not natively built for it. To harness the full power of Kubernetes, applications must support rapid pod migration and recovery across nodes.

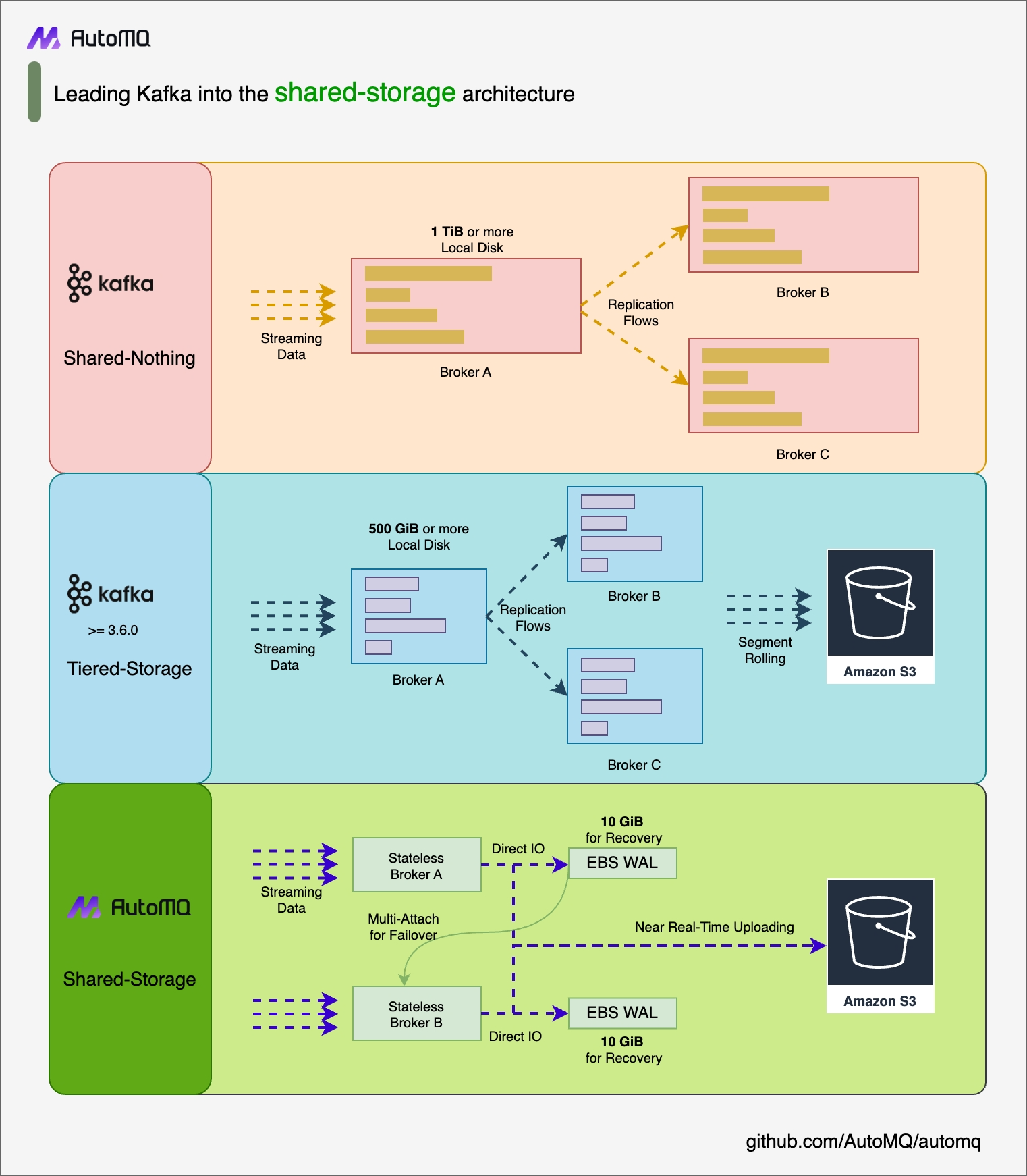

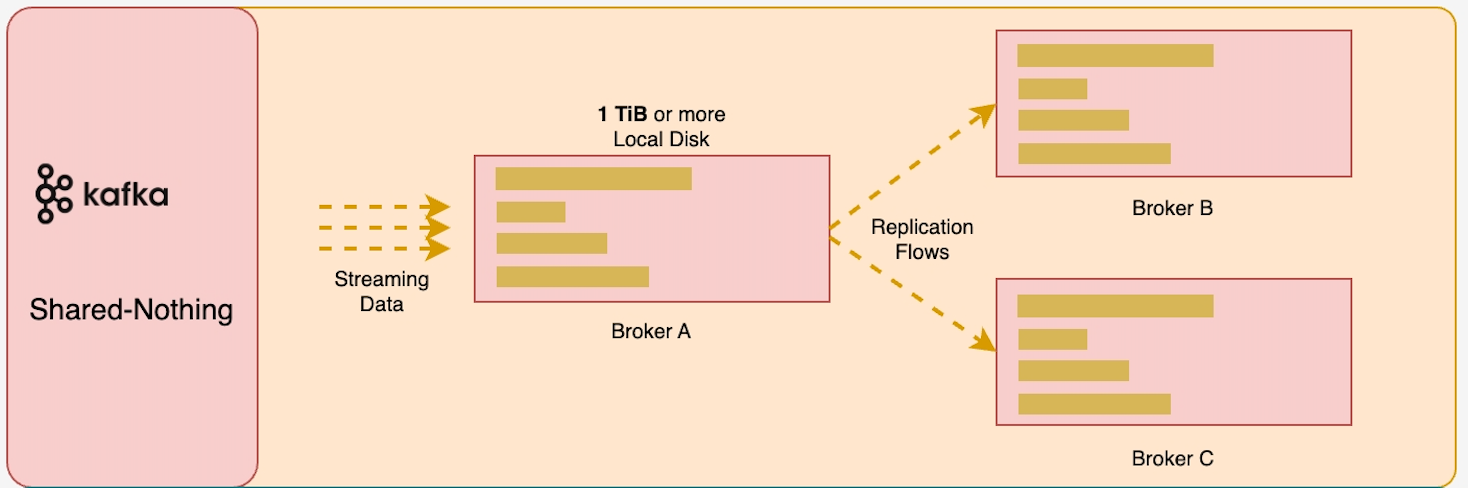

Kafka, developed over a decade ago for traditional data centers, uses an integrated storage-compute architecture. In a cloud-native environment this design brings several challenges: limited elasticity, suboptimal use of cloud services, operational complexity, and high costs. These factors make Kafka a less-than-ideal fit for Kubernetes' cloud-native ethos.

Deploying a non-Kubernetes-native Kafka on Kubernetes can expose your production environment to significant risks, demanding rigorous maintenance to ensure performance and uptime. Any lapse in management could lead to Kafka outages, potentially jeopardizing your job.

AutoMQ emerges as a next-generation Kafka solution, built with cloud-native principles at its core. It effectively addresses the limitations of traditional Kafka, offering a Kubernetes-native Kafka service. This article delves into the challenges of running Apache Kafka on Kubernetes and how AutoMQ provides a streamlined, resilient solution.

AutoMQ[1] is a modern alternative to Apache Kafka, purpose-built with cloud-native principles in mind. It reimagines Kafka's traditional architecture to leverage cloud infrastructure, offering significant improvements in cost efficiency and scalability. The open-source Community Edition is available on GitHub[2], while the SaaS and BYOC Business Editions cater to various enterprise needs in the cloud.

By decoupling storage from computing and utilizing services like EBS and S3, AutoMQ achieves a tenfold reduction in costs and a hundredfold increase in elasticity, all while maintaining full compatibility with Kafka. Additionally, AutoMQ delivers superior performance compared to traditional Kafka setups.

For a deeper dive into how AutoMQ stacks up against Apache Kafka and other alternatives, see the comparison articles [3], [4], and [5] in the references below.

The concept of Kubernetes Native[6] was first put forward by Red Hat in connection with Quarkus. Kubernetes Native is a specialized form of Cloud Native: Kubernetes itself is Cloud Native, fully utilizing CNCF-defined cloud-native technologies such as containerization, immutable infrastructure, and service mesh. Programs labeled Kubernetes Native keep all the advantages of Cloud Native while emphasizing deeper integration with Kubernetes. Kubernetes Native Kafka, then, is a Kafka service that integrates deeply with Kubernetes and can fully exploit the following advantages:

-

Enhancing Resource Utilization: Kubernetes provides finer-grained scheduling units (Pods) and robust resource isolation. Containerized virtualization lets Pods be rescheduled quickly between nodes, and resource isolation lets Pods on the same node share its resources efficiently. Combined with Kubernetes' powerful orchestration capabilities, this significantly improves resource utilization.

-

Hiding IaaS Layer Differences and Supporting Hybrid Cloud to Avoid Vendor Lock-In: By using Kubernetes to hide IaaS-layer differences, enterprises can more easily adopt hybrid cloud architectures and avoid vendor lock-in, thus gaining more bargaining power when procuring services from cloud providers.

-

More Efficient DevOps: By following Kubernetes' best practices, enterprises can achieve immutable infrastructure through Infrastructure as Code (IaC). By integrating with internal CI/CD processes and utilizing GitOps along with Kubernetes' native deployment support, DevOps efficiency and security can be greatly enhanced.

Kubernetes is becoming increasingly popular in medium and large enterprises. For these enterprises, daily resource consumption represents a significant cost. By deploying all applications on Kubernetes, resource utilization can be significantly improved, achieving unified standardized management and maximizing benefits in the DevOps process.

When all applications and data infrastructure within an enterprise are running on Kubernetes, it becomes strategically imperative for core data infrastructure, such as Kafka, to also run on Kubernetes. Among AutoMQ's clients, companies like JD.com and Great Wall Motors mandate that Kafka must operate on Kubernetes as part of their group strategy.

Additionally, medium to large enterprises have a greater need for hybrid cloud solutions compared to smaller enterprises to avoid vendor lock-in. By leveraging multi-cloud strategies, these enterprises can further enhance system availability. These factors drive the demand for Kubernetes Native Kafka.

In summary, Kubernetes Native Kafka provides significant advantages to medium and large enterprises in terms of resource utilization, standardized management, DevOps efficiency, hybrid cloud strategies, and system availability, making it an inevitable choice for these businesses.

Thanks to its robust ecosystem, Kafka has given rise to excellent Kubernetes projects such as Strimzi[12] and Bitnami Kafka[13], but it is undeniable that Apache Kafka is not inherently Kubernetes Native. Deploying Apache Kafka on Kubernetes essentially means rehosting it there. Even with the capabilities of Strimzi and Bitnami Kafka, Apache Kafka still cannot fully leverage the potential of Kubernetes, including:

Apache Kafka's impressive throughput and performance are closely tied to its reliance on the Page Cache. Containers do not virtualize the operating system kernel, so the Page Cache belongs to the Node rather than to the Pod: when Pods drift between Nodes, the Page Cache on the new Node has to be re-warmed[8], which degrades Kafka's performance. The impact is even more pronounced during peak business periods. Kafka users who worry about this impact on their business will hesitate to let Kafka Broker Pods drift freely between Nodes, and if Pods cannot drift quickly and freely, Kubernetes loses much of its scheduling flexibility and cannot deliver its orchestration and resource-utilization advantages. The figure below shows how disk reads due to an un-warmed Page Cache affect Kafka performance when Broker Pods drift.
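As a rough illustration of the cold-cache effect, the toy simulation below approximates the Page Cache as an LRU cache with a fixed number of pages. It is not a model of the Linux kernel, and the capacity and read pattern are arbitrary assumptions. Consumers re-read the 800 most recent pages: a broker that stayed on its Node serves them all from memory, while the same broker right after its Pod drifts to a new Node must fetch every one from disk.

```python
from collections import OrderedDict


class LruPageCache:
    """Toy stand-in for the OS page cache: a fixed number of pages with LRU eviction."""

    def __init__(self, capacity_pages: int) -> None:
        self.capacity = capacity_pages
        self.pages = OrderedDict()  # page number -> None, least recently used first
        self.hits = 0
        self.disk_reads = 0

    def insert(self, page: int) -> None:
        """Cache a page (e.g., because a producer just wrote it)."""
        self.pages[page] = None
        self.pages.move_to_end(page)
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)  # evict the least recently used page

    def read(self, page: int) -> None:
        if page in self.pages:
            self.hits += 1
            self.pages.move_to_end(page)  # mark as most recently used
        else:
            self.disk_reads += 1  # cache miss: the page has to come from disk
            self.insert(page)


def replay_tail_reads(cache: LruPageCache, last_page: int, window: int) -> None:
    """Consumers lagging slightly behind re-read the most recent `window` pages."""
    for page in range(last_page - window + 1, last_page + 1):
        cache.read(page)


# Broker that stayed on its Node: pages were cached as producers wrote them.
warm = LruPageCache(capacity_pages=1_000)
for page in range(5_000):
    warm.insert(page)
replay_tail_reads(warm, last_page=4_999, window=800)

# Same broker right after its Pod drifts to another Node: the cache starts empty.
cold = LruPageCache(capacity_pages=1_000)
replay_tail_reads(cold, last_page=4_999, window=800)

print(f"warm: hits={warm.hits} disk_reads={warm.disk_reads}")  # warm: hits=800 disk_reads=0
print(f"cold: hits={cold.hits} disk_reads={cold.disk_reads}")  # cold: hits=0 disk_reads=800
```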

Apache Kafka ensures data durability through multiple replicas kept in sync via the ISR (in-sync replica) mechanism, with every replica stored on a broker's local disk. As a result, horizontally scaling the cluster on Kubernetes requires a significant amount of manual intervention, and the process is not only non-automated but also carries substantial operational risk. The complete workflow includes:

-

Partition Reassignment Assessment: Before scaling, Kafka operations personnel who have a thorough understanding of the cluster's business and load must assess which topic partitions should be reassigned to the newly created nodes. They must ensure that the new nodes meet the read and write traffic requirements of these partitions and evaluate the reassignment duration as well as its impact on the business system. This first step alone is very cumbersome and difficult to implement.

-

Preparation of Partition Reassignment Plan: A partition reassignment plan file must be prepared that lists exactly which partitions will be reassigned to the new nodes.

-

Execution of Partition Reassignment: Apache Kafka executes the partition reassignment according to the user-defined plan. Its duration depends on the amount of data retained on local disks and generally runs to several hours or even longer. During reassignment, the large volume of data copying competes with normal read and write requests for disk and network I/O, significantly reducing the cluster's read and write throughput.
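The plan file from the second step is a JSON document of the shape `kafka-reassign-partitions.sh` accepts through `--reassignment-json-file` (a `version` plus a list of `topic`/`partition`/`replicas` entries). The sketch below builds such a plan and adds a back-of-the-envelope duration estimate for the third step. The topic names, the chosen partitions, 500 GiB per replica, and a single aggregate copy bandwidth of 100 MiB/s are illustrative assumptions; real copy speed depends on per-broker throttles and hardware.

```python
import json


def build_reassignment_plan(current, to_move, new_brokers):
    """Build a plan in the JSON shape accepted by kafka-reassign-partitions.sh.

    current:     {(topic, partition): [replica broker ids]}
    to_move:     (topic, partition) pairs chosen during the assessment step
    new_brokers: ids of the newly added brokers that should host them
    """
    plan = {"version": 1, "partitions": []}
    for i, (topic, partition) in enumerate(to_move):
        rf = len(current[(topic, partition)])  # keep the replication factor
        if rf > len(new_brokers):
            raise ValueError("not enough new brokers for the replication factor")
        # Rotate the starting broker so preferred leaders spread across new nodes.
        replicas = [new_brokers[(i + r) % len(new_brokers)] for r in range(rf)]
        plan["partitions"].append(
            {"topic": topic, "partition": partition, "replicas": replicas}
        )
    return plan


def estimate_copy_hours(retained_bytes, copy_bytes_per_sec):
    """Rough estimate assuming every retained byte is copied at one aggregate rate."""
    return retained_bytes / copy_bytes_per_sec / 3600


current = {("orders", 0): [1, 2, 3], ("orders", 1): [2, 3, 1], ("clicks", 0): [3, 1, 2]}
plan = build_reassignment_plan(current, [("orders", 0), ("clicks", 0)], new_brokers=[4, 5, 6])
print(json.dumps(plan, indent=2))

# 2 partitions x 3 replicas x 500 GiB retained each, copied at 100 MiB/s.
retained = 2 * 3 * 500 * 1024**3
print(f"~{estimate_copy_hours(retained, 100 * 1024**2):.1f} hours of copying")  # ~8.5 hours
```

With these assumed numbers, copying alone takes about 8.5 hours, before any verification or rollback time.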

Because of its lack of elasticity and strong reliance on the Page Cache, Apache Kafka also cannot perform efficient and safe rolling upgrades on K8s. Rolling restarts of high-traffic, high-capacity Apache Kafka clusters on K8s are very challenging. Throughout the process, Kafka operations personnel must constantly monitor the health of the cluster, because partition data replication and disk reads triggered by Page Cache misses degrade the cluster's overall read and write performance and, in turn, the applications that rely on Kafka.
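The monitoring loop described above can be sketched as a health-gated rolling restart. `rolling_restart`, `restart`, and `is_healthy` are hypothetical names; the hooks would be supplied by the caller (for example, deleting a broker Pod and checking that Kafka's `UnderReplicatedPartitions` metric is back to zero). The point of the sketch is that each step blocks until replicas have caught up, so one slow recovery stalls the entire upgrade.

```python
import time
from typing import Callable, Iterable


def rolling_restart(
    brokers: Iterable[int],
    restart: Callable[[int], None],
    is_healthy: Callable[[], bool],
    timeout_s: float = 600.0,
    poll_s: float = 5.0,
) -> None:
    """Restart brokers one at a time, gating each step on cluster health.

    `restart` and `is_healthy` are hypothetical hooks supplied by the caller.
    """
    for broker in brokers:
        restart(broker)
        deadline = time.monotonic() + timeout_s
        # Do not touch the next broker until replicas have caught up again.
        while not is_healthy():
            if time.monotonic() > deadline:
                raise TimeoutError(f"cluster still unhealthy after restarting broker {broker}")
            time.sleep(poll_s)


# Usage with fake hooks: the cluster needs two polls to recover after broker 1.
restarted = []
health = iter([False, False, True, True])
rolling_restart([1, 2], restarted.append, lambda: next(health), poll_s=0)
print(restarted)  # [1, 2]
```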

Currently, K8s does not support PV shrinkage[11]. K8s is very friendly to stateless programs and to programs that fully decouple compute from storage, but it imposes significant limitations on stateful ones. Because a PV expanded for a traffic peak cannot be shrunk afterward, Kafka must keep storage provisioned for peak throughput. To ensure high throughput and low latency, users often store Kafka data on expensive SSDs, so with high throughput and long data retention periods the storage bill becomes substantial.
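To see why sizing for peak throughput is expensive, here is a worked example. It assumes time-based retention, that the disks must hold the peak write rate for the entire retention window on every replica, and a 30% safety headroom (the headroom is an assumption, not a Kafka setting). If volumes could shrink back after a peak, provisioned capacity could track something closer to average traffic; the second call shows that hypothetical case.

```python
def provisioned_storage_tib(peak_mib_per_s: float, retention_hours: float,
                            replication_factor: int, headroom: float = 0.3) -> float:
    """Disk that must stay provisioned when volumes can grow but never shrink.

    Sized for the peak write rate sustained over the whole retention window,
    multiplied by the replica count, plus a safety headroom (an assumption).
    """
    bytes_per_replica = peak_mib_per_s * 1024**2 * retention_hours * 3600
    total_bytes = bytes_per_replica * replication_factor * (1 + headroom)
    return total_bytes / 1024**4


peak = provisioned_storage_tib(peak_mib_per_s=100, retention_hours=72, replication_factor=3)
average = provisioned_storage_tib(peak_mib_per_s=20, retention_hours=72, replication_factor=3)
print(f"sized for peak:    {peak:.1f} TiB")     # 96.4 TiB
print(f"sized for average: {average:.1f} TiB")  # 19.3 TiB
```

Under these assumptions, a cluster that peaks at 100 MiB/s but averages 20 MiB/s keeps roughly five times more SSD capacity than its typical load needs.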

Thanks to AutoMQ's 100% compatibility with Apache Kafka, AutoMQ can fully leverage the existing Kubernetes (K8s) ecosystem products for Kafka, such as the Kafka chart provided by Bitnami and the Kafka operator provided by Strimzi. If users are already using Bitnami or Strimzi's Kafka K8s solutions, they can seamlessly transition to AutoMQ and immediately enjoy the cost-effectiveness and elasticity that cloud-native technologies offer.

Without relying on the Page Cache, Pods can migrate freely across nodes without performance concerns.

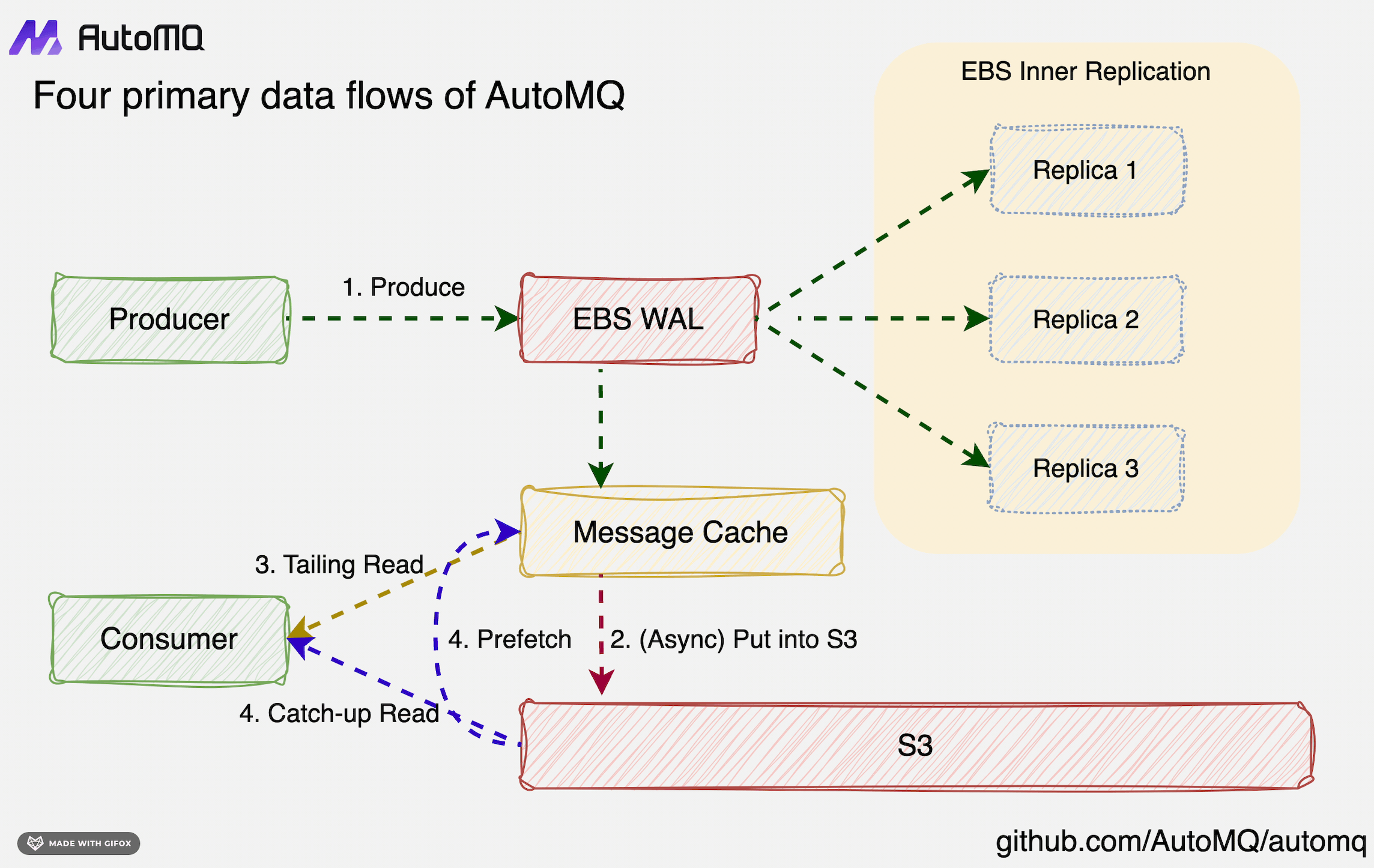

Unlike Apache Kafka®, AutoMQ does not suffer performance degradation from an unprimed Page Cache during Pod migration. Whereas Apache Kafka® ensures data durability through multiple replicas and ISR, AutoMQ offloads data durability to cloud block storage (EBS), which it uses as a Write-Ahead Log (WAL); EBS's internal multi-replica mechanism and high availability guarantee durability. Although the Page Cache is not used, the combination of Direct I/O and the inherently low latency and high performance of EBS allows AutoMQ to achieve sub-millisecond latency. For specific metrics, refer to the AutoMQ vs. Kafka performance report[15].

Powerful elasticity fully leverages the potential of K8s resource management and automated operational deployment

Using AutoMQ on K8s eliminates the concerns about Apache Kafka®'s lack of elasticity, which prevents automatic scaling and efficient rolling updates. Only when Kafka truly supports automated elasticity and efficient, safe rolling updates can K8s automatically and efficiently migrate pods to optimize resource utilization and enhance operational efficiency through its IaC-based DevOps automation. AutoMQ ensures that users can automatically and safely perform elasticity and rolling updates on K8s through the following technologies:

-

Partition Reassignment in Seconds: In AutoMQ, partition reassignment does not involve any data copying. When a partition needs to be moved across brokers, it merely involves metadata changes, allowing partition reassignment to be completed within seconds.

-

Continuous Traffic Self-Balancing: Apache Kafka® provides a partition reassignment tool, but the actual reassignment plan must be devised by the operations team. For Kafka clusters with hundreds or thousands of nodes, manually monitoring the cluster state and devising a comprehensive partition reassignment plan is almost impossible. Third-party plugins such as Cruise Control for Apache Kafka[16] can help generate reassignment plans. However, Apache Kafka's self-balancing involves many variables (e.g., replica distribution, leader traffic distribution, node resource utilization), and the data synchronization during rebalancing causes resource contention and takes hours to days, so existing solutions are highly complex and slow to act on their decisions. Consequently, self-balancing strategies still require review and continuous monitoring by operations personnel and do not effectively solve uneven traffic distribution in Apache Kafka. AutoMQ, on the other hand, integrates an automatic self-balancing component that uses collected metrics to generate and execute partition reassignment plans automatically, ensuring that cluster traffic is rebalanced after elastic scaling.
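The two properties above, metadata-only moves and metric-driven plans, can be illustrated with a hypothetical greedy heuristic. This is not AutoMQ's actual self-balancing algorithm, which weighs more factors; `ClusterMetadata` and `rebalance` are illustrative names, and the traffic figures are made up. Each move shifts one partition from the hottest to the coldest broker only when that strictly narrows the gap between them, so the loop always terminates.

```python
from dataclasses import dataclass


@dataclass
class ClusterMetadata:
    """Hypothetical model of a shared-storage cluster: partition data lives in
    object storage, so broker ownership exists only in this metadata."""

    brokers: set   # broker ids
    owner: dict    # partition name -> owning broker id
    traffic: dict  # partition name -> observed traffic in MiB/s (from metrics)

    def load(self) -> dict:
        totals = {b: 0.0 for b in sorted(self.brokers)}
        for partition, broker in self.owner.items():
            totals[broker] += self.traffic[partition]
        return totals

    def reassign(self, partition: str, broker: int) -> None:
        # No data copy: moving a partition is only a metadata update.
        self.owner[partition] = broker


def rebalance(meta: ClusterMetadata, max_moves: int = 100) -> list:
    """Greedy heuristic: repeatedly move one partition from the hottest broker
    to the coldest one while doing so strictly narrows the gap between them."""
    moves = []
    for _ in range(max_moves):
        load = meta.load()
        hot = max(load, key=load.get)
        cold = min(load, key=load.get)
        gap = load[hot] - load[cold]
        # Moving traffic t narrows the hot/cold gap only when 0 < t < gap.
        candidates = [p for p, b in meta.owner.items()
                      if b == hot and 0 < meta.traffic[p] < gap]
        if not candidates:
            break
        best = min(candidates, key=lambda p: abs(meta.traffic[p] - gap / 2))
        meta.reassign(best, cold)
        moves.append((best, hot, cold))
    return moves


# Broker 3 was just added by a scale-out and owns nothing yet.
meta = ClusterMetadata(
    brokers={1, 2, 3},
    owner={"p0": 1, "p1": 1, "p2": 1, "p3": 2, "p4": 2, "p5": 2},
    traffic={"p0": 30, "p1": 20, "p2": 10, "p3": 25, "p4": 15, "p5": 10},
)
moves = rebalance(meta)
print(moves)        # [('p0', 1, 3), ('p5', 2, 1)]
print(meta.load())  # {1: 40.0, 2: 40.0, 3: 30.0}
```

In this example, two metadata updates cut the spread between the busiest and idlest broker from 60 to 10 MiB/s without copying any data.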

AutoMQ works seamlessly with auto-scaling products in the Kubernetes (K8s) ecosystem, such as Karpenter[17] and Cluster Autoscaler[18]. If you are interested in AutoMQ's auto-scaling solutions on K8s, refer to the AWS official blog post "Cost and Efficiency Optimization of Large-Scale Kafka Using AutoMQ"[19].

We must acknowledge that not all enterprises or applications can benefit from Kubernetes. Kubernetes adds a new layer of abstraction between applications and the underlying VMs, introducing new complexities in security, networking, and storage. Forcing non-Kubernetes-native applications to rehost on Kubernetes can amplify these complexities and cause new issues: users may need many hacky workarounds that go against Kubernetes best practices, plus considerable manual intervention, to make such applications run well. Taking Apache Kafka as an example, neither Strimzi nor Bitnami can solve its horizontal scaling issue, because human intervention is necessary to ensure cluster availability and performance during scaling operations. These manual operations conflict with the automated DevOps philosophy of Kubernetes. AutoMQ truly eliminates these human interventions, using Kubernetes' own mechanisms to perform efficient, automated capacity adjustments, updates, and upgrades for Kafka clusters.

Kubernetes is not designed for stateful data infrastructure, and many of its default capabilities are not friendly to applications with stateful storage or incomplete storage decoupling. Kubernetes encourages users to deploy stateless applications on it, thoroughly decoupling stateful data to take full advantage of its benefits, such as improved resource utilization and enhanced DevOps efficiency. Apache Kafka's architecture, which combines storage and computation, heavily relies on local storage, and the inability to shrink Persistent Volumes (PVs) necessitates reserving large storage resources, exacerbating storage cost overhead. AutoMQ fully separates its storage and compute layers, using only a fixed-size (10GB) block storage as a Write-Ahead Log (WAL), with data offloaded to S3 storage. This storage architecture leverages the unlimited scalability and pay-as-you-go features of cloud object storage services, allowing AutoMQ to function like a stateless program on Kubernetes and fully realize Kubernetes' potential.
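Why a fixed-size WAL suffices can be sketched with a toy model. It assumes a synchronous upload step, whereas AutoMQ's WAL sits on a block device and uploads happen in the background, so treat `BoundedWal` and its methods as hypothetical names rather than AutoMQ's code. A 1 KiB log stands in for the 10GB volume and a Python list for the S3 bucket: 5,000 bytes pass through a log that never holds more than 1,024, because WAL capacity bounds only the data not yet uploaded, not retention.

```python
class BoundedWal:
    """Toy fixed-capacity write-ahead log (hypothetical, not AutoMQ's code).

    Records are appended to a small durable log, acknowledged, and later
    uploaded in batches to object storage, after which their WAL space is
    reclaimed. Capacity therefore bounds only in-flight data, not retention.
    """

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.used = 0
        self.pending = []  # records acknowledged but not yet in object storage

    def append(self, record: bytes) -> bool:
        if self.used + len(record) > self.capacity:
            return False  # WAL full: caller must upload before writing more
        self.pending.append(record)
        self.used += len(record)
        return True

    def upload(self, object_store: list) -> None:
        # Write pending records to object storage as one object, then trim the WAL.
        if self.pending:
            object_store.append(b"".join(self.pending))
        self.pending.clear()
        self.used = 0


wal = BoundedWal(capacity_bytes=1024)  # stands in for the fixed-size block volume
bucket = []                            # stands in for an S3 bucket
written = 0
for _ in range(50):
    record = b"x" * 100
    if not wal.append(record):
        wal.upload(bucket)  # make room by offloading to object storage
        if not wal.append(record):
            raise ValueError("record larger than the whole WAL")
    written += len(record)
wal.upload(bucket)

print(written, len(bucket), wal.used)  # 5000 5 0
```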

Through its innovative WAL and S3-based shared storage architecture, as well as features like partition reassignment in seconds and continuous traffic self-balancing, AutoMQ has built a truly Kubernetes-native Kafka service that fully exploits Kubernetes' advantages. You can try it with the AutoMQ Community Edition source code available on GitHub[2], or apply for a free enterprise edition PoC trial on the AutoMQ official website[1].

[1] AutoMQ: https://www.automq.com

[2] AutoMQ Github: https://github.com/AutoMQ/automq

[3] Kafka Alternative Comparison: AutoMQ vs. Apache Kafka: https://www.automq.com/blog/automq-vs-apache-kafka

[4] Kafka Alternative Comparison: AutoMQ vs. AWS MSK (serverless): https://www.automq.com/blog/automq-vs-aws-msk-serverless

[5] Kafka Alternative Comparison: AutoMQ vs. WarpStream: https://www.automq.com/blog/automq-vs-warpstream

[6] Why Kubernetes native instead of cloud native? https://developers.redhat.com/blog/2020/04/08/why-kubernetes-native-instead-of-cloud-native

[7] Kafka on Kubernetes: What could go wrong? https://www.redpanda.com/blog/kafka-kubernetes-deployment-pros-cons

[8] Common issues when deploying Kafka on K8s: https://dattell.com/data-architecture-blog/kafka-on-kubernetes/

[9] Apache Kafka on Kubernetes – Could You? Should You? https://www.confluent.io/blog/apache-kafka-kubernetes-could-you-should-you/

[10] Kafka on Kubernetes: Reloaded for fault tolerance: https://engineering.grab.com/kafka-on-kubernetes

[11] Kubernetes 1.24: Volume Expansion Now A Stable Feature: https://kubernetes.io/blog/2022/05/05/volume-expansion-ga/

[12] Strimzi: https://strimzi.io/

[13] Bitnami Kafka: https://artifacthub.io/packages/helm/bitnami/kafka

[14] How to implement high-performance WAL based on raw devices? https://www.automq.com/blog/principle-analysis-how-automq-implements-high-performance-wal-based-on-raw-devices#what-is-delta-wal

[15] Benchmark: AutoMQ vs. Apache Kafka: https://docs.automq.com/automq/benchmarks/benchmark-automq-vs-apache-kafka

[16] Cruise Control for Apache Kafka: https://github.com/linkedin/cruise-control

[17] Karpenter: https://karpenter.sh/

[18] Cluster Autoscaler: https://github.com/kubernetes/autoscaler

[19] Using AutoMQ to Optimize Kafka Costs and Efficiency at Scale: https://aws.amazon.com/cn/blogs/china/using-automq-to-optimize-kafka-costs-and-efficiency-at-scale/
