
Assessment and Logging Data Analytics

Objective: Walk through the process of writing and executing RESTful QBank queries to extract data that can then be analyzed for research purposes.

The GStudio dashboard already gathers basic student analytics about assessment questions. For this demo, we are going to directly access the QBank RESTful endpoints to create other kinds of reports for research, in the same way the GStudio script does. The tech team already has the ability to develop such scripts, and the associated test_script.py can be used as an example.

Here we include an example of how you would use the above test_script.py.

Pre-requisites:

- You have Python 2.7 installed on your computer.

- The student data you want is loaded into a qbank instance available online or locally to your computer.

First, you need to modify the script to point to the qbank instance where the data is stored. You do this by editing the BASE_URL variable value:

https://gist.github.com/birdland/16b4ef3d46cd994afe96b220bb6245d8#file-test_script-py-L30

BASE_URL = 'https://clix-data-staging.mit.edu/api/v1/'

For example, you could change it to:

BASE_URL = 'https://clix-data.tiss.edu/api/v1/'

Contact the tech team for the correct URL to use.

Once you have saved the script with the right qbank URL, open a command line terminal and navigate to the directory where the script is located. In the session below, each command is shown after a $ prompt; do not type the $ itself. (NOTE: on Windows, some commands differ slightly. To list directory contents you may need to use dir instead of ls.) You will also need to set bank_id and assessment_offered_id to the values from your iframe code. The instructions further down explain how to find these values for a specific assessment.

$ ls

test_script.py

$ python

Python 2.7.10 (default, Feb 7 2017, 00:08:15)

[GCC 4.2.1 Compatible Apple LLVM 8.0.0 (clang-800.0.34)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>> from test_script import make

>>> bank_id = "assessment.Bank%3A58bd8cc691d0d90b7c45ddaf%40ODL.MIT.EDU"

>>> assessment_offered_id = "assessment.AssessmentOffered%3A59a3edbb91d0d971736ee1dd%40ODL.MIT.EDU"

>>> make(bank_id, assessment_offered_id)

>>> exit()

$ ls

log.csv results.csv test_script.py
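To check what the run produced, a short sketch like the one below could summarize one of the generated CSV files. The helper name `summarize_csv` and the placeholder columns in the in-memory sample are assumptions for illustration only; the real column names are whatever test_script.py writes.

```python
import csv
import io


def summarize_csv(handle):
    """Return (header, row_count) for an open CSV file handle."""
    reader = csv.reader(handle)
    header = next(reader, [])           # first line holds the column names
    row_count = sum(1 for _ in reader)  # remaining lines are data rows
    return header, row_count


# In-memory stand-in with placeholder columns (hypothetical, not the
# real results.csv layout).
sample = io.StringIO(u"col_a,col_b\n1,2\n3,4\n")
header, row_count = summarize_csv(sample)
print(header, row_count)

# Against the real file the call would look like:
#     with open("results.csv") as handle:
#         header, row_count = summarize_csv(handle)
```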

You may also want to grab item data to make results.csv more human-readable. An example script can be found here, as test_item_script.py. We can walk through the major code differences and explain how they work. To run this script, use the Python shell, as with the original script.

I ran this against a local copy of qbank, where I had aggregated multiple sets of school data together. So I had to point the script to my local qbank URL:

https://gist.github.com/cjshawMIT/c6b2a409120663b899eccbc281bdd9ab#file-test_item_script-py-L31

Then, we will also need to grab all of the actual items used in the assessment. This is done with another request to qbank:

https://gist.github.com/cjshawMIT/c6b2a409120663b899eccbc281bdd9ab#file-test_item_script-py-L43-L45 https://gist.github.com/cjshawMIT/c6b2a409120663b899eccbc281bdd9ab#file-test_item_script-py-L52

This data can then be passed on to other methods.

https://gist.github.com/cjshawMIT/c6b2a409120663b899eccbc281bdd9ab#file-test_item_script-py-L62

We also need to update the column headers for our CSV output file, to reflect the new data that is included:

https://gist.github.com/cjshawMIT/c6b2a409120663b899eccbc281bdd9ab#file-test_item_script-py-L95-L97

We introduce several helper methods that select an item from the list of all items, and also return just the question text, submission time(s), and choice text(s).

https://gist.github.com/cjshawMIT/c6b2a409120663b899eccbc281bdd9ab#file-test_item_script-py-L95-L97

We then modify the output script to use our new helper methods and write the actual question text / choice text to the CSV:

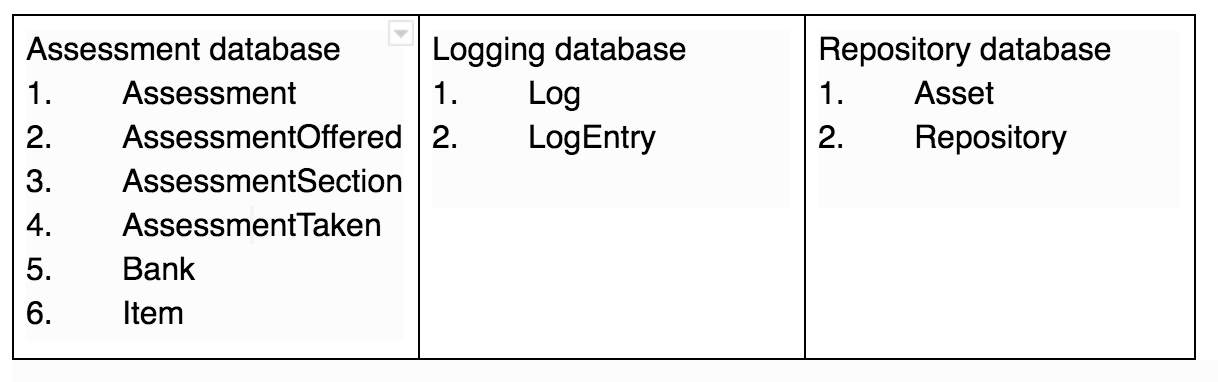

3 important databases for looking at student actions

- assessment

- logging

- repository

What will I need?

An Internet connection and a browser. It is also recommended that you install a browser extension for formatting JSON, which makes the responses easier to read. In Chrome, I am using JSONView.

The dataset you want to analyze must be hosted on a server along with the DLKit and QBank libraries and a properly configured runtime manager.

For this demonstration we combined 2 of the datasets that the tech team collected in December and are hosting them on our server, which is running DLKit and QBank.

For this example we are going to focus on a particular assessment offered:

<iframe src="https://clix-authoring.mit.edu/index.html?unlock_next=ON_CORRECT&bank=assessment.Bank%3A58bd900291d0d90b7c45de23%40ODL.MIT.EDU&assessment_offered_id=assessment.AssessmentOffered%3A5922756991d0d9751e30b297%40ODL.MIT.EDU"/>

From this iframe code we know 2 things:

- Assessment Bank = assessment.Bank%3A58bd900291d0d90b7c45de23%40ODL.MIT.EDU

- AssessmentOfferedId = assessment.AssessmentOffered%3A5922756991d0d9751e30b297%40ODL.MIT.EDU
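If you have many iframes to process, the two ids can also be pulled out of the iframe `src` programmatically. The following is a minimal sketch using only the standard library; the function name `ids_from_iframe_src` is an assumption for illustration and is not part of test_script.py. The try/except import is there so the same code loads under Python 2.7 and Python 3.

```python
try:
    from urllib.parse import urlparse, parse_qs, quote  # Python 3
except ImportError:
    from urlparse import urlparse, parse_qs  # Python 2.7
    from urllib import quote


def ids_from_iframe_src(src):
    """Return (bank_id, assessment_offered_id), URL-encoded as in the iframe."""
    params = parse_qs(urlparse(src).query)
    # parse_qs decodes %3A and %40, so re-encode the values before
    # placing them in a RESTful URL.
    bank_id = quote(params["bank"][0], safe="")
    offered_id = quote(params["assessment_offered_id"][0], safe="")
    return bank_id, offered_id


# The src attribute of the example iframe above.
IFRAME_SRC = (
    "https://clix-authoring.mit.edu/index.html?unlock_next=ON_CORRECT"
    "&bank=assessment.Bank%3A58bd900291d0d90b7c45de23%40ODL.MIT.EDU"
    "&assessment_offered_id=assessment.AssessmentOffered"
    "%3A5922756991d0d9751e30b297%40ODL.MIT.EDU"
)
bank_id, assessment_offered_id = ids_from_iframe_src(IFRAME_SRC)
print(bank_id)
print(assessment_offered_id)
```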

With this information we can construct a RESTful URL to retrieve all the student responses to this assessment offered.

The pattern for assembling this RESTful query looks like this:

https://clix-data-staging.mit.edu/api/v1/assessment/banks/<AssessmentBankId>/assessmentsoffered/<AssessmentOfferedId>/results?additionalAttempts

And for our example, it looks like this:

https://clix-data-staging.mit.edu/api/v1/assessment/banks/assessment.Bank%3A58bd900291d0d90b7c45de23%40ODL.MIT.EDU/assessmentsoffered/assessment.AssessmentOffered%3A5922756991d0d9751e30b297%40ODL.MIT.EDU/results?additionalAttempts

Note that the additionalAttempts argument is optional, but when present the returned JSON includes a list of all previous responses to the questions.
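The URL pattern above can be assembled with plain string formatting. This is a sketch rather than the code in test_script.py; the function name `results_url` and its keyword arguments are assumptions for illustration. The ids are expected to be URL-encoded already, exactly as they appear in the iframe code.

```python
BASE_URL = "https://clix-data-staging.mit.edu/api/v1/"


def results_url(bank_id, assessment_offered_id, additional_attempts=True,
                base_url=BASE_URL):
    """Build the results URL for one assessment offered."""
    url = "{0}assessment/banks/{1}/assessmentsoffered/{2}/results".format(
        base_url, bank_id, assessment_offered_id)
    if additional_attempts:
        # Optional flag: also return the earlier responses to each question.
        url += "?additionalAttempts"
    return url


print(results_url(
    "assessment.Bank%3A58bd900291d0d90b7c45de23%40ODL.MIT.EDU",
    "assessment.AssessmentOffered%3A5922756991d0d9751e30b297%40ODL.MIT.EDU"))
```

Passing `additional_attempts=False` gives the same URL without the query string.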

For each result object there are a number of elements that will be of interest:

- assessmentOfferedId: The id of the assessmentOffered, as above.

- takingAgentId: the id of the student. The actual GStudio id is located between the %3A and %40 delimiters.

- actualStartTime: the time that the student launched the assessment.

- completionTime: the datetime elements corresponding to when the student finished the whole assessment. If the student never clicked the “finish” button, this will not be recorded. Note that all times are set by the server’s clock and are reported in UTC, so they will need to be converted to local time.

- sections: The assessment sections that the student took. For CLIx all the student data will be in a single section.

- questions: Some number of questions that were available in the assessment. For CLIx the number of questions will always be the same for a particular assessment/assessmentoffered.

- itemId: The id of the actual authored item/question. This can be passed to another RESTful endpoint to get the details of this assessment Item.

- isCorrect: Whether the student’s final response to this question was correct.

- responded: Whether the student submitted a response to the question.

- genusTypeId: An id referencing the “type” of the question. Depending on the genusType, the developer or researcher can expect various other information to be available that is particular to that type.

- response: The final, “official” response to this question.

- itemId: Different from the authored itemId above, this itemId is a reference to the actual question the student responded to. This itemId will also match the itemIds in assessment-related LogEntries.

- submissionTime: The time this response was submitted.

- Other information specific to the genusType of the question.

- additionalResponses: any other responses that the student submitted prior to the final one. These response objects are modelled identically to the actual response.

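As an illustration of how these fields fit together, the sketch below flattens one result object into one row per question. It assumes that `sections` is a list, that each section holds a `questions` list, and that `additionalResponses` sits on each question; this nesting is inferred from the field list above and should be checked against the JSON you see in the browser. The function names and every value in `sample_result` are hypothetical.

```python
def gstudio_id(agent_id):
    """Return the text between the %3A and %40 delimiters of an agent id."""
    return agent_id.split("%3A", 1)[1].split("%40", 1)[0]


def flatten_result(result):
    """Return one dict per question for a single student's result."""
    student = gstudio_id(result["takingAgentId"])
    rows = []
    for section in result.get("sections", []):
        for question in section.get("questions", []):
            rows.append({
                "student": student,
                "itemId": question.get("itemId"),
                "responded": question.get("responded"),
                "isCorrect": question.get("isCorrect"),
                # Responses submitted before the final one.
                "previousResponses": len(
                    question.get("additionalResponses", [])),
            })
    return rows


# Hypothetical result object; the ids and values are made up.
sample_result = {
    "takingAgentId": "osid.agent.Agent%3A1234%40MIT-ODL",
    "sections": [{
        "questions": [{
            "itemId": "assessment.Item%3Aabc%40ODL.MIT.EDU",
            "responded": True,
            "isCorrect": False,
            "additionalResponses": [{}, {}],
        }],
    }],
}
for row in flatten_result(sample_result):
    print(row)
```

Rows in this shape can be written out with `csv.DictWriter` if a spreadsheet-friendly file is needed.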

Now that we know how to see the data using the browser, we can look at a script that runs the exact same URL query and saves the data to a CSV file for research analysis. The example script is here:

https://gist.github.com/birdland/16b4ef3d46cd994afe96b220bb6245d8

We can get all the LogEntries associated with our lesson, English Standard - Unit 0 - Lesson 1, by using the AssessmentBank id as before, but passing it in as a Log id to the RESTful logging endpoint.

The pattern for assembling this RESTful query looks like this:

https://clix-data-staging.mit.edu/api/v1/logging/logs/<AssessmentBankId>/logentries

And for our example, it looks like this:

https://clix-data-staging.mit.edu/api/v1/logging/logs/assessment.Bank%3A58bd900291d0d90b7c45de23%40ODL.MIT.EDU/logentries

These LogEntries results are a bit simpler than the assessment response results:

- agentId: The id of the student. The actual GStudio id is located between the %3A and %40 delimiters.

- timestamp: The datetime that this LogEntry was entered.

- text: The actual message of the LogEntry. This is a string representation of the dictionary map of the logged information, and may include:

- action: Text representing the type of action that was logged

- assessmentOfferedId: the id of the AssessmentOffered from which this entry was logged.

- questionId: the id of the question from which this entry was logged. This maps to the itemId found in the assessment results response.

- Other information depending on the type of action that was logged
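Because `text` arrives as a string, it has to be parsed before fields such as `action` can be read. The sketch below builds the logging URL and parses a `text` value. It tries JSON first and then falls back to a Python literal, since the exact string format is not stated here and both are assumptions. The function names and the sample strings are hypothetical.

```python
import ast
import json

BASE_URL = "https://clix-data-staging.mit.edu/api/v1/"


def log_entries_url(bank_id, base_url=BASE_URL):
    """Build the LogEntries URL, using the bank id as the Log id."""
    return "{0}logging/logs/{1}/logentries".format(base_url, bank_id)


def parse_log_text(text):
    """Turn a LogEntry text string into a dict, or None if it cannot be parsed."""
    try:
        data = json.loads(text)
    except ValueError:
        try:
            # Fall back to a Python-style dict literal such as "{'a': 1}".
            data = ast.literal_eval(text)
        except (ValueError, SyntaxError):
            return None
    return data if isinstance(data, dict) else None


# Hypothetical text values; real entries may carry other keys.
print(parse_log_text('{"action": "example-action"}'))
print(parse_log_text("{'action': 'example-action'}"))
```

`ast.literal_eval` only evaluates literals, so unlike `eval` it will not execute code that happens to be embedded in a log message.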