2021_CommunitySurveyResults

Alex Mitrevski edited this page Jan 14, 2022

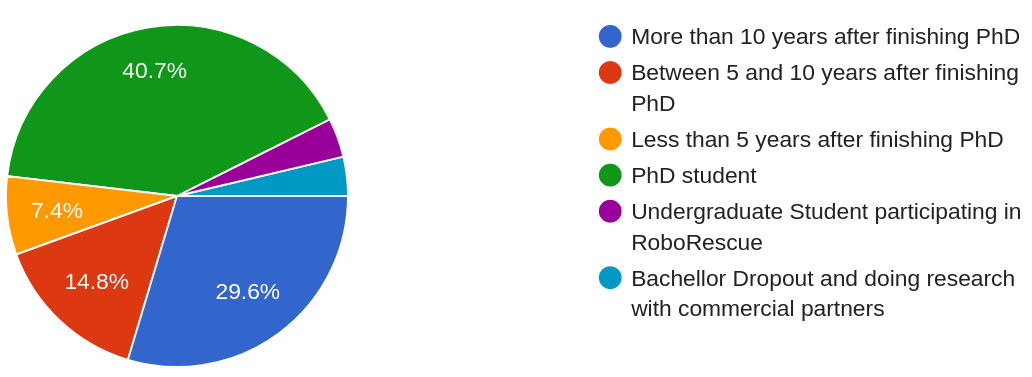

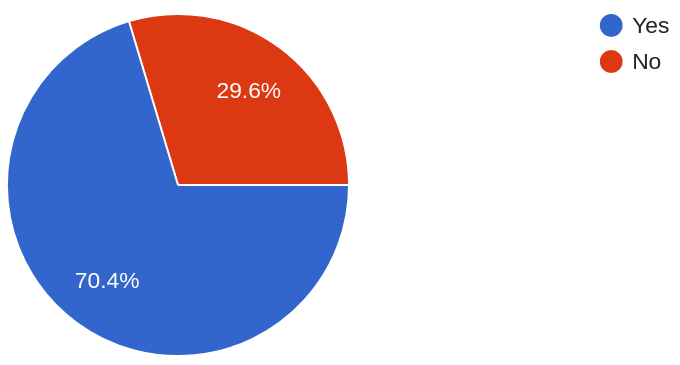

There were 27 respondents to the survey in total. The questions and provided answers are given below.

- Multi-robot systems and long-term autonomy

- Perception, failure detection

- Environment perception and machine learning

- Model-based methods

- Robot Vision

- Gaze-related HRI

- Robotics and computer vision

- Social robotics

- Robot perception, system and software engineering, decision making and robot control architectures

- Automated planning

- Robot vision

- Robot causal reasoning / cognitive robotics

- Software and hardware

- Control

- Teleoperation, manipulation planning

- RL, multi-agent systems, planning

- Multimodal behavior recognition and generation, social signal processing

- Object recognition

- Service robotics for assistive applications

- Socially-aware robotics

- Perception & learning

- Reinforcement learning in autonomous driving and service robots

- Human-robot interaction

- Human-robot interaction, social robotics

- Robotics as a learning tool to engage students in learning robotics, CS and/or AI

- Service robotics

- Reasoning under uncertainty

Are you currently or have you previously been a member of a team participating in RoboCup@Home or similar competitions?

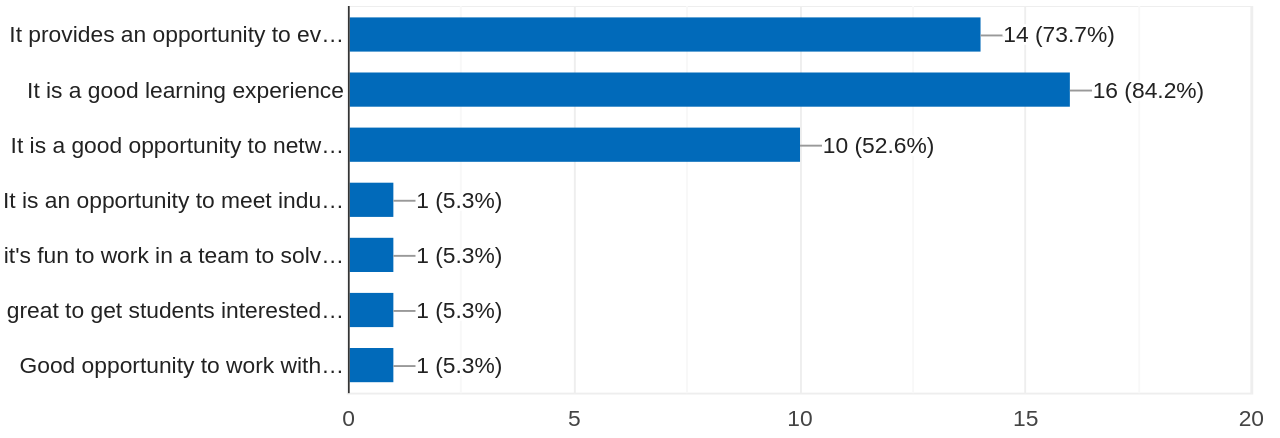

If you answered "Yes" to the question above, what was/is the main motivation for participating in the competition?

In the above diagram, the options are:

- It provides an opportunity to evaluate my research in a realistic scenario

- It is a good learning experience

- It is a good opportunity to network and exchange experiences with other teams

- It is an opportunity to meet industrial partners for finding a job

- It is fun to work in a team to solve problems

- Great to get students interested in robotics

- Good opportunity to work with a complete robot hardware system, with support from Toyota. Also, it is a nice way to meet people within my research institute and do fun work together.

- We intended to participate, but sending the robot to the competition location was too expensive. I believe that it provides an opportunity to evaluate my research in a realistic scenario.

- It would be good for our research group to actively have this integration goal and to be represented in the community

- The social aspect, the interaction that is expected from the robot with the human. That would be my focus

- A plug-and-play framework, where I can just easily plug in my research

- I think it would provide a somewhat standardized way to evaluate approaches for manipulation planning and overall teleoperation systems.

- Better software tools for enabling interoperability and repeatability between robot platforms.

- Research impact, shared open source components, realistic scenarios derived from real end-users rather than competitors/developers

- Organization of competitions

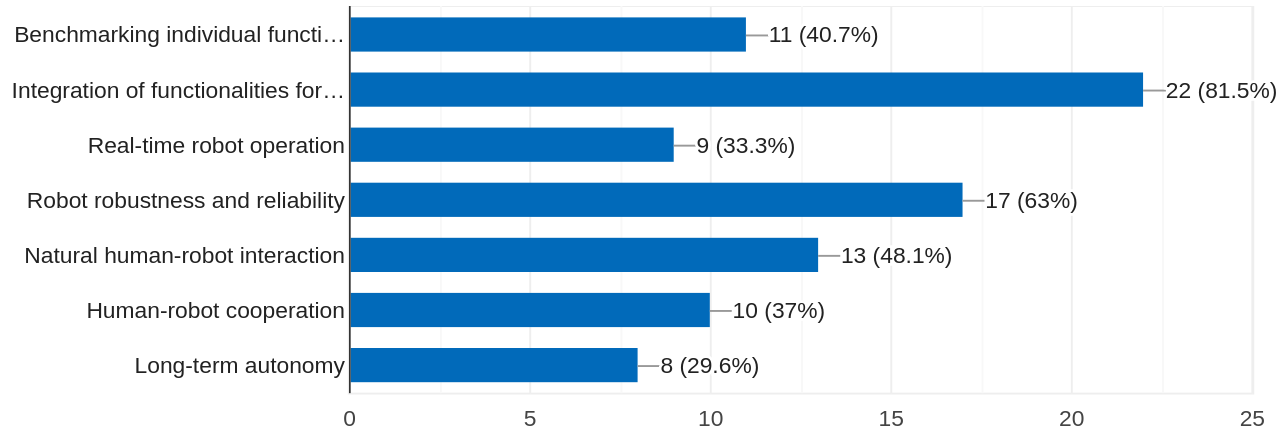

Which of the following aspects are the most important to cover in a domestic robot competition? Please select up to three answers.

The options in the above diagram are:

- Benchmarking individual functionalities on well-defined tasks

- Integration of functionalities for solving complex tasks

- Real-time robot operation

- Robot robustness and reliability

- Natural human-robot interaction

- Human-robot cooperation

- Long-term autonomy

- Shared datasets generated in a realistic scenario (with dynamic aspects in the environment): all teams start with the same data for ML models, so the implementations/training/methods should be what makes the difference. Some tasks should be about continuous run-time (longest time without running into failures).

- Great opportunity to generate datasets from different robots in real-world scenarios. However, this has to be done at the organization level.

- The competition should aim at finding scenarios that are somewhat but not totally rule-based (i.e. weakly structured), but not impossible to solve (to create the necessary feeling of competitiveness). In my personal research, I would benefit from community efforts to share recorded data in a structured format between teams.

- Potentially increasing transparency in failures: which component(s) are failing, why, when. Maybe publication of data collected during competition runs, ideally with documented failures.

- Evaluate robotic methods when used in combination (testing robustness, speed, fitting in with the hardware limitations)

- as an area for validating implementations, as a drive to think about integration and feasibility

- It's a natural benchmark for robot applications

- focusing on tasks that the user wants to execute with a robot

- Making the evaluation more systematic

- Demonstrate planning capabilities

- scenarios that are highly relevant to future applications

- Well-defined tasks make it easier for team members to develop functionalities because they are more targeted.

- Points for additional functionality or novel approaches to defined tasks, in addition to the baseline task score metrics, would provide a greater incentive to go beyond the basic task requirements and integrate novel research from our institution.

- I am unsure to what extent this has occurred in past competitions, but knowledge sharing between teams in the form of papers, presentations, and conversations would benefit the team. Team members can then apply these lessons to improve their future competition performance and their own research.

- Deadlines are good motivators for student team members to develop new functionality.

- Creativity of solutions

- Provide good task settings for evaluating my research

- By providing concrete but varied tasks to benchmark algorithms and systems against, and by providing a venue to discuss ideas with other researchers.

- By having interesting, challenging tasks such that solving them will likely require original, publishable research.

- Would allow us to design, develop, and deploy robot applications that rely on the recognition of multimodal human behaviors and the generation of multimodal robot behaviors in practical real-world scenarios.

- Practical need for quickly adapting to the environment and (un)known objects used in order to develop robust routines. Having fixed deadlines to motivate people to really work on something.

- By providing an environment to share and compete on end-user and research relevant tasks

- Helping to develop a benchmark for a commercially viable home robot

- It allows benchmarking

- For benchmarking the reinforcement learning solutions developed

- By introducing an experimental methodology for HRI evaluation

- Testing robot behaviours and functionalities in real-time scenarios that require not only accuracy but also a certain speed, adaptability, and autonomy.

- Helping novice and/or new teams to participate in part of the competition to promote learning, and raise potential of them fully participating in @Home competitions/research in the future.

- New approaches to solve the same problems!

- By providing a set of tasks that test different capabilities and are not too difficult

Do you have any concrete suggestions about research areas and tasks/benchmarking scenarios that should be included in the competition?

- Ways to measure the robustness in real-world settings. The arena and tasks are still very much "controlled" experiments with people familiar with robotics.

- Object detection, object pose estimation, and grasp pose estimation are of particular interest for us, so we would suggest creating tasks involving object-aware and task-driven manipulation (carrying a cup without dropping the content, etc.)

- provide a well-defined human-robot joint action scenario as one competition track

- We should focus on GPSR and EGPSR tasks that include specific skills (rather than different skill-based tasks)

- I would like a RoboCup light version in which groups of students who have no experience yet can participate. Maybe even with virtual robots instead of real ones?

- More repetitions

- All included already

- Making tasks/benchmarking scenarios more advanced w.r.t. manipulation and locomotion capabilities of the robot (e.g. instead of just having pick and place for simple objects or door opening scenarios, you can introduce something like aligning multiple plates on shelves, arranging chairs with tables, etc.).

- The recent Behavior benchmark released by Stanford has many examples of tasks that are relevant to working in the home. Decluttering an area is a common challenge.

- Sim2Real and social navigation are two important areas.

- Introduce a challenge in which a robot software application is developed and deployed across multiple different robot hardware platforms.

- Introduce environmental interference (e.g., loud noises, changes in lighting, visual occlusions, etc.), which will have an impact on both human behavior recognition (e.g., speech recognition, person tracking, object recognition, etc.) and robot behavior generation (e.g., speech volume level, gesture selection, etc.).

- I think the scoring could, in parts, be a bit more sophisticated regarding the steps taken to solve a bigger task. If possible, more advanced solutions should score higher points.

- There needs to be a focus on execution speed (which is usually too slow to be usable in real life) and identifying tasks that would genuinely be useful in assistive or care settings. It is not clear how useful standard fetch tasks are in general.

- More real-world scenarios and less trying to apply research to the problem

- To include tests related to long-term memory

- Creation of a fully simulated environment and the possibility of remote participation with real robots

- It would be important to include cooperative and collaborative scenarios with humans and other robots

- Anything that helps new/novice teams accelerate their learning and research, enabling them to participate fully in the future.

- (i) The robot has to use information from the internet to solve a task, (ii) autonomous map building, (iii) manipulating complex objects

Are there any important aspects about service robots that you find particularly underrepresented in RoboCup@Home or similar competitions?

- Explainable AI. Nobody knows what is going on behind a robot's actions or why it is doing what it is doing

- Dealing with unexpected situations

- Based on videos from previous challenges, the object layouts seem to be simplistic. Having different levels of complexity for objects and object heaps, and more natural-looking layouts, could be more interesting for the evaluation of object detection, object pose estimation, and object manipulation

- Human-Robot collaboration has not been exploited enough

- Long-term autonomy

- Real, comparable benchmarks: objects at the same locations for all teams; multiple illumination conditions; separating vision and motion planning methods/competitions, which might make it easier to share and combine code and experience, as hardly any teams are experts in both

- Tasks that demonstrate aspects of cognitive robotics, such as inference/reasoning (i.e., answer how? what if? and why? questions about itself, humans, and the environment) and explainable autonomy (i.e., systems which allow the robot to generate explanations for actions and events to increase trust in human users).

- Efficiency, in terms of time and energy consumption while performing the tasks

- Standards in creating cross-platform robot software applications beyond just a single robot (see above)

- The lack of environmental interference (see above), as this introduces the need for dynamic behaviors that go beyond what most models currently capture

- Clean positioning of objects hasn't really been needed in the past, although I know that the limitations here are the biggest due to robotic arm costs...

- Real-world scenarios and testing with untrained operators

- Yes, service robots that perform tasks for physically disabled persons

- Human Robot interaction

- The interaction with humans is missing in RoboCup, and it is fundamental for the 2050 challenge

- Diversity of the participation

- Robots without cables

- Robot learning

Are there any additional comments you would like to add about robotics competitions in general or RoboCup@Home in particular?

- I think the competition should foster more cooperation, perhaps through shared components or interfaces maintained/used by all teams (making it easy for newer teams to join). I know this has been attempted in the past with the refbox and with some visualization stuff from TUE, but back then there were no incentives for people to use this

- I also know it's hard, but rulebooks need to be defined much earlier; releasing the final versions of the rulebook only a few months before the German Open lends itself to a lot of hacking

- (general) It is my impression that the most performant, and thus winning, approaches are not necessarily the ones that drive progress in the field. Teams with methods that are still immature and not on par should be given the opportunity to present and discuss their weaknesses with the community (not sure if this is happening at RoboCup@Home already though)

- When fully integrated runs are not possible for some teams, partial runs and scoring with a simpler setup may be more encouraging for small/new teams.

- Transporting the robot to a competition can be very challenging and expensive.

- Investigate networked devices in the testbeds

- It would be great if the tasks and benchmarking were arranged in such a way that they do not allow for much hacking, i.e. they require systematic solutions that can generalize over the whole competition

- It's important to make sure the set of tasks includes some current research challenges

- Have reward mechanisms that encourage reuse and portability (across different robots) of robot software applications developed as part of the competition. One-off hardware/software solutions are limited in their impact

- If we are going virtual again, we should work on somehow integrating recordings of humans at least or be more flexible in adding new, unknown objects in the virtual environment.

- Integrate low-floor, high-ceiling approaches when designing competitions

- Tasks should be designed so that they have a level of difficulty according to the current state of the art and the capabilities of the competitors, so at least 50% of the teams can complete each task